I. Network policy

If you want to control traffic flow at the IP address or port level (OSI layer 3 or 4), you can consider using Kubernetes NetworkPolicies for particular applications in the cluster. NetworkPolicy is an application-centric construct that lets you specify how a Pod is allowed to communicate with various network "entities" (we use the word "entity" here to avoid overloading more common terms such as "endpoint" and "service", which have specific meanings in Kubernetes).

The entities that a Pod can communicate with are identified through a combination of the following three identifiers:

- Other Pods that are allowed (exception: a Pod cannot block access to itself)
- Namespaces that are allowed
- IP blocks (exception: traffic to and from the node where a Pod is running is always allowed, regardless of the IP address of the Pod or the node)

When defining a Pod-based or namespace-based NetworkPolicy, you use a selector to specify which traffic is allowed to and from the Pods that match the selector.

When an IP-based NetworkPolicy is created, the policy is defined in terms of IP blocks (CIDR ranges).

II. Prerequisites

Network policies are implemented by the network plug-in. To use them, you must use a networking solution that supports NetworkPolicy. Creating a NetworkPolicy resource without a controller that implements it has no effect.

The network plug-in we use here is Calico, which we deployed earlier. Now we only need to start it:

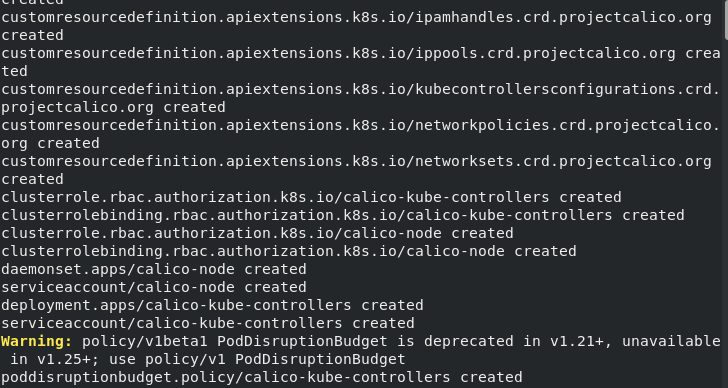

    [root@server1 calico]# kubectl apply -f calico.yaml
    configmap/calico-config created
    customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
    clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
    clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
    clusterrole.rbac.authorization.k8s.io/calico-node created
    clusterrolebinding.rbac.authorization.k8s.io/calico-node created
    daemonset.apps/calico-node created
    serviceaccount/calico-node created
    deployment.apps/calico-kube-controllers created
    serviceaccount/calico-kube-controllers created
    Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget
    poddisruptionbudget.policy/calico-kube-controllers created

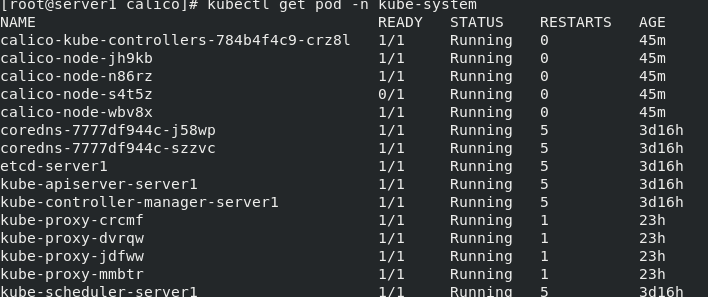

    [root@server1 calico]# kubectl get pod -n kube-system
    NAME                                      READY   STATUS    RESTARTS   AGE
    calico-kube-controllers-784b4f4c9-crz8l   1/1     Running   0          45m
    calico-node-jh9kb                         1/1     Running   0          45m
    calico-node-n86rz                         1/1     Running   0          45m
    calico-node-s4t5z                         0/1     Running   0          45m
    calico-node-wbv8x                         1/1     Running   0          45m
    coredns-7777df944c-j58wp                  1/1     Running   5          3d16h
    coredns-7777df944c-szzvc                  1/1     Running   5          3d16h
    etcd-server1                              1/1     Running   5          3d16h
    kube-apiserver-server1                    1/1     Running   5          3d16h
    kube-controller-manager-server1           1/1     Running   5          3d16h
    kube-proxy-crcmf                          1/1     Running   1          23h
    kube-proxy-dvrqw                          1/1     Running   1          23h
    kube-proxy-jdfww                          1/1     Running   1          23h
    kube-proxy-mmbtr                          1/1     Running   1          23h
    kube-scheduler-server1                    1/1     Running   5          3d16h

III. Isolated and non-isolated Pods

By default, Pods are non-isolated and accept traffic from any source.

A Pod becomes isolated when a NetworkPolicy selects it. Once any NetworkPolicy in the namespace selects a particular Pod, that Pod rejects every connection that is not allowed by some NetworkPolicy. (Other Pods in the namespace that are not selected by any NetworkPolicy continue to accept all traffic.)

Network policies do not conflict; they are additive. If one or more policies select a Pod, the Pod is restricted to what is allowed by the union of the ingress and egress rules of those policies. The order of evaluation therefore does not affect the result.

For traffic to flow between two Pods, both the egress rules on the source Pod and the ingress rules on the destination Pod must allow it. If either the egress rules on the source side or the ingress rules on the destination side deny the traffic, the traffic is denied.
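As a minimal sketch of this "both sides must agree" rule, the pair of policies below lets frontend Pods reach db Pods on TCP port 6379. The labels and port are borrowed from the sample policy discussed later in this article; the policy names are hypothetical. It assumes both sets of Pods live in the same namespace.

```yaml
# Source side: allow frontend Pods to send traffic to db Pods.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: frontend-egress-to-db      # hypothetical name
spec:
  podSelector:
    matchLabels:
      role: frontend
  policyTypes:
  - Egress
  egress:
  - to:
    - podSelector:
        matchLabels:
          role: db
    ports:
    - protocol: TCP
      port: 6379
---
# Destination side: allow db Pods to receive traffic from frontend Pods.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: db-ingress-from-frontend   # hypothetical name
spec:
  podSelector:
    matchLabels:
      role: db
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          role: frontend
    ports:
    - protocol: TCP
      port: 6379
```

Note that the first policy isolates the frontend Pods for egress, so any other outbound traffic they need (DNS lookups, for example) would have to be allowed by additional egress rules.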

IV. NetworkPolicy resources
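The sample policy that the rest of this section refers to does not appear in the text. Going by the description in 4.7, it can be reconstructed roughly as follows. The name test-network-policy is an assumption; the selectors, CIDR ranges, and ports are taken from that description.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy   # assumed name, not given in the text
  namespace: default
spec:
  # The policy applies to Pods labeled role=db in the default namespace.
  podSelector:
    matchLabels:
      role: db
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - from:
    # Three alternative sources; matching any one of them is enough.
    - ipBlock:
        cidr: 172.17.0.0/16
        except:
        - 172.17.1.0/24
    - namespaceSelector:
        matchLabels:
          project: myproject
    - podSelector:
        matchLabels:
          role: frontend
    ports:
    - protocol: TCP
      port: 6379
  egress:
  - to:
    - ipBlock:
        cidr: 10.0.0.0/24
    ports:
    - protocol: TCP
      port: 5978
```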

Note: sending the above example to the API server has no effect unless the networking solution you have chosen supports network policy.

4.1 Required fields

Like all other Kubernetes configuration, a NetworkPolicy requires the apiVersion, kind, and metadata fields.

4.2 spec

The network policy specification contains all the information needed to define a specific network policy in a namespace.

4.3 podSelector

Each NetworkPolicy includes a podSelector that selects the group of Pods to which the policy applies. The policy in the example selects Pods with the "role=db" label. An empty podSelector selects all Pods in the namespace.

4.4 policyTypes

Each NetworkPolicy contains a policyTypes list, which may include Ingress, Egress, or both. The policyTypes field indicates whether the policy applies to inbound traffic to the selected Pods, outbound traffic from the selected Pods, or both. If policyTypes is not specified, Ingress is always set by default, and Egress is set only if the NetworkPolicy has any egress rules.
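One consequence of this defaulting is that a policy meant to restrict only outbound traffic has to list Egress explicitly. The following sketch denies all egress for every Pod in the namespace it is created in while leaving ingress untouched; the policy name is hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-egress   # hypothetical name
spec:
  podSelector: {}             # empty selector: every Pod in the namespace
  policyTypes:
  - Egress                    # only Egress is listed, so ingress is not affected
  # No egress rules are given, so no outbound traffic is allowed.
```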

4.5 ingress

Each NetworkPolicy can contain a whitelist of ingress rules. Each rule allows traffic that matches both its from and ports sections. The sample policy contains a single rule, which matches traffic on a single port from one of three sources: the first specified by an ipBlock, the second by a namespaceSelector, and the third by a podSelector.

4.6 egress

Each NetworkPolicy can contain a whitelist of egress rules. Each rule allows traffic that matches both its to and ports sections. The sample policy contains a single rule, which matches traffic on a single port to any destination in 10.0.0.0/24.

4.7 Description

The sample network policy therefore does the following:

- Isolates the Pods labeled "role=db" in the "default" namespace (if they are not already isolated).
- (Ingress rules) Allows connections to TCP port 6379 of all Pods labeled "role=db" in the "default" namespace from:
  - all Pods labeled "role=frontend" in the "default" namespace
  - all Pods in namespaces labeled "project=myproject"
  - IP addresses in the ranges 172.17.0.0–172.17.0.255 and 172.17.2.0–172.17.255.255 (that is, all of 172.17.0.0/16 except 172.17.1.0/24)
- (Egress rules) Allows connections from any Pod labeled "role=db" in the "default" namespace to TCP port 5978 on addresses in CIDR 10.0.0.0/24.

V. Behavior of the to and from selectors

You can specify four kinds of selectors in the from section of ingress or the to section of egress:

5.1 podSelector

This selector selects particular Pods in the same namespace as the NetworkPolicy; these Pods are allowed as inbound traffic sources or outbound traffic destinations.

5.2 namespaceSelector

This selector selects particular namespaces; all Pods in those namespaces are allowed as inbound traffic sources or outbound traffic destinations.

5.3 namespaceSelector and podSelector

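A single to/from entry that specifies both a namespaceSelector and a podSelector selects particular Pods within particular namespaces. Be careful to use the correct YAML syntax, because a single list element with both selectors means something different from two separate list elements.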
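The difference comes down to one hyphen. The two fragments below are sketches of the ingress part of a policy spec; the labels user=alice and role=client are illustrative only. In the first, both selectors sit in the same list element, so a source must match both (logical AND):

```yaml
ingress:
- from:
  # One element: Pods labeled role=client that are in namespaces labeled user=alice.
  - namespaceSelector:
      matchLabels:
        user: alice
    podSelector:
      matchLabels:
        role: client
```

In the second, the extra hyphen makes them two list elements, so a source only needs to match one of them (logical OR):

```yaml
ingress:
- from:
  # Element 1: any Pod in namespaces labeled user=alice.
  - namespaceSelector:
      matchLabels:
        user: alice
  # Element 2: Pods labeled role=client in the policy's own namespace.
  - podSelector:
      matchLabels:
        role: client
```

When in doubt, kubectl describe networkpolicy shows how Kubernetes interpreted the rules.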

5.4 ipBlock

This selector selects particular IP CIDR ranges to allow as inbound traffic sources or outbound traffic destinations. These should be cluster-external IPs, because Pod IPs are ephemeral and unpredictable.

Cluster ingress and egress mechanisms often need to rewrite the source or destination IP of packets. When this happens, it is not defined whether the rewrite occurs before or after NetworkPolicy processing, and the behavior may differ between combinations of network plug-in, cloud provider, Service implementation, and so on.

For inbound traffic, this means that in some cases you can filter incoming packets by their actual original source IP, while in other cases the source IP that the NetworkPolicy acts on may be the IP of a LoadBalancer or of the Pod's node.

For outbound traffic, this means that connections from Pods to Service IPs that get rewritten to cluster-external IPs may or may not be subject to ipBlock-based policies.

VI. Example policies

6.1 Restrict access to a designated service

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: deny-nginx
    spec:
      podSelector:
        matchLabels:
          app: nginx

6.2 Allow only the specified Pods to access the service

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: access-nginx
    spec:
      podSelector:
        matchLabels:
          app: nginx
      ingress:
      - from:
        - podSelector:
            matchLabels:
              app: demo

6.3 Prohibit mutual access between all Pods in the namespace

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default-deny
      namespace: default
    spec:
      podSelector: {}

6.4 Prohibit other namespaces from accessing the service

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: deny-namespace
    spec:
      podSelector:
        matchLabels:
      ingress:
      - from:
        - podSelector: {}

6.5 Allow only the specified namespace to access the service

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: access-namespace
    spec:
      podSelector:
        matchLabels:
          app: myapp
      ingress:
      - from:
        - namespaceSelector:
            matchLabels:
              role: prod

6.6 Allow access from the Internet

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: web-allow-external
    spec:
      podSelector:
        matchLabels:
          app: web
      ingress:
      - ports:
        - port: 80
        from: []

VII. SCTP support

As a Beta feature, SCTP support is enabled by default. To disable SCTP at the cluster level, you (or your cluster administrator) need to pass --feature-gates=SCTPSupport=false,... to the API server, which turns off the SCTPSupport feature gate. While the feature gate is enabled, you can set the protocol field of a NetworkPolicy to SCTP.

Note: You must use a CNI plug-in that supports the SCTP protocol in network policies.
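As a rough sketch, a policy that admits SCTP traffic only differs from a TCP one in the protocol field. The policy name, the app=sctp-server label, and port 9999 below are hypothetical, and the policy only takes effect if the CNI plug-in in use supports SCTP.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-sctp            # hypothetical name
spec:
  podSelector:
    matchLabels:
      app: sctp-server        # hypothetical label
  ingress:
  - ports:
    - protocol: SCTP          # the default would be TCP if protocol were omitted
      port: 9999              # hypothetical port
```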

VIII. Targeting a namespace by its name

As long as the NamespaceDefaultLabelName feature gate is enabled, the Kubernetes control plane sets an immutable label, kubernetes.io/metadata.name, on all namespaces. The value of the label is the name of the namespace.

Although NetworkPolicy has no object field for targeting a namespace by its name, you can use this standardized label to target a specific namespace.
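For instance, the policy from 6.5 could select its source namespace by name instead of by a custom label. This is a minimal sketch that assumes a namespace called prod exists and that the cluster sets the kubernetes.io/metadata.name label; the policy name is hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: access-from-prod-by-name   # hypothetical name
spec:
  podSelector:
    matchLabels:
      app: myapp
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          # Label set automatically by the control plane; its value is the namespace name.
          kubernetes.io/metadata.name: prod   # assumes a namespace named "prod"
```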