8. Data storage

As mentioned earlier, containers can be short-lived and are frequently created and destroyed. When a container is destroyed, the data saved inside it is cleared as well, which is undesirable in some cases. To persist container data, Kubernetes introduces the concept of a Volume.

A Volume is a shared directory in a Pod that can be accessed by multiple containers. It is defined at the Pod level and then mounted into specific directories by the containers in the Pod. Through volumes, Kubernetes implements both data sharing between containers in the same Pod and persistent storage. A volume's life cycle is independent of the life cycle of any single container in the Pod: when a container is terminated or restarted, the data in the volume is not lost.

Kubernetes supports many volume types, including the following:

- Basic storage: EmptyDir, HostPath, NFS

- Advanced storage: PV, PVC

- Configuration storage: ConfigMap, Secret

8.1 Basic storage

8.1.1 EmptyDir

EmptyDir is the most basic Volume type. An EmptyDir is an empty directory on the host.

An EmptyDir is created when the Pod is assigned to a Node. Its initial content is empty, and there is no need to specify a corresponding directory on the host, because Kubernetes allocates one automatically. When the Pod is destroyed, the data in the EmptyDir is permanently deleted as well. EmptyDir is used for:

- Temporary space, such as a scratch directory that some applications need while running and that does not need to be kept permanently

- A directory through which one container obtains data from another container (a directory shared by multiple containers)

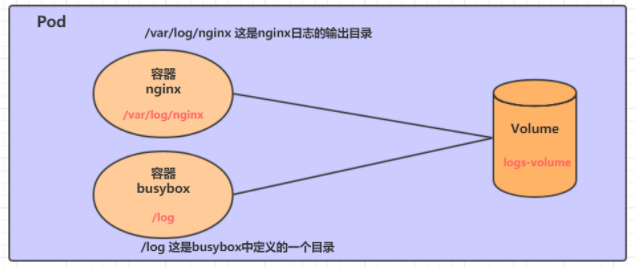

Next, let's use EmptyDir in a case of file sharing between containers.

Prepare two containers, nginx and busybox, in one Pod, and declare a Volume that is mounted into a directory in each of the two containers. The nginx container writes its logs to the Volume, and busybox reads the log contents and prints them to the console with a command.

Create volume-emptydir.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-emptydir
  namespace: dev
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
    ports:
    - containerPort: 80
    volumeMounts:  # Mount logs-volume into the nginx container at /var/log/nginx
    - name: logs-volume
      mountPath: /var/log/nginx
  - name: busybox
    image: busybox:1.30
    command: ["/bin/sh","-c","tail -f /logs/access.log"]  # Startup command that continuously reads the specified file
    volumeMounts:  # Mount logs-volume into the busybox container at /logs
    - name: logs-volume
      mountPath: /logs
  volumes:  # Declare a volume named logs-volume of type emptyDir
  - name: logs-volume
    emptyDir: {}
```

```shell
# Create the Pod
[root@k8s-master01 ~]# kubectl create -f volume-emptydir.yaml
pod/volume-emptydir created

# View the Pod
[root@k8s-master01 ~]# kubectl get pods volume-emptydir -n dev -o wide
NAME              READY   STATUS    RESTARTS   AGE   IP          NODE    ......
volume-emptydir   2/2     Running   0          97s   10.42.2.9   node1   ......

# Access nginx through the Pod IP
[root@k8s-master01 ~]# curl 10.42.2.9
......

# View the standard output of the specified container with kubectl logs
[root@k8s-master01 ~]# kubectl logs -f volume-emptydir -n dev -c busybox
10.42.1.0 - - [27/Jun/2021:15:08:54 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
```

8.1.2 HostPath

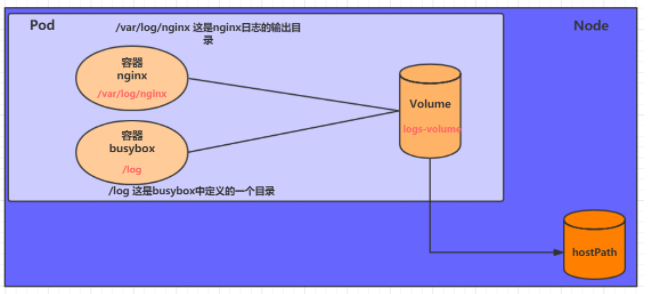

As mentioned in the previous section, the data in an EmptyDir is not persisted: it is destroyed when the Pod ends. If you simply want to persist data on the host, you can choose HostPath.

HostPath mounts an actual directory on the Node host into the Pod for the containers to use. With this design, the data can remain on the Node host even after the Pod is destroyed.

Create a volume-hostpath.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-hostpath
  namespace: dev
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
    ports:
    - containerPort: 80
    volumeMounts:
    - name: logs-volume
      mountPath: /var/log/nginx
  - name: busybox
    image: busybox:1.30
    command: ["/bin/sh","-c","tail -f /logs/access.log"]
    volumeMounts:
    - name: logs-volume
      mountPath: /logs
  volumes:
  - name: logs-volume
    hostPath:
      path: /root/logs
      type: DirectoryOrCreate  # If the directory exists, it will be used. If it does not exist, it will be created first and then used
```

Values of type:

- DirectoryOrCreate: if the directory exists, it is used; if it does not exist, it is created first and then used

- Directory: the directory must exist

- FileOrCreate: if the file exists, it is used; if it does not exist, it is created first and then used

- File: the file must exist

- Socket: a UNIX socket must exist

- CharDevice: a character device must exist

- BlockDevice: a block device must exist
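As a rough illustration of what each type value requires, the following Python sketch performs similar checks on a local path with the standard os and stat modules. It is an assumption-based model, not the kubelet's actual code, and the function name check_host_path is hypothetical.

```python
import os
import stat


def check_host_path(path: str, path_type: str) -> None:
    """Hypothetical sketch of the check implied by each hostPath type value.

    Creates the directory or file for the *OrCreate types; for the other types
    raises FileNotFoundError if the path is missing, or ValueError if the path
    exists but is of the wrong kind.
    """
    if path_type == "DirectoryOrCreate":
        os.makedirs(path, exist_ok=True)  # use it if present, otherwise create it
        return
    if path_type == "FileOrCreate":
        if not os.path.exists(path):
            # Create an empty file; in this sketch the parent directory must already exist.
            open(path, "a").close()
        return

    # The remaining types require the path to exist already, with the right kind.
    expected = {
        "Directory": stat.S_ISDIR,
        "File": stat.S_ISREG,
        "Socket": stat.S_ISSOCK,
        "CharDevice": stat.S_ISCHR,
        "BlockDevice": stat.S_ISBLK,
    }
    if path_type not in expected:
        raise ValueError(f"unknown type: {path_type}")
    mode = os.stat(path).st_mode  # raises FileNotFoundError if the path is missing
    if not expected[path_type](mode):
        raise ValueError(f"{path} is not a {path_type}")
```

With DirectoryOrCreate, as used in volume-hostpath.yaml, the first call creates /root/logs on the node and later calls simply reuse it.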

```shell
# Create the Pod
[root@k8s-master01 ~]# kubectl create -f volume-hostpath.yaml
pod/volume-hostpath created

# View the Pod
[root@k8s-master01 ~]# kubectl get pods volume-hostpath -n dev -o wide
NAME              READY   STATUS    RESTARTS   AGE   IP           NODE    ......
volume-hostpath   2/2     Running   0          16s   10.42.2.10   node1   ......

# Access nginx
[root@k8s-master01 ~]# curl 10.42.2.10

# View the busybox output
[root@k8s-master01 ~]# kubectl logs -f volume-hostpath -n dev -c busybox

# Next, view the stored files in the /root/logs directory of the host
# Note: the following command must run on the node where the Pod is located (node1 in this case)
[root@node1 ~]# ls /root/logs/
access.log  error.log

# Similarly, a file created in this directory is visible inside the container
```

8.1.3 NFS

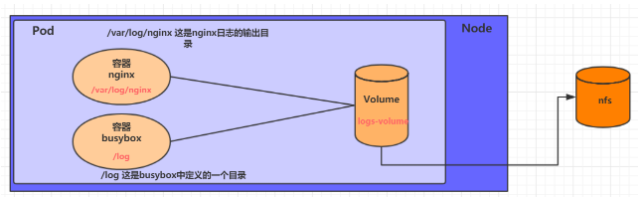

HostPath solves the problem of data persistence, but if the Node fails and the Pod is moved to another Node, the data written on the original Node is no longer available to it. In that case, a separate network storage system is needed; NFS and CIFS are commonly used.

NFS is a network file storage system. You can set up an NFS server and then connect the storage in the Pod directly to the NFS system. This way, no matter which Node the Pod is moved to, as long as that Node can connect to the NFS server, the data can be accessed.

1) First, prepare the NFS server. For simplicity, the master node is used directly as the NFS server:

```shell
# Install the NFS service on the NFS server
[root@nfs ~]# yum install nfs-utils -y

# Prepare a shared directory
[root@nfs ~]# mkdir /root/data/nfs -pv

# Expose the shared directory with read/write permission to all hosts in the 192.168.5.0/24 network
[root@nfs ~]# vim /etc/exports
[root@nfs ~]# more /etc/exports
/root/data/nfs     192.168.5.0/24(rw,no_root_squash)

# Start the NFS service
[root@nfs ~]# systemctl restart nfs
```
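The /etc/exports line above pairs a path with the clients allowed to mount it. The following Python sketch, using the standard ipaddress module, parses that single-line form and checks whether a given node address falls inside the exported network. It handles only the simple PATH NETWORK(OPTIONS) form used here (real exports syntax also allows hostnames, wildcards and several clients per line); the function names and the node addresses in the usage are hypothetical.

```python
import ipaddress


def parse_export(line: str):
    """Parse one simple /etc/exports line of the form 'PATH NETWORK(OPTIONS)'.

    Returns (path, network, options). Only a single client written as a CIDR
    network is supported in this sketch.
    """
    path, client = line.split()
    network, options = client.rstrip(")").split("(")
    return path, ipaddress.ip_network(network), set(options.split(","))


def may_mount(line: str, host_ip: str) -> bool:
    """Return True if host_ip lies inside the network allowed by the export."""
    _, network, _ = parse_export(line)
    return ipaddress.ip_address(host_ip) in network


if __name__ == "__main__":
    line = "/root/data/nfs     192.168.5.0/24(rw,no_root_squash)"
    print(parse_export(line))
    print(may_mount(line, "192.168.5.4"))  # True: hypothetical node inside 192.168.5.0/24
    print(may_mount(line, "10.0.0.8"))     # False: hypothetical node outside the network
```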

2) Next, install nfs-utils on every node so that the nodes can mount NFS volumes:

```shell
# Install the NFS utilities on the node. Note that the service does not need to be started
[root@k8s-master01 ~]# yum install nfs-utils -y
```

3) Next, write the Pod configuration file volume-nfs.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-nfs
  namespace: dev
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
    ports:
    - containerPort: 80
    volumeMounts:
    - name: logs-volume
      mountPath: /var/log/nginx
  - name: busybox
    image: busybox:1.30
    command: ["/bin/sh","-c","tail -f /logs/access.log"]
    volumeMounts:
    - name: logs-volume
      mountPath: /logs
  volumes:
  - name: logs-volume
    nfs:
      server: 192.168.5.6  # NFS server address
      path: /root/data/nfs  # Shared file path
```

4) Finally, run the pod and observe the results

```shell
# Create the Pod
[root@k8s-master01 ~]# kubectl create -f volume-nfs.yaml
pod/volume-nfs created

# View the Pod
[root@k8s-master01 ~]# kubectl get pods volume-nfs -n dev
NAME         READY   STATUS    RESTARTS   AGE
volume-nfs   2/2     Running   0          2m9s
[root@k8s-master01 ~]# kubectl get pod volume-nfs -n dev -o wide
NAME         READY   STATUS    RESTARTS   AGE     IP            NODE    NOMINATED NODE   READINESS GATES
volume-nfs   2/2     Running   0          3m58s   10.244.1.53   node1   <none>           <none>

# Access nginx, then view the busybox output
[root@k8s-master01 ~]# curl 10.244.1.53:80
[root@k8s-master01 ~]# kubectl logs -f volume-nfs -n dev -c busybox

# Check the shared directory on the NFS server (the master node here): the log files are already there
[root@k8s-master01 ~]# ls /root/data/nfs
access.log  error.log
```

8.2 Advanced storage

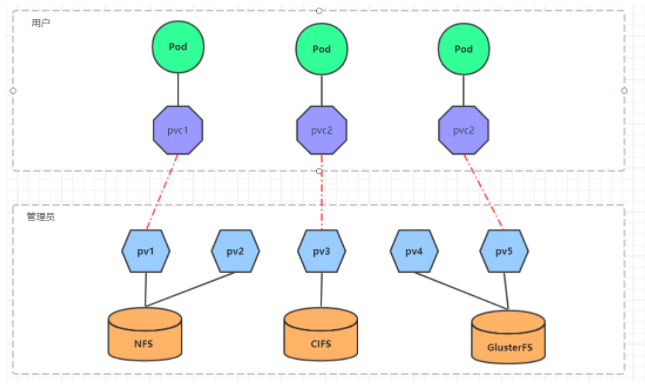

We have used NFS to provide storage, but this requires users to set up the NFS system and configure NFS in YAML. Since Kubernetes supports many storage systems, it is unrealistic to expect users to master them all. To hide the details of the underlying storage implementation and make storage easier to use, Kubernetes introduces the PV and PVC resource objects.

- PV (Persistent Volume) is an abstraction of the underlying shared storage. PVs are generally created and configured by the Kubernetes administrator. A PV is tied to a specific underlying shared storage technology and connects to that storage through plug-ins.

- PVC (Persistent Volume Claim) is a user's declaration of storage requirements. In other words, a PVC is a request for resources that a user sends to the Kubernetes system.

With PV and PVC, responsibilities can be divided further:

- Storage: maintained by storage engineers

- PV: maintained by Kubernetes administrators

- PVC: maintained by Kubernetes users

8.2.1 PV

PV is the abstraction of storage resources. The following is the resource manifest file:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv2
spec:
  nfs:  # Storage type, corresponding to the underlying real storage
  capacity:  # Storage capacity. At present, only storage space settings are supported
    storage: 2Gi
  accessModes:  # Access modes
  storageClassName:  # Storage class
  persistentVolumeReclaimPolicy:  # Reclaim policy
```

Description of the key PV configuration parameters:

- Storage type

  Kubernetes supports multiple storage types, and each storage type is configured differently.

- Storage capacity (capacity)

  Currently only the storage space can be set (for example, storage: 1Gi), but settings for IOPS, throughput and other metrics may be added in the future.

- Access modes (accessModes)

  Describes the access rights that user applications have to the storage resource. The following modes are available:

  - ReadWriteOnce (RWO): read-write, but can be mounted by only a single node

  - ReadOnlyMany (ROX): read-only, can be mounted by multiple nodes

  - ReadWriteMany (RWX): read-write, can be mounted by multiple nodes

  Note that different underlying storage types may support different access modes.

- Reclaim policy (persistentVolumeReclaimPolicy)

  Determines how a PV is handled when it is no longer in use. Three policies are currently supported:

  - Retain: keep the data; an administrator must clean it up manually

  - Recycle: clear the data in the PV, equivalent to running rm -rf /thevolume/*

  - Delete: the backend storage connected to the PV deletes the volume; this is common with cloud providers' storage services

  Note that different underlying storage types may support different reclaim policies.

- Storage class (storageClassName)

  A PV can specify a storage class through the storageClassName parameter:

  - A PV with a specific class can only be bound to a PVC that requests that class

  - A PV without a class can only be bound to a PVC that does not request any class

- Status

  During its life cycle, a PV can be in one of four phases:

  - Available: the PV is available and not yet bound to any PVC

  - Bound: the PV has been bound to a PVC

  - Released: the PVC has been deleted, but the resource has not yet been reclaimed by the cluster

  - Failed: automatic reclamation of the PV has failed
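The Recycle policy above is described as equivalent to rm -rf /thevolume/*. The following Python sketch shows that effect on a local directory: it removes everything inside the volume directory but keeps the directory itself. It is a hypothetical model (recycle_volume is our own name), not Kubernetes' actual recycler.

```python
import os
import shutil


def recycle_volume(root: str) -> None:
    """Hypothetical sketch of the Recycle policy: empty the volume, keep the directory.

    Unlike a plain shell glob such as root/*, os.listdir also returns hidden
    entries, so dotfiles are removed as well.
    """
    for name in os.listdir(root):  # take a snapshot of the entries before deleting
        path = os.path.join(root, name)
        if os.path.isdir(path) and not os.path.islink(path):
            shutil.rmtree(path)  # remove sub-directories recursively
        else:
            os.remove(path)  # remove regular files and symlinks
```

By contrast, Retain would leave every file in place, and Delete would remove the backing storage altogether.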

Experiment

Use NFS as the storage to demonstrate how PVs are used: create three PVs corresponding to three paths exported by NFS.

- Prepare the NFS environment

```shell
# Create the directories
[root@nfs ~]# mkdir /root/data/{pv1,pv2,pv3} -pv

# Expose the directories
[root@nfs ~]# more /etc/exports
/root/data/pv1     192.168.5.0/24(rw,no_root_squash)
/root/data/pv2     192.168.5.0/24(rw,no_root_squash)
/root/data/pv3     192.168.5.0/24(rw,no_root_squash)

# Restart the service
[root@nfs ~]# systemctl restart nfs
```

- Create pv.yaml

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv1
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /root/data/pv1
    server: 192.168.5.6
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv2
spec:
  capacity:
    storage: 2Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /root/data/pv2
    server: 192.168.5.6
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv3
spec:
  capacity:
    storage: 3Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /root/data/pv3
    server: 192.168.5.6
```

```shell
# Create the PVs
[root@k8s-master01 ~]# kubectl create -f pv.yaml
persistentvolume/pv1 created
persistentvolume/pv2 created
persistentvolume/pv3 created

# View the PVs
[root@k8s-master01 ~]# kubectl get pv -o wide
NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      AGE   VOLUMEMODE
pv1    1Gi        RWX            Retain           Available   10s   Filesystem
pv2    2Gi        RWX            Retain           Available   10s   Filesystem
pv3    3Gi        RWX            Retain           Available   9s    Filesystem
```

8.2.2 PVC

A PVC is a request for resources. It declares requirements for storage space, access mode and storage class. Here is the resource manifest file:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc
  namespace: dev
spec:
  accessModes:  # Access modes
  selector:  # Select PVs by label
  storageClassName:  # Storage class
  resources:  # Requested space
    requests:
      storage: 5Gi
```

Description of the key PVC configuration parameters:

- Access modes (accessModes)

  Describes the access rights that user applications need to the storage resource.

- Selection criteria (selector)

  By setting a label selector, a PVC can filter the PVs that already exist in the system.

- Storage class (storageClassName)

  When defining a PVC, you can specify the required backend storage class. Only PVs of that class can be selected by the system.

- Resource requests (resources)

  Describes the request for storage resources.
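Capacities and requests such as 1Gi or 5Gi are compared as byte quantities. A simplified Python sketch, assuming integer values with one of the common suffixes (binary Ki, Mi, Gi, Ti are powers of 1024; decimal k, M, G, T are powers of 1000); parse_quantity is a hypothetical helper that ignores other forms Kubernetes accepts, such as fractions and exponents. It shows, for example, why a PVC requesting 5Gi cannot be satisfied by a 2Gi PV.

```python
import re

# Multipliers for the suffixes this sketch understands.
_SUFFIXES = {
    "": 1,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,     # decimal (SI)
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,  # binary
}


def parse_quantity(q: str) -> int:
    """Hypothetical, simplified parser: '5Gi' -> number of bytes (integers only)."""
    m = re.fullmatch(r"(\d+)([A-Za-z]*)", q.strip())
    if m is None or m.group(2) not in _SUFFIXES:
        raise ValueError(f"unsupported quantity: {q!r}")
    return int(m.group(1)) * _SUFFIXES[m.group(2)]


if __name__ == "__main__":
    print(parse_quantity("2Gi"))                           # 2147483648
    print(parse_quantity("5Gi") <= parse_quantity("2Gi"))  # False: 5Gi does not fit in 2Gi
    print(parse_quantity("1G") < parse_quantity("1Gi"))    # True: 1G is 10**9 bytes
```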

Experiment

- Create pvc.yaml to request PVs

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc1
  namespace: dev
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc2
  namespace: dev
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc3
  namespace: dev
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
```

```shell
# Create the PVCs
[root@k8s-master01 ~]# kubectl create -f pvc.yaml
persistentvolumeclaim/pvc1 created
persistentvolumeclaim/pvc2 created
persistentvolumeclaim/pvc3 created

# View the PVCs
[root@k8s-master01 ~]# kubectl get pvc -n dev -o wide
NAME   STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE   VOLUMEMODE
pvc1   Bound    pv1      1Gi        RWX                           15s   Filesystem
pvc2   Bound    pv2      2Gi        RWX                           15s   Filesystem
pvc3   Bound    pv3      3Gi        RWX                           15s   Filesystem

# View the PVs
[root@k8s-master01 ~]# kubectl get pv -o wide
NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM      AGE     VOLUMEMODE
pv1    1Gi        RWX            Retain           Bound    dev/pvc1   3h37m   Filesystem
pv2    2Gi        RWX            Retain           Bound    dev/pvc2   3h37m   Filesystem
pv3    3Gi        RWX            Retain           Bound    dev/pvc3   3h37m   Filesystem
```
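Why were pvc2 and pvc3, which each request only 1Gi, bound to the 2Gi and 3Gi volumes? As we understand the PV controller, a claim considers only Available PVs whose access modes include every requested mode, whose storage class matches, whose labels satisfy the selector and whose capacity is large enough, and among those it prefers the smallest. The Python model below is a simplified sketch of that rule under those assumptions (the PV and PVC classes, find_match and bind are hypothetical and ignore pre-binding, volume modes and dynamic provisioning); processed in order, the three claims reproduce the bindings shown above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PV:
    name: str
    capacity_gi: int
    access_modes: set[str]
    storage_class: str = ""  # "" means the PV has no class
    labels: dict[str, str] = field(default_factory=dict)
    phase: str = "Available"
    claim: str | None = None


@dataclass
class PVC:
    name: str
    request_gi: int
    access_modes: set[str]
    storage_class: str = ""  # "" means no class is requested
    selector: dict[str, str] = field(default_factory=dict)  # simplified matchLabels


def find_match(pvc: PVC, pvs: list[PV]) -> PV | None:
    """Return the smallest Available PV that satisfies the claim, or None."""
    candidates = [
        pv for pv in pvs
        if pv.phase == "Available"
        and pvc.access_modes <= pv.access_modes       # every requested mode is offered
        and pv.storage_class == pvc.storage_class     # classes must match ("" matches "")
        and pvc.selector.items() <= pv.labels.items() # labels satisfy the selector
        and pv.capacity_gi >= pvc.request_gi          # enough capacity
    ]
    return min(candidates, key=lambda pv: pv.capacity_gi, default=None)


def bind(pvc: PVC, pvs: list[PV]) -> str:
    """Bind the claim to a matching PV; return its name, or 'Pending' if none fits."""
    pv = find_match(pvc, pvs)
    if pv is None:
        return "Pending"
    pv.phase, pv.claim = "Bound", pvc.name  # a bound PV is exclusive to this PVC
    return pv.name


if __name__ == "__main__":
    pvs = [PV(f"pv{i}", i, {"ReadWriteMany"}) for i in (1, 2, 3)]
    for name in ("pvc1", "pvc2", "pvc3"):
        print(name, "->", bind(PVC(name, 1, {"ReadWriteMany"}), pvs))
    # pvc1 -> pv1
    # pvc2 -> pv2
    # pvc3 -> pv3
```

A fourth 1Gi claim would find no Available PV and stay Pending, matching the behavior described in the life cycle section.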

- Create pods.yaml to use the PVs

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod1
  namespace: dev
spec:
  containers:
  - name: busybox
    image: busybox:1.30
    command: ["/bin/sh","-c","while true;do echo pod1 >> /root/out.txt; sleep 10; done;"]
    volumeMounts:
    - name: volume
      mountPath: /root/
  volumes:
  - name: volume
    persistentVolumeClaim:
      claimName: pvc1
      readOnly: false
---
apiVersion: v1
kind: Pod
metadata:
  name: pod2
  namespace: dev
spec:
  containers:
  - name: busybox
    image: busybox:1.30
    command: ["/bin/sh","-c","while true;do echo pod2 >> /root/out.txt; sleep 10; done;"]
    volumeMounts:
    - name: volume
      mountPath: /root/
  volumes:
  - name: volume
    persistentVolumeClaim:
      claimName: pvc2
      readOnly: false
```

```shell
# Create the Pods
[root@k8s-master01 ~]# kubectl create -f pods.yaml
pod/pod1 created
pod/pod2 created

# View the Pods
[root@k8s-master01 ~]# kubectl get pods -n dev -o wide
NAME   READY   STATUS    RESTARTS   AGE   IP            NODE
pod1   1/1     Running   0          14s   10.244.1.69   node1
pod2   1/1     Running   0          14s   10.244.1.70   node1

# View the PVCs
[root@k8s-master01 ~]# kubectl get pvc -n dev -o wide
NAME   STATUS   VOLUME   CAPACITY   ACCESS MODES   AGE   VOLUMEMODE
pvc1   Bound    pv1      1Gi        RWX            94m   Filesystem
pvc2   Bound    pv2      2Gi        RWX            94m   Filesystem
pvc3   Bound    pv3      3Gi        RWX            94m   Filesystem

# View the PVs (PVs are cluster-scoped, so no namespace is needed)
[root@k8s-master01 ~]# kubectl get pv -o wide
NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM      AGE     VOLUMEMODE
pv1    1Gi        RWX            Retain           Bound    dev/pvc1   5h11m   Filesystem
pv2    2Gi        RWX            Retain           Bound    dev/pvc2   5h11m   Filesystem
pv3    3Gi        RWX            Retain           Bound    dev/pvc3   5h11m   Filesystem

# View the files stored on NFS
[root@nfs ~]# more /root/data/pv1/out.txt
pod1
pod1
[root@nfs ~]# more /root/data/pv2/out.txt
pod2
pod2
```

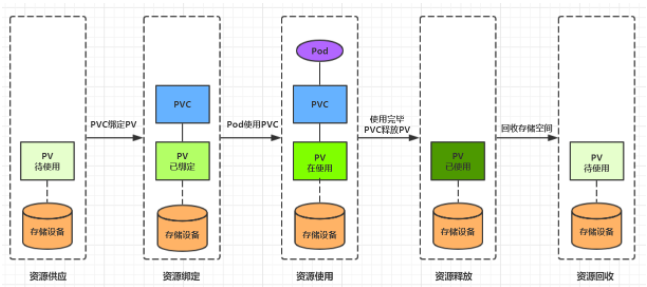

8.2.3 Life cycle

PVCs and PVs correspond one-to-one, and their interaction follows this life cycle:

- Provisioning: the administrator manually creates the underlying storage and the PV

- Resource binding: the user creates a PVC, and Kubernetes finds a PV that matches the PVC's declaration and binds it

  After the user defines the PVC, the system selects one of the existing PVs that meets the PVC's storage request:

  - If one is found, the PV is bound to the user-defined PVC, and the user's application can use the PVC

  - If none is found, the PVC stays in the Pending state indefinitely, until the system administrator creates a PV that meets its requirements

  Once a PV is bound to a PVC, it is used exclusively by that PVC and cannot be bound to any other PVC.

- Resource usage: users can use the PVC in a Pod just like a volume

  The Pod mounts the PVC to a path in the container through a Volume definition.

- Resource release: users delete the PVC to release the PV

  When the storage resource is no longer needed, the user can delete the PVC. The PV bound to that PVC is then marked as "Released", but it cannot be bound to another PVC immediately: data written through the previous PVC may still remain on the storage device, and the PV can be reused only after it has been cleared.

- Resource reclaiming: Kubernetes reclaims the resource according to the reclaim policy set on the PV

  For a PV, the administrator can set a reclaim policy that determines how leftover data is handled after the bound PVC releases the resource. Only after a PV's storage space has been reclaimed can it be bound and used by a new PVC.
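The life cycle above, together with the PV phases listed in 8.2.1, can be summarized as a small state machine. The following Python sketch is a simplified model under our own assumptions: the function names are hypothetical, Retain leaves the PV Released until an administrator steps in, Recycle returns it to Available, Delete removes it (DELETED is a marker for this sketch, not a real PV phase), and a failed automatic reclaim leaves it Failed.

```python
from enum import Enum


class Phase(Enum):
    AVAILABLE = "Available"
    BOUND = "Bound"
    RELEASED = "Released"
    FAILED = "Failed"
    DELETED = "Deleted"  # not a real PV phase: marks that the PV object is gone


def bind(phase: Phase) -> Phase:
    # Only an unbound PV can be bound; a bound PV belongs exclusively to its PVC.
    if phase is not Phase.AVAILABLE:
        raise ValueError(f"cannot bind a PV in phase {phase.value}")
    return Phase.BOUND


def release(phase: Phase) -> Phase:
    # Deleting the PVC releases the PV, but it cannot be rebound yet.
    if phase is not Phase.BOUND:
        raise ValueError(f"cannot release a PV in phase {phase.value}")
    return Phase.RELEASED


def reclaim(phase: Phase, policy: str, succeeded: bool = True) -> Phase:
    """Apply the reclaim policy to a Released PV (simplified)."""
    if phase is not Phase.RELEASED:
        raise ValueError(f"cannot reclaim a PV in phase {phase.value}")
    if policy == "Retain":
        return Phase.RELEASED  # data kept; an administrator must clean up manually
    if policy not in ("Recycle", "Delete"):
        raise ValueError(f"unknown policy: {policy}")
    if not succeeded:
        return Phase.FAILED  # automatic reclamation failed
    if policy == "Recycle":
        return Phase.AVAILABLE  # data scrubbed; a new PVC can bind the PV
    return Phase.DELETED  # Delete: backing storage and PV removed


if __name__ == "__main__":
    phase = release(bind(Phase.AVAILABLE))
    for policy in ("Retain", "Recycle", "Delete"):
        print(policy, "->", reclaim(phase, policy).value)
    # Retain -> Released
    # Recycle -> Available
    # Delete -> Deleted
```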

8.3 Configuration storage

8.3.1 ConfigMap

ConfigMap is a special storage volume, which is mainly used to store configuration information.

Create configmap.yaml as follows:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: configmap
  namespace: dev
data:
  info: |
    username:admin
    password:123456
```

Next, use this configuration file to create the ConfigMap:

```shell
# Create the ConfigMap
[root@k8s-master01 ~]# kubectl create -f configmap.yaml
configmap/configmap created

# View the ConfigMap details
[root@k8s-master01 ~]# kubectl describe cm configmap -n dev
Name:         configmap
Namespace:    dev
Labels:       <none>
Annotations:  <none>

Data
====
info:
----
username:admin
password:123456

Events:  <none>
```

Next, create a pod-configmap.yaml and mount the configmap created above

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-configmap
  namespace: dev
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
    volumeMounts:  # Mount the configmap to the directory
    - name: config
      mountPath: /configmap/config
  volumes:  # Reference configmap
  - name: config
    configMap:
      name: configmap
```

```shell
# Create the Pod
[root@k8s-master01 ~]# kubectl create -f pod-configmap.yaml
pod/pod-configmap created

# View the Pod
[root@k8s-master01 ~]# kubectl get pod pod-configmap -n dev
NAME            READY   STATUS    RESTARTS   AGE
pod-configmap   1/1     Running   0          6s

# Enter the container
[root@k8s-master01 ~]# kubectl exec -it pod-configmap -n dev -- /bin/sh
# cd /configmap/config/
# ls
info
# more info
username:admin
password:123456

# The mapping succeeded: the ConfigMap is mapped to a directory,
# each key becomes a file and its value becomes the file's contents.
# If the ConfigMap is updated later, the files in the container are also
# updated automatically (after a short delay).
```
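The key-to-file mapping seen above can be imitated with a short Python sketch. It reproduces only the visible result; the kubelet actually writes the files atomically through a hidden timestamped directory and symlinks, which is also how updates are swapped in. project_configmap is a hypothetical name. Note that the | block scalar in configmap.yaml keeps the line breaks and one trailing newline, so the value of info is the two lines plus a final newline.

```python
import os


def project_configmap(data: dict[str, str], mount_path: str) -> None:
    """Hypothetical sketch: write each ConfigMap key as a file under mount_path."""
    os.makedirs(mount_path, exist_ok=True)
    for key, value in data.items():
        # key -> file name, value -> file contents
        with open(os.path.join(mount_path, key), "w", encoding="utf-8") as f:
            f.write(value)


if __name__ == "__main__":
    import tempfile

    target = os.path.join(tempfile.mkdtemp(), "configmap", "config")
    project_configmap({"info": "username:admin\npassword:123456\n"}, target)
    print(os.listdir(target))  # ['info']
    with open(os.path.join(target, "info"), encoding="utf-8") as f:
        print(f.read(), end="")
```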

8.3.2 Secret

In Kubernetes there is also an object very similar to ConfigMap, called Secret. It is mainly used to store sensitive information, such as passwords, secret keys and certificates.

- First, use base64 to encode the data

```shell
[root@k8s-master01 ~]# echo -n 'admin' | base64    # Prepare the username
YWRtaW4=
[root@k8s-master01 ~]# echo -n '123456' | base64   # Prepare the password
MTIzNDU2
```
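The same encoding can be produced and reversed with Python's standard base64 module. The sketch also shows why echo needs -n: without it, echo appends a newline that becomes part of the encoded value. The helper names are hypothetical.

```python
import base64


def encode_secret_value(value: str) -> str:
    """Base64-encode a string, like 'echo -n VALUE | base64'."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Reverse the encoding, roughly what happens when the Secret is mounted as files."""
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    for plain in ("admin", "123456"):
        encoded = encode_secret_value(plain)
        print(plain, "->", encoded, "->", decode_secret_value(encoded))
    # admin -> YWRtaW4= -> admin
    # 123456 -> MTIzNDU2 -> 123456

    # Without -n, echo adds a trailing newline, which ends up in the encoded value:
    print(encode_secret_value("admin\n"))  # YWRtaW4K
```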

- Next, write secret.yaml and create Secret

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: secret
  namespace: dev
type: Opaque
data:
  username: YWRtaW4=
  password: MTIzNDU2
```

```shell
# Create the Secret
[root@k8s-master01 ~]# kubectl create -f secret.yaml
secret/secret created

# View the Secret details
[root@k8s-master01 ~]# kubectl describe secret secret -n dev
Name:         secret
Namespace:    dev
Labels:       <none>
Annotations:  <none>

Type:  Opaque

Data
====
password:  6 bytes
username:  5 bytes
```

- Create pod-secret.yaml and mount the secret created above:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-secret
  namespace: dev
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
    volumeMounts:  # Mount secret to directory
    - name: config
      mountPath: /secret/config
  volumes:
  - name: config
    secret:
      secretName: secret
```

```shell
# Create the Pod
[root@k8s-master01 ~]# kubectl create -f pod-secret.yaml
pod/pod-secret created

# View the Pod
[root@k8s-master01 ~]# kubectl get pod pod-secret -n dev
NAME         READY   STATUS    RESTARTS   AGE
pod-secret   1/1     Running   0          2m28s

# Enter the container and check the Secret: the values have been decoded automatically
[root@k8s-master01 ~]# kubectl exec -it pod-secret -n dev -- /bin/sh
/ # ls /secret/config/
password  username
/ # more /secret/config/username
admin
/ # more /secret/config/password
123456
```

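At this point, the information is stored in the Secret in base64-encoded form and is decoded automatically when mounted into the Pod. Note that base64 is only an encoding, not encryption.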