Introduction to Kubernetes

Kubernetes is a container cluster management system that Google open-sourced in June 2014. It is written in Go and is also known as K8s. K8s is derived from Borg, Google's internal container cluster management system, which had been running at large scale inside Google for about ten years.

K8s is mainly used to automate the deployment, scaling, and management of containerized applications. It provides a complete set of features such as resource scheduling, deployment management, service discovery, scaling, and monitoring. Kubernetes v1.0 was officially released in July 2015. As of August 2, 2019, the latest stable version is v1.15.1 and the latest pre-release is v1.16.0-alpha.2. The goal of Kubernetes is to make deploying containerized applications simple and efficient.

Official website: https://kubernetes.io

Main Features of Kubernetes

Data Volume

Data volumes are used when sharing data between containers in a Pod;
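As an illustration, the following hypothetical Pod manifest sketches two containers sharing one `emptyDir` volume: one container writes a file and the other serves it with nginx. The names `shared-volume-demo`, `writer`, `web` and `shared-data` are placeholders, and the public `busybox` and `nginx:1.14` images are assumed to be pullable from the nodes.

```yaml
# Hypothetical example: two containers in one Pod share an emptyDir volume.
apiVersion: v1
kind: Pod
metadata:
  name: shared-volume-demo
spec:
  volumes:
  - name: shared-data          # created empty when the Pod starts, deleted with the Pod
    emptyDir: {}
  containers:
  - name: writer               # writes a file into the shared volume, then idles
    image: busybox
    command: ["sh", "-c", "echo 'hello from writer' > /data/index.html && sleep 3600"]
    volumeMounts:
    - name: shared-data
      mountPath: /data
  - name: web                  # serves the same file over HTTP
    image: nginx:1.14
    volumeMounts:
    - name: shared-data
      mountPath: /usr/share/nginx/html
```

After applying it with kubectl apply -f, running kubectl exec shared-volume-demo -c web -- cat /usr/share/nginx/html/index.html should show the file written by the other container. Because an emptyDir volume lives only as long as its Pod, it suits scratch or shared working data rather than persistent storage.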

Application Health Check

A service in a container may hang and stop processing requests; you can configure health-check policies to detect this and keep the application robust.
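A minimal sketch of such a policy, assuming an nginx container serving HTTP on port 80 (the Pod name probe-demo and the timing values are hypothetical), combines a liveness probe and a readiness probe:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo
spec:
  containers:
  - name: web
    image: nginx:1.14
    ports:
    - containerPort: 80
    livenessProbe:             # on repeated failure, the kubelet restarts the container
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 5   # wait before the first check
      periodSeconds: 10        # check every 10 seconds
      failureThreshold: 3      # restart after 3 consecutive failures
    readinessProbe:            # while failing, the Pod is removed from Service endpoints
      httpGet:
        path: /
        port: 80
      periodSeconds: 5
```

The liveness probe recovers a hung container by restarting it, while the readiness probe only stops traffic from reaching the Pod until it answers again. Probes can also use tcpSocket or exec checks instead of httpGet.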

Application Replicas

A controller maintains the number of Pod replicas, ensuring that a Pod or a group of identical Pods is always available.

Elastic Scaling

Automatically scales the number of Pod replicas according to configured criteria (such as CPU utilization);
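A sketch of this behavior with the autoscaling/v1 HorizontalPodAutoscaler API, assuming a Deployment named nginx (hypothetical) whose containers declare CPU requests, and a resource-metrics source such as metrics-server running in the cluster. The controller computes desiredReplicas = ceil(currentReplicas × currentCPUUtilization / targetCPUUtilization) and keeps the result between the minimum and maximum; for example, 2 replicas averaging 100% of their CPU request against a 50% target give ceil(2 × 100 / 50) = 4 replicas.

```yaml
# Hypothetical HPA for a Deployment named "nginx".
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa
spec:
  scaleTargetRef:                      # the workload whose replica count is adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  minReplicas: 1                       # never scale below this
  maxReplicas: 5                       # never scale above this
  targetCPUUtilizationPercentage: 50   # target average CPU, as a % of the CPU request
```

The command kubectl autoscale deployment nginx --cpu-percent=50 --min=1 --max=5 creates an equivalent object imperatively.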

Service Discovery

Programs in a container discover the access addresses of other applications (their Services) through environment variables or a DNS add-on;
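Both discovery mechanisms can be observed from a throwaway client Pod. This sketch assumes a Service named my-service already exists in the default namespace and that the cluster uses the default cluster.local domain; both names are hypothetical. busybox:1.28 is chosen because its nslookup behaves well for this kind of DNS check.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: discovery-client
spec:
  restartPolicy: Never
  containers:
  - name: client
    image: busybox:1.28
    command:
    - sh
    - -c
    - |
      # DNS: the cluster DNS add-on resolves <service>.<namespace>.svc.<cluster-domain>
      nslookup my-service.default.svc.cluster.local
      # Environment variables: injected only for Services that existed before this Pod started
      env | grep MY_SERVICE_
```

kubectl logs discovery-client then shows the resolved cluster IP and variables such as MY_SERVICE_SERVICE_HOST and MY_SERVICE_SERVICE_PORT. DNS is usually preferred, since environment variables are not updated for Services created after the Pod.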

Load Balancing

A set of Pod replicas is assigned a private cluster IP address, and requests to that address are load-balanced to the back-end containers, so that other Pods in the cluster can reach the application through it;
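A minimal ClusterIP Service sketch, assuming the back-end Pods carry the hypothetical label app: web and listen on port 80:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-svc              # hypothetical name
spec:
  type: ClusterIP            # default type: a virtual IP reachable only inside the cluster
  selector:
    app: web                 # every ready Pod with this label becomes a back end
  ports:
  - protocol: TCP
    port: 80                 # port on the cluster IP
    targetPort: 80           # container port on the selected Pods
```

kube-proxy on each node forwards traffic sent to the cluster IP to one of the matching Pods; kubectl get endpoints web-svc lists the Pod addresses currently behind it.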

Rolling Update

Services are updated without interruption, one Pod at a time, instead of replacing the entire service at once;
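In a Deployment, this behavior is controlled by the update strategy. The fragment below is not a complete manifest and its numbers are illustrative: it sketches a rolling update that adds at most one new Pod at a time and never lets the number of available Pods drop below the desired count.

```yaml
# Fragment of a Deployment spec (not a complete manifest).
spec:
  replicas: 3
  strategy:
    type: RollingUpdate      # the default strategy for Deployments
    rollingUpdate:
      maxSurge: 1            # at most 1 Pod above the desired count during the update
      maxUnavailable: 0      # never fewer than 3 available Pods
```

An update is triggered by changing the Pod template, for example with kubectl set image, and its progress can be followed with kubectl rollout status.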

Service Orchestration

Services are deployed from descriptive manifest files, which makes application deployment more efficient;

Resource Monitoring

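The Node components integrate the cAdvisor resource collection tool (built into the kubelet). Heapster aggregates the resource data of the cluster nodes and stores it in the InfluxDB time-series database, and Grafana displays it. (Heapster has since been retired; newer clusters use metrics-server for core resource metrics.)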

Authentication and Authorization

Supports Attribute-Based Access Control (ABAC) and Role-Based Access Control (RBAC) authorization policies;
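A minimal RBAC sketch following the common Role/RoleBinding pattern: a Role granting read-only access to Pods in the default namespace, bound to a hypothetical user jane. It assumes the API server runs with RBAC authorization enabled, which kubeadm configures by default.

```yaml
# Role: read-only access to Pods in the "default" namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: [""]            # "" is the core API group, where Pods live
  resources: ["pods"]
  verbs: ["get", "watch", "list"]
---
# Bind the Role to the hypothetical user "jane" in the same namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: default
subjects:
- kind: User
  name: jane
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

An administrator can check the result with kubectl auth can-i list pods --as jane -n default. A ClusterRole with a ClusterRoleBinding grants the same kind of permissions cluster-wide, as the Dashboard admin-user setup later in this article does.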

Kubernetes Basic Object Concepts

Pod

The Pod is the smallest deployable unit. A Pod contains one or more containers that share storage and network and run on the same host.

Service

A Service is an abstraction of an application service: it defines a logical set of Pods and a policy for accessing them. Externally, the Service acts as the single access entry for that set of Pods. It is assigned a cluster IP address, and requests to that IP are load-balanced to the containers in the back-end Pods. A Service selects its set of Pods through a label selector;

Volume

A data volume holds data shared by the containers in a Pod;

Namespace

Namespaces logically divide objects into separate groups, so that different projects or users can manage them separately and control policies can be applied per namespace to achieve multi-tenancy. Namespaces are also called virtual clusters;
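For example, the hypothetical manifest below creates a namespace team-a and attaches a ResourceQuota to it, one common control policy for multi-tenancy. The limits are illustrative values only.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
---
# Caps the total resources that all objects in team-a may consume.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-a-quota
  namespace: team-a
spec:
  hard:
    pods: "10"               # at most 10 Pods in the namespace
    requests.cpu: "2"        # sum of CPU requests
    requests.memory: 4Gi     # sum of memory requests
    limits.cpu: "4"          # sum of CPU limits
    limits.memory: 8Gi       # sum of memory limits
```

Objects are placed in the namespace with metadata.namespace: team-a or kubectl -n team-a, and kubectl describe quota -n team-a shows usage against the limits. Once CPU and memory quotas exist, Pods in that namespace must declare requests and limits (or receive defaults from a LimitRange) to be admitted.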

Label

Labels are key/value pairs used to identify and distinguish objects (such as Pods and Services). An object can have multiple labels, and objects are associated with one another through labels.

ReplicaSet

The next-generation Replication Controller (RC): a ReplicaSet (RS) ensures that the specified number of Pod replicas is running at any given time. The only difference between RC and RS is label selector support: RS supports the newer set-based selectors, while RC supports only equality-based selectors;
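To make the selector difference concrete, here is a sketch of a ReplicaSet (names and labels are hypothetical) that combines an equality-based matchLabels entry with a set-based matchExpressions entry; a ReplicationController could express only the first kind.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend
spec:
  replicas: 3
  selector:                  # all requirements below must hold (logical AND)
    matchLabels:             # equality-based: app == guestbook
      app: guestbook
    matchExpressions:        # set-based: tier in (frontend, cache)
    - key: tier
      operator: In
      values: ["frontend", "cache"]
  template:
    metadata:
      labels:                # must satisfy every selector requirement above
        app: guestbook
        tier: frontend
    spec:
      containers:
      - name: web
        image: nginx:1.14
```

Other set-based operators are NotIn, Exists and DoesNotExist. In practice this object would usually be created indirectly through a Deployment.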

Deployment

A Deployment is a higher-level API object that manages ReplicaSets and Pods and provides declarative updates. The official recommendation is to manage ReplicaSets through Deployments rather than using them directly, which means you may never need to manipulate ReplicaSet objects yourself.

StatefulSet

A StatefulSet is suited to stateful applications that need unique, stable network identifiers (a fixed Pod name and DNS hostname rather than a fixed IP), persistent storage, and ordered deployment, scaling, deletion, and rolling updates;
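A minimal sketch, assuming the cluster has a default StorageClass (or matching PersistentVolumes) to satisfy the volume claims; all names are hypothetical. The headless Service (clusterIP: None) gives each Pod a stable DNS name, and volumeClaimTemplates gives each Pod its own persistent volume.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-headless
spec:
  clusterIP: None            # headless: DNS records per Pod instead of one virtual IP
  selector:
    app: web-sts
  ports:
  - port: 80
    name: web
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: web-headless  # the headless Service that provides the Pod DNS names
  replicas: 2
  selector:
    matchLabels:
      app: web-sts
  template:
    metadata:
      labels:
        app: web-sts
    spec:
      containers:
      - name: nginx
        image: nginx:1.14
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:      # one PersistentVolumeClaim is created per Pod
  - metadata:
      name: www
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 1Gi
```

The Pods are created in order as web-0 and then web-1, are reachable as web-0.web-headless.default.svc.cluster.local and so on (assuming the default cluster.local domain), and each keeps its own claim (www-web-0, www-web-1) when rescheduled.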

DaemonSet

The DaemonSet ensures that all (or some) nodes run the same Pod; when a node joins the Kubernetes cluster, the Pod is scheduled to run on that node, and when a node is removed from the cluster, the Pod of the DaemonSet is deleted; deleting the DaemonSet cleans up all the pods it creates;
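A minimal DaemonSet sketch (the name node-logger and its command are hypothetical placeholders) that runs one BusyBox Pod per node with read-only access to that node's /var/log. The toleration lets it also run on the master, which kubeadm taints with node-role.kubernetes.io/master:NoSchedule in the version used here (newer releases use node-role.kubernetes.io/control-plane). Adding a nodeSelector to the Pod template would restrict it to some nodes instead of all.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-logger
  namespace: kube-system
spec:
  selector:
    matchLabels:
      name: node-logger
  template:
    metadata:
      labels:
        name: node-logger
    spec:
      tolerations:
      - key: node-role.kubernetes.io/master   # allow scheduling on the kubeadm master
        operator: Exists
        effect: NoSchedule
      containers:
      - name: logger
        image: busybox
        # Placeholder workload: list the node's log directory once a minute.
        command: ["sh", "-c", "while true; do ls /var/log; sleep 60; done"]
        volumeMounts:
        - name: varlog
          mountPath: /var/log
          readOnly: true
      volumes:
      - name: varlog
        hostPath:
          path: /var/log     # the node's own /var/log directory
```

Log collectors and node monitoring agents are typical real workloads for this pattern.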

Job

A Job runs a one-off task: its Pods run to completion and are not restarted after they succeed. To run tasks on a schedule, a CronJob creates Jobs periodically.
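A minimal Job sketch; the name one-off-task and its command are hypothetical placeholders.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: one-off-task
spec:
  backoffLimit: 3            # retry failed Pods up to 3 times before marking the Job failed
  template:
    spec:
      restartPolicy: Never   # a Job's Pods must use Never or OnFailure
      containers:
      - name: task
        image: busybox
        command: ["sh", "-c", "echo running one-off task; date"]
```

kubectl get jobs shows whether it completed and kubectl logs job/one-off-task prints its output. A scheduled variant wraps the same Pod template in a CronJob, which is served as batch/v1beta1 in v1.15 and as batch/v1 from v1.21.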

Kubernetes Components

Master components:

kube-apiserver

Exposes the Kubernetes API over HTTP. It is the unified entry point to the cluster and the coordinator of all components. All create, delete, update, query, and watch operations on object resources go through the API server before being persisted to etcd.

kube-controller-manager

Runs the routine background tasks of the cluster. Each resource type has a controller, and the Controller Manager is responsible for managing these controllers;

kube-scheduler

Selects a Node for each newly created Pod according to its scheduling algorithm;

Node components:

kubelet

The kubelet is the Master's agent on each Node. It manages the life cycle of the containers running on that node, such as creating containers, mounting data volumes for Pods, downloading Secrets, and reporting container and node status. The kubelet turns each Pod into a set of containers.

kube-proxy

Implements the Pod network proxy on each Node, maintaining network rules and layer-4 (transport-layer) load balancing;

Docker or rkt (Rocket)

Runs the containers;

Third-party services:

etcd

Distributed key-value storage system; used to maintain cluster state, such as Pod, Service, and other object information;

Optional components:

kube-dns

Responsible for providing DNS services to the entire cluster

Ingress Controller

Provides access to Services from external networks

Heapster

Provide resource monitoring

Dashboard

Provide GUI

Federation

Manages multiple clusters, for example across availability zones

Fluentd-elasticsearch

Provide cluster log collection, storage, and query

Kubernetes Cluster Plan

| Role | IP / CIDR | Components |
|---|---|---|
| master | 192.168.0.201 | etcd kube-apiserver kube-controller-manager kube-scheduler |
| node01 | 192.168.0.202 | kubelet kube-proxy docker |
| node02 | 192.168.0.203 | kubelet kube-proxy docker |
| Pod Network | 10.244.0.0/16 | |
| Service Network | 10.96.0.0/12 | |

1. Configure the yum Repositories
2. Install Docker, kubelet, kubeadm and kubectl on All Nodes
3. Initialize the Master Node
4. Install Flannel
5. Join the Nodes to the Cluster

Configure the yum Repositories

# Enter the yum repo directory
cd /etc/yum.repos.d/
# Download the Docker CE repo file
wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# Write the Kubernetes repo file
vim /etc/yum.repos.d/kubernetes.repo
[Kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
enabled=1
# Check that the repositories are available
yum repolist

Install Docker, kubelet, kubeadm and kubectl on All Nodes

# Install the required packages with yum
yum -y install docker-ce kubelet kubeadm kubectl
# Start the Docker service
systemctl start docker
# Start Docker and kubelet automatically at boot
systemctl enable docker
systemctl enable kubelet

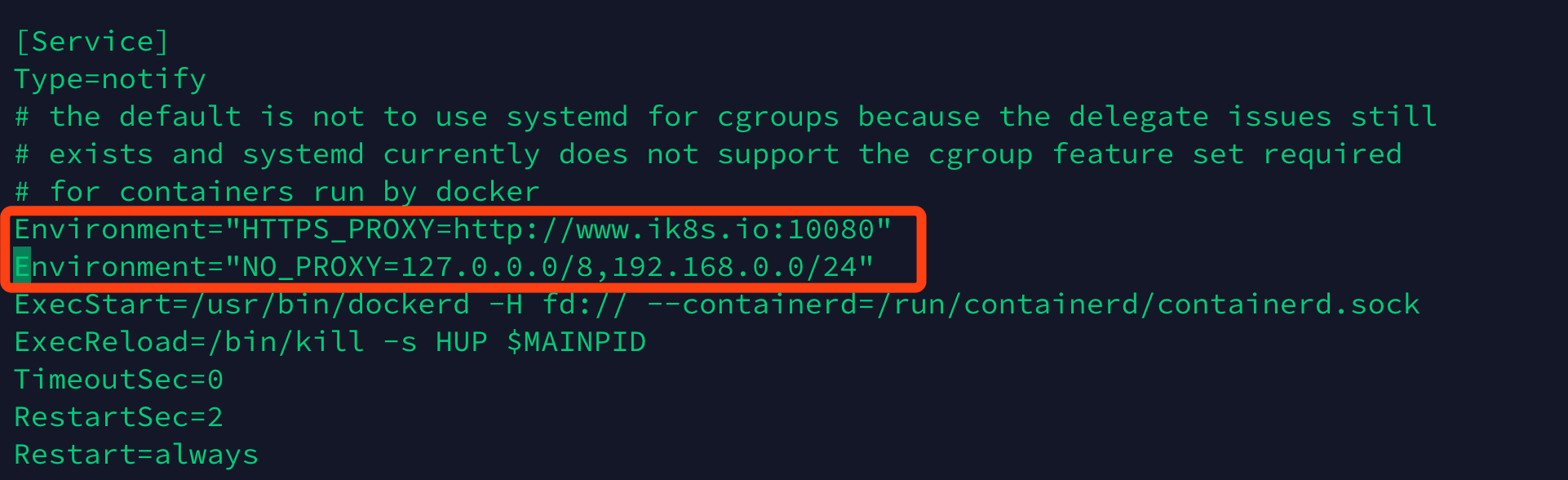

Before starting Docker, readers in mainland China, where Google's image registries cannot be reached directly, are advised to set an HTTPS proxy for the Docker daemon as follows:

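vim /usr/lib/systemd/system/docker.service
# Add under the [Service] section:
Environment="HTTPS_PROXY=http://www.ik8s.io:10080"
Environment="NO_PROXY=127.0.0.0/8,192.168.0.0/24"
# Reload systemd and restart Docker
systemctl daemon-reload
systemctl restart docker
# Confirm that the proxy settings appear in the output
docker info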

# Check bridge-nf (both files should contain 1)
cat /proc/sys/net/bridge/bridge-nf-call-ip6tables
1
cat /proc/sys/net/bridge/bridge-nf-call-iptables
1

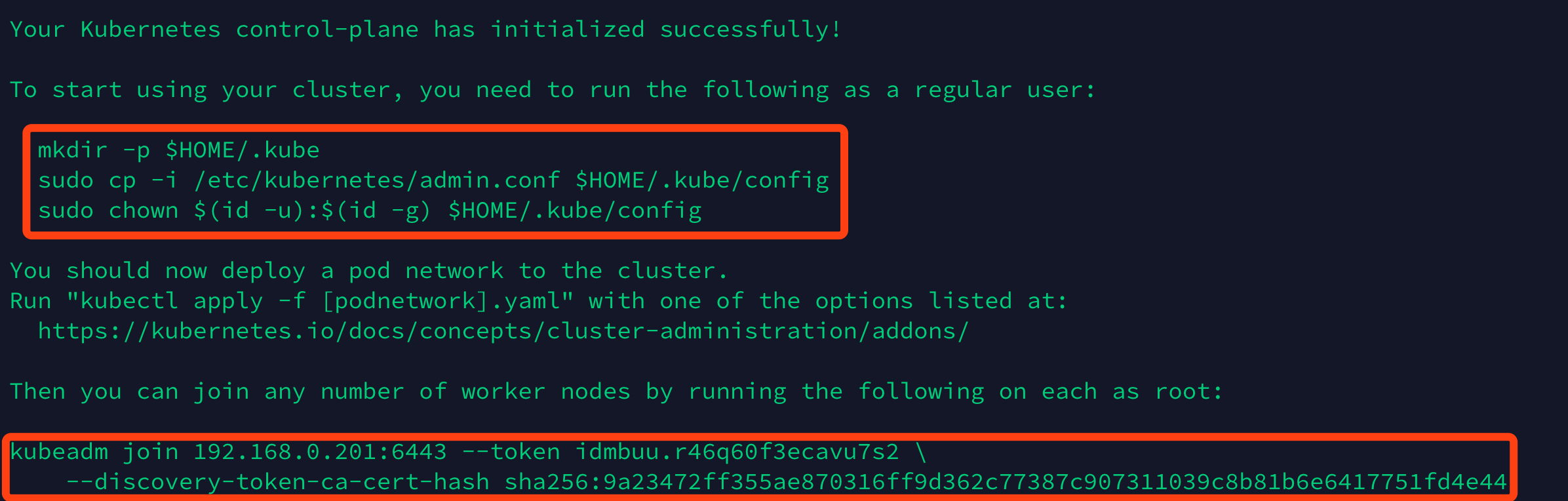

Master Node Initialization

# Configure the kubelet to ignore the swap error
vim /etc/sysconfig/kubelet
KUBELET_EXTRA_ARGS="--fail-swap-on=false"
# Initialize the master node
kubeadm init --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap
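The same settings can alternatively be sketched as a kubeadm configuration file instead of command-line flags. This assumes kubeadm v1.15, which reads the kubeadm.k8s.io/v1beta2 API (later releases use newer API versions); the file name kubeadm-config.yaml is a placeholder. The KubeletConfiguration document replaces the --fail-swap-on=false setting.

```yaml
# Hypothetical kubeadm-config.yaml mirroring the init flags above.
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
networking:
  podSubnet: 10.244.0.0/16      # same as --pod-network-cidr; must match Flannel's network
  serviceSubnet: 10.96.0.0/12   # same as --service-cidr
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
failSwapOn: false               # same effect as --fail-swap-on=false
```

It would be used as kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=Swap. Keeping the settings in a file makes the initialization repeatable and easy to keep under version control.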

#Set mkdir-p $HOME/.kube based on the prompts given when initialization is complete (it is recommended that ordinary users be created to operate on)

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config#View cluster health kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}#View cluster node information kubectl get node

NAME STATUS ROLES AGE VERSION

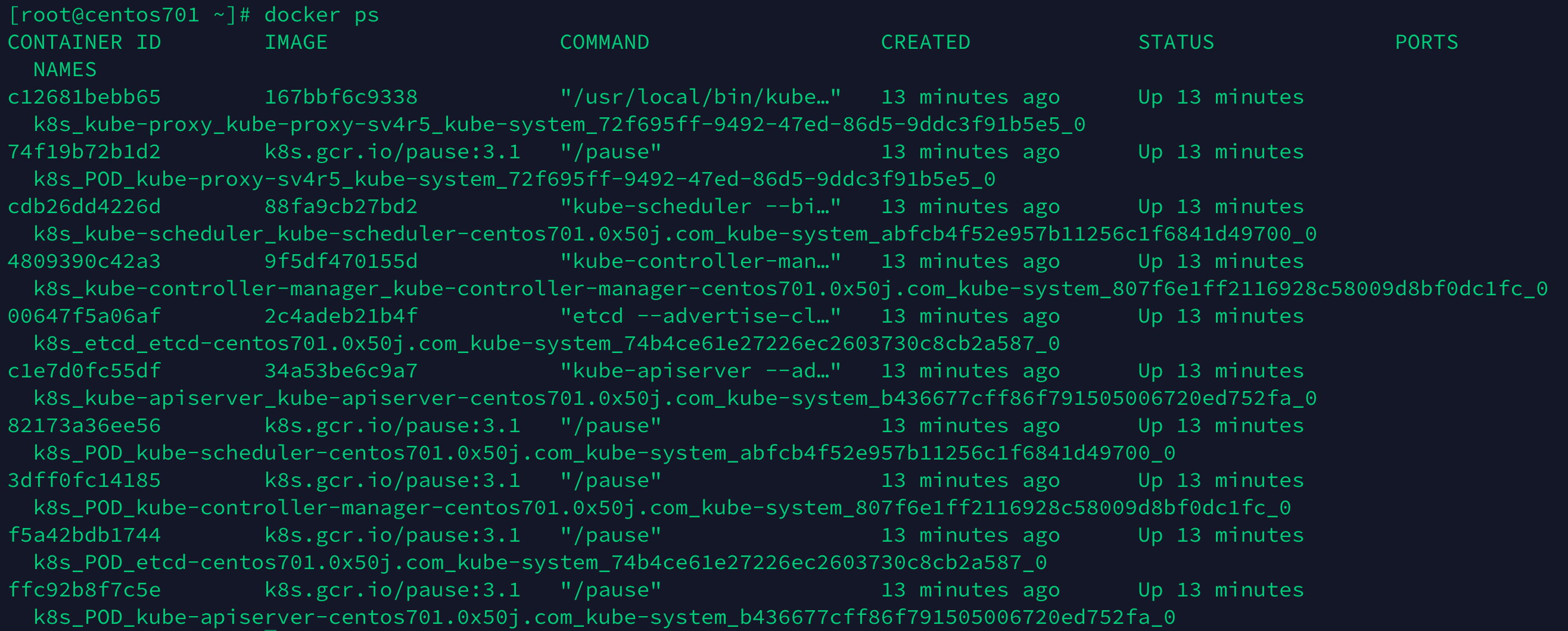

centos701.0x50j.com NotReady master 9m52s v1.15.2#View docker container docker ps

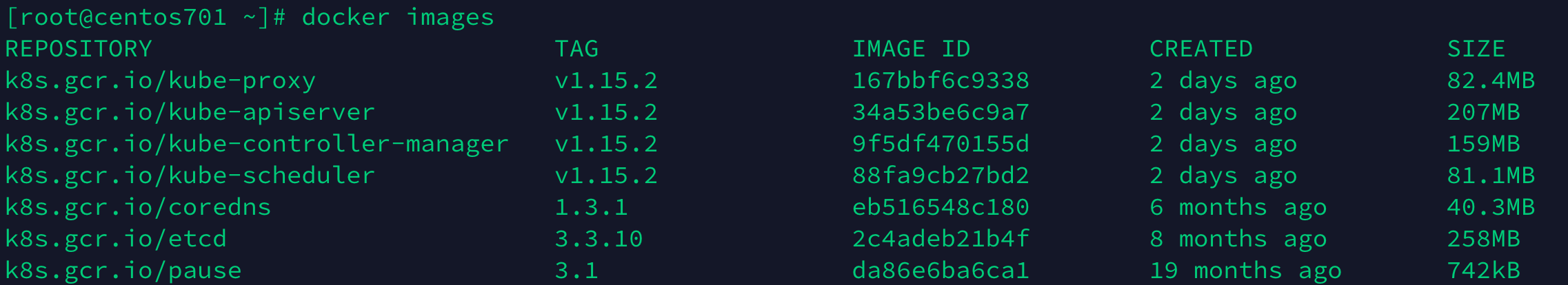

#View docker mirror docker images

NoReady should be shown because flannel has not been installed yet

Flannel Installation

# Apply the Flannel manifest (the required images are pulled automatically)
kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
# View the Pods' network information
kubectl get pods -n kube-system -o wide
# Check whether the node status is Ready
kubectl get node
NAME                  STATUS   ROLES    AGE   VERSION
centos701.0x50j.com   Ready    master   27m   v1.15.2

Join the Nodes to the Cluster

# Join the cluster (run on each node)
kubeadm join [Master IP address]:6443 --token [token value] --discovery-token-ca-cert-hash [certificate hash value] --ignore-preflight-errors=Swap
# Get node information on the master node
kubectl get node
NAME                  STATUS   ROLES    AGE   VERSION
centos701.0x50j.com   Ready    master   15h   v1.15.2
centos702.0x50j.com   Ready    <none>   15h   v1.15.2
centos703.0x50j.com   Ready    <none>   15h   v1.15.2

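The token and certificate hash values are printed when the cluster is initialized with kubeadm init. Tokens expire after 24 hours by default; running kubeadm token create --print-join-command on the master prints a fresh join command.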

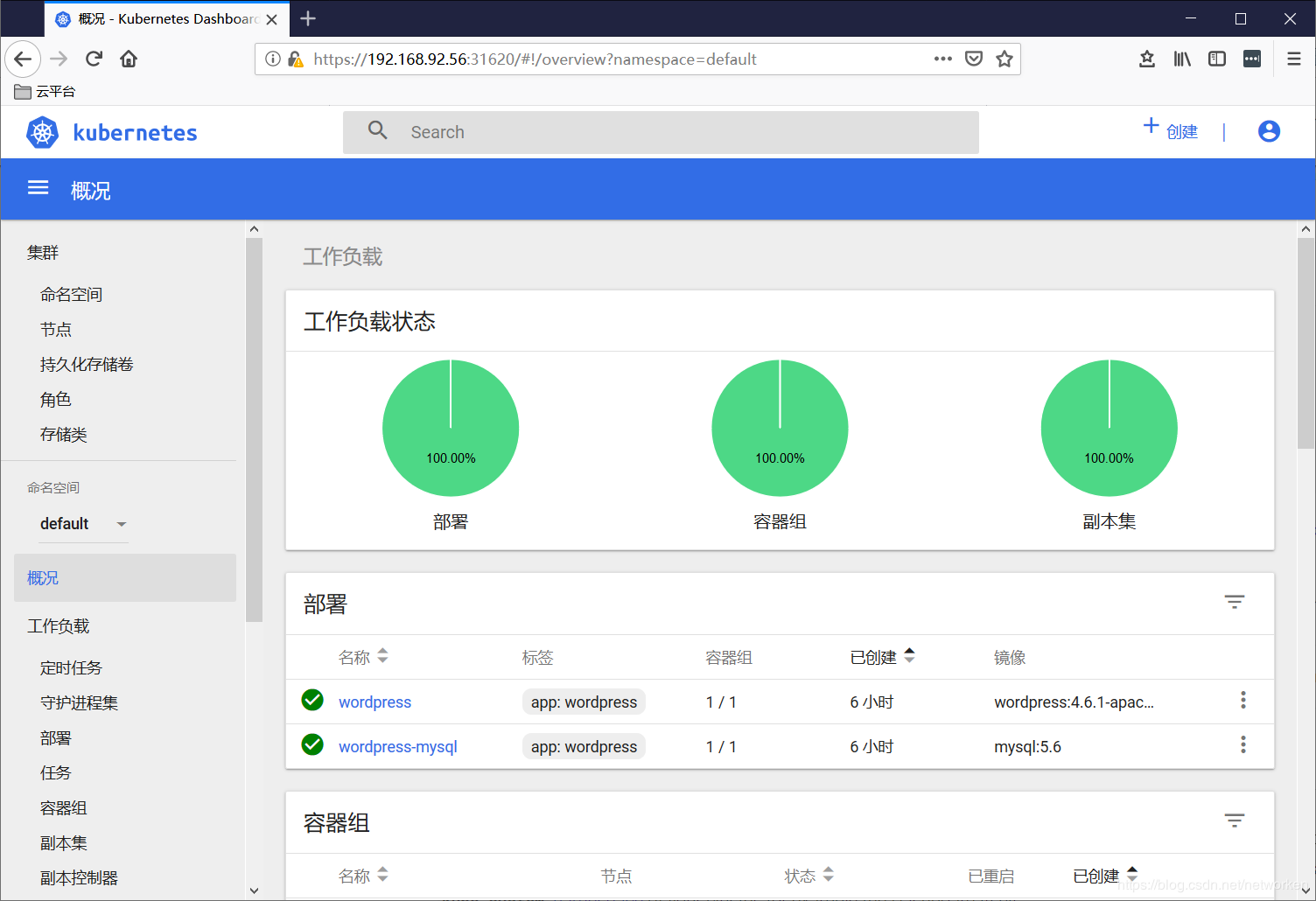

Dashboard Visualization Plugin

# Download the YAML file locally
wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml
# Edit the Service so that the Dashboard can be reached from outside the cluster
vim kubernetes-dashboard.yaml
......
---
# ------------------- Dashboard Service ------------------- #
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort        # added: type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 31620   # added: nodePort: 31620
  selector:
    k8s-app: kubernetes-dashboard
# Change the image so that it is pulled from the Alibaba Cloud registry
vim kubernetes-dashboard.yaml
......
      containers:
      - name: kubernetes-dashboard
        #image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1
        ports:
......
# Deploy the Dashboard
kubectl create -f kubernetes-dashboard.yaml

When the Pod status is Running, the Dashboard has been deployed successfully:

kubectl get pod --namespace=kube-system -o wide | grep dashboard

Dashboard creates its own Deployment and Service in the kube-system namespace

kubectl get deployment kubernetes-dashboard --namespace=kube-system

kubectl get service kubernetes-dashboard --namespace=kube-system

Then open https://[host_ip]:31620/#!/login in a browser.

#Create dashboard-adminuser.yaml to get Tokenvim dashboard-adminuser.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kube-system---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

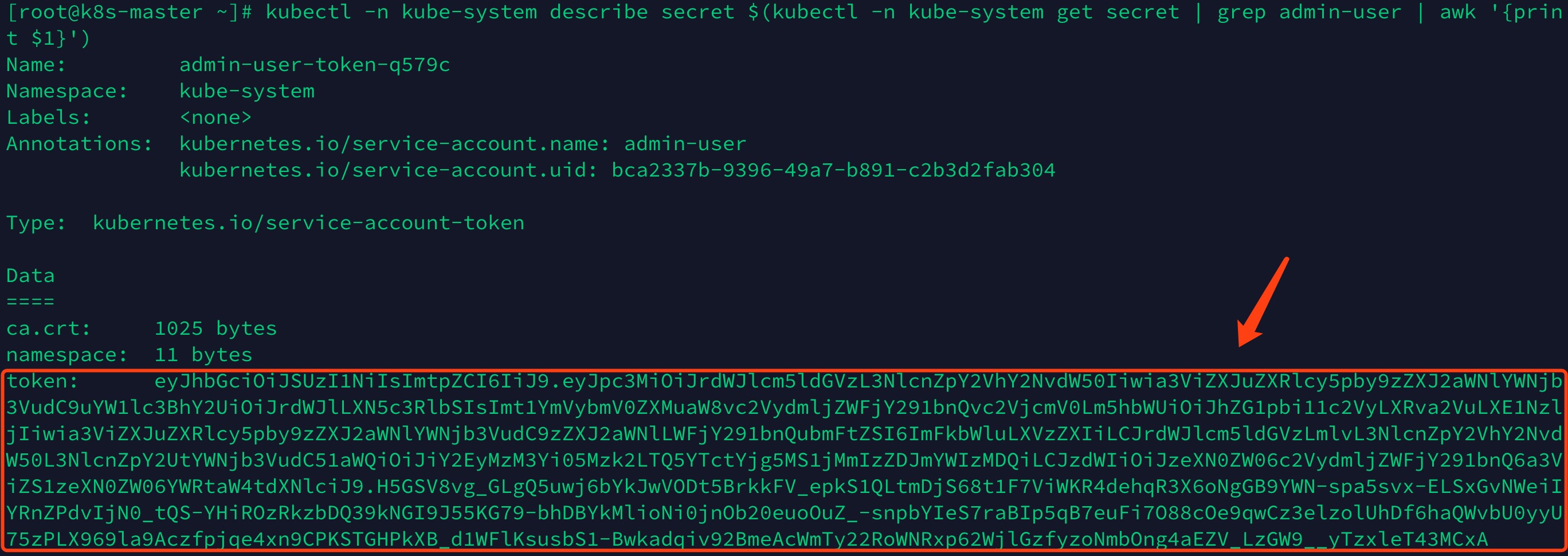

namespace: kube-system#implement yaml file kubectl create -f dashboard-adminuser.yaml#View tokenkubectl-n kube-system describe secret $(kubectl-n kube-system get secret | grep admin-user | awk'{print $1}') for admin-user accountLog in to the Dashboard panel with the obtained Token value

Basic Kubernetes Usage

# Query node information
kubectl describe node [node name]
# View cluster information
kubectl cluster-info
# View the created Pods
kubectl get pods -o wide
# View the created Services
kubectl get services -o wide
# View a Pod's details, including its network information
kubectl describe pods [Pod name]
# View a Service's details, including its network information
kubectl describe service [Service name]

# Run a Pod
kubectl run NAME --image=image [--env="key=value"] [--port=port] [--replicas=replicas] [--dry-run=bool] [--overrides=inline-json] [--command] -- [COMMAND] [args...] [options]
# Example: run Nginx
kubectl run nginx --image=nginx:1.14 --port=80 --replicas=1
# Example: run BusyBox interactively
kubectl run client --image=busybox --replicas=1 -it --restart=Never

--image specifies the image to run

--port specifies the port to expose

--replicas specifies the number of replicas to create
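For comparison, here is a declarative sketch of roughly what kubectl run nginx --image=nginx:1.14 --port=80 --replicas=1 created with kubectl v1.15: a Deployment whose Pods carry the label run: nginx. It is an approximation, not kubectl's exact output. From kubectl v1.18 onward, kubectl run creates only a bare Pod, and kubectl create deployment is the imperative way to create a Deployment.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    run: nginx
spec:
  replicas: 1                # --replicas=1
  selector:
    matchLabels:
      run: nginx             # must match the Pod template labels below
  template:
    metadata:
      labels:
        run: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14    # --image=nginx:1.14
        ports:
        - containerPort: 80  # --port=80
```

Applying this file with kubectl apply -f, then editing replicas or the image in the file and applying it again, is the declarative counterpart of the scale and set image commands in this section.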

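# Delete a Pod
kubectl delete ([-f FILENAME] | [-k DIRECTORY] | TYPE [(NAME | -l label | --all)])
# Example (a Pod owned by a Deployment is recreated automatically; delete the Deployment to remove it for good)
kubectl delete pods nginx-7c45b84548-7bnr6

TYPE specifies the resource type to delete, such as services or pods

NAME specifies the resource name

-l specifies a label selector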

# Create a Service
kubectl expose (-f FILENAME | TYPE NAME) [--port=port] [--protocol=TCP|UDP|SCTP] [--target-port=number-or-name] [--name=name] [--external-ip=external-ip-of-service] [--type=type] [options]
# Example:
kubectl expose deployment nginx --name=nginx-service --port=8081 --target-port=80 --protocol=TCP

deployment nginx specifies the Deployment whose Pods the Service exposes

--name specifies the Service name

--port specifies the port the Service listens on

--target-port specifies the internal port (the port exposed by the Pod's container)

--protocol specifies the protocol

# Scale the number of Pods up or down
kubectl scale [--resource-version=version] [--current-replicas=count] --replicas=COUNT (-f FILENAME | TYPE NAME)
# Example:
kubectl scale --replicas=0 deployment myapp

--replicas specifies the desired number of replicas

# Rolling upgrade
kubectl set image (-f FILENAME | TYPE NAME) CONTAINER_NAME_1=CONTAINER_IMAGE_1
# Example:
kubectl set image deployment nginx-web nginx-web=nginx:1.10
# Roll back to the previous version
kubectl rollout undo deployment [Deployment name]
# View the rollout status
kubectl rollout status deployment [Deployment name]

CONTAINER_NAME_1 is the name of the container in the Pod

CONTAINER_IMAGE_1 is the image to upgrade to

# Add labels
kubectl label [--overwrite] (-f FILENAME | TYPE NAME) KEY_1=VAL_1 ... KEY_N=VAL_N [--resource-version=version]
# Example:
kubectl label pods test-pod release=canary

TYPE specifies the resource type, such as svc or pods

NAME specifies the resource name

KEY specifies the label key

VAL specifies the label value

Add --show-labels to a get command to display labels, for example kubectl get pods --show-labels