Summary:

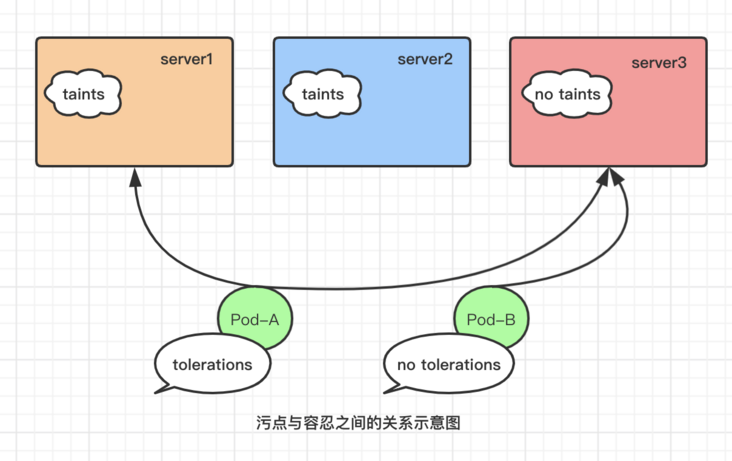

Taints are key-value attributes defined on a node. They make the node refuse to accept Pods unless the Pod tolerates the node's taints. Tolerations are key-value attributes defined on a Pod object that declare which node taints the Pod can tolerate. The scheduler can place a Pod only on nodes whose taints the Pod tolerates, as shown in the figure.

- Whether a Pod can be scheduled to a node depends on two questions:

- Does the node have any taints?

- If the node has taints, can the Pod tolerate them?

Taints and tolerations

A taint is defined in the node's spec, while a toleration is defined in the Pod's spec (podSpec). Both are key-value data, and both also carry an effect. The syntax is key=value:effect. The usage and format of key and value are similar to those of resource annotations, and effect defines how strongly the node repels Pod objects. There are three effects:

- NoSchedule

New Pod objects that cannot tolerate this taint cannot be scheduled to the current node. This is a hard constraint. Pod objects already running on the node are not affected.

- PreferNoSchedule

The soft version of NoSchedule: new Pod objects that cannot tolerate this taint should be kept off the current node where possible, but the node may still accept them when no other node is available for scheduling. Pod objects already running on the node are not affected.

- NoExecute

New Pod objects that cannot tolerate this taint cannot be scheduled to the current node. This is a hard constraint. In addition, when a Pod object already on the node stops matching because the node's taints or the Pod's tolerations change, that Pod object is evicted.
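As a rough sketch of how the key=value:effect syntax maps onto the node's spec, the taint `dedicated=special-user:NoSchedule` (borrowed from the `kubectl taint` help text quoted later in this article) would appear roughly as follows. The node name `foo` is a placeholder, and only the relevant fields are shown.

```yaml
# Fragment of a Node object; name and taint values are illustrative placeholders.
apiVersion: v1
kind: Node
metadata:
  name: foo
spec:
  taints:
  - key: dedicated        # taint key
    value: special-user   # taint value (optional)
    effect: NoSchedule    # NoSchedule, PreferNoSchedule, or NoExecute
```

In practice taints are usually added with `kubectl taint` rather than by editing the Node object directly.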

A toleration on a Pod object supports two operators. Equal is an equality comparison: the toleration and the taint must match exactly on key, value, and effect. Exists is an existence check: the key and effect must match exactly, and the value field of the toleration must be left empty.
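The two operators might look like this in a Pod spec. This is a minimal sketch: the keys and values (`dedicated`, `special-user`, `diskfull`) are reused from the examples in this article purely for illustration.

```yaml
# Pod spec fragment; keys and values are illustrative placeholders.
tolerations:
# Equal: key, value, and effect must all match the taint
# dedicated=special-user:NoSchedule.
- key: dedicated
  operator: Equal
  value: special-user
  effect: NoSchedule
# Exists: only key and effect are compared; value is left unset.
- key: diskfull
  operator: Exists
  effect: NoExecute
```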

Pod scheduling sequence

A node can carry multiple taints, and a Pod object can carry multiple tolerations. When the two are checked against each other, the following logic applies.

- First, set aside every taint that has a matching toleration; the remaining unmatched taints decide the outcome

- If any unmatched taint uses the NoSchedule effect, the Pod object is not scheduled to this node

- If no unmatched taint uses NoSchedule but at least one uses PreferNoSchedule, the scheduler tries to avoid placing the Pod object on this node

- If at least one unmatched taint uses the NoExecute effect, the Pod object is evicted from the node if it is already running there, and is not scheduled to the node otherwise. In addition, even when a toleration matches a NoExecute taint, if that toleration sets a time limit with the tolerationSeconds attribute, the Pod object is evicted from the node once the time limit expires.
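The time-limited case in the last rule can be sketched as follows. The taint `diskfull=true:NoExecute` is the one used in Example 2 of this article; the 300-second limit is an arbitrary value chosen for illustration.

```yaml
# Pod spec fragment; the 300-second limit is an illustrative assumption.
tolerations:
- key: diskfull
  operator: Equal
  value: "true"            # quoted so YAML treats it as a string, not a boolean
  effect: NoExecute
  tolerationSeconds: 300   # tolerate the taint for 300 s, then the Pod is evicted
```

Without tolerationSeconds, a matching NoExecute toleration lets the Pod stay on the node indefinitely.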

In Kubernetes clusters deployed with kubeadm, the master node is tainted automatically so that Pod objects that cannot tolerate the taint are not scheduled to it. As a result, Pod objects created manually by users are not scheduled to the master unless a toleration for this taint is added deliberately.

Example 1: schedule a Pod to the master by tolerating the master:NoSchedule taint

    [root@k8s-master Scheduler]# kubectl describe node k8s-master.org   # View the master's taints
    ...
    Taints:             node-role.kubernetes.io/master:NoSchedule
    Unschedulable:      false

    [root@k8s-master Scheduler]# cat tolerations-daemonset-demo.yaml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: daemonset-demo
      namespace: default
      labels:
        app: prometheus
        component: node-exporter
    spec:
      selector:
        matchLabels:
          app: prometheus
          component: node-exporter
      template:
        metadata:
          name: prometheus-node-exporter
          labels:
            app: prometheus
            component: node-exporter
        spec:
          tolerations:                            # Tolerate the master NoSchedule taint
          - key: node-role.kubernetes.io/master   # Taint key
            effect: NoSchedule                    # Effect
            operator: Exists                      # Match whenever the key exists
          containers:
          - image: prom/node-exporter:latest
            name: prometheus-node-exporter
            ports:
            - name: prom-node-exp
              containerPort: 9100
              hostPort: 9100

    [root@k8s-master Scheduler]# kubectl apply -f tolerations-daemonset-demo.yaml
    [root@k8s-master Scheduler]# kubectl get pod -o wide
    NAME                   READY   STATUS    RESTARTS   AGE     IP              NODE             NOMINATED NODE   READINESS GATES
    daemonset-demo-7fgnd   2/2     Running   0          5m15s   10.244.91.106   k8s-node2.org    <none>           <none>
    daemonset-demo-dmd47   2/2     Running   0          5m15s   10.244.70.105   k8s-node1.org    <none>           <none>
    daemonset-demo-jhzwf   2/2     Running   0          5m15s   10.244.42.29    k8s-node3.org    <none>           <none>
    daemonset-demo-rcjmv   2/2     Running   0          5m15s   10.244.59.16    k8s-master.org   <none>           <none>

Example 2: add the NoExecute effect to a node to evict all of its Pods

    [root@k8s-master Scheduler]# kubectl taint --help
    Update the taints on one or more nodes.

      * A taint consists of a key, value, and effect. As an argument here, it is expressed as key=value:effect.
      * The key must begin with a letter or number, and may contain letters, numbers, hyphens, dots, and underscores, up to 253 characters.
      * Optionally, the key can begin with a DNS subdomain prefix and a single '/', like example.com/my-app
      * The value is optional. If given, it must begin with a letter or number, and may contain letters, numbers, hyphens, dots, and underscores, up to 63 characters.
      * The effect must be NoSchedule, PreferNoSchedule or NoExecute.
      * Currently taint can only apply to node.

    Examples:
      # Update node 'foo' with a taint with key 'dedicated' and value 'special-user' and effect 'NoSchedule'.
      # If a taint with that key and effect already exists, its value is replaced as specified.
      kubectl taint nodes foo dedicated=special-user:NoSchedule

      # Remove from node 'foo' the taint with key 'dedicated' and effect 'NoSchedule' if one exists.
      kubectl taint nodes foo dedicated:NoSchedule-

      # Remove from node 'foo' all the taints with key 'dedicated'
      kubectl taint nodes foo dedicated-

      # Add a taint with key 'dedicated' on nodes having label mylabel=X
      kubectl taint node -l myLabel=X dedicated=foo:PreferNoSchedule

      # Add to node 'foo' a taint with key 'bar' and no value
      kubectl taint nodes foo bar:NoSchedule

    [root@k8s-master Scheduler]# kubectl get pod -o wide
    NAME                                         READY   STATUS    RESTARTS   AGE   IP               NODE         NOMINATED NODE   READINESS GATES
    daemonset-demo-7ghhd                         1/1     Running   0          23m   192.168.113.35   k8s-node1    <none>           <none>
    daemonset-demo-cjxd5                         1/1     Running   0          23m   192.168.12.35    k8s-node2    <none>           <none>
    daemonset-demo-lhng4                         1/1     Running   0          23m   192.168.237.4    k8s-master   <none>           <none>
    daemonset-demo-x5nhg                         1/1     Running   0          23m   192.168.51.54    k8s-node3    <none>           <none>
    pod-antiaffinity-required-697f7d764d-69vx4   0/1     Pending   0          8s    <none>           <none>       <none>           <none>
    pod-antiaffinity-required-697f7d764d-7cxp2   1/1     Running   0          8s    192.168.51.55    k8s-node3    <none>           <none>
    pod-antiaffinity-required-697f7d764d-rpb5r   1/1     Running   0          8s    192.168.12.36    k8s-node2    <none>           <none>
    pod-antiaffinity-required-697f7d764d-vf2x8   1/1     Running   0          8s    192.168.113.36   k8s-node1    <none>           <none>

- Taint k8s-node3 with the NoExecute effect to evict all Pods running on that node

    [root@k8s-master Scheduler]# kubectl taint node k8s-node3 diskfull=true:NoExecute
    node/k8s-node3 tainted
    [root@k8s-master Scheduler]# kubectl describe node k8s-node3
    ...
    CreationTimestamp:  Sun, 29 Aug 2021 22:45:43 +0800
    Taints:             diskfull=true:NoExecute

- All Pods on k8s-node3 have been evicted. Because the pod-antiaffinity-required Pods are defined to allow only one Pod of the same kind per node, the replacement Pod stays Pending and is not created on another node

    [root@k8s-master Scheduler]# kubectl get pod -o wide
    NAME                                         READY   STATUS    RESTARTS   AGE     IP               NODE         NOMINATED NODE   READINESS GATES
    daemonset-demo-7ghhd                         1/1     Running   0          31m     192.168.113.35   k8s-node1    <none>           <none>
    daemonset-demo-cjxd5                         1/1     Running   0          31m     192.168.12.35    k8s-node2    <none>           <none>
    daemonset-demo-lhng4                         1/1     Running   0          31m     192.168.237.4    k8s-master   <none>           <none>
    pod-antiaffinity-required-697f7d764d-69vx4   0/1     Pending   0          7m45s   <none>           <none>       <none>           <none>
    pod-antiaffinity-required-697f7d764d-l86td   0/1     Pending   0          6m5s    <none>           <none>       <none>           <none>
    pod-antiaffinity-required-697f7d764d-rpb5r   1/1     Running   0          7m45s   192.168.12.36    k8s-node2    <none>           <none>
    pod-antiaffinity-required-697f7d764d-vf2x8   1/1     Running   0          7m45s   192.168.113.36   k8s-node1    <none>           <none>

- Remove the taint; Pods are then created on k8s-node3 again

    [root@k8s-master Scheduler]# kubectl taint node k8s-node3 diskfull-
    node/k8s-node3 untainted
    [root@k8s-master Scheduler]# kubectl get pod -o wide
    NAME                                         READY   STATUS              RESTARTS   AGE    IP               NODE         NOMINATED NODE   READINESS GATES
    daemonset-demo-7ghhd                         1/1     Running             0          34m    192.168.113.35   k8s-node1    <none>           <none>
    daemonset-demo-cjxd5                         1/1     Running             0          34m    192.168.12.35    k8s-node2    <none>           <none>
    daemonset-demo-lhng4                         1/1     Running             0          34m    192.168.237.4    k8s-master   <none>           <none>
    daemonset-demo-m6g26                         0/1     ContainerCreating   0          4s     <none>           k8s-node3    <none>           <none>
    pod-antiaffinity-required-697f7d764d-69vx4   0/1     ContainerCreating   0          10m    <none>           k8s-node3    <none>           <none>
    pod-antiaffinity-required-697f7d764d-l86td   0/1     Pending             0          9m1s   <none>           <none>       <none>           <none>
    pod-antiaffinity-required-697f7d764d-rpb5r   1/1     Running             0          10m    192.168.12.36    k8s-node2    <none>           <none>
    pod-antiaffinity-required-697f7d764d-vf2x8   1/1     Running             0          10m    192.168.113.36   k8s-node1    <none>           <none>

Reference documents: