01 Preface

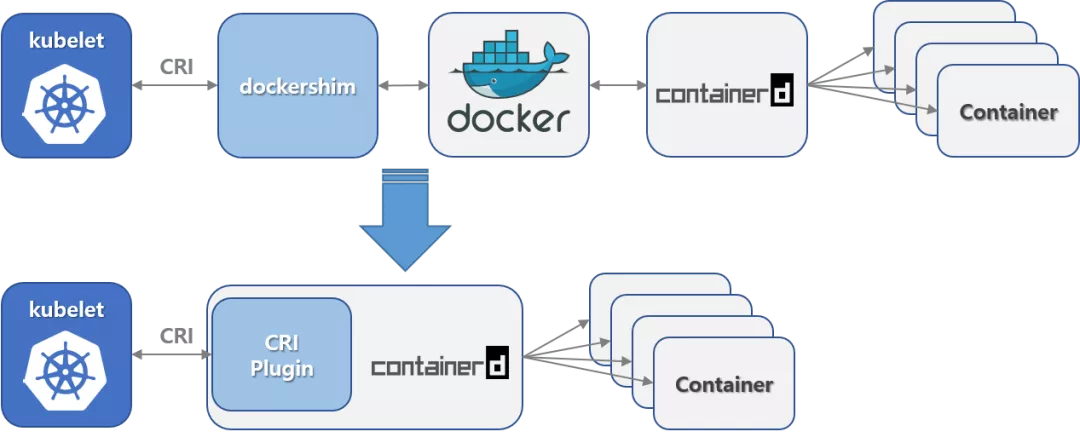

Kubernetes (hereinafter k8s) announced that Docker is deprecated as a container runtime starting with v1.20, and that the dockershim component will be removed completely in v1.23, expected at the end of 2021. Dockershim is a component built into the kubelet that translates CRI (Container Runtime Interface) calls into Docker API calls, which is what allows k8s to manage Docker through the CRI. Whenever Docker's functionality changes, the dockershim code has to be updated so that it can keep talking to Docker. Moreover, Docker itself runs on top of containerd, and containerd supports the CRI natively, so k8s can bypass Docker and talk to containerd directly through the CRI. This is why the k8s community decided to abandon dockershim.

containerd 1.0 already supported the CRI, but only through a separate component, cri-containerd: the kubelet called cri-containerd through the CRI interface, and cri-containerd in turn called containerd. In containerd 1.1 this design was reworked: CRI support became a plugin built into containerd itself, so the kubelet talks to containerd directly and the call chain is shorter. containerd 1.1 can be used as the container runtime for k8s 1.10 and later and supports all k8s features.

The following figure illustrates the call chain when Docker and when containerd is used as the container runtime. As it shows, if Docker was the runtime before, migrating to containerd is a relatively easy choice, and containerd offers better performance and lower overhead.

Next, we describe how to migrate the k8s container runtime from Docker to containerd, and the changes in day-to-day usage after the migration.

02 Migrating the k8s runtime from Docker to containerd

(1) Environment preparation

Operating system: SUSE 12 SP5

Kernel version: 4.12.14-120

K8s version: v1.14.0

Docker version: docker-ee-18.09.9

containerd version: 1.4.4

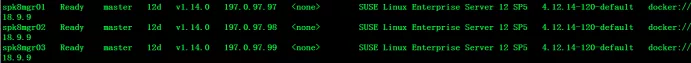

(2) View the runtime information of the current node

```shell
kubectl get node -o wide
```

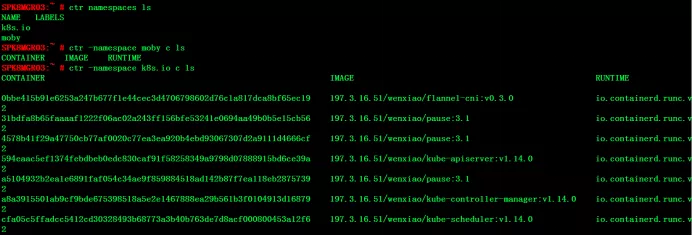

The CONTAINER-RUNTIME column shows that all nodes use Docker. Running systemctl status containerd shows that the containerd service is also started by default. List the containerd namespaces with the following command:

```shell
ctr namespaces list
```

The output includes a moby namespace, which is the namespace the Docker service uses by default.

```shell
ctr --namespace moby container list
```

The command above lists all containers in the moby namespace. As the figure below shows, the number of containers matches the output of docker ps.
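The count comparison can be scripted. This is a minimal Bash sketch, assuming ctr and docker are on the node's PATH; `CTR`, `DOCKER`, `count_lines` and `compare_moby_with_docker` are hypothetical names introduced here, and the two command variables exist only so the real tools can be replaced by stubs when testing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare the containers containerd tracks in the
# "moby" namespace with the containers reported by `docker ps`.
# CTR and DOCKER are overridable so the real tools can be stubbed in tests.
CTR=${CTR:-ctr}
DOCKER=${DOCKER:-docker}

# Print the number of non-empty lines on stdin (prints 0 for empty input).
count_lines() {
  grep -c . || true
}

compare_moby_with_docker() {
  local ctr_count docker_count
  # -q prints only container IDs, one per line
  ctr_count=$("$CTR" --namespace moby containers list -q | count_lines)
  docker_count=$("$DOCKER" ps -q | count_lines)
  echo "containerd (moby): ${ctr_count}, docker ps: ${docker_count}"
  # Succeed only when both tools report the same number of containers
  [ "$ctr_count" -eq "$docker_count" ]
}
```

Running `compare_moby_with_docker` on a node still managed by Docker should print both counts and exit with status 0 when they match, as in the author's observation.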

(3) Drain the node and stop the Docker and kubelet services on it

Next, node spk8mgr03 is used as an example to illustrate the migration from Docker to containerd.

```shell
kubectl drain spk8mgr03 --ignore-daemonsets --delete-local-data --force
systemctl stop kubelet
systemctl stop docker
```
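The three steps above can be wrapped in a small Bash function so they can be previewed before anything is drained or stopped. This is a sketch, not part of any tool: `run`, `prepare_node` and the `DRY_RUN` switch are hypothetical. The systemctl commands act on the local host, so the function has to run on the node being migrated, as the author does on spk8mgr03.

```shell
#!/usr/bin/env bash
# Hypothetical helper: drain a node, then stop kubelet and docker on it.
# With DRY_RUN=1 the commands are printed instead of executed.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

prepare_node() {
  local node="$1"
  # Evict pods (DaemonSet pods are ignored, emptyDir data is deleted)
  run kubectl drain "$node" --ignore-daemonsets --delete-local-data --force
  # Stop kubelet before the Docker daemon, in the same order as above
  run systemctl stop kubelet
  run systemctl stop docker
}
```

For example, `DRY_RUN=1 prepare_node spk8mgr03` prints the three commands; dropping `DRY_RUN=1` executes them.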

Uninstall Docker. This step is optional; it is done here to rule out any interference from Docker during the test.

```shell
zypper rm -y docker-ee docker-ee-cli containerd.io
```

(4) Install and configure containerd

Download the containerd release archive and extract it to the root directory:

```shell
wget https://github.com/containerd/containerd/releases/download/v1.4.4/cri-containerd-cni-1.4.4-linux-amd64.tar.gz
tar -C / -xzvf cri-containerd-cni-1.4.4-linux-amd64.tar.gz
```

The archive contains the following files:

```text
/
/etc/
/etc/systemd/
/etc/systemd/system/
/etc/systemd/system/containerd.service
/etc/crictl.yaml
/etc/cni/
/etc/cni/net.d/
/etc/cni/net.d/10-containerd-net.conflist
/usr/
/usr/local/
/usr/local/bin/
/usr/local/bin/containerd
/usr/local/bin/containerd-shim
/usr/local/bin/crictl
/usr/local/bin/containerd-shim-runc-v2
/usr/local/bin/critest
/usr/local/bin/containerd-shim-runc-v1
/usr/local/bin/ctr
/usr/local/sbin/
/usr/local/sbin/runc
/opt/
/opt/containerd/
/opt/containerd/cluster/
/opt/containerd/cluster/gce/
/opt/containerd/cluster/gce/env
/opt/containerd/cluster/gce/cni.template
/opt/containerd/cluster/gce/configure.sh
/opt/containerd/cluster/gce/cloud-init/
/opt/containerd/cluster/gce/cloud-init/node.yaml
/opt/containerd/cluster/gce/cloud-init/master.yaml
/opt/containerd/cluster/version
/opt/cni/
/opt/cni/bin/
/opt/cni/bin/bandwidth
/opt/cni/bin/host-device
/opt/cni/bin/flannel
/opt/cni/bin/static
/opt/cni/bin/loopback
/opt/cni/bin/dhcp
/opt/cni/bin/ptp
/opt/cni/bin/ipvlan
/opt/cni/bin/vlan
/opt/cni/bin/host-local
/opt/cni/bin/firewall
/opt/cni/bin/tuning
/opt/cni/bin/sbr
/opt/cni/bin/bridge
/opt/cni/bin/portmap
/opt/cni/bin/macvlan
```
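Before starting the service, it can help to confirm that the key files from the list above are in place. A minimal Bash sketch: the function name `check_containerd_install` and the choice of which files to check are illustrative assumptions, and the optional root argument exists only so the check can be exercised against a scratch directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that key files from the
# cri-containerd-cni archive exist under a root directory (default "/").
check_containerd_install() {
  local root="${1:-/}" missing=0 f
  for f in usr/local/bin/containerd usr/local/bin/ctr usr/local/bin/crictl \
           usr/local/sbin/runc opt/cni/bin/bridge etc/crictl.yaml \
           etc/systemd/system/containerd.service; do
    # ${root%/} drops a trailing slash so "/" and "/tmp/x" both work
    if [ ! -e "${root%/}/$f" ]; then
      echo "missing: /$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

On the node itself, `check_containerd_install && echo "containerd files present"` checks the real root filesystem and lists any missing file on stderr.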

Start containerd, enable it at boot, and generate the default configuration:

```shell
systemctl start containerd
systemctl enable containerd
mkdir -p /etc/containerd
containerd config default > /etc/containerd/config.toml
```

The generated config.toml is shown below. Pay particular attention to the sandbox_image parameter: it sets the pause image used for pod sandboxes, so it must point to an image that the node can pull.

```toml
version = 2
root = "/var/lib/containerd"
state = "/run/containerd"
plugin_dir = ""
disabled_plugins = []
required_plugins = []
oom_score = 0

[grpc]
  address = "/run/containerd/containerd.sock"
  tcp_address = ""
  tcp_tls_cert = ""
  tcp_tls_key = ""
  uid = 0
  gid = 0
  max_recv_message_size = 16777216
  max_send_message_size = 16777216

[ttrpc]
  address = ""
  uid = 0
  gid = 0

[debug]
  address = ""
  uid = 0
  gid = 0
  level = ""

[metrics]
  address = ""
  grpc_histogram = false

[cgroup]
  path = ""

[timeouts]
  "io.containerd.timeout.shim.cleanup" = "5s"
  "io.containerd.timeout.shim.load" = "5s"
  "io.containerd.timeout.shim.shutdown" = "3s"
  "io.containerd.timeout.task.state" = "2s"

[plugins]
  [plugins."io.containerd.gc.v1.scheduler"]
    pause_threshold = 0.02
    deletion_threshold = 0
    mutation_threshold = 100
    schedule_delay = "0s"
    startup_delay = "100ms"
  [plugins."io.containerd.grpc.v1.cri"]
    disable_tcp_service = true
    stream_server_address = "127.0.0.1"
    stream_server_port = "0"
    stream_idle_timeout = "4h0m0s"
    enable_selinux = false
    selinux_category_range = 1024
    sandbox_image = "k8s.gcr.io/pause:3.1"
    stats_collect_period = 10
    systemd_cgroup = false
    enable_tls_streaming = false
    max_container_log_line_size = 16384
    disable_cgroup = false
    disable_apparmor = false
    restrict_oom_score_adj = false
    max_concurrent_downloads = 3
    disable_proc_mount = false
    unset_seccomp_profile = ""
    tolerate_missing_hugetlb_controller = true
    disable_hugetlb_controller = true
    ignore_image_defined_volumes = false
    [plugins."io.containerd.grpc.v1.cri".containerd]
      snapshotter = "overlayfs"
      default_runtime_name = "runc"
      no_pivot = false
      disable_snapshot_annotations = true
      discard_unpacked_layers = false
      [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime]
        runtime_type = ""
        runtime_engine = ""
        runtime_root = ""
        privileged_without_host_devices = false
        base_runtime_spec = ""
      [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime]
        runtime_type = ""
        runtime_engine = ""
        runtime_root = ""
        privileged_without_host_devices = false
        base_runtime_spec = ""
      [plugins."io.containerd.grpc.v1.cri".containerd.runtimes]
        [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
          runtime_type = "io.containerd.runc.v2"
          runtime_engine = ""
          runtime_root = ""
          privileged_without_host_devices = false
          base_runtime_spec = ""
          [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
    [plugins."io.containerd.grpc.v1.cri".cni]
      bin_dir = "/opt/cni/bin"
      conf_dir = "/etc/cni/net.d"
      max_conf_num = 1
      conf_template = ""
    [plugins."io.containerd.grpc.v1.cri".registry]
      [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
        [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
          endpoint = ["https://registry-1.docker.io"]
    [plugins."io.containerd.grpc.v1.cri".image_decryption]
      key_model = ""
    [plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]
      tls_cert_file = ""
      tls_key_file = ""
  [plugins."io.containerd.internal.v1.opt"]
    path = "/opt/containerd"
  [plugins."io.containerd.internal.v1.restart"]
    interval = "10s"
  [plugins."io.containerd.metadata.v1.bolt"]
    content_sharing_policy = "shared"
  [plugins."io.containerd.monitor.v1.cgroups"]
    no_prometheus = false
  [plugins."io.containerd.runtime.v1.linux"]
    shim = "containerd-shim"
    runtime = "runc"
    runtime_root = ""
    no_shim = false
    shim_debug = false
  [plugins."io.containerd.runtime.v2.task"]
    platforms = ["linux/amd64"]
  [plugins."io.containerd.service.v1.diff-service"]
    default = ["walking"]
  [plugins."io.containerd.snapshotter.v1.devmapper"]
    root_path = ""
    pool_name = ""
    base_image_size = ""
    async_remove = false
```
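If you prefer not to edit the file by hand, the sandbox_image value can be set with sed. This is a sketch assuming GNU sed (for in-place `-i`) and an image reference that contains no `#`, `&` or `\` characters; `set_sandbox_image` is a hypothetical helper name, and `k8s.gcr.io/pause:3.1` is used only as an example value.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set sandbox_image in a containerd config.toml.
# Usage: set_sandbox_image /etc/containerd/config.toml k8s.gcr.io/pause:3.1
# Requires GNU sed for in-place editing with -i.
set_sandbox_image() {
  local config="$1" image="$2"
  # Keep the existing indentation and "sandbox_image = " prefix,
  # replace everything after it with the quoted image reference.
  sed -i "s#^\([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*\).*#\1\"${image}\"#" "$config"
}
```

After running it, restart containerd as described next so that the new value takes effect.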

After modifying the configuration, restart the containerd service:

```shell
systemctl restart containerd
```

Test containerd:

```shell
ctr images pull docker.io/library/nginx:alpine
```

If the pull finishes with its steps marked done, containerd is working properly.

(5) Configure crictl

By default, crictl talks to Docker (through dockershim). To make crictl talk to containerd directly, set the following in the crictl configuration file /etc/crictl.yaml:

```yaml
runtime-endpoint: unix:///run/containerd/containerd.sock
```

Note: the containerd archive extracted above already includes this setting in /etc/crictl.yaml.
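On nodes where /etc/crictl.yaml does not already contain this setting, it can be added idempotently. A sketch under the assumption that the file is a flat YAML map in which `runtime-endpoint` appears at most once, at the start of a line; `set_crictl_endpoint` is a hypothetical name, and GNU sed is assumed for `-i`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point crictl at a runtime endpoint by setting the
# runtime-endpoint key in crictl.yaml, replacing an existing value or
# appending the key if it is missing.
set_crictl_endpoint() {
  local file="${1:-/etc/crictl.yaml}"
  local endpoint="${2:-unix:///run/containerd/containerd.sock}"
  touch "$file"
  if grep -q '^runtime-endpoint:' "$file"; then
    # Replace the existing value in place (GNU sed)
    sed -i "s#^runtime-endpoint:.*#runtime-endpoint: ${endpoint}#" "$file"
  else
    echo "runtime-endpoint: ${endpoint}" >> "$file"
  fi
}
```

Called without arguments, it edits /etc/crictl.yaml and uses the containerd socket path from this article.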

Test whether the CRI plugin is available:

```shell
crictl pull docker.io/library/nginx:alpine
crictl images
```

(6) Configure kubelet

By default, kubelet uses Docker as the container runtime. To use containerd instead, edit the kubelet configuration file /etc/systemd/system/kubelet.service.d/10-kubeadm.conf and add the following:

```ini
[Service]
Environment="KUBELET_EXTRA_ARGS=--container-runtime=remote --runtime-request-timeout=15m --container-runtime-endpoint=unix:///run/containerd/containerd.sock"
```
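The same setting can be written by a script. The sketch below assumes, as in this step, that kubelet picks up `KUBELET_EXTRA_ARGS` from this drop-in; `add_kubelet_containerd_args` is a hypothetical helper that appends the `[Service]` section only if the file does not already contain `--container-runtime=remote`, so running it twice does not duplicate the line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the containerd-related kubelet flags used in
# this article to a systemd drop-in file, unless they are already present.
add_kubelet_containerd_args() {
  local dropin="${1:-/etc/systemd/system/kubelet.service.d/10-kubeadm.conf}"
  local sock="${2:-/run/containerd/containerd.sock}"
  if grep -q -- '--container-runtime=remote' "$dropin" 2>/dev/null; then
    echo "already configured: $dropin"
    return 0
  fi
  mkdir -p "$(dirname "$dropin")"
  {
    echo "[Service]"
    echo "Environment=\"KUBELET_EXTRA_ARGS=--container-runtime=remote --runtime-request-timeout=15m --container-runtime-endpoint=unix://${sock}\""
  } >> "$dropin"
}
```

systemd accepts a repeated `[Service]` header in the same file, so appending is safe; the daemon-reload and kubelet restart are still required afterwards.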

Restart the kubelet service:

```shell
systemctl daemon-reload
systemctl restart kubelet
```

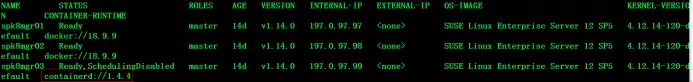

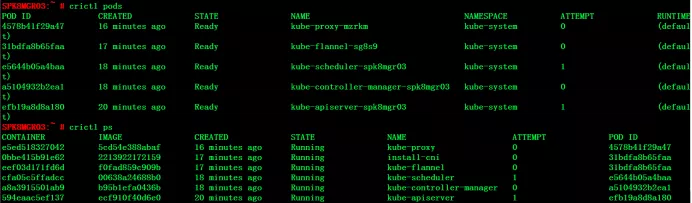

(7) Verify

```shell
kubectl get node -o wide
```

The container runtime of node spk8mgr03 is now containerd, but the node is still unschedulable because the earlier drain cordoned it. Run the following command to make it schedulable again:

```shell
kubectl uncordon spk8mgr03
```
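Instead of reading the CONTAINER-RUNTIME column by eye, the runtime can be read from the node's status with a kubectl jsonpath query. A minimal sketch; `KUBECTL`, `node_runtime` and `node_uses_containerd` are hypothetical names, and the variable exists only to allow stubbing in tests.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the container runtime a node reports,
# a value starting with docker:// before the migration and
# containerd:// after it.
KUBECTL=${KUBECTL:-kubectl}

node_runtime() {
  "$KUBECTL" get node "$1" \
    -o jsonpath='{.status.nodeInfo.containerRuntimeVersion}'
}

# Succeed if the node's runtime is containerd.
node_uses_containerd() {
  case "$(node_runtime "$1")" in
    containerd://*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `node_uses_containerd spk8mgr03 && echo migrated` can be run for each node as the migration proceeds.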

If you now list the containerd namespaces, you will find an additional k8s.io namespace. All Kubernetes-managed containers run in this namespace, and no containers run in the moby namespace any more.
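This check can be scripted with ctr. A sketch assuming ctr's `-q` (quiet) flag on `namespaces list` and `tasks list`; `CTR` and `check_namespaces` are hypothetical names. Running tasks, rather than container records, are checked in moby because the point is that no containers run there.

```shell
#!/usr/bin/env bash
# Hypothetical helper: after migration, the k8s.io namespace should exist
# and no tasks (running container processes) should remain in moby.
CTR=${CTR:-ctr}

check_namespaces() {
  # -q prints only namespace names, one per line
  if ! "$CTR" namespaces list -q | grep -qxF 'k8s.io'; then
    echo "namespace k8s.io not found" >&2
    return 1
  fi
  # -q prints only task IDs; any output means something still runs in moby
  if [ -n "$("$CTR" --namespace moby tasks list -q)" ]; then
    echo "tasks still running in namespace moby" >&2
    return 1
  fi
  echo "k8s.io present, no tasks in moby"
}
```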

At this point, the container runtime of this node has been migrated from Docker to containerd. Repeat the steps above on the other nodes in the cluster to complete the migration.

03 Comparison between containerd and Docker

When Docker is the container runtime, system administrators sometimes log in to k8s nodes and run docker commands to collect system or application information; these commands go through the Docker CLI. After migrating to containerd, you can use containerd's own CLI tool, ctr, to interact with containerd. For troubleshooting, however, crictl is recommended for its convenience and functionality. crictl is a client debugging tool similar to the Docker CLI that works with any CRI-compatible container runtime, including Docker. Below we compare common docker, ctr and crictl commands for images, containers and pods.

(1) Image-related commands
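As a hedged sketch, commonly used image-command counterparts can be captured in a small lookup function. `image_cmd_equivalents` is a hypothetical name, the table covers only a few subcommands, and "-" marks a tool without a direct equivalent (crictl cannot tag or push images). ctr works in the default namespace unless told otherwise, so the ctr column uses `-n k8s.io` to see the images the kubelet uses.

```shell
#!/usr/bin/env bash
# Hypothetical lookup: print the ctr and crictl counterparts of a docker
# image subcommand as "ctr ... | crictl ...". "-" means no direct equivalent.
image_cmd_equivalents() {
  case "$1" in
    images) echo "ctr -n k8s.io images list | crictl images" ;;
    pull)   echo "ctr -n k8s.io images pull | crictl pull" ;;
    rmi)    echo "ctr -n k8s.io images rm | crictl rmi" ;;
    tag)    echo "ctr -n k8s.io images tag | -" ;;
    push)   echo "ctr -n k8s.io images push | -" ;;
    *) echo "not in this table: docker $1" >&2; return 1 ;;
  esac
}
```

For example, `image_cmd_equivalents pull` prints the ctr and crictl forms of docker pull.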

(2) Container-related commands

Note that ctr containers create only creates a static container: the user process inside it has not been started yet, so you need to start it with ctr task start. Alternatively, ctr run creates and starts a container in one step. To run a command inside a container, unlike with docker, you must pass the --exec-id parameter to ctr task exec; the ID can be any value as long as it is unique. In addition, ctr has no command for stopping a container: it can only pause it (ctr task pause) or kill it (ctr task kill).
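The lifecycle described above can be walked through in one function. This is a sketch in ctr's default namespace; `CTR`, `ctr_lifecycle_demo` and the container ID `nginx-demo` are hypothetical, and the `CTR` variable only exists so the sequence can be dry-run with a stub.

```shell
#!/usr/bin/env bash
# Hypothetical walkthrough of the ctr container lifecycle.
# CTR is overridable so the sequence can be dry-run with a stub.
CTR=${CTR:-ctr}

ctr_lifecycle_demo() {
  local image="docker.io/library/nginx:alpine" id="${1:-nginx-demo}"
  "$CTR" images pull "$image"
  # 1. Create a static container: metadata only, no process yet
  "$CTR" containers create "$image" "$id"
  # 2. Start the container's process as a task, detached
  "$CTR" task start -d "$id"
  # 3. Exec into it; --exec-id is mandatory and only has to be unique
  "$CTR" task exec --exec-id "${id}-exec-1" "$id" ls /
  # 4. There is no "stop": pause/resume, or kill the task (SIGTERM by default)
  "$CTR" task pause "$id"
  "$CTR" task resume "$id"
  "$CTR" task kill "$id"
  # 5. Clean up: -f force-deletes the task, then remove the container
  "$CTR" task delete -f "$id"
  "$CTR" containers delete "$id"
}
```

Steps 1 and 2 can be replaced by a single `ctr run -d docker.io/library/nginx:alpine nginx-demo`.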

(3) Pod-related commands

Note: crictl pods lists pod information, including each pod's namespace and status. crictl ps lists only the application containers, whereas docker ps lists both the application containers and the pause (sandbox) container that is created whenever a pod starts, which is usually of no interest. In this respect, crictl is simpler to use.
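Finding a pod and then its application containers takes two crictl calls. A sketch using crictl's `--name` and `-q` filters on pods and `--pod` on ps; `CRICTL` and `pod_containers` are hypothetical names. Since `--name` matches a pattern, the sketch simply takes the first pod ID returned.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show the application containers of a pod.
# Unlike `docker ps`, `crictl ps` does not list pause containers.
CRICTL=${CRICTL:-crictl}

pod_containers() {
  local name="$1" pod_id
  # --name filters pods by name; -q prints only pod (sandbox) IDs
  pod_id=$("$CRICTL" pods --name "$name" -q | head -n 1)
  if [ -z "$pod_id" ]; then
    echo "pod not found: $name" >&2
    return 1
  fi
  # --pod restricts the container list to that pod
  "$CRICTL" ps --pod "$pod_id"
}
```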

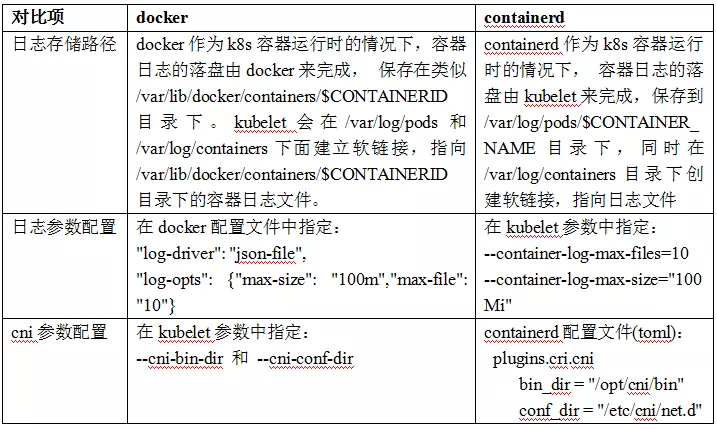

Besides the commands above, Docker and containerd also differ in container logs and related configuration parameters, as detailed in the following table.
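One visible difference is the log format. Docker's default json-file driver writes one JSON object per line, while with a CRI runtime the kubelet's container logs (typically under /var/log/pods/) use the CRI format `<timestamp> <stream> <tag> <message>`, where the tag is `F` for a full line and `P` for a partial one. The sketch below, with the hypothetical name `cri_log_messages`, strips that prefix and can filter by stream; it does not reassemble partial lines.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print only the message part of CRI-format log lines
# read from stdin, e.g.
#   2021-03-01T10:00:00.123456789+08:00 stdout F hello world
# An optional argument restricts output to one stream (stdout or stderr).
# Partial lines (tag P) are printed as-is, not joined with the next line.
cri_log_messages() {
  local stream="${1:-stdout|stderr}"
  sed -nE "s/^[^ ]+ (${stream}) [PF] //p"
}
```

For example, `cri_log_messages stderr < some-container.log` prints only the stderr messages of a log file.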

04 Summary

The news that k8s is abandoning Docker may come as a surprise to people working in this area, but there is no need to worry. For end users of k8s, it is only a change of the back-end container runtime, and usage is almost unchanged. Application developers and operators can still build images with Docker, push them to a registry as before, and deploy them to k8s. k8s cluster administrators only need to switch the nodes from Docker to another container runtime (such as containerd) and switch their node troubleshooting tool from the Docker CLI to crictl.

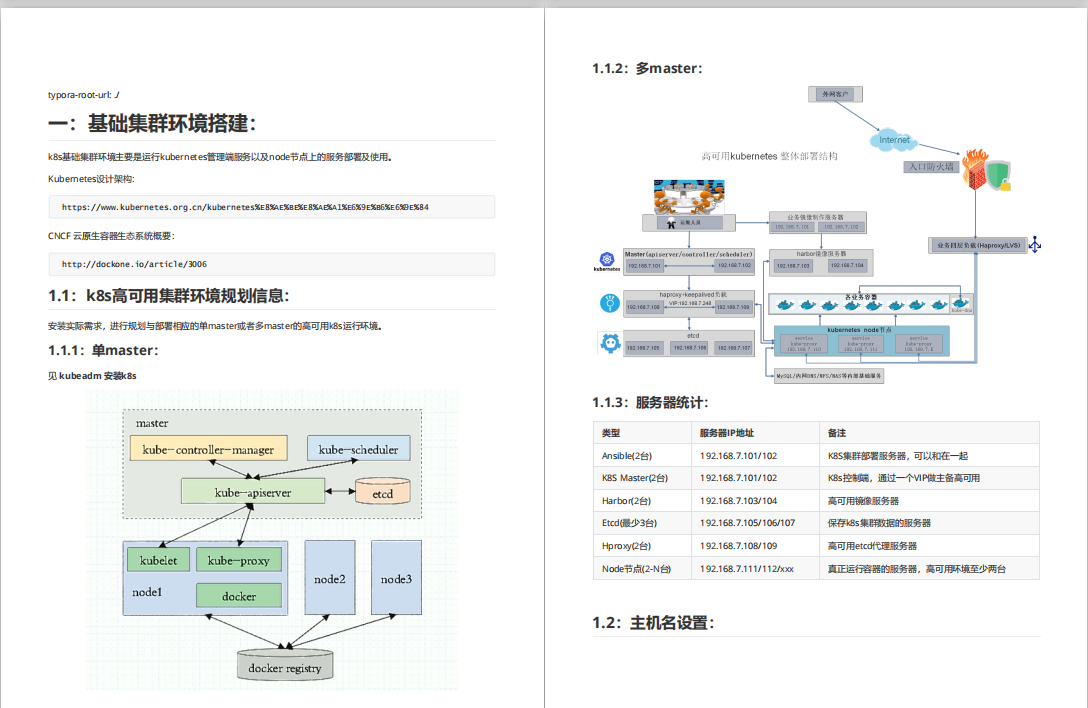
