
1. Introduction to the system environment

2. Deploy the Etcd cluster

3. Install Docker

4. Deploy the k8s Master Node

5. Deploy the k8s Worker Node

6. Deploy Dashboard and CoreDNS

1. Introduction to the system environment

1.1 Environment preparation

Before you begin, the machines for the Kubernetes cluster must meet the following requirements:

- Operating system: CentOS 7.8 x86_64
- Hardware: at least 2 GB of RAM, 2 CPUs, and 30 GB of disk
- Network connectivity between all machines in the cluster
- Access to the external network to pull images; if a server cannot go online, download the images in advance and import them on the node
- Swap disabled

1.2 Software environment:

- Operating system: CentOS 7.8 x86_64 (minimal)
- Docker: 19-ce
- Kubernetes: 1.18.3

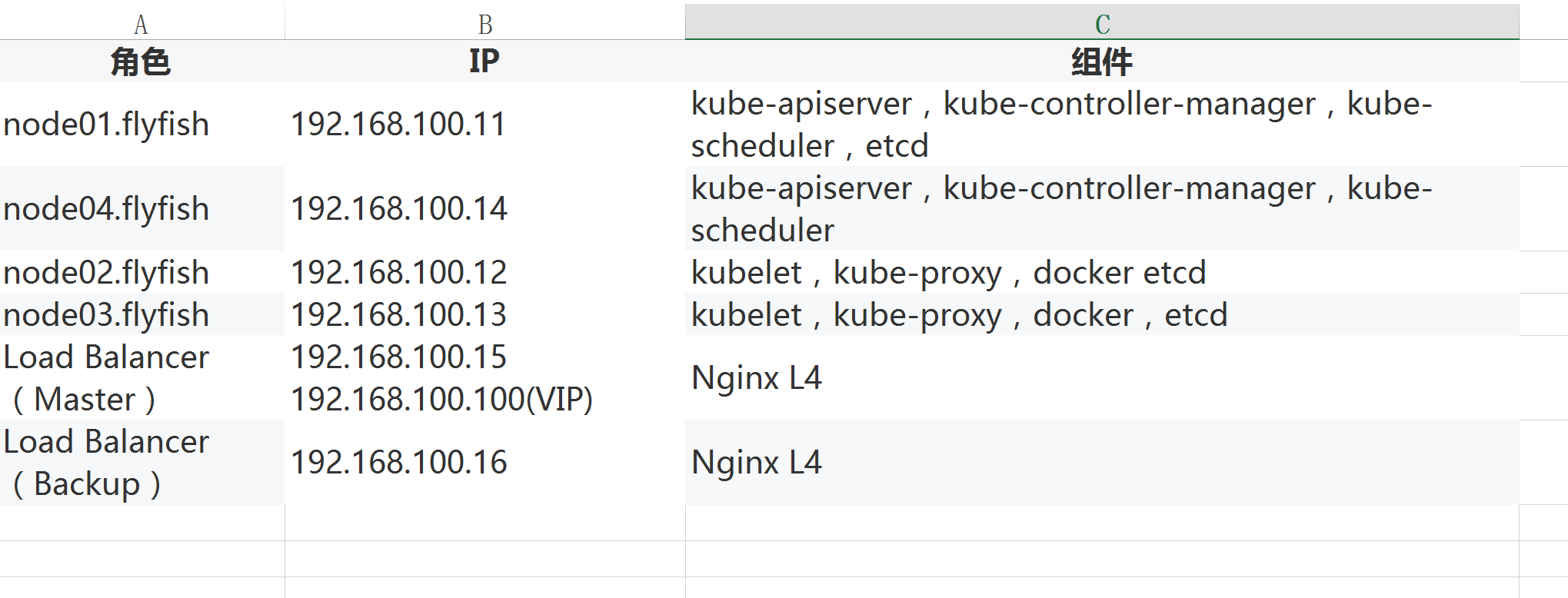

1.3 Environmental Planning

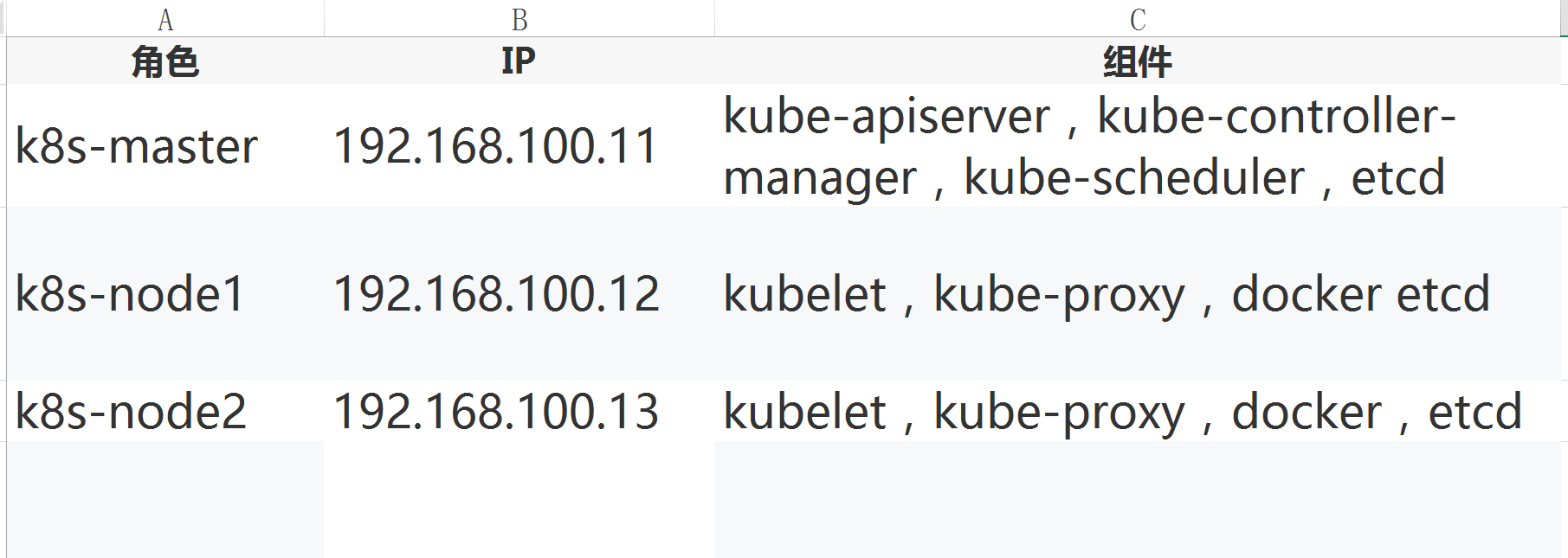

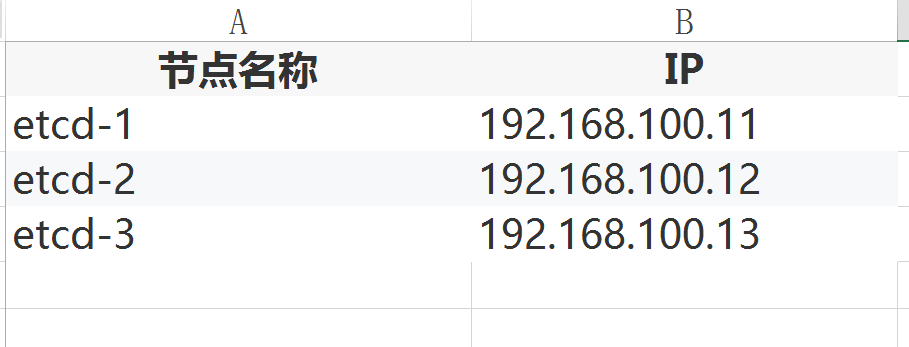

Server Overall Planning:

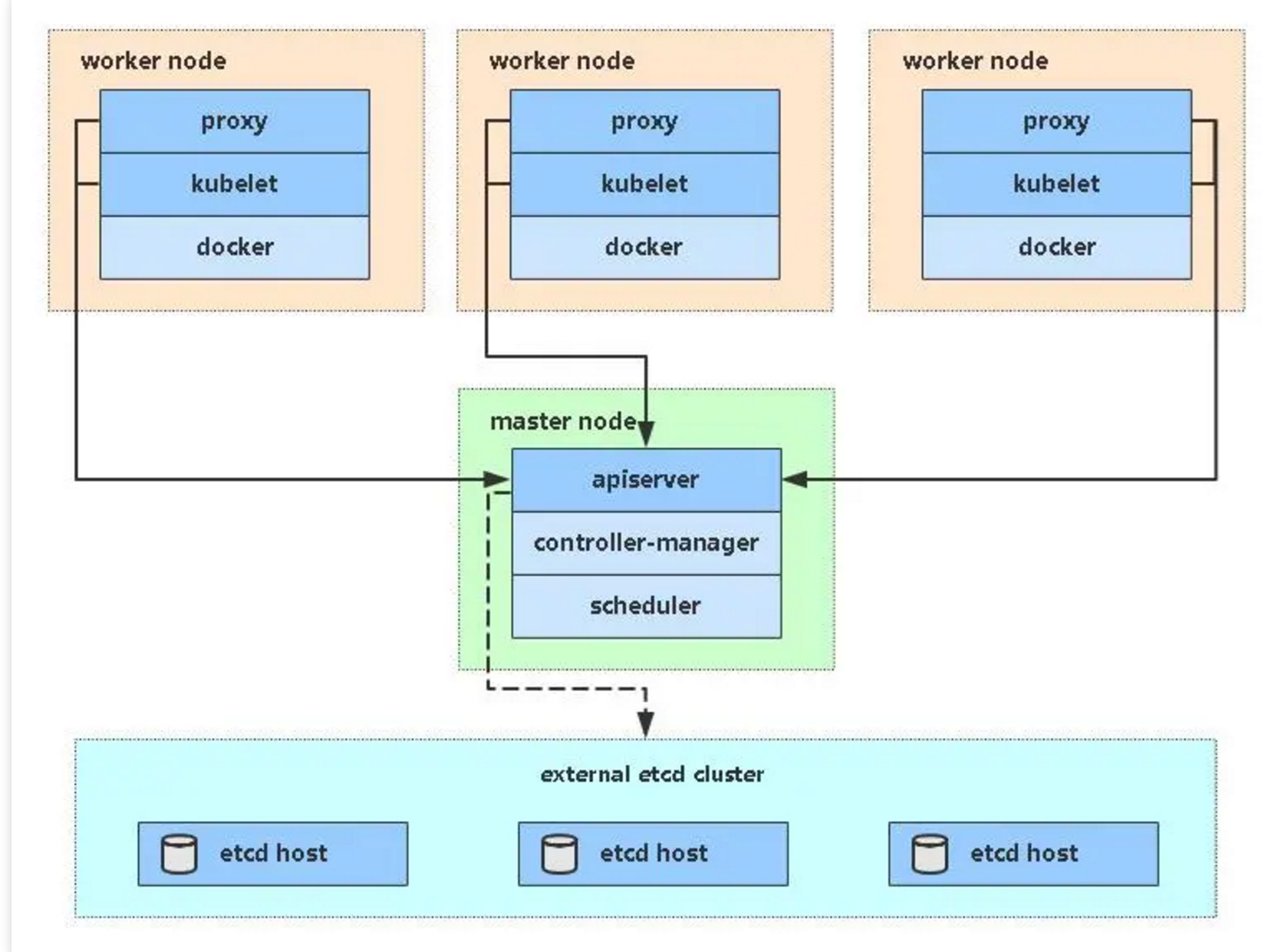

1.4 Single Master architecture diagram:

1.5 Single Master Server Planning:

1.6 OS Initialization Configuration

# Disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# Disable SELinux
sed -i 's/enforcing/disabled/' /etc/selinux/config  # permanent
setenforce 0  # temporary

# Disable swap
swapoff -a  # temporary
sed -ri 's/.*swap.*/#&/' /etc/fstab  # permanent

# Set the host name according to the plan
hostnamectl set-hostname <hostname>

# Add hosts entries on the master
cat >> /etc/hosts << EOF
192.168.100.11 node01.flyfish
192.168.100.12 node02.flyfish
192.168.100.13 node03.flyfish
EOF

# Pass bridged IPv4 traffic to the iptables chains
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system  # apply

# Time synchronization (the package is chrony; the service is chronyd)
yum install -y chrony
# Add this line to /etc/chrony.conf, then restart chronyd:
#   server ntp1.aliyun.com iburst
systemctl restart chronyd
systemctl enable chronyd
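To confirm that the swap steps took effect, a small check can count what is still active. This is only a sketch: the helper names `active_swap_count` and `fstab_swap_count` are illustrative and not part of the original procedure, and the paths default to the standard `/proc/swaps` and `/etc/fstab` locations. The demo runs against a temporary file, so the real system files are not touched.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not from the original guide) for checking swap state.

# Count active swap devices in a /proc/swaps-style file (first line is a header).
active_swap_count() {
  awk 'NR > 1 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}

# Count uncommented lines in an fstab-style file whose filesystem type is swap.
fstab_swap_count() {
  awk '$1 !~ /^#/ && $3 == "swap" { n++ } END { print n + 0 }' "${1:-/etc/fstab}"
}

# Demo on a temporary fstab-style file.
demo=$(mktemp)
printf '%s\n' '/dev/mapper/centos-root / xfs defaults 0 0' \
              '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo"
echo "swap entries before: $(fstab_swap_count "$demo")"
# Same substitution as the guide's sed command, written without -i for portability.
sed -E 's/.*swap.*/#&/' "$demo" > "$demo.new" && mv "$demo.new" "$demo"
echo "swap entries after:  $(fstab_swap_count "$demo")"
rm -f "$demo"
```

On a prepared node, both `active_swap_count` and `fstab_swap_count` called without arguments should print 0.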

2. Deploy the Etcd Cluster

2.1 Etcd cluster concept

Etcd is a distributed key-value store, and Kubernetes uses it to store its cluster data, so an Etcd database must be prepared first. To avoid a single point of failure, deploy Etcd as a cluster. A cluster of 3 machines tolerates the failure of 1 machine; a cluster of 5 machines tolerates the failure of 2.
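The fault-tolerance figures follow from majority (quorum) arithmetic: a cluster of N members needs floor(N/2) + 1 of them to agree, and the rest may fail. A minimal sketch of that calculation, with function names chosen here for illustration:

```shell
#!/usr/bin/env bash
# Majority (quorum) arithmetic for an etcd cluster of N members.

# Members that must agree: floor(N/2) + 1
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Members that may fail while a quorum still remains.
tolerated_failures() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 3 5; do
  echo "members=$n quorum=$(quorum "$n") tolerated=$(tolerated_failures "$n")"
done
```

An even member count adds no tolerance over the odd count below it (4 members still tolerate only 1 failure), which is why odd sizes are the usual choice.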

Note: To save machines, Etcd shares the K8s node machines here. It can also be deployed on machines independent of the k8s cluster, as long as the apiserver can connect to it.

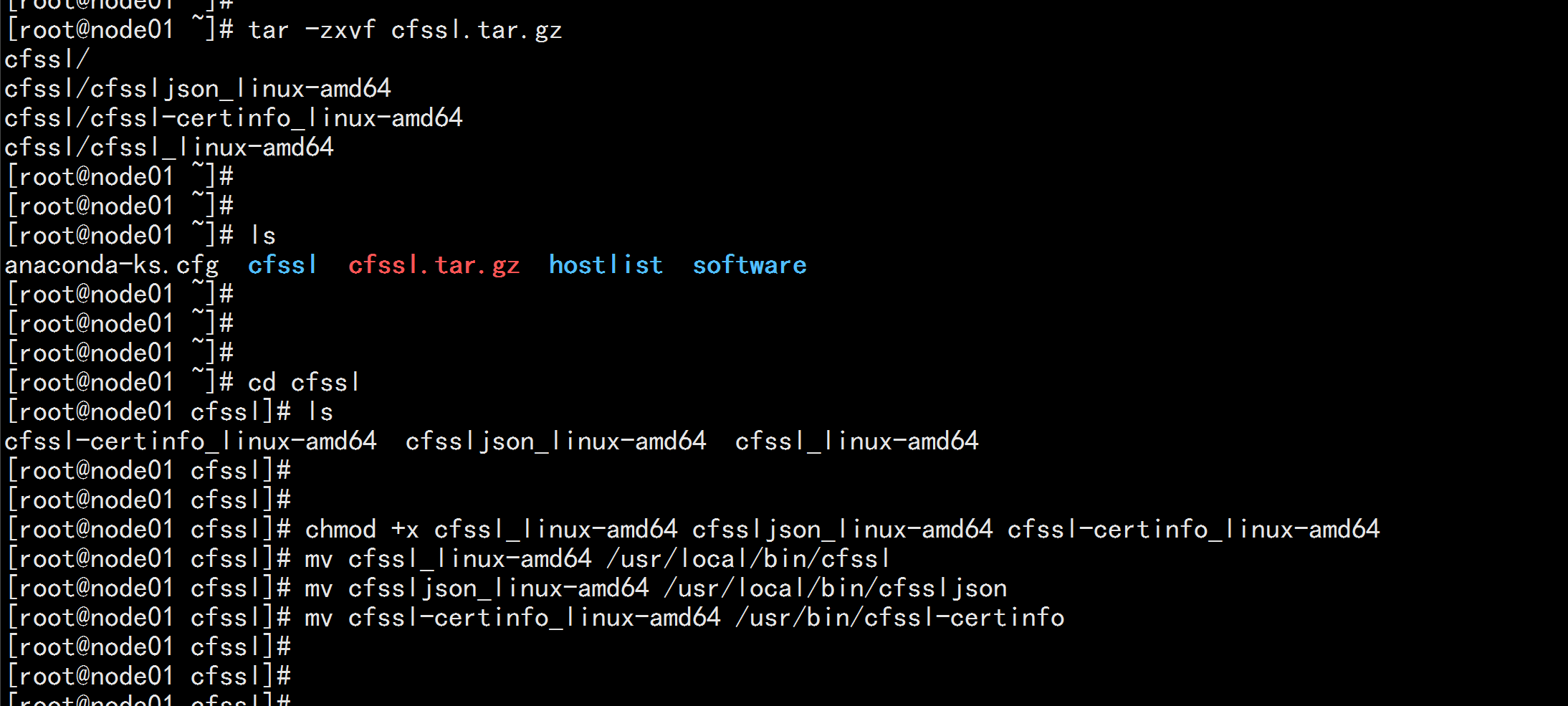

2.2 Prepare cfssl Certificate Generation Tool

cfssl is an open source certificate management tool that generates certificates from JSON files and is more convenient to use than openssl.

Run the following on any server; the Master node is used here.

---

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
mv cfssl_linux-amd64 /usr/local/bin/cfssl
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

---

2.3 Generating Etcd Certificates

1. Self-signed certificate authority (CA)

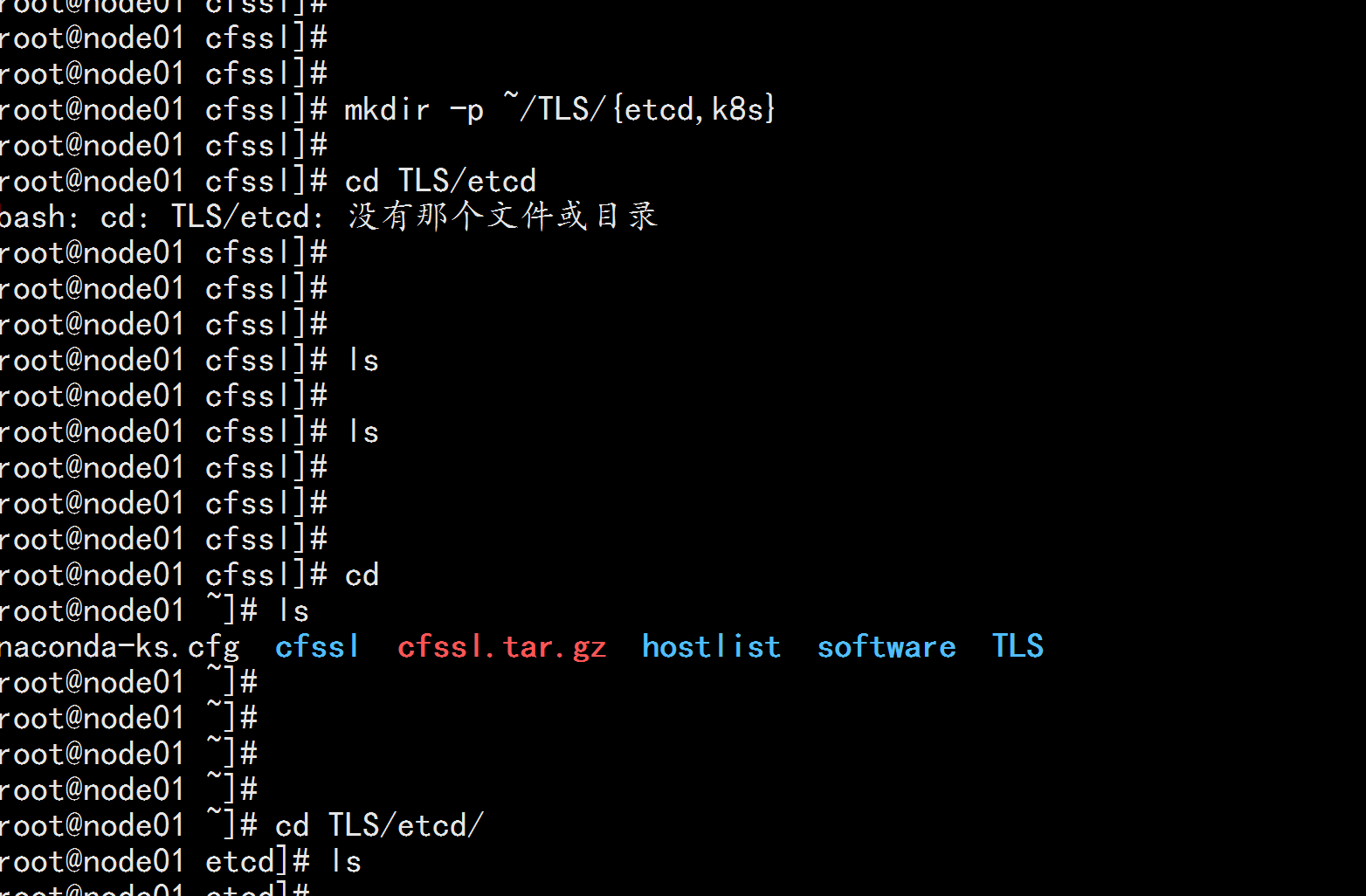

Create a working directory:

mkdir -p ~/TLS/{etcd,k8s}

cd ~/TLS/etcd

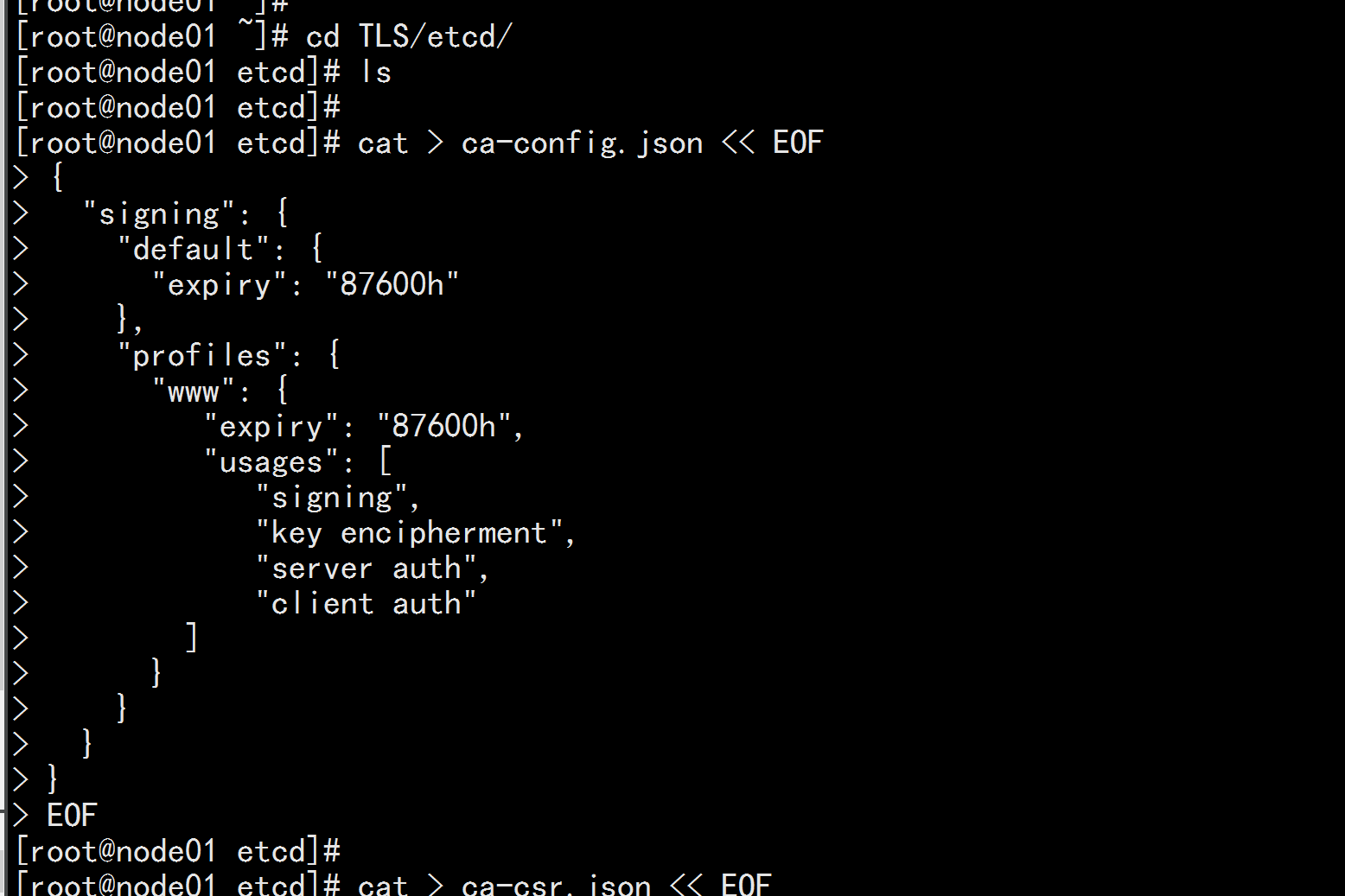

Self-signed CA:

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json << EOF

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

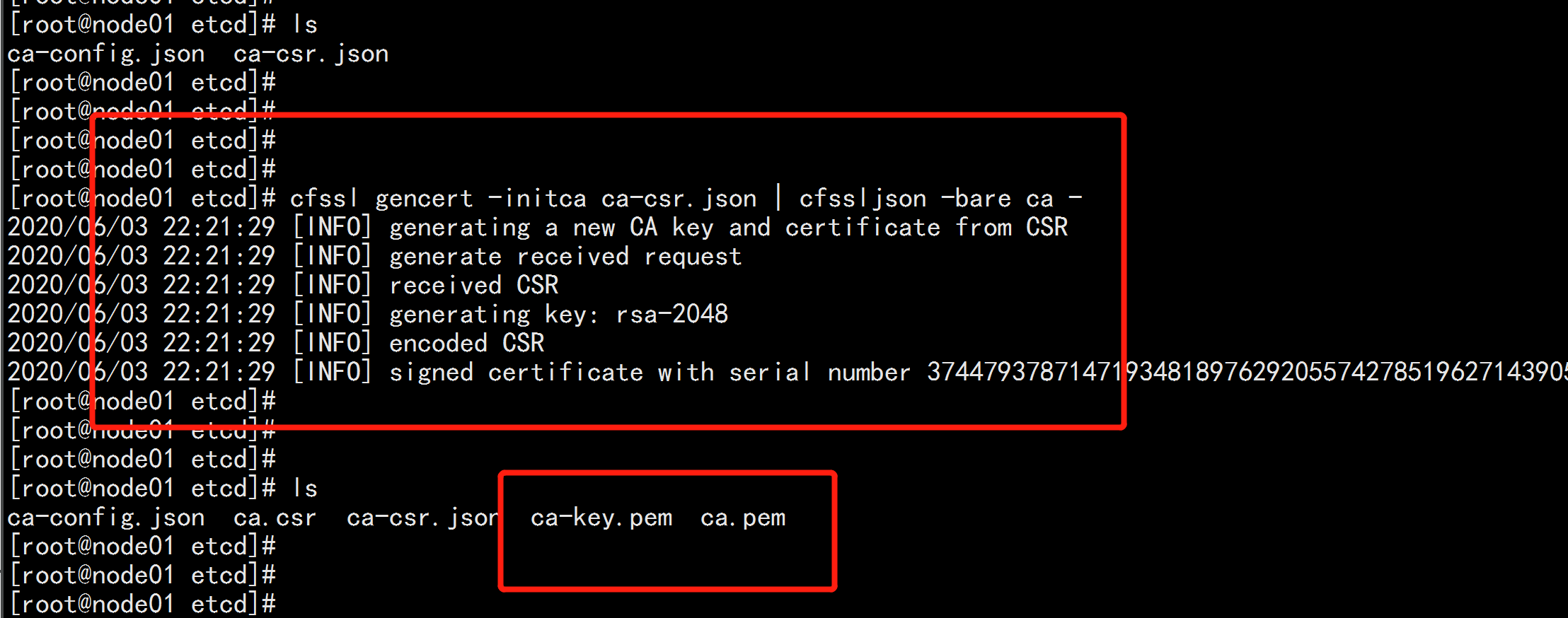

//Generate Certificate

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

ls *pem

ca-key.pem ca.pem

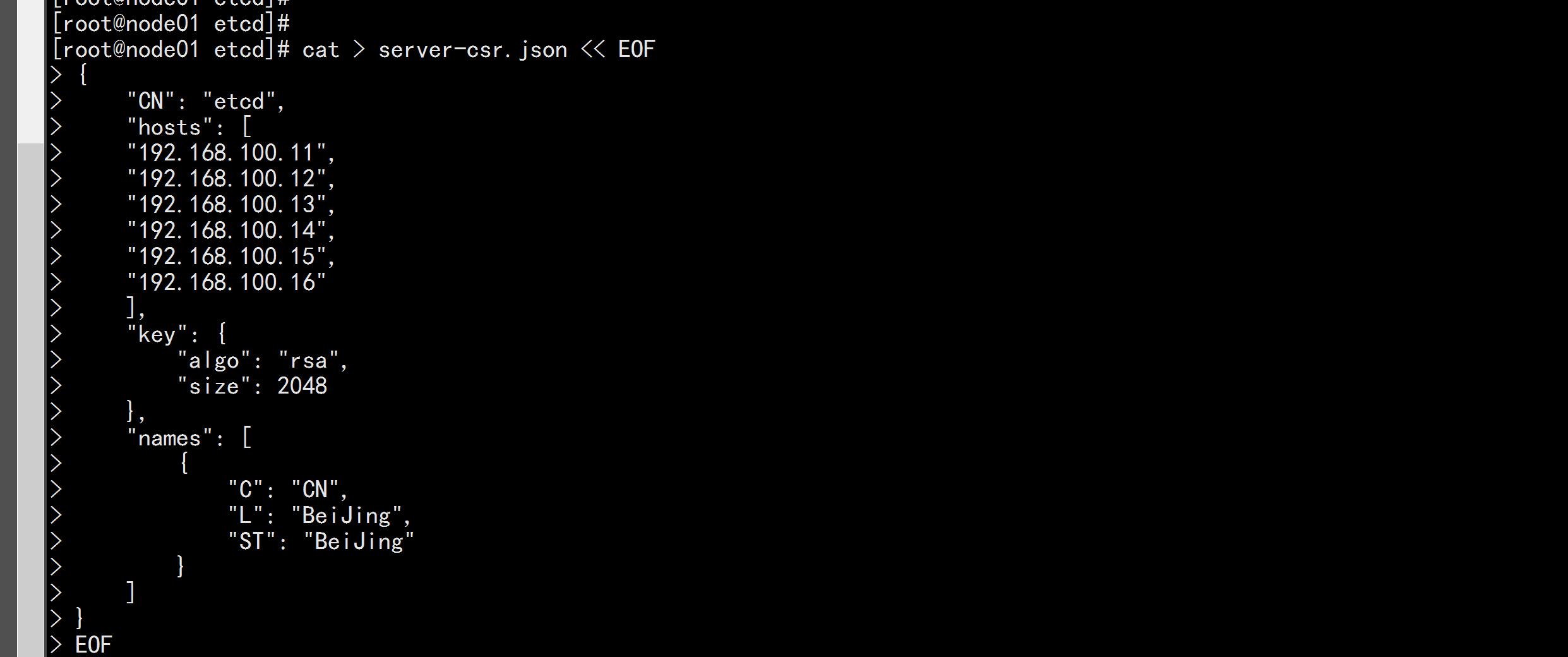

2. Use the self-signed CA to issue the Etcd HTTPS certificate

Create a certificate request file:

cat > server-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"192.168.100.11",

"192.168.100.12",

"192.168.100.13",

"192.168.100.14",

"192.168.100.15",

"192.168.100.16",

"192.168.100.17",

"192.168.100.100"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

//Generate certificate:

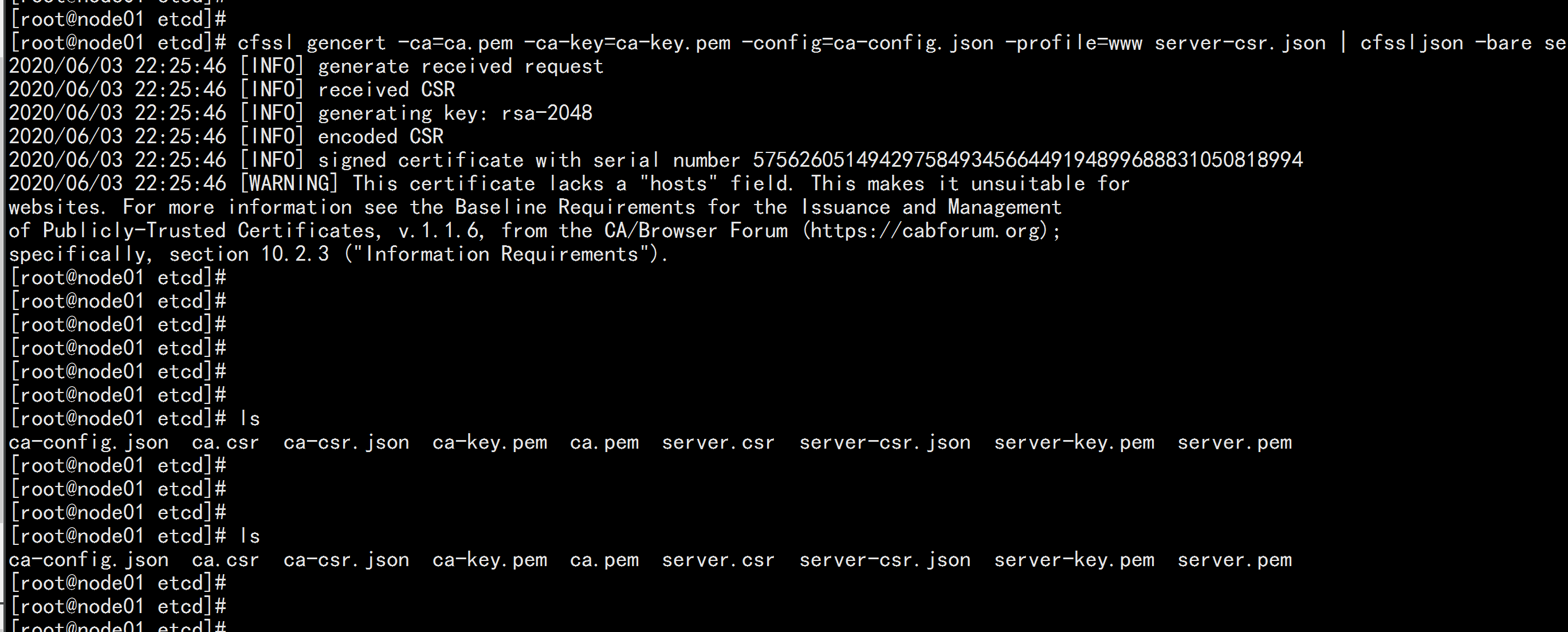

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

ls server*pem

server-key.pem server.pem

2.4 Download binaries from GitHub

Download address: https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

The following steps are performed on Node 1. To simplify the work, all files generated on Node 1 are later copied to Node 2 and Node 3.

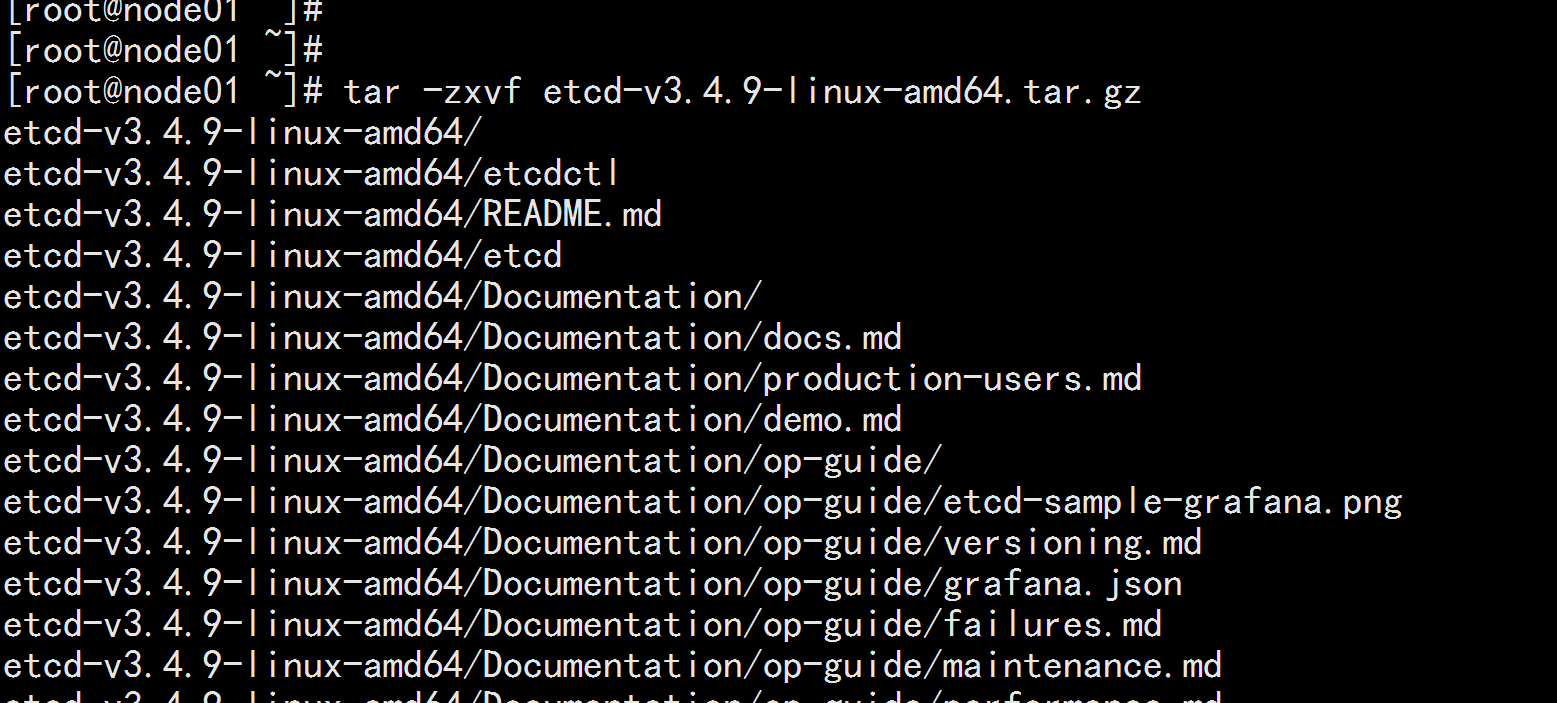

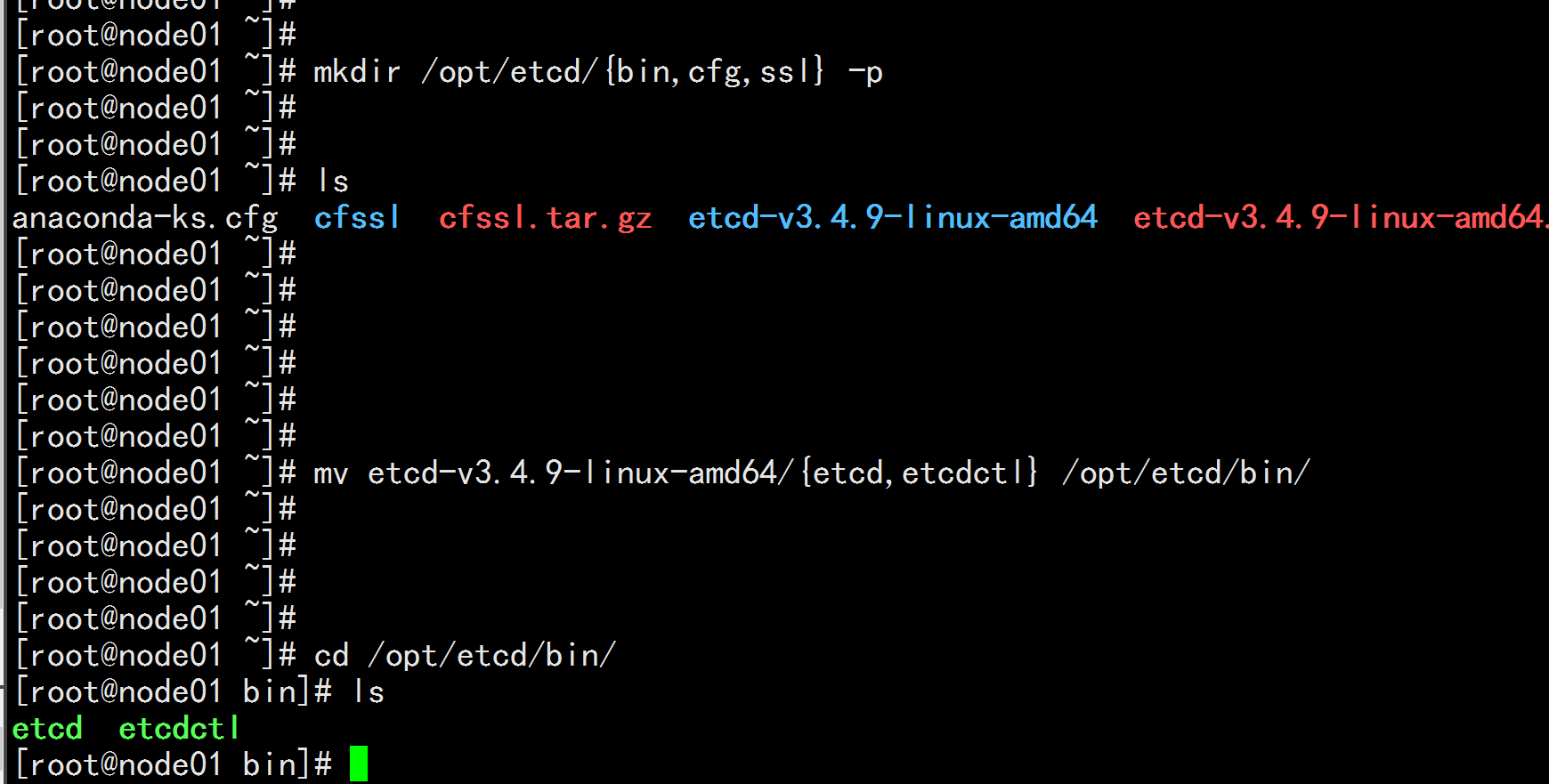

1. Create a working directory and unzip binary packages

mkdir /opt/etcd/{bin,cfg,ssl} -p

tar zxvf etcd-v3.4.9-linux-amd64.tar.gz

mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

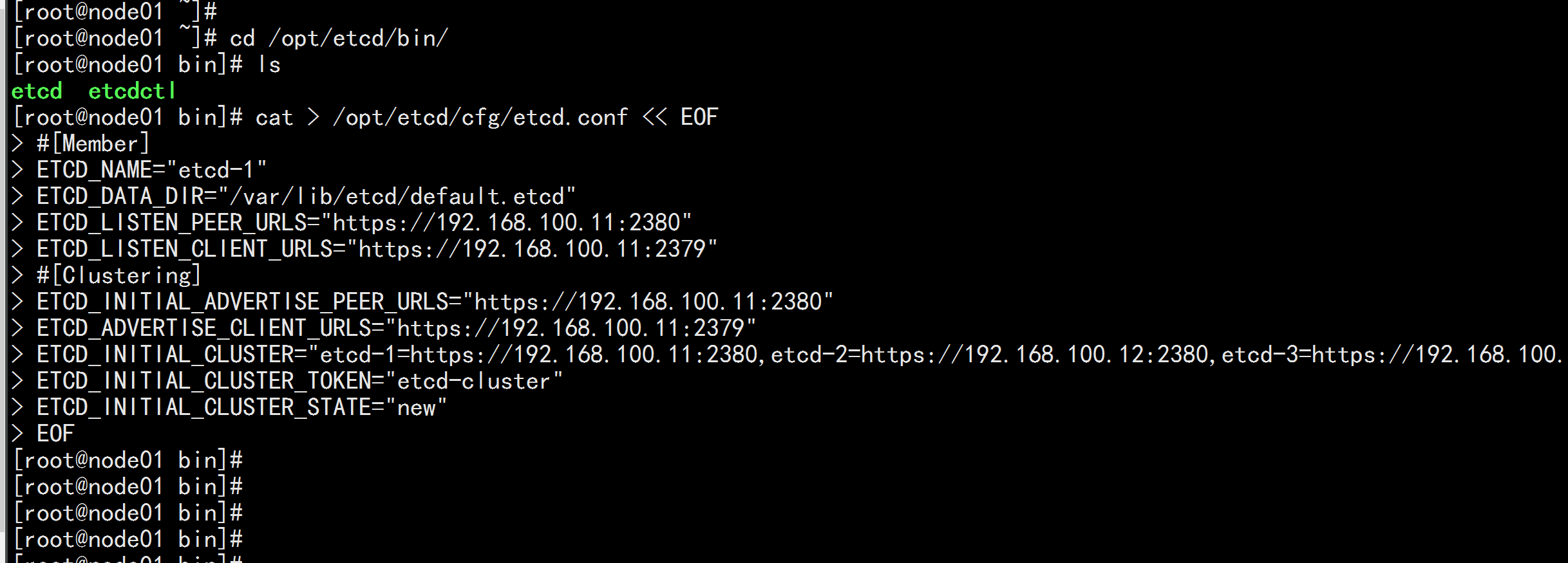

2.5 Create the etcd configuration file

cat > /opt/etcd/cfg/etcd.conf << EOF
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.100.11:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.100.11:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.100.11:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.100.11:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.100.11:2380,etcd-2=https://192.168.100.12:2380,etcd-3=https://192.168.100.13:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF

---

- ETCD_NAME: node name, unique within the cluster
- ETCD_DATA_DIR: data directory
- ETCD_LISTEN_PEER_URLS: listening address for cluster (peer) communication
- ETCD_LISTEN_CLIENT_URLS: listening address for client access
- ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster
- ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients
- ETCD_INITIAL_CLUSTER: addresses of the cluster nodes
- ETCD_INITIAL_CLUSTER_TOKEN: cluster token
- ETCD_INITIAL_CLUSTER_STATE: state when joining the cluster; new means a new cluster, existing means joining an existing cluster

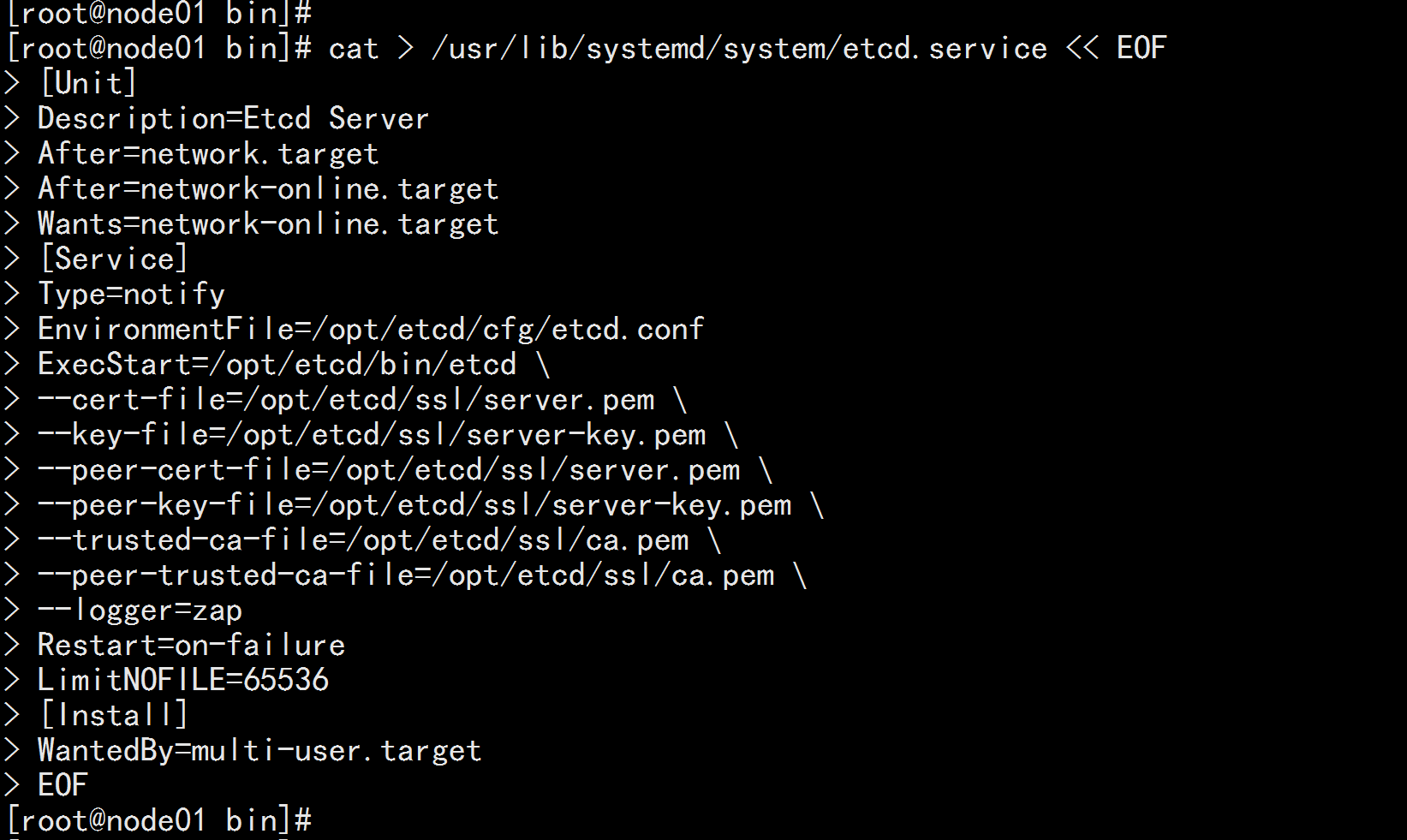

2.6 Manage etcd with systemd

cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/etcd/cfg/etcd.conf
ExecStart=/opt/etcd/bin/etcd \
--cert-file=/opt/etcd/ssl/server.pem \
--key-file=/opt/etcd/ssl/server-key.pem \
--peer-cert-file=/opt/etcd/ssl/server.pem \
--peer-key-file=/opt/etcd/ssl/server-key.pem \
--trusted-ca-file=/opt/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
--logger=zap
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

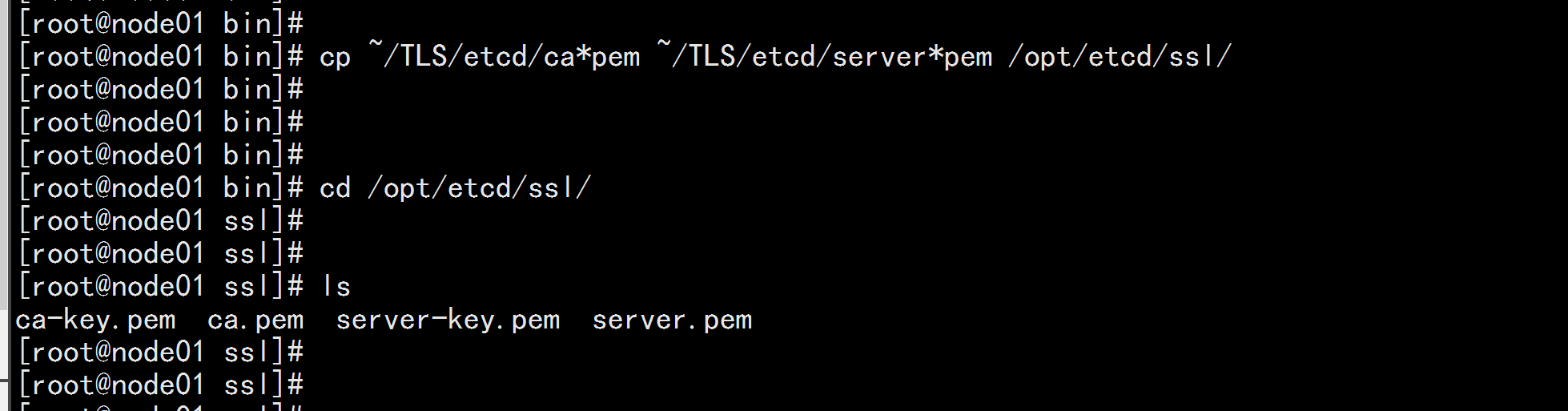

4. Copy the certificates just generated

Copy the certificates you just generated to the path referenced in the configuration file:

cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/

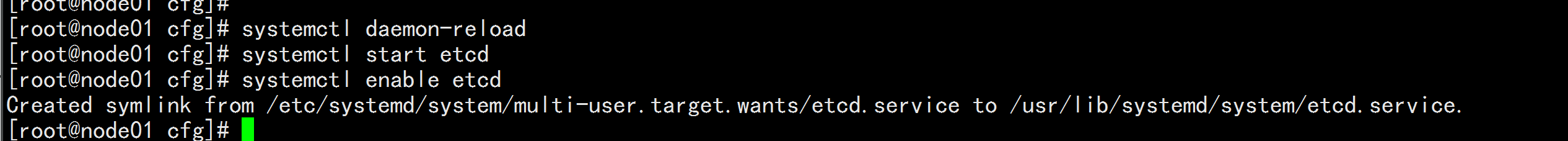

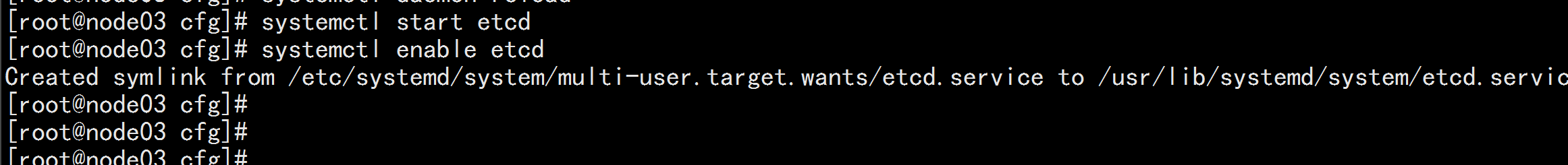

5. Start etcd and enable it at boot

systemctl daemon-reload
systemctl start etcd
systemctl enable etcd

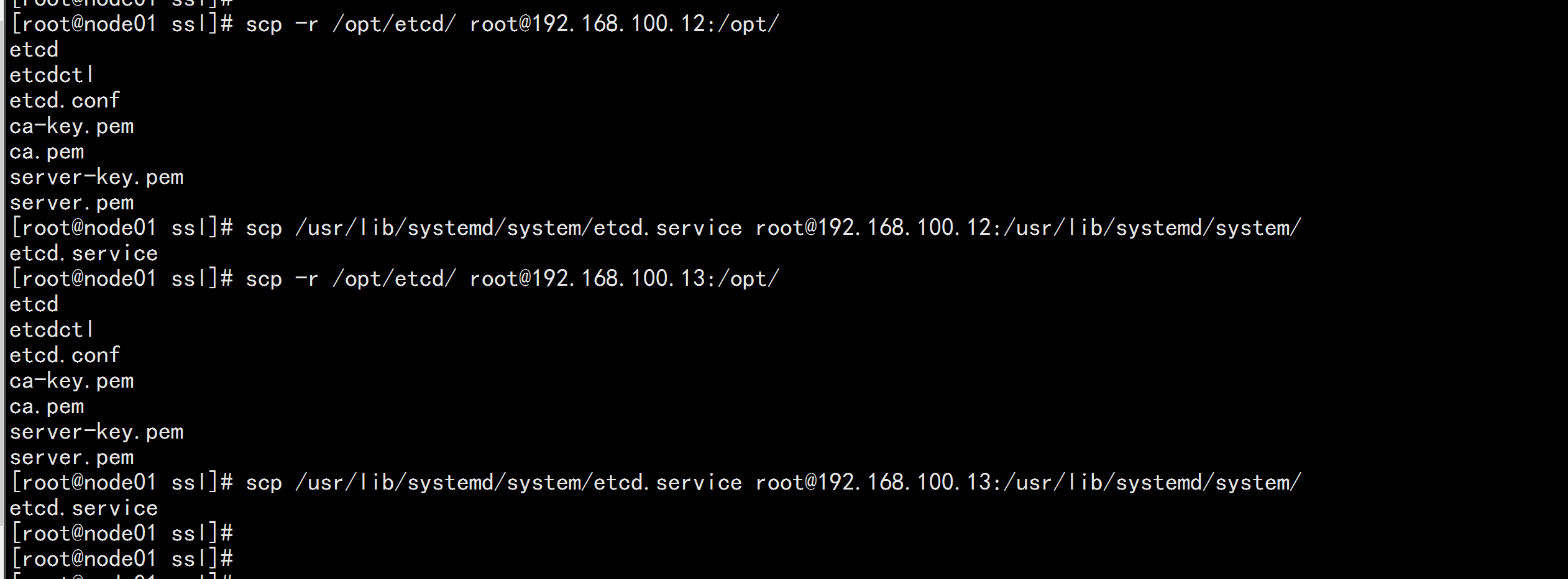

Copy all files generated on Node 1 to Node 2 and Node 3:

scp -r /opt/etcd/ root@192.168.100.12:/opt/
scp /usr/lib/systemd/system/etcd.service root@192.168.100.12:/usr/lib/systemd/system/
scp -r /opt/etcd/ root@192.168.100.13:/opt/
scp /usr/lib/systemd/system/etcd.service root@192.168.100.13:/usr/lib/systemd/system/

Then, on Node 2 and Node 3, change the node name and the current server IP in the etcd.conf configuration file:

vi /opt/etcd/cfg/etcd.conf

#[Member]
# Change to etcd-2 on Node 2 and to etcd-3 on Node 3
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
# Change the IP in the next two lines to the current server IP
ETCD_LISTEN_PEER_URLS="https://192.168.100.11:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.100.11:2379"
#[Clustering]
# Change the IP in the next two lines to the current server IP
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.100.11:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.100.11:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.100.11:2380,etcd-2=https://192.168.100.12:2380,etcd-3=https://192.168.100.13:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
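The per-node edit can also be scripted instead of done by hand in vi. The following is a sketch under assumptions: `retarget_etcd_conf` is a hypothetical helper name, and it only rewrites ETCD_NAME and the four listen/advertise URL lines, leaving ETCD_INITIAL_CLUSTER untouched.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the per-node fields of an etcd.conf read from stdin.
# Usage: retarget_etcd_conf <node-name> <node-ip> < etcd.conf
retarget_etcd_conf() {
  local name=$1 ip=$2
  sed -E \
    -e "s/^ETCD_NAME=.*/ETCD_NAME=\"${name}\"/" \
    -e "/^ETCD_(LISTEN_PEER|LISTEN_CLIENT|INITIAL_ADVERTISE_PEER|ADVERTISE_CLIENT)_URLS=/ s#https://[0-9.]+:#https://${ip}:#"
}

# Demo with a shortened sample of the file copied from Node 1.
retarget_etcd_conf etcd-2 192.168.100.12 << 'EOF'
ETCD_NAME="etcd-1"
ETCD_LISTEN_PEER_URLS="https://192.168.100.11:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.100.11:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.100.11:2380,etcd-2=https://192.168.100.12:2380,etcd-3=https://192.168.100.13:2380"
EOF
```

On Node 2 the output could be redirected to a new file and reviewed before it replaces /opt/etcd/cfg/etcd.conf.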

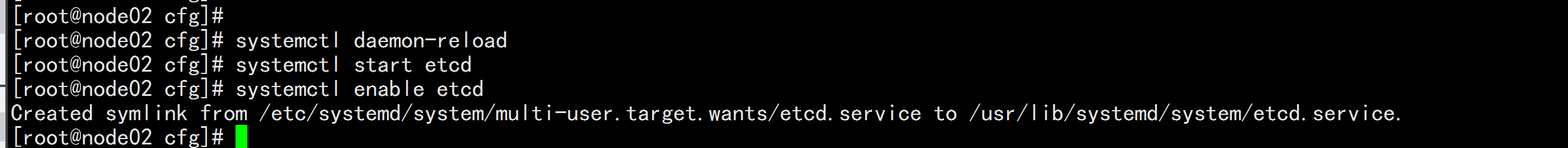

Finally, start etcd on Node 2 and Node 3 and enable it at boot, as above.

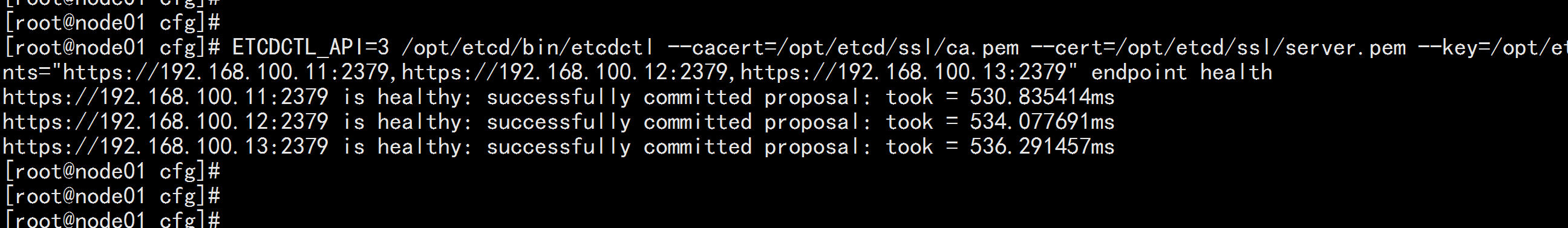

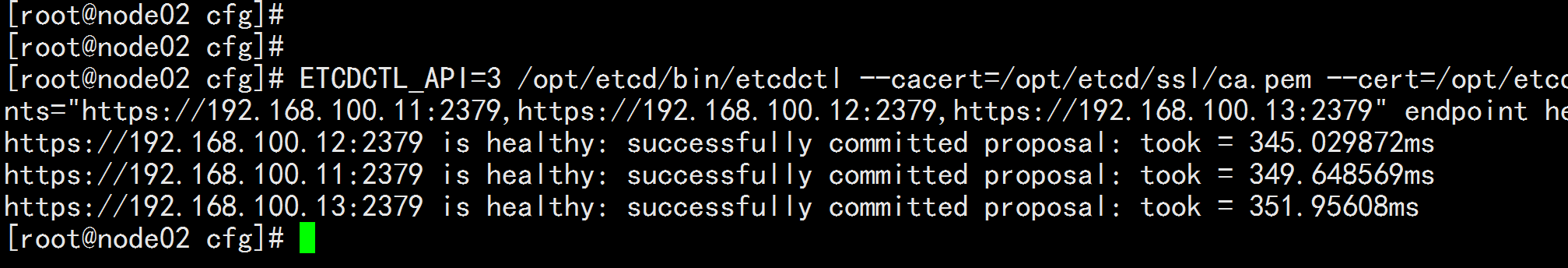

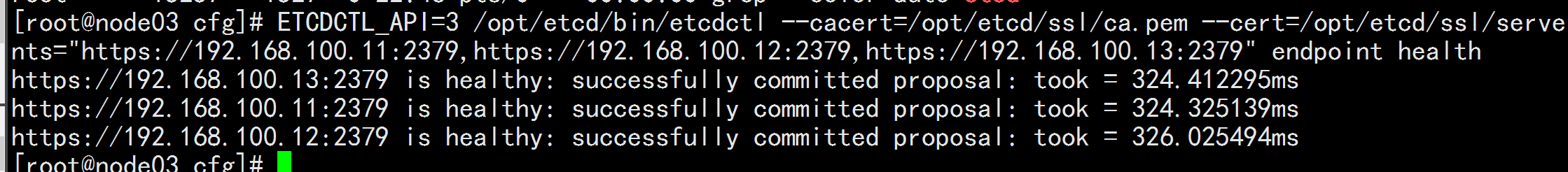

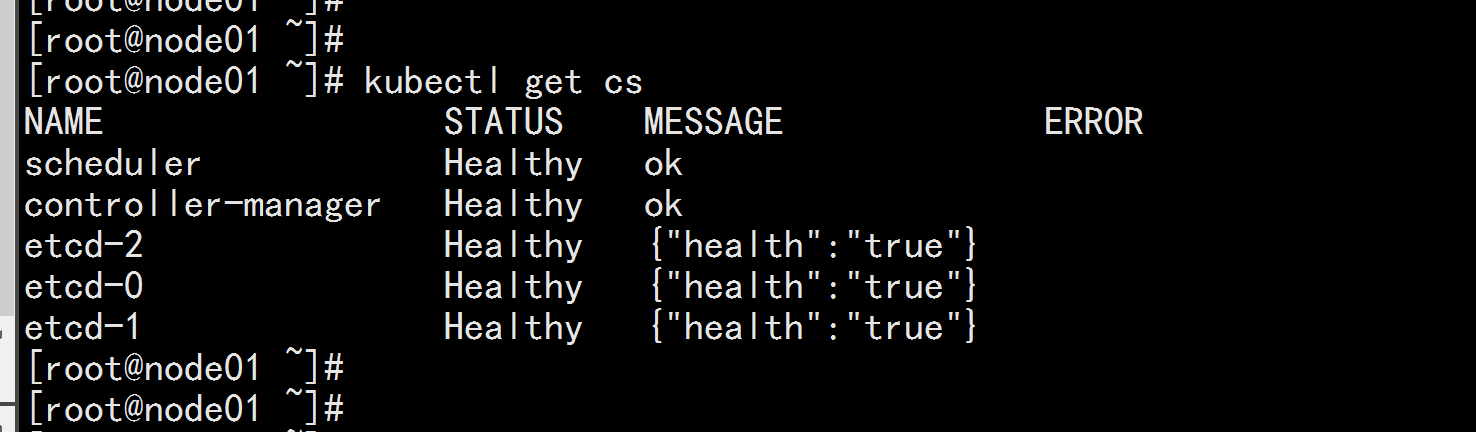

Check the cluster status:

ETCDCTL_API=3 /opt/etcd/bin/etcdctl \
--cacert=/opt/etcd/ssl/ca.pem \
--cert=/opt/etcd/ssl/server.pem \
--key=/opt/etcd/ssl/server-key.pem \
--endpoints="https://192.168.100.11:2379,https://192.168.100.12:2379,https://192.168.100.13:2379" \
endpoint health

If every endpoint is reported as healthy, the cluster has been deployed successfully. If there is a problem, check the logs first: /var/log/messages or journalctl -u etcd
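When the health check is run from a script, the result can be reduced to a count of healthy endpoints. This sketch assumes the etcdctl 3.4 output format, in which each endpoint line contains "is healthy" or "is unhealthy"; the helper name `healthy_endpoints` and the sample lines, including their timings, are illustrative and were not captured from a real cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count endpoints that `etcdctl endpoint health` reports as healthy.
# Reads the command output on stdin.
healthy_endpoints() {
  # grep -c exits non-zero when the count is 0, so mask that status.
  grep -c 'is healthy' || true
}

# Illustrative input only.
healthy_endpoints << 'EOF'
https://192.168.100.11:2379 is healthy: successfully committed proposal: took = 8.1ms
https://192.168.100.12:2379 is healthy: successfully committed proposal: took = 9.6ms
https://192.168.100.13:2379 is unhealthy: failed to commit proposal: context deadline exceeded
EOF
```

For the three-node cluster in this guide, a count of 3 means all members are healthy, and a count of 2 still leaves a working quorum.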

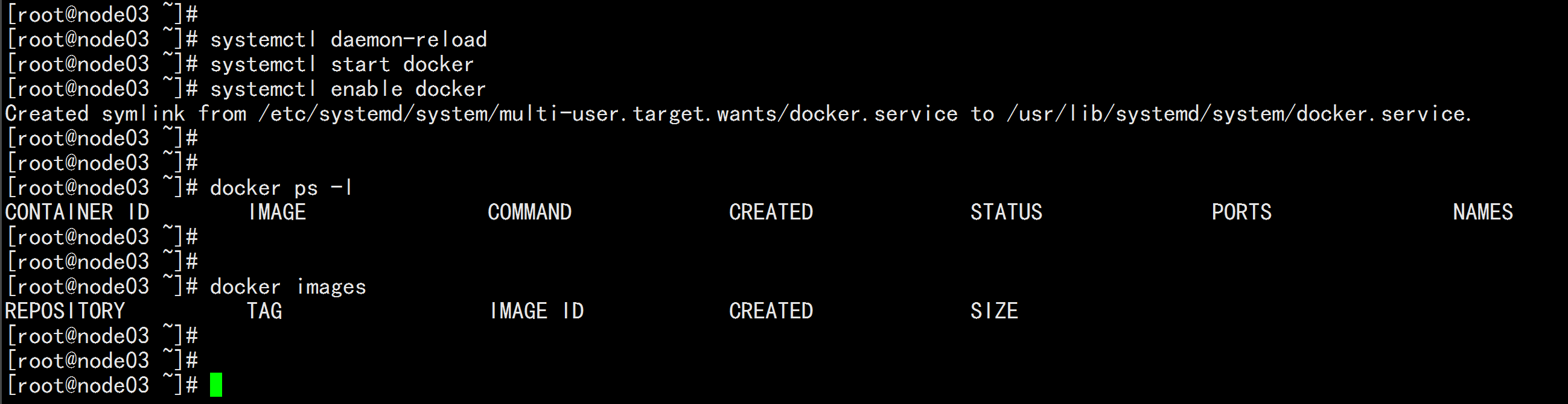

3. Install Docker

Download address: https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz

Perform the following on all nodes (node01.flyfish, node02.flyfish, and node03.flyfish). A binary installation is used here; installing with yum works as well.

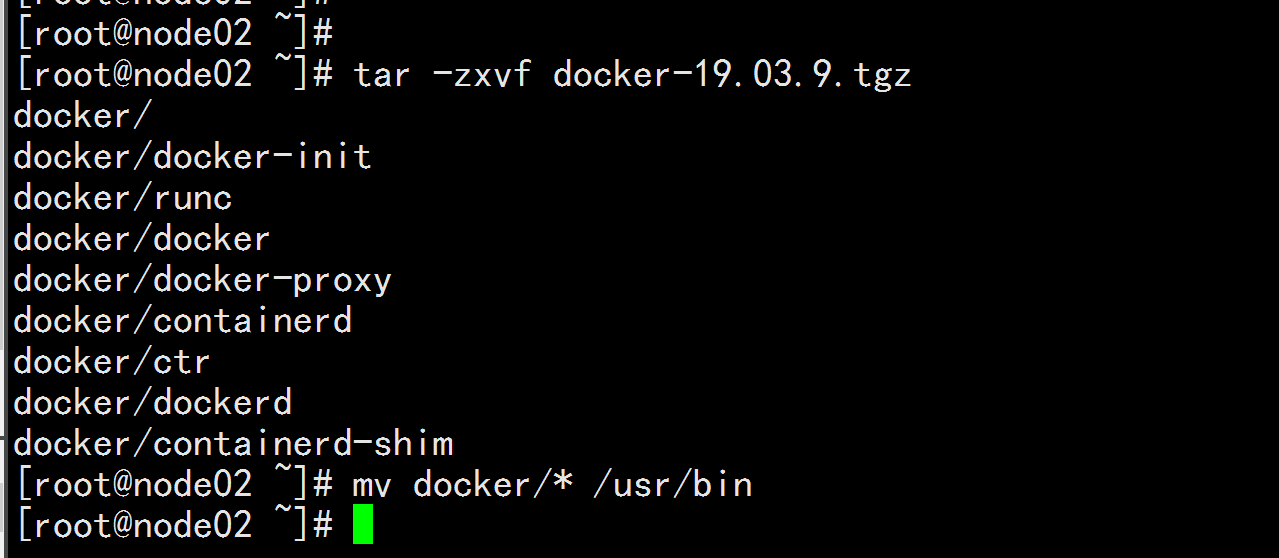

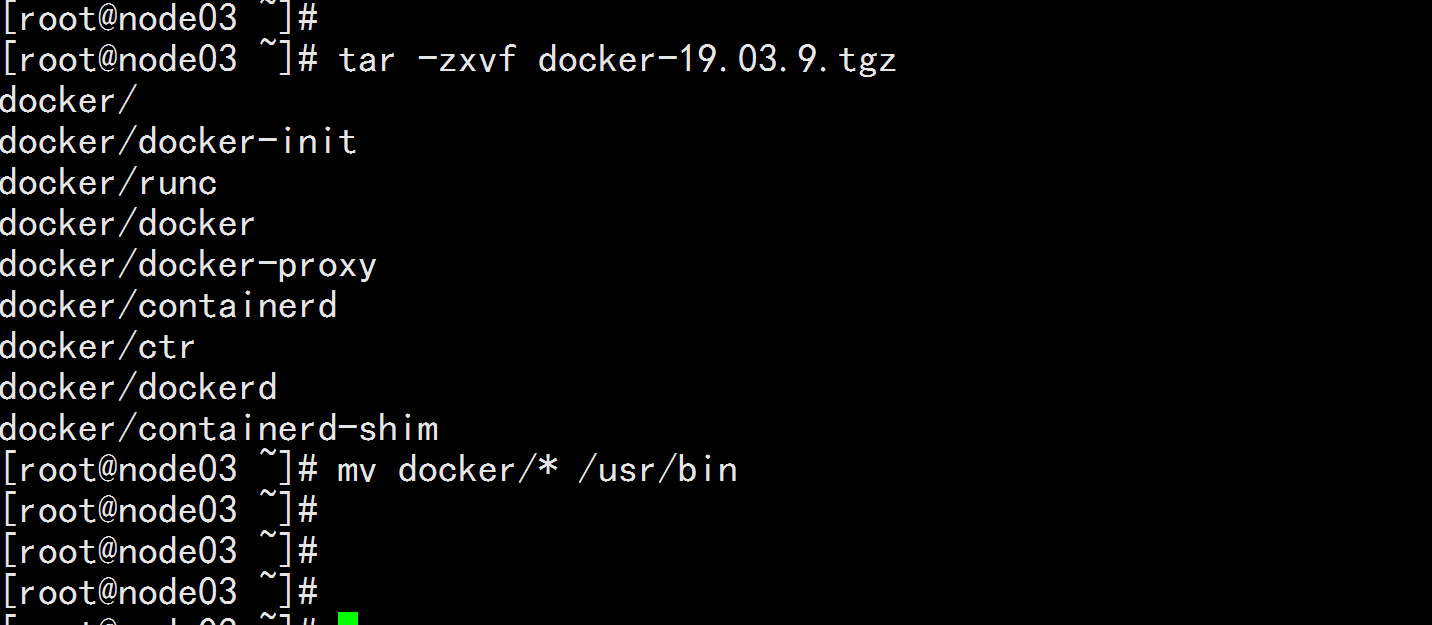

3.1 Unpack the binary package

tar zxvf docker-19.03.9.tgz
mv docker/* /usr/bin

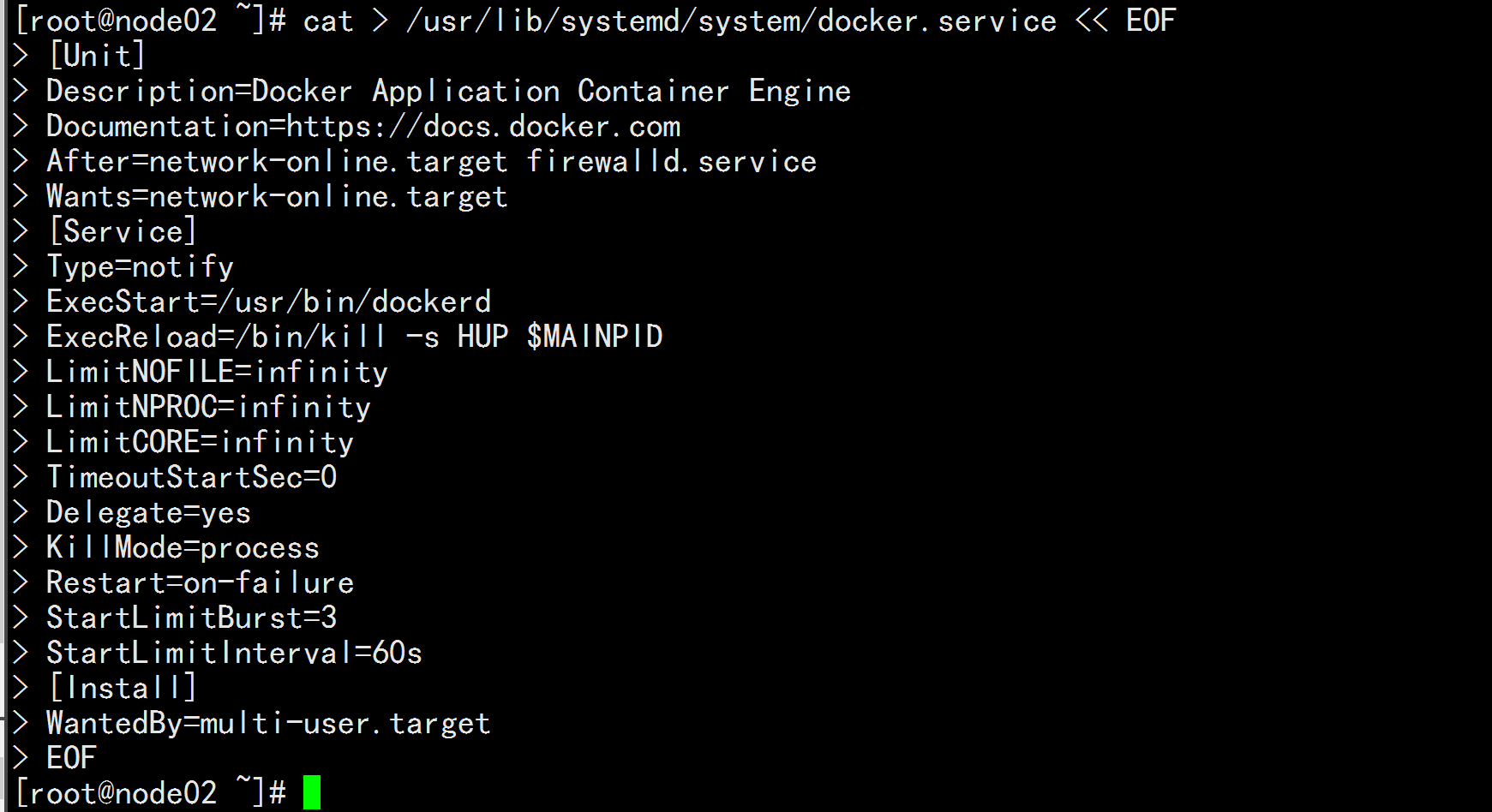

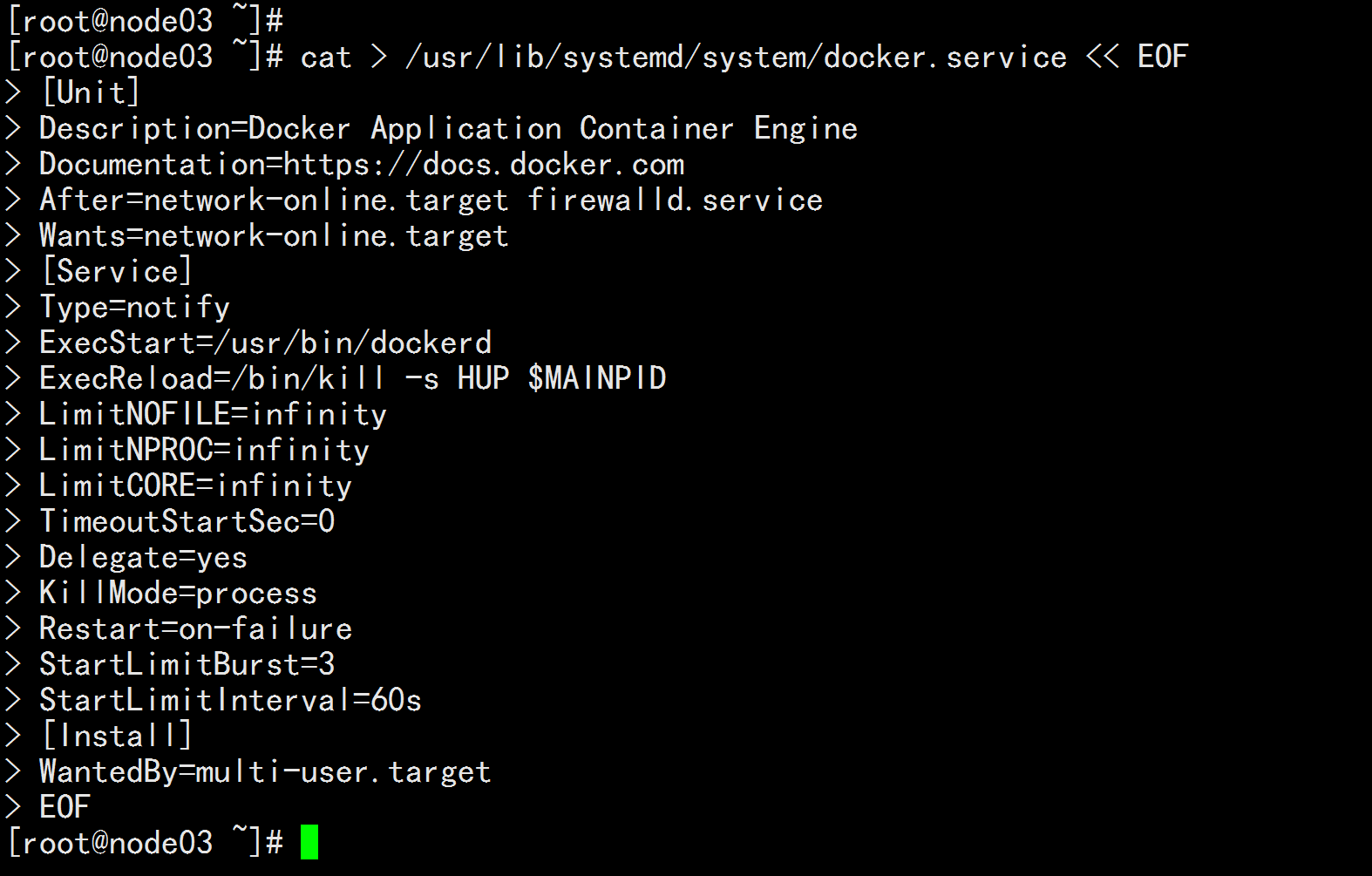

3.2 Manage docker with systemd

cat > /usr/lib/systemd/system/docker.service << EOF
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP \$MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF
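Because the unit file is written through an unquoted here-document, the shell expands any `$NAME` before the file reaches disk unless the dollar sign is escaped. The short demonstration below uses the ExecReload line as its example; it only prints strings and does not touch systemd.

```shell
#!/usr/bin/env bash
# Show how an unquoted here-document treats "$NAME" versus "\$NAME".
unset MAINPID   # normally unset in a login shell, so it expands to nothing

unescaped=$(cat << EOF
ExecReload=/bin/kill -s HUP $MAINPID
EOF
)

escaped=$(cat << EOF
ExecReload=/bin/kill -s HUP \$MAINPID
EOF
)

echo "unescaped: [$unescaped]"
echo "escaped:   [$escaped]"
```

Only the escaped form leaves a literal `$MAINPID` in the unit file for systemd to substitute at reload time. Quoting the delimiter (`<< 'EOF'`) is another way to disable expansion for the whole here-document.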

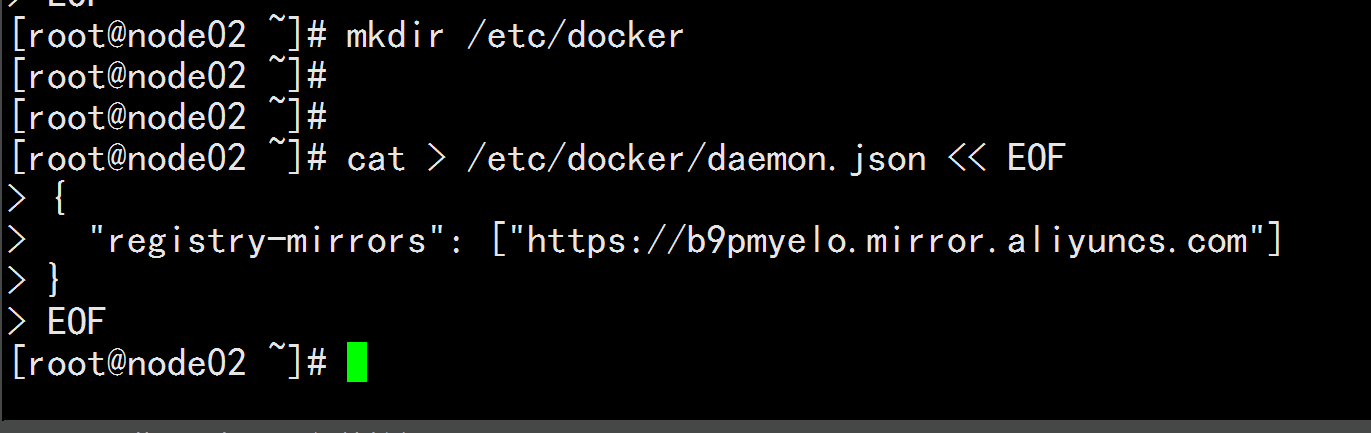

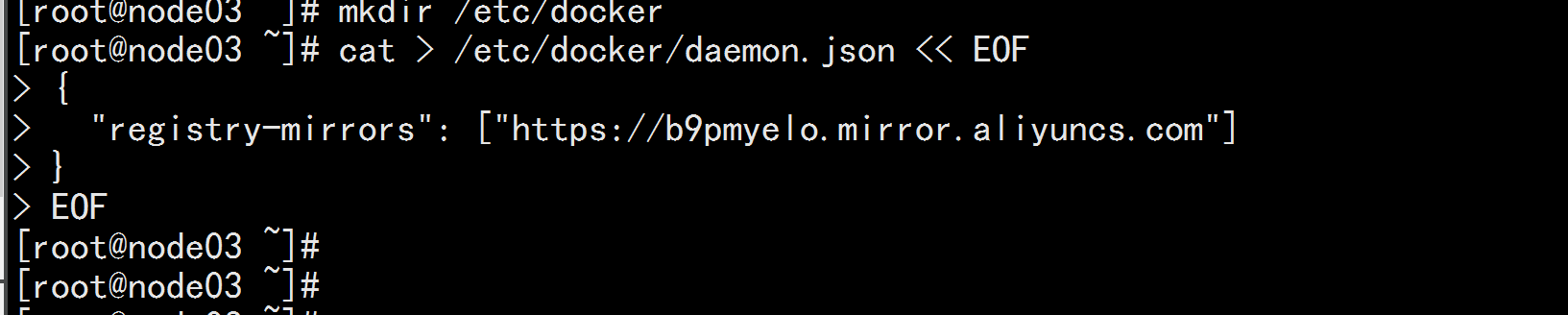

3.3 Create the configuration file

mkdir /etc/docker

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]

}

EOF

registry-mirrors: Alibaba Cloud registry mirror (image accelerator)

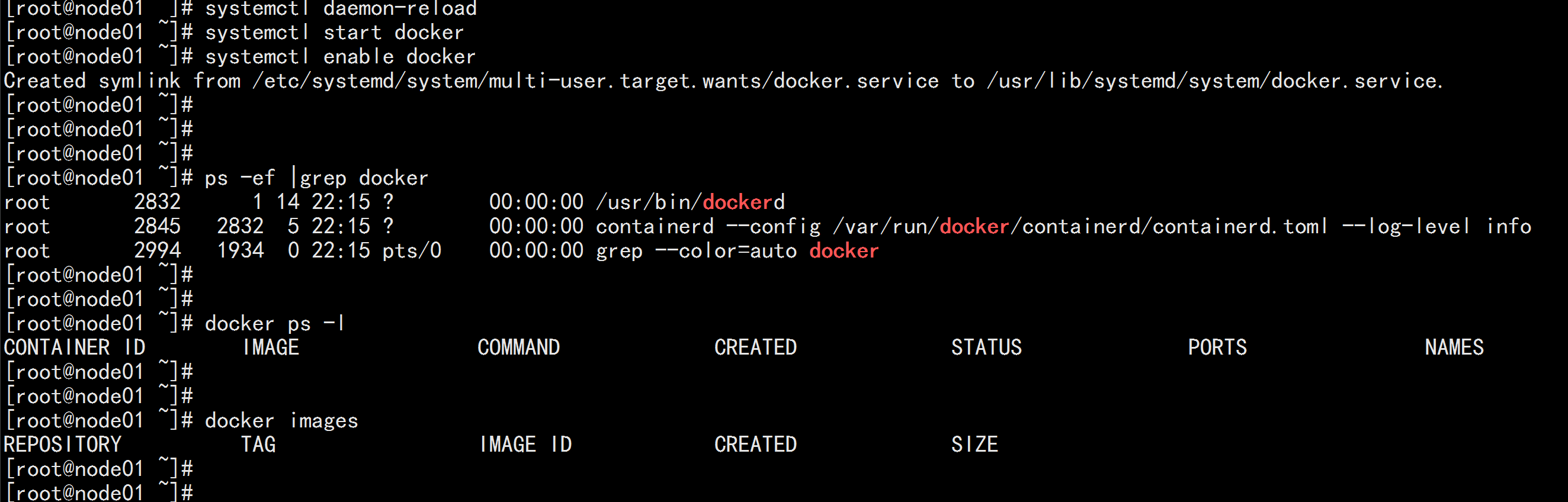

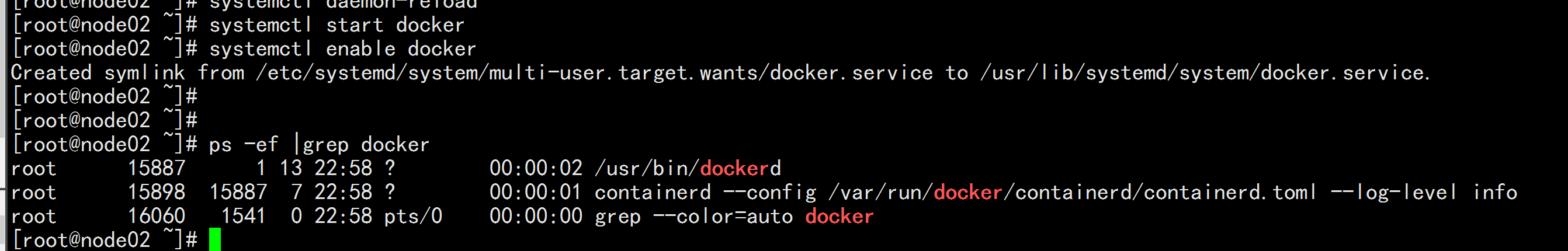

3.4 Start docker and enable it at boot

systemctl daemon-reload
systemctl start docker
systemctl enable docker

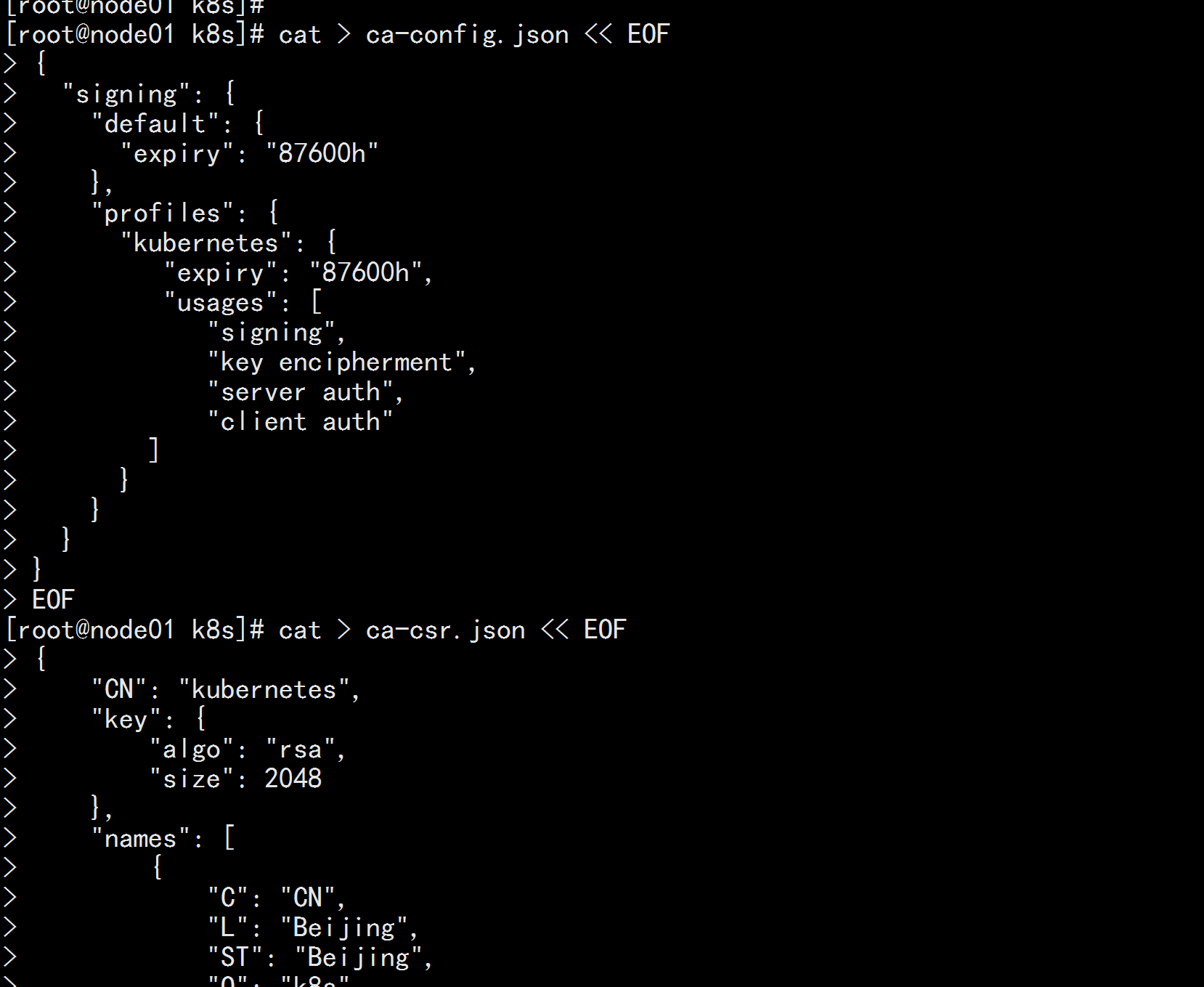

4. Deploy the Master Node

4.1 Generate the kube-apiserver certificate

1. Self-signed certificate authority (CA)

cd /root/TLS/k8s/

---

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

//Generate certificate:

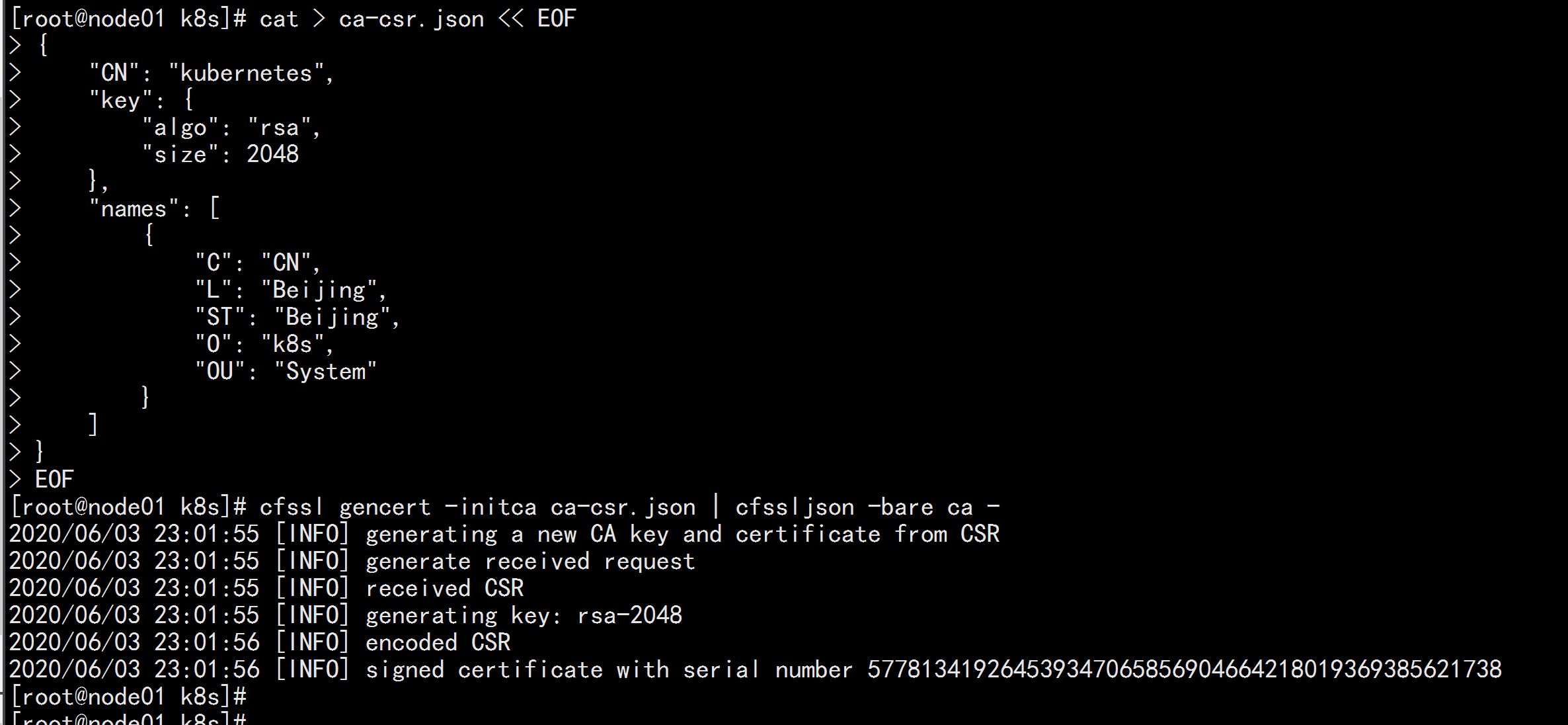

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

ls *pem

ca-key.pem ca.pem

---

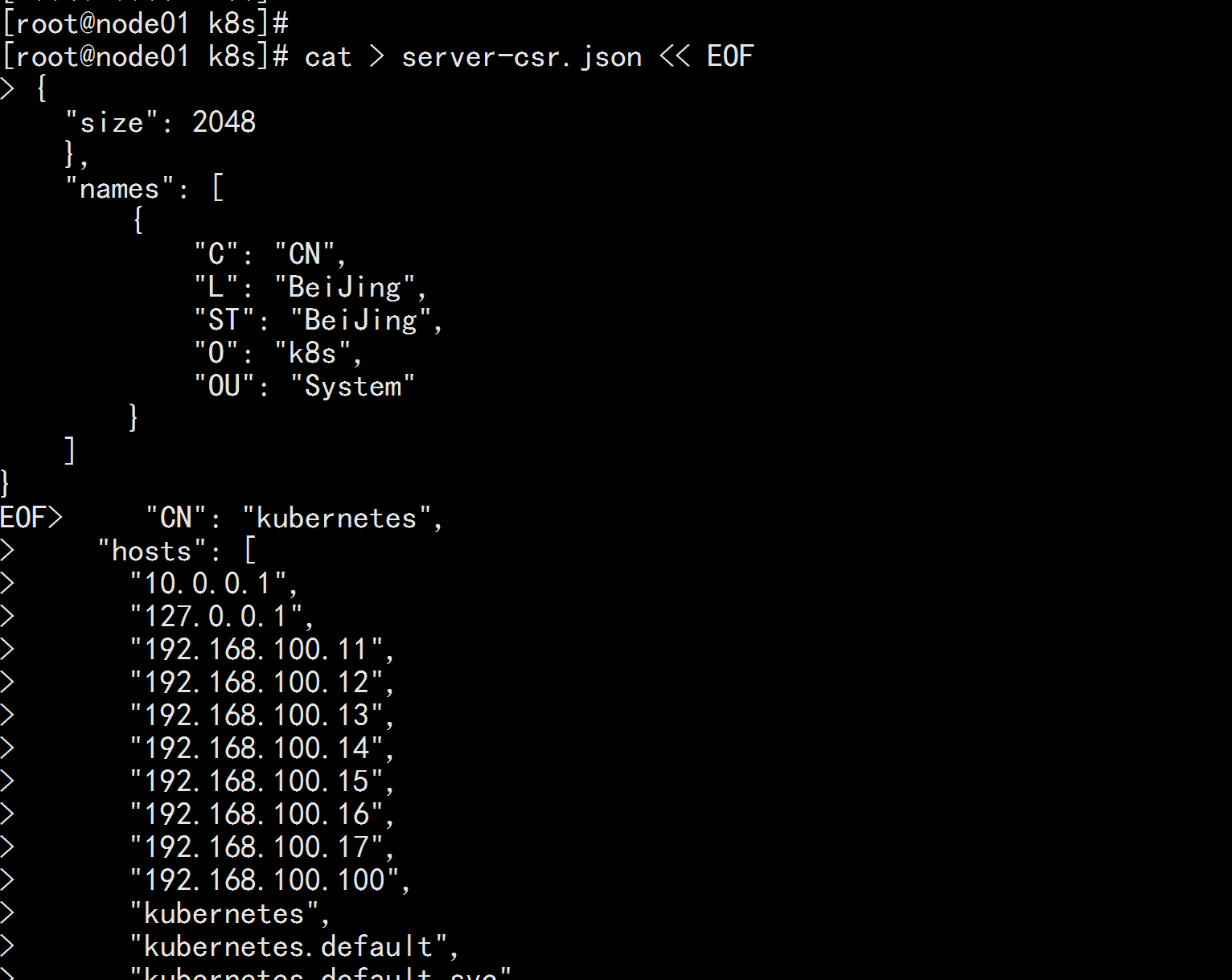

2. Use a self-signed CA to issue a kube-apiserver HTTPS certificate

Create a certificate request file:

cat > server-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.100.11",

"192.168.100.12",

"192.168.100.13",

"192.168.100.14",

"192.168.100.15",

"192.168.100.16",

"192.168.100.17",

"192.168.100.100",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Note: The IPs in the hosts field above must include every Master, LB, and VIP address; none may be omitted. To make later expansion easier, you can list a few extra reserved IPs.
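When reserving a block of addresses for later expansion, the entries for the hosts field can be generated rather than typed. A minimal sketch, assuming consecutive addresses within one /24; `hosts_json` is a hypothetical helper, not part of cfssl:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a JSON array of consecutive IPv4 addresses
# suitable for pasting into the "hosts" field of a CSR file.
# Usage: hosts_json <first-three-octets> <first-host> <last-host>
hosts_json() {
  local prefix=$1 first=$2 last=$3 i sep=""
  printf '['
  for (( i = first; i <= last; i++ )); do
    printf '%s"%s.%s"' "$sep" "$prefix" "$i"
    sep=", "
  done
  printf ']\n'
}

# The node range used in this guide.
hosts_json 192.168.100 11 17
```

The service IP, 127.0.0.1, the VIP, and the kubernetes DNS names still have to be added by hand.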

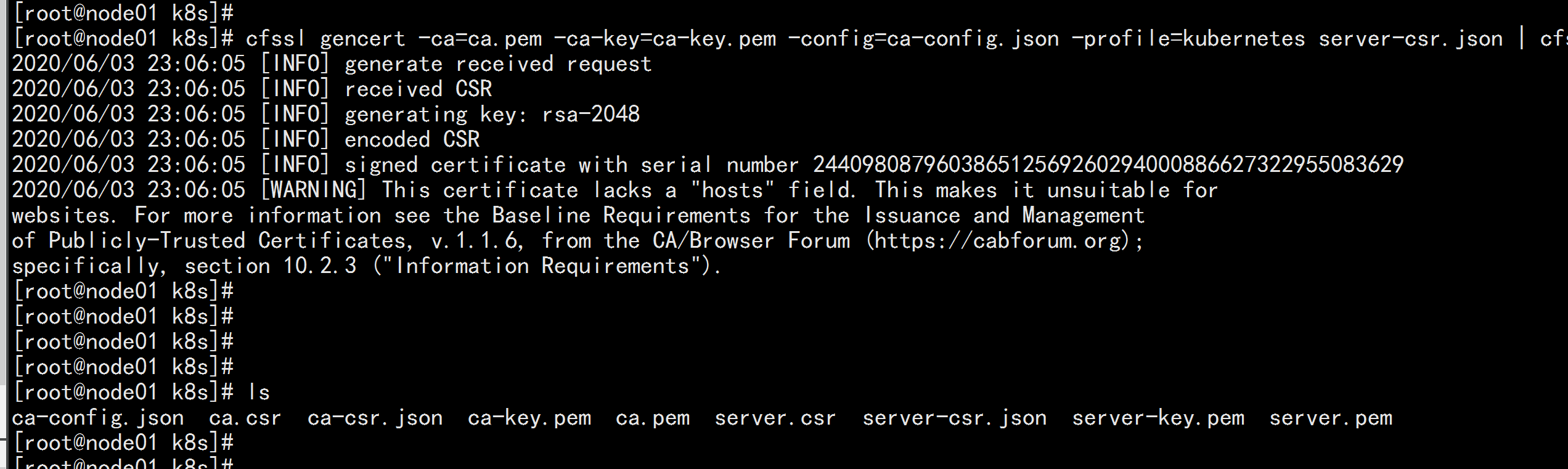

Generate the certificate:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

ls server*pem

server-key.pem server.pem

4.2 Download binaries from GitHub

Download address: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.18.md#v1183

Note: The page lists many packages. Downloading the server package is enough; it contains the binaries for both the Master and the Worker Node.

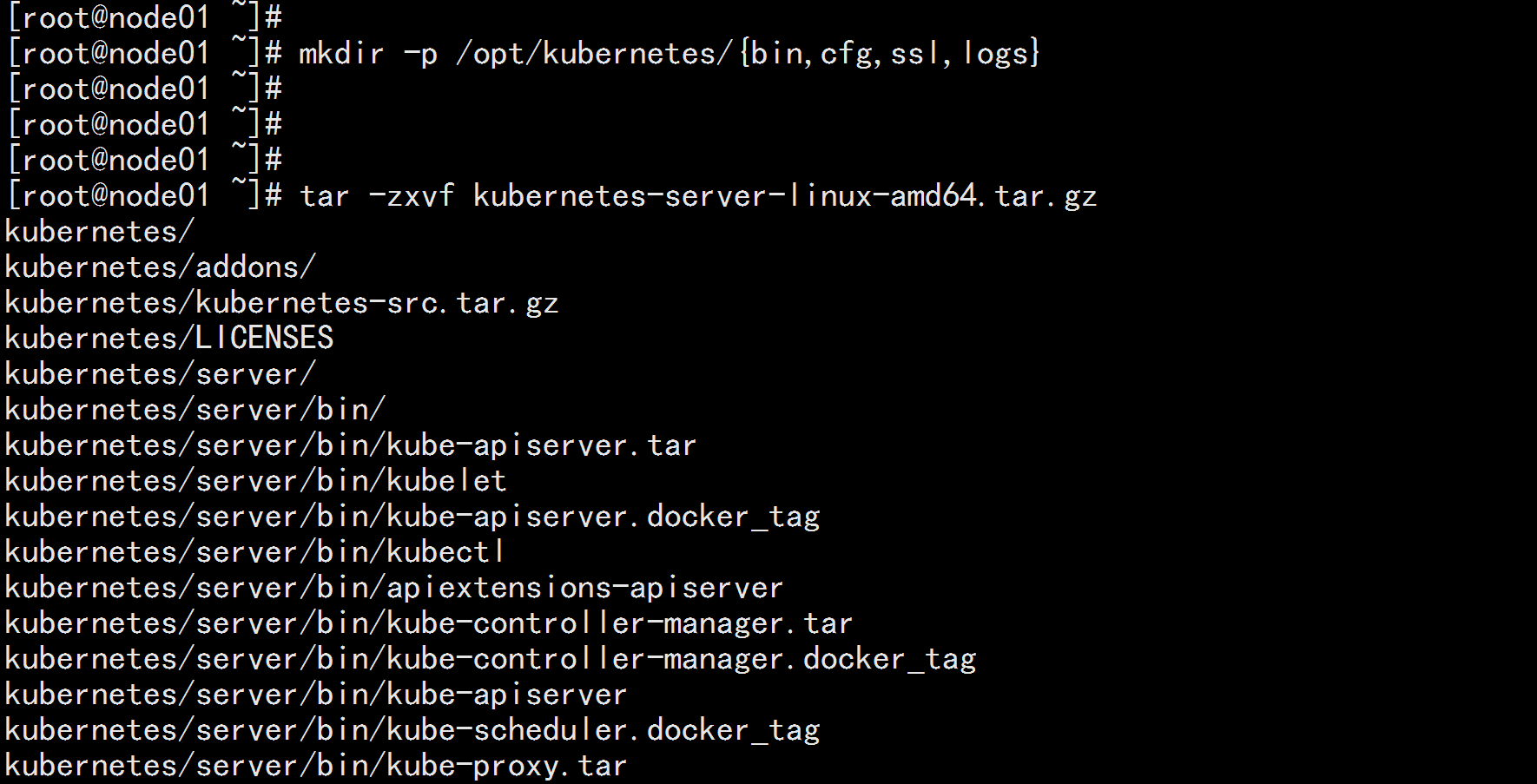

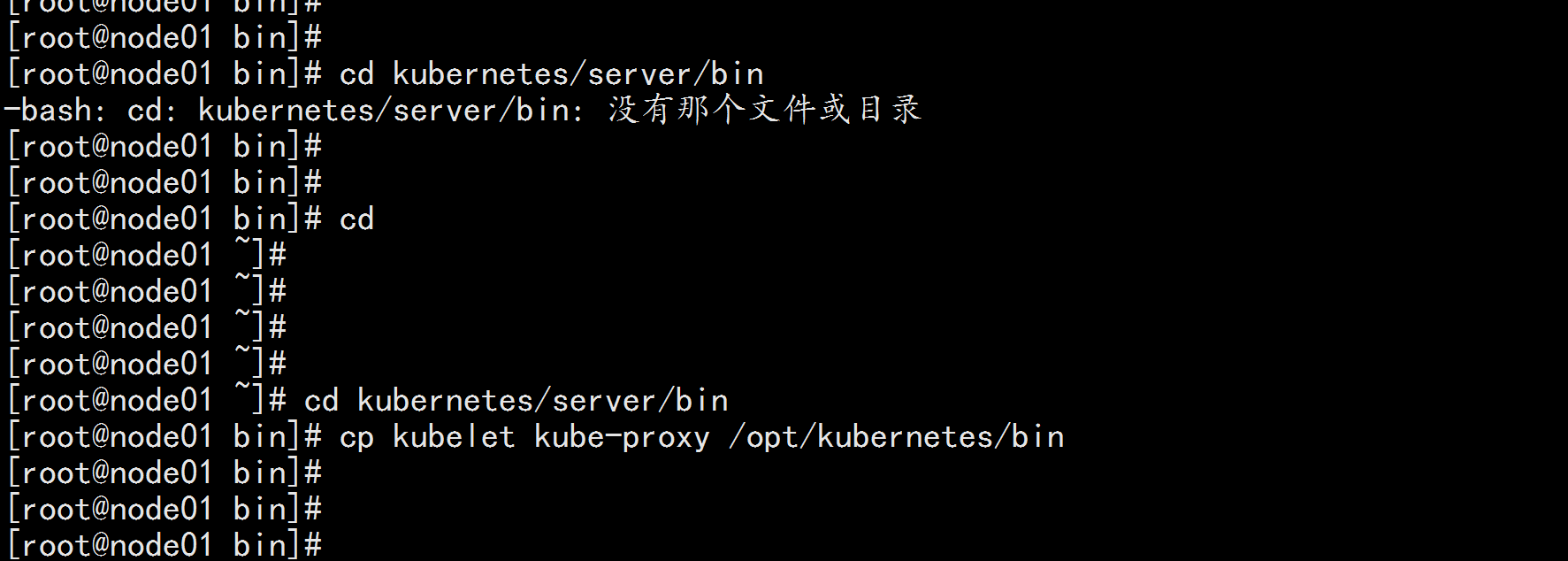

4.3 Unpack the binary package

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

tar zxvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin

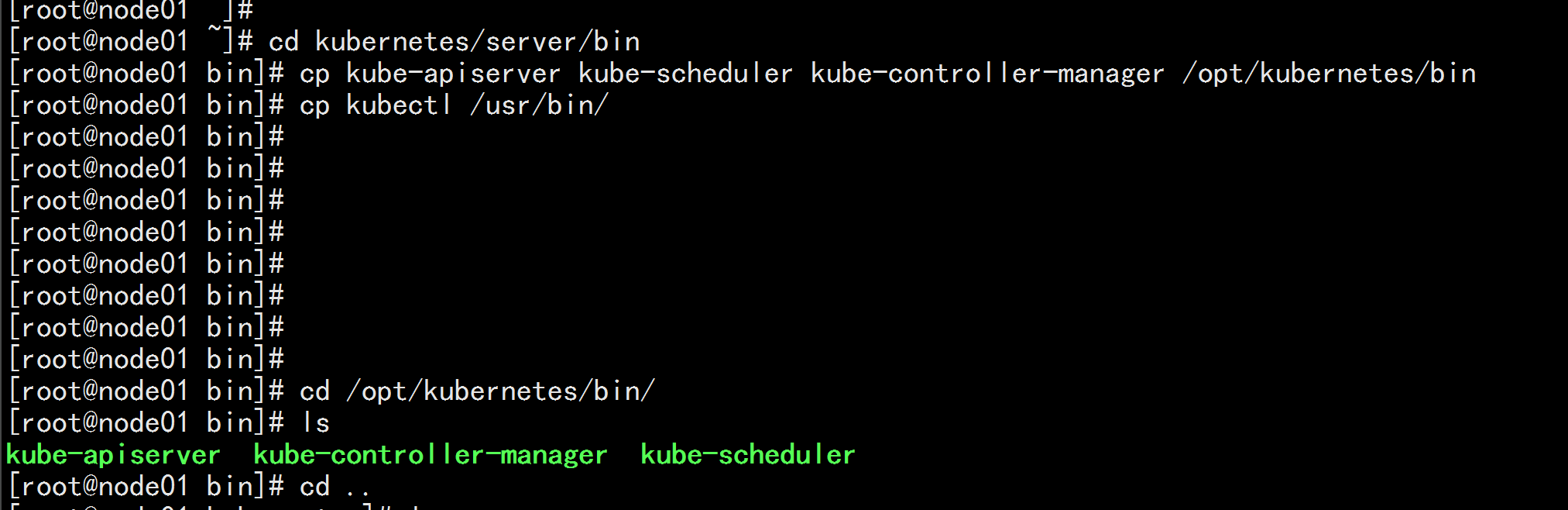

cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin

cp kubectl /usr/bin/

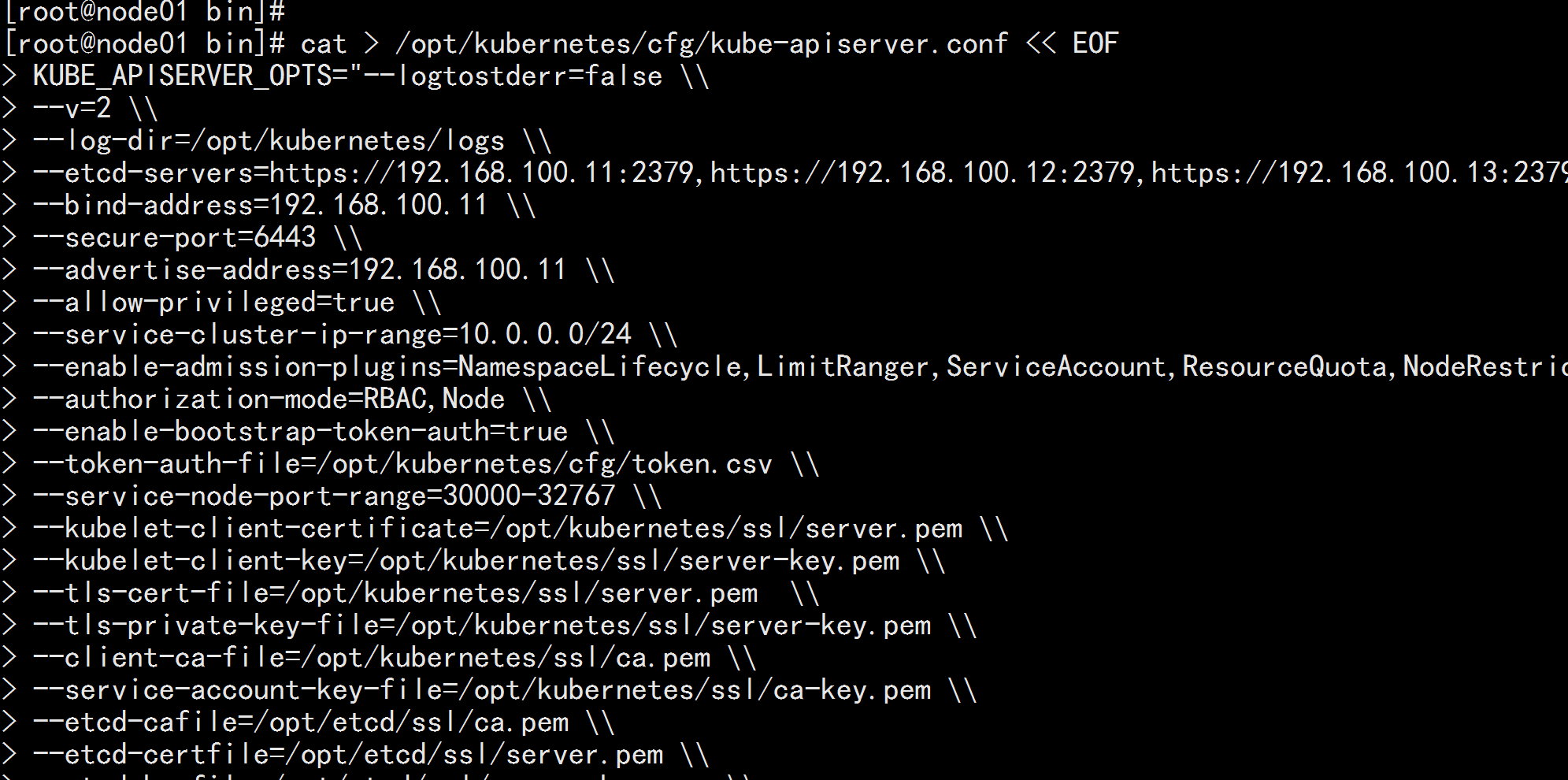

4.4 Deploy kube-apiserver

1. Create the configuration file

cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF
KUBE_APISERVER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--etcd-servers=https://192.168.100.11:2379,https://192.168.100.12:2379,https://192.168.100.13:2379 \\
--bind-address=192.168.100.11 \\
--secure-port=6443 \\
--advertise-address=192.168.100.11 \\
--allow-privileged=true \\
--service-cluster-ip-range=10.0.0.0/24 \\
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--enable-bootstrap-token-auth=true \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=30000-32767 \\
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\
--tls-cert-file=/opt/kubernetes/ssl/server.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--etcd-cafile=/opt/etcd/ssl/ca.pem \\
--etcd-certfile=/opt/etcd/ssl/server.pem \\
--etcd-keyfile=/opt/etcd/ssl/server-key.pem \\
--audit-log-maxage=30 \\
--audit-log-maxbackup=3 \\
--audit-log-maxsize=100 \\
--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"
EOF

---

Note: Of the two backslashes at the end of each line, the first is an escape character and the second is the line-continuation character. The escape is needed so that the here-document (EOF) writes the backslash and the line break to the file unchanged.

- logtostderr: whether to log to stderr; false writes logs to files under log-dir
- v: log level
- log-dir: log directory
- etcd-servers: etcd cluster addresses
- bind-address: listening address
- secure-port: HTTPS secure port
- advertise-address: address advertised to the cluster
- allow-privileged: allow privileged containers
- service-cluster-ip-range: virtual IP address range for Services
- enable-admission-plugins: admission control plugins
- authorization-mode: authorization mode; enables RBAC authorization and Node self-management
- enable-bootstrap-token-auth: enable the TLS bootstrap mechanism
- token-auth-file: bootstrap token file
- service-node-port-range: default port range allocated to NodePort Services
- kubelet-client-xxx: client certificate that apiserver uses to access kubelet
- tls-xxx-file: apiserver HTTPS certificate
- etcd-xxxfile: certificates for connecting to the Etcd cluster
- audit-log-xxx: audit log settings

---

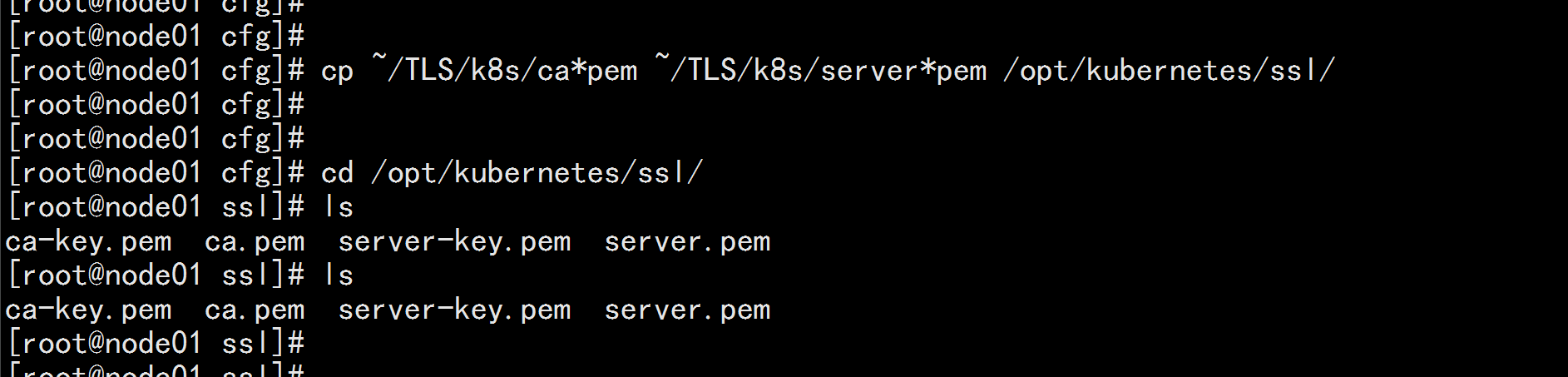

2. Copy the certificates just generated

Copy the certificates you just generated to the path referenced in the configuration file:

cp ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem /opt/kubernetes/ssl/

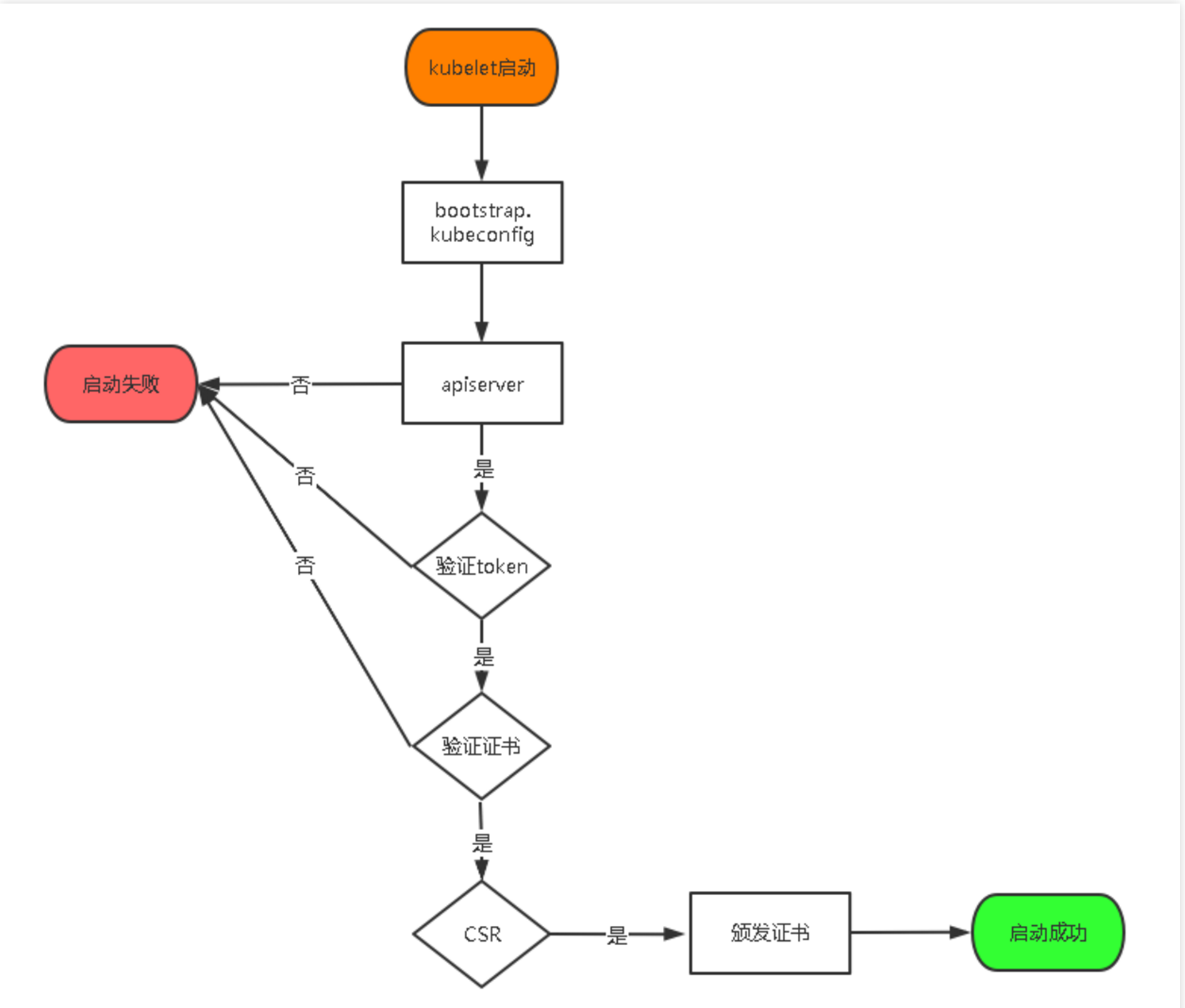

3. Enable the TLS bootstrapping mechanism

TLS bootstrapping: once the Master apiserver enables TLS authentication, the kubelet and kube-proxy on every Node must use valid certificates issued by the CA to communicate with kube-apiserver. When there are many Nodes, issuing these client certificates by hand is a lot of work and makes cluster expansion more complex. To simplify the process, Kubernetes introduced the TLS bootstrapping mechanism to issue client certificates automatically: the kubelet requests a certificate from apiserver as a low-privileged user, and the certificate is then signed dynamically by the cluster CA. This method is strongly recommended on Nodes. At present it is used mainly for kubelet; kube-proxy still uses a single certificate that we issue ourselves.

TLS bootstrapping workflow:

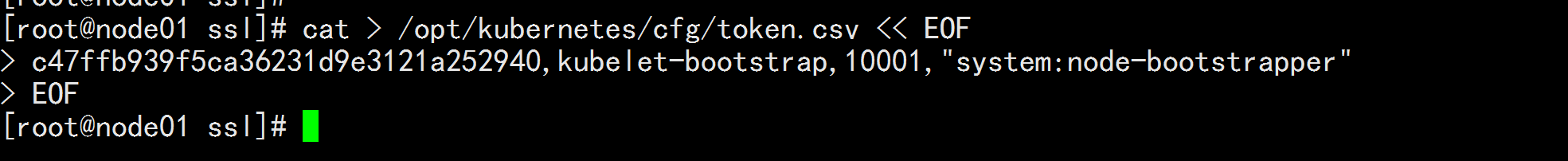

Create the token file referenced in the configuration file above:

cat > /opt/kubernetes/cfg/token.csv << EOF
c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"
EOF

Format: token, user name, UID, user group

You can also generate your own token and substitute it:

head -c 16 /dev/urandom | od -An -t x | tr -d ' '
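Generating the token and formatting the line can be combined so the two always match. A sketch, with function names chosen here for illustration; the token is built with the same head/od/tr pipeline as in the text:

```shell
#!/usr/bin/env bash
# Generate a random 32-character hex token and print a token.csv line.

gen_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Usage: token_csv_line <token> <user> <uid> <group>
token_csv_line() {
  printf '%s,%s,%s,"%s"\n' "$1" "$2" "$3" "$4"
}

token_csv_line "$(gen_token)" kubelet-bootstrap 10001 system:node-bootstrapper
```

The same token value must later be placed in the bootstrap kubeconfig used by the kubelet.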

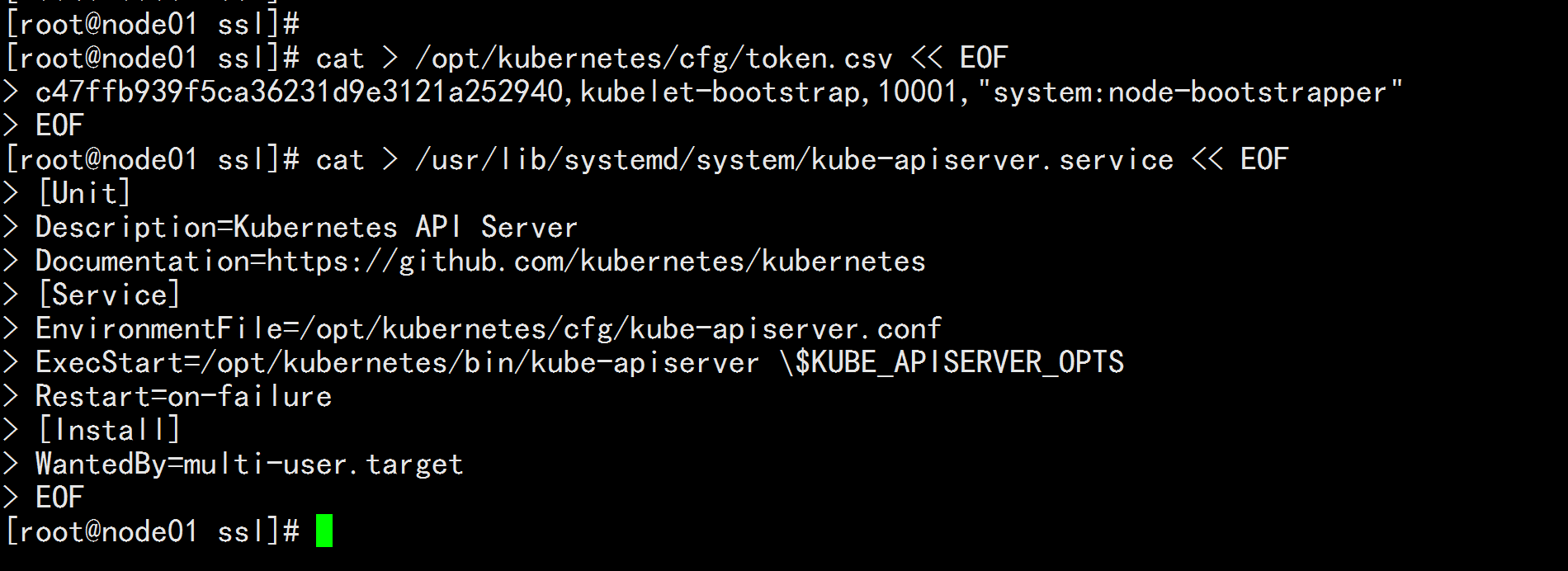

4. Manage apiserver with systemd

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf
ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

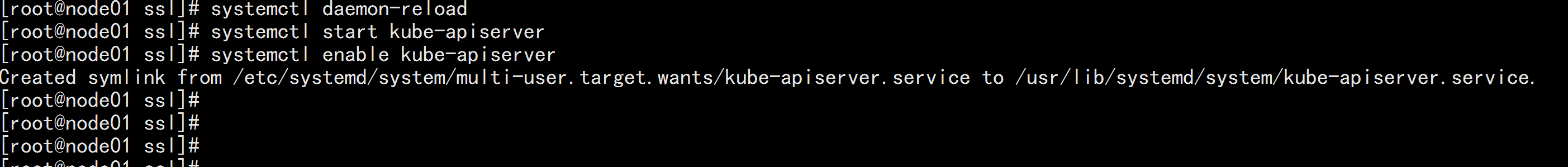

5. Start kube-apiserver and enable it at boot

systemctl daemon-reload
systemctl start kube-apiserver
systemctl enable kube-apiserver

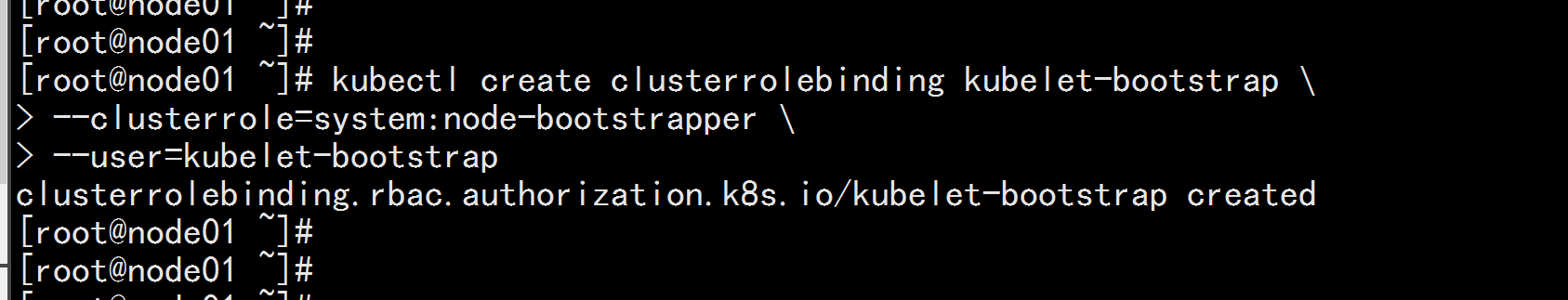

6. Authorize the kubelet-bootstrap user to request certificates

kubectl create clusterrolebinding kubelet-bootstrap \
--clusterrole=system:node-bootstrapper \
--user=kubelet-bootstrap

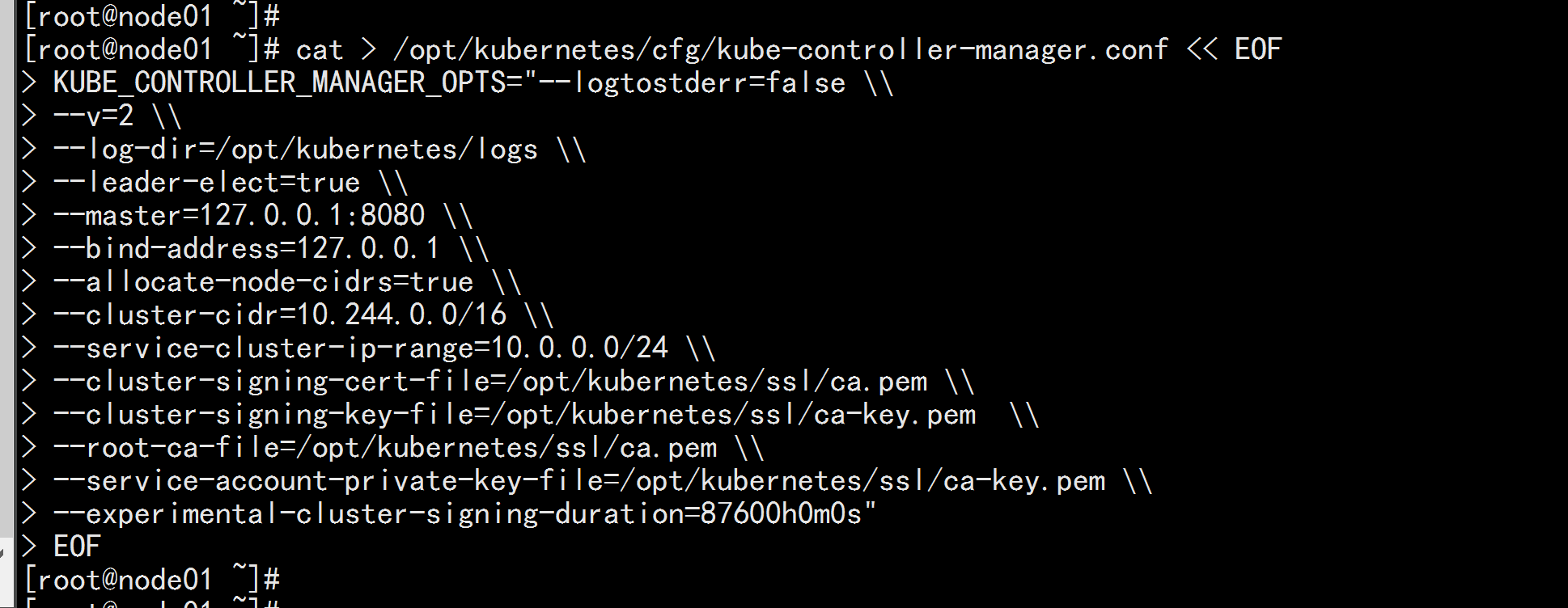

4.5 Deploy kube-controller-manager

1. Create the configuration file

cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--leader-elect=true \\
--master=127.0.0.1:8080 \\
--bind-address=127.0.0.1 \\
--allocate-node-cidrs=true \\
--cluster-cidr=10.244.0.0/16 \\
--service-cluster-ip-range=10.0.0.0/24 \\
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--root-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--experimental-cluster-signing-duration=87600h0m0s"
EOF

- master: connect to apiserver through the local insecure port 8080
- leader-elect: elect a leader automatically when several instances of the component are running (HA)
- cluster-signing-cert-file / cluster-signing-key-file: the CA that automatically issues certificates for kubelet; must be the same CA that apiserver uses

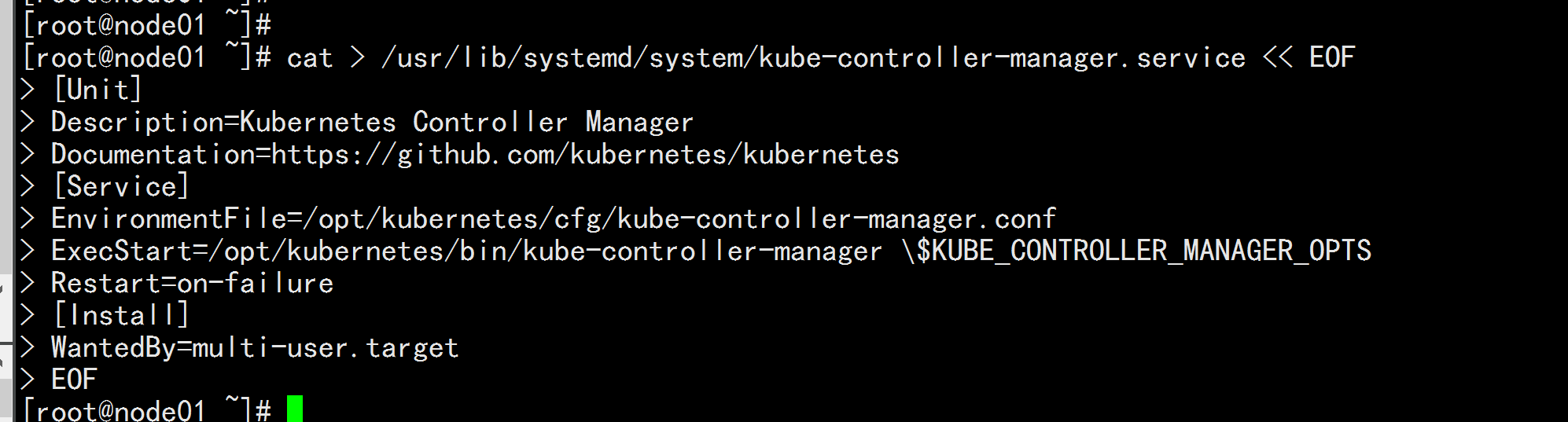

2. Manage controller-manager with systemd

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

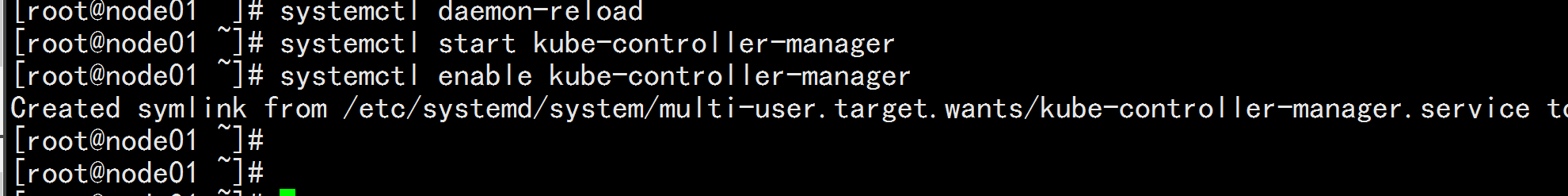

3. Start kube-controller-manager and enable it at boot

systemctl daemon-reload
systemctl start kube-controller-manager
systemctl enable kube-controller-manager

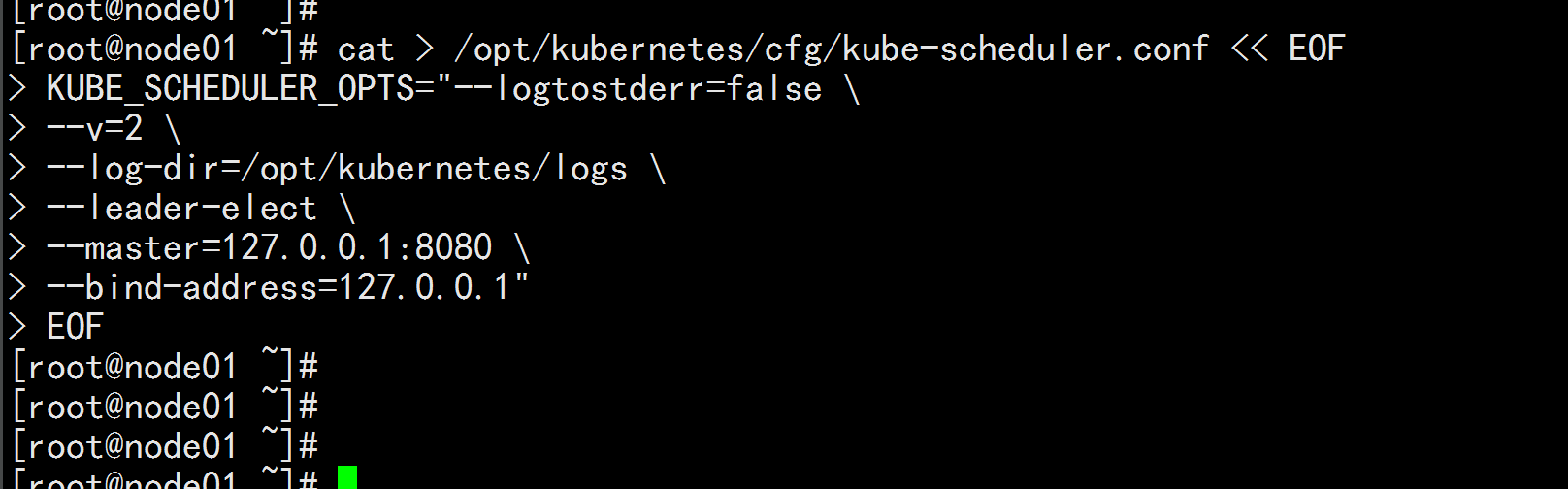

4.6 Deploy kube-scheduler

1. Create the configuration file

cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF
KUBE_SCHEDULER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--leader-elect \\
--master=127.0.0.1:8080 \\
--bind-address=127.0.0.1"
EOF

- master: connect to apiserver through the local insecure port 8080
- leader-elect: elect a leader automatically when several instances of the component are running (HA)

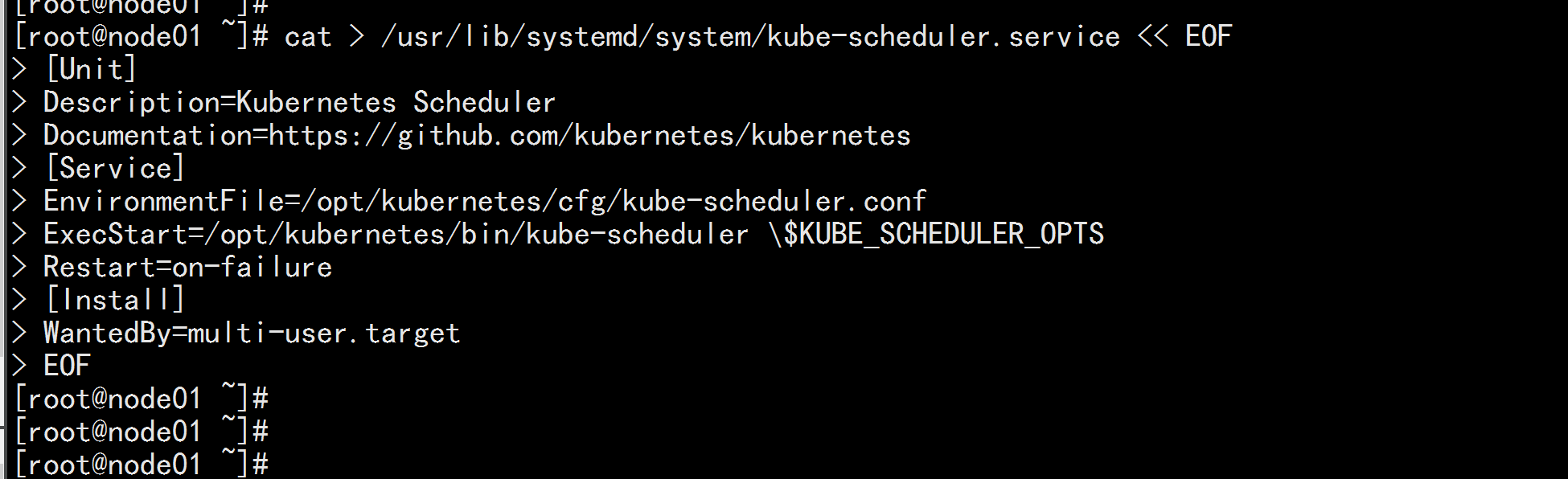

2. Manage scheduler with systemd

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf
ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

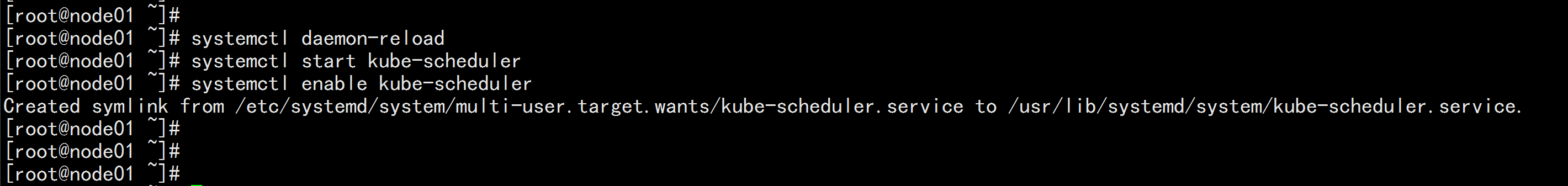

3. Start kube-scheduler and enable it at boot

systemctl daemon-reload
systemctl start kube-scheduler
systemctl enable kube-scheduler

4. View the cluster status

All components have now been started. Check the current state of the cluster components with kubectl:

kubectl get cs

If every component is reported as Healthy, the Master node components are running properly.

5. Deploy the Worker Node

The following steps are still performed on the Master Node, which also acts as a Worker Node.

5.1 Create a working directory and copy binary files

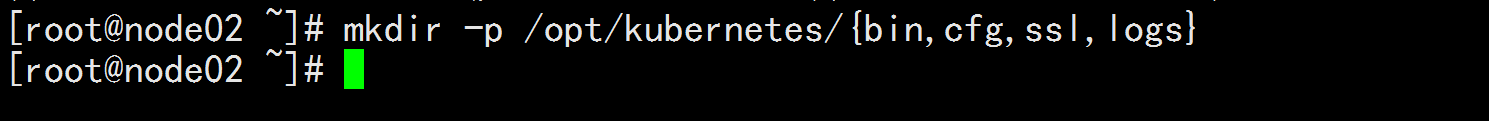

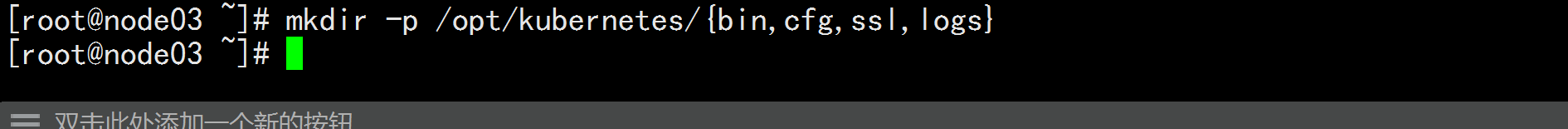

Create a working directory on all worker nodes:

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

Copy the binaries from the master node:

cd kubernetes/server/bin
cp kubelet kube-proxy /opt/kubernetes/bin  # local copy

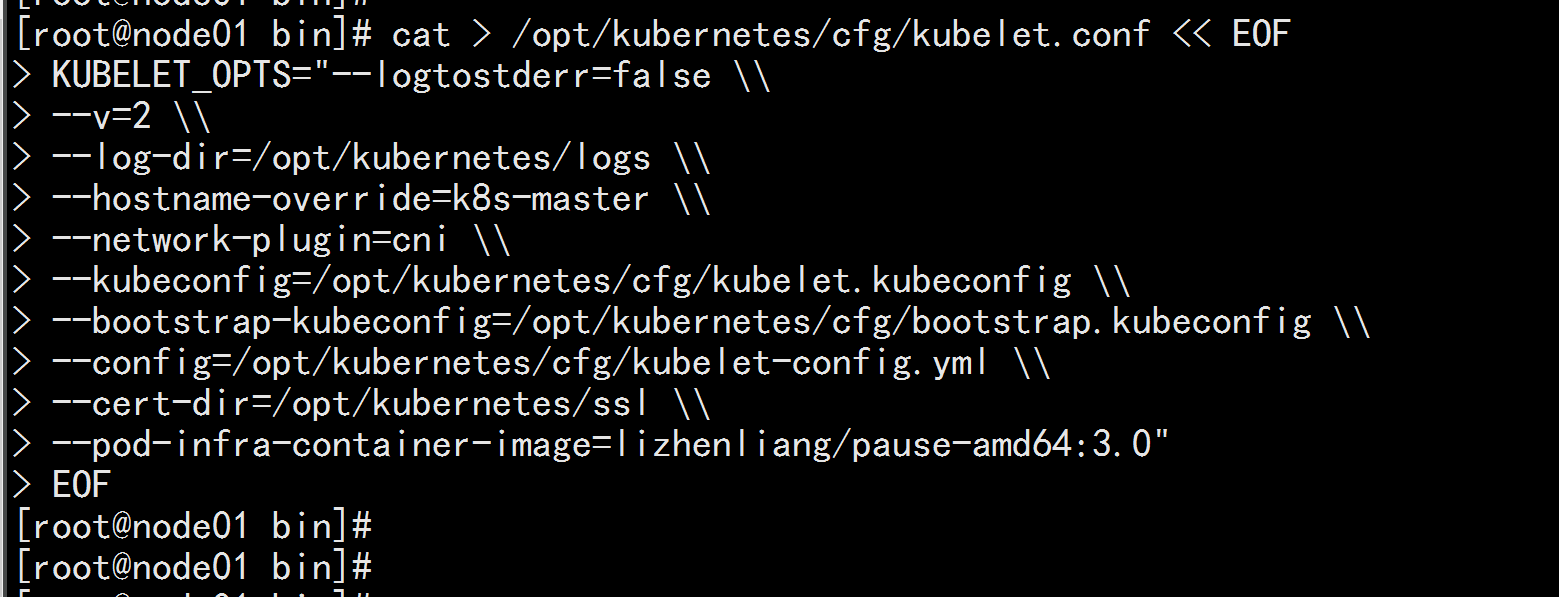

Run the above on the master node.

5.2 Deploy kubelet

1. Create the configuration file

cat > /opt/kubernetes/cfg/kubelet.conf << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--hostname-override=node01.flyfish \\
--network-plugin=cni \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet-config.yml \\
--cert-dir=/opt/kubernetes/ssl \\
--pod-infra-container-image=lizhenliang/pause-amd64:3.0"
EOF

---

- hostname-override: display name, unique within the cluster
- network-plugin: enable CNI
- kubeconfig: a path that does not exist yet; the file is generated automatically and later used to connect to apiserver
- bootstrap-kubeconfig: used to request a certificate from apiserver on first start
- config: configuration parameter file
- cert-dir: directory where kubelet certificates are generated
- pod-infra-container-image: image of the infrastructure container that manages the Pod network

---

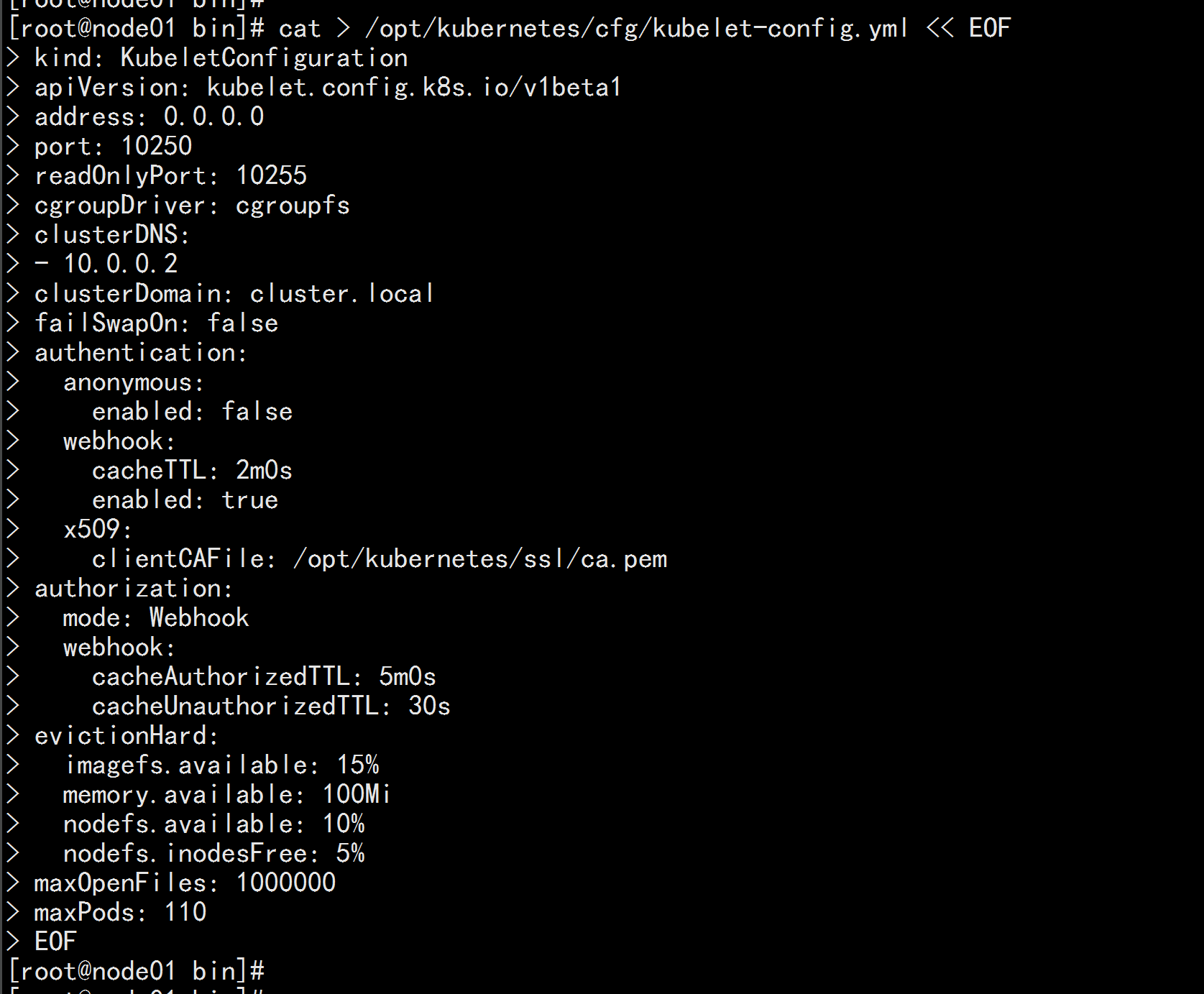

2. Configuration parameter file

cat > /opt/kubernetes/cfg/kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
EOF

Run the above on the server node.

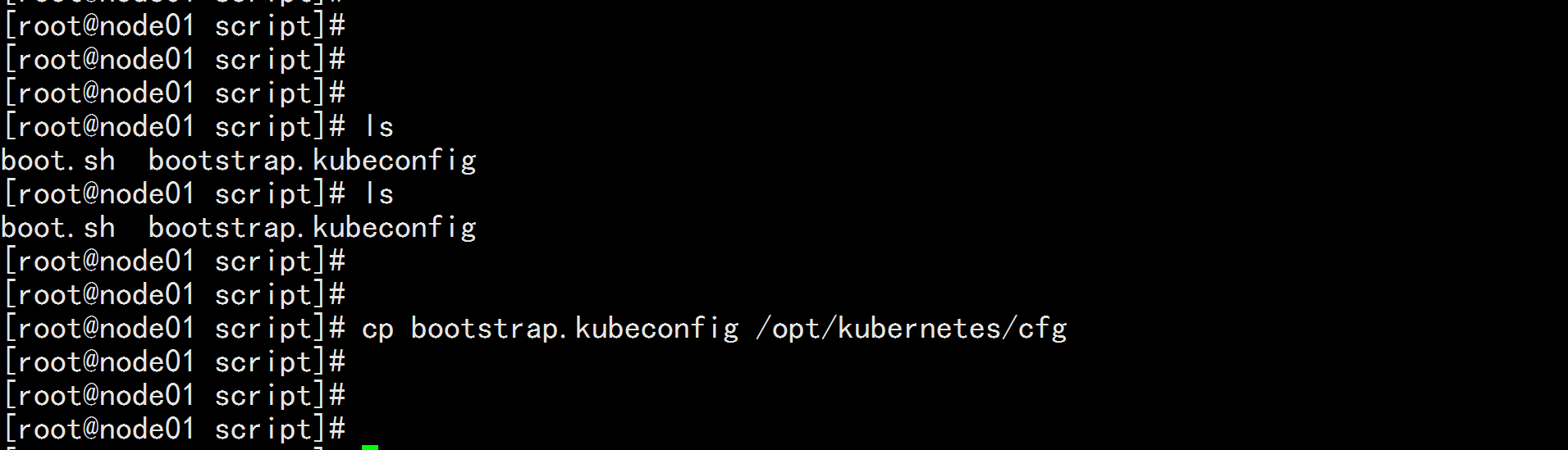

3. Generate the bootstrap.kubeconfig file

Write a script named boot.sh with the following content:

---

KUBE_APISERVER="https://192.168.100.11:6443"  # apiserver IP:PORT
TOKEN="c47ffb939f5ca36231d9e3121a252940"  # must match the token in token.csv

# Generate the kubelet bootstrap kubeconfig configuration file
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig

kubectl config set-credentials "kubelet-bootstrap" \
  --token=${TOKEN} \
  --kubeconfig=bootstrap.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user="kubelet-bootstrap" \
  --kubeconfig=bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

---

. ./boot.sh

Copy the file to the configuration path:

cp bootstrap.kubeconfig /opt/kubernetes/cfg

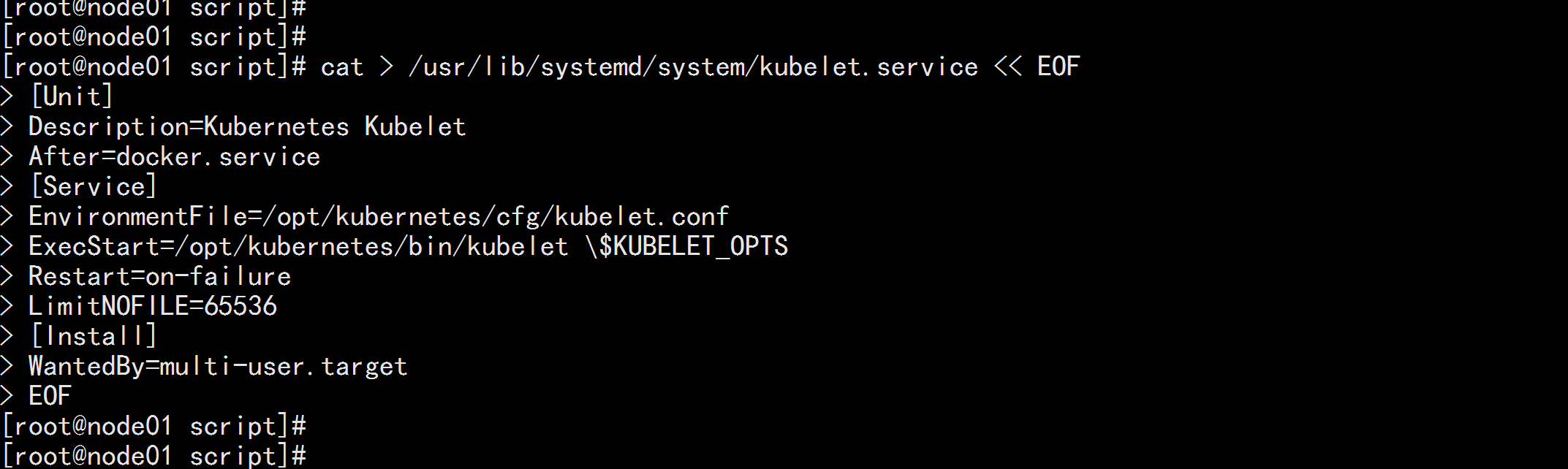

4. Manage kubelet with systemd

cat > /usr/lib/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
After=docker.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf
ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

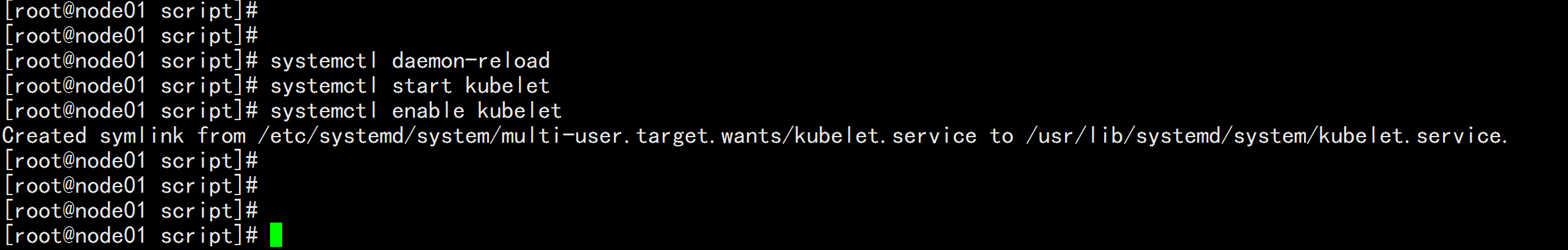

5. Start kubelet and enable it at boot

systemctl daemon-reload
systemctl start kubelet
systemctl enable kubelet

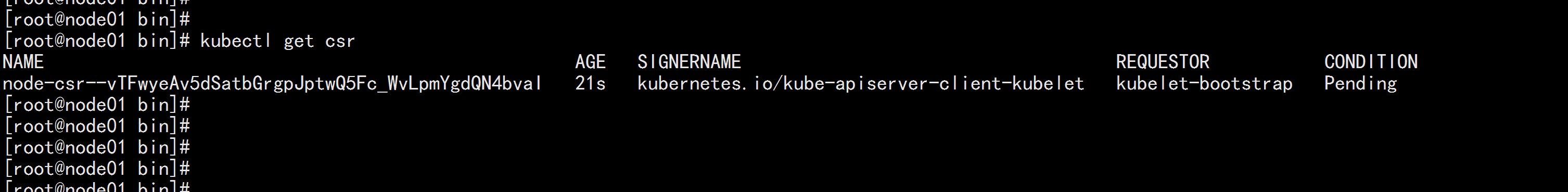

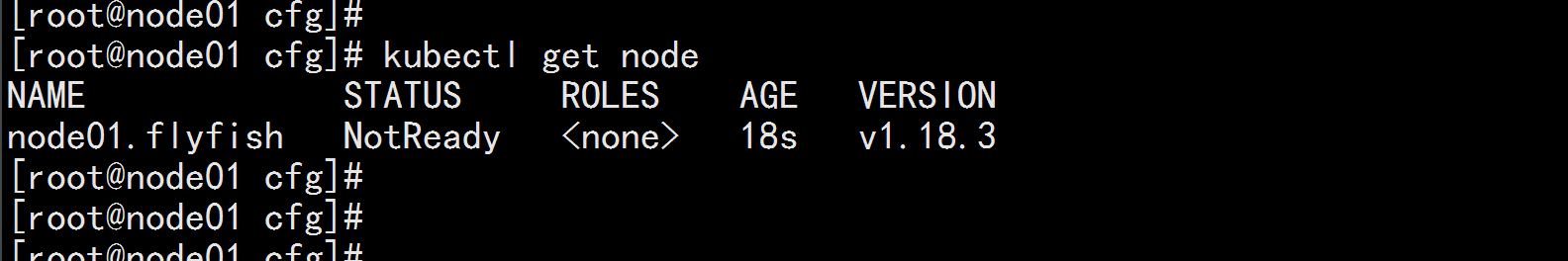

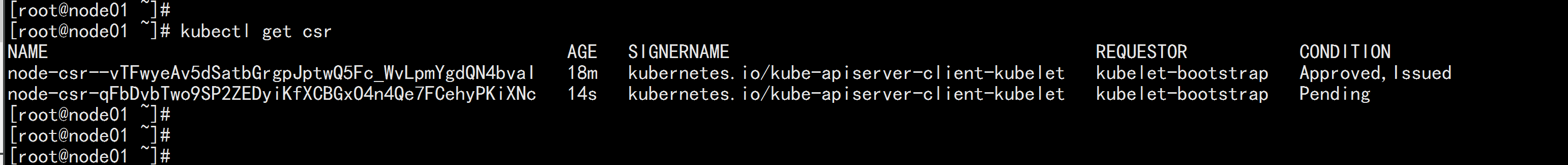

5.3 Approve the kubelet certificate request and join the cluster

# View the kubelet certificate requests
kubectl get csr

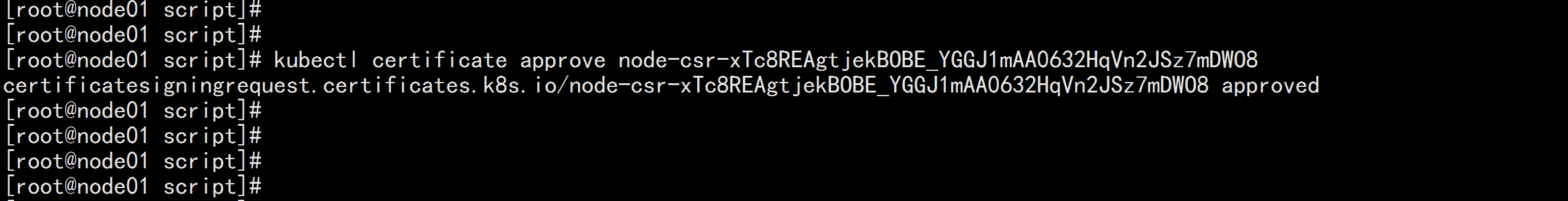

# Approve the request
kubectl certificate approve node-csr--vTFwyeAv5dSatbGrgpJptwQ5Fc_WvLpmYgdQN4bvaI

Note: Because the network plug-in has not been deployed yet, the node stays in the NotReady state.
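With several nodes joining, the names of pending requests can be pulled out of the listing instead of being copied by hand. This sketch assumes the tabular `kubectl get csr` layout in which the first column is the name and the last column is the condition; `pending_csrs` is a hypothetical helper, and the sample input is illustrative rather than captured output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names of CSRs whose last column is "Pending".
# Reads `kubectl get csr` output on stdin.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Illustrative input; on a live cluster this would be: kubectl get csr | pending_csrs
pending_csrs << 'EOF'
NAME                                                   AGE   SIGNERNAME                                    REQUESTOR           CONDITION
node-csr--vTFwyeAv5dSatbGrgpJptwQ5Fc_WvLpmYgdQN4bvaI   28s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending
EOF
```

Review the printed names before passing them to kubectl certificate approve, since approving a request admits that node to the cluster.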

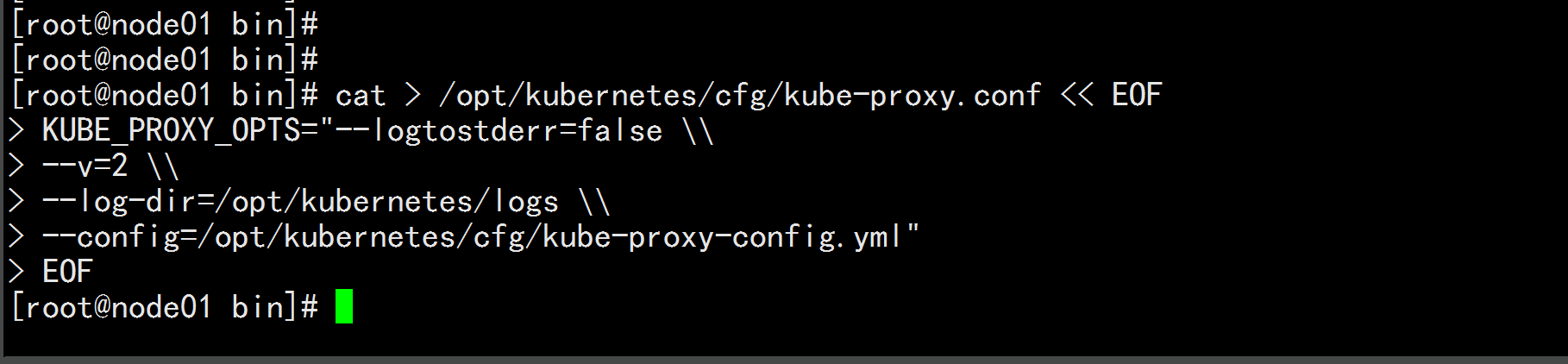

5.4 Deploy kube-proxy

1. Create the configuration file

cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF
KUBE_PROXY_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--config=/opt/kubernetes/cfg/kube-proxy-config.yml"
EOF

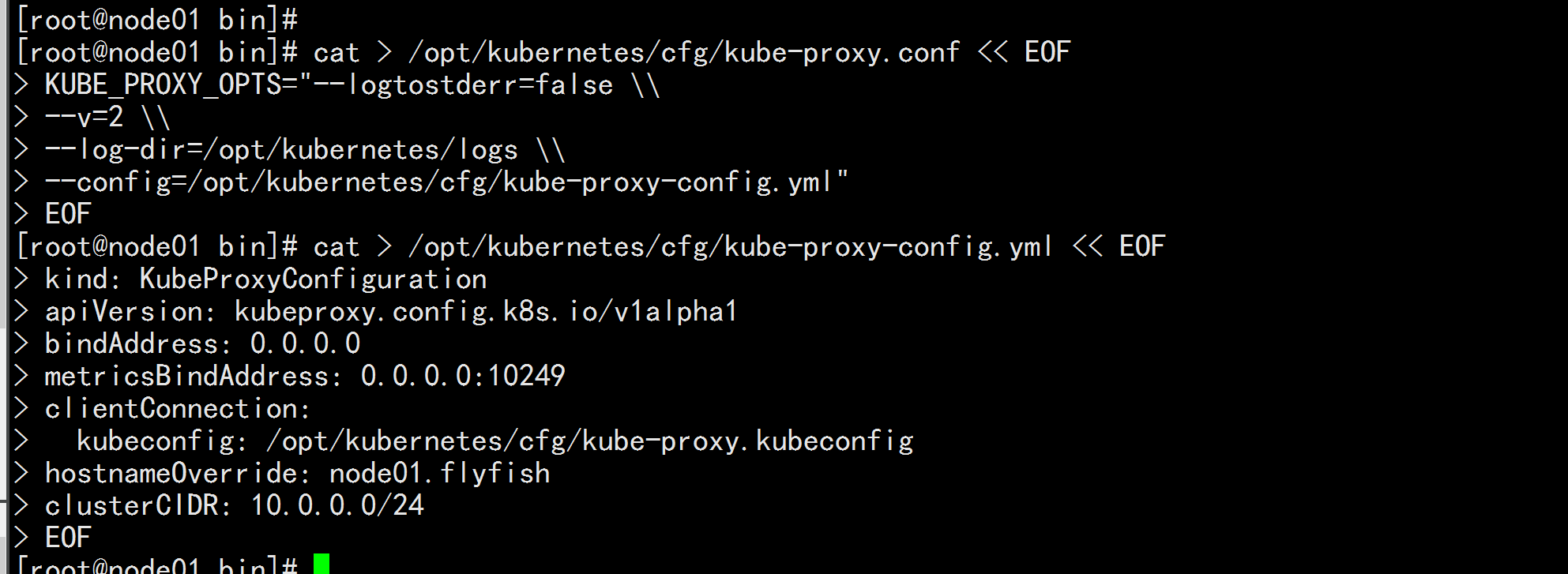

2. Configuration parameter file

cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: node01.flyfish
clusterCIDR: 10.0.0.0/24
EOF

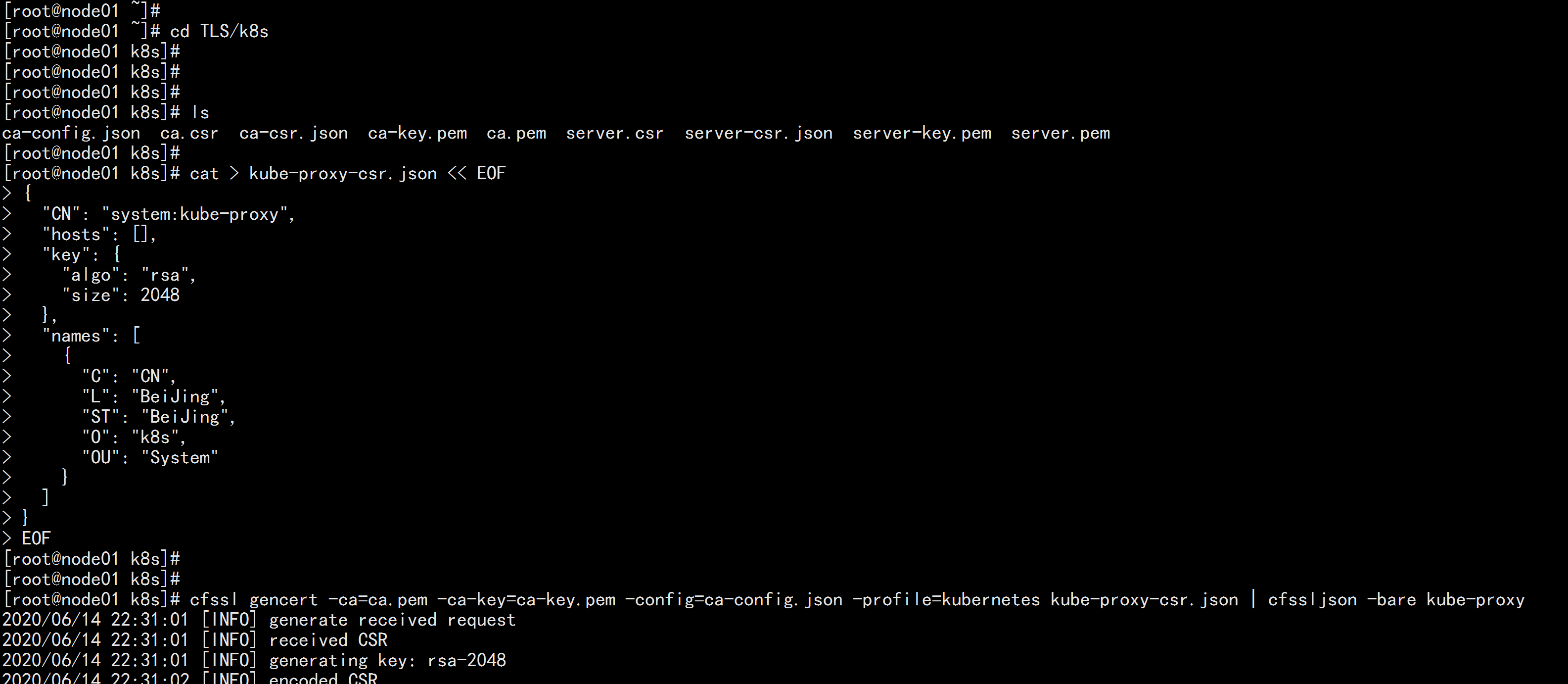

# Switch working directory

cd ~/TLS/k8s

# Create Certificate Request File

cat > kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

---

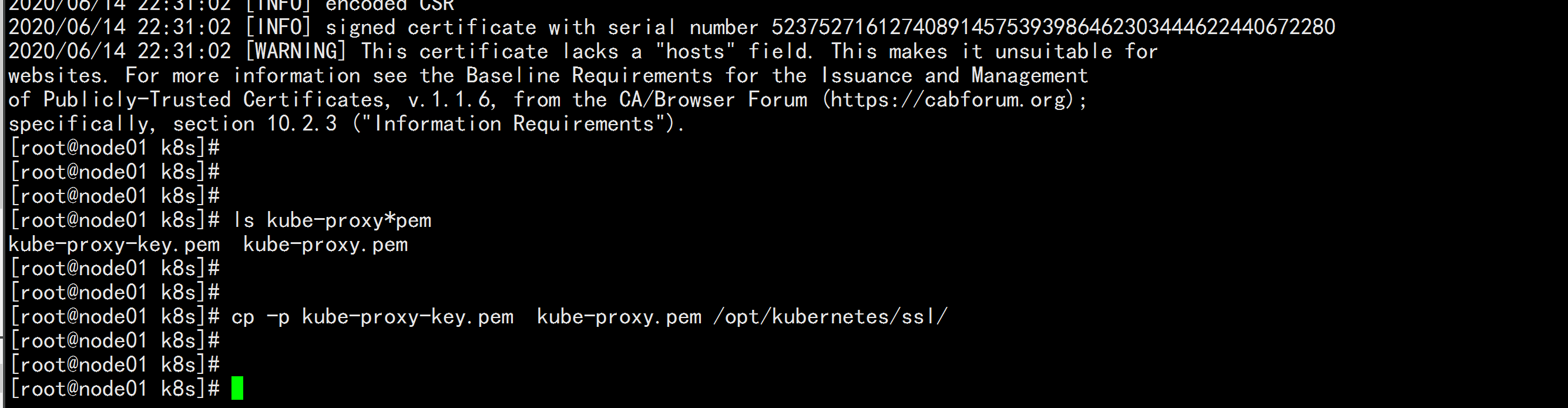

# Generate Certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

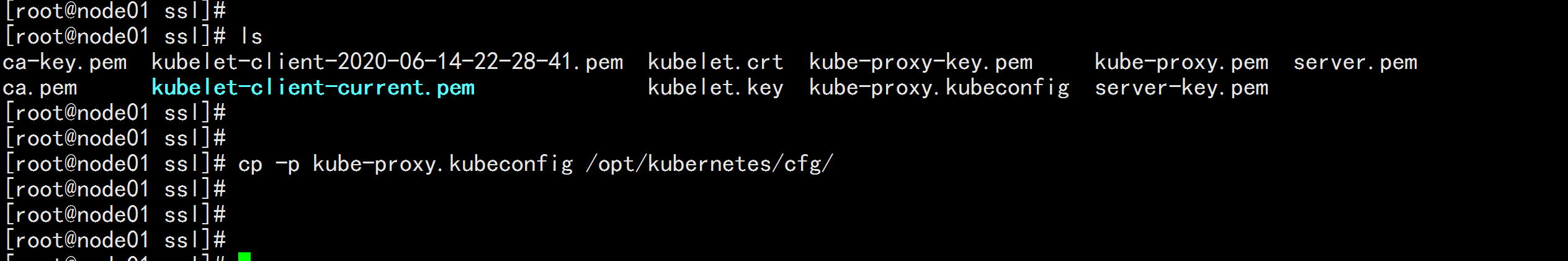

ls kube-proxy*pem

kube-proxy-key.pem kube-proxy.pem

cp -p kube-proxy-key.pem kube-proxy.pem /opt/kubernetes/ssl/

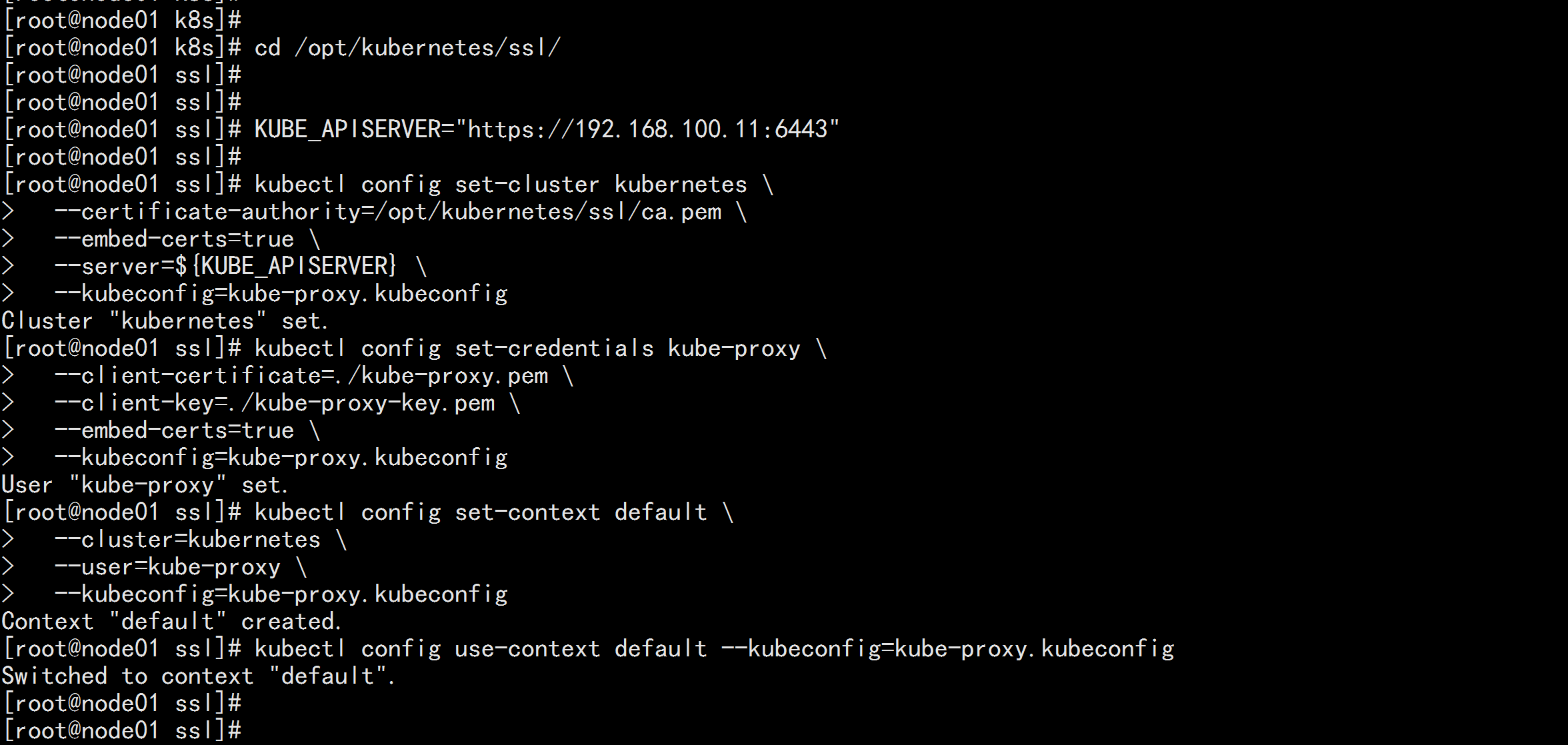

Generate the kubeconfig file:

cd /opt/kubernetes/ssl/

vim kubeconfig.sh

---

KUBE_APISERVER="https://192.168.100.11:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=./kube-proxy.pem \
  --client-key=./kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

---

. ./kubeconfig.sh

cp -p kube-proxy.kubeconfig /opt/kubernetes/cfg/

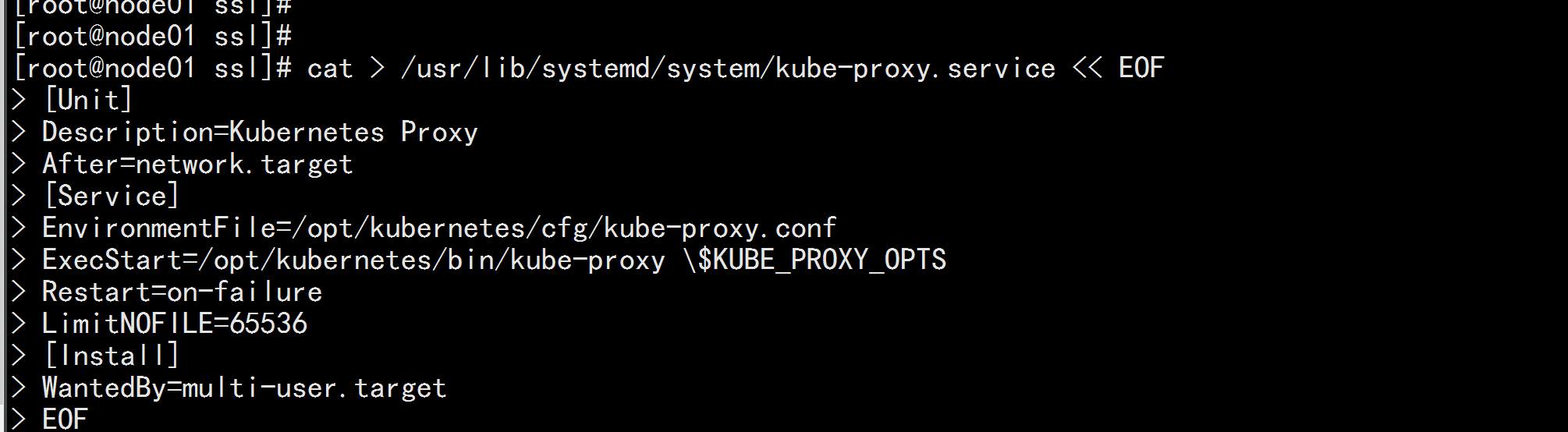

4. Manage kube-proxy with systemd

cat > /usr/lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf
ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

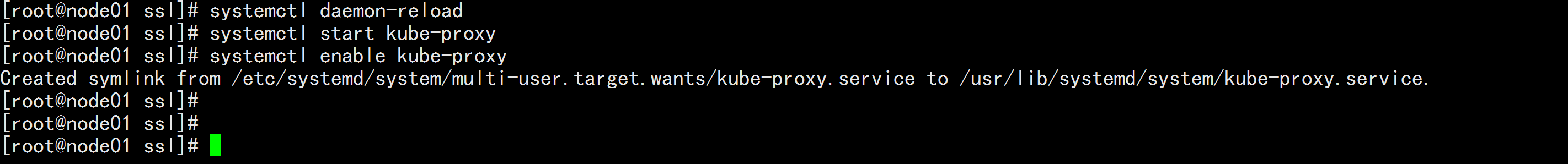

5. Start kube-proxy and enable it at boot

systemctl daemon-reload
systemctl start kube-proxy
systemctl enable kube-proxy

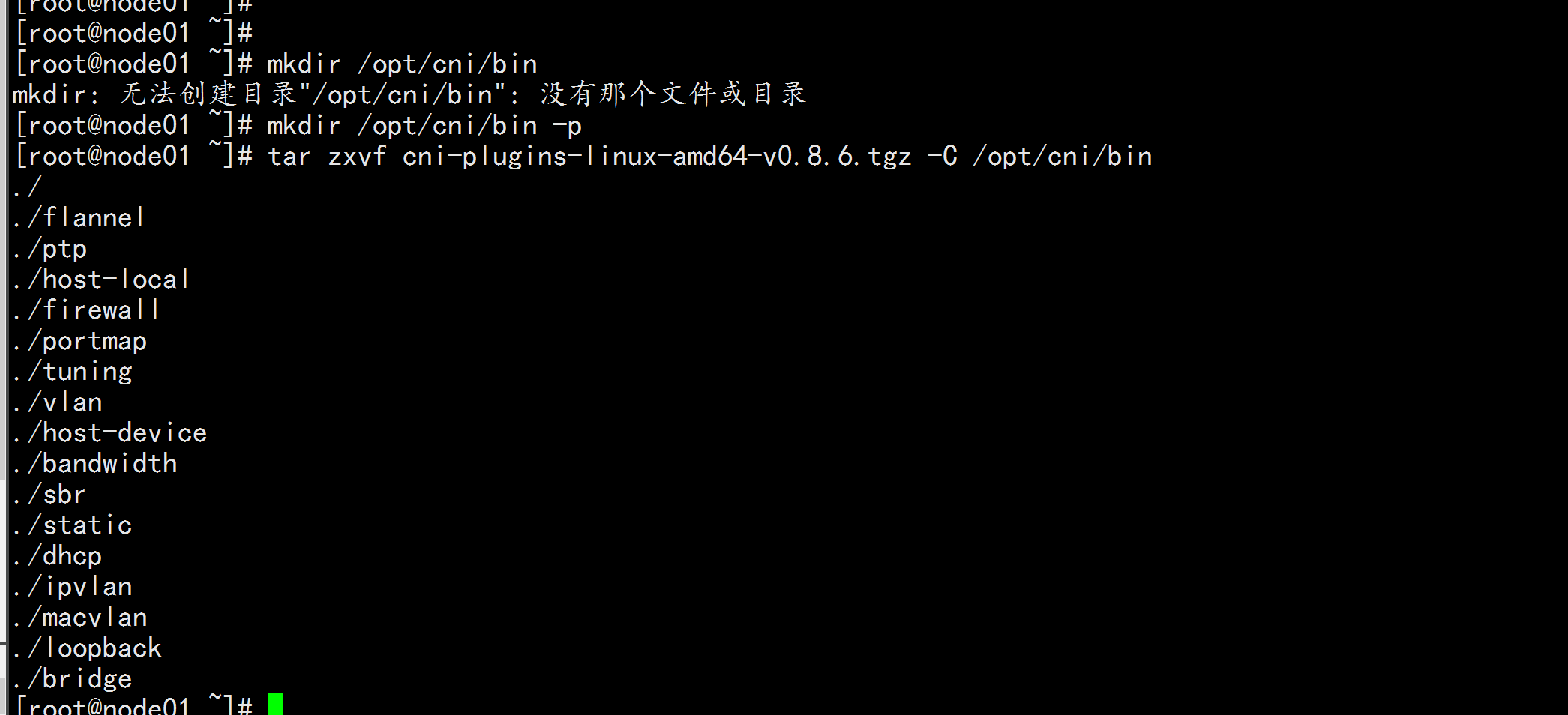

5.5 Deploy the CNI network

Prepare the CNI binaries first.

Download address: https://github.com/containernetworking/plugins/releases/download/v0.8.6/cni-plugins-linux-amd64-v0.8.6.tgz

Unpack the binary package into the default working directory:

mkdir /opt/cni/bin -p
tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin

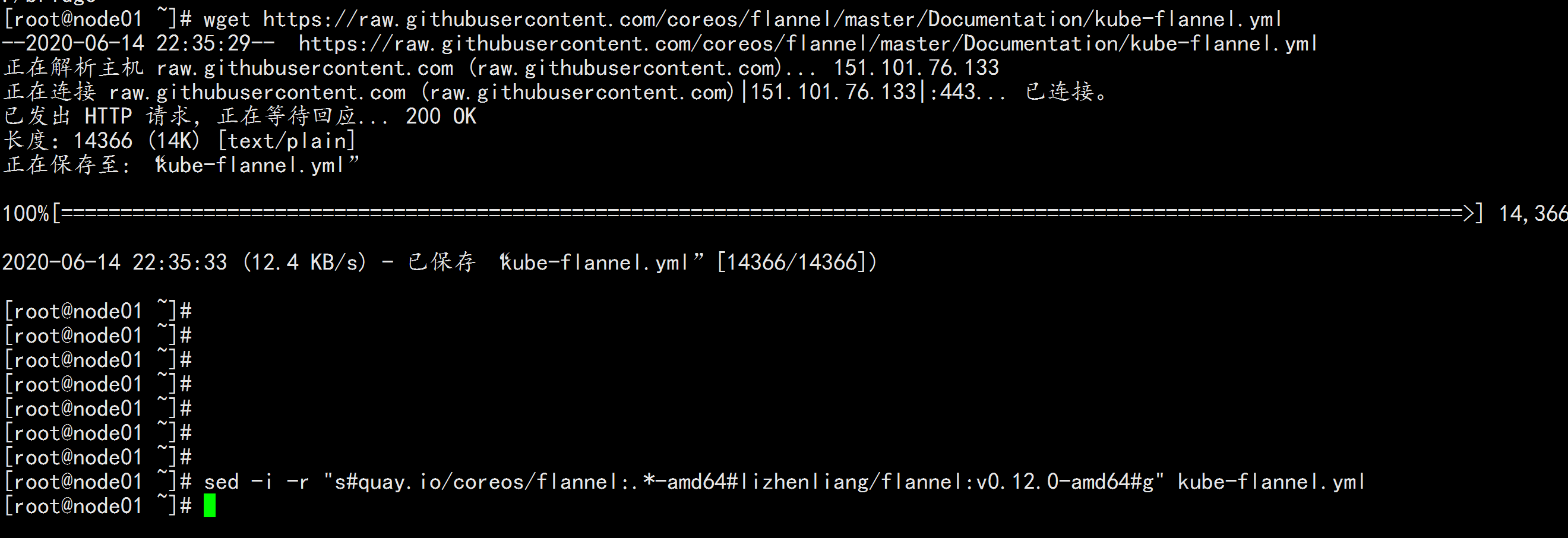

Deploy the CNI network:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.12.0-amd64#g" kube-flannel.yml

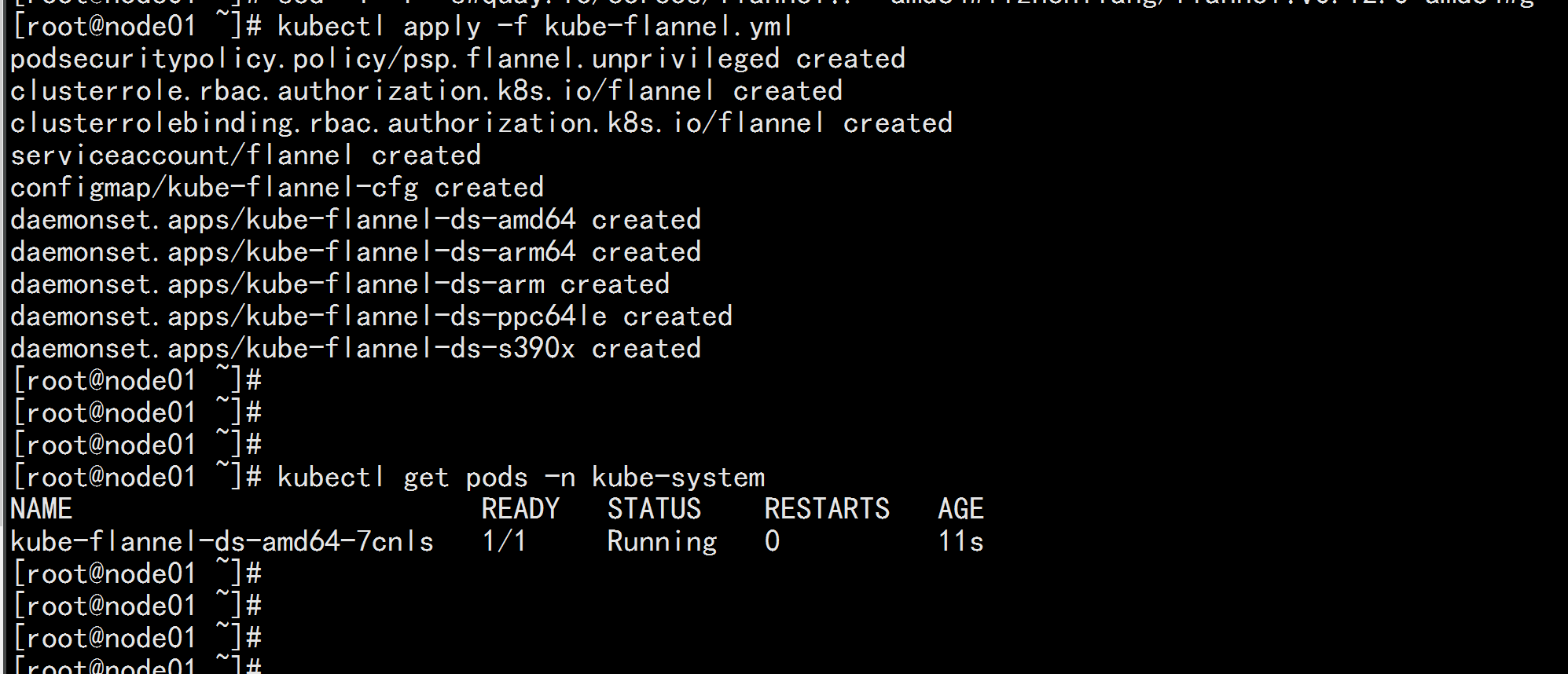

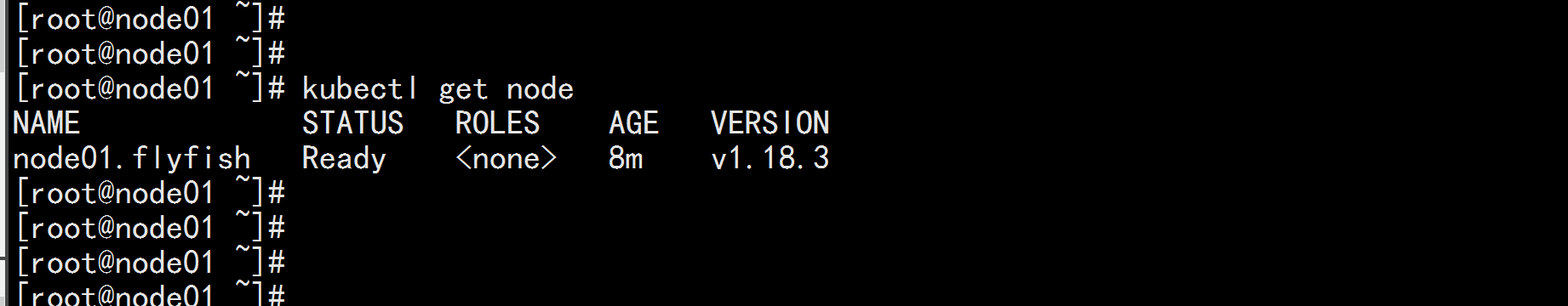

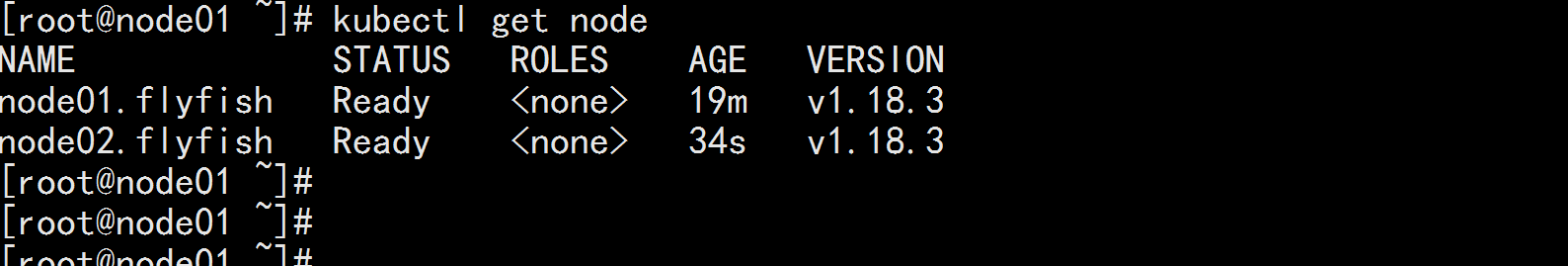

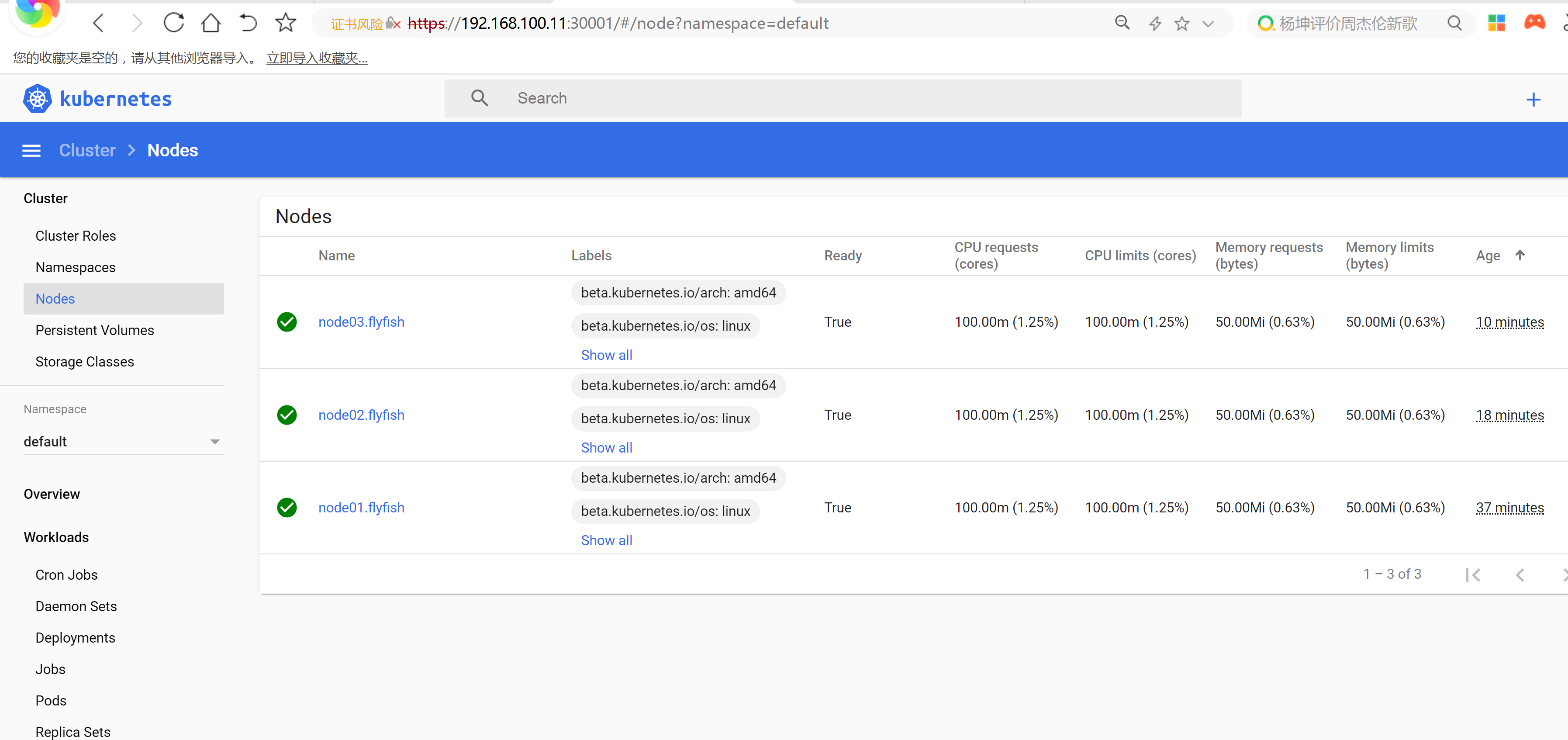

kubectl apply -f kube-flannel.yml
kubectl get pods -n kube-system

Once the network plug-in is deployed, the Node becomes Ready:

kubectl get node

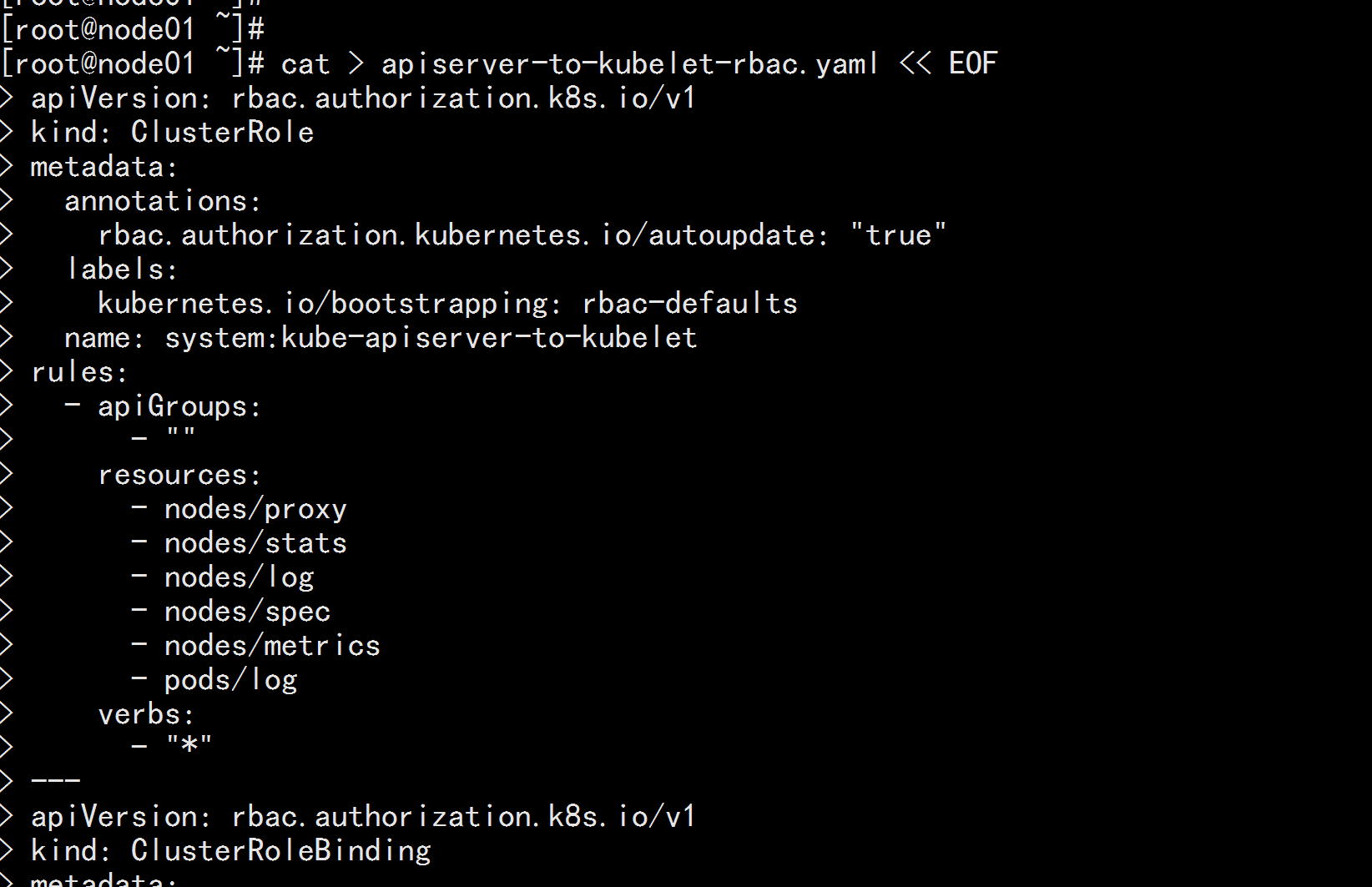

5.6 Authorize apiserver to access kubelet

cat > apiserver-to-kubelet-rbac.yaml << EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
EOF

---

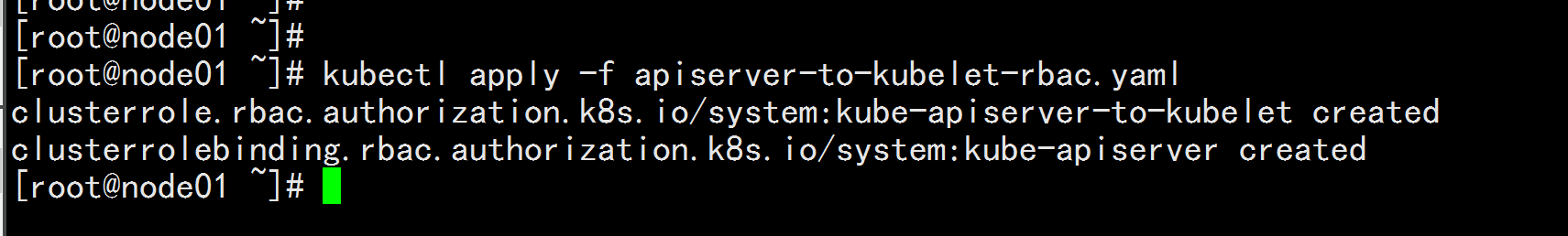

kubectl apply -f apiserver-to-kubelet-rbac.yaml

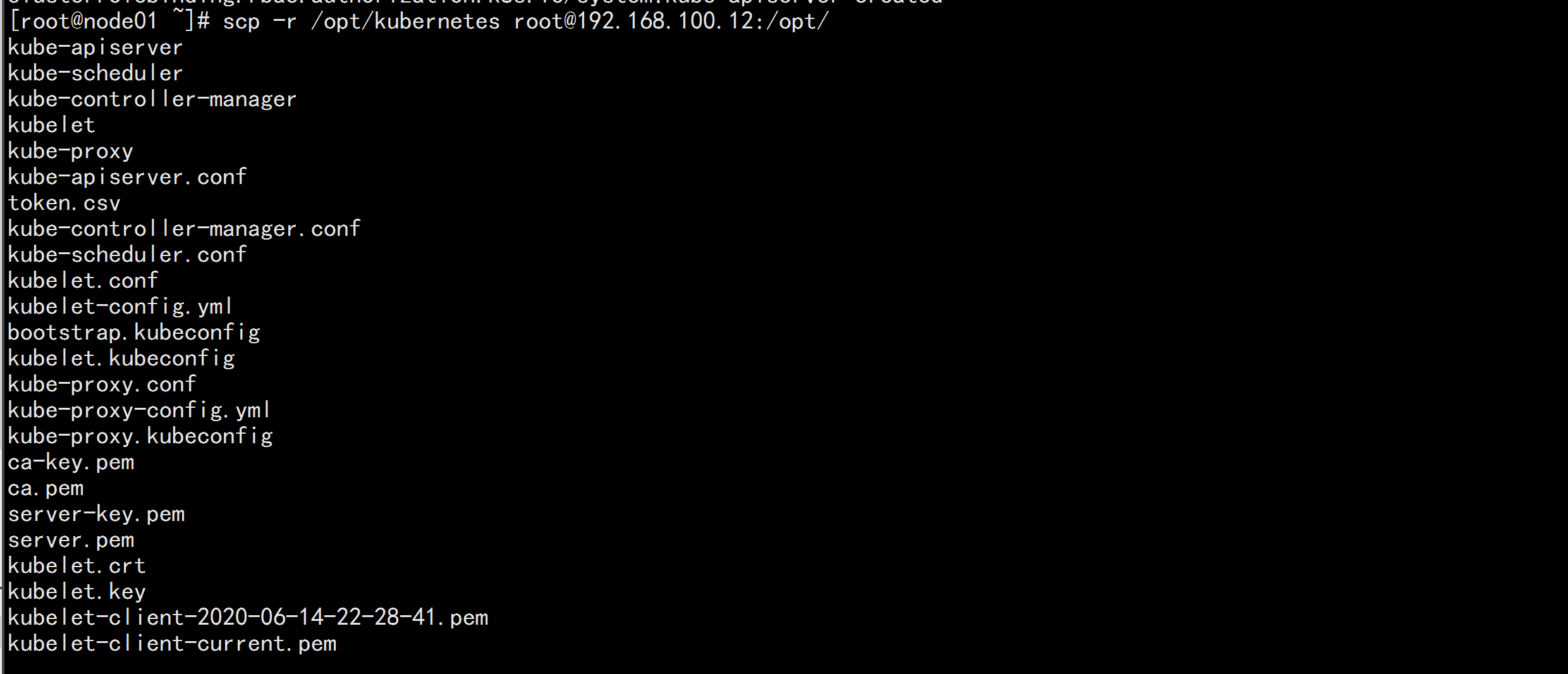

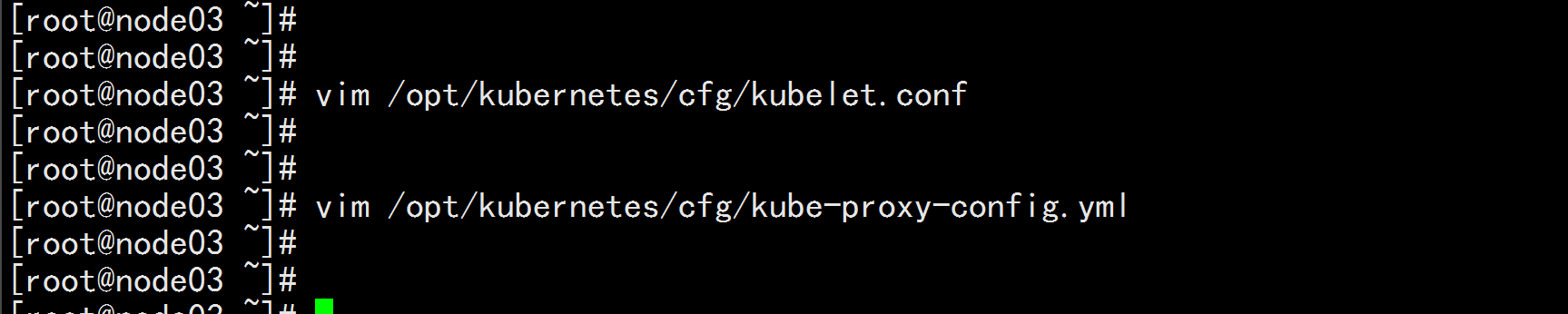

5.7 Add a new Worker Node

1. Copy the deployed Node-related files to the new node

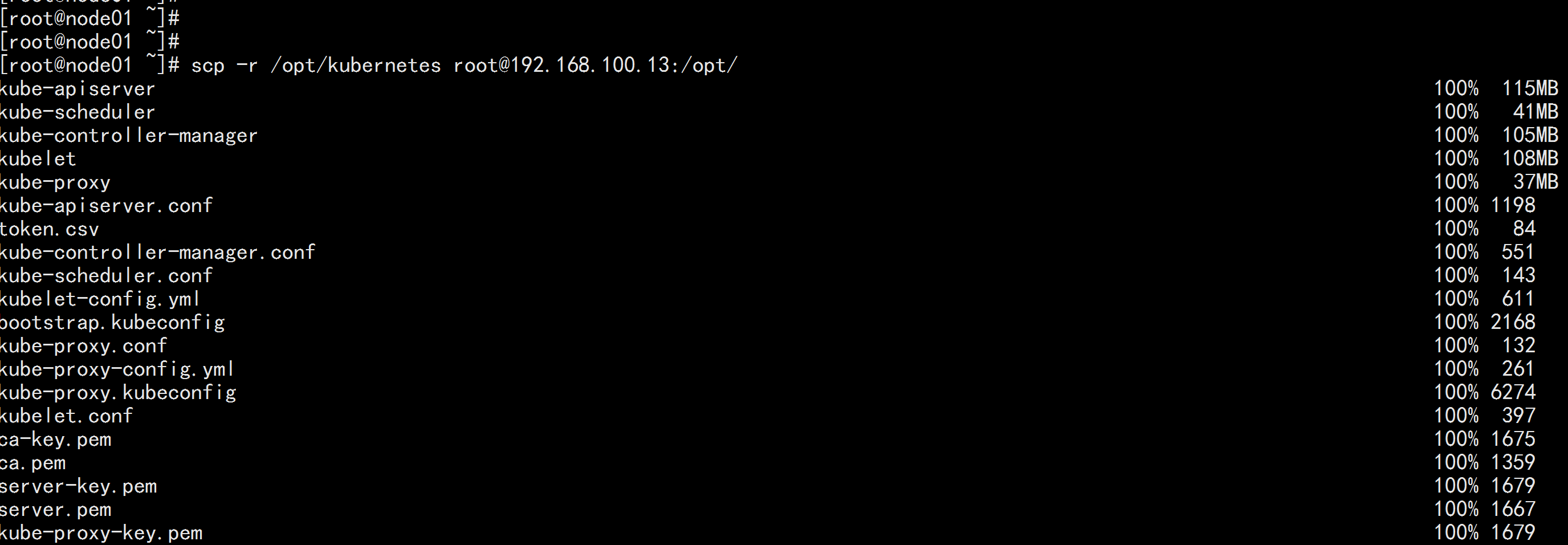

On the master node, copy the Worker Node files to the new node 192.168.100.12:

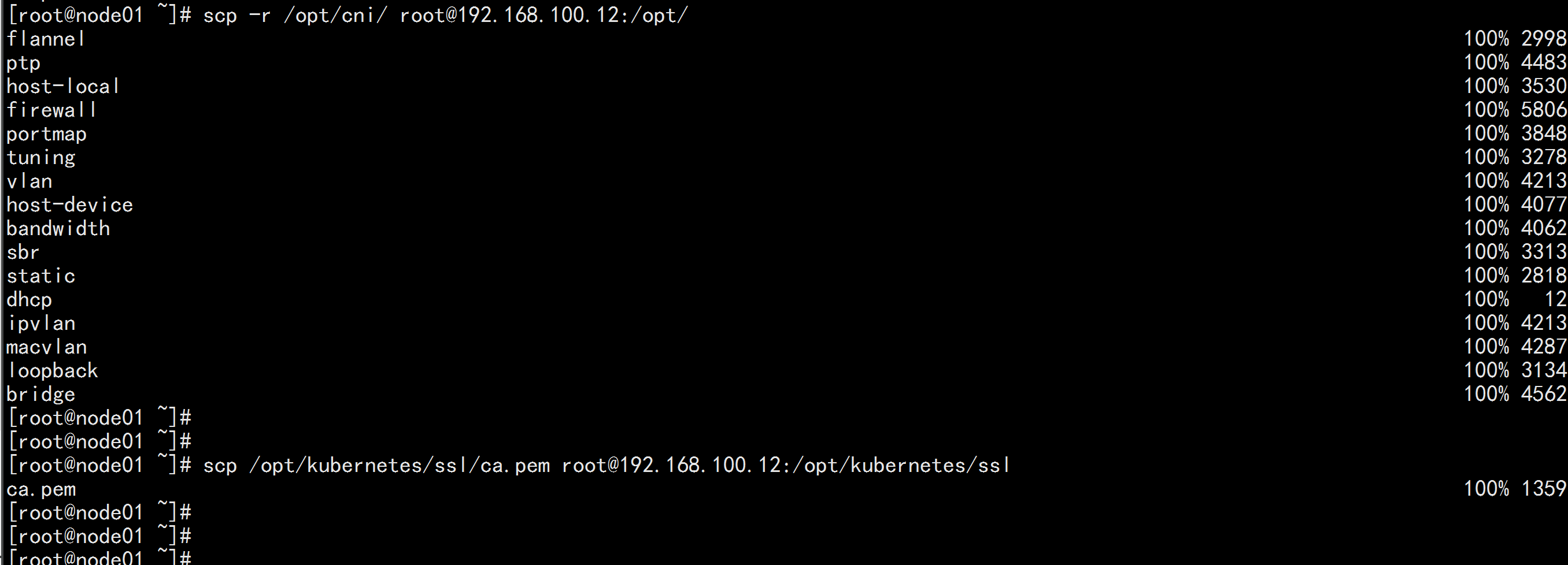

scp -r /opt/kubernetes root@192.168.100.12:/opt/

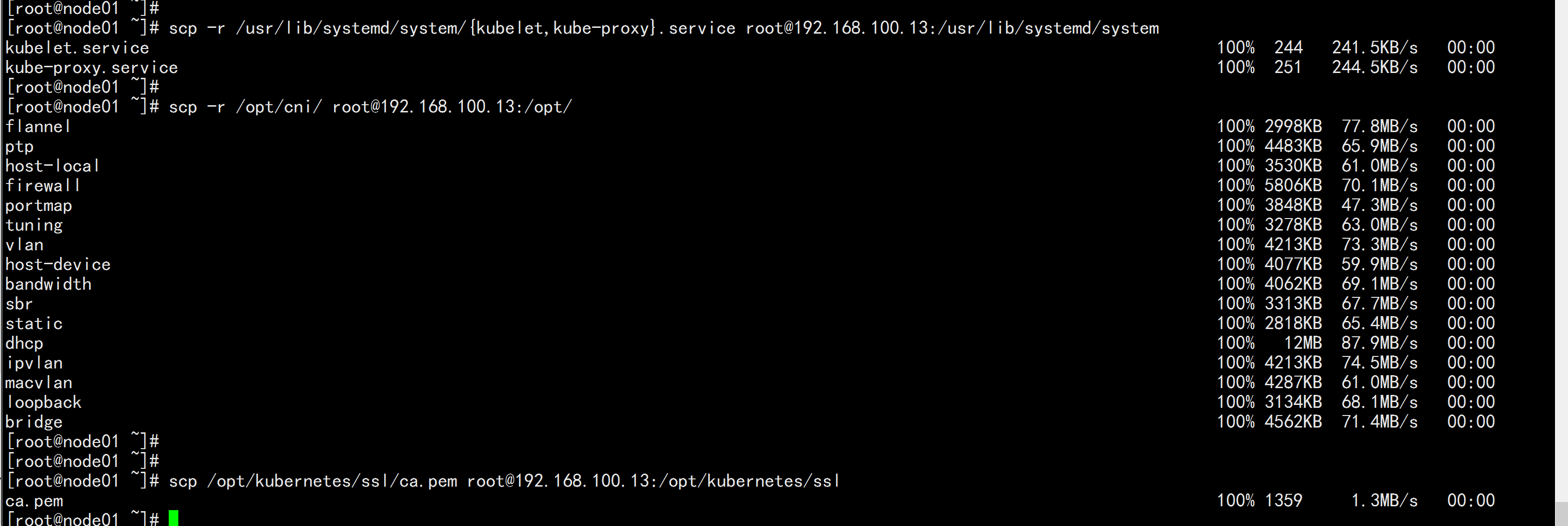

scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.100.12:/usr/lib/systemd/system

scp -r /opt/cni/ root@192.168.100.12:/opt/

scp /opt/kubernetes/ssl/ca.pem root@192.168.100.12:/opt/kubernetes/ssl

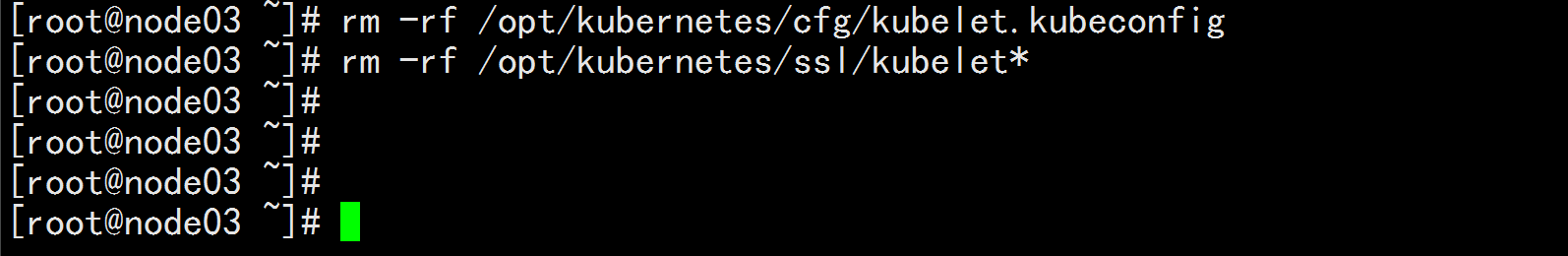

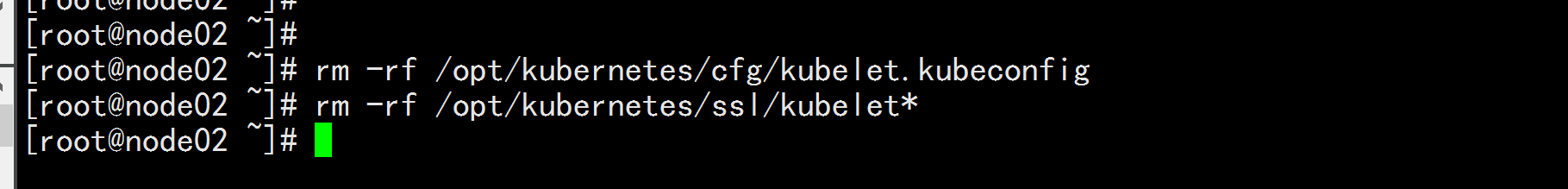

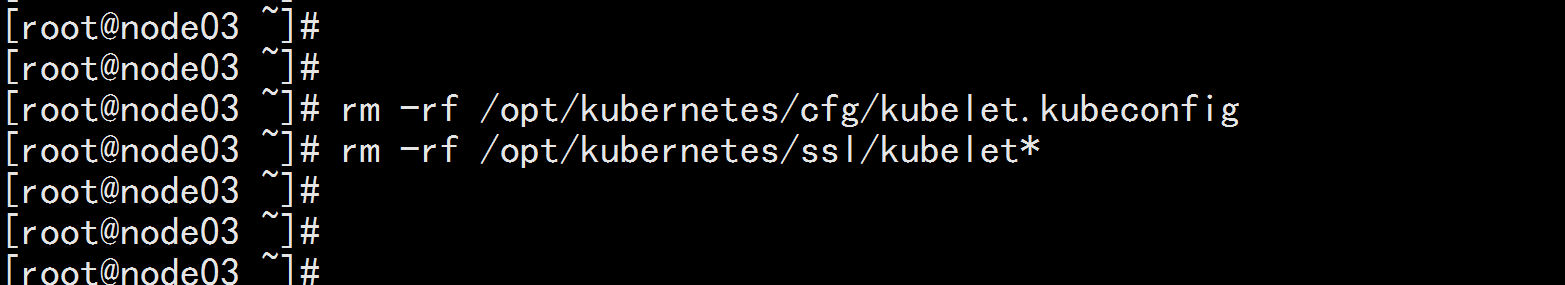

2. On the new node, delete the kubelet certificate and kubeconfig file

rm -rf /opt/kubernetes/cfg/kubelet.kubeconfig
rm -rf /opt/kubernetes/ssl/kubelet*

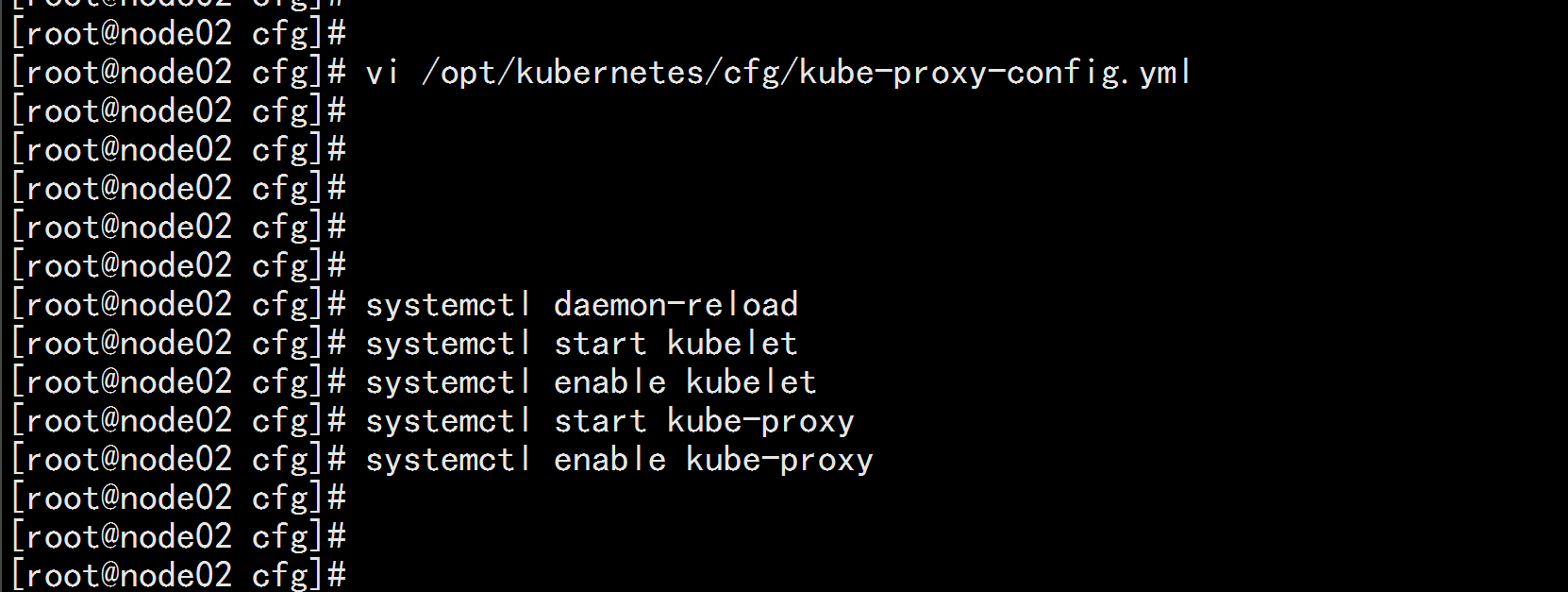

3. Modify the host name

vim /opt/kubernetes/cfg/kubelet.conf
--hostname-override=node02.flyfish

vim /opt/kubernetes/cfg/kube-proxy-config.yml
hostnameOverride: node02.flyfish

4. Start the services and enable them at boot

systemctl daemon-reload
systemctl start kubelet
systemctl enable kubelet
systemctl start kube-proxy
systemctl enable kube-proxy

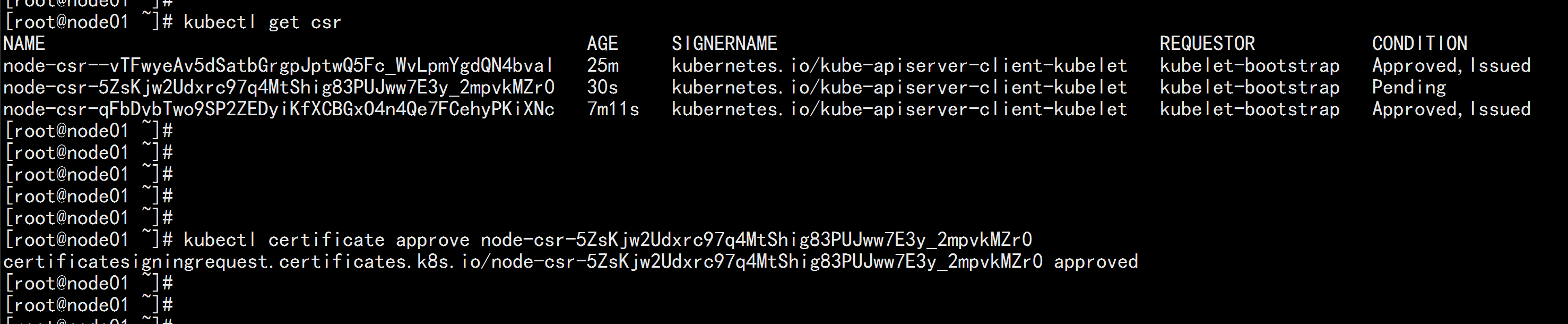

5. On the Master, approve the new Node's kubelet certificate request

kubectl get csr

kubectl certificate approve node-csr-qFbDvbTwo9SP2ZEDyiKfXCBGxO4n4Qe7FCehyPKiXNc

Add another Worker Node (192.168.100.13):

scp -r /opt/kubernetes root@192.168.100.13:/opt/

scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.100.13:/usr/lib/systemd/system

scp -r /opt/cni/ root@192.168.100.13:/opt/

scp /opt/kubernetes/ssl/ca.pem root@192.168.100.13:/opt/kubernetes/ssl

2. On the new node, delete the kubelet certificate and kubeconfig file

rm -rf /opt/kubernetes/cfg/kubelet.kubeconfig
rm -rf /opt/kubernetes/ssl/kubelet*

3. Modify the host name

vim /opt/kubernetes/cfg/kubelet.conf
--hostname-override=node03.flyfish

vim /opt/kubernetes/cfg/kube-proxy-config.yml
hostnameOverride: node03.flyfish

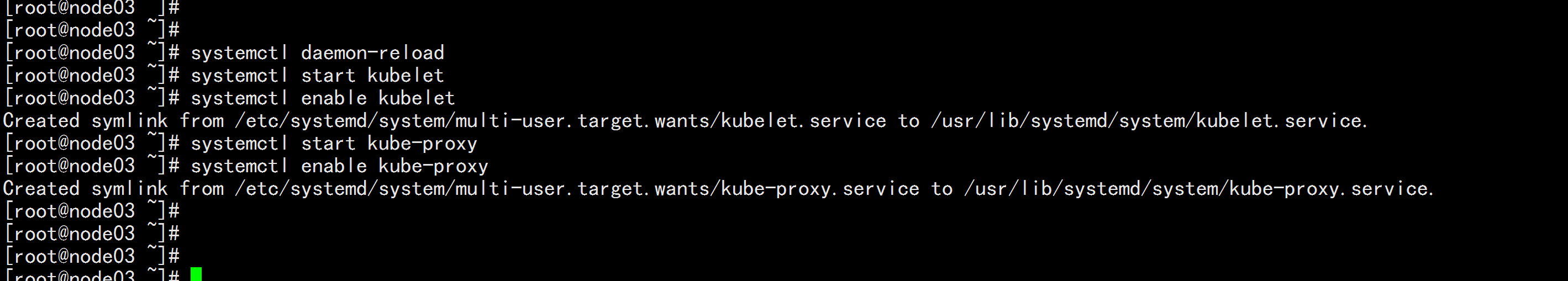

4. Start the services and enable them at boot

systemctl daemon-reload
systemctl start kubelet
systemctl enable kubelet
systemctl start kube-proxy
systemctl enable kube-proxy

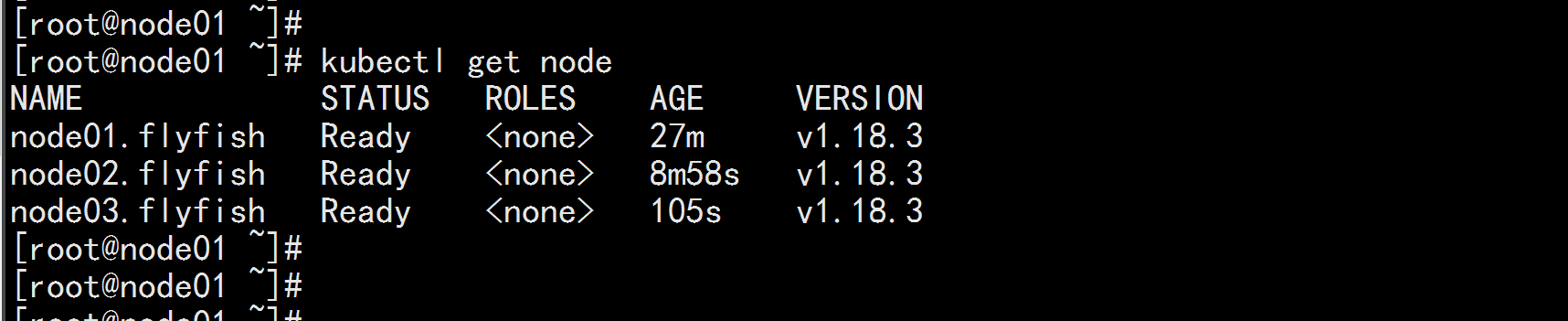

5. On the Master, approve the new Node's kubelet certificate request

kubectl get csr
kubectl certificate approve node-csr-5ZsKjw2Udxrc97q4MtShig83PUJww7E3y_2mpvkMZr0

kubectl get node
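The host name edits in step 3 are the same for every added node, so they can be scripted. A sketch under assumptions: `set_node_name` is a hypothetical helper, and it expects a directory that holds copies of kubelet.conf and kube-proxy-config.yml in the layout shown earlier. The demo works in a temporary directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set the node name in kubelet.conf and kube-proxy-config.yml.
# Usage: set_node_name <config-dir> <hostname>
set_node_name() {
  local dir=$1 host=$2
  sed -E "s/--hostname-override=[^[:space:]]+/--hostname-override=${host}/" \
    "$dir/kubelet.conf" > "$dir/kubelet.conf.new" &&
    mv "$dir/kubelet.conf.new" "$dir/kubelet.conf"
  sed -E "s/^hostnameOverride:.*/hostnameOverride: ${host}/" \
    "$dir/kube-proxy-config.yml" > "$dir/kube-proxy-config.yml.new" &&
    mv "$dir/kube-proxy-config.yml.new" "$dir/kube-proxy-config.yml"
}

# Demo on shortened sample files in a temporary directory.
dir=$(mktemp -d)
printf '%s\n' 'KUBELET_OPTS="--v=2 \' '--hostname-override=node01.flyfish \' \
  '--network-plugin=cni"' > "$dir/kubelet.conf"
printf '%s\n' 'hostnameOverride: node01.flyfish' > "$dir/kube-proxy-config.yml"
set_node_name "$dir" node02.flyfish
cat "$dir/kubelet.conf" "$dir/kube-proxy-config.yml"
rm -rf "$dir"
```

On a real node the directory would be /opt/kubernetes/cfg, and the services are restarted afterwards as in step 4.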

6. Deploy Dashboard and CoreDNS

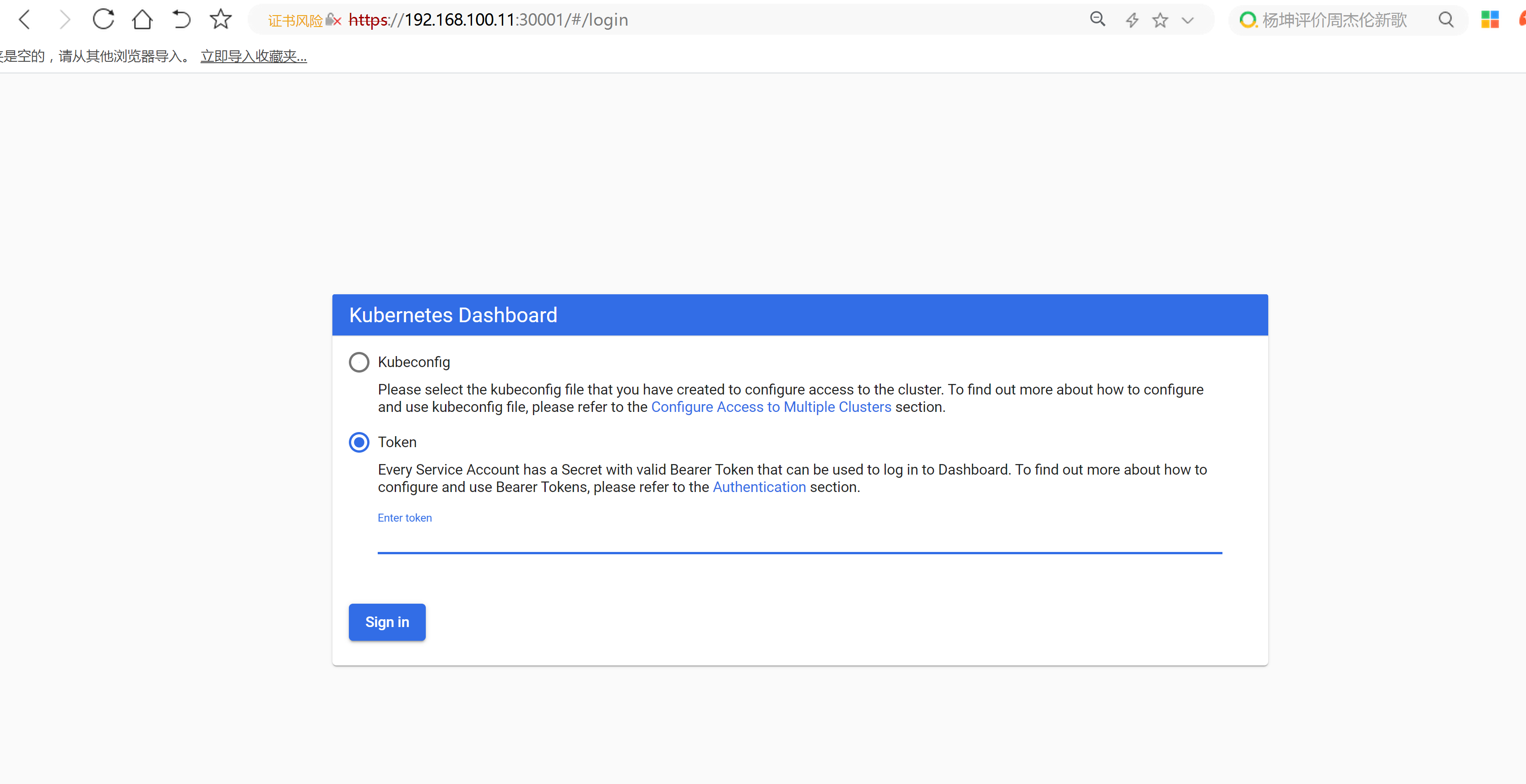

6.1 Deploy Dashboard

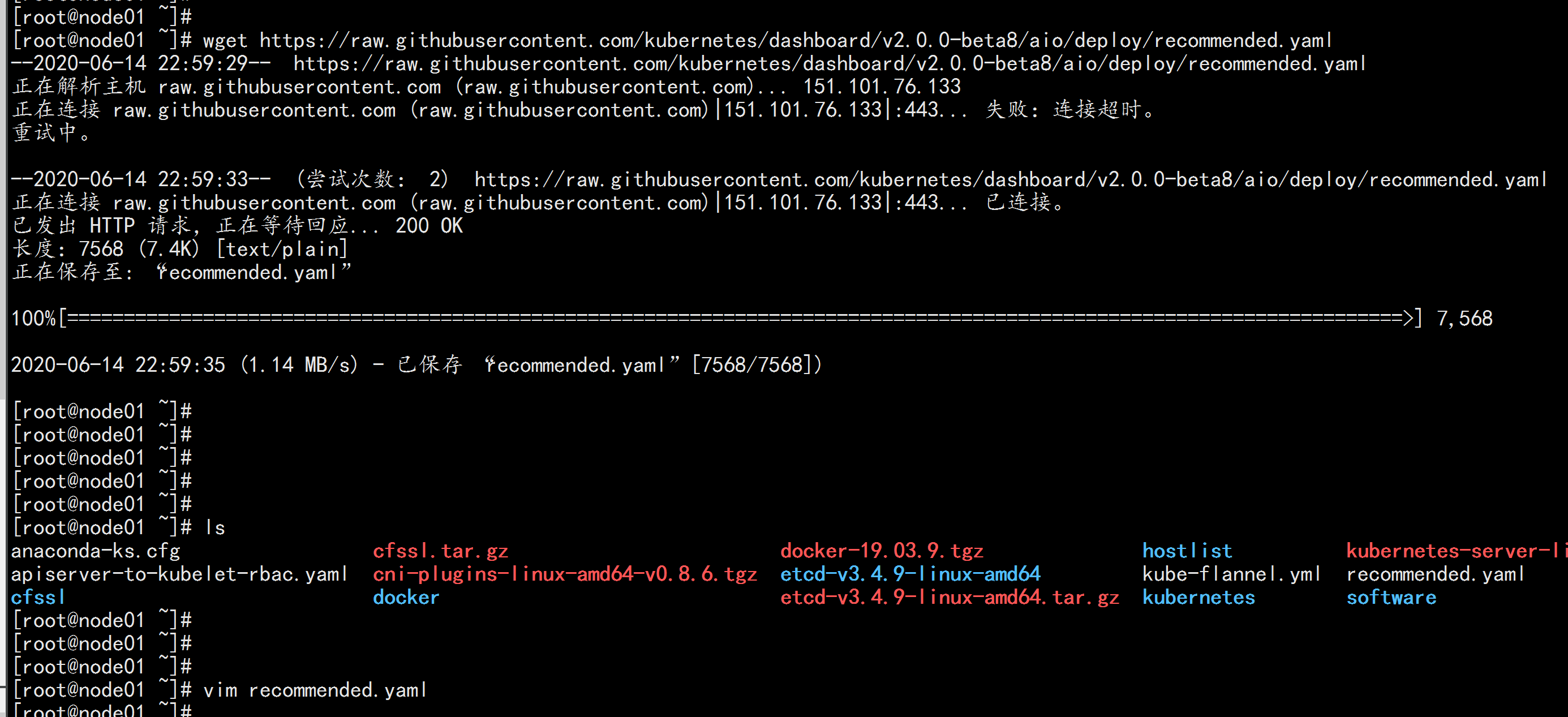

$ wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

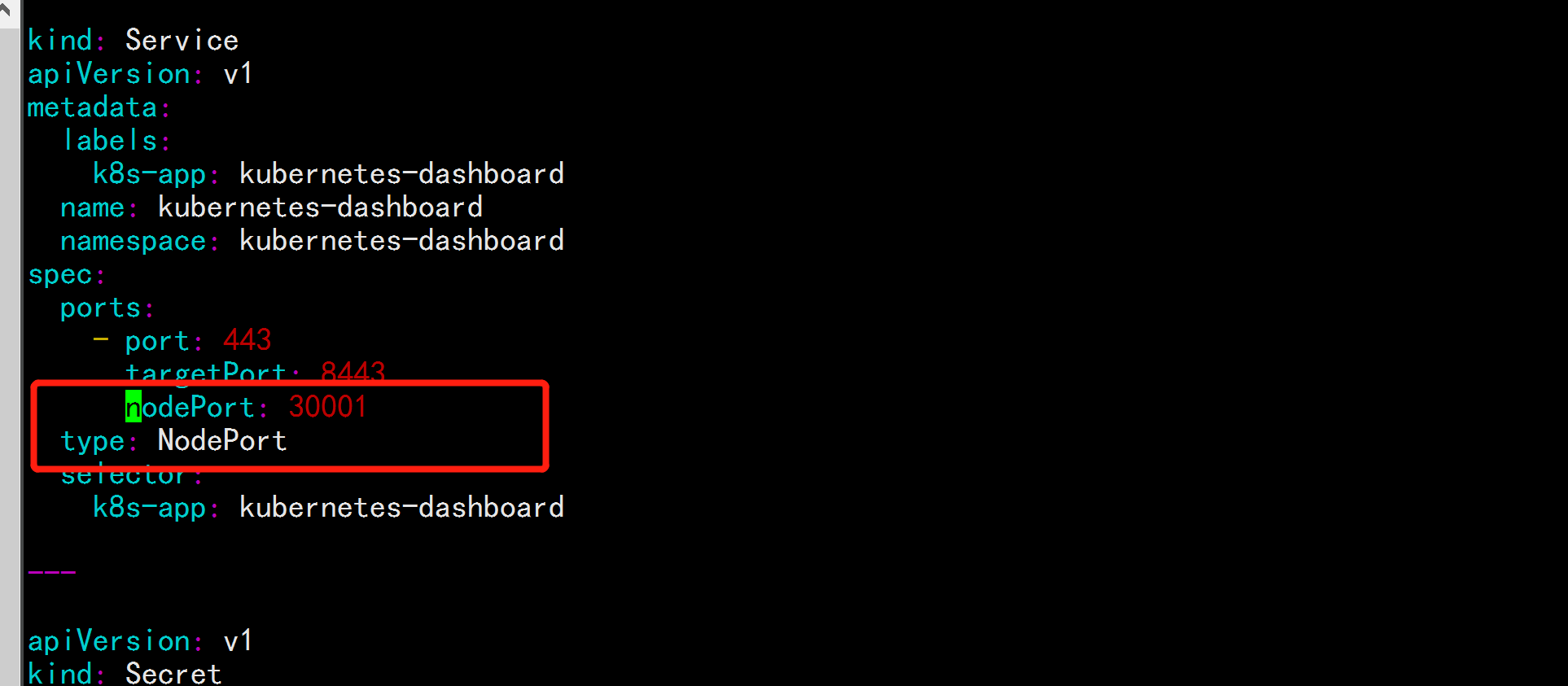

By default, the Dashboard can only be accessed from inside the cluster. Change the Service to the NodePort type to expose it externally:

vim recommended.yaml

----

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard

----

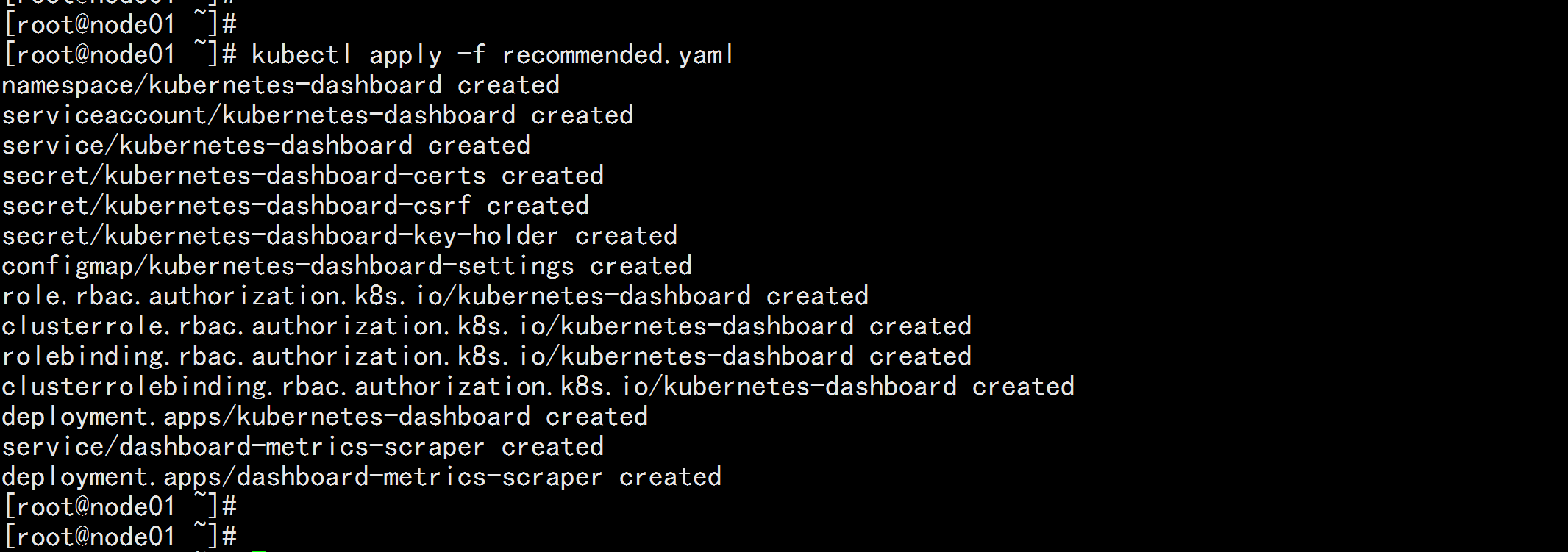

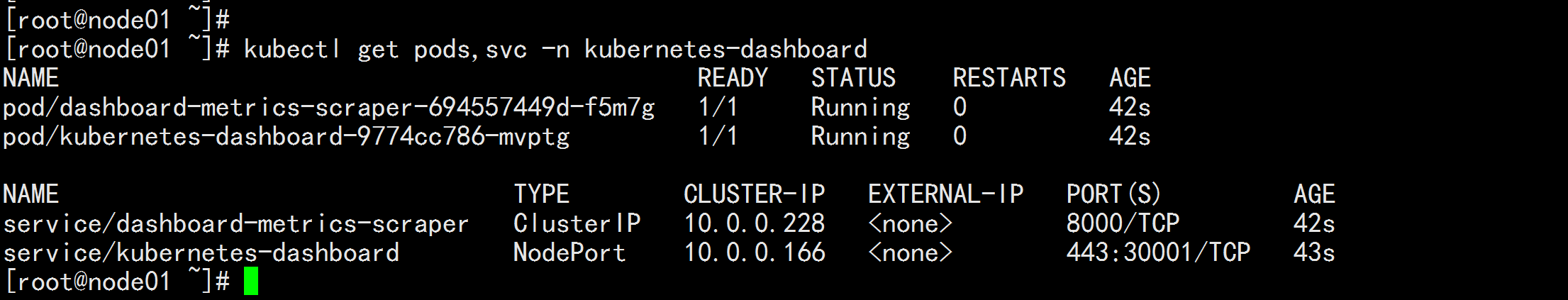

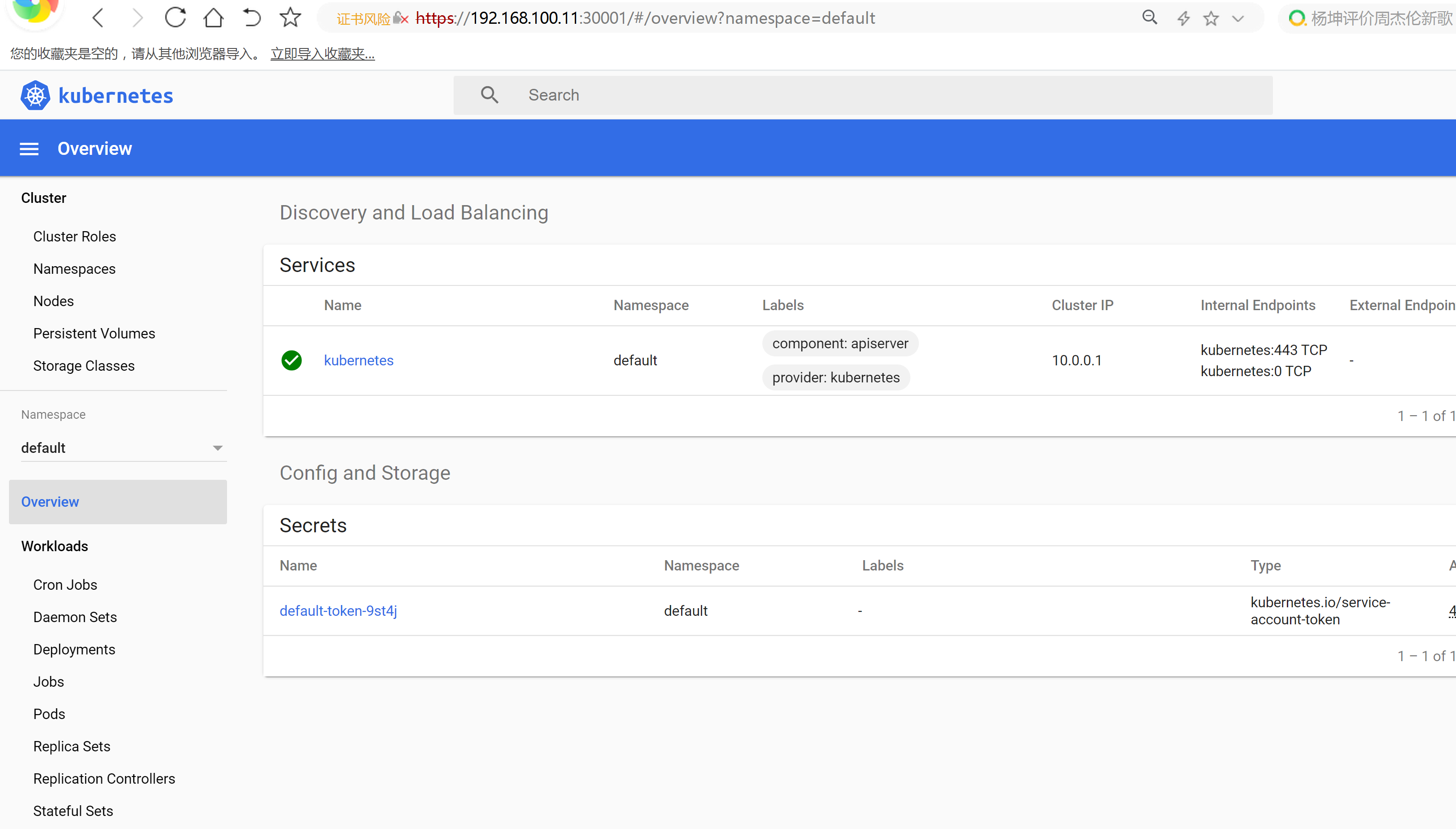

kubectl apply -f recommended.yaml

kubectl get pods,svc -n kubernetes-dashboard

Access address: https://NodeIP:30001

Create a service account and bind it to the default cluster-admin cluster role:

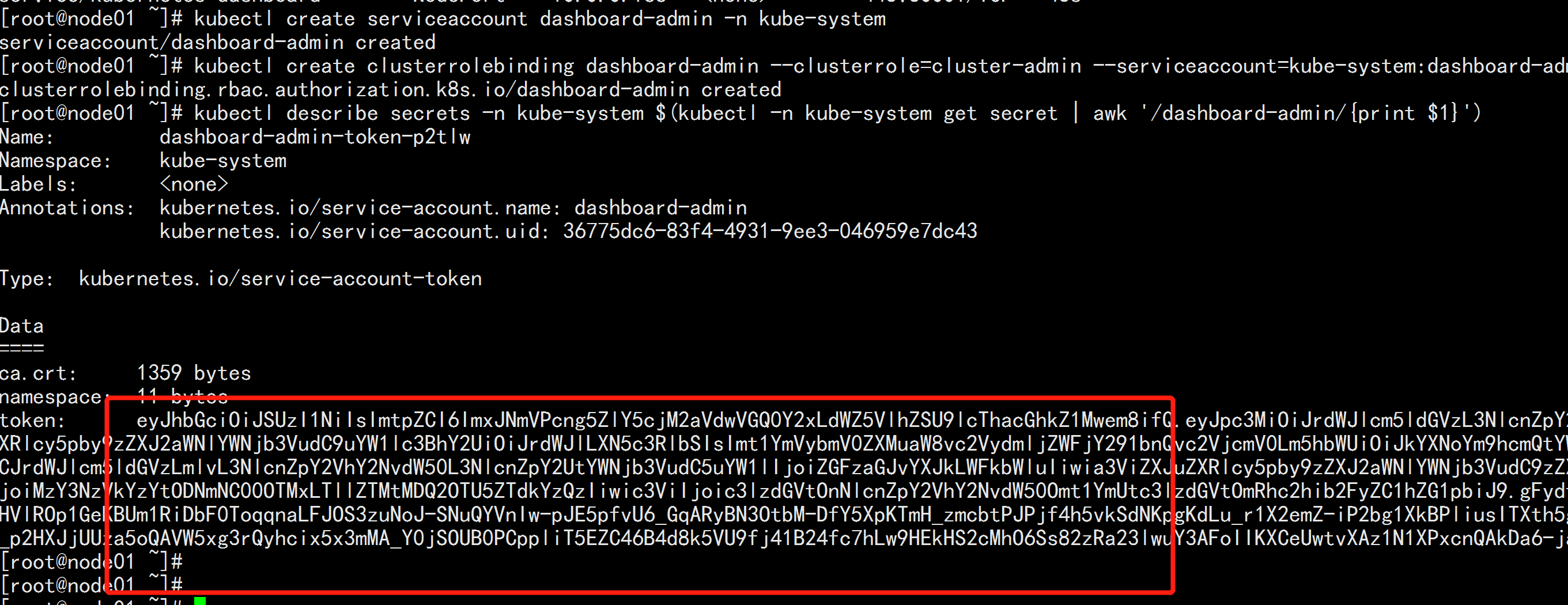

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

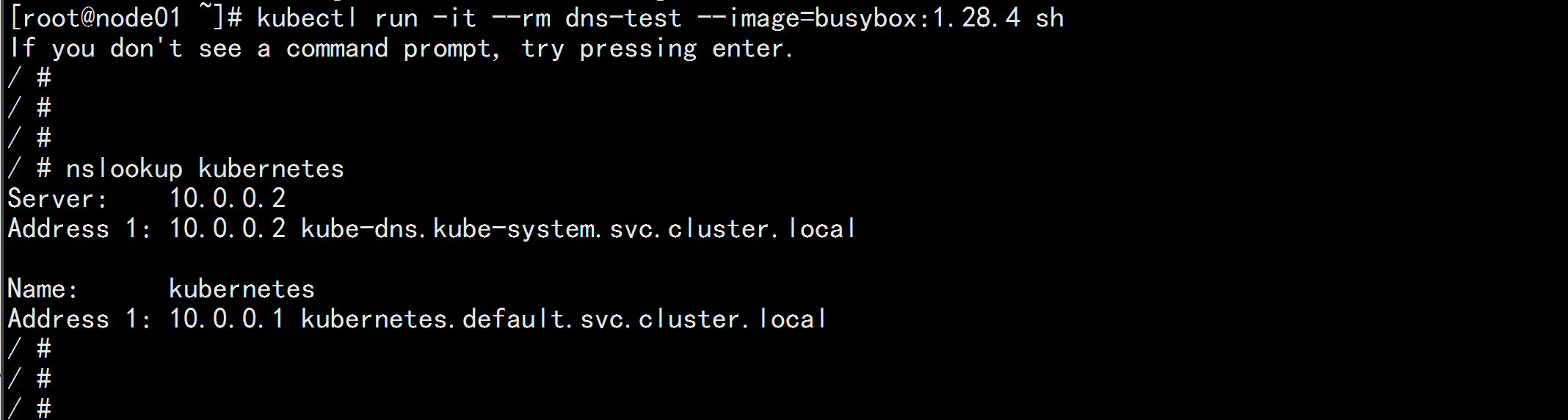

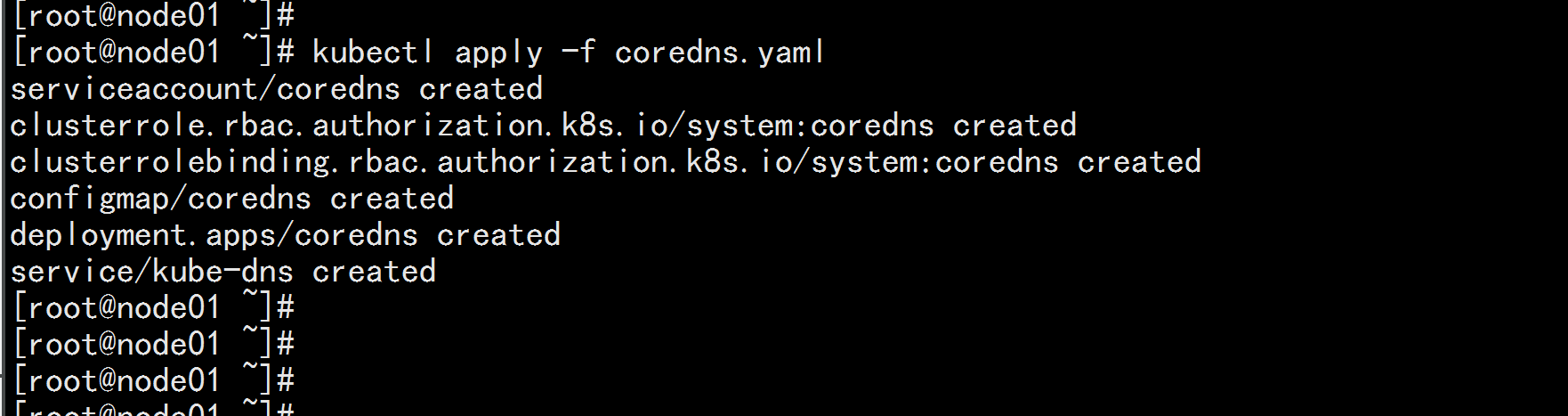

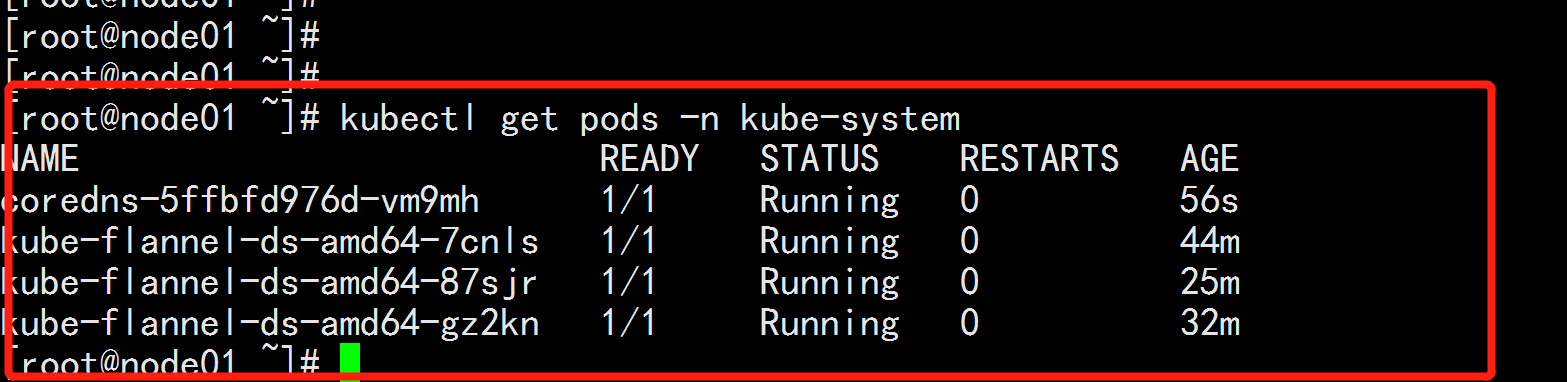

6.2 Deploy CoreDNS

CoreDNS is used for Service name resolution within the cluster.

kubectl apply -f coredns.yaml

DNS resolution test:

kubectl run -it --rm dns-test --image=busybox:1.28.4 sh
# then, inside the busybox shell:
nslookup kubernetes