Overview: I have recently been using KubeSphere from QingCloud. It offers an excellent user experience, supports private deployment, and has no infrastructure dependency and no Kubernetes dependency. It can be deployed across physical machines, virtual machines, and cloud platforms, and it can manage Kubernetes clusters of different versions and vendors. On top of the k8s layer it wraps role-based access control, provides DevOps pipelines for setting up CI/CD quickly, and bundles common tools such as Harbor, GitLab, Jenkins, and SonarQube. Application lifecycle management is based on OpenPitrix and covers development, testing, release, upgrade, and removal. The overall operating experience is very good.

As with any open-source project, there are inevitably some bugs. Below are the troubleshooting approaches for the problems I ran into in my own use. Many thanks to the QingCloud community for their technical assistance. If you are interested in k8s, give this domestic platform a try; the experience is silky smooth. Rancher users are welcome to try it as well.

1. Clean up exited containers

After the cluster has been running for a while, some containers end up in the Exited state because of abnormal conditions. They should be cleaned up promptly to free disk space; this can be set up as a scheduled task.
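For the scheduled cleanup mentioned above, here is a minimal sketch that tolerates an empty list. The one-liner that follows exits with an error when nothing matches, because `docker rm` requires at least one argument. The sketch assumes the docker CLI is on PATH; the function name is illustrative only.

```shell
#!/bin/bash
# Sketch: remove exited containers without failing when there are none.
# Assumption: the docker CLI is available on PATH.
clean_exited_containers() {
    local ids
    # -q prints IDs only; the status filter avoids grepping table output
    ids=$(docker ps -aq --filter status=exited)
    if [ -z "$ids" ]; then
        echo "no exited containers"
        return 0
    fi
    # word splitting on $ids is intentional: one container ID per argument
    docker rm $ids
}
```

To schedule it, save the function in a script that calls `clean_exited_containers` on its last line and register that script with cron, for example `0 3 * * * /path/to/clean_exited.sh` (the path is hypothetical).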

docker rm `docker ps -a |grep Exited |awk '{print $1}'`

2. Clean up abnormal or evicted pods

- Clean up pods in the kubesphere-devops-system namespace

kubectl delete pods -n kubesphere-devops-system $(kubectl get pods -n kubesphere-devops-system |grep Evicted|awk '{print $1}')

kubectl delete pods -n kubesphere-devops-system $(kubectl get pods -n kubesphere-devops-system |grep CrashLoopBackOff|awk '{print $1}')

- For convenience, a script that cleans up Evicted / CrashLoopBackOff pods in a specified namespace, or removes exited containers

#!/bin/bash
# auth: kaliarch
# Interactive cleanup of evicted pods, crash-looping pods, and exited containers.

# Delete all pods in namespace $1 whose listing shows Evicted
clear_evicted_pod() {
    ns=$1
    kubectl delete pods -n ${ns} $(kubectl get pods -n ${ns} |grep Evicted|awk '{print $1}')
}

# Delete all pods in namespace $1 whose listing shows CrashLoopBackOff
clear_crash_pod() {
    ns=$1
    kubectl delete pods -n ${ns} $(kubectl get pods -n ${ns} |grep CrashLoopBackOff|awk '{print $1}')
}

# Remove all containers in the Exited state
clear_exited_container() {
    docker rm `docker ps -a |grep Exited |awk '{print $1}'`
}

echo "1.clear evicted pod"
echo "2.clear crash pod"
echo "3.clear exited container"
read -p "Please input num:" num

case ${num} in
    "1")
        read -p "Please input oper namespace:" ns
        clear_evicted_pod ${ns}
        ;;
    "2")
        read -p "Please input oper namespace:" ns
        clear_crash_pod ${ns}
        ;;
    "3")
        clear_exited_container
        ;;
    *)
        # default branch: the pattern must be unquoted to match any other input
        echo "input error"
        ;;
esac

- Clean up Evicted / CrashLoopBackOff pods in all namespaces

# Get all namespaces
kubectl get ns|grep -v "NAME"|awk '{print $1}'

# Clean up evicted pods
for ns in `kubectl get ns|grep -v "NAME"|awk '{print $1}'`;do kubectl delete pods -n ${ns} $(kubectl get pods -n ${ns} |grep Evicted|awk '{print $1}');done

# Clean up crash-looping pods
for ns in `kubectl get ns|grep -v "NAME"|awk '{print $1}'`;do kubectl delete pods -n ${ns} $(kubectl get pods -n ${ns} |grep CrashLoopBackOff|awk '{print $1}');done

3. Migrating Docker data

The Docker data directory was not specified during installation, and the system disk is only 50G. Over time the disk filled up, so the Docker data had to be migrated. The approach used here is a symbolic link:

First, mount a new disk at the /data directory.

systemctl stop docker
mkdir -p /data/docker/
rsync -avz /var/lib/docker/ /data/docker/
mv /var/lib/docker /data/docker_bak
ln -s /data/docker /var/lib/
systemctl daemon-reload
systemctl start docker
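After the migration it is worth confirming that the old path really is a symlink to the new location. A small sketch of such a check, assuming the paths used in the steps above; the function name is illustrative only.

```shell
# Sketch: sanity check after moving /var/lib/docker behind a symlink.
# Assumption: default paths match the migration steps; pass others as arguments.
check_docker_root() {
    local link="${1:-/var/lib/docker}"
    local target="${2:-/data/docker}"
    # the old path must now be a symlink ...
    [ -L "$link" ] || { echo "FAIL: $link is not a symlink"; return 1; }
    # ... that resolves to the new data directory
    [ "$(readlink -f "$link")" = "$(readlink -f "$target")" ] \
        || { echo "FAIL: $link does not resolve to $target"; return 1; }
    echo "OK: $link -> $target"
}

# Usage:
#   check_docker_root && docker info --format '{{.DockerRootDir}}'
```

The `docker info` call in the usage comment prints the root directory the daemon reports. Once containers are confirmed to be running normally, the backup at /data/docker_bak can be removed to reclaim space.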

4. KubeSphere network troubleshooting

- Problem description:

On a KubeSphere worker or master node, a container started manually cannot reach the public network. Is something wrong with my configuration? Calico was used by default before, and switching to flannel did not help either. Containers in pods created by a Deployment in KubeSphere can reach the public network, but a container started manually on a worker or master node cannot.

Network of the manually started container, which is attached to docker0:

root@fd1b8101475d:/# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1
    link/ipip 0.0.0.0 brd 0.0.0.0
105: eth0@if106: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever

The container network in pods uses kube-ipvs0:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop qlen 1
    link/ipip 0.0.0.0 brd 0.0.0.0
4: eth0@if18: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether c2:27:44:13:df:5d brd ff:ff:ff:ff:ff:ff
    inet 10.233.97.175/32 scope global eth0
       valid_lft forever preferred_lft forever

- Solution:

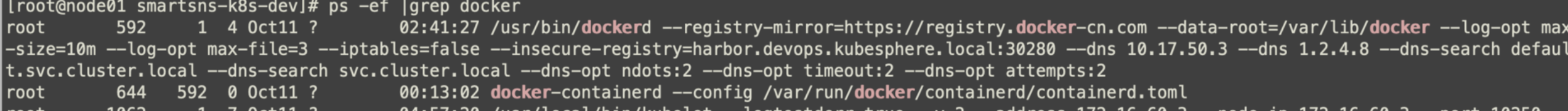

Check the Docker startup configuration.

Edit the file /etc/systemd/system/docker.service.d/docker-options.conf and remove the parameter --iptables=false. When this parameter is set to false, Docker does not write any iptables rules, so containers on the docker0 bridge get no NAT rule for outbound traffic. Restart Docker afterwards for the change to take effect.

[Service]
Environment="DOCKER_OPTS= --registry-mirror=https://registry.docker-cn.com --data-root=/var/lib/docker --log-opt max-size=10m --log-opt max-file=3 --insecure-registry=harbor.devops.kubesphere.local:30280"
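The fix above can be sketched as two small helpers: one strips the flag from the drop-in file, the other checks whether Docker has written its masquerade rule. This is a sketch under assumptions: the drop-in path is the one named above, 172.17.0.0/16 is the docker0 subnet seen in the `ip a` output, and both function names are illustrative.

```shell
# Sketch: drop --iptables=false from the Docker drop-in, then check the NAT rule.
# Assumption: the drop-in lives at the path named in the text.
CONF="${CONF:-/etc/systemd/system/docker.service.d/docker-options.conf}"

strip_iptables_flag() {
    # keep a .bak copy; remove the flag and one trailing space, if present
    sed -i.bak -E 's/--iptables=false ?//' "$1"
}

has_masquerade() {
    # reads `iptables -t nat -S POSTROUTING` output on stdin and succeeds
    # if a MASQUERADE rule exists for the source subnet given as $1
    grep -q -- "-s $1 .*-j MASQUERADE"
}

# Usage (as root):
#   strip_iptables_flag "$CONF"
#   systemctl daemon-reload && systemctl restart docker
#   iptables -t nat -S POSTROUTING | has_masquerade 172.17.0.0/16 && echo "NAT rule present"
```

If the rule is still missing after the restart, check whether the flag is also set somewhere else, for example in another drop-in file or in the daemon configuration.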

5. KubeSphere application routing exceptions

KubeSphere uses nginx as the ingress controller for application routing. When routes are configured through the web console, two hosts end up using the same CA certificate. This can be avoided by editing the YAML configuration directly so that each host has its own certificate:
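Each `secretName` in the manifest that follows refers to a TLS secret, one per host. Here is a sketch of creating them with `kubectl create secret tls`. The certificate and key file paths are hypothetical, and the `DRY_RUN` switch and helper names are illustrative only.

```shell
# Sketch: one TLS secret per host, so each Ingress host gets its own certificate.
# Assumptions: certificate/key paths are hypothetical; DRY_RUN=1 only prints commands.
NS="prod-net-route"

run() {
    # print the command instead of executing it when DRY_RUN=1
    if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

create_tls_secret() {
    # $1 = secret name, $2 = certificate file, $3 = private key file
    run kubectl create secret tls "$1" --cert="$2" --key="$3" -n "$NS"
}

# Usage:
#   DRY_RUN=1 create_tls_secret smartms-ca  ./smartms.crt  ./smartms.key
#   DRY_RUN=1 create_tls_secret smartsds-ca ./smartsds.crt ./smartsds.key
```

Running with `DRY_RUN=1` first makes it easy to review the exact commands before anything is created in the cluster.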

A kind of Note: ingress controls deployment at:

kind: Ingress

apiVersion: extensions/v1beta1

metadata:

name: prod-app-ingress

namespace: prod-net-route

resourceVersion: '8631859'

labels:

app: prod-app-ingress

annotations:

desc: Application routing in production environment

nginx.ingress.kubernetes.io/client-body-buffer-size: 1024m

nginx.ingress.kubernetes.io/proxy-body-size: 2048m

nginx.ingress.kubernetes.io/proxy-read-timeout: '3600'

nginx.ingress.kubernetes.io/proxy-send-timeout: '1800'

nginx.ingress.kubernetes.io/service-upstream: 'true'

spec:

tls:

- hosts:

- smartms.tools.anchnet.com

secretName: smartms-ca

- hosts:

- smartsds.tools.anchnet.com

secretName: smartsds-ca

rules:

- host: smartms.tools.anchnet.com

http:

paths:

- path: /

backend:

serviceName: smartms-frontend-svc

servicePort: 80

- host: smartsds.tools.anchnet.com

http:

paths:

- path: /

backend:

serviceName: smartsds-frontend-svc

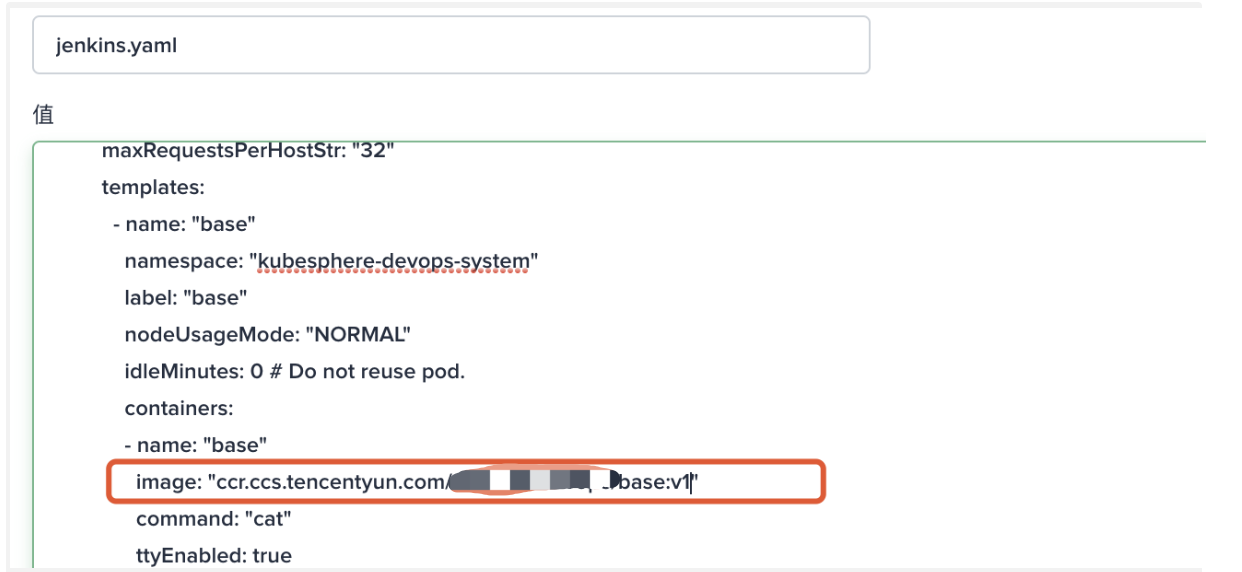

servicePort: 80agent of six kubesphere updating jenkins

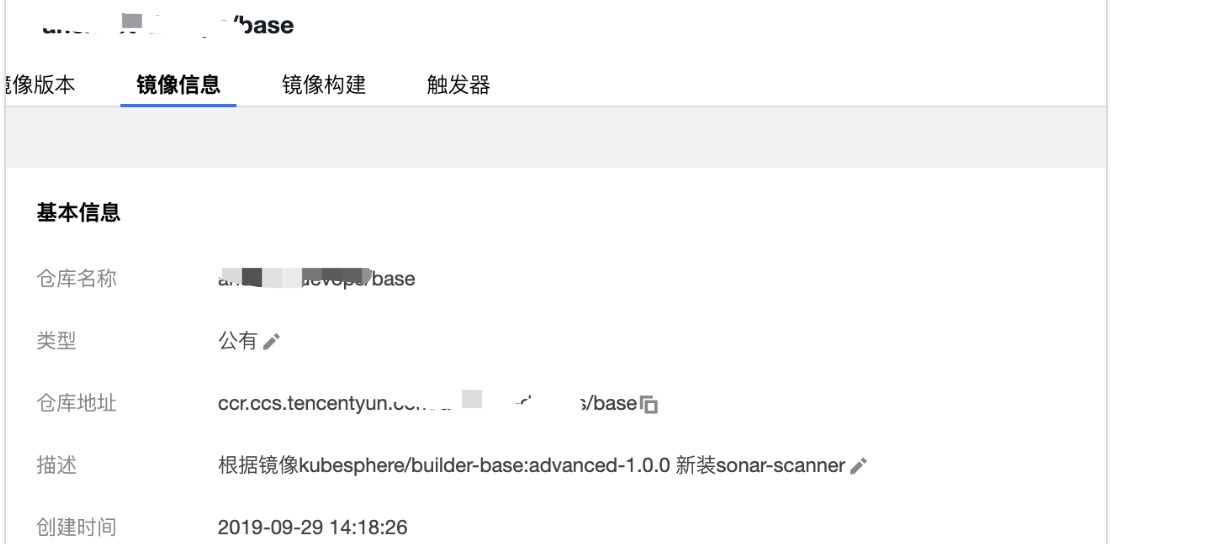

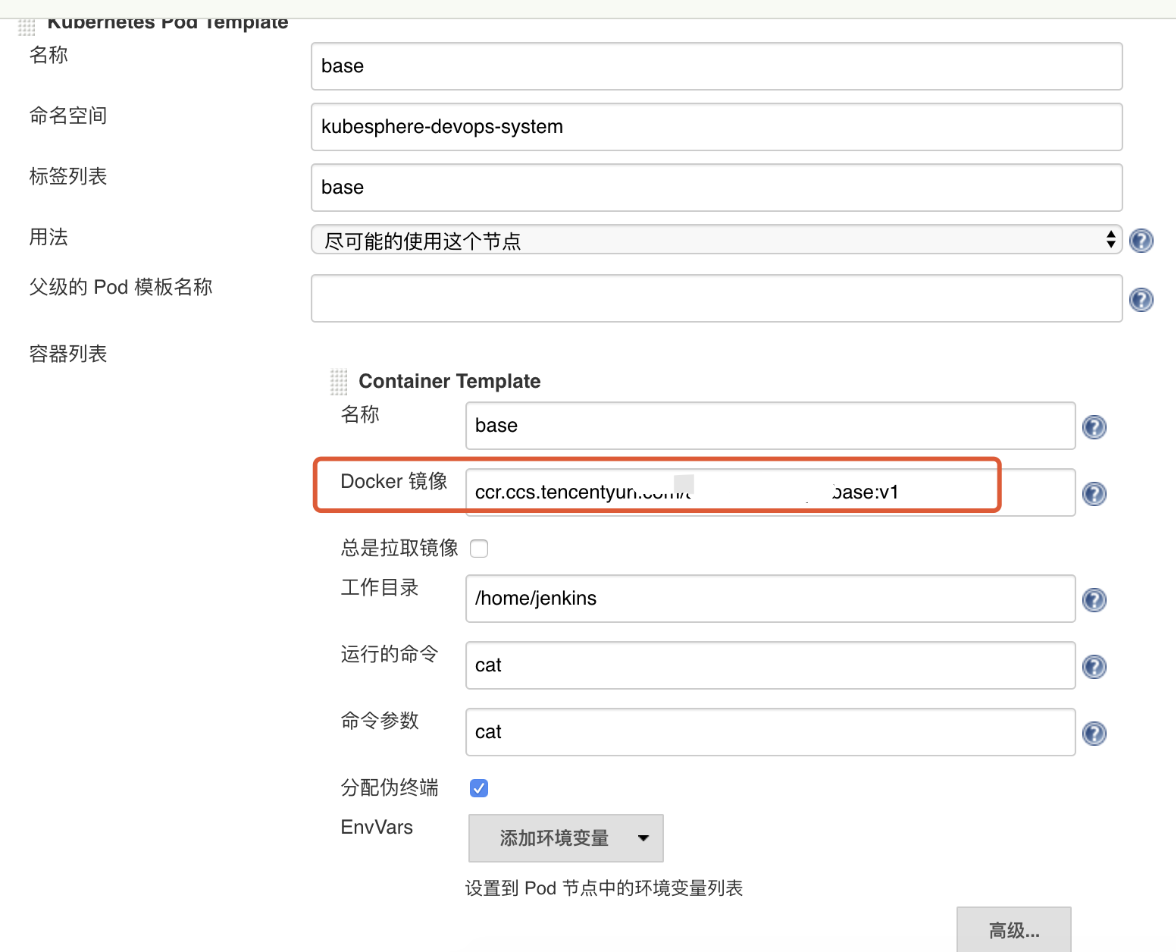

Users may need different language versions or different tool versions in their own scenarios. This section describes how to replace the built-in agent.

By default, the base build image does not include the sonar-scanner tool. Every KubeSphere Jenkins agent is a Pod, so replacing the built-in agent means replacing the image that the agent uses.

Build a new agent image based on the latest kubesphere/builder-base:advanced-1.0.0

Update the agent to the custom image: ccr.ccs.tencentyun.com/testns/base:v1

Reference link: https://kubesphere.io/docs/advanced-v2.0/zh-CN/devops/devops-admin-faq/#%E5%8D%87%E7%BA%A7-jenkins-agent-%E7%9A%84%E5%8C%85%E7%89%88%E6%9C%AC

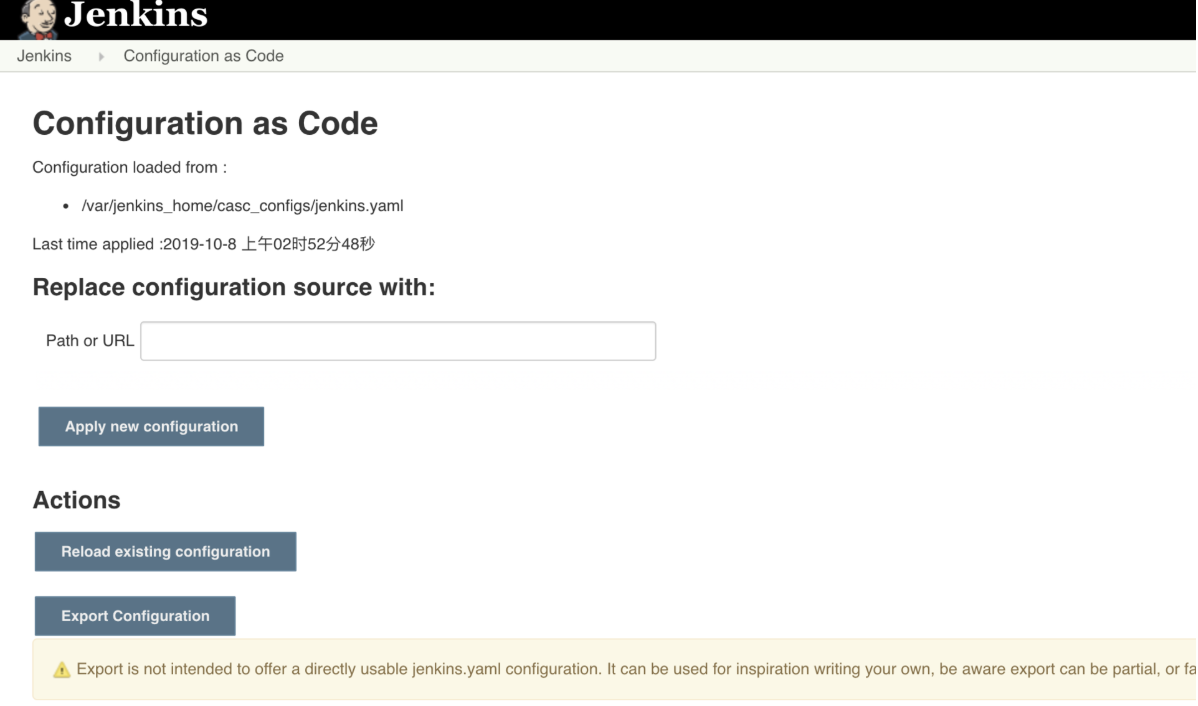

After modifying the Jenkins CasC configuration in KubeSphere, reload the updated configuration on the Configuration as Code page under system management in the Jenkins dashboard.

Reference:

https://kubesphere.io/docs/advanced-v2.0/zh-CN/devops/jenkins-setting/#%E7%99%BB%E9%99%86-jenkins-%E9%87%8D%E6%96%B0%E5%8A%A0%E8%BD%BD

Update the base image in Jenkins

Note: first modify the Jenkins configuration in KubeSphere, jenkins-casc-config

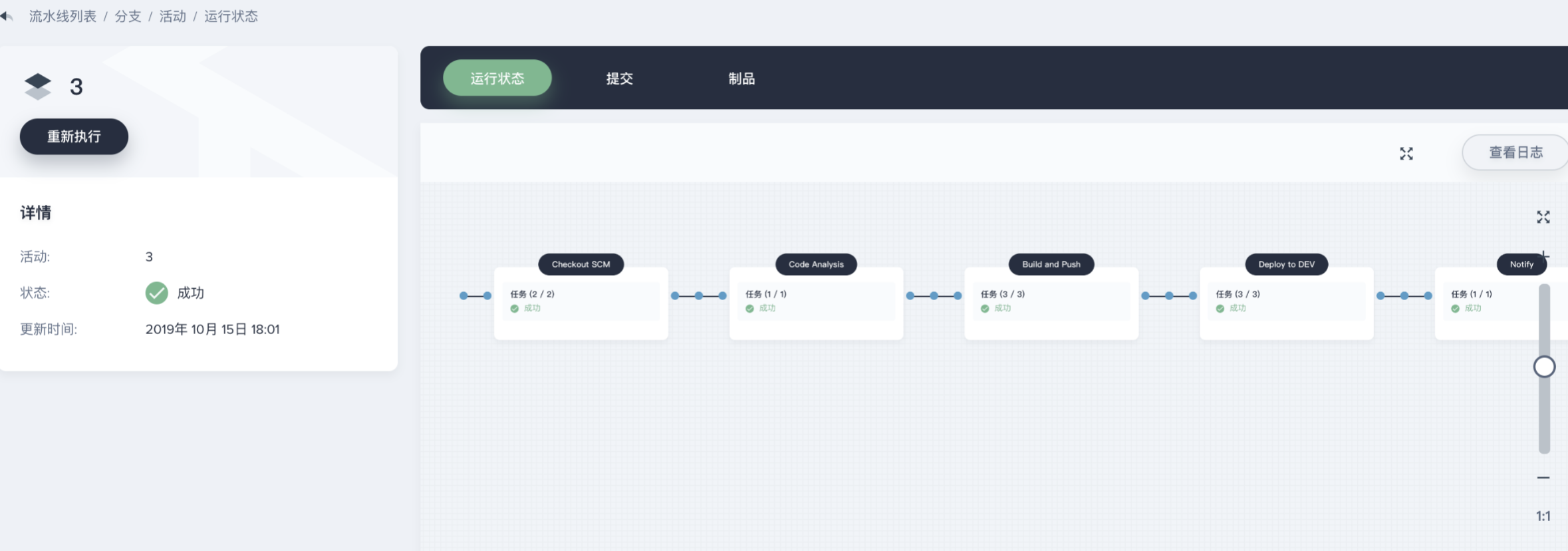

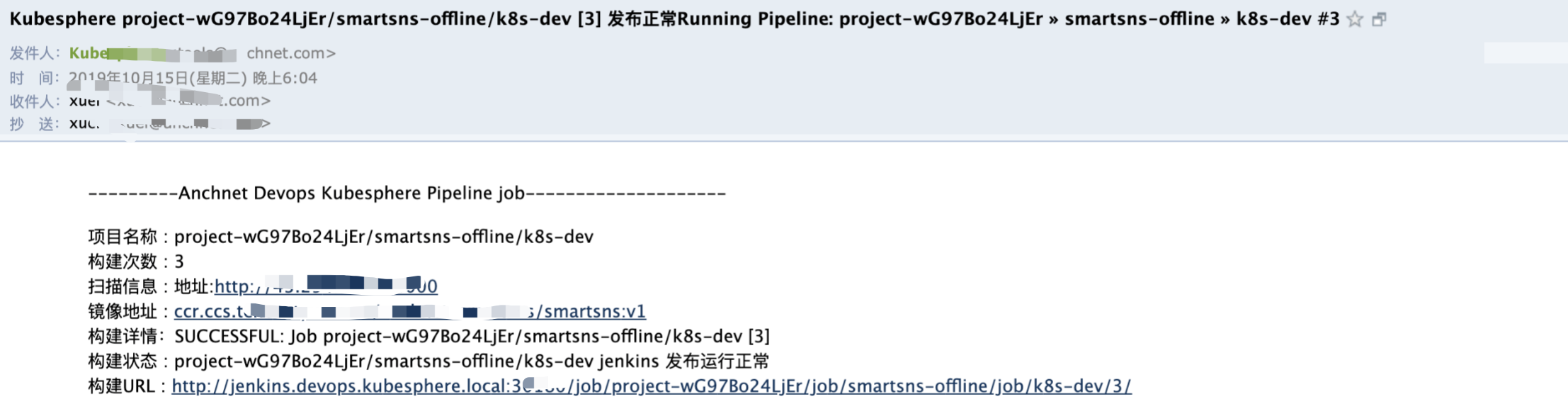
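The image swap described in this section can also be scripted. This is a rough sketch, assuming the agent image appears as a literal string in the exported configuration. The file name `casc.yaml` and the `kubesphere-devops-system` namespace for the `jenkins-casc-config` ConfigMap are assumptions to verify against your installation, and the function name is illustrative.

```shell
# Sketch: replace the built-in agent image string in an exported CasC file.
# Assumptions: the image appears verbatim in the file; names below are examples.
swap_image() {
    # $1 = file, $2 = old image, $3 = new image
    # '|' is the sed delimiter because image names contain '/';
    # dots in $2 act as regex wildcards, which is harmless for this use
    sed -i.bak "s|$2|$3|g" "$1"
}

# Usage:
#   kubectl -n kubesphere-devops-system get cm jenkins-casc-config -o yaml > casc.yaml
#   swap_image casc.yaml kubesphere/builder-base:advanced-1.0.0 ccr.ccs.tencentyun.com/testns/base:v1
#   kubectl apply -f casc.yaml
```

After applying the change, the configuration still has to be reloaded in Jenkins as described above.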

7. Sending mail in DevOps

Reference: https://www.cloudbees.com/blog/mail-step-jenkins-workflow

Built-in variables:

| Variable name | Description |
|---|---|
| BUILD_NUMBER | The current build number, such as "153" |
| BUILD_ID | The current build ID, identical to BUILD_NUMBER for builds created in 1.597+, but a YYYY-MM-DD_hh-mm-ss timestamp for older builds |
| BUILD_DISPLAY_NAME | The display name of the current build, which is something like "#153" by default. |
| JOB_NAME | Name of the project of this build, such as "foo" or "foo/bar". (To strip off folder paths from a Bourne shell script, try: ${JOB_NAME##*/}) |
| BUILD_TAG | String of "jenkins-${JOB_NAME}-${BUILD_NUMBER}". Convenient to put into a resource file, a jar file, etc for easier identification. |
| EXECUTOR_NUMBER | The unique number that identifies the current executor (among executors of the same machine) that's carrying out this build. This is the number you see in the "build executor status", except that the number starts from 0, not 1. |
| NODE_NAME | Name of the slave if the build is on a slave, or "master" if run on master |
| NODE_LABELS | Whitespace-separated list of labels that the node is assigned. |
| WORKSPACE | The absolute path of the directory assigned to the build as a workspace. |
| JENKINS_HOME | The absolute path of the directory assigned on the master node for Jenkins to store data. |
| JENKINS_URL | Full URL of Jenkins, like http://server:port/jenkins/ (note: only available if Jenkins URL set in system configuration) |
| BUILD_URL | Full URL of this build, like http://server:port/jenkins/job/foo/15/ (Jenkins URL must be set) |
| SVN_REVISION | Subversion revision number that's currently checked out to the workspace, such as "12345" |
| SVN_URL | Subversion URL that's currently checked out to the workspace. |
| JOB_URL | Full URL of this job, like http://server:port/jenkins/job/foo/ (Jenkins URL must be set) |
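These variables are also available as environment variables inside shell steps. Here is a small sketch of the `${JOB_NAME##*/}` trick from the table; the default values are stand-ins so the snippet also runs outside Jenkins, and the function names are illustrative.

```shell
# Sketch: using Jenkins built-in variables from a shell step.
# Assumption: the defaults below are stand-ins for values Jenkins would export.
JOB_NAME="${JOB_NAME:-foo/bar}"
BUILD_NUMBER="${BUILD_NUMBER:-153}"

short_job_name() {
    # strip folder paths, as suggested in the JOB_NAME row
    echo "${JOB_NAME##*/}"
}

build_label() {
    # a compact label such as an image tag: <job>-<build number>
    echo "$(short_job_name)-${BUILD_NUMBER}"
}
```

The `##*/` expansion removes the longest prefix ending in a slash, so a job in nested folders is reduced to its final path component.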

Finally, I wrote a template adapted to my own use case; it can be used directly:

mail to: 'xuel@net.com',
     charset: 'UTF-8', // or GBK/GB18030
     mimeType: 'text/plain', // or text/html
     subject: "Kubesphere ${env.JOB_NAME} [${env.BUILD_NUMBER}] released normally. Running Pipeline: ${currentBuild.fullDisplayName}",
     body: """
---------Anchnet Devops Kubesphere Pipeline job--------------------
//Project name: ${env.JOB_NAME}
//Build number: ${env.BUILD_NUMBER}
//Scan information: Address: ${SONAR_HOST}
//Image address: ${REGISTRY}/${QHUB_NAMESPACE}/${APP_NAME}:${IMAGE_TAG}
//Build details: Success: job ${env.JOB_NAME} [${env.BUILD_NUMBER}]
//Build status: ${env.JOB_NAME} jenkins release is running normally
//Build URL: ${env.BUILD_URL}
"""