1. Four cornerstones of Flink

Flink could not be so popular without its four most important cornerstones: Checkpoint, State, Time, and Window.

◼ Checkpoint

This is one of Flink's most important features.

Flink implements distributed, consistent snapshots based on the Chandy-Lamport algorithm, and these snapshots are what allow it to provide consistency guarantees.

The Chandy-Lamport algorithm was proposed in 1985, but for a long time it was not widely used in practice. Flink adapted and extended it.

Spark more recently implemented Continuous Processing, which is designed to reduce processing latency. It also needs to provide consistency guarantees, and in the end it adopted the Chandy-Lamport algorithm as well, which suggests that the algorithm has been recognized by the industry.

https://zhuanlan.zhihu.com/p/53482103

◼ State

On top of these consistency guarantees, Flink provides a straightforward State API, including ValueState, ListState, MapState, and BroadcastState, which makes state management easier for users when programming.

◼ Time

Flink also implements a Watermark mechanism that supports event-time processing and tolerates late and out-of-order data.
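To make the watermark idea concrete, here is a minimal plain-Java sketch with no Flink dependency. It assumes a "bounded out-of-orderness" strategy: the watermark trails the highest timestamp seen so far by a fixed tolerated delay. The class name, method names, and the sample timestamps are hypothetical and chosen only for illustration; this is not Flink's own implementation.

```java
// Sketch only: a watermark that lags the highest event timestamp by a fixed delay.
// All names are hypothetical; the delay is assumed to be non-negative.
final class WatermarkSketch {
    private final long maxOutOfOrdernessMillis;
    private long maxSeenTimestamp;

    WatermarkSketch(long maxOutOfOrdernessMillis) {
        this.maxOutOfOrdernessMillis = maxOutOfOrdernessMillis;
        // Start low enough that the first watermark is Long.MIN_VALUE without underflow.
        this.maxSeenTimestamp = Long.MIN_VALUE + maxOutOfOrdernessMillis + 1;
    }

    /** Records an event timestamp; out-of-order (smaller) timestamps do not move the maximum. */
    void onEvent(long eventTimestamp) {
        maxSeenTimestamp = Math.max(maxSeenTimestamp, eventTimestamp);
    }

    /** The watermark says: no event with a timestamp at or below this value is expected any more. */
    long currentWatermark() {
        return maxSeenTimestamp - maxOutOfOrdernessMillis - 1;
    }

    /** A window [start, end) can be evaluated once the watermark reaches its last timestamp, end - 1. */
    static boolean windowCanFire(long windowEnd, long watermark) {
        return watermark >= windowEnd - 1;
    }

    public static void main(String[] args) {
        WatermarkSketch sketch = new WatermarkSketch(2_000L);
        // 3_000 arrives after 4_000, i.e. out of order, but within the 2 s tolerance.
        for (long ts : new long[] {1_000L, 4_000L, 3_000L, 7_000L}) {
            sketch.onEvent(ts);
            System.out.println("event " + ts + " -> watermark " + sketch.currentWatermark()
                    + ", window [0, 5000) can fire: "
                    + windowCanFire(5_000L, sketch.currentWatermark()));
        }
    }
}
```

In this sketch the window covering 0 to 5 seconds is only evaluated after the event at 7 seconds arrives, which is what gives the out-of-order event at 3 seconds time to be included.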

◼ Window

In stream computing, it is common to open a window before operating on the streaming data, that is, to decide which kind of window the calculation is based on. Flink provides out-of-the-box windows such as sliding windows, tumbling windows, and session windows, as well as very flexible custom windows.

2. Flink-Window operation

2.1 Why do you need Windows

In streaming applications, data is continuous and sometimes we need to do some aggregation, such as how many users have clicked on our pages in the last minute.

In this case, we must define a window to collect data in the last minute and calculate the data in that window.

2.2 Classification of Windows

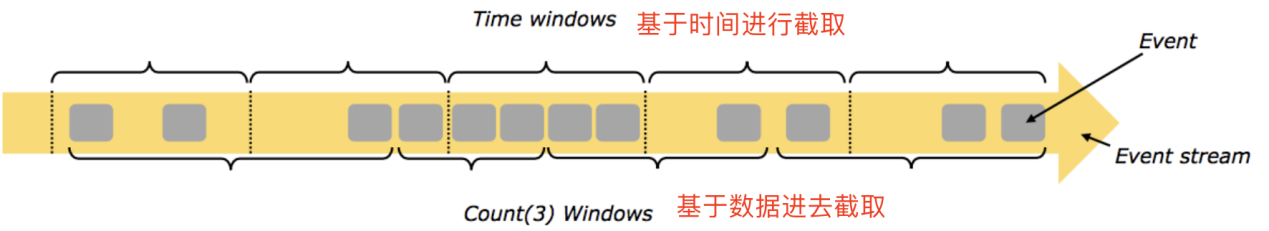

2.2.1 Classified by time and count

time-window: a window divided by time, for example: every xx minutes, compute statistics over the last xx minutes

count-window: a window divided by number of elements, for example: every xx elements, compute statistics over the last xx elements

2.2.2 Classified by slide and size

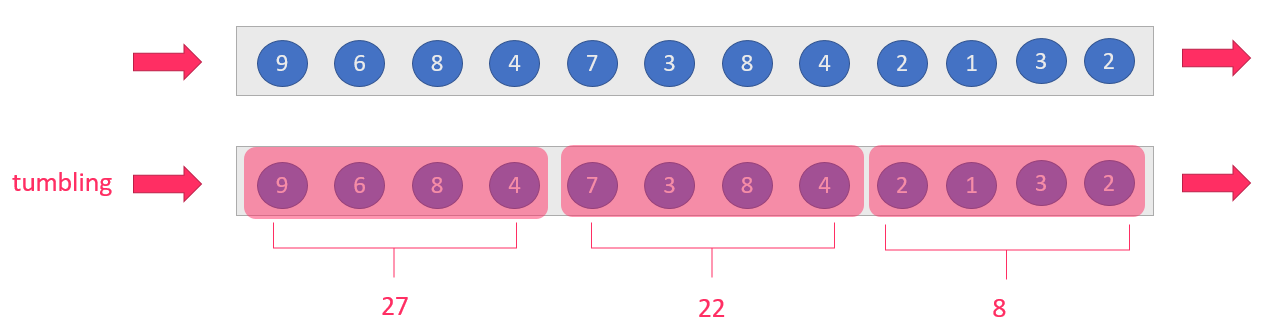

A window has two important properties: the window size (size) and the sliding interval (slide). Based on these, windows can be divided into:

tumbling-window: tumbling window, size = slide, for example: every 10 seconds, compute statistics over the last 10 seconds of data
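As an illustration of size = slide, the following plain-Java sketch computes which tumbling window a timestamp falls into. It assumes windows are aligned to the epoch (no offset) and uses hypothetical names; it is a sketch of the arithmetic, not Flink's assigner code.

```java
// Sketch only: maps a timestamp to its tumbling window [start, start + size).
// Assumes windows aligned to timestamp 0; names are hypothetical.
final class TumblingWindowSketch {

    /** Start of the window containing the timestamp: round down to a multiple of the size. */
    static long windowStart(long timestampMillis, long sizeMillis) {
        return timestampMillis - Math.floorMod(timestampMillis, sizeMillis);
    }

    /** Exclusive end of the window containing the timestamp. */
    static long windowEnd(long timestampMillis, long sizeMillis) {
        return windowStart(timestampMillis, sizeMillis) + sizeMillis;
    }

    public static void main(String[] args) {
        long size = 10_000L; // 10-second windows
        for (long ts : new long[] {0L, 9_999L, 10_000L, 23_500L}) {
            System.out.println(ts + " ms -> [" + windowStart(ts, size) + ", "
                    + windowEnd(ts, size) + ")");
        }
    }
}
```

Because each timestamp rounds down to exactly one window start, every element belongs to exactly one window and no element is counted twice.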

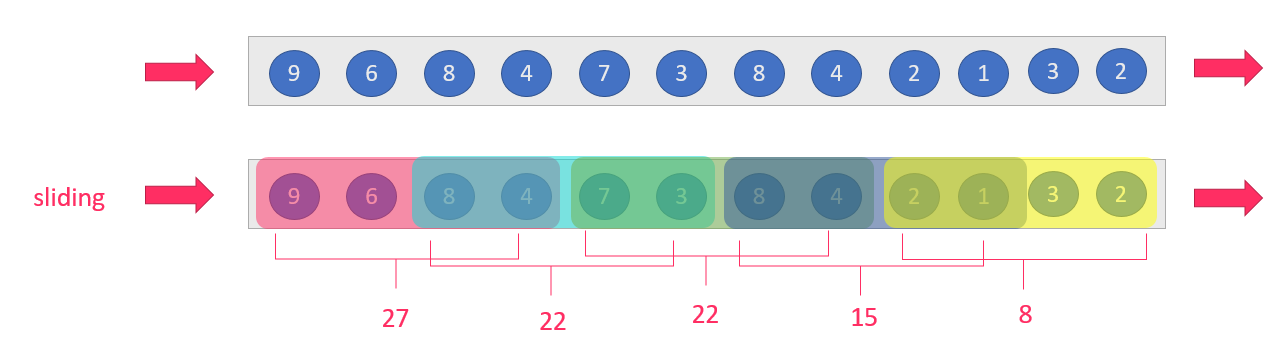

sliding-window: sliding window, size > slide, for example: every 5 seconds, compute statistics over the last 10 seconds of data

Note: when size < slide, for example computing statistics over the last 10 seconds only every 15 seconds, the 5 seconds in between are lost, so this configuration is not used in practice.
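The relationship between size and slide can be checked with a small plain-Java sketch that lists every window a timestamp belongs to. It assumes windows start at multiples of the slide (no offset); the names are hypothetical and the code is only an illustration of the arithmetic.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch only: lists the start of every window [start, start + size) that contains a timestamp.
// Assumes window starts are multiples of the slide; names are hypothetical.
final class SlidingWindowSketch {

    static List<Long> windowStarts(long timestampMillis, long sizeMillis, long slideMillis) {
        List<Long> starts = new ArrayList<>();
        // Latest window start that is not after the timestamp.
        long lastStart = timestampMillis - Math.floorMod(timestampMillis, slideMillis);
        // Walk backwards while the window still covers the timestamp.
        for (long start = lastStart; start > timestampMillis - sizeMillis; start -= slideMillis) {
            starts.add(start);
        }
        return starts;
    }

    public static void main(String[] args) {
        // size > slide: each element lands in several overlapping windows.
        System.out.println("size 10s, slide 5s:  " + windowStarts(12_000L, 10_000L, 5_000L));
        // size < slide: an element in the gap lands in no window at all.
        System.out.println("size 10s, slide 15s: " + windowStarts(12_000L, 10_000L, 15_000L));
    }
}
```

With size 10 s and slide 5 s, the element at 12 s is counted in the windows starting at 10 s and 5 s. With size 10 s and slide 15 s, the same element falls into the uncovered gap between 10 s and 15 s and is dropped, which is the data loss described in the note.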

2.2.3 Summary

Combining these windows according to their classification, you can get the following windows:

1. Time-based tumbling window (tumbling-time-window) - used often

2. Time-based sliding window (sliding-time-window) - used often

3. Count-based tumbling window (tumbling-count-window) - used less

4. Count-based sliding window (sliding-count-window) - used less

Note: Flink also supports a special window, the Session Window, which requires a session timeout, such as 30s. If no data arrives within 30s, the calculation of the previous window is triggered.
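The session timeout can be pictured with a plain-Java sketch that splits arrival times into sessions. It assumes the timestamps are already sorted and closes a session when the pause before the next element is longer than the gap; the exact boundary handling and all names are simplifications chosen for illustration, not Flink's implementation.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch only: groups sorted arrival times into sessions separated by pauses longer than the gap.
// Names are hypothetical; input is assumed to be in ascending order.
final class SessionWindowSketch {

    static List<List<Long>> sessions(long[] sortedTimestamps, long gapMillis) {
        List<List<Long>> result = new ArrayList<>();
        List<Long> current = new ArrayList<>();
        for (long ts : sortedTimestamps) {
            // A pause longer than the gap closes the current session and opens a new one.
            if (!current.isEmpty() && ts - current.get(current.size() - 1) > gapMillis) {
                result.add(current);
                current = new ArrayList<>();
            }
            current.add(ts);
        }
        if (!current.isEmpty()) {
            result.add(current);
        }
        return result;
    }

    public static void main(String[] args) {
        long[] arrivals = {0L, 3_000L, 8_000L, 40_000L, 45_000L};
        // With a 30 s gap, the 32 s pause between 8 s and 40 s separates two sessions.
        System.out.println(sessions(arrivals, 30_000L));
    }
}
```

Unlike tumbling and sliding windows, the session boundaries here depend only on the data itself, so session lengths vary from one session to the next.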

2.3 Window API

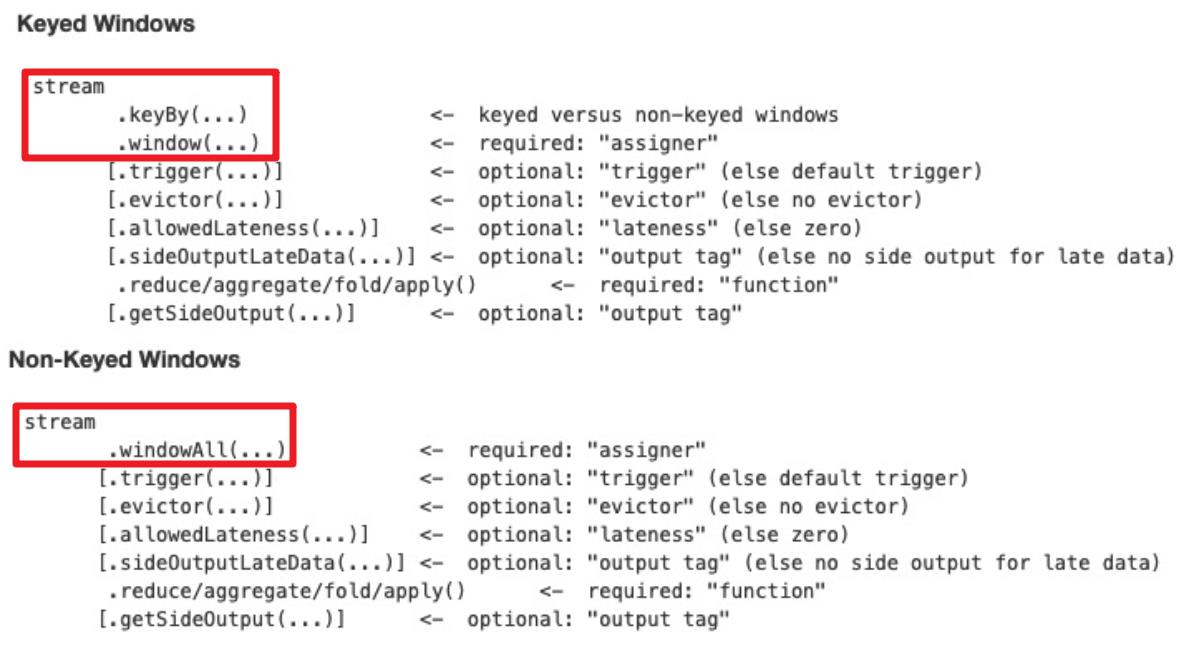

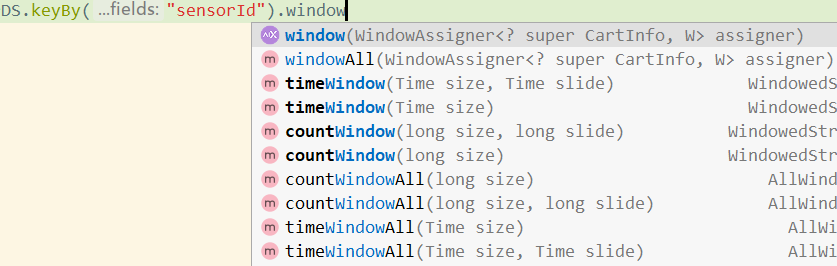

2.3.1 window and windowAll

A stream that has been keyed with keyBy should use the window method.

A stream without keyBy should call the windowAll method.
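The practical difference is the shape of the result: a keyed window produces one result per key, while a non-keyed window produces a single result for all elements. The plain-Java sketch below mimics this for the contents of one window, using the traffic-light sample data from the demos later in this article. It does not use the Flink API, and the class and method names are hypothetical.

```java
import java.util.Map;
import java.util.TreeMap;

// Sketch only: contrasts per-key aggregation (keyBy + window) with global aggregation (windowAll)
// for the elements of a single window. Names are hypothetical.
final class KeyedVsAllSketch {

    /** Keyed style: one sum per key. */
    static Map<String, Integer> sumPerKey(String[] keys, int[] counts) {
        Map<String, Integer> sums = new TreeMap<>();
        for (int i = 0; i < keys.length; i++) {
            sums.merge(keys[i], counts[i], Integer::sum);
        }
        return sums;
    }

    /** Non-keyed style: one sum over every element in the window. */
    static int sumAll(int[] counts) {
        int total = 0;
        for (int count : counts) {
            total += count;
        }
        return total;
    }

    public static void main(String[] args) {
        String[] keys = {"9", "9", "9", "4", "2", "1", "2", "5", "5"};
        int[] counts = {3, 2, 7, 9, 6, 5, 3, 7, 4};
        System.out.println("per key: " + sumPerKey(keys, counts));
        System.out.println("all:     " + sumAll(counts));
    }
}
```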

2.3.2 WindowAssigner

The window/windowAll method takes a WindowAssigner as its argument. The WindowAssigner assigns each incoming element to the correct window.

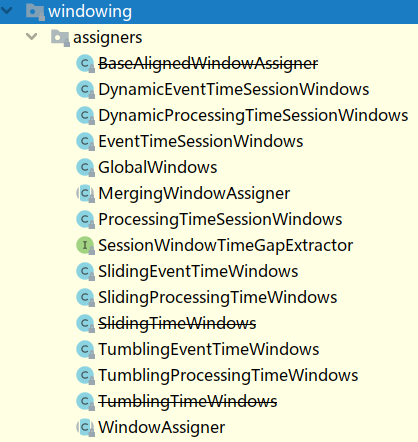

Flink offers WindowAssigner implementations for many scenarios.

If you need your own assignment policy, you can implement a class that extends WindowAssigner.

2.3.3 evictor - Understanding

An evictor is mainly used to customize which elements remain in the window: it can remove elements before or after the user code is executed. For a more detailed description, refer to the evictBefore and evictAfter methods of org.apache.flink.streaming.api.windowing.evictors.Evictor.

Flink offers the following three general evictors:

- CountEvictor retains a specified number of elements

- TimeEvictor sets an interval threshold and deletes all elements that are no longer in the range from max_ts - interval to max_ts, where max_ts is the maximum timestamp in the window

- DeltaEvictor decides whether to remove an element by applying a user-given DeltaFunction and comparing the result with a preset threshold
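The first two eviction policies can be sketched in plain Java as simple list filters. The code below does not implement Flink's Evictor interface; it only illustrates the two rules described above, and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch only: count-style and time-style eviction expressed as list filters.
// Names are hypothetical; this does not use the Flink Evictor interface.
final class EvictorSketch {

    /** Count-style: keep only the most recent maxCount elements. */
    static List<Long> keepLast(List<Long> elements, int maxCount) {
        int from = Math.max(0, elements.size() - maxCount);
        return new ArrayList<>(elements.subList(from, elements.size()));
    }

    /** Time-style: keep only timestamps newer than max_ts - interval. */
    static List<Long> keepWithin(List<Long> timestamps, long intervalMillis) {
        long maxTs = Long.MIN_VALUE;
        for (long ts : timestamps) {
            maxTs = Math.max(maxTs, ts);
        }
        List<Long> kept = new ArrayList<>();
        for (long ts : timestamps) {
            if (ts > maxTs - intervalMillis) {
                kept.add(ts);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<Long> timestamps = List.of(1_000L, 4_000L, 9_000L, 10_000L);
        System.out.println("last 3:        " + keepLast(timestamps, 3));
        System.out.println("within 5000ms: " + keepWithin(timestamps, 5_000L));
    }
}
```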

2.3.4 trigger - Understanding

A trigger determines when a window is evaluated. Each WindowAssigner comes with a default trigger. If the default trigger does not meet your needs, you can write a custom class that extends Trigger. The methods of Trigger and their meanings are:

- onElement() is called every time an element is added to the window

- onEventTime() is called when an event-time timer fires

- onProcessingTime() is called when a processing-time timer fires

- onMerge() merges the states of two triggers

- clear() is called when the window is destroyed

The first three methods return a TriggerResult, which has the following possible values:

- CONTINUE does nothing

- FIRE triggers the window computation

- PURGE clears all elements in the window and discards the window

- FIRE_AND_PURGE triggers the window computation and then discards the window
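To show how these return values drive a window, here is a plain-Java sketch of a count-based trigger. It defines its own Result enum that mirrors the four values above rather than using Flink's TriggerResult, and the class name and threshold are hypothetical.

```java
// Sketch only: a count-based trigger that fires and purges after every N elements.
// The Result enum mirrors the four values described above; all names are hypothetical.
final class CountTriggerSketch {

    enum Result { CONTINUE, FIRE, PURGE, FIRE_AND_PURGE }

    private final long threshold;
    private long seen = 0;

    CountTriggerSketch(long threshold) {
        this.threshold = threshold;
    }

    /** Called once per element added to the window. */
    Result onElement() {
        seen++;
        if (seen >= threshold) {
            seen = 0; // start counting again for the next batch
            return Result.FIRE_AND_PURGE;
        }
        return Result.CONTINUE;
    }

    public static void main(String[] args) {
        CountTriggerSketch trigger = new CountTriggerSketch(3);
        for (int i = 1; i <= 4; i++) {
            System.out.println("element " + i + " -> " + trigger.onElement());
        }
    }
}
```

Returning FIRE instead of FIRE_AND_PURGE in the same place would emit a result but keep the elements, so later evaluations would still include them.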

2.3.5 API Call Example

source.keyBy(0).window(TumblingProcessingTimeWindows.of(Time.seconds(5)));

or

source.keyBy(0)...timeWindow(Time.seconds(5))

2.4 Case Demo - Time-based tumbling and sliding windows

2.4.1 Requirements

nc -lk 9999

The following data is available, one record per line:

Traffic light id, number of cars passing through it

9,3

9,2

9,7

4,9

2,6

1,5

2,3

5,7

5,4

Requirement 1: Every 5 seconds, count the cars that passed the traffic light at each intersection in the last 5 seconds - a time-based tumbling window

Requirement 2: Every 5 seconds, count the cars that passed the traffic light at each intersection in the last 10 seconds - a time-based sliding window

2.4.2 Code implementation

package cn.itcast.window;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.windowing.assigners.SlidingProcessingTimeWindows;

import org.apache.flink.streaming.api.windowing.assigners.TumblingProcessingTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

/**

* Author itcast

* Desc

* nc -lk 9999

* The following data are available:

* Signal number and number of cars passing through it

9,3

9,2

9,7

4,9

2,6

1,5

2,3

5,7

5,4

* Requirement 1: Every 5 seconds, count the cars that passed the traffic light at each intersection in the last 5 seconds -- time-based tumbling window

* Requirement 2: Every 5 seconds, count the cars that passed the traffic light at each intersection in the last 10 seconds -- time-based sliding window

*/

public class WindowDemo01_TimeWindow {

public static void main(String[] args) throws Exception {

//1.env

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

//2.Source

DataStreamSource<String> socketDS = env.socketTextStream("node1", 9999);

//3.Transformation

//Convert 9,3 to CartInfo(9,3)

SingleOutputStreamOperator<CartInfo> cartInfoDS = socketDS.map(new MapFunction<String, CartInfo>() {

@Override

public CartInfo map(String value) throws Exception {

String[] arr = value.split(",");

return new CartInfo(arr[0], Integer.parseInt(arr[1]));

}

});

//Grouping

//KeyedStream<CartInfo, Tuple> keyedDS = cartInfoDS.keyBy("sensorId");

// Requirement 1: Every 5 seconds, count the cars that passed the traffic light at each intersection in the last 5 seconds -- time-based tumbling window

//timeWindow(Time size, Time slide)

SingleOutputStreamOperator<CartInfo> result1 = cartInfoDS

.keyBy(CartInfo::getSensorId)

//.timeWindow(Time.seconds(5)) // when size == slide, a single argument is enough

//.timeWindow(Time.seconds(5), Time.seconds(5))

.window(TumblingProcessingTimeWindows.of(Time.seconds(5)))

.sum("count");

// Requirement 2: Every 5 seconds, count the cars that passed the traffic light at each intersection in the last 10 seconds -- time-based sliding window

SingleOutputStreamOperator<CartInfo> result2 = cartInfoDS

.keyBy(CartInfo::getSensorId)

//.timeWindow(Time.seconds(10), Time.seconds(5))

.window(SlidingProcessingTimeWindows.of(Time.seconds(10), Time.seconds(5)))

.sum("count");

//4.Sink

/*

1,5

2,5

3,5

4,5

*/

//result1.print();

result2.print();

//5.execute

env.execute();

}

@Data

@AllArgsConstructor

@NoArgsConstructor

public static class CartInfo {

private String sensorId;//Traffic light id

private Integer count;//Number of cars passing this light

}

}

2.5 Case Demo - Count-based tumbling and sliding windows

2.5.1 Requirements

Requirement 1: Count the cars passing each intersection over the last 5 messages, computed each time the same key has appeared 5 times - a count-based tumbling window

Requirement 2: Count the cars passing each intersection over the last 5 messages, computed each time the same key has appeared 3 times - a count-based sliding window
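Before looking at the Flink code, the two requirements can be simulated in plain Java. The sketch below keeps the values seen per key, and every `slide` elements of a key it emits the sum of that key's last `size` values; with size equal to slide this behaves like a tumbling count window. The class, method, and the all-ones sample input are hypothetical, and the code is only a model of the behaviour described in the requirements.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Sketch only: simulates a per-key count window with a given size and slide.
// Names are hypothetical; history per key is kept unbounded for simplicity.
final class CountWindowSketch {

    /** Returns the emitted results as "key,sum" strings in emission order. */
    static List<String> countWindowSums(String[] keys, int[] counts, int size, int slide) {
        Map<String, List<Integer>> valuesPerKey = new HashMap<>();
        List<String> emitted = new ArrayList<>();
        for (int i = 0; i < keys.length; i++) {
            List<Integer> values = valuesPerKey.computeIfAbsent(keys[i], k -> new ArrayList<>());
            values.add(counts[i]);
            // Evaluate every `slide` elements of this key, over its last `size` elements.
            if (values.size() % slide == 0) {
                int from = Math.max(0, values.size() - size);
                int sum = 0;
                for (int value : values.subList(from, values.size())) {
                    sum += value;
                }
                emitted.add(keys[i] + "," + sum);
            }
        }
        return emitted;
    }

    public static void main(String[] args) {
        String[] keys = {"1", "1", "1", "1", "1", "1", "1"};
        int[] counts = {1, 1, 1, 1, 1, 1, 1};
        System.out.println("size 5, slide 5: " + countWindowSums(keys, counts, 5, 5));
        System.out.println("size 5, slide 3: " + countWindowSums(keys, counts, 5, 3));
    }
}
```

Note that in the sliding case the first result covers only 3 elements, because fewer than 5 have arrived for that key when the first evaluation happens.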

2.5.2 Code implementation

package cn.itcast.window;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

/**

* Author itcast

* Desc

* nc -lk 9999

* The following data are available:

* Signal number and number of cars passing through it

9,3

9,2

9,7

4,9

2,6

1,5

2,3

5,7

5,4

* Requirement 1: Count the cars passing each intersection over the last 5 messages, computed each time the same key has appeared 5 times -- count-based tumbling window

* Requirement 2: Count the cars passing each intersection over the last 5 messages, computed each time the same key has appeared 3 times -- count-based sliding window

*/

public class WindowDemo02_CountWindow {

public static void main(String[] args) throws Exception {

//1.env

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

//2.Source

DataStreamSource<String> socketDS = env.socketTextStream("node1", 9999);

//3.Transformation

//Convert 9,3 to CartInfo(9,3)

SingleOutputStreamOperator<CartInfo> cartInfoDS = socketDS.map(new MapFunction<String, CartInfo>() {

@Override

public CartInfo map(String value) throws Exception {

String[] arr = value.split(",");

return new CartInfo(arr[0], Integer.parseInt(arr[1]));

}

});

//Grouping

//KeyedStream<CartInfo, Tuple> keyedDS = cartInfoDS.keyBy("sensorId");

// Requirement 1: Count the cars passing each intersection over the last 5 messages, computed each time the same key has appeared 5 times -- count-based tumbling window

//countWindow(long size, long slide)

SingleOutputStreamOperator<CartInfo> result1 = cartInfoDS

.keyBy(CartInfo::getSensorId)

//.countWindow(5L, 5L)

.countWindow( 5L)

.sum("count");

// Requirement 2: Count the cars passing each intersection over the last 5 messages, computed each time the same key has appeared 3 times -- count-based sliding window

//countWindow(long size, long slide)

SingleOutputStreamOperator<CartInfo> result2 = cartInfoDS

.keyBy(CartInfo::getSensorId)

.countWindow(5L, 3L)

.sum("count");

//4.Sink

//result1.print();

/*

1,1

1,1

1,1

1,1

2,1

1,1

*/

result2.print();

/*

1,1

1,1

2,1

1,1

2,1

3,1

4,1

*/

//5.execute

env.execute();

}

@Data

@AllArgsConstructor

@NoArgsConstructor

public static class CartInfo {

private String sensorId;//Traffic light id

private Integer count;//Number of cars passing this light

}

}

2.6 Case Demo - Session Window

2.6.1 Requirements

Set the session timeout to 10s. If no data arrives within 10s, the calculation of the previous window is triggered.

2.6.2 Code implementation

package cn.oldlu.window;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.windowing.assigners.ProcessingTimeSessionWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

/**

* Author oldlu

* Desc

* nc -lk 9999

* The following data are available:

* Signal number and number of cars passing through it

9,3

9,2

9,7

4,9

2,6

1,5

2,3

5,7

5,4

* Requirement: Set the session timeout to 10s. If no data arrives within 10s, the calculation of the previous window is triggered (provided the previous window has data!)

*/

public class WindowDemo03_SessionWindow {

public static void main(String[] args) throws Exception {

//1.env

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

//2.Source

DataStreamSource<String> socketDS = env.socketTextStream("node1", 9999);

//3.Transformation

//Convert 9,3 to CartInfo(9,3)

SingleOutputStreamOperator<CartInfo> cartInfoDS = socketDS.map(new MapFunction<String, CartInfo>() {

@Override

public CartInfo map(String value) throws Exception {

String[] arr = value.split(",");

return new CartInfo(arr[0], Integer.parseInt(arr[1]));

}

});

//Requirement: Set the session timeout to 10s. If no data arrives within 10s, the calculation of the previous window is triggered (provided the previous window has data!)

SingleOutputStreamOperator<CartInfo> result = cartInfoDS.keyBy(CartInfo::getSensorId)

.window(ProcessingTimeSessionWindows.withGap(Time.seconds(10)))

.sum("count");

//4.Sink

result.print();

//5.execute

env.execute();

}

@Data

@AllArgsConstructor

@NoArgsConstructor

public static class CartInfo {

private String sensorId;//Traffic light id

private Integer count;//Number of cars passing this light

}

}