Reference on search engine quality metrics (nDCG): https://blog.csdn.net/LintaoD/article/details/82661206
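As a quick reference alongside the linked post, here is a minimal Java sketch of nDCG@k. It assumes graded relevance labels listed in ranked order, and it uses the exponential gain (2^rel - 1) with a log2(rank + 1) discount. Some references use the linear gain rel instead, so check which variant your evaluation expects.

```java
import java.util.Arrays;

// Minimal nDCG@k sketch (assumption: exponential gain 2^rel - 1, log2(rank + 1) discount).
final class Ndcg {
    private Ndcg() {}

    // DCG@k over the first k relevance labels, in ranked order.
    static double dcg(int[] relevances, int k) {
        double sum = 0.0;
        int n = Math.min(k, relevances.length);
        for (int i = 0; i < n; i++) {
            double gain = Math.pow(2, relevances[i]) - 1;
            // rank = i + 1, so the discount is log2(i + 2)
            sum += gain / (Math.log(i + 2) / Math.log(2));
        }
        return sum;
    }

    // nDCG@k = DCG@k / IDCG@k, where IDCG uses the same labels sorted descending.
    static double ndcg(int[] relevances, int k) {
        int[] ideal = relevances.clone();
        Arrays.sort(ideal); // ascending
        for (int i = 0, j = ideal.length - 1; i < j; i++, j--) { // reverse to descending
            int tmp = ideal[i];
            ideal[i] = ideal[j];
            ideal[j] = tmp;
        }
        double idcg = dcg(ideal, k);
        return idcg == 0.0 ? 0.0 : dcg(relevances, k) / idcg;
    }
}
```

A perfectly ordered list scores 1.0; swapping a more relevant result below a less relevant one lowers the score.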

Features: relevance, timeliness, quality, click-through rate, authority, cold start

Which search/recommendation scenarios should be cached?

Search: features

Implementation differences between the large-page cache and the small-page cache:

The small-page cache speeds up loading of the search results page through progressive loading (preloading): what used to be one page is returned in two parts. The request asks for 30 results, and they come back in two batches: 10 in the first and 20 in the second.

The large-page cache stores a complete request result. Instead of the original 30 results per page, the first request fetches more than 70 results; initially, one request returns 5 pages, i.e. 150 results.
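A minimal sketch of the two strategies, assuming a hypothetical `fetchTopN` function that stands in for the real search backend. The batch sizes (10 + 20, and 5 pages of 30) come from the description above; the class and method names are illustrative only.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.IntFunction;

// Hypothetical sketch; fetchTopN stands in for the real backend call.
final class PageCacheSketch {
    private PageCacheSketch() {}

    // Small-page cache: one logical page is delivered in two batches
    // (e.g. 10 first, then 20) so the first screen renders sooner.
    static List<List<String>> splitPage(List<String> page, int firstBatch) {
        int cut = Math.min(firstBatch, page.size());
        List<List<String>> batches = new ArrayList<>();
        batches.add(page.subList(0, cut));
        batches.add(page.subList(cut, page.size()));
        return batches;
    }

    // Large-page cache: fetch several pages' worth of results in one request
    // and serve later pages from memory instead of calling the backend again.
    static final class LargePageCache {
        private final List<String> cached;
        private final int pageSize;

        LargePageCache(IntFunction<List<String>> fetchTopN, int pageSize, int pages) {
            this.pageSize = pageSize;
            this.cached = fetchTopN.apply(pageSize * pages); // e.g. 30 * 5 = 150
        }

        // pageNo is 1-based; returns an empty list past the cached range.
        List<String> page(int pageNo) {
            int from = (pageNo - 1) * pageSize;
            if (pageNo < 1 || from >= cached.size()) {
                return Collections.emptyList();
            }
            return cached.subList(from, Math.min(from + pageSize, cached.size()));
        }
    }
}
```

The trade-off: the small-page approach keeps each request cheap but still calls the backend per page, while the large-page approach pays once up front and makes pages 2 to 5 free.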

Recommendation: user and item data are stored separately, and each user is further split into many features.
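A rough sketch of that layout, using in-memory maps as a stand-in for whatever cache store is actually used (an assumption). The class and method names are hypothetical; the point is that user features and items live in separate caches, so one feature can be refreshed without rewriting the whole user entry.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: separate user and item caches, users split into features.
final class RecCacheSketch {
    // userId -> (featureName -> value)
    private final Map<String, Map<String, String>> userFeatures = new HashMap<>();
    // itemId -> item payload (a plain title here for brevity)
    private final Map<String, String> items = new HashMap<>();

    void putUserFeature(String userId, String feature, String value) {
        userFeatures.computeIfAbsent(userId, k -> new HashMap<>()).put(feature, value);
    }

    void putItem(String itemId, String title) {
        items.put(itemId, title);
    }

    // Returns null if the user or the feature is not cached.
    String userFeature(String userId, String feature) {
        Map<String, String> f = userFeatures.get(userId);
        return f == null ? null : f.get(feature);
    }

    String item(String itemId) {
        return items.get(itemId);
    }
}
```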

Requirement 1: given a batch of queries, verify that field a of card A is returned for every query (data anonymized).

Requirement 2: for tens of thousands of keywords, call the algorithm interface for each keyword and compute the ratios of results that recall A without B, A or B, and both A and B.
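One way to compute these ratios, sketched under the assumption that each keyword's recall result has already been reduced to the set of card types it contains (`"A"`, `"B"`). Reading the requirement as three ratios (A without B, A or B, A and B) is an interpretation; `RecallRatio` is a hypothetical name.

```java
import java.util.List;
import java.util.Set;

// Hypothetical sketch of the Requirement 2 statistics.
final class RecallRatio {
    private RecallRatio() {}

    // Returns {A without B, A or B, A and B}, each as a fraction of all keywords.
    static double[] ratios(List<Set<String>> recalledPerKeyword, String a, String b) {
        int aOnly = 0, aOrB = 0, aAndB = 0;
        for (Set<String> types : recalledPerKeyword) {
            boolean hasA = types.contains(a);
            boolean hasB = types.contains(b);
            if (hasA && !hasB) aOnly++;
            if (hasA || hasB) aOrB++;
            if (hasA && hasB) aAndB++;
        }
        int total = recalledPerKeyword.size();
        if (total == 0) {
            return new double[] {0, 0, 0}; // avoid 0/0 when there are no keywords
        }
        return new double[] {
            (double) aOnly / total, (double) aOrB / total, (double) aAndB / total
        };
    }
}
```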

Requirement 3: query-intent understanding test (this normally needs manual testing and annotation, but it can be scripted). A batch of new queries is provided: 70 error-correction queries and 700 others, split into 400 head, 200 torso, and 100 tail queries. They are shuffled, so all tiers are mixed together.

Intent interface test: category prediction, error correction, rewriting, and qt (query tagging / word segmentation). The current online QP results also contain bad cases, so the output must still be reviewed manually.

If no result comes back before the timeout, check the interface's supplementary data, as follows:

Rewrite results:

Design: query preparation. The PM provides the queries in Excel format; they are not used directly but converted to txt first.

Shell script processing:

Append a comma to the end of each line of 1.txt and write the result to 2.txt:

➜ ~ sed 's/$/&,/g' 1.txt > 2.txt

[Text processing] Shell: merge multiple columns into rows, with each column's values separated by commas

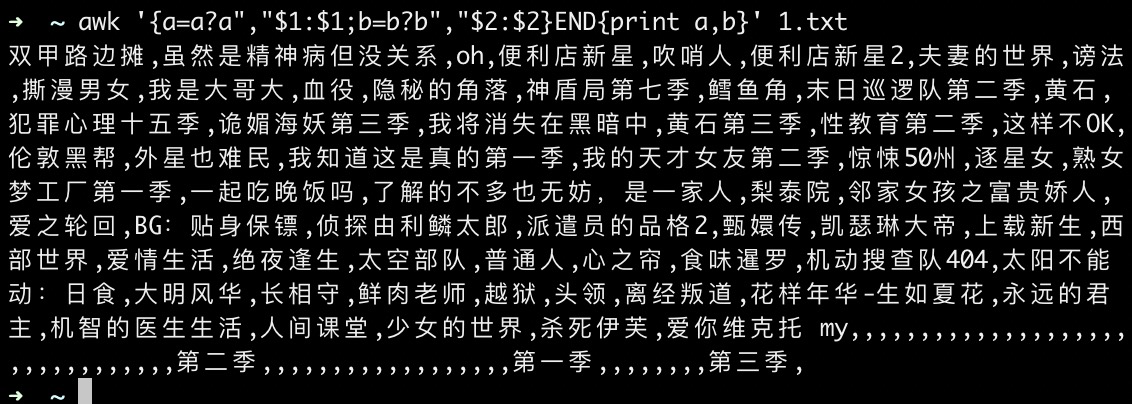

➜ ~ awk '{a=a?a","$1:$1;b=b?b","$2:$2}END{print a,b}' 1.txt
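If you would rather do the column merge inside the Java tooling, a rough equivalent of the awk one-liner is below. It splits each line on runs of whitespace, like awk's default field splitting, so tab-separated columns pasted from Excel work too. The class name is hypothetical, and edge cases (for example lines with an empty second column) are handled slightly differently from awk.

```java
import java.util.ArrayList;
import java.util.List;

// Rough Java counterpart of: awk '{a=a?a","$1:$1;b=b?b","$2:$2}END{print a,b}' 1.txt
final class MergeColumns {
    private MergeColumns() {}

    static String merge(List<String> lines) {
        List<String> col1 = new ArrayList<>();
        List<String> col2 = new ArrayList<>();
        for (String line : lines) {
            String[] f = line.trim().split("\\s+");
            if (f.length >= 1 && !f[0].isEmpty()) col1.add(f[0]);
            if (f.length >= 2) col2.add(f[1]);
        }
        // awk's "print a,b" joins the two values with a single space (the default OFS)
        return String.join(",", col1) + " " + String.join(",", col2);
    }
}
```

Usage: `MergeColumns.merge(java.nio.file.Files.readAllLines(java.nio.file.Path.of("1.txt")))`.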

Script part - online environment:

Business requirement 1: logOnlineReadFiles70 (error correction, 70 queries)

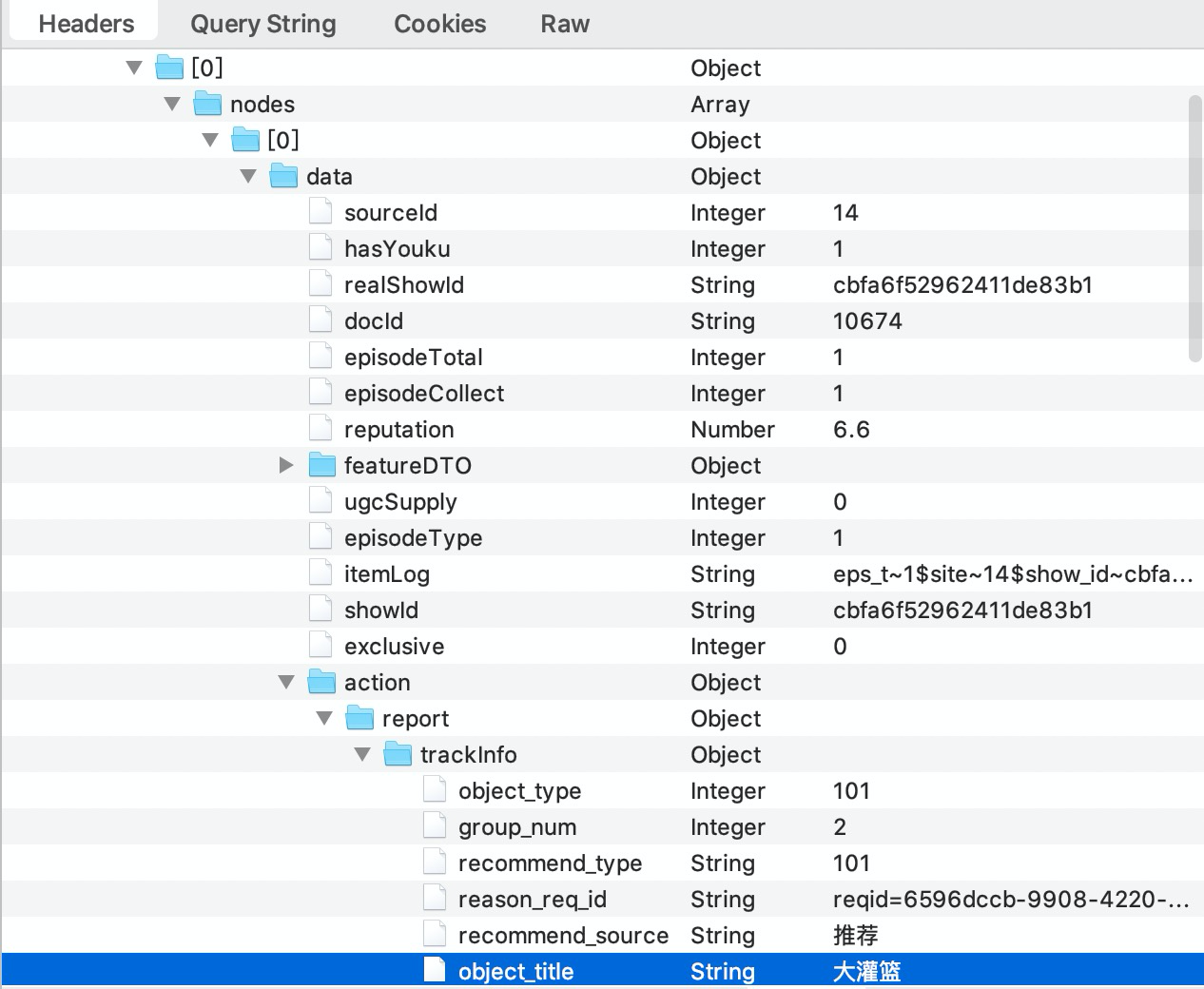

Reading interface values: walk the multi-level nodes arrays to extract the required fields

Data returned by the QP algorithm interface: qc (corrected query), qcGrade (error-correction level), keyword (original query)

Business interface: take the type and title of the second card (docSource, object_title), because the first node (index=0) is the error-correction word and the second (index=1) is the business card

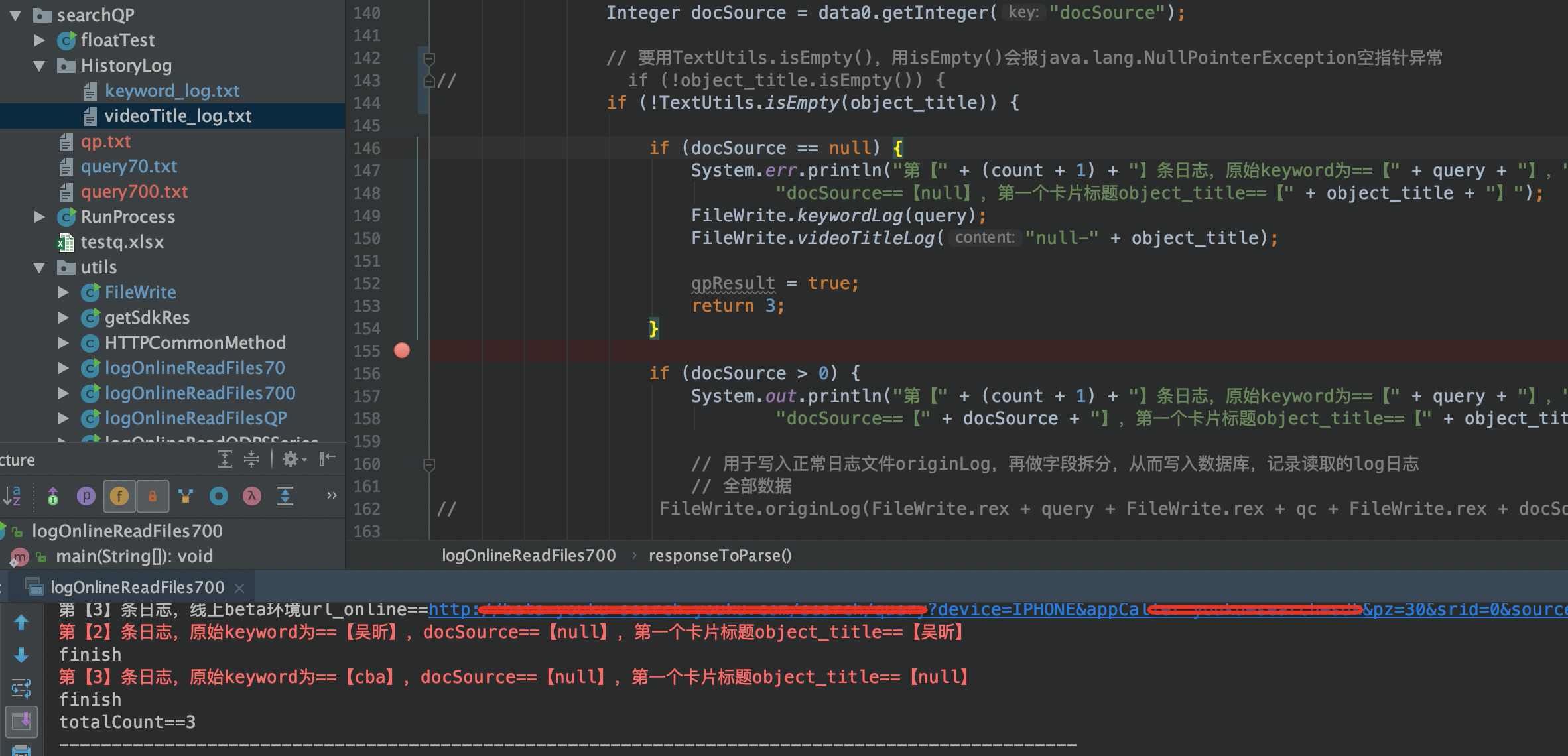
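The scripts below reach these fields with long getJSONArray(...).getJSONObject(...) chains, which throw as soon as one level is missing. A null-safe walker is sketched here with plain Map and List so it runs without fastjson. Since fastjson's JSONObject and JSONArray implement Map and List, the same helper should work on a parsed response, but treat that as an assumption to verify. `NodePath` is a hypothetical name.

```java
import java.util.List;
import java.util.Map;

// Hypothetical null-safe walker for the nodes[i] -> nodes[j] -> ... -> data chain.
final class NodePath {
    private NodePath() {}

    // Follows the "nodes" arrays using the given indices, then returns the "data" map,
    // or null as soon as any level is missing, of the wrong type, or out of range.
    @SuppressWarnings("unchecked")
    static Map<String, Object> data(Map<String, Object> root, int... indices) {
        Map<String, Object> current = root;
        for (int index : indices) {
            if (current == null) return null;
            Object nodes = current.get("nodes");
            if (!(nodes instanceof List)) return null;
            List<Object> list = (List<Object>) nodes;
            if (index < 0 || index >= list.size()) return null;
            Object next = list.get(index);
            if (!(next instanceof Map)) return null;
            current = (Map<String, Object>) next;
        }
        if (current == null) return null;
        Object data = current.get("data");
        return data instanceof Map ? (Map<String, Object>) data : null;
    }
}
```

Usage: `NodePath.data(response, 0, 0, 0)` for the QP data and `NodePath.data(response, 1, 0, 0)` for the business card in the 70-query script; check the result for null before reading fields.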

Business requirement 2: logOnlineReadFiles700 - rewrite

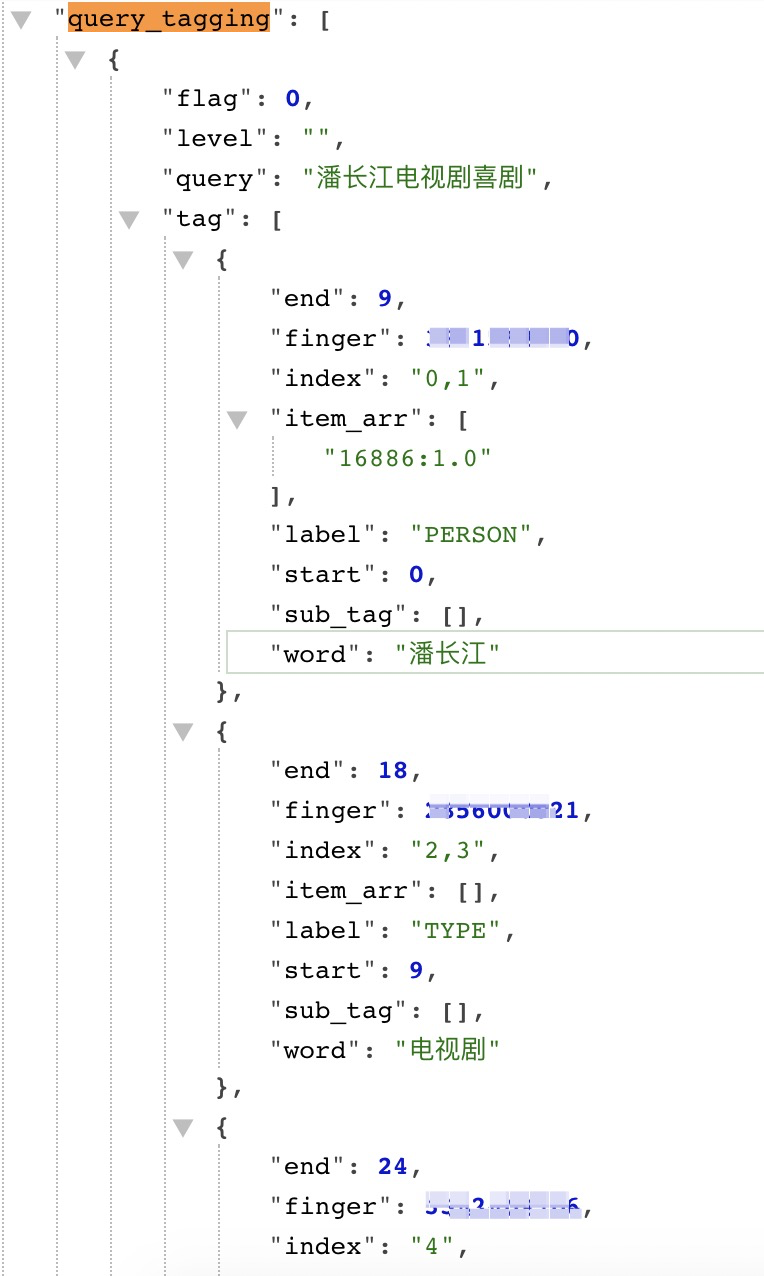

logOnlineReadFilesQP - category prediction, scoring level, word segmentation array, query tagging

Business interface: take the type and title of the first card (docSource, object_title); since there is no error-correction word, the first node (index=0) is the business card

Algorithm interface: scoring, word segmentation, rewriting, error correction and type annotation

Special field search, CR Code:

/**
* Error correction word level: 1 = prompt, 2 = replace the query
*/
private int qcGrade;
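A small enum can make the qcGrade check self-documenting. This is a hypothetical helper, not part of the CR code: the NONE value for a missing or unknown grade is an assumption, and `isReplacedWithDifferentQuery` mirrors the condition the 70-query script uses (grade 2, and qc differs from keyword).

```java
// Hypothetical helper: 1 = prompt ("did you mean"), 2 = the query is replaced by qc.
enum QcGrade {
    NONE, PROMPT, REPLACE;

    // Maps the raw Integer from the QP response; null or unknown values become NONE.
    static QcGrade of(Integer raw) {
        if (raw == null) return NONE;
        switch (raw) {
            case 1: return PROMPT;
            case 2: return REPLACE;
            default: return NONE;
        }
    }

    // A query counts as corrected only if QP replaced it with a different string.
    static boolean isReplacedWithDifferentQuery(Integer raw, String qc, String keyword) {
        return of(raw) == REPLACE && qc != null && !qc.equals(keyword);
    }
}
```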

Framework design: check the algorithm interface and the business interface at the same time

Built on the HttpClient framework plus file parsing. The output columns are laid out per requirement: for example, columns A, B, and C go to three separate files, while columns C and D are written together to file c.

Overall design: a shell script extracts the Excel content into a txt file; the program reads the txt file, classifies the queries (3 types), and writes the results of the 3 kinds of interfaces to text files as required. The txt output files are deleted before each run, so every run writes fresh data.

The code is as follows:

1. Business code for the 70 error-correction queries

package com.alibaba.searchQP.utils;

import com.alibaba.fastjson.JSONObject;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.*;

import static com.alibaba.searchQP.utils.ReadFiles.readTxt;

/**

* Excel text processing: paste the queries into 1.txt and run awk '{a=a?a","$1:$1;b=b?b","$2:$2}END{print a,b}' 1.txt

* Processes the 70 error-correction queries: for each original query from Excel, fetch the corrected query qc and the type and title of the first business card

*/

public class logOnlineReadFiles70 {

private static Logger logger = LoggerFactory.getLogger(logOnlineReadFiles70.class);

public static void main(String[] args) {

// Before each run, delete the log files left over from the previous run

FileWrite.deleteAllLogFile();

FileWrite.deleteAllLogCopyFile();

startSearch();

}

// List for search scenarios (not used by this script)

public static List<String> list = new ArrayList<>();

public static void startSearch() {

long startTime = System.currentTimeMillis();

System.out.println("===The program begins to execute===");

// The query parameters are Chinese, so they are put into a map and URL-encoded when the request is built

// Method 1: query the top 1,000 test queries from ODPS, save them locally, then build the interface requests from them

String filePath = "/Users/lishan/Desktop/code/xx/qp.txt";

System.out.println(filePath);

String[] keywords = readTxt(filePath);

// System.out.println("strings:" + Arrays.toString(keywords));

// String keywords=record.getString("f1");

// Method 2: fetch the top 1,000 queries directly through the ODPS utility class, then build the interface requests

// See logOnlineReadODPS

// String [] keywords = {"Wu Yifan", "Yang Mi", "Tang Yan"};

// String[] keywords = {"Wu Yifan"};

int only1 = 0;

String query1 = "";

int totalCount = 0;

try {

for (int i = 0; i < keywords.length; i++) {

Map<String, String> query = new HashMap<>();

query.put("keyword", keywords[i]);

// If the URL has no common parameters, do not end it with "?"; doGet appends "?" or "&" itself

// Adding cmd=4 to the business interface parameters makes it return the engine fields

String url_pre = "http://xx";

// Send the request: URL (domain + interface name) + request parameters (HashMap)

// String response = HTTPCommonMethod.doGet(url_pre, url_online, map, count);

System.out.println("Data item [" + (i + 1) + "] == " + query);

String response = HTTPCommonMethod.doGet(url_pre, query, i);

JSONObject responseJson = JSONObject.parseObject(response);

int type = responseToParse(i, keywords[i], responseJson);

// Type 1: the error-correction card was recalled

if (type == 1) {

only1++;

query1 = query1 + keywords[i] + ",";

}

// Print the data returned by the interface

// System.out.println("Log [" + i + "]: response from the pre-release (pre) interface == " + response);

totalCount = i + 1;

// System.out.println("totalCount after this iteration == " + totalCount);

}

} catch (Exception e) {

e.printStackTrace();

}

System.out.println("totalCount==" + totalCount);

// Guard against 0/0 (NaN) when no query was processed

float rate3 = totalCount == 0 ? 0f : (float) only1 / totalCount;

System.out.println("------------------------------------------------------------------------------------------------");

System.out.println("------------------------------------------------------------------------------------------------");

System.out.println("only1 --- queries that recalled an error-correction card == [" + only1 + "] of " + totalCount + " in total --- ratio == [" + rate3 + "] --- query1 == [" + query1 + "]");

long endTime = System.currentTimeMillis();

System.out.println("===Program end execution===");

long durationTime = endTime - startTime;

System.out.println("===Program time===[" + durationTime + "] ms");

// System.out.println("===Program time===[" + durationTime / 1000 / 60 + "] minutes");

}

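/**

* Parses one interface response.

* @param count    zero-based index of the query in the input file

* @param query    the original query

* @param response the parsed JSON response (null if the request failed)

* @return 1: error-correction card returned; 0: not returned; 2: empty response or parse error

*/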

public static int responseToParse(int count, String query, JSONObject response) {

try {

// HashMap<Integer, Integer> hm = new HashMap<Integer, Integer>();

boolean qpResult = false;

if (response != null && !response.isEmpty()) {

// Get the JSONObject

// Intent-understanding (QP) algorithm interface: qcGrade tells whether the query was corrected (1 = prompt, 2 = query replaced)

// Business interface: get the title and type of the first business card after the error-correction node

// QP interface

JSONObject data0 = response.getJSONArray("nodes").getJSONObject(0).

getJSONArray("nodes").getJSONObject(0).

getJSONArray("nodes").getJSONObject(0).

getJSONObject("data");

// Error-correction level (qcGrade)

Integer qcGrade = data0.getInteger("qcGrade");

// qc is the corrected query, e.g. keyword = "legend of new white fat man" (typo), qc = "legend of new white lady"

String qc = data0.getString("qc");

// Keyword is the original query (user input), such as keyword = legend of the new white fat man

String keyword = data0.getString("keyword");

// Business interface

JSONObject data1 = response.getJSONArray("nodes").getJSONObject(1).

getJSONArray("nodes").getJSONObject(0).

getJSONArray("nodes").getJSONObject(0).

getJSONObject("data");

String object_title = data1.getJSONObject("action").getJSONObject("report").

getJSONObject("trackInfo").getString("object_title");

Integer docSource = data1.getInteger("docSource");

// Count the query only when both the QP algorithm interface and the business interface meet the condition

// (Integer.valueOf(2).equals(...) and the null checks avoid NullPointerExceptions on missing fields)

if (Integer.valueOf(2).equals(qcGrade) && qc != null && !qc.equals(keyword)) {

if (object_title != null && !object_title.isEmpty() && docSource != null && docSource != 0) {

System.out.println("Log [" + (count + 1) + "]: search query == [" + query + "], corrected query qc == [" + qc + "], " +

"docSource == [" + docSource + "], first card title == [" + object_title + "]");

// Full-record variant: write one originLog line that is later split into fields, written to the database, and recorded as read

// All data

// FileWrite.originLog(FileWrite.rex + query + FileWrite.rex + qc + FileWrite.rex + docSource + "-" + object_title);

// Write each field to its own file: original query, corrected query qc, and docSource-title of the first card

FileWrite.keywordLog(query);

FileWrite.qcLog(qc);

FileWrite.videoTitleLog(docSource + "-" + object_title);

qpResult = true;

} else {

System.err.println("BUG!BUG!BUG! QP corrected the query, but the business interface returned no valid card! Log [" + (count + 1) + "]: " +

"search query == [" + query + "], corrected query qc == [" + qc + "], " +

"docSource == [" + docSource + "], first card title == [" + object_title + "]");

}

}

if (qpResult) {

// It's an error correction word card

return 1;

//

} else {

return 0;

}

} else {

System.err.println("Log [" + (count + 1) + "]: search query == [" + query + "], interface returned null");

}

} catch (Exception e) {

e.printStackTrace();

}

return 2;

}

public static JSONObject jsonObject = new JSONObject();

}

700 queries: category prediction, business code implementation

Algorithm interface - word segmentation, corresponding to query_tagging.tag.word

package com.alibaba.searchQP.utils;

import com.alibaba.fastjson.JSONObject;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.*;

import static com.alibaba.searchQP.utils.ReadFiles.readTxt;

/**

* Excel text processing: paste the queries into 1.txt and run awk '{a=a?a","$1:$1;b=b?b","$2:$2}END{print a,b}' 1.txt

* Processes the 700 queries (error correction, category prediction, rewriting, and qt, i.e. query tagging / word segmentation): for each original query from Excel, fetch the type and title of the first card

* Person cards (-12), SCG broadcasts (-98), banners (large-image promotions, -24), and program big-word cards (-10) return slightly different JSON. Support for these queries has not been built yet due to time constraints, so their extracted results are empty and must be filled in manually

* Run 700 queries directly with this script

*/

public class logOnlineReadFiles700 {

private static Logger logger = LoggerFactory.getLogger(logOnlineReadFiles700.class);

public static void main(String[] args) {

// Before each run, delete the log files left over from the previous run

FileWrite.deleteAllLogFile();

FileWrite.deleteAllLogCopyFile();

startSearch();

}

// List for search scenarios (not used by this script)

public static List<String> list = new ArrayList<>();

public static void startSearch() {

long startTime = System.currentTimeMillis();

System.out.println("===The program begins to execute===");

// The query parameters are Chinese, so they are put into a map and URL-encoded when the request is built

// Method 1: query the top 1,000 test queries from ODPS, save them locally, then build the interface requests from them

String filePath = "/Users/lishan/Desktop/code/xx/qp.txt";

System.out.println(filePath);

String[] keywords = readTxt(filePath);

// System.out.println("strings:" + Arrays.toString(keywords));

// String keywords=record.getString("f1");

// Method 2: fetch the top 1,000 queries directly through the ODPS utility class, then build the interface requests

// See logOnlineReadODPS

// String [] keywords = {"Wu Yifan", "Yang Mi", "Tang Yan"};

// String[] keywords = {"Wu Yifan"};

int only1 = 0;

String query1 = "";

int totalCount = 0;

try {

for (int i = 0; i < keywords.length; i++) {

Map<String, String> query = new HashMap<>();

query.put("keyword", keywords[i]);

// If the URL has no common parameters, do not end it with "?"; doGet appends "?" or "&" itself

// Adding cmd=4 to the business interface parameters makes it return the engine fields

String url_pre = "http://xx";

// Send the request: URL (domain + interface name) + request parameters (HashMap)

// String response = HTTPCommonMethod.doGet(url_pre, url_online, map, count);

System.out.println("Data item [" + (i + 1) + "] == " + query);

String response = HTTPCommonMethod.doGet(url_pre, query, i);

JSONObject responseJson = JSONObject.parseObject(response);

int type = responseToParse(i, keywords[i], responseJson);

// Type 1: a first card with a valid docSource and title was found

if (type == 1) {

only1++;

query1 = query1 + keywords[i] + ",";

}

// Print the data returned by the interface

// System.out.println("Log [" + i + "]: response from the pre-release (pre) interface == " + response);

totalCount = i + 1;

// System.out.println("totalCount after this iteration == " + totalCount);

}

} catch (Exception e) {

e.printStackTrace();

}

System.out.println("totalCount==" + totalCount);

// Guard against 0/0 (NaN) when no query was processed

float rate3 = totalCount == 0 ? 0f : (float) only1 / totalCount;

System.out.println("------------------------------------------------------------------------------------------------");

System.out.println("------------------------------------------------------------------------------------------------");

System.out.println("only1 --- queries whose first card was found == [" + only1 + "] of " + totalCount + " in total --- ratio == [" + rate3 + "] --- query1 == [" + query1 + "]");

long endTime = System.currentTimeMillis();

System.out.println("===Program end execution===");

long durationTime = endTime - startTime;

System.out.println("===Program time===[" + durationTime + "] ms");

// System.out.println("===Program time===[" + durationTime / 1000 / 60 + "] minutes");

}

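/**

* Parses one interface response.

* @param count    zero-based index of the query in the input file

* @param query    the original query

* @param response the parsed JSON response (null if the request failed)

* @return 1: type and title of the first card found; 0: not found or invalid docSource; 2: empty response or parse error; 3: title or docSource is null

*/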

public static int responseToParse(int count, String query, JSONObject response) {

try {

// HashMap<Integer, Integer> hm = new HashMap<Integer, Integer>();

boolean qpResult = false;

if (response != null && !response.isEmpty()) {

// Get the JSONObject

// Intent-understanding (QP) algorithm interface: qcGrade tells whether the query was corrected (1 = prompt, 2 = query replaced)

// Business interface: get the title and type of the first card

// QP interface

JSONObject data0 = response.getJSONArray("nodes").getJSONObject(0).

getJSONArray("nodes").getJSONObject(0).

getJSONArray("nodes").getJSONObject(0).

getJSONObject("data");

// Business interface: with no error-correction node, the first node (index=0) is the business card

String object_title = data0.getJSONObject("action").getJSONObject("report").

getJSONObject("trackInfo").getString("object_title");

Integer docSource = data0.getInteger("docSource");

// Check for null first: calling isEmpty() on a null title would throw a NullPointerException

if (object_title == null) {

System.err.println("Log [" + (count + 1) + "]: original keyword == [" + query + "], " +

"docSource == [" + docSource + "], first card title object_title == [null]");

// Write each field to its own file: original query, then docSource-title of the first card ("null-null" if both are missing)

FileWrite.keywordLog(query);

FileWrite.videoTitleLog(docSource + "-null");

return 3;

}

if (!object_title.isEmpty()) {

if (docSource == null) {

System.err.println("Log [" + (count + 1) + "]: original keyword == [" + query + "], " +

"docSource == [null], first card title object_title == [" + object_title + "]");

FileWrite.keywordLog(query);

FileWrite.videoTitleLog("null-" + object_title);

return 3;

}

if (docSource > 0) {

System.out.println("Log [" + (count + 1) + "]: original keyword == [" + query + "], " +

"docSource == [" + docSource + "], first card title object_title == [" + object_title + "]");

// Full-record variant: one originLog line that is later split into fields for the database

// FileWrite.originLog(FileWrite.rex + query + FileWrite.rex + qc + FileWrite.rex + docSource + "-" + object_title);

// Write each field to its own file: original query, then docSource-title of the first card

FileWrite.keywordLog(query);

FileWrite.videoTitleLog(docSource + "-" + object_title);

qpResult = true;

} else {

System.err.println("BUG!BUG!BUG! Business interface returned an invalid docSource enum value! Log [" + (count + 1) + "]: " +

"original keyword == [" + query + "], " +

"docSource == [" + docSource + "], first card title object_title == [" + object_title + "]");

}

}

if (qpResult) {

// First card found (type 1)

return 1;

//

} else {

return 0;

}

} else {

System.err.println("Log [" + (count + 1) + "]: original keyword == [" + query + "], interface returned null");

}

} catch (Exception e) {

e.printStackTrace();

}

return 2;

}

public static JSONObject jsonObject = new JSONObject();

}

2. Parse the request based on the HttpClient framework

package com.alibaba.searchQP.utils;

import org.apache.commons.httpclient.Header;

import org.apache.commons.httpclient.HttpClient;

import org.apache.commons.httpclient.NameValuePair;

import org.apache.commons.httpclient.methods.GetMethod;

import org.apache.commons.httpclient.util.EncodingUtil;

import java.util.ArrayList;

import java.util.Iterator;

import java.util.List;

import java.util.Map;

public class HTTPCommonMethod {

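// URL of the most recent request, kept for reference

public static String requestURL;

/**

* Sends a GET request; only the parameters that change per request go into params

*

* @param url_pre base URL of the pre-release environment

* @param params  request parameters, URL-encoded as UTF-8

* @param count   zero-based index of the current query, used in log messages

* @return the response body, an empty string on a non-200 status, or null on an exception

*/

public static String doGet(String url_pre, Map<String, String> params, int count) {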

try {

Header header = new Header("Content-type", "application/json");

String response = "";

// HttpClient (Commons HttpClient 3.x) was a subproject of Apache Jakarta Commons that provides a feature-rich client-side HTTP toolkit; projects such as Cactus and HtmlUnit used it.

// Note: Commons HttpClient 3.x has reached end of life and has been superseded by Apache HttpComponents HttpClient.

// Use HttpClient to send requests and receive responses

HttpClient httpClient = new HttpClient();

if (url_pre != null) {

// NameValuePair is a simple name/value pair type; this list holds the request parameters that are encoded into the query string

// getParamsList(url_online, params, count);

// Use the pre-release environment parameters instead of the online ones

List<NameValuePair> qparams_pre = getParamsList_pre(params);

if (qparams_pre != null && qparams_pre.size() > 0) {

String formatParams = EncodingUtil.formUrlEncode(qparams_pre.toArray(new NameValuePair[qparams_pre.size()]),

"utf-8");

url_pre = url_pre.indexOf("?") < 0 ? url_pre + "?" + formatParams : url_pre + "&" + formatParams;

}

requestURL = url_pre;

System.out.println("Log [" + (count + 1) + "]: pre-release (pre) imerge request url_pre == " + url_pre);

GetMethod getMethod = new GetMethod(url_pre);

getMethod.addRequestHeader(header);

/*if (null != headers) {

Iterator var8 = headers.entrySet().iterator();

while (var8.hasNext()) {

Map.Entry<String, String> entry = (Map.Entry)var8.next();

getMethod.addRequestHeader((String)entry.getKey(), (String)entry.getValue());

}

}*/

//System.out.println(getMethod.getRequestHeader("User-Agent"));

int statusCode = httpClient.executeMethod(getMethod);

// If the request fails, the failed return code is printed out

if (statusCode != 200) {

System.out.println("Log [" + (count + 1) + "]: pre-release request failed, status code == " + statusCode);

return response;

}

response = new String(getMethod.getResponseBody(), "utf-8");

}

return response;

} catch (Exception e) {

e.printStackTrace();

}

return null;

}

// Parameter formatting

private static List<NameValuePair> getParamsList_pre(Map<String, String> paramsMap) {

if (paramsMap != null && paramsMap.size() != 0) {

List<NameValuePair> params = new ArrayList<>();

// Each map entry becomes a NameValuePair; the values (Chinese text, '=', '&', and other special characters) are URL-encoded later by EncodingUtil.formUrlEncode in doGet

for (Map.Entry<String, String> entry : paramsMap.entrySet()) {

params.add(new NameValuePair(String.valueOf(entry.getKey()), String.valueOf(entry.getValue())));

}

return params;

} else {

return null;

}

}

}
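Since Commons HttpClient 3.x is no longer maintained, here is a sketch of the same GET flow on the JDK's built-in java.net.http.HttpClient (Java 11+). It is an alternative, not what the scripts above use; the class name, the 5-second timeout, and returning null on a non-200 status are assumptions. URLEncoder produces form encoding (spaces become '+'), which should match what EncodingUtil.formUrlEncode produces.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.time.Duration;
import java.util.Map;
import java.util.StringJoiner;
import java.util.TreeMap;

// Hypothetical JDK-only alternative to HTTPCommonMethod.doGet.
final class HttpGetSketch {
    private HttpGetSketch() {}

    // Appends UTF-8 URL-encoded params, using '?' or '&' the same way doGet does.
    static String buildUrl(String base, Map<String, String> params) {
        if (params == null || params.isEmpty()) return base;
        StringJoiner query = new StringJoiner("&");
        // TreeMap only makes the parameter order deterministic
        for (Map.Entry<String, String> e : new TreeMap<>(params).entrySet()) {
            query.add(URLEncoder.encode(e.getKey(), StandardCharsets.UTF_8) + "="
                    + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8));
        }
        return base + (base.indexOf('?') < 0 ? "?" : "&") + query;
    }

    // Sends a GET with a timeout; returns the body, or null on a non-200 status.
    static String get(HttpClient client, String base, Map<String, String> params) throws Exception {
        HttpRequest request = HttpRequest.newBuilder(URI.create(buildUrl(base, params)))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        HttpResponse<String> response =
                client.send(request, HttpResponse.BodyHandlers.ofString(StandardCharsets.UTF_8));
        return response.statusCode() == 200 ? response.body() : null;
    }
}
```

Create one client with `HttpClient.newHttpClient()` and reuse it for every query rather than building a new client per request.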

3. File reading and parsing

package com.alibaba.searchQP.utils;

import java.io.BufferedReader;

import java.io.File;

import java.io.FileInputStream;

import java.io.InputStreamReader;

import java.util.Arrays;

public class ReadFiles {

public static String[] readTxt(String filePath) {

StringBuilder builder = new StringBuilder();

try {

File file = new File(filePath);

if (file.isFile() && file.exists()) {

InputStreamReader isr = new InputStreamReader(new FileInputStream(file), "utf-8");

BufferedReader br = new BufferedReader(isr);

String lineTxt = null;

int num = 0;

long time1 = System.currentTimeMillis();

while ((lineTxt = br.readLine()) != null) {

System.out.println(lineTxt);

builder.append(lineTxt);

builder.append(",");

num++;

// System.out.println("total" + num + "pieces of data!");

}

//System.out.println("total" + num + "pieces of data!");

long time2 = System.currentTimeMillis();

long time = time2 - time1;

// System.out.println("Total time: " + time + " ms");

br.close();

} else {

System.out.println("file does not exist!");

}

} catch (Exception e) {

System.out.println("File read error: " + e.getMessage());

}

// Note: a query that itself contains a comma is split into two keywords here

String[] strings = builder.toString().split(",");

return strings;

}

public static void main(String[] args) {

String filePath = "/Users/lishan/Desktop/xx.txt";

System.out.println(filePath);

String[] strings = readTxt(filePath);

System.out.println("strings:"+Arrays.toString(strings));

}

}

4. Test results, recorded in Excel (writing directly to Excel is not supported yet due to time constraints, so the results are copied over manually)

only1 --- error-correction cards recalled == [10] of 10 in total --- ratio == [1.0] --- query1 == [seven little heroes, because of how beautiful the kiss of love is, the little Lord walks slowly, the big can of blue, the trigeminal record, the love of rural materials 8, the youth that calls, the biography of Chu Qiao starring in the domestic TV drama Zhao Liyu, the eye of God's war power, and the little sister buried by dry things,]

===Program end execution===

===Program time===[4194] ms

5. Script optimization: write the console log output to files, and delete the old log files before each run

package com.alibaba.searchQP.utils;

import java.io.BufferedWriter;

import java.io.File;

import java.io.FileWriter;

import java.io.IOException;

public class FileWrite {

// Root directory for all log files (origin, error, keyword, qc, and video-title logs)

public static String pathAll = "/Users/lishan/Desktop/xx/HistoryLog";

public static String rex = " ";

public static void main(String[] args) {

String content = "a log will be written to the file";

System.out.println(content + "" + "");

originLog(content + "" + "");

errorLog(content + "" + "");

}

public static void originLog(String content) {

try {

// File.separator is the platform's path separator ('/' on Unix and macOS, '\' on Windows); using it instead of a hard-coded slash keeps the path portable.

File file = new File(pathAll + File.separator + "origin_log.txt");

if (!file.exists()) {

file.createNewFile();

}

FileWriter fileWriter = new FileWriter(file.getAbsoluteFile(), true);

BufferedWriter bw = new BufferedWriter(fileWriter);

bw.write(content + "\r\n");

bw.close();

System.out.println("finish");

} catch (IOException e) {

e.printStackTrace();

}

}

public static void keywordLog(String content) {

try {

// File.separator is the platform's path separator ('/' on Unix and macOS, '\' on Windows); using it instead of a hard-coded slash keeps the path portable.

File file = new File(pathAll + File.separator + "keyword_log.txt");

if (!file.exists()) {

file.createNewFile();

}

FileWriter fileWriter = new FileWriter(file.getAbsoluteFile(), true);

BufferedWriter bw = new BufferedWriter(fileWriter);

bw.write(content + "\r\n");

bw.close();

System.out.println("finish");

} catch (IOException e) {

e.printStackTrace();

}

}

public static void qcLog(String content) {

try {

// File.separator is the platform's path separator ('/' on Unix and macOS, '\' on Windows); using it instead of a hard-coded slash keeps the path portable.

File file = new File(pathAll + File.separator + "qc_log.txt");

if (!file.exists()) {

file.createNewFile();

}

FileWriter fileWriter = new FileWriter(file.getAbsoluteFile(), true);

BufferedWriter bw = new BufferedWriter(fileWriter);

bw.write(content + "\r\n");

bw.close();

System.out.println("finish");

} catch (IOException e) {

e.printStackTrace();

}

}

public static void videoTitleLog(String content) {

try {

// File.separator is the platform's path separator ('/' on Unix and macOS, '\' on Windows); using it instead of a hard-coded slash keeps the path portable.

File file = new File(pathAll + File.separator + "videoTitle_log.txt");

if (!file.exists()) {

file.createNewFile();

}

FileWriter fileWriter = new FileWriter(file.getAbsoluteFile(), true);

BufferedWriter bw = new BufferedWriter(fileWriter);

bw.write(content + "\r\n");

bw.close();

System.out.println("finish");

} catch (IOException e) {

e.printStackTrace();

}

}

public static void errorLog(String content) {

try {

// File.separator is the platform's path separator ('/' on Unix and macOS, '\' on Windows); using it instead of a hard-coded slash keeps the path portable.

File file = new File(pathAll + File.separator + "error_log.txt");

if (!file.exists()) {

file.createNewFile();

}

FileWriter fileWriter = new FileWriter(file.getAbsoluteFile(), true);

BufferedWriter bw = new BufferedWriter(fileWriter);

bw.write("\r\n" + content);

bw.close();

System.out.println("finish");

} catch (IOException e) {

e.printStackTrace();

}

}

public static String getErrorLog(String currentTimeMillis, String clientTimeStamp, int count, String printMsg) {

String errorLog = "";

errorLog = currentTimeMillis + FileWrite.rex + clientTimeStamp + FileWrite.rex + count + FileWrite.rex + printMsg;

return errorLog;

}

// Format an error-log line for the cpw_log_error table when no clientTimeStamp is available

public static String getErrorLogNoclientTimeStamp(String currentTimeMillis, int count, String printMsg, int errorLevel) {

String errorLogNoclientTimeStamp = "";

// errorLogNoclientTimeStamp = currentTimeMillis + FileWrite.rex + clientTimeStamp + FileWrite.rex + count + FileWrite.rex +printMsg;

errorLogNoclientTimeStamp = currentTimeMillis + FileWrite.rex + "Not yet clientTimeStamp" + FileWrite.rex + count + FileWrite.rex + printMsg + FileWrite.rex + errorLevel;

return errorLogNoclientTimeStamp;

}

// Format an error-log line for the cpw_log_error table, including clientTimeStamp

public static String getErrorLogHasclientTimeStamp(String currentTimeMillis, String clientTimeStamp, int count, String printMsg, int errorLevel) {

String getErrorLogHasclientTimeStamp = "";

// errorLogNoclientTimeStamp = currentTimeMillis + FileWrite.rex + clientTimeStamp + FileWrite.rex + count + FileWrite.rex +printMsg;

getErrorLogHasclientTimeStamp = currentTimeMillis + FileWrite.rex + clientTimeStamp + FileWrite.rex + count + FileWrite.rex + printMsg + FileWrite.rex + errorLevel;

return getErrorLogHasclientTimeStamp;

}

// Delete keyword_log.txt, qc_log.txt, and videoTitle_log.txt so that old results are not mixed with new ones

public static void deleteAllLogFile() {

try {

// File.separator is the platform's path separator ('/' on Unix and macOS, '\' on Windows); using it instead of a hard-coded slash keeps the path portable.

// Delete the original keyword log

File fileKeyword = new File(pathAll + File.separator + "keyword_log.txt");

if (fileKeyword.exists()) {

fileKeyword.delete();

}

System.out.println("Original keyword log deleted: keyword_log.txt");

// Delete the corrected-query (qc) log

File fileQc = new File(pathAll + File.separator + "qc_log.txt");

if (fileQc.exists()) {

fileQc.delete();

}

System.out.println("Error correction word log deleted. delete qc_log.txt file success");

// Delete the first-card title log

File fileVideoTitle = new File(pathAll + File.separator + "videoTitle_log.txt");

if (fileVideoTitle.exists()) {

fileVideoTitle.delete();

}

System.out.println("The first card main title log has been deleted. delete videoTitle_log.txt file success");

} catch (Exception e) {

e.printStackTrace();

}

}

// Delete original origin_log.txt to prevent repeated log writing caused by repeated interface requests

public static void deleteOriginLogFirstRunFile() {

try {

// File.separator is the platform's path separator ('/' on Unix and macOS, '\' on Windows); using it instead of a hard-coded slash keeps the path portable.

// Delete original log

File fileOrigin = new File(pathAll + File.separator + "origin_log.txt");

if (fileOrigin.exists()) {

fileOrigin.delete();

}

System.out.println("The original log has been deleted. delete origin_log.txt file success");

} catch (Exception e) {

e.printStackTrace();

}

}

// Delete original error_log.txt to prevent repeated log writing caused by repeated interface requests

public static void deleteErrorLogFirstRunFile() {

try {

// File.separator is the platform's path separator ('/' on Unix and macOS, '\' on Windows); using it instead of a hard-coded slash keeps the path portable.

// Delete error log

File fileError = new File(pathAll + File.separator + "error_log.txt");

if (fileError.exists()) {

fileError.delete();

}

System.out.println("Error parameter assertion log deleted. delete error_log.txt file success");

} catch (Exception e) {

e.printStackTrace();

}

}

// Delete replica origin_log_copy.txt and error_log_copy.txt

public static void deleteAllLogCopyFile() {

try {

// Delete original log copy

// File.separator is the platform's path separator ('/' on Unix and macOS, '\' on Windows); using it instead of a hard-coded slash keeps the path portable.

File fileOrigin = new File(pathAll + File.separator + "origin_log_copy.txt");

if (fileOrigin.exists()) {

fileOrigin.delete();

}

System.out.println("The original log copy has been deleted. delete origin_log_copy.txt file ");

// Delete error log copy

File fileError = new File(pathAll + File.separator + "error_log_copy.txt");

if (fileError.exists()) {

fileError.delete();

}

System.out.println("Error parameter assertion log copy deleted. delete error_log_copy file ");

} catch (Exception e) {

e.printStackTrace();

}

}

}
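The append methods in FileWrite differ only in the file name. A consolidated sketch with java.nio is below; `LogFiles` is a hypothetical name. Unlike FileWrite, Files.write ends each line with the platform line separator rather than a hard-coded \r\n, and IOExceptions are thrown to the caller instead of being printed.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.List;

// Hypothetical replacement for the per-file keywordLog/qcLog/videoTitleLog copies.
final class LogFiles {
    private final Path dir;

    LogFiles(Path dir) {
        this.dir = dir;
    }

    // Appends one line, creating the directory and the file on first use.
    void append(String fileName, String content) throws IOException {
        Files.createDirectories(dir);
        Files.write(dir.resolve(fileName), List.of(content), StandardCharsets.UTF_8,
                StandardOpenOption.CREATE, StandardOpenOption.APPEND);
    }

    // Deletes old output files before a run; missing files are ignored.
    void deleteAll(String... fileNames) throws IOException {
        for (String name : fileNames) {
            Files.deleteIfExists(dir.resolve(name));
        }
    }
}
```

Usage: call `logs.deleteAll("keyword_log.txt", "qc_log.txt", "videoTitle_log.txt")` once before the run, then `logs.append("keyword_log.txt", query)` for each result.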

Finally, copy the required columns from the txt log files and paste them into Excel.
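The manual copy into Excel could be reduced by zipping the one-column log files into a single CSV. This is a sketch, assuming each log file holds one line per matched query in the same order (the scripts write keyword_log.txt and videoTitle_log.txt, plus qc_log.txt in the 70-query script, in the same branch). `ColumnsToCsv` is hypothetical, and the UTF-8 byte-order mark is included because Excel typically relies on it to recognize UTF-8 Chinese text in a CSV.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: merges several one-column log files line by line into one CSV.
final class ColumnsToCsv {
    private ColumnsToCsv() {}

    static void merge(List<Path> columnFiles, Path csvOut) throws IOException {
        List<List<String>> columns = new ArrayList<>();
        int rows = 0;
        for (Path p : columnFiles) {
            List<String> lines = Files.readAllLines(p, StandardCharsets.UTF_8);
            columns.add(lines);
            rows = Math.max(rows, lines.size());
        }
        List<String> out = new ArrayList<>();
        for (int r = 0; r < rows; r++) {
            List<String> cells = new ArrayList<>();
            for (List<String> col : columns) {
                // Shorter files contribute empty cells
                cells.add(csvCell(r < col.size() ? col.get(r) : ""));
            }
            out.add(String.join(",", cells));
        }
        byte[] bom = {(byte) 0xEF, (byte) 0xBB, (byte) 0xBF};
        Files.write(csvOut, bom); // creates or truncates the file
        Files.write(csvOut, out, StandardCharsets.UTF_8, StandardOpenOption.APPEND);
    }

    // Quotes a cell containing a comma, quote, or line break (RFC 4180 style).
    static String csvCell(String s) {
        if (s.contains(",") || s.contains("\"") || s.contains("\n") || s.contains("\r")) {
            return "\"" + s.replace("\"", "\"\"") + "\"";
        }
        return s;
    }
}
```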

To be continued