Brief introduction

PV (page views) is the number of pages viewed. It is usually an important indicator for an online news channel, a website, or even a single news article. To some extent, PV has become the most important metric investors use to judge the performance of a commercial website. PV counts how many pages visitors view on a site within 24 hours, with every page load counting once.
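To make the metric concrete, PV can be counted from the web server's access log. One million PV per day averages 1,000,000 / 86,400 s ≈ 11.6 page views per second, and peaks are typically well above that average. The sketch below assumes nginx's default `combined` log format. It treats requests for .css, .js and image files as non-page requests. Both that filter and the helper name `pv_per_day` are assumptions for illustration, not part of this case.

```shell
#!/bin/bash
# Sketch: count page views (PV) per day from an nginx access log.
# Assumes the default "combined" log format, where field 4 looks like
# "[10/Oct/2023:13:55:36" and field 7 is the request path.
pv_per_day() {
    awk '
        # skip static assets (assumed list) so only page requests are counted
        $7 ~ /\.(css|js|png|jpe?g|gif|ico)([?]|$)/ { next }
        # characters 2..12 of field 4 are the date, e.g. 10/Oct/2023
        { day[substr($4, 2, 11)]++ }
        END { for (d in day) print d, day[d] }
    ' "$@" | sort
}
```

For example, `pv_per_day /var/log/nginx/access.log` prints one `day count` line per date. With no file argument it reads the log from stdin.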

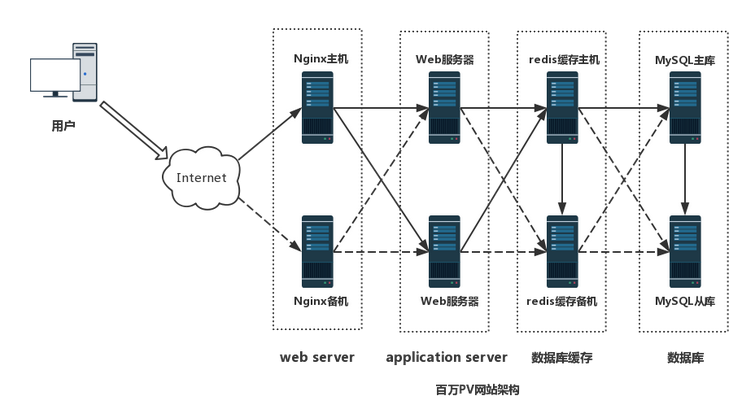

Case description

This case is built in four layers:

- a front-end reverse proxy layer, which uses a master-slave pair;
- a web layer, which uses a cluster;
- a database cache layer, which uses master-slave replication;
- a database layer, which also uses master-slave replication.

Case environment

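Master: 192.168.177.145 (centos7-1)

Slave: 192.168.177.135 (centos7-2)

Node 1: 192.168.177.132 (centos7-3)

Node 2: 192.168.177.133 (centos7-4)

The master and slave run Nginx + Keepalived, MariaDB and Redis. Node 1 and node 2 run Tomcat. The virtual IP is 192.168.177.188.

Nginx + Keepalived (master and slave servers)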

Add the nginx repository with its rpm package

#rpm -ivh http://nginx.org/packages/centos/7/noarch/RPMS/nginx-release-centos-7-0.el7.ngx.noarch.rpm

Install both packages: keepalived comes from the CentOS default repository, and nginx comes from the repository added above.

#yum install -y keepalived nginx

#vim /etc/keepalived/keepalived.conf //add the vrrp_script and track_script blocks, set router_id, and set the virtual IP

! Configuration File for keepalived

vrrp_script nginx {

script "/opt/shell/nginx.sh"

interval 2

} # added

global_defs {

router_id NGINX_HA # modified

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

nginx

}

virtual_ipaddress {

192.168.177.188 # virtual IP

192.168.200.188

}

}

#mkdir /opt/shell

#cd /opt/shell

#vim /opt/shell/nginx.sh

#!/bin/bash

k=`ps -ef | grep keepalived | grep -v grep | wc -l`

if [ $k -gt 0 ];then

/bin/systemctl start nginx.service

else

/bin/systemctl stop nginx.service

fi

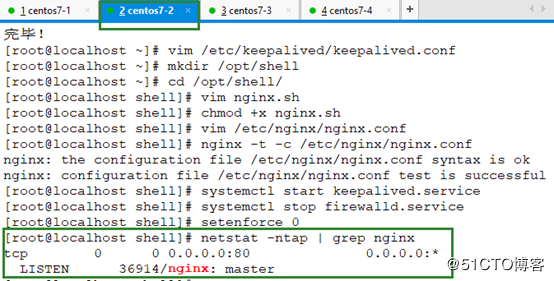

#chmod +x /opt/shell/nginx.sh //make the script executable

The slave server is configured the same way as the master, except for the router_id, state and priority settings.

#yum install -y keepalived nginx

#vim /etc/keepalived/keepalived.conf //edit as on the master, changing only the node-specific settings

! Configuration File for keepalived

vrrp_script nginx {

script "/opt/shell/nginx.sh"

interval 2

} # added

global_defs {

router_id NGINX_HB # modified

}

vrrp_instance VI_1 {

state BACKUP # modified

interface ens33

virtual_router_id 51 # must match the master

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

nginx

}

virtual_ipaddress {

192.168.177.188 # virtual IP

192.168.200.188

}

}

#mkdir /opt/shell

#cd /opt/shell

#vim /opt/shell/nginx.sh

#!/bin/bash

k=`ps -ef | grep keepalived | grep -v grep | wc -l`

if [ $k -gt 0 ];then

/bin/systemctl start nginx.service

else

/bin/systemctl stop nginx.service

fi

#chmod +x /opt/shell/nginx.sh //make the script executable
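Keepalived only fails over cleanly if the two nodes form one VRRP group. The backup must use:

- the same `virtual_router_id` as the master;
- `state BACKUP`;
- a lower `priority`.

If the IDs differ, each node elects itself MASTER and both hold the virtual IP. The following is a minimal sketch of a consistency check. It assumes each file contains a single `vrrp_instance` with one `keyword value` pair per line. `vrrp_value` and `check_vrrp_pair` are hypothetical helper names.

```shell
#!/bin/bash
# Sketch: sanity-check the VRRP settings of a master/backup keepalived pair.
# Assumes one vrrp_instance per file with "keyword value" on its own line.

# Print the first value of keyword $1 found in config file $2.
vrrp_value() {
    awk -v key="$1" '$1 == key { print $2; exit }' "$2"
}

check_vrrp_pair() {
    local master="$1" backup="$2"
    local id_m id_b pri_m pri_b state_b v
    id_m=$(vrrp_value virtual_router_id "$master")
    id_b=$(vrrp_value virtual_router_id "$backup")
    pri_m=$(vrrp_value priority "$master")
    pri_b=$(vrrp_value priority "$backup")
    state_b=$(vrrp_value state "$backup")

    # Every setting must be present before the comparisons make sense.
    for v in "$id_m" "$id_b" "$pri_m" "$pri_b" "$state_b"; do
        [ -n "$v" ] || { echo "missing setting"; return 1; }
    done
    # Both nodes must advertise the same VRRP group, or each becomes MASTER.
    if [ "$id_m" != "$id_b" ]; then
        echo "virtual_router_id differs: $id_m vs $id_b"; return 1
    fi
    if [ "$state_b" != "BACKUP" ]; then
        echo "backup state is $state_b, expected BACKUP"; return 1
    fi
    # The node with the higher priority wins the election and holds the VIP.
    if [ "$pri_b" -ge "$pri_m" ]; then
        echo "backup priority $pri_b is not below master priority $pri_m"; return 1
    fi
    echo "ok"
}
```

Copy the slave's file to the master, for example as `/tmp/backup.conf` (a hypothetical path). Then run `check_vrrp_pair /etc/keepalived/keepalived.conf /tmp/backup.conf`. It prints `ok` or the first problem it finds.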

Configure nginx front-end load balancing (master and slave)

#vim /etc/nginx/nginx.conf //in the http block, below the gzip on; line, add:

upstream tomcat_pool {

server 192.168.177.132:8080;

server 192.168.177.133:8080;

ip_hash; #session persistence: a client IP always reaches the same Tomcat node; without it, logins through the VIP are lost

}

server {

listen 80;

server_name 192.168.177.188; #virtual IP

location / {

proxy_pass http://tomcat_pool;

proxy_set_header X-Real-IP $remote_addr;

}

}
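The `ip_hash` directive gives session stickiness because nginx derives the upstream server from the client address. Per the nginx documentation, the first three octets of an IPv4 address are used as the hashing key. The sketch below only illustrates that idea with a deliberately simple stand-in hash: the three octets taken as one number, modulo the number of backends. It is NOT nginx's actual algorithm, and `pick_backend` is a hypothetical name.

```shell
#!/bin/bash
# Simplified illustration of ip_hash-style stickiness. This is NOT nginx's
# real hash function; it only mimics the fact that nginx keys IPv4 clients
# on the first three octets, so one client (and its whole /24) always maps
# to the same backend while the backend list is unchanged.
pick_backend() {
    local ip="$1"; shift
    local backends=("$@")
    local a b c rest
    # Split the dotted address; the fourth octet is ignored, as in ip_hash.
    IFS=. read -r a b c rest <<< "$ip"
    local index=$(( (a * 65536 + b * 256 + c) % ${#backends[@]} ))
    echo "${backends[$index]}"
}

pick_backend 192.168.177.1 192.168.177.132:8080 192.168.177.133:8080
# prints 192.168.177.133:8080
```

This has a practical consequence for the tests in this case. Requests from one browser keep landing on the same Tomcat node, so to see the other node answer through the VIP you have to stop the first one.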

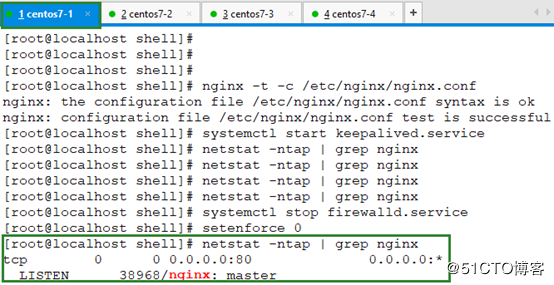

#nginx -t -c /etc/nginx/nginx.conf //test the configuration file syntax

#systemctl start keepalived.service //the check script then starts nginx, which may take a moment

Install Tomcat on both nodes

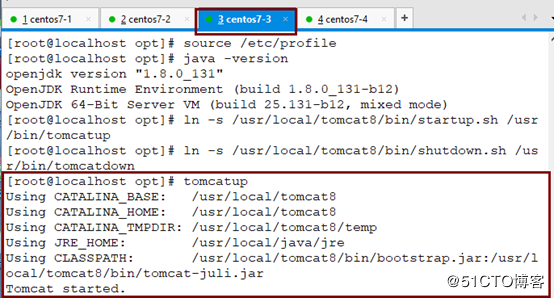

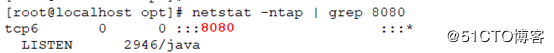

#tar xf apache-tomcat-8.5.23.tar.gz

#tar xf jdk-8u144-linux-x64.tar.gz

#mv apache-tomcat-8.5.23/ /usr/local/tomcat8

#mv jdk1.8.0_144/ /usr/local/java

#vim /etc/profile //append:

export JAVA_HOME=/usr/local/java

export JRE_HOME=/usr/local/java/jre

export PATH=$PATH:/usr/local/java/bin

export CLASSPATH=./:/usr/local/java/lib:/usr/local/java/jre/lib

#source /etc/profile

#java -version

One million PV of large website architecture

#ln -s /usr/local/tomcat8/bin/startup.sh /usr/bin/tomcatup

#ln -s /usr/local/tomcat8/bin/shutdown.sh /usr/bin/tomcatdown

#tomcatup //start the service

#netstat -anpt | grep 8080
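Each service in this case is verified with `netstat -anpt | grep PORT`. A slightly stricter check matches only sockets in the LISTEN state on exactly that port. This is a sketch assuming the standard `netstat -anpt` column layout, with the local address in column 4 and the state in column 6. `is_listening` is a hypothetical helper.

```shell
#!/bin/bash
# Sketch: succeed if a TCP port is in LISTEN state, given "netstat -anpt"
# output on stdin (columns: Proto Recv-Q Send-Q Local-Address
# Foreign-Address State PID/Program).
is_listening() {
    awk -v port="$1" '
        # ":" before the port keeps 8080 from matching :18080
        $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit !found }'
}
```

Usage: `netstat -anpt | is_listening 8080 && echo tomcat up`. The same call works for 3306 (MariaDB), 6379 (Redis) and 26379 (Sentinel).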

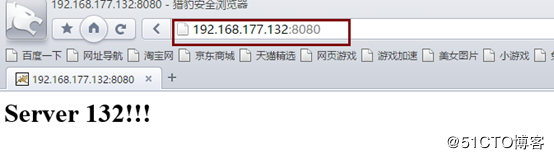

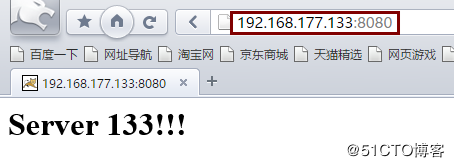

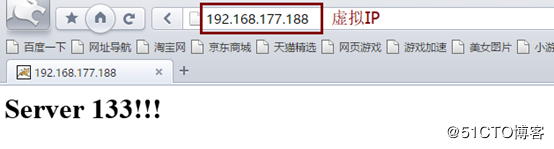

#vim /usr/local/tomcat8/webapps/ROOT/index.jsp //replace the default page content with a marker for this node, for example on node 1:

<h1>Server 132!!!</h1>

http://192.168.177.132:8080 //check that each node's test page displays correctly

http://192.168.177.133:8080/

http://192.168.177.188/ //enter the scheduler address (the virtual IP) to test scheduling across the two nodes

Stop Tomcat on 192.168.177.132 (tomcatdown) and check that http://192.168.177.188/ now displays <h1>Server 133!!!</h1>
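To see which node served each request through the VIP, you can fetch the page several times and count the node markers. This is a minimal sketch, assuming each node's `index.jsp` contains `<h1>Server NNN!!!</h1>` as above. `tally_backends` is a hypothetical helper.

```shell
#!/bin/bash
# Sketch: count which Tomcat node answered, from concatenated page bodies
# on stdin. Assumes each node's page contains "<h1>Server NNN!!!</h1>".
# Output: one "NNN: count" line per node.
tally_backends() {
    grep -oE 'Server [0-9]+' | sort | uniq -c | awk '{ print $3 ": " $1 }'
}
```

Usage: `for i in $(seq 10); do curl -s http://192.168.177.188/; done | tally_backends`. Because of `ip_hash`, all ten requests from one client should be counted under a single node. After `tomcatdown` on that node, they should move to the other.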

#vim /usr/local/tomcat8/conf/server.xml //go to the end of the file and, around line 148 under the <Host name=...> element, add:

<Context path="" docBase="SLSaleSystem" reloadable="true" debug="0"></Context>

docBase specifies the application directory. debug sets the amount of debug logging; 0 produces the least.

MySQL (MariaDB) installation (master and slave)

#yum install -y mariadb-server mariadb

#systemctl start mariadb.service

#systemctl enable mariadb.service //start at boot

#netstat -anpt | grep 3306

#mysql_secure_installation //basic security settings

#mysql -u root -p

Import database

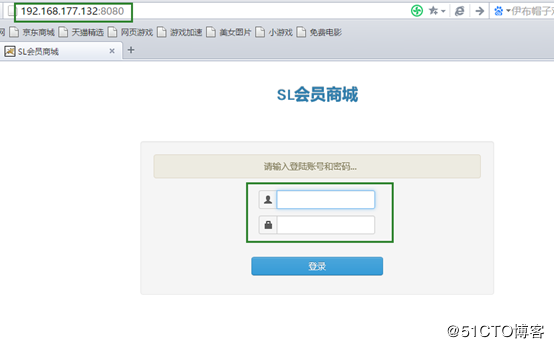

First mount the share containing the installation files, then import the database:

#mysql -u root -p < slsaledb-2014-4-10.sql

#mysql -u root -p

show databases;

GRANT all ON slsaledb.* TO 'root'@'%' IDENTIFIED BY 'abc123';

flush privileges;

Do the following on both Tomcat nodes:

#tar xf SLSaleSystem.tar.gz -C /usr/local/tomcat8/webapps/

#cd /usr/local/tomcat8/webapps/SLSaleSystem/WEB-INF/classes

#vim jdbc.properties //set the database address to the VRRP virtual IP, the authorized user name to root and the password to abc123

Website testing

http://192.168.177.132:8080/ //default user name admin, password 123456

http://192.168.177.133:8080/

http://192.168.177.188 //log in through the virtual address, then shut down the master and test the login again
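Editing `jdbc.properties` by hand on both nodes is easy to get wrong. Assume the file holds a MySQL URL of the form `jdbc:mysql://HOST:PORT/DB...`. The property key name is not shown in this case and does not matter to the substitution. Under that assumption, a sed one-liner can repoint the URL at the virtual IP. This sketch assumes GNU sed (the CentOS default), and `set_jdbc_host` is a hypothetical name. The user name and password still need to be set by hand.

```shell
#!/bin/bash
# Sketch: point every jdbc:mysql:// URL in a properties file at a new host.
# Assumes GNU sed (-i without a suffix argument) and URLs of the form
# jdbc:mysql://HOST:PORT/DB...; the host argument must not contain # or &.
set_jdbc_host() {
    local file="$1" host="$2"
    # \1 keeps the "jdbc:mysql://" prefix; the old host is everything up to :, / or ?
    sed -i -E "s#(jdbc:mysql://)[^:/?]+#\\1${host}#g" "$file"
}
```

Usage: `set_jdbc_host /usr/local/tomcat8/webapps/SLSaleSystem/WEB-INF/classes/jdbc.properties 192.168.177.188`.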

Redis master-slave setup (master and slave)

#yum install -y epel-release

#yum install redis -y

#vim /etc/redis.conf

bind 0.0.0.0

#systemctl start redis.service

#netstat -anpt | grep 6379

#redis-cli -h 192.168.177.145 -p 6379 //test the connection

192.168.177.145:6379> set name test //set the key name to the value test

192.168.177.145:6379> get name //read the value back

On the slave server, edit /etc/redis.conf and add at line 266:

slaveof 192.168.177.145 6379 //use the master's real IP, not the virtual IP

#systemctl restart redis.service

#redis-cli -h 192.168.177.135 -p 6379 //log in to the slave and read the value; getting "test" back means master-slave replication works

192.168.177.135:6379> get name

"test"
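Reading back a key proves that data replicated once. `info replication` on the slave also shows whether the link is still healthy. This is a minimal sketch, assuming Redis INFO's CRLF-terminated `field:value` lines. It also assumes INFO reports `role:slave` and `master_link_status:up` on a healthy replica. `info_field` and `replica_ok` are hypothetical helpers.

```shell
#!/bin/bash
# Sketch: check "redis-cli info replication" output read on stdin.
# Redis INFO lines look like "role:slave" and end in CRLF.

# Print the value of the first INFO field named $1.
info_field() {
    tr -d '\r' | awk -F: -v k="$1" '
        $1 == k && !found { print substr($0, length(k) + 2); found = 1 }'
}

# Succeed only if this server is a replica whose link to the master is up.
replica_ok() {
    local info role link
    info=$(cat)
    role=$(printf '%s\n' "$info" | info_field role)
    link=$(printf '%s\n' "$info" | info_field master_link_status)
    [ "$role" = "slave" ] && [ "$link" = "up" ]
}
```

Usage: `redis-cli -h 192.168.177.135 -p 6379 info replication | replica_ok && echo replication up`.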


Configure the Redis connection parameters in the mall project (the numbers below are line numbers in its configuration file):

38 <!-- redis configuration start -->

47 <constructor-arg value="192.168.177.188"/>

48 <constructor-arg value="6379"/>

Test the cache effect

#redis-cli -h 192.168.177.188 -p 6379

192.168.177.188:6379> info

keyspace_hits:1 and keyspace_misses:2 //watch these two counters: cache hits and cache misses

Log in to the mall and repeatedly open pages whose operations query the database. Then run info again and compare keyspace_hits and keyspace_misses. A growing keyspace_hits count shows that requests are being served from the cache.
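The two counters combine into a hit ratio, hits / (hits + misses). With the sample values above that is 1 / (1 + 2) ≈ 0.33, and it should rise as you keep opening pages that are cached. This sketch assumes the `keyspace_hits:N` and `keyspace_misses:N` lines from `info stats`. `hit_ratio` is a hypothetical helper.

```shell
#!/bin/bash
# Sketch: compute the Redis cache hit ratio hits / (hits + misses)
# from "redis-cli info stats" output read on stdin (CRLF line endings).
hit_ratio() {
    tr -d '\r' | awk -F: '
        $1 == "keyspace_hits"   { h = $2 }
        $1 == "keyspace_misses" { m = $2 }
        END {
            # no lookups yet: a ratio is undefined
            if (h + m == 0) print "n/a"
            else printf "%.2f\n", h / (h + m)
        }'
}
```

Usage: `redis-cli -h 192.168.177.188 -p 6379 info stats | hit_ratio`.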

## Configure automatic Redis master-slave failover (Sentinel), on the master server only

#redis-cli -h 192.168.177.145 info replication //show the role of the current server

#vim /etc/redis-sentinel.conf

Line 17: protected-mode no

Line 68: sentinel monitor mymaster 192.168.177.145 6379 1 //the last value, 1, is the quorum: the number of Sentinels that must agree the master is down before a failover starts

Line 98: sentinel down-after-milliseconds mymaster 3000 //how long, in milliseconds, the master must be unreachable before it is considered down

#service redis-sentinel start //start Sentinel

#netstat -anpt | grep 26379

#redis-cli -h 192.168.177.145 -p 26379 info sentinel //view the Sentinel status
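To confirm which server Sentinel currently treats as master, Redis offers `redis-cli -h 192.168.177.145 -p 26379 sentinel get-master-addr-by-name mymaster`. The same information is in the `masterN:` line of `info sentinel`, for example `master0:name=mymaster,status=ok,address=192.168.177.145:6379,...`. The sketch below extracts it, assuming that line shape. `sentinel_master_addr` is a hypothetical helper.

```shell
#!/bin/bash
# Sketch: print the master address Sentinel reports for master name $1,
# reading "redis-cli -p 26379 info sentinel" output on stdin.
# Expected line shape: master0:name=mymaster,status=ok,address=IP:PORT,...
sentinel_master_addr() {
    tr -d '\r' | awk -v name="$1" '
        /^master[0-9]+:/ && index($0, ":name=" name ",") {
            # split the comma-separated key=value list after "masterN:"
            n = split(substr($0, index($0, ":") + 1), kv, ",")
            for (i = 1; i <= n; i++)
                if (kv[i] ~ /^address=/) print substr(kv[i], 9)
        }'
}
```

Suppose you stop Redis on 192.168.177.145 and wait for the 3000 ms down-after period and the failover to pass. Running `redis-cli -h 192.168.177.145 -p 26379 info sentinel | sentinel_master_addr mymaster` again should then report 192.168.177.135:6379.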

## MySQL master-slave replication

MySQL master server configuration

#vim /etc/my.cnf //add under [mysqld]:

binlog-ignore-db=mysql

binlog-ignore-db=information_schema

character_set_server=utf8

log_bin=mysql_bin

server_id=1

log_slave_updates=true

sync_binlog=1

#systemctl restart mariadb

#netstat -anpt | grep 3306

#mysql -u root -p

show master status; //note the File and Position values

grant replication slave on *.* to 'rep'@'192.168.177.%' identified by '123456';

flush privileges;
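The file name and position recorded from `show master status` are exactly what the slave's `change master to` statement needs. This sketch builds the statement from the master's output. It assumes `mysql -N -B` prints one tab-separated row (File, Position, Binlog_Do_DB, Binlog_Ignore_DB). It reuses the `rep` / `123456` account granted above. `change_master_sql` is a hypothetical helper.

```shell
#!/bin/bash
# Sketch: turn the master's binlog coordinates into the slave's
# "change master to" statement. Reads one tab-separated row of
# "show master status" on stdin, as printed by
#   mysql -u root -p -N -B -e 'show master status'
# The rep/123456 account is the replication user granted on the master.
change_master_sql() {
    local host="$1" file pos rest
    IFS=$'\t' read -r file pos rest
    printf "change master to master_host='%s',master_user='rep',master_password='123456',master_log_file='%s',master_log_pos=%s;\n" \
        "$host" "$file" "$pos"
}
```

On the master, run `mysql -u root -p -N -B -e 'show master status' | change_master_sql 192.168.177.145`. Then paste the printed statement into the slave's mysql prompt.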


MySQL slave server configuration

#vim /etc/my.cnf //add under [mysqld]:

binlog-ignore-db=mysql

binlog-ignore-db=information_schema

character_set_server=utf8

log_bin=mysql_bin

server_id=2

log_slave_updates=true

sync_binlog=1

#systemctl restart mariadb

#netstat -anpt | grep 3306

#mysql -u root -p

change master to master_host='192.168.177.145',master_user='rep',master_password='123456',master_log_file='mysql_bin.000001',master_log_pos=245;

start slave;

show slave status\G

Both of these must be Yes:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes
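Checking the two `Yes` values by eye is fine once, but for repeated checks (after a reboot, say) a script can test them. This is a minimal sketch, assuming the vertical `\G` output format, where each field appears as `Name: value`. `slave_ok` is a hypothetical helper.

```shell
#!/bin/bash
# Sketch: succeed only if both replication threads report "Yes" in the
# vertical output of "show slave status\G" read on stdin.
slave_ok() {
    awk -F': ' '
        # anchored with $ so Slave_SQL_Running_State is not matched
        $1 ~ /Slave_IO_Running$/  { io = $2 }
        $1 ~ /Slave_SQL_Running$/ { sql = $2 }
        END { exit !(io == "Yes" && sql == "Yes") }'
}
```

Usage: `mysql -u root -p -e 'show slave status\G' | slave_ok && echo replication OK`.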
