Template matching method

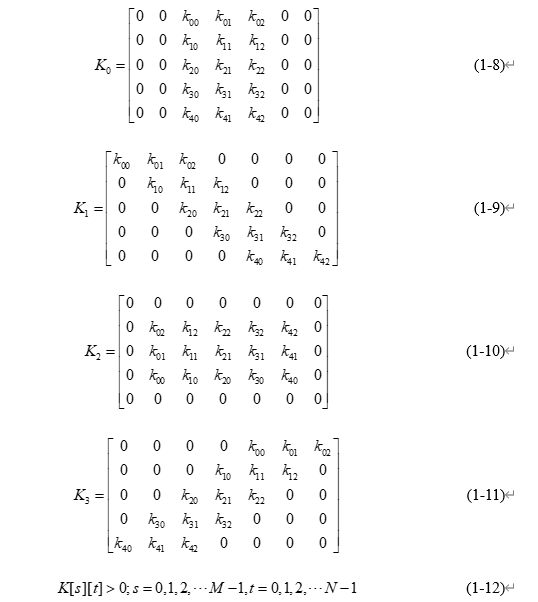

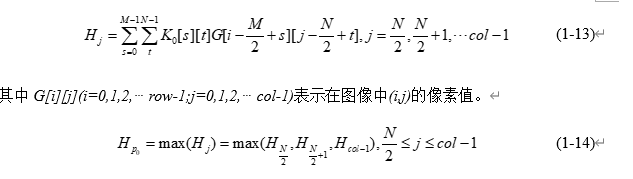

The directional template method, proposed by Hu Bin et al., detects the center of a structured-light stripe using templates of variable direction. It is an improvement on the gray center-of-gravity (gray centroid) method [5]. When line-structured light is projected onto a rough object surface, the light stripe is offset and deformed. When the accuracy requirement is not very high, the offset can be approximated by four directions: horizontal, vertical, 45° to the left and 45° to the right. One template is constructed for each of these four offset directions (see equations 1-8, 1-9, 1-10, 1-11 and 1-2 for templates in four directions). The pixel blocks in each row of the stripe cross-section are convolved with the four templates in turn, and the center of the pixel block with the largest response value is taken as the stripe center point for that row. Let the image have row rows and col columns, and let the template slide along row i of the image; at row i and column j, each template produces one response value.
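The template step described above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the published implementation: it assumes 13 x 13 templates containing a one-pixel-wide line, and `make_templates` and `best_direction` are hypothetical helper names introduced only for this example.

```python
import numpy as np

def make_templates(size=13):
    """Four line templates: vertical, horizontal and the two diagonals."""
    c = size // 2
    vertical = np.zeros((size, size), dtype=np.int64)
    vertical[:, c] = 1
    horizontal = vertical.T.copy()
    diag = np.eye(size, dtype=np.int64)      # top-left to bottom-right
    anti = np.fliplr(diag).copy()            # top-right to bottom-left
    return [vertical, horizontal, diag, anti]

def best_direction(image, u, v, templates):
    """Return (index, responses) of the strongest template at pixel (u, v).

    Assumes (u, v) lies at least size // 2 pixels away from every border.
    """
    half = templates[0].shape[0] // 2
    window = image[u - half:u + half + 1, v - half:v + half + 1].astype(np.int64)
    # Response = sum of the window pixels selected by each template
    responses = np.array([(window * t).sum() for t in templates])
    return int(np.argmax(responses)), responses

# Synthetic 21 x 21 image with a one-pixel-wide vertical stripe at column 10
img = np.zeros((21, 21), dtype=np.uint8)
img[:, 10] = 200
k, resp = best_direction(img, 10, 10, make_templates())
print(k, resp.tolist())  # 0 [2600, 200, 200, 200]
```

On this synthetic stripe the vertical template collects all 13 stripe pixels, while the other three only touch the center pixel, so index 0 wins.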

The templates $T_0$, $T_1$, $T_2$ and $T_3$ have corresponding response values $H_0$, $H_1$, $H_2$ and $H_3$. If $H_p = \max\{H_{p0}, H_{p1}, H_{p2}, H_{p3}\}$, the center of the laser stripe on row i is at point p. The directional template method extracts the center line of the light stripe while resisting interference from white noise. To a certain extent it can also repair and connect broken lines. Its weakness is the small number of template directions: on a solid object with complex surface texture, the stripe may shift in more directions than the templates cover and may also show height offsets. For measurements with higher accuracy requirements, offset templates in only four directions no longer meet the accuracy needed in practice, while adding templates in more directions increases the computation time and cost and lowers processing efficiency.
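The selection rule above (take the point on row i with the largest response over all templates) might look like the following sketch. It assumes small 5 x 5 templates to keep the example readable, and `row_center` is a hypothetical helper name, not part of the original method.

```python
import numpy as np

def row_center(image, i, templates):
    """Column on row i whose window gives the largest template response.

    Returns (column, template_index, response). Columns closer than
    size // 2 to the left or right border are skipped, and row i must be
    at least size // 2 pixels from the top and bottom borders.
    """
    size = templates[0].shape[0]
    half = size // 2
    img = image.astype(np.int64)
    best = (None, None, -1)
    for j in range(half, img.shape[1] - half):
        window = img[i - half:i + half + 1, j - half:j + half + 1]
        responses = [int((window * t).sum()) for t in templates]
        k = int(np.argmax(responses))
        if responses[k] > best[2]:
            best = (j, k, responses[k])
    return best

size = 5
vertical = np.zeros((size, size), dtype=np.int64)
vertical[:, size // 2] = 1
templates = [vertical, vertical.T, np.eye(size, dtype=np.int64),
             np.fliplr(np.eye(size, dtype=np.int64))]

# Stripe running along the main diagonal of an 11 x 11 image
image = np.zeros((11, 11), dtype=np.uint8)
image[np.arange(11), np.arange(11)] = 255
print(row_center(image, 5, templates))  # (5, 2, 1275)
```

The cost of this search grows linearly with the number of templates, which is the trade-off the paragraph above points out: every extra direction adds one more size x size multiply-and-sum per candidate pixel.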

Python code

# Direction template method
# author luoye 2022/1/21
# The matching effect of direction template is not good
import os

import cv2
import numpy as np
import tqdm
from scipy import ndimage
from skimage import morphology

def imgConvolve(image, kernel):
    '''
    :param image: Picture matrix (2-D)
    :param kernel: Filter window (odd height and width)
    :return: Convolved matrix
    '''
    img_h = int(image.shape[0])
    img_w = int(image.shape[1])
    kernel_h = int(kernel.shape[0])
    kernel_w = int(kernel.shape[1])
    # Zero padding so that the output keeps the input size
    padding_h = (kernel_h - 1) // 2
    padding_w = (kernel_w - 1) // 2
    convolve_h = img_h + 2 * padding_h
    convolve_w = img_w + 2 * padding_w
    # Allocate space
    img_padding = np.zeros((convolve_h, convolve_w))
    # Copy the picture into the center of the padded array
    img_padding[padding_h:padding_h + img_h, padding_w:padding_w + img_w] = image[:, :]
    # Convolution result
    image_convolve = np.zeros(image.shape)
    # Slide the window; the kernel is not flipped, so this is a correlation
    for i in range(padding_h, padding_h + img_h):
        for j in range(padding_w, padding_w + img_w):
            image_convolve[i - padding_h][j - padding_w] = int(
                np.sum(img_padding[i - padding_h:i + padding_h + 1, j - padding_w:j + padding_w + 1] * kernel))
    return image_convolve

def imgGaussian(sigma):
    '''
    :param sigma: σ, the standard deviation (integer); the template is (2σ+1) x (2σ+1)
    :return: Template of Gaussian filter (not normalized)
    '''
    img_h = img_w = 2 * sigma + 1
    gaussian_mat = np.zeros((img_h, img_w))
    for x in range(-sigma, sigma + 1):
        for y in range(-sigma, sigma + 1):
            gaussian_mat[x + sigma][y + sigma] = np.exp(-0.5 * (x ** 2 + y ** 2) / (sigma ** 2))
    return gaussian_mat

def imgAverageFilter(image, kernel):
    '''
    :param image: Picture matrix
    :param kernel: Filter window
    :return: Matrix after mean filtering
    '''
    return imgConvolve(image, kernel) * (1.0 / kernel.size)

# 13 x 13 directional templates, each a one-pixel-wide line of ones
T1 = np.zeros((13, 13), dtype=int)
T1[:, 6] = 1                  # vertical line through the center column
T2 = T1.T.copy()              # horizontal line through the center row
T3 = np.eye(13, dtype=int)    # diagonal from top-left to bottom-right
T4 = np.fliplr(T3).copy()     # diagonal from top-right to bottom-left

def DTM(img, thresh):
    # cv2.imread returns BGR channel order
    image = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Filter noise: zero every pixel darker than thresh
    image[image < thresh] = 0
    # Thresholded grayscale image, used later as the centroid weights
    image0 = image.copy()
    # Gaussian filtering, done in float so the unnormalized kernel cannot overflow uint8
    image = ndimage.convolve(image.astype(np.float32), imgGaussian(5), mode='nearest')
    # Log compression back to uint8
    image = np.uint8(np.log(1 + image))
    # Otsu threshold
    ret, image = cv2.threshold(image, 0, 255, cv2.THRESH_OTSU)
    if ret:
        image = image / 255.
    kernel = np.ones((3, 3), np.uint8)
    image = cv2.dilate(image, kernel, iterations=1)
    # Closing operation
    kernel1 = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (5, 5))
    image = cv2.morphologyEx(image, cv2.MORPH_CLOSE, kernel1)
    # Thin the stripe to a one-pixel skeleton, then keep the gray values on it
    image = morphology.skeletonize(image > 0)
    image = np.multiply(image0, image)
    row, col = image.shape
    templates = (T1, T2, T3, T4)
    CenterPoint = []
    image1 = np.array(image, np.uint8)
    # Float weights avoid uint8 overflow when multiplying by pixel coordinates
    gray = image0.astype(np.float64)
    # 11 sample offsets centered on the current pixel
    k = np.arange(-5, 6)
    for u in range(7, row - 6):
        for v in range(7, col - 6):
            if not image1[u][v]:
                continue
            # Response of each 13 x 13 template on the window centered at (u, v)
            window = image1[u - 6:u + 7, v - 6:v + 7].astype(np.int64)
            data = np.array([np.sum(window * T) for T in templates])
            h = np.argmax(data)
            if h == 0:
                # T1 strongest: sample along column v
                rows, cols = u + k, np.full(k.size, v)
            elif h == 1:
                # T2 strongest: sample along row u
                rows, cols = np.full(k.size, u), v + k
            elif h == 2:
                # T3 strongest: sample from bottom-left to top-right
                rows, cols = u - k, v + k
            else:
                # T4 strongest: sample from top-left to bottom-right
                rows, cols = u + k, v + k
            weights = gray[rows, cols]
            total = weights.sum()
            if total != 0:
                # Gray centroid of the samples, stored as [x, y] = [column, row]
                center_x = np.sum(weights * cols) / total
                center_y = np.sum(weights * rows) / total
                CenterPoint.append([int(center_x), int(center_y)])
    newimage = np.zeros_like(image)
    for point in CenterPoint:
        # Mark the center in red on the input image and in white on the line mask
        img[point[1], point[0], :] = (0, 0, 255)
        newimage[point[1], point[0]] = 255
    return img, newimage

if __name__ == "__main__":
    import time

    image_path = "./Jay/images/"
    save_path = "./Jay/dmt/"
    os.makedirs(save_path, exist_ok=True)
    sum_time = 0
    count = 0
    for img in tqdm.tqdm(os.listdir(image_path)):
        image = cv2.imread(os.path.join(image_path, img))
        if image is None:
            # Skip files that OpenCV cannot decode
            continue
        start_time = time.time()
        image_c, line = DTM(image, thresh=40)
        end_time = time.time()
        sum_time += end_time - start_time
        count += 1
        cv2.imwrite(os.path.join(save_path, img), image_c)
        cv2.imwrite(os.path.join(save_path, os.path.splitext(img)[0] + "_line.png"), line)
    if count:
        average_time = sum_time / count
        print("Average one image time: ", average_time)
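The refinement at the end of `DTM` is a gray centroid: pixel coordinates along a short line through the candidate point are averaged with the gray values as weights. The sketch below isolates that step so it can be tried on synthetic data. `directional_centroid` and its `(du, dv)` step parameters are hypothetical names introduced for this illustration, and it returns sub-pixel floats instead of truncating to integers as the listing does.

```python
import numpy as np

def directional_centroid(gray, u, v, du, dv, half=5):
    """Intensity-weighted centroid of 2 * half + 1 samples through (u, v).

    (du, dv) is the step per sample in (row, column), for example (1, 0)
    for a vertical scan or (1, 1) for a diagonal one. Returns (row, col)
    as floats, or None when every sample is zero. The caller must keep
    all samples inside the image.
    """
    k = np.arange(-half, half + 1)
    rows = u + k * du
    cols = v + k * dv
    weights = gray[rows, cols].astype(np.float64)  # float avoids uint8 overflow
    total = weights.sum()
    if total == 0:
        return None
    return (float((weights * rows).sum() / total),
            float((weights * cols).sum() / total))

# Column 10 carries a symmetric intensity profile on rows 8 to 11
gray = np.zeros((21, 21), dtype=np.uint8)
gray[8:12, 10] = (100, 200, 200, 100)
print(directional_centroid(gray, 10, 10, 1, 0))  # (9.5, 10.0)
```

Because the profile is symmetric about row 9.5, the centroid lands between two pixels, which is the sub-pixel information an integer argmax alone cannot give.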