Ceph RBD

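Environment: every Ceph host has one address on 192.168.126.x and one on 192.168.48.x; the client host web has only 192.168.48.56.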

192.168.126.101 ceph01

192.168.126.102 ceph02

192.168.126.103 ceph03

192.168.126.104 ceph04

192.168.126.105 ceph-admin

192.168.48.11 ceph01

192.168.48.12 ceph02

192.168.48.13 ceph03

192.168.48.14 ceph04

192.168.48.15 ceph-admin

192.168.48.56 web

Create RBD

Create pool

[cephadm@ceph-admin ceph-cluster]$ ceph osd pool create rbdpool 64 64

pool 'rbdpool' created

Create client account

[cephadm@ceph-admin ceph-cluster]$ ceph auth get-or-create client.rbdpool mon 'allow r' osd 'allow * pool=rbdpool'

[client.rbdpool]

key = AQAYrzJdUh5IIBAA4E13hVvtjkfnMc0JKPwbOA==

[cephadm@ceph-admin ceph-cluster]$ ceph auth get client.rbdpool

exported keyring for client.rbdpool

[client.rbdpool]

key = AQAYrzJdUh5IIBAA4E13hVvtjkfnMc0JKPwbOA==

caps mon = "allow r"

caps osd = "allow * pool=rbdpool"

Export client keyring

[cephadm@ceph-admin ceph-cluster]$ ceph auth get client.rbdpool -o ceph.client.rbdpool.keyring

exported keyring for client.rbdpool

Enable the rbd application on the pool and initialize it for RBD

[cephadm@ceph-admin ceph-cluster]$ ceph osd pool application enable rbdpool rbd

enabled application 'rbd' on pool 'rbdpool'

[cephadm@ceph-admin ceph-cluster]$ rbd pool init rbdpool

Create images (the two command forms below are equivalent)

[cephadm@ceph-admin ceph-cluster]$ rbd create --pool rbdpool --image vol01 --size 2G

[cephadm@ceph-admin ceph-cluster]$ rbd create --size 2G rbdpool/vol02

[cephadm@ceph-admin ceph-cluster]$ rbd ls --pool rbdpool

vol01

vol02

[cephadm@ceph-admin ceph-cluster]$ rbd ls --pool rbdpool -l

NAME SIZE PARENT FMT PROT LOCK

vol01 2 GiB 2

vol02 2 GiB 2

[cephadm@ceph-admin ceph-cluster]$ rbd ls --pool rbdpool -l --format json --pretty-format

[

{

"image": "vol01",

"size": 2147483648,

"format": 2

},

{

"image": "vol02",

"size": 2147483648,

"format": 2

}

]

[cephadm@ceph-admin ceph-cluster]$ rbd info rbdpool/vol01

rbd image 'vol01':

size 2 GiB in 512 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: d48cc0cdf2a2

block_name_prefix: rbd_data.d48cc0cdf2a2

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Sat Jul 20 19:00:02 2019

access_timestamp: Sat Jul 20 19:00:02 2019

modify_timestamp: Sat Jul 20 19:00:02 2019

[cephadm@ceph-admin ceph-cluster]$ rbd info --pool rbdpool vol02

rbd image 'vol02':

size 2 GiB in 512 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: d492e1d9de65

block_name_prefix: rbd_data.d492e1d9de65

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Sat Jul 20 19:01:14 2019

access_timestamp: Sat Jul 20 19:01:14 2019

modify_timestamp: Sat Jul 20 19:01:14 2019
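`rbd info` reports the maximum number of RADOS objects behind an image: the image size divided by the object size, 2^order bytes. With order 22 each object is 4 MiB, so a 2 GiB image maps to 512 objects. Objects are only created when the corresponding range is written, so this is an upper bound, not the space used. A small sketch of the arithmetic; the helper names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: RBD object size and object count from `rbd info` values.
rbd_object_size() {
  # Object size in bytes for a given order (order 22 -> 4 MiB).
  echo $(( 1 << $1 ))
}

rbd_object_count() {
  local size_bytes=$1 order=$2
  # Round up so that a partial last object is still counted.
  echo $(( (size_bytes + (1 << order) - 1) >> order ))
}

rbd_object_size 22               # 4194304 bytes = 4 MiB
rbd_object_count 2147483648 22   # 512 objects for a 2 GiB image
```

After the later resize of vol01 to 5 GiB, the same calculation gives 1280 objects.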

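Disable image features the kernel client does not support. Older kernel clients such as the 4.4 kernel on web support only a subset of RBD image features, and rbd map refuses images that use features the kernel lacks, so everything except layering is disabled.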

[cephadm@ceph-admin ceph-cluster]$ rbd feature disable rbdpool/vol01 object-map fast-diff deep-flatten exclusive-lock

[cephadm@ceph-admin ceph-cluster]$ rbd feature disable rbdpool/vol02 object-map fast-diff deep-flatten exclusive-lock

[cephadm@ceph-admin ceph-cluster]$ rbd info --pool rbdpool vol02

rbd image 'vol02':

size 2 GiB in 512 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: d492e1d9de65

block_name_prefix: rbd_data.d492e1d9de65

format: 2

features: layering

op_features:

flags:

create_timestamp: Sat Jul 20 19:01:14 2019

access_timestamp: Sat Jul 20 19:01:14 2019

modify_timestamp: Sat Jul 20 19:01:14 2019

[cephadm@ceph-admin ceph-cluster]$ rbd info --pool rbdpool vol01

rbd image 'vol01':

size 2 GiB in 512 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: d48cc0cdf2a2

block_name_prefix: rbd_data.d48cc0cdf2a2

format: 2

features: layering

op_features:

flags:

create_timestamp: Sat Jul 20 19:00:02 2019

access_timestamp: Sat Jul 20 19:00:02 2019

modify_timestamp: Sat Jul 20 19:00:02 2019
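Instead of disabling features on every image after creation, new images can be created with only layering, either per image with `rbd create --image-feature layering ...` or by default through the `rbd_default_features` option in the `[client]` section of ceph.conf. That option has traditionally taken a numeric bitmask (recent releases also appear to accept feature names). The hypothetical helper below computes the mask from feature names, using librbd's bit values (layering 1, striping 2, exclusive-lock 4, object-map 8, fast-diff 16, deep-flatten 32, journaling 64):

```bash
#!/usr/bin/env bash
# Hypothetical helper: RBD feature names -> bitmask for rbd_default_features.
rbd_feature_mask() {
  local mask=0 f
  for f in "$@"; do
    case $f in
      layering)       mask=$(( mask | 1 )) ;;
      striping)       mask=$(( mask | 2 )) ;;
      exclusive-lock) mask=$(( mask | 4 )) ;;
      object-map)     mask=$(( mask | 8 )) ;;
      fast-diff)      mask=$(( mask | 16 )) ;;
      deep-flatten)   mask=$(( mask | 32 )) ;;
      journaling)     mask=$(( mask | 64 )) ;;
      *) echo "unknown feature: $f" >&2; return 1 ;;
    esac
  done
  echo "$mask"
}

# The five features the images above were created with:
rbd_feature_mask layering exclusive-lock object-map fast-diff deep-flatten   # 61
# Layering only, as left after `rbd feature disable`:
rbd_feature_mask layering                                                    # 1
```

With this, `rbd_default_features = 1` in `[client]` would make new images layering-only, so they can be mapped by the 4.4 kernel without the disable step.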

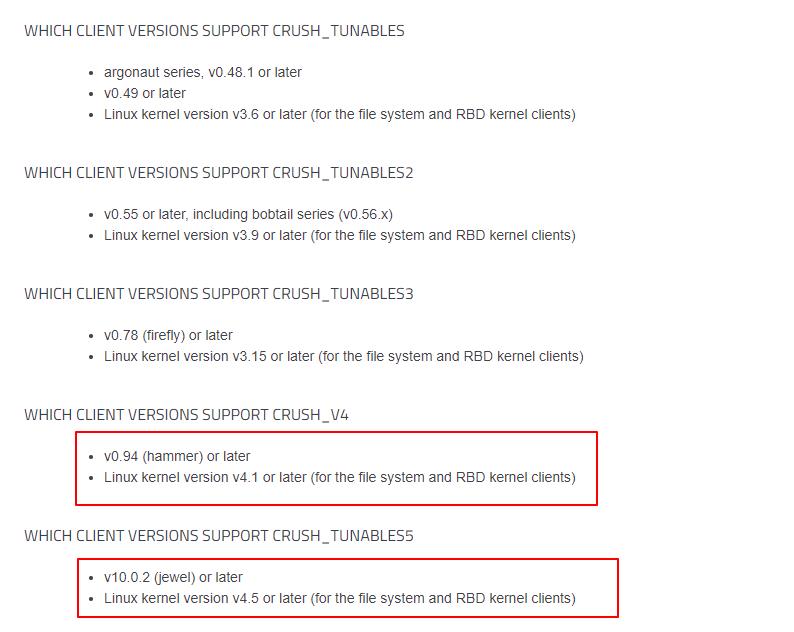

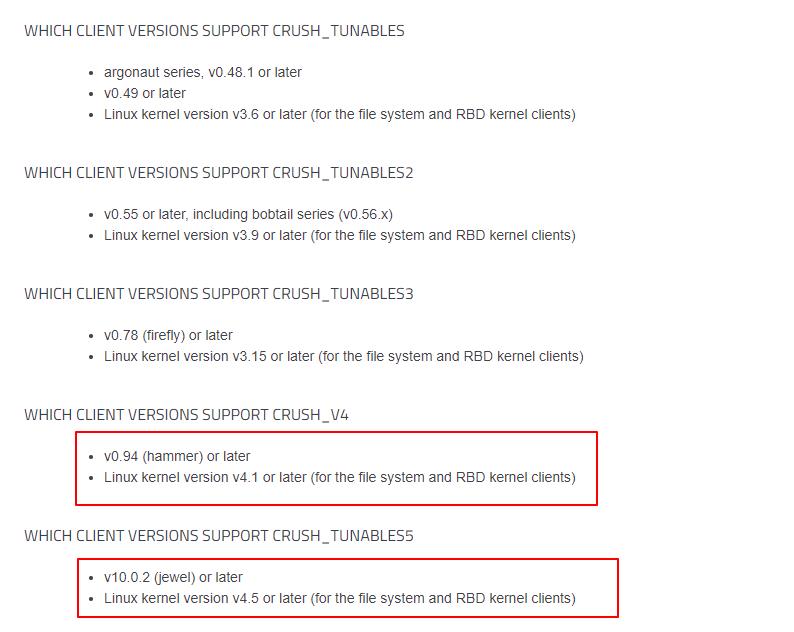

Adjust the CRUSH tunables profile

Note: the default profile here is jewel, which kernel clients can only use on Linux 4.5 or later.

[cephadm@ceph-admin ceph-cluster]$ ceph osd crush show-tunables

{

"choose_local_tries": 0,

"choose_local_fallback_tries": 0,

"choose_total_tries": 50,

"chooseleaf_descend_once": 1,

"chooseleaf_vary_r": 1,

"chooseleaf_stable": 1,

"straw_calc_version": 1,

"allowed_bucket_algs": 54,

"profile": "jewel",

"optimal_tunables": 1,

"legacy_tunables": 0,

"minimum_required_version": "jewel",

"require_feature_tunables": 1,

"require_feature_tunables2": 1,

"has_v2_rules": 0,

"require_feature_tunables3": 1,

"has_v3_rules": 0,

"has_v4_buckets": 1,

"require_feature_tunables5": 1,

"has_v5_rules": 0

}

The client web runs kernel 4.4:

[root@web ~]# uname -r

4.4.180-1.el7.elrepo.x86_64

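Since 4.4 is older than 4.5, lower the profile to hammer, which the Ceph documentation lists as requiring kernel 4.1 or later. On a cluster that already holds data, changing the profile can trigger significant data movement. Reference: http://docs.ceph.com/docs/master/rados/operations/crush-map/ (section "Tunables")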

[cephadm@ceph-admin ceph-cluster]$ ceph osd crush tunables hammer

adjusted tunables profile to hammer

[cephadm@ceph-admin ceph-cluster]$ ceph osd crush show-tunables

{

"choose_local_tries": 0,

"choose_local_fallback_tries": 0,

"choose_total_tries": 50,

"chooseleaf_descend_once": 1,

"chooseleaf_vary_r": 1,

"chooseleaf_stable": 0,

"straw_calc_version": 1,

"allowed_bucket_algs": 54,

"profile": "hammer",

"optimal_tunables": 0,

"legacy_tunables": 0,

"minimum_required_version": "hammer",

"require_feature_tunables": 1,

"require_feature_tunables2": 1,

"has_v2_rules": 0,

"require_feature_tunables3": 1,

"has_v3_rules": 0,

"has_v4_buckets": 1,

"require_feature_tunables5": 0,

"has_v5_rules": 0

}
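The decision made here (jewel needs kernel 4.5, hammer needs 4.1, web runs 4.4) can be expressed as a small check. This is only a sketch: the function names are hypothetical, the comparison relies on GNU `sort -V`, and distribution kernels (for example the 3.10-based RHEL/CentOS 7 kernels) may backport krbd features, so the upstream version number is just a rough guide.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: pick the newest CRUSH tunables profile a kernel
# client can use, based on the documented minimums (jewel 4.5, hammer 4.1).
version_ge() {
  # Succeeds if version $1 >= version $2 (GNU sort -V ordering).
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

crush_profile_for_kernel() {
  local v=${1%%-*}   # strip the release suffix, e.g. "-1.el7.elrepo.x86_64"
  if version_ge "$v" 4.5; then
    echo jewel
  elif version_ge "$v" 4.1; then
    echo hammer
  else
    echo "kernel $v is older than 4.1; not covered by this sketch" >&2
    return 1
  fi
}

crush_profile_for_kernel 4.4.180-1.el7.elrepo.x86_64   # hammer
```

On the client itself, `crush_profile_for_kernel "$(uname -r)"` gives the same answer as the manual check above.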

Mount RBD images on the client web (disk layout before mapping):

[root@web ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 99G 0 part

├─centos-root 253:0 0 50G 0 lvm /

├─centos-swap 253:1 0 2G 0 lvm

└─centos-home 253:2 0 47G 0 lvm /home

sr0 11:0 1 1024M 0 rom

Configure the Ceph and EPEL yum repositories

vim /etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for $basearch

baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/$basearch

enabled=1

gpgcheck=0

type=rpm-md

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch

enabled=1

gpgcheck=0

type=rpm-md

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/SRPMS

enabled=1

gpgcheck=0

type=rpm-md

cat > /etc/yum.repos.d/epel.repo << 'EOF'

[epel]

name=Extra Packages for Enterprise Linux 7 - $basearch

baseurl=https://mirrors.tuna.tsinghua.edu.cn/epel/7/$basearch

#mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=epel-7&arch=$basearch

failovermethod=priority

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

[epel-debuginfo]

name=Extra Packages for Enterprise Linux 7 - $basearch - Debug

baseurl=https://mirrors.tuna.tsinghua.edu.cn/epel/7/$basearch/debug

#mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=epel-debug-7&arch=$basearch

failovermethod=priority

enabled=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

gpgcheck=1

[epel-source]

name=Extra Packages for Enterprise Linux 7 - $basearch - Source

baseurl=https://mirrors.tuna.tsinghua.edu.cn/epel/7/SRPMS

#mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=epel-source-7&arch=$basearch

failovermethod=priority

enabled=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

gpgcheck=1

EOF
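yum substitutes `$basearch` itself when it reads a .repo file, so the variable has to reach the file unexpanded. With an unquoted heredoc delimiter (`<< EOF`) the shell performs parameter expansion first and writes an empty string in its place; quoting the delimiter (`<< 'EOF'`) copies the text literally. A self-contained demonstration of the difference:

```bash
#!/usr/bin/env bash
# Simulate an interactive shell, where basearch is not defined (empty).
basearch=""

# Unquoted delimiter: the shell expands $basearch before writing.
unquoted=$(cat << EOF
baseurl=https://mirrors.tuna.tsinghua.edu.cn/epel/7/$basearch
EOF
)

# Quoted delimiter: the text is kept as-is, so yum sees $basearch.
quoted=$(cat << 'EOF'
baseurl=https://mirrors.tuna.tsinghua.edu.cn/epel/7/$basearch
EOF
)

echo "$unquoted"   # baseurl=https://mirrors.tuna.tsinghua.edu.cn/epel/7/
echo "$quoted"     # baseurl=https://mirrors.tuna.tsinghua.edu.cn/epel/7/$basearch
```

The ceph.repo file above is written with an editor, so it is not affected.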

Install ceph-common

[root@web ~]# yum -y install ceph-common

Copy the client keyring and ceph.conf to the client, then check cluster access as client.rbdpool

[cephadm@ceph-admin ceph-cluster]$ scp ceph.client.rbdpool.keyring root@web:/etc/ceph

[cephadm@ceph-admin ceph-cluster]$ scp ceph.conf root@web:/etc/ceph

[root@web ~]# ls /etc/ceph/

ceph.client.rbdpool.keyring ceph.conf rbdmap

[root@web ~]# ceph --user rbdpool -s

cluster:

id: 8a83b874-efa4-4655-b070-704e63553839

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph01,ceph02,ceph03 (age 8h)

mgr: ceph04(active, since 8h), standbys: ceph03

mds: cephfs:1 {0=ceph02=up:active}

osd: 8 osds: 8 up (since 8h), 8 in (since 8d)

rgw: 1 daemon active (ceph01)

data:

pools: 9 pools, 352 pgs

objects: 220 objects, 3.7 KiB

usage: 8.1 GiB used, 64 GiB / 72 GiB avail

pgs: 352 active+clean

io:

client: 4.0 KiB/s rd, 0 B/s wr, 3 op/s rd, 2 op/s wr

RBD mapping

[root@web ~]# rbd --user rbdpool map rbdpool/vol01

/dev/rbd0

[root@web ~]# rbd --user rbdpool map rbdpool/vol02

/dev/rbd1

Create a file system on each device

[root@web ~]# mkfs.xfs /dev/rbd0

meta-data=/dev/rbd0 isize=512 agcount=8, agsize=65536 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=524288, imaxpct=25

= sunit=1024 swidth=1024 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@web ~]# mkfs.xfs /dev/rbd1

meta-data=/dev/rbd1 isize=512 agcount=8, agsize=65536 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=524288, imaxpct=25

= sunit=1024 swidth=1024 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0
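The mkfs.xfs numbers line up with the image layout. The arithmetic below uses values copied from the /dev/rbd0 output: 8 allocation groups of 65536 blocks give the 524288 data blocks, which at 4096 bytes each is exactly the 2 GiB image, and the stripe unit of 1024 blocks equals the 4 MiB RBD object size. That match suggests mkfs.xfs picked up the I/O geometry the rbd device advertises, though this walkthrough does not show it directly.

```bash
#!/usr/bin/env bash
# Values from the mkfs.xfs output for /dev/rbd0 above.
bsize=4096      # data block size in bytes
agcount=8       # number of allocation groups
agsize=65536    # blocks per allocation group
blocks=524288   # data blocks
sunit=1024      # stripe unit in blocks (swidth is the same)

echo $(( agcount * agsize ))   # 524288, equal to the data block count
echo $(( blocks * bsize ))     # 2147483648 bytes = 2 GiB, the image size
echo $(( sunit * bsize ))      # 4194304 bytes = 4 MiB, the RBD object size
```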

Mount disk

[root@web ~]# mkdir /data/v1 -p

[root@web ~]# mkdir /data/v2 -p

[root@web ~]# mount /dev/rbd0 /data/v1

[root@web ~]# mount /dev/rbd1 /data/v2

[root@web ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 99G 0 part

├─centos-root 253:0 0 50G 0 lvm /

├─centos-swap 253:1 0 2G 0 lvm

└─centos-home 253:2 0 47G 0 lvm /home

sr0 11:0 1 1024M 0 rom

rbd0 252:0 0 2G 0 disk /data/v1

rbd1 252:16 0 2G 0 disk /data/v2

[root@web ~]# rbd showmapped

id pool namespace image snap device

0 rbdpool vol01 - /dev/rbd0

1 rbdpool vol02 - /dev/rbd1
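Mappings made with `rbd map` do not survive a reboot. ceph-common ships /etc/ceph/rbdmap (visible in the `ls /etc/ceph/` output above) together with an rbdmap service that maps the images listed there at boot; the rbdmap man page describes the format. The helper below is hypothetical and only prints candidate entries, assuming the keyring path and XFS file system used in this walkthrough:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print an /etc/ceph/rbdmap entry and a matching
# /etc/fstab entry for one image. Assumes keyrings live at
# /etc/ceph/ceph.client.<user>.keyring and the image holds XFS.
rbd_boot_entries() {
  local pool=$1 image=$2 user=$3 mountpoint=$4
  # rbdmap line: <pool>/<image> followed by options passed to rbd map.
  printf '%s/%s id=%s,keyring=/etc/ceph/ceph.client.%s.keyring\n' \
    "$pool" "$image" "$user" "$user"
  # fstab line: udev exposes mapped images as /dev/rbd/<pool>/<image>;
  # noauto leaves the mount to the rbdmap service instead of early boot.
  printf '/dev/rbd/%s/%s %s xfs noauto 0 0\n' "$pool" "$image" "$mountpoint"
}

rbd_boot_entries rbdpool vol01 rbdpool /data/v1
```

The first printed line would go into /etc/ceph/rbdmap and the second into /etc/fstab, followed by `systemctl enable rbdmap`.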

Unmount and unmap a device

[root@web ~]# umount /data/v1

[root@web ~]# rbd showmapped

id pool namespace image snap device

0 rbdpool vol01 - /dev/rbd0

1 rbdpool vol02 - /dev/rbd1

[root@web ~]# rbd unmap /dev/rbd0

[root@web ~]# rbd showmapped

id pool namespace image snap device

1 rbdpool vol02 - /dev/rbd1

Expand image size

[cephadm@ceph-admin ceph-cluster]$ rbd resize -s 5G rbdpool/vol01

Resizing image: 100% complete...done.

[cephadm@ceph-admin ceph-cluster]$ rbd ls -p rbdpool -l

NAME SIZE PARENT FMT PROT LOCK

vol01 5 GiB 2

vol02 2 GiB 2
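`rbd resize` only changes the size of the block device; the XFS file system on it keeps its old size until it is grown with `xfs_growfs` on the client, which works while the file system is mounted. Shrinking is riskier: `rbd resize` refuses to shrink an image unless `--allow-shrink` is given, and XFS cannot be shrunk at all. A sketch, assuming the image is mapped and mounted at the given mount point and that one shell can run both commands (in this lab they run on ceph-admin and web); the function names and the DRY_RUN switch are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: print commands when DRY_RUN=1, otherwise run them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Grow the image first, then the file system that lives on it.
grow_rbd_xfs() {
  local spec=$1 size=$2 mountpoint=$3
  run rbd resize --size "$size" "$spec" || return 1
  run xfs_growfs "$mountpoint"
}

DRY_RUN=1 grow_rbd_xfs rbdpool/vol01 5G /data/v1
```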

Delete image

[cephadm@ceph-admin ceph-cluster]$ rbd rm rbdpool/vol02

Removing image: 100% complete...done.

[cephadm@ceph-admin ceph-cluster]$ rbd ls -p rbdpool -l

NAME SIZE PARENT FMT PROT LOCK

vol01 5 GiB 2

Move an image to the trash

[cephadm@ceph-admin ceph-cluster]$ rbd trash move rbdpool/vol01

[cephadm@ceph-admin ceph-cluster]$ rbd ls -p rbdpool -l

[cephadm@ceph-admin ceph-cluster]$ rbd trash list -p rbdpool

d48cc0cdf2a2 vol01

Restore an image from the trash (by name and image ID)

[cephadm@ceph-admin ceph-cluster]$ rbd trash restore -p rbdpool --image vol01 --image-id d48cc0cdf2a2

[cephadm@ceph-admin ceph-cluster]$ rbd ls -p rbdpool -l

NAME SIZE PARENT FMT PROT LOCK

vol01 5 GiB 2
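Unlike `rbd rm`, which by default deletes the image immediately, `rbd trash move` keeps it recoverable, but `rbd trash restore` needs the image ID as well as the name. The hypothetical helper below extracts the ID from `rbd trash list` output, assuming the `<id> <name>` layout shown above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `rbd trash list` output on stdin and print
# the image ID whose name matches $1 (no output if there is no match).
trash_id_for() {
  awk -v name="$1" '$2 == name { print $1 }'
}

printf '%s\n' 'd48cc0cdf2a2 vol01' | trash_id_for vol01   # d48cc0cdf2a2
```

Combined with the restore command used above: `rbd trash restore -p rbdpool --image vol01 --image-id "$(rbd trash list -p rbdpool | trash_id_for vol01)"`.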

Snapshots

Write some test data before taking the snapshot

[root@web ~]# rbd --user rbdpool map rbdpool/vol01

/dev/rbd0

[root@web ~]# mount /dev/rbd0 /data/v1

[root@web ~]# cd /data/v1

[root@web v1]# echo "this is v1">1.txt

[root@web v1]# echo "this is v2">2.txt

[root@web v1]# ls

1.txt 2.txt

Create Snapshot

[cephadm@ceph-admin ceph-cluster]$ rbd snap create rbdpool/vol01@snap1

[cephadm@ceph-admin ceph-cluster]$ rbd snap list rbdpool/vol01

SNAPID NAME SIZE PROTECTED TIMESTAMP

4 snap1 5 GiB Sat Jul 20 21:11:22 2019
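Here snap1 is taken while vol01 is mounted on web, so recent writes may still sit in the client's page cache and be missing from the snapshot. Freezing the file system with `fsfreeze` (util-linux) flushes it and blocks new writes while the snapshot is taken, giving a file-system-consistent snapshot. A sketch only, assuming one shell can run both `fsfreeze` on the mount point and `rbd snap create` for the image (in this lab those run on web and ceph-admin); the function names and the DRY_RUN switch are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: print commands when DRY_RUN=1, otherwise run them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

snap_frozen() {
  local mountpoint=$1 snapspec=$2 rc=0
  run fsfreeze --freeze "$mountpoint" || return 1
  # Record a failure instead of exiting, so the thaw below always runs.
  run rbd snap create "$snapspec" || rc=$?
  run fsfreeze --unfreeze "$mountpoint"
  return "$rc"
}

DRY_RUN=1 snap_frozen /data/v1 rbdpool/vol01@snap1
```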

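Roll back to the snapshot: delete a file, unmount and unmap the image on web, roll back on ceph-admin, then map and mount it again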

[root@web v1]# ls

1.txt 2.txt

[root@web v1]# rm -rf 2.txt

[root@web v1]# ls

1.txt

[root@web ~]# umount /data/v1

[root@web ~]# rbd unmap /dev/rbd0

[root@web ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 99G 0 part

├─centos-root 253:0 0 50G 0 lvm /

├─centos-swap 253:1 0 2G 0 lvm

└─centos-home 253:2 0 47G 0 lvm /home

sr0 11:0 1 1024M 0 rom

[cephadm@ceph-admin ceph-cluster]$ rbd snap rollback rbdpool/vol01@snap1

Rolling back to snapshot: 100% complete...done.

[root@web ~]# rbd --user rbdpool map rbdpool/vol01

/dev/rbd0

[root@web ~]# mount /dev/rbd0 /data/v1

[root@web ~]# cd /data/v1

[root@web v1]# ls

1.txt 2.txt

Delete snapshot

[cephadm@ceph-admin ceph-cluster]$ rbd snap rm rbdpool/vol01@snap1

Removing snap: 100% complete...done.

Limit the number of snapshots per image

[cephadm@ceph-admin ceph-cluster]$ rbd snap limit set rbdpool/vol01 --limit 10

Remove the snapshot limit

[cephadm@ceph-admin ceph-cluster]$ rbd snap limit clear rbdpool/vol01

Create a clone template: unmount and unmap vol01, then create and protect a snapshot of it

[root@web ~]# umount /data/v1

[root@web ~]# rbd unmap /dev/rbd0

[cephadm@ceph-admin ceph-cluster]$ rbd snap create rbdpool/vol01@clonetemp

[cephadm@ceph-admin ceph-cluster]$ rbd snap ls rbdpool/vol01

SNAPID NAME SIZE PROTECTED TIMESTAMP

6 clonetemp 5 GiB Sat Jul 20 21:32:55 2019

[cephadm@ceph-admin ceph-cluster]$ rbd snap protect rbdpool/vol01@clonetemp

Clone the template into a new image and use it on web

[cephadm@ceph-admin ceph-cluster]$ rbd clone rbdpool/vol01@clonetemp rbdpool/vol03

[cephadm@ceph-admin ceph-cluster]$ rbd ls -p rbdpool

vol01

vol03

[cephadm@ceph-admin ceph-cluster]$ rbd children rbdpool/vol01@clonetemp

rbdpool/vol03

[root@web ~]# rbd --user rbdpool map rbdpool/vol03

/dev/rbd0

[root@web ~]# mount /dev/rbd0 /data/v1

[root@web ~]# cd /data/v1/

[root@web v1]# ls

1.txt 2.txt

[root@web v1]# echo "this is v3">3.txt

[root@web v1]# ls

1.txt 2.txt 3.txt
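A clone is a copy-on-write child of the protected snapshot: it starts out sharing the parent's data (which is why 1.txt and 2.txt already exist in vol03) and only stores what is written to it afterwards, such as 3.txt. Because clones are cheap, one template can back several images. A sketch with hypothetical image names (vol04, vol05), function names and DRY_RUN switch:

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: print commands when DRY_RUN=1, otherwise run them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Create one clone per name in the given pool from a protected snapshot.
clone_from_template() {
  local template=$1 pool=$2 name
  shift 2
  for name in "$@"; do
    run rbd clone "$template" "$pool/$name" || return 1
  done
}

DRY_RUN=1 clone_from_template rbdpool/vol01@clonetemp rbdpool vol04 vol05
```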

Delete the clone template: flatten every child first, then unprotect and remove the snapshot

[cephadm@ceph-admin ceph-cluster]$ rbd children rbdpool/vol01@clonetemp

rbdpool/vol03

[cephadm@ceph-admin ceph-cluster]$ rbd flatten rbdpool/vol03

Image flatten: 100% complete...done.

[cephadm@ceph-admin ceph-cluster]$ rbd snap unprotect rbdpool/vol01@clonetemp

[cephadm@ceph-admin ceph-cluster]$ rbd snap rm rbdpool/vol01@clonetemp

Removing snap: 100% complete...done.

[cephadm@ceph-admin ceph-cluster]$ rbd ls -p rbdpool

vol01

vol03
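`rbd flatten` copies the parent's data into the child so that it no longer depends on the snapshot, and `rbd snap unprotect` refuses while any child still references it, hence the order above. With several children the steps can be scripted. A sketch that reads the child list (as printed by `rbd children`) on stdin; the function names and the DRY_RUN switch are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: print commands when DRY_RUN=1, otherwise run them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Flatten each child read from stdin, then unprotect and remove the snapshot.
retire_template() {
  local snapspec=$1 child
  while IFS= read -r child; do
    [ -n "$child" ] || continue
    # </dev/null keeps rbd from consuming the rest of the child list.
    run rbd flatten "$child" </dev/null || return 1
  done
  run rbd snap unprotect "$snapspec" || return 1
  run rbd snap rm "$snapspec"
}

printf '%s\n' rbdpool/vol03 | DRY_RUN=1 retire_template rbdpool/vol01@clonetemp
```

Against the cluster: `rbd children rbdpool/vol01@clonetemp | retire_template rbdpool/vol01@clonetemp`.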