Preface

Some time ago I studied the Netty source code from scratch, but only at the level of principles. I have recently started learning the Dubbo framework, so this time I'll use Dubbo as an example to look in detail at how a mature open-source framework uses Netty and how it ties Netty into its own logic.

This article has two parts: how the Dubbo server encapsulates Netty, and how the Dubbo client encapsulates Netty. Each part covers two stages: Netty initialization and invocation. Let's get to the point.


1, Dubbo server

The Dubbo server's use of Netty begins with service export. At the end of export, DubboProtocol#openServer is called, and this method completes the initialization of the Netty server (this article assumes Netty is configured as the transport). This is our starting point.

1. Server initialization

The source code of openServer is shown below. It first takes the address (ip:port) as the key and then does a double-checked lookup: if no server exists for that key, it calls createServer and puts the result into serverMap. So in a Dubbo provider each ip:port corresponds to one NettyServer, and all NettyServers are kept in a ConcurrentHashMap. In practice a provider usually exposes only one port for Dubbo, so although a Map is used, there is generally only one NettyServer. Note also that Dubbo runs export once per exposed service interface, so openServer is triggered once per interface; interfaces exported on the same ip:port share one NettyServer, and later calls take the reset branch instead of creating a new server. The creation process is traced below.

private void openServer(URL url) {
    // find server.
    String key = url.getAddress();
    //client can export a service which's only for server to invoke
    boolean isServer = url.getParameter(IS_SERVER_KEY, true);
    if (isServer) {
        ProtocolServer server = serverMap.get(key);
        if (server == null) {
            synchronized (this) {
                server = serverMap.get(key);
                if (server == null) {
                    serverMap.put(key, createServer(url));
                }
            }
        } else {
            // server supports reset, use together with override
            server.reset(url);
        }
    }
}
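openServer's double-checked lookup enforces "at most one server per ip:port key". The sketch below restates that invariant with JDK types only. ServerRegistry, FakeServer and the created counter are hypothetical stand-ins for DubboProtocol's serverMap, ProtocolServer and createServer, and the sketch swaps the explicit synchronized double check for ConcurrentHashMap.computeIfAbsent, which gives the same at-most-once creation per key.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for ProtocolServer; only counts reset() calls.
class FakeServer {
    final String address;
    final AtomicInteger resets = new AtomicInteger();

    FakeServer(String address) {
        this.address = address;
    }

    void reset() {
        resets.incrementAndGet();
    }
}

// Hypothetical sketch of openServer's "one server per ip:port" rule, not Dubbo's class.
class ServerRegistry {
    private final Map<String, FakeServer> serverMap = new ConcurrentHashMap<>();
    final AtomicInteger created = new AtomicInteger();

    FakeServer openServer(String address) {
        FakeServer existing = serverMap.get(address);
        if (existing != null) {
            existing.reset(); // same address exported again: reuse it, like server.reset(url)
            return existing;
        }
        // computeIfAbsent replaces the synchronized double check: the factory runs at most once per key.
        return serverMap.computeIfAbsent(address, key -> {
            created.incrementAndGet(); // stands in for createServer(url)
            return new FakeServer(key);
        });
    }
}

class ServerRegistryDemo {
    public static void main(String[] args) {
        ServerRegistry registry = new ServerRegistry();
        FakeServer first = registry.openServer("192.168.1.10:20880");  // first exported interface
        FakeServer second = registry.openServer("192.168.1.10:20880"); // second interface, same port
        System.out.println(first == second);        // true
        System.out.println(registry.created.get()); // 1
        System.out.println(first.resets.get());     // 1
    }
}
```

Two interfaces exported on the same port therefore share one server object, and only the second export triggers a reset.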

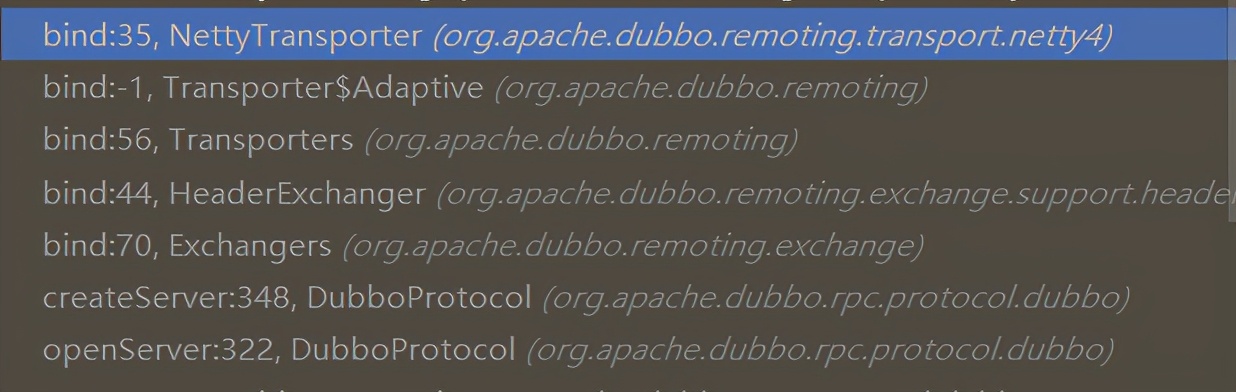

The method stack called by openServer is as follows:

The calls lead into NettyTransporter#bind. NettyTransporter has two methods, bind and connect: the former is called when the server is initialized, the latter when the client is initialized. The source code is as follows:

public class NettyTransporter implements Transporter {
    public static final String NAME = "netty";

    @Override
    public RemotingServer bind(URL url, ChannelHandler listener) throws RemotingException {
        return new NettyServer(url, listener);
    }

    @Override
    public Client connect(URL url, ChannelHandler listener) throws RemotingException {
        return new NettyClient(url, listener);
    }
}

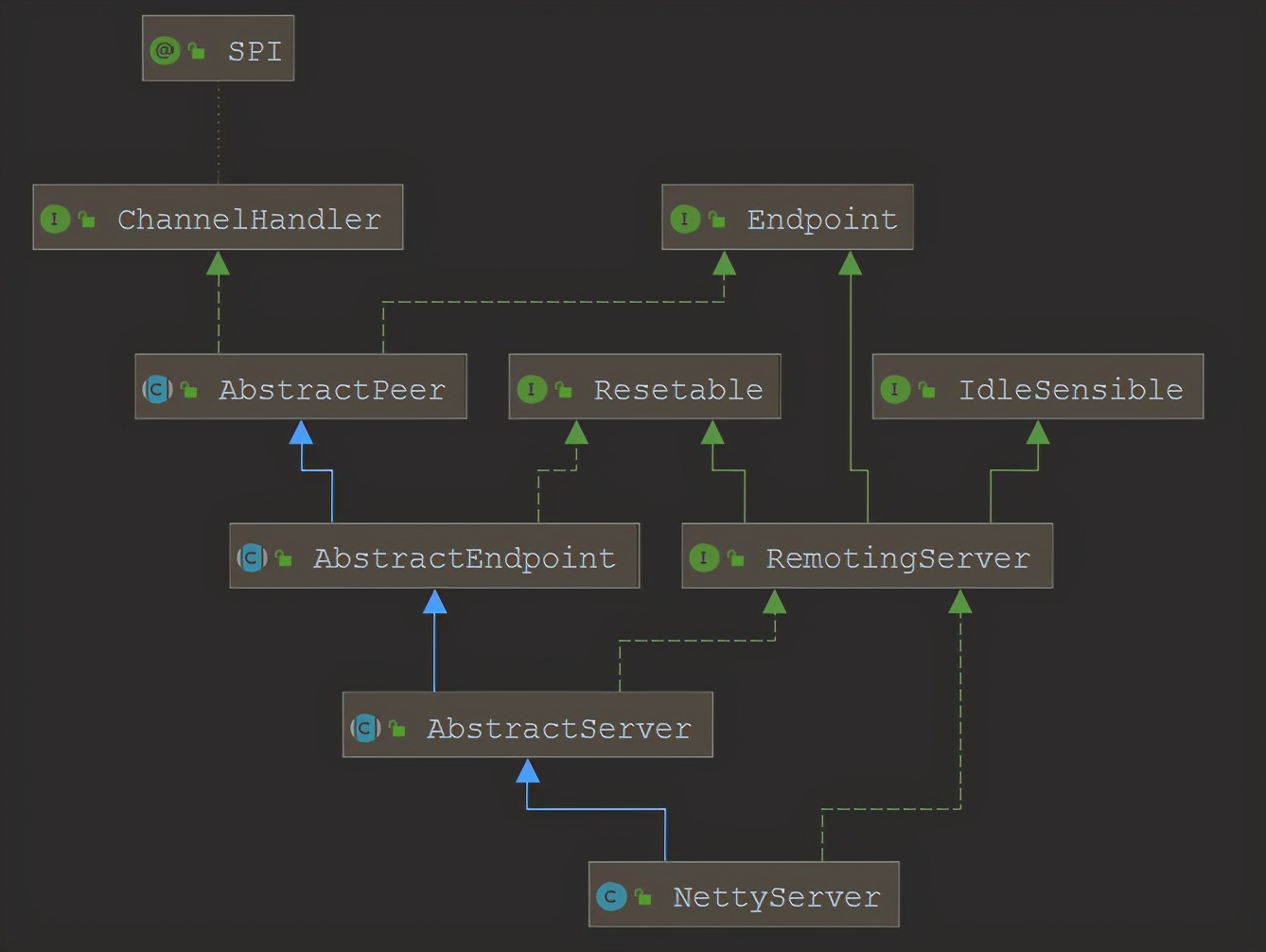

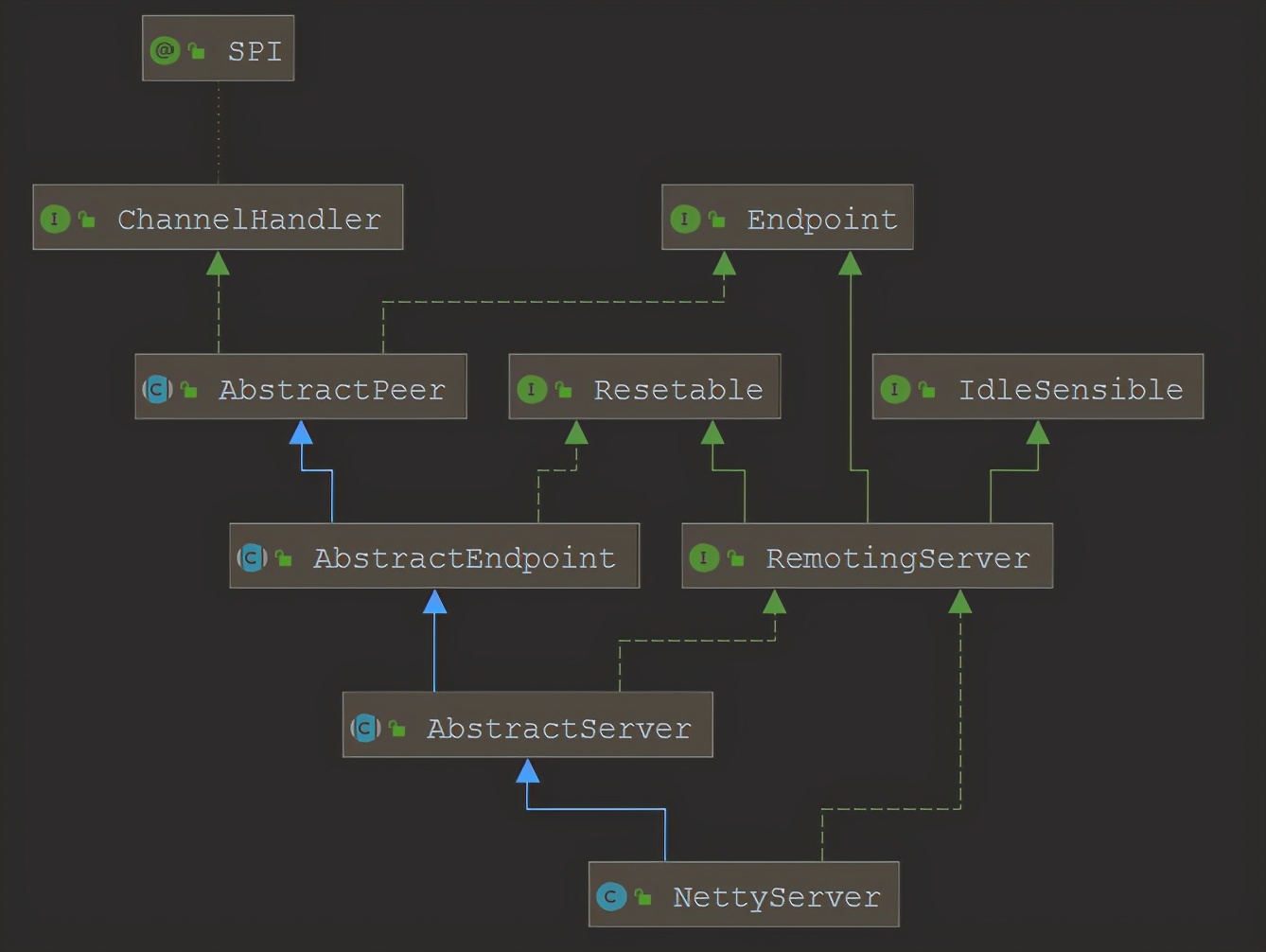

Let's see how NettyServer is associated with netty. Let's take a look at the class diagram of NettyServer:

Experienced readers will recognize from the class diagram the layered abstraction that is common in framework source code. AbstractServer is a template abstraction; by extending it, other transports can be supported, such as MinaServer and GrizzlyServer. Back to NettyServer, the protagonist of this article; here is its constructor:

public NettyServer(URL url, ChannelHandler handler) throws RemotingException {
    super(ExecutorUtil.setThreadName(url, SERVER_THREAD_POOL_NAME), ChannelHandlers.wrap(handler, url));
}

The constructor sets the thread-name parameter in the URL, wraps the handler with ChannelHandlers.wrap(handler, url), and then calls the constructor of the parent class AbstractServer.

Two handler types need to be pinned down here: the handler passed into the NettyServer constructor, and the handler passed on to the parent constructor. The former is built in HeaderExchanger#bind as follows:

public ExchangeServer bind(URL url, ExchangeHandler handler) throws RemotingException {
    return new HeaderExchangeServer(Transporters.bind(url, new DecodeHandler(new HeaderExchangeHandler(handler))));
}

Tracing further back, the ExchangeHandler passed to bind is DubboProtocol's member variable requestHandler:

private ExchangeHandler requestHandler = new ExchangeHandlerAdapter() {
    // overridden methods omitted
};

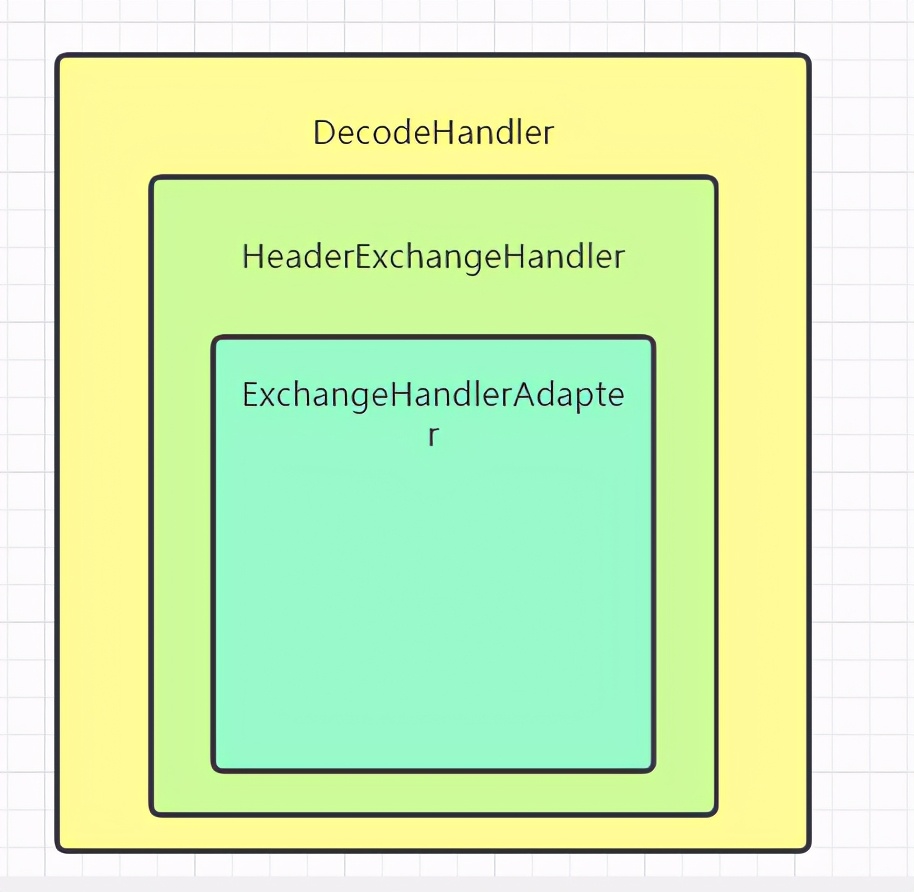

So far, the type of the NettyServer constructor's input parameter ChannelHandler has been confirmed. Its internal final implementation is the ExchangeHandlerAdapter in DubboProtocol, which encapsulates a layer of HeaderExchangeHandler and a layer of DecodeHandler. The diagram is as follows:

With the constructor's ChannelHandler identified, follow ChannelHandlers.wrap. The method that performs the final wrapping is:

protected ChannelHandler wrapInternal(ChannelHandler handler, URL url) {
    return new MultiMessageHandler(new HeartbeatHandler(ExtensionLoader.getExtensionLoader(Dispatcher.class)
            .getAdaptiveExtension().dispatch(handler, url)));
}

The Dispatcher defaults to AllDispatcher, and its dispatch method is as follows:

public ChannelHandler dispatch(ChannelHandler handler, URL url) {
    return new AllChannelHandler(handler, url);
}

After ChannelHandlers.wrap runs, the resulting ChannelHandler is built with the decorator pattern, each layer wrapping the next. From outermost to innermost: MultiMessageHandler -> HeartbeatHandler -> AllChannelHandler -> DecodeHandler -> HeaderExchangeHandler -> requestHandler (the ExchangeHandlerAdapter in DubboProtocol).
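The nesting can be reproduced in a few lines to see the order in which a message passes through the layers. The sketch below uses a hypothetical one-method Handler interface rather than Dubbo's ChannelHandler (which also has connected, disconnected, sent and caught callbacks); each layer only records its class name and delegates, and a lambda stands in for requestHandler.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical, simplified handler interface (not Dubbo's ChannelHandler).
interface Handler {
    void received(Object message, List<String> trace);
}

// Base decorator: record this layer's name, then delegate to the wrapped handler.
abstract class Layer implements Handler {
    private final Handler next;

    Layer(Handler next) {
        this.next = next;
    }

    @Override
    public void received(Object message, List<String> trace) {
        trace.add(getClass().getSimpleName());
        next.received(message, trace);
    }
}

class MultiMessageHandler extends Layer { MultiMessageHandler(Handler h) { super(h); } }
class HeartbeatHandler extends Layer { HeartbeatHandler(Handler h) { super(h); } }
class AllChannelHandler extends Layer { AllChannelHandler(Handler h) { super(h); } }
class DecodeHandler extends Layer { DecodeHandler(Handler h) { super(h); } }
class HeaderExchangeHandler extends Layer { HeaderExchangeHandler(Handler h) { super(h); } }

class DecoratorChainDemo {
    // Same nesting as HeaderExchanger#bind followed by ChannelHandlers.wrap.
    static Handler build() {
        Handler requestHandler = (message, trace) -> trace.add("requestHandler");
        Handler exchange = new DecodeHandler(new HeaderExchangeHandler(requestHandler));
        return new MultiMessageHandler(new HeartbeatHandler(new AllChannelHandler(exchange)));
    }

    static List<String> trace(Object message) {
        List<String> trace = new ArrayList<>();
        build().received(message, trace);
        return trace;
    }

    public static void main(String[] args) {
        // [MultiMessageHandler, HeartbeatHandler, AllChannelHandler, DecodeHandler, HeaderExchangeHandler, requestHandler]
        System.out.println(trace("ping"));
        System.out.println(trace("ping").equals(Arrays.asList("MultiMessageHandler", "HeartbeatHandler",
                "AllChannelHandler", "DecodeHandler", "HeaderExchangeHandler", "requestHandler")));
    }
}
```

In the real AllChannelHandler the call into the inner handler is not made inline but submitted to a thread pool, which the following sections examine.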

After understanding the wrap method, let's return to the main line and enter the constructor of AbstractServer:

public AbstractServer(URL url, ChannelHandler handler) throws RemotingException {
    // 1. Call the parent constructor; url and handler end up stored in AbstractPeer
    super(url, handler);
    // 2. Set the two addresses: localAddress and bindAddress
    localAddress = getUrl().toInetSocketAddress();
    String bindIp = getUrl().getParameter(Constants.BIND_IP_KEY, getUrl().getHost());
    int bindPort = getUrl().getParameter(Constants.BIND_PORT_KEY, getUrl().getPort());
    if (url.getParameter(ANYHOST_KEY, false) || NetUtils.isInvalidLocalHost(bindIp)) {
        bindIp = ANYHOST_VALUE;
    }
    bindAddress = new InetSocketAddress(bindIp, bindPort);
    this.accepts = url.getParameter(ACCEPTS_KEY, DEFAULT_ACCEPTS);
    this.idleTimeout = url.getParameter(IDLE_TIMEOUT_KEY, DEFAULT_IDLE_TIMEOUT);
    try {
        // 3. Call into Netty: open the Netty server
        doOpen();
    } catch (Throwable t) {
        // Exception handling omitted
    }
    // 4. Get or create the thread pool
    executor = executorRepository.createExecutorIfAbsent(url);
}
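Step 2 decides which address the server actually listens on. The sketch below restates that decision with JDK types only, under stated assumptions: the keys bind.ip, bind.port and anyhost are assumed values of BIND_IP_KEY, BIND_PORT_KEY and ANYHOST_KEY, a plain Map stands in for the URL's parameters, and isInvalidLocalHost is a simplified approximation of NetUtils.isInvalidLocalHost, not a copy of it.

```java
import java.net.InetSocketAddress;
import java.util.Map;

class BindAddressSketch {
    // Assumed key names mirroring BIND_IP_KEY, BIND_PORT_KEY and ANYHOST_KEY.
    static final String BIND_IP_KEY = "bind.ip";
    static final String BIND_PORT_KEY = "bind.port";
    static final String ANYHOST_KEY = "anyhost";
    static final String ANYHOST_VALUE = "0.0.0.0";

    // Simplified approximation of NetUtils.isInvalidLocalHost.
    static boolean isInvalidLocalHost(String host) {
        return host == null
                || host.isEmpty()
                || host.equalsIgnoreCase("localhost")
                || host.equals(ANYHOST_VALUE)
                || host.startsWith("127.");
    }

    // host/port come from the provider URL; params stand in for its query parameters.
    static InetSocketAddress bindAddress(String host, int port, Map<String, String> params) {
        String bindIp = params.getOrDefault(BIND_IP_KEY, host);
        int bindPort = Integer.parseInt(params.getOrDefault(BIND_PORT_KEY, String.valueOf(port)));
        boolean anyhost = Boolean.parseBoolean(params.getOrDefault(ANYHOST_KEY, "false"));
        if (anyhost || isInvalidLocalHost(bindIp)) {
            bindIp = ANYHOST_VALUE; // listen on all interfaces
        }
        // createUnresolved avoids a DNS lookup; the sketch only needs the host string and port.
        return InetSocketAddress.createUnresolved(bindIp, bindPort);
    }
}
```

For example, a provider URL of 192.168.1.10:20880 with anyhost=true yields a bind address of 0.0.0.0:20880, while localAddress still reflects 192.168.1.10:20880.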

Steps 1 and 2 are straightforward; steps 3 and 4 are the key points. Start with doOpen in step 3. It is abstract in AbstractServer, so look at its implementation in the subclass NettyServer:

protected void doOpen() throws Throwable {
    // The familiar Netty bootstrap code appears here
    bootstrap = new ServerBootstrap();
    // bossGroup uses a single thread
    bossGroup = new NioEventLoopGroup(1, new DefaultThreadFactory("NettyServerBoss", true));
    // workerGroup size defaults to min(CPU cores + 1, 32)
    workerGroup = new NioEventLoopGroup(getUrl().getPositiveParameter(IO_THREADS_KEY, Constants.DEFAULT_IO_THREADS),
            new DefaultThreadFactory("NettyServerWorker", true));
    // ***1. NettyServer is wrapped in a NettyServerHandler, which connects Netty to Dubbo
    final NettyServerHandler nettyServerHandler = new NettyServerHandler(getUrl(), this);
    channels = nettyServerHandler.getChannels();
    // Standard Netty server configuration
    bootstrap.group(bossGroup, workerGroup)
            .channel(NioServerSocketChannel.class)
            .childOption(ChannelOption.TCP_NODELAY, Boolean.TRUE)
            .childOption(ChannelOption.SO_REUSEADDR, Boolean.TRUE)
            .childOption(ChannelOption.ALLOCATOR, PooledByteBufAllocator.DEFAULT)
            .childHandler(new ChannelInitializer<NioSocketChannel>() {
                @Override
                protected void initChannel(NioSocketChannel ch) throws Exception {
                    int idleTimeout = UrlUtils.getIdleTimeout(getUrl());
                    NettyCodecAdapter adapter = new NettyCodecAdapter(getCodec(), getUrl(), NettyServer.this);
                    if (getUrl().getParameter(SSL_ENABLED_KEY, false)) {
                        ch.pipeline().addLast("negotiation",
                                SslHandlerInitializer.sslServerHandler(getUrl(), nettyServerHandler));
                    }
                    ch.pipeline()
                            .addLast("decoder", adapter.getDecoder())
                            .addLast("encoder", adapter.getEncoder())
                            .addLast("server-idle-handler", new IdleStateHandler(0, 0, idleTimeout, MILLISECONDS))
                            .addLast("handler", nettyServerHandler);
                }
            });
    // Bind IP and port, using the bindAddress field set in AbstractServer
    ChannelFuture channelFuture = bootstrap.bind(getBindAddress());
    channelFuture.syncUninterruptibly();
    channel = channelFuture.channel();
}

The line marked *** is the key point. Together with the pipeline().addLast(...) calls further down, it shows that NettyServer, which carries the Dubbo logic, is wrapped in a NettyServerHandler that is then added to the pipeline. When a client connects or sends data, the corresponding method of NettyServerHandler is triggered and control enters Dubbo's invocation logic, so NettyServerHandler is the bridge between Dubbo and Netty. NettyServer also holds a thread pool: once a client is connected, the server processes that client's requests on this pool. In other words, Dubbo adds a business thread pool on top of Netty's own thread model.
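Netty's own thread model here is one boss thread plus a worker group whose size, per the comment in doOpen, defaults to the smaller of (CPU cores + 1) and 32 (Constants.DEFAULT_IO_THREADS). The sketch below computes that size. The override key "iothreads" and the rule that a missing or non-positive override falls back to the default are assumptions based on how getPositiveParameter is used in doOpen, not a copy of Dubbo's code.

```java
import java.util.Collections;
import java.util.Map;

class IoThreadsSketch {
    // Default from the doOpen comment: the smaller of (CPU cores + 1) and 32.
    static int defaultIoThreads(int cores) {
        return Math.min(cores + 1, 32);
    }

    // Assumption: the override key is "iothreads", and a missing or non-positive value
    // falls back to the default, as a "positive parameter" lookup would.
    static int ioThreads(Map<String, String> params, int cores) {
        String raw = params.get("iothreads");
        int value = (raw == null) ? 0 : Integer.parseInt(raw.trim());
        return value > 0 ? value : defaultIoThreads(cores);
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        Map<String, String> noOverride = Collections.emptyMap();
        System.out.println("worker threads here: " + ioThreads(noOverride, cores));
    }
}
```

On a 4-core machine this gives 5 worker threads; from 31 cores upward the cap of 32 applies.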

In step 4, executorRepository.createExecutorIfAbsent(url) creates the thread pool. Reading the source shows that on the Dubbo server side each port maps to one thread pool. No special parameters are set here, so the ThreadPool type defaults to fixed, i.e. FixedThreadPool#getExecutor:

public Executor getExecutor(URL url) {
    String name = url.getParameter(THREAD_NAME_KEY, DEFAULT_THREAD_NAME);
    int threads = url.getParameter(THREADS_KEY, DEFAULT_THREADS);
    int queues = url.getParameter(QUEUES_KEY, DEFAULT_QUEUES);
    return new ThreadPoolExecutor(threads, threads, 0, TimeUnit.MILLISECONDS,
            queues == 0 ? new SynchronousQueue<Runnable>() :
                    (queues < 0 ? new LinkedBlockingQueue<Runnable>()
                            : new LinkedBlockingQueue<Runnable>(queues)),
            new NamedInternalThreadFactory(name, true), new AbortPolicyWithReport(name, url));
}

Two points are worth noting: the thread count defaults to 200, and because queues defaults to 0, the work queue is a SynchronousQueue. So what is this pool used for once it has been created? Searching NettyServer for references to it shows that it is only touched when the server is reset or closed. That can't be the whole story, since nobody creates a thread pool just to shut it down. Let's keep reading.
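The SynchronousQueue choice has a concrete consequence: the queue holds no tasks, so once every thread is busy, the next task is rejected immediately rather than waiting. The JDK-only sketch below builds a pool with the same constructor shape and queue rule as FixedThreadPool#getExecutor, shrinks it to 2 threads for the demo, and uses the JDK's ThreadPoolExecutor.AbortPolicy in place of Dubbo's AbortPolicyWithReport (which likewise ends by throwing RejectedExecutionException).

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class FixedPoolSketch {
    // Same queue rule as FixedThreadPool: 0 -> SynchronousQueue, < 0 -> unbounded, > 0 -> bounded.
    static BlockingQueue<Runnable> queueFor(int queues) {
        if (queues == 0) {
            return new SynchronousQueue<Runnable>();
        }
        return queues < 0 ? new LinkedBlockingQueue<Runnable>() : new LinkedBlockingQueue<Runnable>(queues);
    }

    // JDK AbortPolicy stands in for Dubbo's AbortPolicyWithReport.
    static ThreadPoolExecutor newFixedPool(int threads, int queues) {
        return new ThreadPoolExecutor(threads, threads, 0, TimeUnit.MILLISECONDS,
                queueFor(queues), new ThreadPoolExecutor.AbortPolicy());
    }

    // Keeps every worker busy, submits one extra task, and reports whether it was rejected.
    static boolean extraTaskRejected(int threads, int queues) {
        ThreadPoolExecutor pool = newFixedPool(threads, queues);
        CountDownLatch release = new CountDownLatch(1);
        try {
            for (int i = 0; i < threads; i++) {
                pool.execute(() -> {
                    try {
                        release.await(); // block until the demo lets go
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            try {
                pool.execute(() -> { });
                return false; // accepted: it waits in the queue
            } catch (RejectedExecutionException e) {
                return true;  // no idle thread and nowhere to queue
            }
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }
}
```

With the defaults (200 threads, queues=0) a provider whose threads are all busy therefore rejects the next request at once; a positive queues value trades that fail-fast behavior for queuing latency.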

2. Server call

This server-side pool actually taught me something about reading source code. Note that the pool reference is obtained from a repository (executorRepository.createExecutorIfAbsent(url)): the repository creates the pool and caches it, and the cached reference can then be fetched elsewhere and its execute method called there. Concretely, the pool is used in AllChannelHandler, for example in its connected method shown below: getExecutorService fetches the server-side pool from the repository and submits a ChannelEventRunnable to it. The received method, called when the server receives a request, follows the same process.

public void connected(Channel channel) throws RemotingException {
    ExecutorService executor = getExecutorService();
    try {
        executor.execute(new ChannelEventRunnable(channel, handler, ChannelState.CONNECTED));
    } catch (Throwable t) {
        throw new ExecutionException("connect event", channel, getClass() + " error when process connected event .", t);
    }
}

Now let's combine this with Netty's own processing flow. Once Netty's ServerBootstrap is started, the thread in the bossGroup (the Reactor thread) starts and keeps running its event loop (the run method). When a client connects, select returns an accept event from the operating system. The Reactor thread creates a NioSocketChannel for the client and hands it to a thread from the workerGroup, whose event loop then handles all subsequent communication between the server and that client. When the client sends a read or write request, the matching method of the nettyServerHandler in the pipeline is triggered, which finally calls the corresponding method of the AllChannelHandler above, so the business logic runs on the Dubbo thread pool.
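The hand-off described above (an I/O thread receives the event, a business pool runs the Dubbo logic) can be sketched with two JDK executors: a single-threaded executor plays the Netty worker EventLoop and a fixed pool plays the Dubbo server pool. IoThreadHandOff and onReceived are hypothetical names and the thread names are illustrative; in Dubbo the hand-off happens inside AllChannelHandler via ChannelEventRunnable, not with CompletableFuture.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.atomic.AtomicInteger;

class IoThreadHandOff {
    // Names threads prefix-1, prefix-2, ...; daemon so the demo JVM can exit.
    static ThreadFactory named(String prefix) {
        AtomicInteger seq = new AtomicInteger();
        return r -> {
            Thread t = new Thread(r, prefix + "-" + seq.incrementAndGet());
            t.setDaemon(true);
            return t;
        };
    }

    // Stand-in for a Netty worker EventLoop: one thread serving I/O for many channels.
    final ExecutorService ioLoop = Executors.newSingleThreadExecutor(named("NettyServerWorker"));
    // Stand-in for the Dubbo server pool fetched from the executor repository.
    final ExecutorService bizPool = Executors.newFixedThreadPool(4, named("DubboServerHandler"));

    // Hypothetical read event: the I/O thread only hands the work off (cf. AllChannelHandler#received),
    // and the business logic runs on the business pool.
    CompletableFuture<String> onReceived(String request) {
        CompletableFuture<String> result = new CompletableFuture<>();
        ioLoop.execute(() -> bizPool.execute(() ->
                result.complete(request + " handled on " + Thread.currentThread().getName())));
        return result;
    }

    void shutdown() {
        ioLoop.shutdown();
        bizPool.shutdown();
    }

    public static void main(String[] args) {
        IoThreadHandOff server = new IoThreadHandOff();
        System.out.println(server.onReceived("sayHello").join()); // sayHello handled on DubboServerHandler-1
        server.shutdown();
    }
}
```

Keeping the I/O thread free of business work is the point of this design: a slow service method blocks a pool thread, not the event loop that serves every other connection on that worker.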

2, Dubbo's client

1. Client initialization

During Dubbo client initialization, RegistryProtocol#refer is called. After several hops, execution reaches DubboProtocol#protocolBindingRefer, shown below. The getClients(url) call passed to the DubboInvoker constructor is where Netty is integrated: it creates the clients that connect to the server. Note that each service interface referenced by the client corresponds to one DubboInvoker.

public <T> Invoker<T> protocolBindingRefer(Class<T> serviceType, URL url) throws RpcException {
    optimizeSerialization(url);

    // create rpc invoker.
    DubboInvoker<T> invoker = new DubboInvoker<T>(serviceType, url, getClients(url), invokers); // getClients creates the NettyClient(s) for this invoker
    invokers.add(invoker);

    return invoker;
}

Following the call further leads to NettyTransporter#connect. This class should look familiar, because its bind method is called during server initialization: bind creates the NettyServer object, while connect creates the NettyClient object.

public class NettyTransporter implements Transporter {
    public static final String NAME = "netty";

    @Override
    public RemotingServer bind(URL url, ChannelHandler listener) throws RemotingException {
        return new NettyServer(url, listener);
    }

    @Override
    public Client connect(URL url, ChannelHandler listener) throws RemotingException {
        return new NettyClient(url, listener);
    }
}

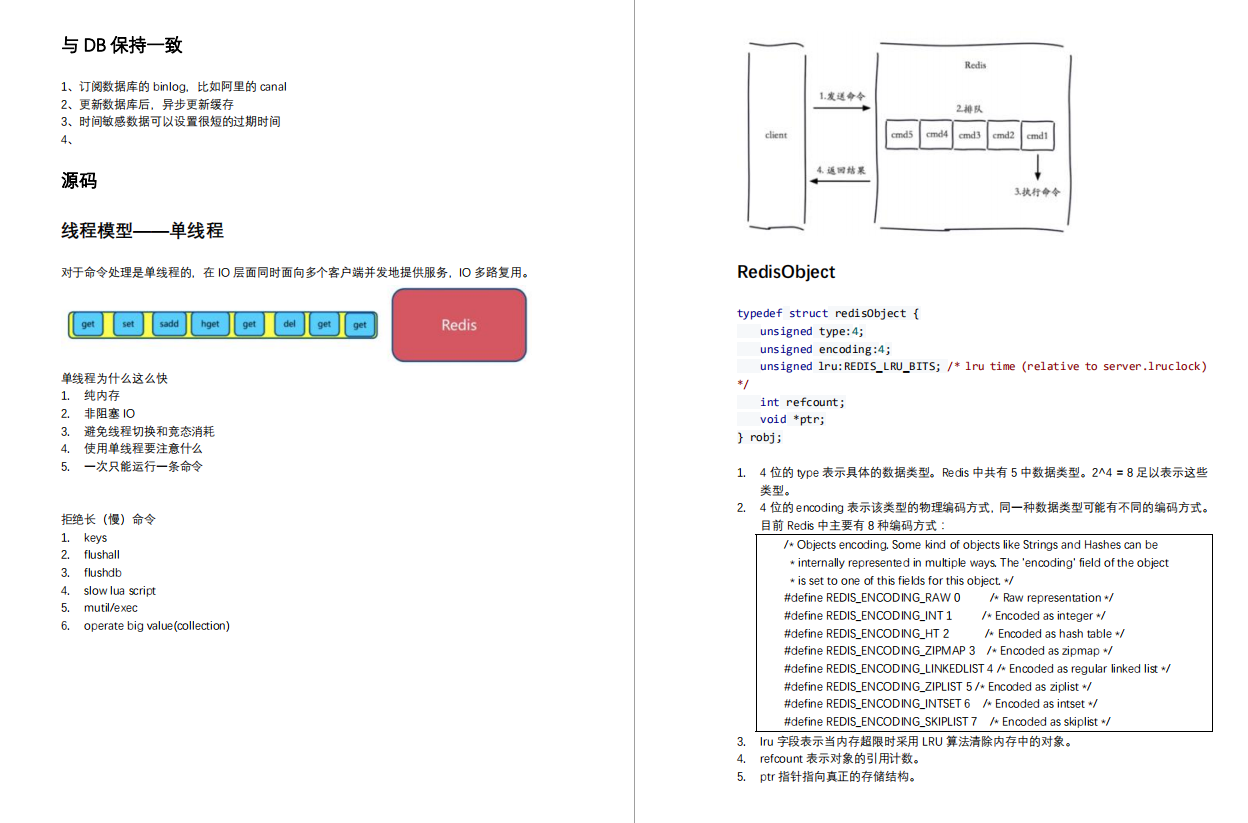

The class diagram structure of NettyClient is similar to that of NettyServer:

[image upload failed... (image-881bf2-1614247162099)]

Let's look at the constructor of NettyClient:

public NettyClient(final URL url, final ChannelHandler handler) throws RemotingException {
    // you can customize name and type of client thread pool by THREAD_NAME_KEY and THREADPOOL_KEY in CommonConstants.
    // the handler will be wrapped: MultiMessageHandler->HeartbeatHandler->handler
    super(url, wrapChannelHandler(url, handler));
}

The constructor calls the parent constructor directly, and wrapChannelHandler wraps the handler the same way as in NettyServer, so I won't repeat it. Here is the parent constructor:

public AbstractClient(URL url, ChannelHandler handler) throws RemotingException {
    super(url, handler);

    needReconnect = url.getParameter(Constants.SEND_RECONNECT_KEY, false);
    // 1. Initialize the client thread pool
    initExecutor(url);

    try {
        doOpen(); // 2. Create the client Bootstrap
    } catch (Throwable t) {
        // Exception handling omitted
    }
    try {
        // 3. Connect to the Netty server
        connect();
    } catch (RemotingException t) {
        // Exception handling omitted
    } catch (Throwable t) {
        close();
        // Rethrow the exception (omitted)
    }
}

There are three main steps, marked in the comments above. Let's follow each of these methods in turn.

1) initExecutor adds the thread pool type to the URL if it is not already set. The client defaults to the cached type, so executorRepository.createExecutorIfAbsent(url) ends up in CachedThreadPool.

private void initExecutor(URL url) {
    url = ExecutorUtil.setThreadName(url, CLIENT_THREAD_POOL_NAME);
    url = url.addParameterIfAbsent(THREADPOOL_KEY, DEFAULT_CLIENT_THREADPOOL);
    executor = executorRepository.createExecutorIfAbsent(url);
}

The code of CachedThreadPool is shown below. The core thread count defaults to 0, the maximum thread count is effectively unlimited (Integer.MAX_VALUE), and the work queue defaults to a SynchronousQueue.

public class CachedThreadPool implements ThreadPool {

    @Override
    public Executor getExecutor(URL url) {
        String name = url.getParameter(THREAD_NAME_KEY, DEFAULT_THREAD_NAME);
        int cores = url.getParameter(CORE_THREADS_KEY, DEFAULT_CORE_THREADS);
        int threads = url.getParameter(THREADS_KEY, Integer.MAX_VALUE);
        int queues = url.getParameter(QUEUES_KEY, DEFAULT_QUEUES);
        int alive = url.getParameter(ALIVE_KEY, DEFAULT_ALIVE);
        return new ThreadPoolExecutor(cores, threads, alive, TimeUnit.MILLISECONDS,
                queues == 0 ? new SynchronousQueue<Runnable>() :
                        (queues < 0 ? new LinkedBlockingQueue<Runnable>()
                                : new LinkedBlockingQueue<Runnable>(queues)),
                new NamedInternalThreadFactory(name, true), new AbortPolicyWithReport(name, url));
    }
}
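Compared with the server's fixed pool, this configuration behaves very differently: with 0 core threads, a SynchronousQueue and an Integer.MAX_VALUE ceiling, every task that finds no idle thread gets a brand-new thread, and idle threads are reclaimed after the alive timeout. The JDK-only sketch below uses the same constructor arguments, with a short keep-alive chosen for the demo and the JDK's AbortPolicy standing in for AbortPolicyWithReport, and reports how many threads the pool creates for n tasks that all block.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class CachedPoolSketch {
    // Same shape as CachedThreadPool#getExecutor with cores=0, threads=MAX, queues=0.
    static ThreadPoolExecutor newCachedPool(long aliveMillis) {
        return new ThreadPoolExecutor(0, Integer.MAX_VALUE, aliveMillis, TimeUnit.MILLISECONDS,
                new SynchronousQueue<Runnable>(), new ThreadPoolExecutor.AbortPolicy());
    }

    // Submits n tasks that all block, then reports how many worker threads exist.
    static int threadsForBlockedTasks(int n) {
        ThreadPoolExecutor pool = newCachedPool(1_000);
        CountDownLatch release = new CountDownLatch(1);
        try {
            for (int i = 0; i < n; i++) {
                pool.execute(() -> {
                    try {
                        release.await(); // keep this thread busy so the next task needs a new one
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            return pool.getPoolSize();
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }
}
```

The client pool thus never rejects for lack of threads under these defaults; the flip side is that a burst of concurrent slow work can create many client threads, whereas the fixed server pool caps the thread count and rejects instead.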

2) doOpen method

NettyClient implements it with ordinary Netty Bootstrap setup; the difference from the server is that a NettyClientHandler is added to the pipeline. Note that this only initializes the Bootstrap; it does not yet connect to the server.

3) connect method

This method eventually reaches NettyClient#doConnect, which calls bootstrap.connect to establish the connection to the server.

2. Client call

When the consumer calls a server interface or receives a result from the server, the corresponding method of NettyClientHandler is triggered, much as with NettyServerHandler. Eventually AllChannelHandler obtains the client thread pool created earlier (the cached type) and uses it for subsequent processing.

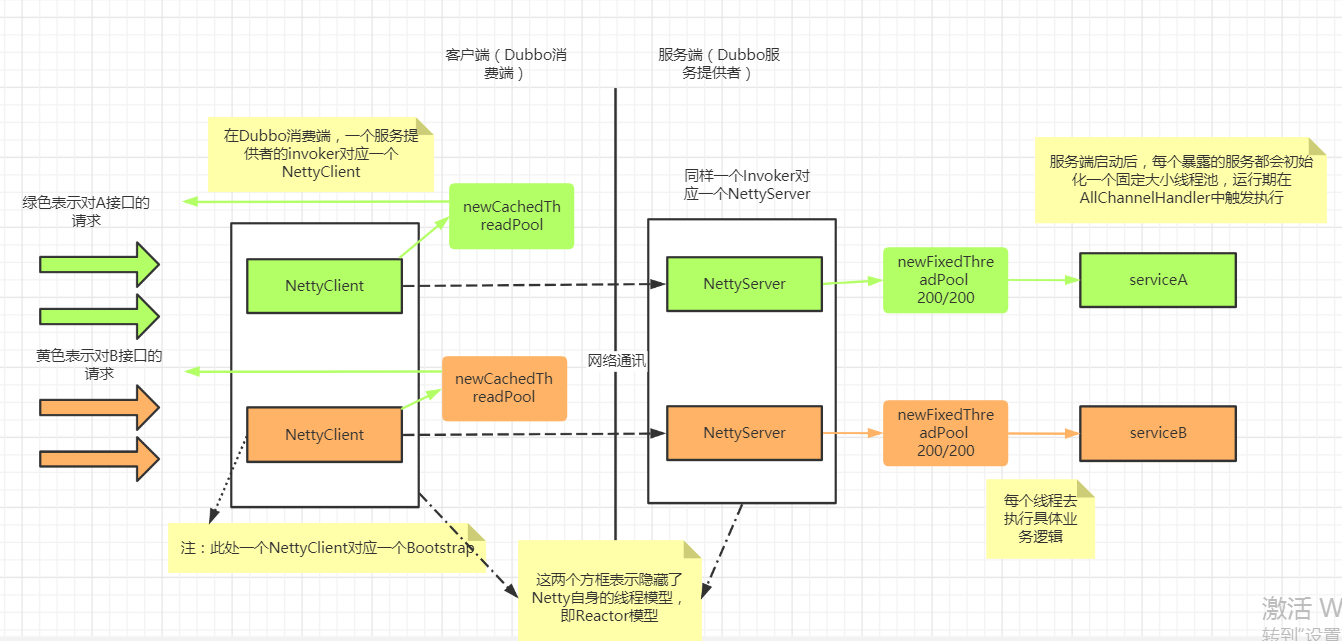

Finally, a schematic diagram is given to summarize the call:

1, Dubbo server

The invocation of netty by Dubbo server starts from service export. At the end of service export, Dubbo protocol #openserver method will be called, which is the initialization of netty server completed in this method (this article is based on the premise of configuring netty communication). Here is the starting point.

1. Server initialization

The source code of the openServer method is as follows. The main logic is to first obtain the address as a string in key ip:port format, and then make a double check. If the server does not exist, call createServer to create one and put it into the serverMap. From here, we can know that an ip + port in dubbo service provider corresponds to a nettyServer, and all nettyservers are maintained in a ConcurrentHashMap. But in fact, usually, the server of a service provider will only expose one port for dubbo. Therefore, although it is saved with Map, there will generally be only one nettyServer. It should also be noted here that dubbo exposes that a service provider executes the export method once, that is, a service provider interface triggers the openServer method once and corresponds to a nettyServer. The following is the creation process of the server.

1 private void openServer(URL url) {

2 // find server.

3 String key = url.getAddress();

4 //client can export a service which's only for server to invoke

5 boolean isServer = url.getParameter(IS_SERVER_KEY, true);

6 if (isServer) {

7 ProtocolServer server = serverMap.get(key);

8 if (server == null) {

9 synchronized (this) {

10 server = serverMap.get(key);

11 if (server == null) {

12 serverMap.put(key, createServer(url));

13 }

14 }

15 } else {

16 // server supports reset, use together with override

17 server.reset(url);

18 }

19 }

20 }

The method stack called by openServer is as follows:

Enter the bind method of NettyTransporter. NettyTransporter has two methods - bind and connect. The former is called when initializing the server, and the latter is triggered when initializing the client. The source code is as follows:

1 public class NettyTransporter implements Transporter {

2 public static final String NAME = "netty";

3 @Override

4 public RemotingServer bind(URL url, ChannelHandler listener) throws RemotingException {

5 return new NettyServer(url, listener);

6 }

7 @Override

8 public Client connect(URL url, ChannelHandler listener) throws RemotingException {

9 return new NettyClient(url, listener);

10 }

11 }

Let's see how NettyServer is associated with netty. Let's take a look at the class diagram of NettyServer:

Experienced gardeners can guess from the class diagram that this is the hierarchical abstraction commonly used in the source code framework. AbstractServer is an abstraction of a template. After inheriting it, other types of communication can be extended, such as MinaServer and GrizzlyServer. Let's go back to NettyServer, the protagonist of this article, and take a look at its constructor:

1 public NettyServer(URL url, ChannelHandler handler) throws RemotingException {

2 super(ExecutorUtil.setThreadName(url, SERVER_THREAD_POOL_NAME), ChannelHandlers.wrap(handler, url));

3 }

Set the thread name parameters in url, encapsulate handler and url, and then call the constructor of parent class AbstractServer.

Here, you need to determine the handler type of the input parameter and the handler type passed to the parent class constructor. The NettyServer constructor input parameter ChannelHandler is encapsulated in HeaderExchanger#bind in the following way:

1 public ExchangeServer bind(URL url, ExchangeHandler handler) throws RemotingException {

2 return new HeaderExchangeServer(Transporters.bind(url, new DecodeHandler(new HeaderExchangeHandler(handler))));

3 }

Further, the implementation class of the input parameter ExchangeHandler of the bind method should be traced back to DubboProtocol, and its member variable requestHandler is as follows:

private ExchangeHandler requestHandler = new ExchangeHandlerAdapter() {

// Omit several rewritten method logic

}

So far, the type of the NettyServer constructor's input parameter ChannelHandler has been confirmed. Its internal final implementation is the ExchangeHandlerAdapter in DubboProtocol, which encapsulates a layer of HeaderExchangeHandler and a layer of DecodeHandler. The diagram is as follows:

After finding out the ChannelHandler of the NettyServer constructor, follow up channelhandlers Wrap method, the final packaging method is as follows:

1 protected ChannelHandler wrapInternal(ChannelHandler handler, URL url) {

2 return new MultiMessageHandler(new HeartbeatHandler(ExtensionLoader.getExtensionLoader(Dispatcher.class)

3 .getAdaptiveExtension().dispatch(handler, url)));

4 }

The Dispatcher defaults to AllDispatcher, and its dispatch method is as follows:

1 public ChannelHandler dispatch(ChannelHandler handler, URL url) {

2 return new AllChannelHandler(handler, url);

3 }

At this point, channelhandlers The ChannelHandler structure obtained after the wrap method is executed is as follows. The decorator mode is adopted and decorated layer by layer.

[image upload failed... (image-ccc763-1614246837626)]

After understanding the wrap method, let's return to the main line and enter the constructor of AbstractServer:

1 public AbstractServer(URL url, ChannelHandler handler) throws RemotingException {

2 // 1. Call the parent class constructor to save these two variables, and finally exist in AbstractPeer

3 super(url, handler);

4 // 2. Set two address es

5 localAddress = getUrl().toInetSocketAddress();

6 String bindIp = getUrl().getParameter(Constants.BIND_IP_KEY, getUrl().getHost());

7 int bindPort = getUrl().getParameter(Constants.BIND_PORT_KEY, getUrl().getPort());

8 if (url.getParameter(ANYHOST_KEY, false) || NetUtils.isInvalidLocalHost(bindIp)) {

9 bindIp = ANYHOST_VALUE;

10 }

11 bindAddress = new InetSocketAddress(bindIp, bindPort);

12 this.accepts = url.getParameter(ACCEPTS_KEY, DEFAULT_ACCEPTS);

13 this.idleTimeout = url.getParameter(IDLE_TIMEOUT_KEY, DEFAULT_IDLE_TIMEOUT);

14 try {

15 // 3. Complete the call of netty source code - open the netty server

16 doOpen();

17 } catch (Throwable t) {

18 // Omit exception handling

19 }

20 // 4. Get / create thread pool

21 executor = executorRepository.createExecutorIfAbsent(url);

22 }

The logic of 1 / 2 is relatively simple, and 3 and 4 are the key points. Next, enter the doOpen method at 3. The doOpen method is an abstract method in AbstractServer, so you should look at its subclass NettyServer:

1 protected void doOpen() throws Throwable {

2 // You can see the familiar netty code here

3 bootstrap = new ServerBootstrap();

4 // bossGroup a thread

5 bossGroup = new NioEventLoopGroup(1, new DefaultThreadFactory("NettyServerBoss", true));

6 // The number of CPU cores taken by the worker group thread is the small value of + 1 and 32

7 workerGroup = new NioEventLoopGroup(getUrl().getPositiveParameter(IO_THREADS_KEY, Constants.DEFAULT_IO_THREADS),

8 new DefaultThreadFactory("NettyServerWorker", true));

9 // ***1. Here, NettyServer is encapsulated into NettyServerHandler to realize the connection between netty and dubbo

10 final NettyServerHandler nettyServerHandler = new NettyServerHandler(getUrl(), this);

11 channels = nettyServerHandler.getChannels();

12 // netty encapsulation

13 bootstrap.group(bossGroup, workerGroup)

14 .channel(NioServerSocketChannel.class)

15 .childOption(ChannelOption.TCP_NODELAY, Boolean.TRUE)

16 .childOption(ChannelOption.SO_REUSEADDR, Boolean.TRUE)

17 .childOption(ChannelOption.ALLOCATOR, PooledByteBufAllocator.DEFAULT)

18 .childHandler(new ChannelInitializer<NioSocketChannel>() {

19 @Override

20 protected void initChannel(NioSocketChannel ch) throws Exception {

21 int idleTimeout = UrlUtils.getIdleTimeout(getUrl());

22 NettyCodecAdapter adapter = new NettyCodecAdapter(getCodec(), getUrl(), NettyServer.this);

23 if (getUrl().getParameter(SSL_ENABLED_KEY, false)) {

24 ch.pipeline().addLast("negotiation",

25 SslHandlerInitializer.sslServerHandler(getUrl(), nettyServerHandler));

26 }

27 ch.pipeline()

28 .addLast("decoder", adapter.getDecoder())

29 .addLast("encoder", adapter.getEncoder())

30 .addLast("server-idle-handler", new IdleStateHandler(0, 0, idleTimeout, MILLISECONDS))

31 .addLast("handler", nettyServerHandler);

32 }

33 });

34 // Bind IP and port. The bindAddress variable in AbstractServer is used here

35 ChannelFuture channelFuture = bootstrap.bind(getBindAddress());

36 channelFuture.syncUninterruptibly();

37 channel = channelFuture.channel();

38 }

One place marked with an asterisk * * * is the key point. See the pipeline below Addlast shows that the NettyServer storing the dubbo logic is encapsulated in the nettyserverhandler and then put into the pipeline. When a client is connected, the corresponding method in the nettyserverhandler will be triggered to enter the dubbo interface call logic. The connector between the dubbo function and the netty framework is the nettyserverhandler class. NettyServer encapsulates a thread pool, that is, after a client is connected, the server uses a thread pool to receive and process a series of requests from the client, that is, a thread pool is added on the basis of netty's original thread model.

Executorrepository in 4 Createexecutorifabsent (URL) is used to generate thread pool. Here is the server. Click the source code to find that a port port corresponds to a thread pool on dubbo's server, and no special parameters are set here. Therefore, the default type of ThreadPool is fixed, that is, the getExecutor method of FixedThreadPool:

1 public Executor getExecutor(URL url) {

2 String name = url.getParameter(THREAD_NAME_KEY, DEFAULT_THREAD_NAME);

3 int threads = url.getParameter(THREADS_KEY, DEFAULT_THREADS);

4 int queues = url.getParameter(QUEUES_KEY, DEFAULT_QUEUES);

5 return new ThreadPoolExecutor(threads, threads, 0, TimeUnit.MILLISECONDS,

6 queues == 0 ? new SynchronousQueue<Runnable>() :

7 (queues < 0 ? new LinkedBlockingQueue<Runnable>()

8 : new LinkedBlockingQueue<Runnable>(queues)),

9 new NamedInternalThreadFactory(name, true), new AbortPolicyWithReport(name, url));

10 }

This method can pay attention to two points: the number of threads is 200 by default, and the blocking queue adopts synchronous queue because queues==0. What does this thread pool do after initialization? After searching the call relationship, it is found that the thread pool will be operated only when the NettyServer is reset or shut down. But it doesn't make sense in theory. You can't create a thread pool just to close it. And look down.

2. Server call

In fact, the thread pool on the server side is an inspiration for bloggers to see the source code. Note that here is to go to the warehouse to obtain a reference to the thread pool (i.e. executorrepository. Createexecutorifiabsent (URL)), and the warehouse creates the thread pool to cache it, and the thread pool reference after caching can also be exposed to other places, Execute the thread pool's execute method elsewhere. Specifically, here is the thread pool that is invoked in AllChannelHandler, such as the connected method. As shown below, the getExecutorService method is to get the thread pool of the server side in the warehouse and encapsulate a ChannelEventRunnable to the thread pool execution. The received method when the server receives the request is the same process.

1 public void connected(Channel channel) throws RemotingException {

2 ExecutorService executor = getExecutorService();

3 try {

4 executor.execute(new ChannelEventRunnable(channel, handler, ChannelState.CONNECTED));

5 } catch (Throwable t) {

6 throw new ExecutionException("connect event", channel, getClass() + " error when process connected event .", t);

7 }

8 }

Let's sort out the processing flow of service invocation in combination with netty's invocation process: after netty's ServerBootstrap is started, the thread in the bossGroup (i.e. Reactor thread) will be started and the run method will be executed all the time. When a client wants to connect, the select method will get a connection event from the operating system. The Reactor thread will create a NioSocketChannel for the connecting party, select a thread from the workerGroup and run the run method. The thread is used to handle the subsequent communication between the server and the client. The nettyServerHandler added to the pipeline here will trigger the corresponding method when the client sends a read-write request. Finally, call the corresponding method in the above AllChannelHandler to execute the subsequent business logic with the thread pool.

2, Dubbo client

1. Client initialization

During initialization, the Dubbo client calls the refer method of RegistryProtocol. After several intermediate calls, it finally reaches the protocolBindingRefer method of DubboProtocol, shown below. The getClients method called on line 5 is where Dubbo integrates with Netty: it creates the clients that connect to the server. Note that each service interface referenced by the client corresponds to one DubboInvoker.

1 public <T> Invoker<T> protocolBindingRefer(Class<T> serviceType, URL url) throws RpcException {
2     optimizeSerialization(url);
3
4     // create rpc invoker.
5     DubboInvoker<T> invoker = new DubboInvoker<T>(serviceType, url, getClients(url), invokers); // getClients(url) supplies the NettyClient(s) this invoker uses
6     invokers.add(invoker);
7
8     return invoker;
9 }
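getClients, called on line 5, supplies the invoker's clients. As far as I know, in Dubbo 2.7 it reuses a shared, reference-counted client per provider address by default and only opens dedicated connections when the connections parameter is set. The sketch below models that sharing under this assumption. SharedClientRegistry and ClientHandle are hypothetical names, not Dubbo classes, and no real connection is opened.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical client handle standing in for a connected NettyClient.
class ClientHandle {
    final String address;
    final AtomicInteger refCount = new AtomicInteger();

    ClientHandle(String address) {
        this.address = address;
    }
}

// Hypothetical registry: one shared client per "ip:port", reference-counted across invokers.
class SharedClientRegistry {
    private final ConcurrentMap<String, ClientHandle> clients = new ConcurrentHashMap<>();
    private final AtomicInteger created = new AtomicInteger();

    ClientHandle acquire(String address) {
        ClientHandle client = clients.computeIfAbsent(address, a -> {
            created.incrementAndGet(); // a real implementation would connect here
            return new ClientHandle(a);
        });
        client.refCount.incrementAndGet(); // one more invoker depends on this client
        return client;
    }

    int createdCount() {
        return created.get();
    }
}
```

With sharing, ten interfaces referenced from the same provider would still use a single TCP connection; the reference count tells the registry when the last invoker has released it.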

Following the call chain further, we arrive at the connect method of NettyTransporter. This class should look familiar, because its bind method is the one called during server initialization: bind creates the NettyServer object, while connect creates the NettyClient object.

public class NettyTransporter implements Transporter {

    public static final String NAME = "netty";

    @Override
    public RemotingServer bind(URL url, ChannelHandler listener) throws RemotingException {
        return new NettyServer(url, listener);
    }

    @Override
    public Client connect(URL url, ChannelHandler listener) throws RemotingException {
        return new NettyClient(url, listener);
    }
}
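bind and connect are two sides of the same Transporter extension, which Dubbo selects by name ("netty" here, matching NAME). The following sketch only illustrates that shape: TransporterSketch, EndpointSketch and TransporterRegistry are hypothetical names, and the map lookup is a stand-in for Dubbo's SPI machinery, not a reproduction of it.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical endpoint type standing in for RemotingServer and Client.
interface EndpointSketch {
    String describe();
}

// Hypothetical transporter contract: bind() yields a server, connect() yields a client.
interface TransporterSketch {
    EndpointSketch bind(String address);

    EndpointSketch connect(String address);
}

class NettyTransporterSketch implements TransporterSketch {
    static final String NAME = "netty";

    @Override
    public EndpointSketch bind(String address) {
        return () -> "server listening on " + address;
    }

    @Override
    public EndpointSketch connect(String address) {
        return () -> "client connected to " + address;
    }
}

// Hypothetical name-based lookup, loosely mirroring how an extension is picked by its name.
class TransporterRegistry {
    private final Map<String, Supplier<TransporterSketch>> factories = new HashMap<>();

    TransporterRegistry() {
        factories.put(NettyTransporterSketch.NAME, NettyTransporterSketch::new);
    }

    TransporterSketch get(String name) {
        Supplier<TransporterSketch> factory = factories.get(name);
        if (factory == null) {
            throw new IllegalArgumentException("No transporter named " + name);
        }
        return factory.get();
    }
}
```

Because both sides go through the same name, switching the whole stack to another transport is a configuration change rather than a code change.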

The class diagram structure of NettyClient is similar to that of NettyServer:

[Figure: class diagram of NettyClient]

Let's look at the constructor of NettyClient:

public NettyClient(final URL url, final ChannelHandler handler) throws RemotingException {
    // you can customize name and type of client thread pool by THREAD_NAME_KEY and THREADPOOL_KEY in CommonConstants.
    // the handler will be wrapped: MultiMessageHandler->HeartbeatHandler->handler
    super(url, wrapChannelHandler(url, handler));
}
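The comment above describes a decorator chain. The sketch below illustrates that nesting with hypothetical classes (HandlerSketch, MultiMessageLayer, HeartbeatLayer are illustrative, not Dubbo's types): the outer layer splits a batch into single messages, the middle layer answers heartbeats so they never reach business code, and everything else is passed inward. As far as I know, in the real chain the handler inside HeartbeatHandler is itself the dispatcher-selected handler (AllChannelHandler by default), which is omitted here.

```java
import java.util.List;

// Hypothetical minimal handler contract; Dubbo's real ChannelHandler has more callbacks.
interface HandlerSketch {
    void received(Object message, List<String> trace);
}

// Innermost business handler.
class BusinessHandler implements HandlerSketch {
    public void received(Object message, List<String> trace) {
        trace.add("business:" + message);
    }
}

// Decorator base: each layer does its own work, then delegates inward.
abstract class DelegatingHandler implements HandlerSketch {
    protected final HandlerSketch delegate;

    DelegatingHandler(HandlerSketch delegate) {
        this.delegate = delegate;
    }
}

// Splits a batch into individual messages (the role MultiMessageHandler plays).
class MultiMessageLayer extends DelegatingHandler {
    MultiMessageLayer(HandlerSketch delegate) { super(delegate); }

    public void received(Object message, List<String> trace) {
        if (message instanceof List) {
            for (Object m : (List<?>) message) {
                delegate.received(m, trace);
            }
        } else {
            delegate.received(message, trace);
        }
    }
}

// Answers heartbeats and stops them here (the role HeartbeatHandler plays).
class HeartbeatLayer extends DelegatingHandler {
    HeartbeatLayer(HandlerSketch delegate) { super(delegate); }

    public void received(Object message, List<String> trace) {
        if ("heartbeat".equals(message)) {
            trace.add("heartbeat answered");
            return;
        }
        delegate.received(message, trace);
    }
}

class HandlerChains {
    // Same nesting order as the constructor comment: MultiMessage -> Heartbeat -> handler.
    static HandlerSketch wrap(HandlerSketch handler) {
        return new MultiMessageLayer(new HeartbeatLayer(handler));
    }
}
```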

The constructor simply calls the parent-class constructor. wrapChannelHandler is the same as in NettyServer, so it is not covered again here. Let's look at the parent-class (AbstractClient) constructor:

public AbstractClient(URL url, ChannelHandler handler) throws RemotingException {
    super(url, handler);

    needReconnect = url.getParameter(Constants.SEND_RECONNECT_KEY, false);
    // 1. Initialize the client thread pool
    initExecutor(url);

    try {
        doOpen(); // 2. Create the client Bootstrap
    } catch (Throwable t) {
        // exception handling omitted
    }
    try {
        // 3. Connect to the Netty server
        connect();
    } catch (RemotingException t) {
        // exception handling omitted
    } catch (Throwable t) {
        close();
        // exception rethrown (omitted)
    }
}
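Structurally, this constructor is a template method: the base class fixes the order of the three steps and subclasses such as NettyClient fill in the transport-specific parts. Here is a hypothetical, stripped-down sketch of that structure; AbstractClientSketch and FakeNettyClient are illustrative names, and the step bodies just record their order instead of doing real work.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical skeleton of the client start-up sequence; not Dubbo's actual AbstractClient.
abstract class AbstractClientSketch {
    // Records the order in which the steps ran, for illustration.
    final List<String> steps = new ArrayList<>();

    AbstractClientSketch() {
        initExecutor();   // 1. thread pool for callbacks
        doOpen();         // 2. transport-specific setup (e.g. a Bootstrap), no connection yet
        try {
            connect();    // 3. establish the connection
        } catch (RuntimeException e) {
            close();      // release resources, then let the caller see the failure
            throw e;
        }
    }

    private void initExecutor() {
        steps.add("initExecutor");
    }

    private void connect() {
        doConnect();
        steps.add("connected");
    }

    void close() {
        steps.add("closed");
    }

    // Hooks supplied by the transport-specific subclass.
    protected abstract void doOpen();

    protected abstract void doConnect();
}

// Hypothetical Netty-flavoured subclass. It declares no fields of its own because the base
// constructor calls these hooks before any subclass field initializers have run.
class FakeNettyClient extends AbstractClientSketch {
    @Override
    protected void doOpen() {
        steps.add("doOpen");
    }

    @Override
    protected void doConnect() {
        steps.add("doConnect");
    }
}
```

Calling overridable hooks from a constructor keeps start-up in one place, but it is also why subclass state must not be relied on inside doOpen or doConnect.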

The constructor has three main steps, marked in its comments. Let's follow each of these methods in turn.

1) The initExecutor method sets the thread name and adds the thread pool type to the URL if none is configured. The client defaults to the cached type, so when executorRepository.createExecutorIfAbsent(url) is called, it ends up in CachedThreadPool.

private void initExecutor(URL url) {
    url = ExecutorUtil.setThreadName(url, CLIENT_THREAD_POOL_NAME);
    url = url.addParameterIfAbsent(THREADPOOL_KEY, DEFAULT_CLIENT_THREADPOOL);
    executor = executorRepository.createExecutorIfAbsent(url);
}
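Note the reassignment url = url.addParameterIfAbsent(...): the URL is treated as immutable, so each call returns a new instance, and an explicit user setting is never overwritten. The sketch below models just that behaviour with a hypothetical UrlParams class; the key "threadpool" and value "cached" are assumed values for THREADPOOL_KEY and DEFAULT_CLIENT_THREADPOOL.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for an immutable URL's parameter map.
final class UrlParams {
    private final Map<String, String> params;

    UrlParams(Map<String, String> params) {
        this.params = new HashMap<>(params); // defensive copy keeps instances immutable
    }

    // Returns this instance unchanged if the key is set, otherwise a new instance with the default.
    UrlParams addParameterIfAbsent(String key, String value) {
        if (params.containsKey(key)) {
            return this;
        }
        Map<String, String> copy = new HashMap<>(params);
        copy.put(key, value);
        return new UrlParams(copy);
    }

    String get(String key) {
        return params.get(key);
    }
}
```

For example, a client URL with no thread pool setting ends up with "cached", while one configured with "fixed" keeps "fixed".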

The code of CachedThreadPool is shown below. With the default parameters, the pool has 0 core threads and an effectively unlimited maximum (Integer.MAX_VALUE), and because the default queue size is 0, its work queue is a SynchronousQueue.

public class CachedThreadPool implements ThreadPool {

    @Override
    public Executor getExecutor(URL url) {
        String name = url.getParameter(THREAD_NAME_KEY, DEFAULT_THREAD_NAME);
        int cores = url.getParameter(CORE_THREADS_KEY, DEFAULT_CORE_THREADS);
        int threads = url.getParameter(THREADS_KEY, Integer.MAX_VALUE);
        int queues = url.getParameter(QUEUES_KEY, DEFAULT_QUEUES);
        int alive = url.getParameter(ALIVE_KEY, DEFAULT_ALIVE);
        return new ThreadPoolExecutor(cores, threads, alive, TimeUnit.MILLISECONDS,
                queues == 0 ? new SynchronousQueue<Runnable>() :
                        (queues < 0 ? new LinkedBlockingQueue<Runnable>()
                                : new LinkedBlockingQueue<Runnable>(queues)),
                new NamedInternalThreadFactory(name, true), new AbortPolicyWithReport(name, url));
    }
}
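The practical effect of these defaults can be checked with a plain java.util.concurrent.ThreadPoolExecutor built the same way. This sketch drops Dubbo's NamedInternalThreadFactory and AbortPolicyWithReport, and the 60-second keep-alive is an assumed stand-in for DEFAULT_ALIVE.

```java
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class CachedPoolDemo {
    // Mirrors the default branch of CachedThreadPool: cores = 0, unbounded max, queues = 0.
    static ThreadPoolExecutor newCachedLikePool() {
        return new ThreadPoolExecutor(
                0,                                   // no core threads: an idle pool shrinks to zero
                Integer.MAX_VALUE,                   // effectively unbounded number of threads
                60_000L, TimeUnit.MILLISECONDS,      // assumed keep-alive for idle threads
                new SynchronousQueue<Runnable>());   // hand-off queue that never buffers tasks
    }
}
```

Because a SynchronousQueue cannot hold a task, every task submitted while all existing threads are busy causes a new thread to be created, so under a burst of slow callbacks the client pool grows instead of queueing.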

2) doOpen method

NettyClient's implementation is a standard Netty client setup; the Dubbo-specific part is that NettyClientHandler is added to the pipeline. Note that this step only initializes the Bootstrap; it does not yet connect to the server.

3) connect method

This method eventually calls the Bootstrap's connect method inside NettyClient's doConnect method, which establishes the connection to the server.
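The split between "configure" (doOpen) and "connect" (doConnect) can be seen with plain java.nio, without Netty. In Netty the analogous split is configuring a Bootstrap (group, channel, handler) and later calling bootstrap.connect(...). The sketch below is an illustrative JDK analogue, not NettyClient's code; it connects to a throwaway server on the loopback interface.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

class OpenThenConnectDemo {
    // Analogue of doOpen: create and configure the channel without connecting it.
    static SocketChannel doOpen() throws IOException {
        SocketChannel channel = SocketChannel.open();
        channel.configureBlocking(true); // explicit for clarity; new channels are blocking by default
        return channel;
    }

    // Analogue of doConnect: the connection to the server is only made here.
    static void doConnect(SocketChannel channel, InetSocketAddress server) throws IOException {
        channel.connect(server);
    }

    // Runs both steps against a loopback server and reports isConnected() after each.
    static boolean[] connectionStates() {
        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress("127.0.0.1", 0)); // port 0: the OS picks a free port
            try (SocketChannel client = doOpen()) {
                boolean afterOpen = client.isConnected();
                doConnect(client, (InetSocketAddress) server.getLocalAddress());
                boolean afterConnect = client.isConnected();
                return new boolean[] {afterOpen, afterConnect};
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Keeping the two steps apart is what lets a client be fully configured once and then connect, or reconnect, later without rebuilding its setup.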

2. Client call

When the consumer sends a request to the server or receives a response from it, the corresponding method of NettyClientHandler is triggered, much as with NettyServerHandler. Finally, AllChannelHandler obtains the client thread pool created earlier (the cached type) and uses it for the subsequent processing.

Finally, here is a schematic diagram summarizing the call flow:

last

Starting with the key architecture of building a key value database, this document not only takes you to establish a global view, but also helps you quickly grasp the core main line. In addition, it will also explain the data structure, thread model, network framework, persistence, master-slave synchronization and slice clustering to help you understand the underlying principles. I believe this is a perfect tutorial for Redis users at all levels.

Fast start channel:( Poke here and download it for free )Sincerity is full!!!

It's not easy to sort out. Friends who feel helpful can help praise, share and support Xiaobian~

Your support is my motivation; I wish you a bright future and continuous offer s!!!
