Implementing an artificial neural network in Python with NumPy

In the previous articles we became familiar with the mathematical principles and the derivation of artificial neural networks. Now it is time to turn theory into practice: we will use Python and its powerful extension library NumPy to write a simple neural network.

Prepare data:

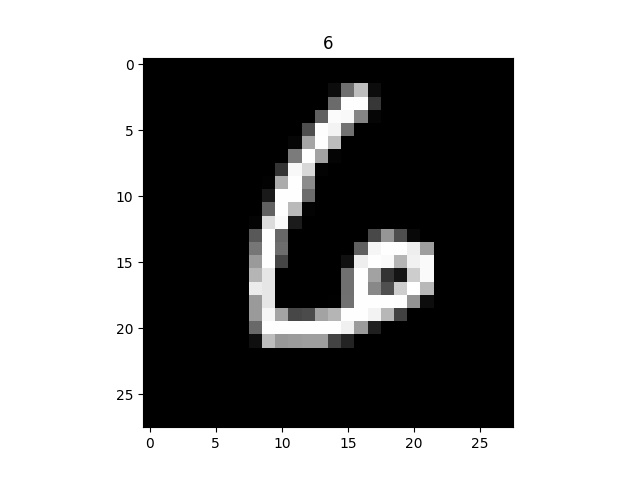

- Training set and test set: the MNIST handwritten digit data set. MNIST is a database of handwritten digits provided by the machine learning researcher Yann LeCun, and it can be downloaded from the official MNIST site. The data set supplies the input signals of the neural network.

Each picture has $28\times 28$ pixels, so it can be passed to the neural network as a $784\times 1$ vector.


- Initial link weight matrix: the weights are sampled from a normal distribution with mean 0 and a standard deviation equal to the reciprocal of the square root of the number of incoming links at the node, $\frac{1}{\sqrt{inputconnects}}$.
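The two preparation steps above can be sketched in a few lines of NumPy. This is only a minimal illustration, not the training code of this article: the record is synthetic rather than read from the MNIST files, and the helper name `init_weights`, the hidden layer size of 100 and the fixed seed are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed (assumption) so the sketch is repeatable

# A synthetic record in the CSV layout used later: label, then 784 pixel values (0-255).
record = "7," + ",".join(str(v) for v in rng.integers(0, 256, size=784))

values = record.split(",")
label = int(values[0])
pixels = np.asarray(values[1:], dtype=float)

# Scale 0..255 into 0.01..1.00 and reshape the 784 values into a column vector.
inputs = (pixels / 255.0 * 0.99 + 0.01).reshape(784, 1)


def init_weights(n_out, n_in, rng):
    """Hypothetical helper: normal weights, mean 0, std 1/sqrt(incoming links)."""
    return rng.normal(0.0, n_in ** -0.5, size=(n_out, n_in))


w_input_hidden = init_weights(100, 784, rng)

# Weighted sum arriving at each of the 100 hidden nodes.
hidden_inputs = w_input_hidden @ inputs
print(label, inputs.shape, w_input_hidden.shape, hidden_inputs.shape)
```

Scaling the standard deviation by the number of incoming links keeps the weighted sums small, so the sigmoid used later is less likely to start out saturated.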

Coding implementation

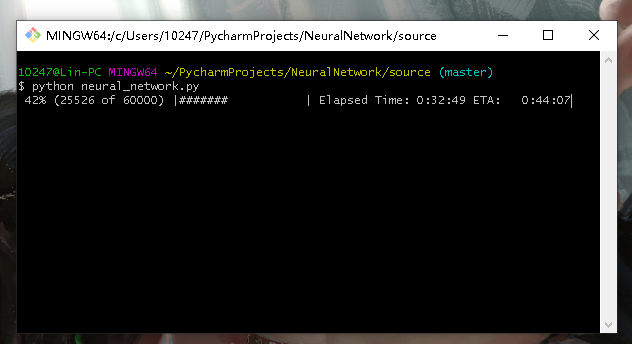

The following code implements a neural network with two hidden layers. However, its performance is not great (at least on the MNIST data set): after training for 5 hours (5 epochs), it only reaches an accuracy of 96.54%. By comparison, a network with a single hidden layer does better on MNIST, reaching an accuracy of 97.34% after 3 hours (5 epochs). You can comment out parts of the code below to return to the single-hidden-layer structure, or extend it to three hidden layers. The code is a bit messy, but every statement has a detailed comment, so please bear with it. It consists of two source files:

- neural_network.py contains the main class of neural networks for training neural networks

- network_test.py is used to test neural networks
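Before reading the full listing, it may help to see why the number of hidden layers is easy to change: the forward pass is the same matrix product followed by a sigmoid, repeated once per weight matrix. The sketch below is an illustration under assumptions, not the code of `neural_network.py`: the function names `make_weights` and `forward` are hypothetical, the input is random, and the sigmoid is written with plain NumPy instead of `scipy.special.expit`.

```python
import numpy as np


def sigmoid(z):
    """Logistic activation function."""
    return 1.0 / (1.0 + np.exp(-z))


def make_weights(layer_sizes, rng):
    """One weight matrix per pair of adjacent layers, each of shape (n_out, n_in)."""
    return [rng.normal(0.0, n_in ** -0.5, size=(n_out, n_in))
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]


def forward(weights, inputs):
    """Return the output signal of every layer, starting with the input itself."""
    signals = [inputs]
    for w in weights:
        signals.append(sigmoid(w @ signals[-1]))
    return signals


rng = np.random.default_rng(0)
x = rng.uniform(0.01, 1.0, size=(784, 1))  # stand-in for one scaled image

one_hidden = make_weights([784, 200, 10], rng)
two_hidden = make_weights([784, 700, 700, 10], rng)
three_hidden = make_weights([784, 700, 700, 100, 10], rng)

for name, weights in [("one hidden layer", one_hidden),
                      ("two hidden layers", two_hidden),
                      ("three hidden layers", three_hidden)]:
    print(name, forward(weights, x)[-1].shape)
```

The listing that follows writes each layer out by hand instead of looping, which is why switching the layer count there means commenting lines in and out.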

neural_network.py

import numpy
import matplotlib.pyplot as plt
import scipy.special
import scipy.ndimage
import time
import progressbar
import matplotlib.animation as anim

# Neural network class definition
class neuralNetwork:

    # Initialise the neural network
    def __init__(self, inputnodes, hiddennodes, hiddennodes_2, outputnodes, learningrate):
        # Set the number of nodes in the input, hidden and output layers
        self.inodes = inputnodes
        self.hnodes = hiddennodes       # Number of nodes in the first hidden layer
        self.hnodes_2 = hiddennodes_2   # Number of nodes in the second hidden layer
        #self.hnodes_3 = hiddennodes_3  # Number of nodes in the third hidden layer
        self.onodes = outputnodes

        # Learning rate
        self.lr = learningrate

        # (Basic version) link weight matrices with uniform random weights between -0.5 and 0.5
        # (three-layer network; not used by train() or query() below)
        self.wih = (numpy.random.rand(hiddennodes, inputnodes) - 0.5)
        self.who = (numpy.random.rand(outputnodes, hiddennodes) - 0.5)

        # (Advanced version) link weight matrices sampled from a normal distribution
        # with mean 0 and a standard deviation of (node count) ** -0.5
        # Weight matrix from the input layer to the first hidden layer
        self.wih_ = numpy.random.normal(0.0, pow(self.hnodes, -0.5), (hiddennodes, inputnodes))
        # Weight matrix from the first hidden layer to the second hidden layer
        self.wh12_ = numpy.random.normal(0.0, pow(self.hnodes_2, -0.5), (hiddennodes_2, hiddennodes))
        # Weight matrix from the second hidden layer to the third hidden layer
        #self.wh23_ = numpy.random.normal(0.0, pow(self.hnodes_3, -0.5), (hiddennodes_3, hiddennodes_2))
        # Weight matrix from the second hidden layer to the output layer
        self.who_ = numpy.random.normal(0.0, pow(self.onodes, -0.5), (outputnodes, hiddennodes_2))

        # Define the activation function (the sigmoid provided by the scipy library)
        self.activation_function = lambda x: scipy.special.expit(x)

    # Train the neural network
    def train(self, inputs_list, targets_list):
        # Convert the input signal list and target signal list into column vectors
        inputs = numpy.array(inputs_list, ndmin=2).T
        targets = numpy.array(targets_list, ndmin=2).T

        # Input signal of the first hidden layer
        hidden_inputs = numpy.dot(self.wih_, inputs)
        # Output signal of the first hidden layer (after the activation function)
        hidden_outputs = self.activation_function(hidden_inputs)

        # Input signal of the second hidden layer
        hidden_inputs_2 = numpy.dot(self.wh12_, hidden_outputs)
        # Output signal of the second hidden layer
        hidden_outputs_2 = self.activation_function(hidden_inputs_2)

        # Input signal of the third hidden layer
        #hidden_inputs_3 = numpy.dot(self.wh23_, hidden_outputs_2)
        # Output signal of the third hidden layer
        #hidden_outputs_3 = self.activation_function(hidden_inputs_3)

        # Input signal of the output layer
        final_inputs = numpy.dot(self.who_, hidden_outputs_2)
        # Output signal of the output layer
        final_outputs = self.activation_function(final_inputs)

        # Calculate the error vector of the output layer
        output_errors = targets - final_outputs
        # Calculate the error vector of the third hidden layer
        #hidden_errors_3 = numpy.dot(self.who_.T, output_errors)
        # Calculate the error vector of the second hidden layer
        hidden_errors_2 = numpy.dot(self.who_.T, output_errors)
        # Calculate the error vector of the first hidden layer
        hidden_errors = numpy.dot(self.wh12_.T, hidden_errors_2)

        # Optimise the link weights
        # Link weights between the third hidden layer and the output layer
        #self.who_ += self.lr * numpy.dot((output_errors * final_outputs * (1.0 - final_outputs)), numpy.transpose(hidden_outputs_3))
        # Link weights between the second hidden layer and the output layer
        self.who_ += self.lr * numpy.dot((output_errors * final_outputs * (1.0 - final_outputs)), numpy.transpose(hidden_outputs_2))
        # Link weights between the first hidden layer and the second hidden layer
        self.wh12_ += self.lr * numpy.dot((hidden_errors_2 * hidden_outputs_2 * (1.0 - hidden_outputs_2)), numpy.transpose(hidden_outputs))
        # Link weights between the input layer and the first hidden layer
        self.wih_ += self.lr * numpy.dot((hidden_errors * hidden_outputs * (1.0 - hidden_outputs)), numpy.transpose(inputs))
        #return self.query(inputs_list)

    # Query the neural network
    def query(self, inputs_list):
        # Convert the input list to a numpy array and then to a column vector
        inputs = numpy.array(inputs_list, ndmin=2).T

        # Input signal of the first hidden layer: the product of the weight matrix and the input vector
        self.hidden_inputs = numpy.dot(self.wih_, inputs)
        # Output signal of the first hidden layer: the sigmoid of the weighted sum
        self.hidden_outputs = self.activation_function(self.hidden_inputs)

        # Input signal of the second hidden layer
        self.hidden_inputs_2 = numpy.dot(self.wh12_, self.hidden_outputs)
        # Output signal of the second hidden layer
        self.hidden_outputs_2 = self.activation_function(self.hidden_inputs_2)

        # Input signal of the third hidden layer
        #self.hidden_inputs_3 = numpy.dot(self.wh23_, self.hidden_outputs_2)
        # Output signal of the third hidden layer
        #self.hidden_outputs_3 = self.activation_function(self.hidden_inputs_3)

        # Input signal of the output layer
        self.final_inputs = numpy.dot(self.who_, self.hidden_outputs_2)
        # Final output signal of the output layer
        self.final_outputs = self.activation_function(self.final_inputs)

        # Return the final output signal
        return self.final_outputs

def test(Network, test_dataset_name):
    # Load the trained link weights saved by the training run
    Network.wih_ = numpy.loadtxt('wih_file.csv')
    Network.wh12_ = numpy.loadtxt('wh12_file.csv')
    #Network.wh23_ = numpy.loadtxt('wh23_file.csv')
    Network.who_ = numpy.loadtxt('who_file.csv')

    # Prepare test data
    with open(test_dataset_name, 'r') as test_data_file:
        test_data_list = test_data_file.readlines()

    print('\n')
    print("Testing...\n")

    # Statistics: overall counts and per-digit counts
    correct_test = 0
    all_test = 0
    correct = [0] * 10
    num_counter = [0] * 10

    # Test progress bar
    p_test = progressbar.ProgressBar()
    p_test.start(len(test_data_list))

    # Animation display
    #plt.figure(1)
    for imag_list in test_data_list:
        all_values = imag_list.split(',')
        label = int(all_values[0])
        scaled_input = (numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
        imag_array = scaled_input.reshape((28, 28))
        #plt.imshow(imag_array, cmap='Greys', animated=True)
        #plt.draw()
        #plt.pause(0.00001)

        # The index of the largest output signal is the network's answer
        net_answer = int(numpy.argmax(Network.query(scaled_input)))
        num_counter[label] += 1
        if label == net_answer:
            correct_test += 1
            correct[label] += 1
        p_test.update(all_test + 1)
        all_test += 1
    p_test.finish()
    print("Finish Test.\n")

    # Network performance
    performance = correct_test / all_test
    Per_num_performance = []
    for i in range(10):
        # The test set may not contain some digits, so catch the division by zero
        try:
            Per_num_performance.append(correct[i] / num_counter[i])
        except ZeroDivisionError:
            Per_num_performance.append(0)
    print("Accuracy per digit: ", Per_num_performance)
    print("Performance of the neural network (%): ", performance * 100)
    return performance

# Define the network size and learning rate
input_nodes = 784
hidden_nodes = 700
hidden_nodes_2 = 700
#hidden_nodes_3 = 100
output_nodes = 10
learningrate = 0.0001

if __name__ == "__main__":
    # Number of training epochs
    epochs = 5

    # Create the neural network instance
    Net = neuralNetwork(input_nodes, hidden_nodes, hidden_nodes_2, output_nodes, learningrate)
    #plt.imshow(final_outputs,interpolation="nearest")

    # Prepare training data
    with open("mnist_train.csv", 'r') as data_file:
        data_list = data_file.readlines()
    N_train = len(data_list)

    # Animation display
    #plt.figure(1)
    print("Training: ", epochs, "epochs...")
    for e in range(epochs):
        # Training progress bar
        print('\nEpoch ' + str(e + 1) + ' training:\n')
        p_train = progressbar.ProgressBar()
        p_train.start(N_train)
        i = 0
        for img_list in data_list:
            # Split the record at the commas
            all_values = img_list.split(',')
            # Map 0-255 to 0.01-1.00
            scaled_input = (numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
            imag_array = scaled_input.reshape((28, 28))
            #plt.imshow(imag_array,cmap='Greys',animated=True)
            #plt.draw()
            #plt.pause(0.00001)

            # Rotate the image by +10 and -10 degrees to generate extra training samples
            input_plus_10imag = scipy.ndimage.rotate(imag_array, 10, cval=0.01, reshape=False)
            input_minus_10imag = scipy.ndimage.rotate(imag_array, -10, cval=0.01, reshape=False)
            input_plus10 = input_plus_10imag.reshape((1, 784))
            input_minus10 = input_minus_10imag.reshape((1, 784))

            # Create the target vector from the label
            targets = numpy.zeros(output_nodes) + 0.01
            targets[int(all_values[0])] = 0.99

            # Train the network on the original image and the two rotated copies
            Net.train(scaled_input, targets)
            Net.train(input_plus10, targets)
            Net.train(input_minus10, targets)
            #time.sleep(0.01)
            p_train.update(i + 1)
            i += 1
        p_train.finish()
    print("\nTraining finished.\n")

    # Save the trained link weights to csv files
    numpy.savetxt('wih_file.csv', Net.wih_, fmt='%f')
    numpy.savetxt('wh12_file.csv', Net.wh12_, fmt='%f')
    #numpy.savetxt('wh23_file.csv',Net.wh23_,fmt='%f')
    numpy.savetxt('who_file.csv', Net.who_, fmt='%f')

network_test.py

import neural_network as nk

# Test the neural network
if __name__ == "__main__":
    input_nodes = nk.input_nodes
    hidden_nodes = nk.hidden_nodes
    hidden_nodes_2 = nk.hidden_nodes_2
    #hidden_nodes_3 = nk.hidden_nodes_3
    output_nodes = nk.output_nodes
    learningrate = nk.learningrate
    Network = nk.neuralNetwork(input_nodes, hidden_nodes, hidden_nodes_2, output_nodes, learningrate)
    nk.test(Network, "mnist_test.csv")
    # hidden_nodes = 200  lr = 0.01  performance = 97.34
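The weight updates in `train()` in neural_network.py follow the same pattern for every layer: the error of a layer is passed back through the transposed weight matrix, and each matrix is nudged by `lr * (error * output * (1 - output)) · previous_output^T`. The sketch below writes that pattern as a loop on a tiny network so it runs in a moment. It is an illustration under assumptions, not the article's code: the function name `train_step`, the layer sizes, the learning rate of 0.1 and the random sample are all made up for the example.

```python
import numpy as np


def sigmoid(z):
    """Logistic activation function."""
    return 1.0 / (1.0 + np.exp(-z))


def train_step(weights, inputs, targets, lr):
    """One forward and backward pass; updates `weights` in place.

    Returns the squared error measured before the update.
    """
    # Forward pass: keep the output signal of every layer.
    signals = [inputs]
    for w in weights:
        signals.append(sigmoid(w @ signals[-1]))

    error = targets - signals[-1]
    loss = float(np.sum(error ** 2))

    # Backward pass, from the output layer towards the input layer.
    for i in reversed(range(len(weights))):
        out = signals[i + 1]
        # Error of the layer below, computed with the weights before they change.
        error_below = weights[i].T @ error
        weights[i] += lr * (error * out * (1.0 - out)) @ signals[i].T
        error = error_below
    return loss


rng = np.random.default_rng(0)
sizes = [4, 5, 5, 3]  # toy network with two hidden layers
weights = [rng.normal(0.0, n_in ** -0.5, size=(n_out, n_in))
           for n_in, n_out in zip(sizes[:-1], sizes[1:])]

x = rng.uniform(0.01, 1.0, size=(4, 1))
t = np.array([[0.01], [0.99], [0.01]])

losses = [train_step(weights, x, t, lr=0.1) for _ in range(500)]
print(f"squared error: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

As in the listing, the hidden-layer errors are obtained by splitting the raw error back along the links, so the squared error on the single sample should shrink as the step is repeated.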

Running the program

- `cd` into the folder that contains the code

- (train the neural network) run the command: python neural_network.py

- (test the neural network) run the command: python network_test.py

[Warning] Before running, make sure that the CSV files of the training set and the test set are in the same directory as the source files. Otherwise, modify the file paths in the source code.
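A quick check before a multi-hour training run can catch a missing or malformed data file early. The sketch below is an optional helper, not part of the two source files: the function name `check_mnist_csv` is hypothetical, and it assumes the layout the listing relies on, namely one record per line with a label from 0 to 9 followed by 784 pixel values.

```python
import csv
from pathlib import Path


def check_mnist_csv(path, expected_fields=785):
    """Hypothetical helper: verify the file exists and every record has
    a digit label plus 784 pixel values. Returns the number of records."""
    path = Path(path)
    if not path.is_file():
        raise FileNotFoundError(f"{path} not found; adjust the path in the source code")

    rows = 0
    with path.open(newline="") as handle:
        for line_number, row in enumerate(csv.reader(handle), start=1):
            if len(row) != expected_fields:
                raise ValueError(
                    f"{path}, line {line_number}: expected {expected_fields} "
                    f"fields, found {len(row)}")
            if not 0 <= int(row[0]) <= 9:
                raise ValueError(f"{path}, line {line_number}: label is not a digit 0-9")
            rows += 1
    return rows


# File names as used in the listing; a problem is reported instead of raised.
for name in ("mnist_train.csv", "mnist_test.csv"):
    try:
        print(name, "records:", check_mnist_csv(name))
    except (FileNotFoundError, ValueError) as exc:
        print("Problem:", exc)
```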