Learning the source code of ConcurrentHashMap

It is recommended to understand the source code of HashMap first and then study ConcurrentHashMap; it will be much easier to follow.

My notes on HashMap are in this post:

HashMap source code learning

Characteristics

- ConcurrentHashMap extends the AbstractMap class and implements the ConcurrentMap and Serializable interfaces

- ConcurrentHashMap is thread-safe (JDK 1.7: segment locks; JDK 1.8: Node array + CAS + synchronized)
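As a quick illustration of the thread-safety claim, the following sketch has several threads increment the same key through `merge`, which ConcurrentHashMap performs atomically. The class name `CounterDemo` and the key `"hits"` are made up for this example.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

final class CounterDemo {
    // Runs `threads` workers that each increment the same key `perThread` times.
    static int countConcurrently(int threads, int perThread) {
        ConcurrentHashMap<String, Integer> map = new ConcurrentHashMap<>();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.execute(() -> {
                for (int i = 0; i < perThread; i++) {
                    map.merge("hits", 1, Integer::sum); // atomic read-modify-write
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("workers did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return map.get("hits");
    }

    public static void main(String[] args) {
        System.out.println(countConcurrently(4, 10_000)); // prints 40000
    }
}
```

With a plain HashMap in place of the ConcurrentHashMap, the same code may lose updates or corrupt the map.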

Seven important parameters

- Maximum array length MAXIMUM_CAPACITY = 1 << 30

- Initial array length DEFAULT_CAPACITY = 16

- Default concurrency level DEFAULT_CONCURRENCY_LEVEL = 16

- Load factor LOAD_FACTOR = 0.75f

- Treeify threshold TREEIFY_THRESHOLD = 8

- Untreeify threshold (tree back to linked list) UNTREEIFY_THRESHOLD = 6

- Minimum array length for treeification MIN_TREEIFY_CAPACITY = 64

Several important methods

Based on jdk1.8

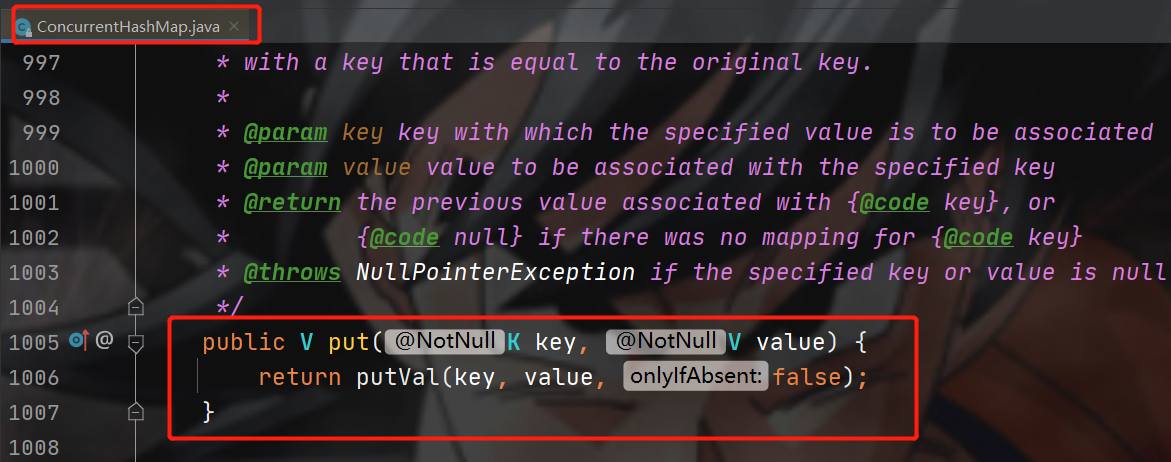

put()

First, look at the put() method, which simply calls putVal() internally.

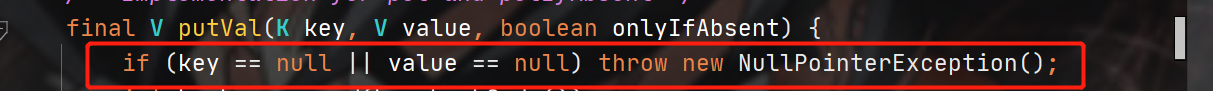

putVal()

    final V putVal(K key, V value, boolean onlyIfAbsent) {
        // Neither the key nor the value of a ConcurrentHashMap may be null
        if (key == null || value == null) throw new NullPointerException();
        // Compute the (spread) hash of the key
        int hash = spread(key.hashCode());
        // binCount records the number of nodes in the bin (list length; fixed at 2 for a tree bin)
        int binCount = 0;
        for (Node<K,V>[] tab = table;;) {
            Node<K,V> f; int n, i, fh;
            // If the Node array has not been created yet, initialize it first
            if (tab == null || (n = tab.length) == 0)
                tab = initTable();
            // Compute the array index for the key and check whether that bin is empty
            else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {
                // Empty bin: add the node with a CAS; if the CAS fails, loop and retry
                if (casTabAt(tab, i, null,
                             new Node<K,V>(hash, key, value, null)))
                    break;                   // no lock when adding to empty bin
            }
            // The head node's hash is MOVED (-1): a resize is in progress and this bin has been migrated
            else if ((fh = f.hash) == MOVED)
                // Help with the data migration, then retry on the new table
                tab = helpTransfer(tab, f);
            // The bin is not empty and is not being migrated
            else {
                V oldVal = null;
                // Lock only the head node of the bin, which is a feature of ConcurrentHashMap
                synchronized (f) {
                    // Re-check that the head node has not changed since it was read
                    if (tabAt(tab, i) == f) {
                        // A head hash >= 0 indicates a linked list
                        if (fh >= 0) {
                            // binCount records the length of the linked list
                            binCount = 1;
                            // Traverse the linked list
                            for (Node<K,V> e = f;; ++binCount) {
                                K ek;
                                // Same key test as in HashMap: equal hash, and same reference or equals()
                                if (e.hash == hash &&
                                    ((ek = e.key) == key ||
                                     (ek != null && key.equals(ek)))) {
                                    oldVal = e.val;
                                    if (!onlyIfAbsent)
                                        e.val = value;
                                    break;
                                }
                                // Reached the tail without a match: append the new node (tail insertion)
                                Node<K,V> pred = e;
                                if ((e = e.next) == null) {
                                    pred.next = new Node<K,V>(hash, key,
                                                              value, null);
                                    break;
                                }
                            }
                        }
                        // The bin is a red-black tree
                        else if (f instanceof TreeBin) {
                            Node<K,V> p;
                            binCount = 2;
                            if ((p = ((TreeBin<K,V>)f).putTreeVal(hash, key,
                                                           value)) != null) {
                                oldVal = p.val;
                                if (!onlyIfAbsent)
                                    p.val = value;
                            }
                        }
                    }
                }
                // binCount != 0 means the bin was processed under the lock
                if (binCount != 0) {
                    // When the list length reaches TREEIFY_THRESHOLD (8), try to treeify the bin
                    if (binCount >= TREEIFY_THRESHOLD)
                        treeifyBin(tab, i);
                    if (oldVal != null)
                        // The key already existed: return the old value
                        return oldVal;
                    break;
                }
            }
        }
        // Update the total element count and check whether a resize is needed
        addCount(1L, binCount);
        return null;
    }
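The locking scheme of putVal() can be sketched in isolation. The class below is a simplified illustration, not the JDK implementation: `MiniBinMap` and its members are hypothetical names, the table has a fixed size, and resizing, treeification, element counting and helpTransfer() are all left out. Only the `spread` formula and the "CAS into an empty bin, otherwise synchronize on the head node" structure follow the code above.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

/** Fixed-size illustration of the putVal() locking scheme (no resize, no trees). */
final class MiniBinMap<K, V> {
    private static final class Node<K, V> {
        final int hash;
        final K key;
        volatile V val;
        volatile Node<K, V> next;
        Node(int hash, K key, V val) { this.hash = hash; this.key = key; this.val = val; }
    }

    private final AtomicReferenceArray<Node<K, V>> table;

    MiniBinMap(int capacity) {
        if (capacity <= 0 || (capacity & (capacity - 1)) != 0)
            throw new IllegalArgumentException("capacity must be a power of two");
        table = new AtomicReferenceArray<>(capacity);
    }

    // Same formula as ConcurrentHashMap.spread(): mix in the high bits, clear the sign bit.
    static int spread(int h) { return (h ^ (h >>> 16)) & 0x7fffffff; }

    V put(K key, V value) {
        if (key == null || value == null) throw new NullPointerException();
        int hash = spread(key.hashCode());
        int i = (table.length() - 1) & hash;
        for (;;) {
            Node<K, V> f = table.get(i);
            if (f == null) {
                // Empty bin: publish the node with a CAS; if another thread wins, loop again.
                if (table.compareAndSet(i, null, new Node<>(hash, key, value)))
                    return null;
            } else {
                synchronized (f) {                 // lock only the head of this bin
                    if (table.get(i) == f) {       // re-check the head (always true here: no removal or resize)
                        for (Node<K, V> e = f;;) {
                            if (e.hash == hash && key.equals(e.key)) {
                                V old = e.val;
                                e.val = value;     // overwrite the existing mapping
                                return old;
                            }
                            Node<K, V> pred = e;
                            if ((e = e.next) == null) {
                                pred.next = new Node<>(hash, key, value); // tail insertion
                                return null;
                            }
                        }
                    }
                }
            }
        }
    }

    // Lock-free read: relies on the volatile fields of Node.
    V get(Object key) {
        int hash = spread(key.hashCode());
        for (Node<K, V> e = table.get((table.length() - 1) & hash); e != null; e = e.next) {
            if (e.hash == hash && key.equals(e.key)) return e.val;
        }
        return null;
    }
}
```

Because two keys that land in different bins never touch the same lock, writers only contend when they hit the same bin.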

For comparison, here is the put operation of a Segment in JDK 1.7:

    final V put(K key, int hash, V value, boolean onlyIfAbsent) {
        // The hash of the key locates the HashEntry bucket in the table of the current Segment.
        // First try to acquire the Segment lock with tryLock(). If that fails, another thread is competing,
        // so scanAndLockForPut() spins to acquire the lock. Once the number of retries reaches
        // MAX_SCAN_RETRIES, it switches to a blocking lock() so that acquisition is guaranteed to succeed.
        HashEntry<K,V> node = tryLock() ? null :
            scanAndLockForPut(key, hash, value);
        V oldValue;
        try {
            HashEntry<K,V>[] tab = table;
            int index = (tab.length - 1) & hash;
            // Fetch the head entry of the bucket at this index
            HashEntry<K,V> first = entryAt(tab, index);
            // e walks the linked list of this bucket
            for (HashEntry<K,V> e = first;;) {
                // e is not null: still inside the list
                if (e != null) {
                    K k;
                    // If the current entry's key equals the given key, overwrite the old value
                    // (unless onlyIfAbsent is set) and finish
                    if ((k = e.key) == key ||
                        (e.hash == hash && key.equals(k))) {
                        oldValue = e.value;
                        if (!onlyIfAbsent) {
                            e.value = value;
                            ++modCount;
                        }
                        break;
                    }
                    e = e.next;
                }
                // e is null: the end of the list was reached without finding the key
                else {
                    // Insert at the head of the list. If scanAndLockForPut() already created a node, reuse it;
                    // otherwise create a new HashEntry. Before inserting, check whether a resize is needed.
                    if (node != null)
                        node.setNext(first);
                    else
                        node = new HashEntry<K,V>(hash, key, value, first);
                    int c = count + 1;
                    // Resize if the threshold is exceeded
                    if (c > threshold && tab.length < MAXIMUM_CAPACITY)
                        rehash(node);
                    else
                        setEntryAt(tab, index, node);
                    ++modCount;
                    count = c;
                    oldValue = null;
                    break;
                }
            }
        } finally {
            // Release the lock
            unlock();
        }
        return oldValue;
    }
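The segment-lock idea can be sketched as follows. This is a simplified illustration under several assumptions, not the JDK 1.7 code: `StripedMap`, `MAX_SPINS` and `segmentFor` are hypothetical names, each segment wraps a plain HashMap instead of a HashEntry array, the segment is chosen from the low bits of the hash (JDK 1.7 uses the high bits), and reads take the lock as well because HashMap is not safe for concurrent reads.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantLock;

/** Lock-striping sketch in the spirit of the JDK 1.7 Segment design. */
final class StripedMap<K, V> {
    private static final int MAX_SPINS = 64; // hypothetical stand-in for MAX_SCAN_RETRIES

    // Each segment is itself a lock, like "Segment extends ReentrantLock" in JDK 1.7.
    private static final class Segment<K, V> extends ReentrantLock {
        final Map<K, V> entries = new HashMap<>();
    }

    private final Segment<K, V>[] segments;

    @SuppressWarnings("unchecked")
    StripedMap(int segmentCount) {
        if (segmentCount <= 0 || (segmentCount & (segmentCount - 1)) != 0)
            throw new IllegalArgumentException("segmentCount must be a power of two");
        segments = (Segment<K, V>[]) new Segment[segmentCount];
        for (int i = 0; i < segmentCount; i++) segments[i] = new Segment<>();
    }

    // First step: the hash selects the segment; the inner HashMap then selects the bucket.
    private Segment<K, V> segmentFor(Object key) {
        int h = key.hashCode();
        h ^= (h >>> 16);
        return segments[h & (segments.length - 1)];
    }

    V put(K key, V value) {
        Segment<K, V> s = segmentFor(key);
        // Spin on tryLock() a bounded number of times, then fall back to a blocking lock().
        int spins = 0;
        while (!s.tryLock()) {
            if (++spins > MAX_SPINS) {
                s.lock();
                break;
            }
        }
        try {
            return s.entries.put(key, value);
        } finally {
            s.unlock();
        }
    }

    V get(Object key) {
        Segment<K, V> s = segmentFor(key);
        s.lock();
        try {
            return s.entries.get(key);
        } finally {
            s.unlock();
        }
    }

    int size() {
        int total = 0;
        for (Segment<K, V> s : segments) {
            s.lock();
            try {
                total += s.entries.size();
            } finally {
                s.unlock();
            }
        }
        return total;
    }
}
```

Writers that map to different segments proceed in parallel; writers that map to the same segment are serialized, which is the coarser granularity that JDK 1.8 later replaced with per-bin locking.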

helpTransfer()

Called when a resize is in progress: the calling thread helps migrate bins to the new array.

treeifyBin()

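Converts the linked list in a bin into a red-black tree. If the array length is still below MIN_TREEIFY_CAPACITY (64), it resizes the array instead.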

get()

    public V get(Object key) {
        Node<K,V>[] tab; Node<K,V> e, p; int n, eh; K ek;
        // Compute the (spread) hash of the key
        int h = spread(key.hashCode());
        // The array is not null, its length is > 0, and the bin at index (n - 1) & h is not empty
        if ((tab = table) != null && (n = tab.length) > 0 &&
            (e = tabAt(tab, (n - 1) & h)) != null) {
            // If the head node has the same hash and its key is the same object or equals() the key, return its value
            if ((eh = e.hash) == h) {
                if ((ek = e.key) == key || (ek != null && key.equals(ek)))
                    return e.val;
            }
            // A negative hash marks a special node (a resize in progress or a red-black tree); delegate to its find()
            else if (eh < 0)
                return (p = e.find(h, key)) != null ? p.val : null;
            // Traverse the linked list
            while ((e = e.next) != null) {
                // Compare keys one by one and return the value on a match
                if (e.hash == h &&
                    ((ek = e.key) == key || (ek != null && key.equals(ek))))
                    return e.val;
            }
        }
        // Not found
        return null;
    }
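The `eh < 0` test works because ordinary hashes are never negative: spread() clears the sign bit, which leaves negative values free for special nodes. The sketch below reproduces the spread formula and the index computation used by get() and putVal(). The class name `HashBits` and the helper `indexFor` are made up for this example; the constant values are those of the JDK 1.8 source.

```java
final class HashBits {
    static final int HASH_BITS = 0x7fffffff; // usable bits of a normal node hash
    static final int MOVED = -1;             // hash of a forwarding node (resize in progress)
    static final int TREEBIN = -2;           // hash of the root holder of a red-black tree bin

    // XOR the high 16 bits into the low 16 bits, then clear the sign bit.
    static int spread(int h) {
        return (h ^ (h >>> 16)) & HASH_BITS;
    }

    // Index of the bin in a table whose length is a power of two.
    static int indexFor(int hash, int tableLength) {
        return (tableLength - 1) & hash;
    }

    public static void main(String[] args) {
        int h = spread("hello".hashCode());
        System.out.println(h >= 0);           // true: normal hashes are never negative
        System.out.println(indexFor(h, 16));  // some index in the range 0..15
        System.out.println(spread(-1));       // 2147418112 (0x7fff0000)
    }
}
```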

Five points for attention

1. Neither the key nor the value of a ConcurrentHashMap can be null (JDK 1.8)

Otherwise a NullPointerException is thrown.
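A small check of this behavior, compared with HashMap, which accepts both a null key and null values. The class name `NullDemo` and the helper `rejectsNull` are made up for this example.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

final class NullDemo {
    // Returns true if put(key, value) is rejected with a NullPointerException.
    static boolean rejectsNull(Map<String, String> map, String key, String value) {
        try {
            map.put(key, value);
            return false;
        } catch (NullPointerException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(rejectsNull(new ConcurrentHashMap<>(), null, "v")); // true
        System.out.println(rejectsNull(new ConcurrentHashMap<>(), "k", null)); // true
        System.out.println(rejectsNull(new HashMap<>(), null, null));          // false
    }
}
```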

2. Differences between ConcurrentHashMap and HashMap (on put):

- If the bin at the computed index is empty, the node is written with CAS. If the CAS fails, the loop spins and retries

- If the bin is not empty, the head node of the bin is locked with synchronized, and the rest of the insertion proceeds as in HashMap

3. Differences between JDK 1.8 and JDK 1.7:

- Red-black trees are added to improve query efficiency in long bins

- The Segment lock (Segment extends ReentrantLock) is replaced by CAS plus the built-in synchronized lock

- The Segment array and the HashEntry arrays are replaced by a single Node[] array

- In JDK 1.7, put and get operations need two hash-based lookups to reach the target HashEntry: the first locates the Segment, the second locates the bucket inside the Segment, and then the HashEntry linked list is traversed

- JDK 1.8 uses CAS to put an element into an empty bin

4. Why does JDK 1.8 use the built-in synchronized lock instead of ReentrantLock?

- The lock granularity is much finer (the head of one bin instead of a whole Segment). At such fine granularity, synchronized performs no worse than ReentrantLock. With coarse-grained locks, ReentrantLock is more flexible because Condition objects can coordinate finer-grained waiting inside the locked region, but that advantage is lost when the lock itself is already fine-grained

- The JVM development team has never given up on synchronized, so JVM-level optimization of synchronized still has more room, and a built-in keyword is more natural to use than an API (since JDK 1.6, synchronized has a lock upgrade mechanism that optimized its performance)

- Under a large number of data operations, an API-based ReentrantLock puts more memory pressure on the JVM than synchronized, which is a built-in Java keyword. This is not a bottleneck, but it is one more basis for the choice

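5. Capacity expansion mechanism

In JDK 1.7, the number of segments is fixed once the map is constructed (16 by default) and cannot be expanded; only the HashEntry array inside each Segment is resized. In JDK 1.8, the Node array is resized. The trigger is essentially the same as in HashMap: once the total number of elements reaches array length * load factor (0.75), the array length is doubled. The array length of 64 matters for treeification rather than for the load-factor check: when a bin reaches TREEIFY_THRESHOLD while the array length is still below MIN_TREEIFY_CAPACITY (64), treeifyBin() doubles the array instead of converting the bin to a red-black tree. Unlike HashMap, other threads that run into a resize can help migrate data through helpTransfer().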
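The arithmetic behind these rules can be sketched as below. `ResizeMath` and its methods are illustrative helpers, not JDK methods; the formula `n - (n >>> 2)` is how JDK 1.8 computes the 0.75 * n threshold when it initializes the table, and the constants are the ones listed earlier.

```java
final class ResizeMath {
    static final int TREEIFY_THRESHOLD = 8;
    static final int MIN_TREEIFY_CAPACITY = 64;

    // Resize threshold for a table of length n: n - n/4 = 0.75 * n.
    static int resizeThreshold(int n) {
        return n - (n >>> 2);
    }

    // A resize doubles the table length.
    static int nextLength(int n) {
        return n << 1;
    }

    // What happens to a bin holding binCount nodes in a table of the given length.
    static String onLongBin(int tableLength, int binCount) {
        if (binCount < TREEIFY_THRESHOLD) return "keep list";
        return tableLength < MIN_TREEIFY_CAPACITY ? "resize table" : "treeify bin";
    }

    public static void main(String[] args) {
        System.out.println(resizeThreshold(16)); // 12
        System.out.println(nextLength(16));      // 32
        System.out.println(onLongBin(16, 8));    // resize table
        System.out.println(onLongBin(64, 8));    // treeify bin
    }
}
```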