Libtorch is the official C++ API of PyTorch, and its usage closely mirrors PyTorch's Python API.

1, Environment configuration

- GitHub address: BASNet

- VS2017+CUDA 10.1+cuDNN 7.6.5+OpenCV 3.4.11

- PyTorch 1.5.0 + torchvision 0.6.0

- Libtorch 1.5.0 (Release): https://pan.baidu.com/s/1Ty-UPZWEOnNRPwldexLZzw (extraction code: 865k)

The Libtorch version you download must match your PyTorch version. The link above is the Release build of Libtorch.

Note:

1. The original author's GitHub repository recommends PyTorch 0.4.0, but Libtorch (TorchScript) is only supported from PyTorch 1.0 onward, so upgrade to PyTorch 1.x to run it.

2. !!! The PyTorch, Libtorch, CUDA and cuDNN versions must all match one another.

3. The PyTorch version used for training (producing the .pth file) should be the same as the one used to convert it to a TorchScript .pt file. Do not train with 0.4.0 and then convert with 1.7.0.
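Notes 2 and 3 come down to comparing version strings before you start. As a minimal sketch, the hypothetical helper below (not part of PyTorch or Libtorch) compares only the major.minor part of two version strings, for example the value of `torch.__version__` printed in Python and the version of the Libtorch package you downloaded. Treating equal major.minor as "matching" is an assumption of this sketch; the CUDA and cuDNN versions would need the same check.

```cpp
#include <string>

// Hypothetical helper: extracts "major.minor" from a version string
// such as "1.5.0" or "1.5.0+cu101".
std::string major_minor(const std::string& version) {
    const std::size_t first = version.find('.');
    if (first == std::string::npos) return version;            // no dot: keep as-is
    const std::size_t second = version.find_first_of(".+", first + 1);
    return version.substr(0, second);                           // npos keeps the rest
}

// Assumption: versions are considered compatible when major.minor agree.
bool versions_match(const std::string& pytorch_version,
                    const std::string& libtorch_version) {
    return major_minor(pytorch_version) == major_minor(libtorch_version);
}
```

For instance, `versions_match("1.5.0", "1.5.0+cu101")` is true, while the 0.4.0 / 1.7.0 combination warned about in note 3 is rejected.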

2, Generating the .pt file with TorchScript

Before the conversion, modify BASNet.py in the model folder (training must already be finished at this point; do not make this change before training). At line 344, forward returns `F.sigmoid(dout), F.sigmoid(d1), F.sigmoid(d2), F.sigmoid(d3), F.sigmoid(d4), F.sigmoid(d5), F.sigmoid(d6), F.sigmoid(db)`; change it to return only the first value, `F.sigmoid(dout)`. If you leave it unchanged, forward in C++ reports an error, because the module then returns a tuple rather than a single tensor. Receiving the tuple on the C++ side (via `IValue::toTuple()`) should also work, but I haven't looked into it.

```python
import torch
from model import BASNet  # BASNet is defined in BASNet.py under the model folder

model_dir = r"./saved_models/basnet_bsi/basnet_best.pth"

model = BASNet(3, 1)  # input channels, number of output classes
model.load_state_dict(torch.load(model_dir))
if torch.cuda.is_available():
    model.cuda()
model.eval()

example = torch.rand(1, 3, 256, 256).cuda()  # example input with the inference shape
traced_script_module = torch.jit.trace(model, example)
traced_script_module.save(r"./basnet.pt")
```

The .cuda() calls are important: they put the model and the example input on the GPU during tracing. With them, the exported model can run on either the CPU or the GPU in C++; without them, it can only run on the CPU.

Reference: Precautions and common errors in the use of TorchScript [2]

3, Libtorch deployment

Libtorch can be configured in two ways: CMake and VS manual configuration. Here I choose VS manual configuration.

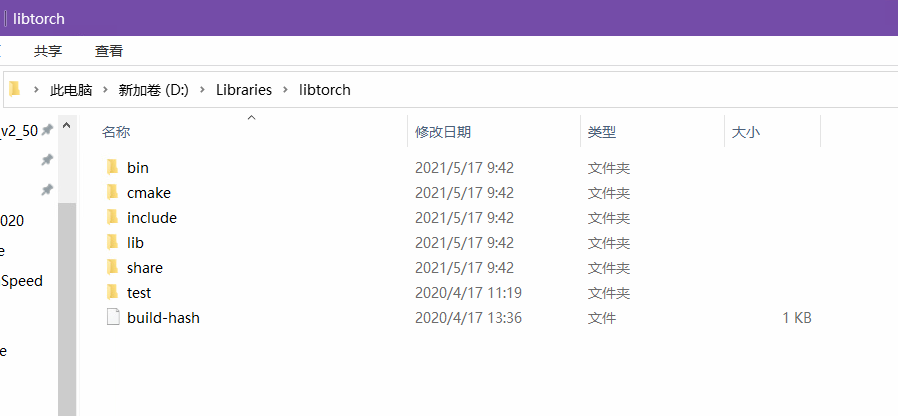

The list of downloaded Libtorch files is as follows:

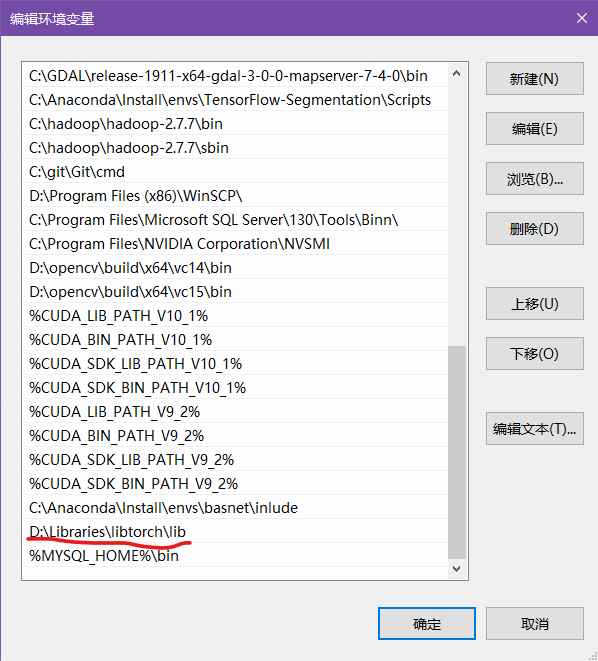

1. Add environment variables

2. Configure the VS project

(1) Create an empty project

(2) Add the required paths

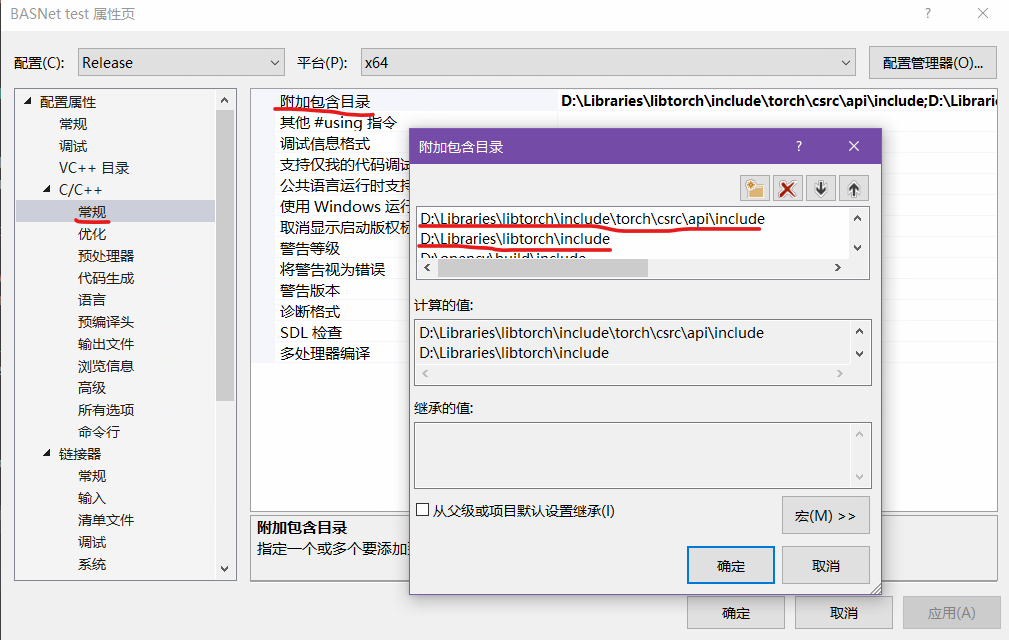

i. Project > Properties > C/C++ > General > Additional Include Directories:

D:\Libraries\libtorch\include
D:\Libraries\libtorch\include\torch\csrc\api\include

The first is needed for `#include <torch/script.h>`, the second for `#include <torch/torch.h>`.

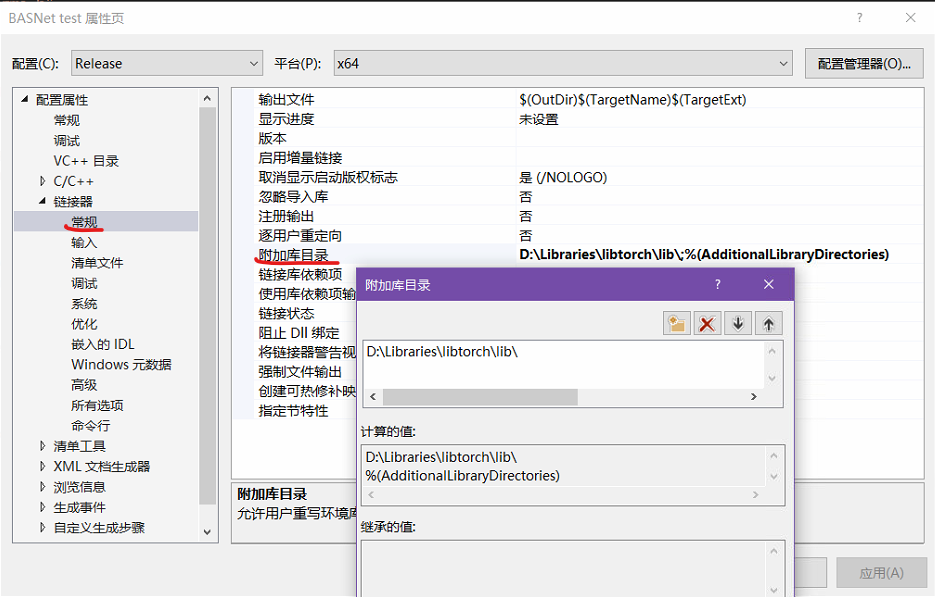

ii. Project > Properties > Linker > General > Additional Library Directories

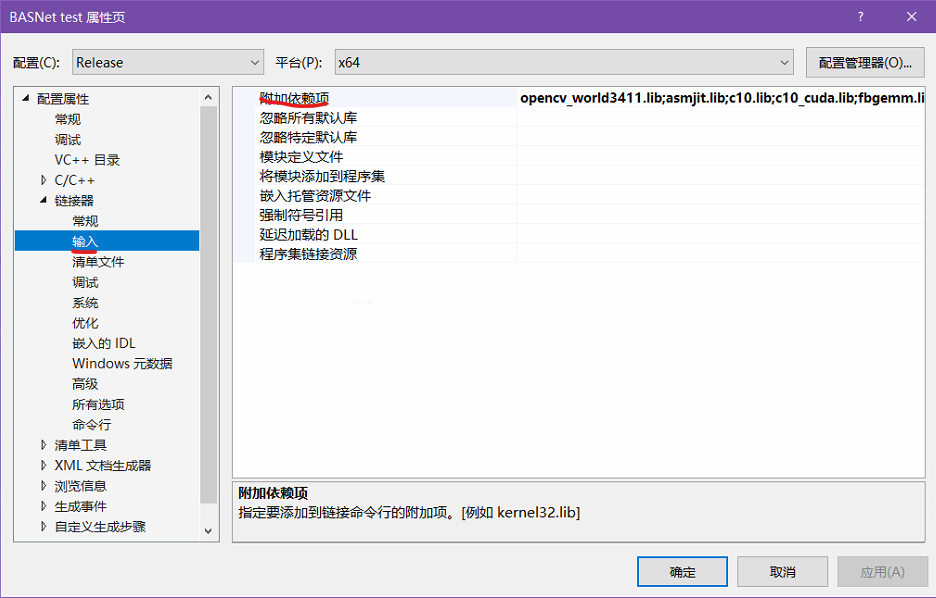

iii. Project > Properties > Linker > Input > Additional Dependencies

If you are not sure which ones you need, you can list every .lib file in the libtorch\lib directory:

```
asmjit.lib
c10.lib
c10_cuda.lib
caffe2_detectron_ops_gpu.lib
caffe2_module_test_dynamic.lib
caffe2_nvrtc.lib
clog.lib
cpuinfo.lib
fbgemm.lib
libprotobuf.lib
libprotobuf-lite.lib
libprotoc.lib
mkldnn.lib
torch.lib
torch_cuda.lib
torch_cpu.lib
```

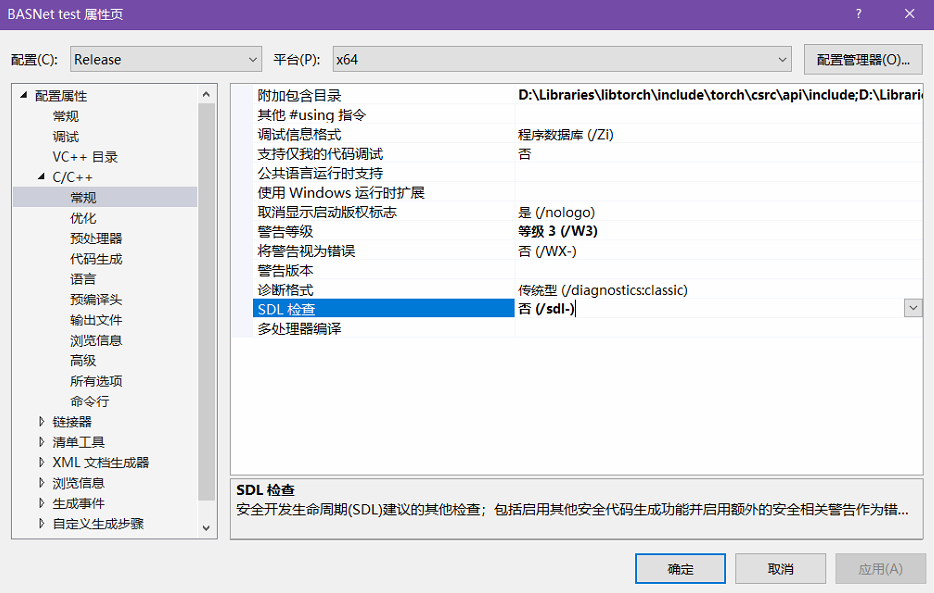

iv. Project > Properties > C/C++ > General > SDL checks: No

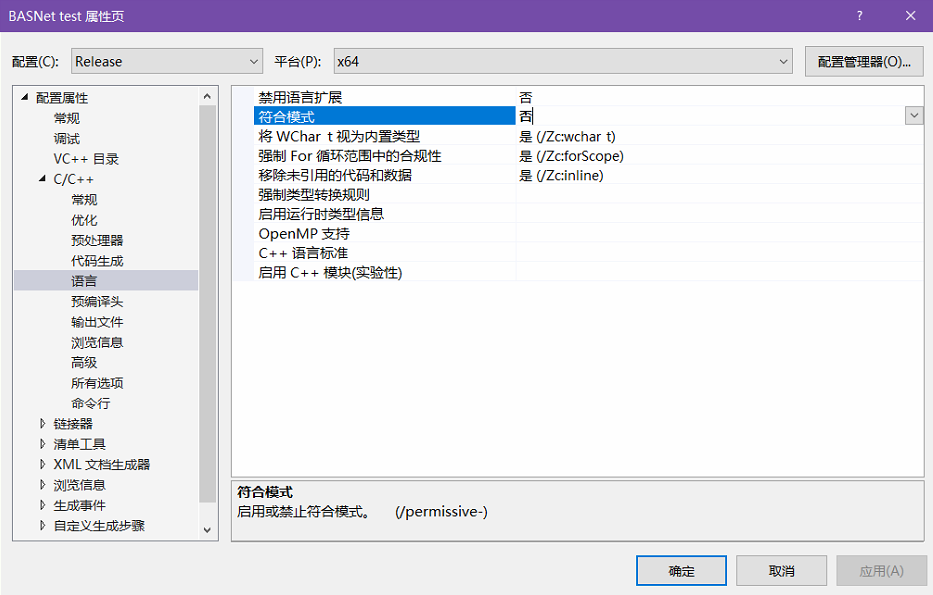

v. Project > Properties > C/C++ > Language > Conformance mode: No

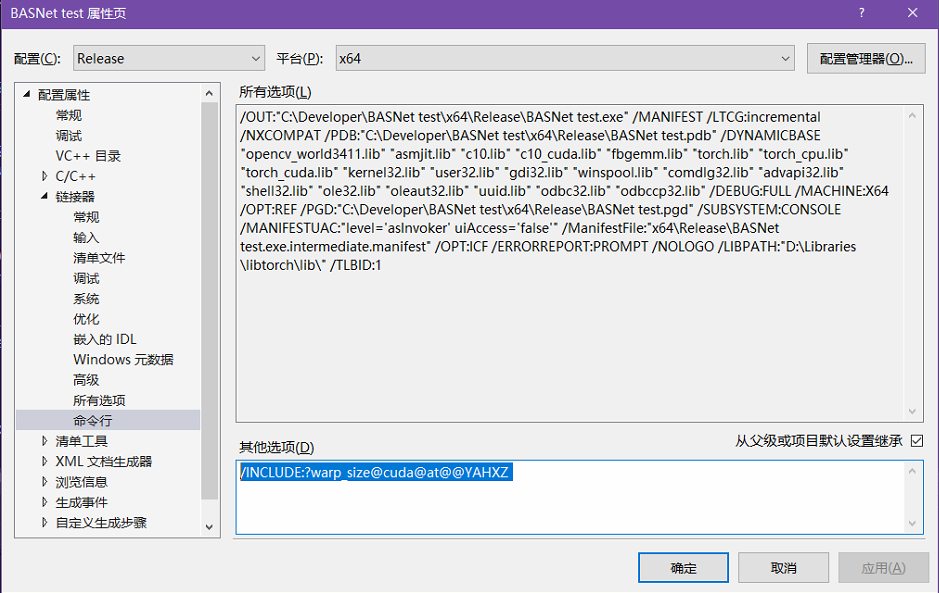

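vi. Project > Properties > Linker > Command Line > Additional Options:

Add `/INCLUDE:?warp_size@cuda@at@@YAHXZ`. This forces the linker to keep torch_cuda as a dependency; without it, MSVC drops torch_cuda.dll because no symbol from it is referenced directly, and torch::cuda::is_available() returns false even on a CUDA machine.

3. Test the configuration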

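```cpp
#include <iostream>

#include "torch/torch.h"
#include "torch/script.h"

int main()
{
    torch::Tensor output = torch::randn({ 3, 2 });
    std::cout << output << std::endl;
    // Also confirms that the /INCLUDE option above made CUDA visible
    std::cout << "CUDA available: " << torch::cuda::is_available() << std::endl;
    return 0;
}
```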

Output:

A random 3 × 2 tensor is printed, so the configuration succeeded!

4, BASNet demo

```cpp
#include "torch/torch.h"
#include "torch/script.h"
#include <opencv2/opencv.hpp>
#include <iostream>
#include <string>

using std::cout;
using std::endl;
using std::string;
using torch::jit::script::Module;

const string image_path = "C:/Developer/BASNet test/0003.jpg";
const string module_path = "C:/Developer/BASNet test/basnet.pt";

int main(int argc, char **argv)
{
    cout << "CUDA Available:" << (torch::cuda::is_available() ? "√" : "×") << endl;
    cout << "cudnn Available:" << (torch::cuda::cudnn_is_available() ? "√" : "×") << endl;

    // Load the TorchScript module and move it to the GPU
    Module module;
    try
    {
        module = torch::jit::load(module_path);
        module.to(torch::kCUDA);
    }
    catch (const c10::Error& e)
    {
        std::cerr << "Error loading the model: " << e.what() << endl;
        return -1;
    }

    // Read the image as cv::Mat and resize it to the 256 x 256 size used for tracing
    cv::Mat image = cv::imread(image_path, cv::ImreadModes::IMREAD_COLOR);
    if (image.empty())
    {
        std::cerr << "Could not read " << image_path << endl;
        return -1;
    }
    int img_h = image.rows; // height of the original image
    int img_w = image.cols; // width of the original image
    cv::cvtColor(image, image, cv::COLOR_BGR2RGB); // OpenCV loads BGR; convert to RGB
    cv::Mat image_transformed;
    cv::resize(image, image_transformed, cv::Size(256, 256));

    // Mat to Tensor: { 256, 256, 3 } uint8, laid out H x W x C
    torch::Tensor tensor_image = torch::from_blob(image_transformed.data,
        { image_transformed.rows, image_transformed.cols, 3 }, torch::kByte);
    tensor_image = tensor_image.toType(torch::kFloat); // convert uint8 to float before dividing by 255
    tensor_image = tensor_image.div(255.0);             // normalize to [0, 1]
    // OpenCV stores images as H x W x C, but PyTorch expects N x C x H x W
    tensor_image = tensor_image.permute({ 2, 0, 1 }).unsqueeze(0);
    tensor_image = tensor_image.to(torch::kCUDA);

    // Predict (no gradients are needed at inference time)
    torch::NoGradGuard no_grad;
    at::Tensor output = module.forward({ tensor_image }).toTensor(); // output size: { 1, 1, 256, 256 }

    at::Tensor predict = output.squeeze(0); // drop the batch dimension -> { 1, 256, 256 }
    predict = predict.mul(255).clamp(0, 255).to(torch::kU8); // undo the normalization, convert to uint8
    // cv::Mat can only wrap host memory, so the tensor must be on the CPU;
    // passing a CUDA tensor's pointer to cv::Mat causes an error
    predict = predict.to(torch::kCPU).contiguous();

    // Tensor to Mat: (1) one channel, so CV_8UC1 (CV_8UC3 fails);
    // (2) the Mat must be 256 x 256 because it wraps the tensor's buffer without copying,
    // so resizing to the original size has to be a separate step
    cv::Mat result(image_transformed.rows, image_transformed.cols, CV_8UC1, predict.data_ptr());
    cv::resize(result, result, cv::Size(img_w, img_h)); // restore the original size

    cv::imshow("result", result);
    cv::imwrite("../prediction.png", result);
    cv::waitKey(0);
    return 0;
}
```

Input:

Output:

done!!!

I'm a newcomer: my project required C++, so I had to pick up Libtorch without any PyTorch background, learning PyTorch along the way. There is little material on Libtorch, and I ran into a lot of pitfalls, so I've recorded them here.

Reference link

[1] Summary of methods for deploying PyTorch (Libtorch) models in C++ (Win10 + VS2017)

[2] Precautions and common errors in the use of TorchScript

[3] Summary of problems and errors in deploying PyTorch (Libtorch) in C++

[4] Examples of common Libtorch API functions (the most complete and detailed ever)

[5] Libtorch knowledge summary