Command overview

This command is designed to work without user interaction.

curl is a tool for transferring data to or from a server.

The supported protocols are DICT, FILE, FTP, FTPS, GOPHER, HTTP, HTTPS, IMAP, IMAPS, LDAP, LDAPS, POP3, POP3S, RTMP, RTSP, SCP, SFTP, SMTP, SMTPS, TELNET and TFTP.

curl offers many useful features, such as proxy support, user authentication, FTP upload, HTTP POST, SSL connections, cookies, resumable file transfers, Metalink and more. As you will see below, the number of features can make your head spin!

Accessed URL

You can specify any number of URLs on the command line. They are fetched in the order given.

You can specify multiple URLs, or parts of URLs, by writing sets of alternatives in braces, such as:

http://site.{one,two,three}.com
# See also
curl http://www.zhangblog.com/2019/06/16/hexo{04,05,06}/ -I   # view the response headers

Or you can use [] to specify alphanumeric sequences, such as:

ftp://ftp.numericals.com/file[1-100].txt
ftp://ftp.numericals.com/file[001-100].txt   # with leading zeros
ftp://ftp.letters.com/file[a-z].txt
# See also
curl http://www.zhangblog.com/2019/06/16/hexo[04-06]/ -I   # view the response headers

Nested sequences are not supported, but several adjacent sequences can be used:

http://any.org/archive[1996-1999]/vol[1-4]/part{a,b,c}.html

You can add a step counter to a range to get every nth number or letter:

http://www.numericals.com/file[1-100:10].txt
http://www.letters.com/file[a-z:2].txt
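To see which URLs a stepped numeric range selects before fetching anything, the expansion can be previewed locally. The following is a minimal sketch, not part of curl: the helper name `expand_numeric_range` is hypothetical, and it only models numeric ranges of the form [START-END:STEP].

```shell
#!/bin/sh
# expand_numeric_range START END [STEP]
# Hypothetical helper: prints the numbers that a range such as
# [START-END:STEP] would visit. curl performs the real expansion itself.
expand_numeric_range() {
    start=$1
    end=$2
    step=${3:-1}
    i=$start
    while [ "$i" -le "$end" ]; do
        echo "$i"
        i=$((i + step))
    done
}

# Preview the files selected by http://www.numericals.com/file[1-100:10].txt
for n in $(expand_numeric_range 1 100 10); do
    echo "http://www.numericals.com/file${n}.txt"
done
```

The preview lists ten URLs, from file1.txt to file91.txt, which is a quick way to check a step value before starting a long batch.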

If you specify a URL without a protocol:// prefix, curl defaults to HTTP, although it first tries to guess the protocol from commonly used host name prefixes.

Common Option 1

curl normally displays a progress meter during operations, showing the amount of data transferred, the transfer speed, the estimated time left and so on.

-#, --progress-bar

Shows curl's progress as a simple progress bar instead of the standard, more informative progress meter.

[root@iZ28xbsfvc4Z 20190702]# curl -O http://www.zhangblog.com/2019/06/16/hexo04/index.html   # Default progress meter
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 97299  100 97299    0     0   186k      0 --:--:-- --:--:-- --:--:--  186k
[root@iZ28xbsfvc4Z 20190702]#
[root@iZ28xbsfvc4Z 20190702]# curl -# -O http://www.zhangblog.com/2019/06/16/hexo04/index.html   # Simple progress bar
######################################################################## 100.0%

-0, --http1.0

(HTTP) Forces curl to make requests using HTTP 1.0 instead of its internal preferred HTTP 1.1.

-1, --tlsv1

(SSL) Forces curl to use TLS version 1.x when negotiating with remote TLS services.

The options --tlsv1.0, --tlsv1.1 and --tlsv1.2 control the TLS version more precisely (if the SSL backend in use supports that level of control).

-2, --sslv2

(SSL) Forces curl to use SSL version 2 when negotiating with a remote SSL server.

-3, --sslv3

(SSL) Forces curl to use SSL version 3 when negotiating with a remote SSL server.

-4, --ipv4

If a name resolves to addresses of more than one IP version (for example, the host supports both IPv4 and IPv6), this option tells curl to resolve names to IPv4 addresses only.

-6, --ipv6

If a name resolves to addresses of more than one IP version (for example, the host supports both IPv4 and IPv6), this option tells curl to resolve names to IPv6 addresses only.

-a, --append

(FTP/SFTP) When used in uploads, this tells curl to append to the target file instead of overwriting it. If the file does not exist, it will be created. Note that some SSH servers, including OpenSSH, ignore this flag.

-A, --user-agent <agent string>

(HTTP) Specifies the User-Agent string to send to the HTTP server. You can also set it with the -H, --header option.

It is used to impersonate a client such as Chrome, Firefox or Internet Explorer.

If this option is used multiple times, the last option will be used.

Imitate browser access

curl -A "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/75.0.3770.999" http://www.zhangblog.com/2019/06/24/domainexpire/

--basic

(HTTP) Tells curl to use HTTP basic authentication. This is the default.

Common Option 2

-b, --cookie <name=data>

(HTTP) Passes the data to the HTTP server as a cookie. It is supposedly the data previously received from the server in a "Set-Cookie:" line. The data should be in the format "NAME1=VALUE1; NAME2=VALUE2".

If no '=' symbol is used in the argument, it is treated as the name of a file from which to read previously stored cookie lines, which are then used in this session if they match.

The file to read cookies from should be in plain HTTP headers format or the Netscape/Mozilla cookie file format.

Note: The file specified with -b, --cookie is used only as input. No cookies are stored in the file. To store cookies, use the -c, --cookie-jar option, or save the HTTP headers to a file with -D, --dump-header.

-c, --cookie-jar <file name>

(HTTP) Specifies the file to which curl writes all cookies after the operation completes.

curl writes all cookies previously read from a specified file as well as all cookies received from the remote server.

If no cookies are known, no file is written. The file is written in the Netscape cookie file format. If you set the file name to a single dash "-", the cookies are written to standard output.

This command-line option activates the cookie engine, which makes curl record and use cookies. Another way to activate it is the -b, --cookie option.

If the cookie jar cannot be created or written to, the curl operation as a whole does not fail, nor is an error reported clearly. Using -v produces a warning, but that is the only visible feedback you get about this potentially fatal situation.

If this option is used multiple times, the last specified filename will be used.
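The two cookie inputs described above (a file in Netscape format, or a "NAME1=VALUE1; NAME2=VALUE2" string) carry the same information. The sketch below builds a small sample jar and derives the string form from it. The domain, cookie names and values are invented for illustration, and `cookie_header` is a hypothetical helper, not a curl feature.

```shell
#!/bin/sh
# Create a sample cookie jar in Netscape format. Each cookie line has seven
# tab-separated fields: domain, include-subdomains flag, path, secure flag,
# expiry time, name, value. All values below are made up.
jar=$(mktemp)
{
    printf '# Netscape HTTP Cookie File\n'
    printf 'example.com\tFALSE\t/\tFALSE\t0\tsession\tabc123\n'
    printf 'example.com\tFALSE\t/\tFALSE\t0\ttheme\tdark\n'
} > "$jar"

# cookie_header FILE
# Hypothetical helper: joins the name and value columns into the
# "NAME1=VALUE1; NAME2=VALUE2" form that -b accepts on the command line.
cookie_header() {
    awk -F '\t' '!/^#/ && NF == 7 { printf "%s%s=%s", sep, $6, $7; sep = "; " } END { print "" }' "$1"
}

cookie_header "$jar"
```

In practice the jar itself is usually passed along, for example by saving cookies with `-c cookies.txt` on a first request and sending them back with `-b cookies.txt` on the next one.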

--connect-timeout <seconds>

Maximum time in seconds allowed for the connection to the server. This limits only the connection phase; once curl has connected, the option no longer applies.

See also: -m, --max-time option.

# https://www.zhangXX.com is an overseas server with restricted access
[root@iZ28xbsfvc4Z ~]# curl --connect-timeout 10 https://www.zhangXX.com | head
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:--  0:00:10 --:--:--     0
curl: (28) Connection timed out after 10001 milliseconds

--create-dirs

When used with the -o option, curl creates the necessary local directory hierarchy as needed.

This option creates only the directories mentioned with the -o option and nothing else. If the -o file name uses no directory, or if the directories it mentions already exist, no directory is created.

Example

curl -o ./hexo04/index.html --create-dirs http://www.zhangblog.com/2019/06/16/hexo04

-C, --continue-at <offset>

Continues/resumes a previous file transfer at the given offset. The offset is the exact number of bytes to skip, counted from the beginning of the source file, before the rest is transferred to the target file.

Use "-C -" (note the space between the two) to tell curl to work out automatically where and how to resume the transfer. It then uses the given output/input files to figure that out.

# Downloads a 2 GB file; interrupt and rerun the command to see the transfer resume
curl -C - -o tmp.data http://www.zhangblog.com/uploads/tmp/tmp.data
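When the offset is given explicitly rather than with "-C -", it is simply the number of bytes already present in the partial file. The sketch below simulates a 1000-byte partial download and prints (without running) the matching explicit command. `resume_offset` is a hypothetical helper and the partial file is a temporary stand-in for tmp.data.

```shell
#!/bin/sh
# resume_offset FILE
# Hypothetical helper: prints the size in bytes of a partially downloaded
# file, which is the value an explicit "-C <offset>" needs.
# Prints 0 if the file does not exist yet.
resume_offset() {
    if [ -f "$1" ]; then
        wc -c < "$1" | tr -d ' '
    else
        echo 0
    fi
}

# Simulate a partial download of 1000 bytes.
partial=$(mktemp)
dd if=/dev/zero of="$partial" bs=1000 count=1 2>/dev/null

offset=$(resume_offset "$partial")
# Print, rather than run, the equivalent explicit resume command.
echo "curl -C $offset -o tmp.data http://www.zhangblog.com/uploads/tmp/tmp.data"
```

Letting curl compute the offset with "-C -" is normally simpler; the explicit form is mainly useful when the offset comes from somewhere other than the output file.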

-d, --data <data>

With this option, the request method defaults to POST.

(HTTP) Sends the specified data in a POST request to the HTTP server, the same way a browser does when a user has filled in an HTML form and pressed the submit button. This makes curl pass the data to the server using the content type application/x-www-form-urlencoded. See also: -F, --form.

If this option is used more than once on the same command line, the data pieces are merged with a separating & symbol.

Thus, using '-d name=daniel -d skill=lousy' generates a POST body that looks like 'name=daniel&skill=lousy'; you can also write the combined form directly.

-d, --data is the same as --data-ascii. To post purely binary data, use the --data-binary option. To URL-encode the value of a form field, use --data-urlencode.

If the data starts with the letter @, the rest should be the name of a file to read the data from, or - if you want curl to read the data from stdin (standard input). The contents of the file must already be URL-encoded. Multiple files can also be specified. Posting data from a file named "foobar" would thus be done with --data @foobar.
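The merging of several -d pieces can be reproduced locally to check what body, and what Content-Length, a request will carry. This is a rough sketch of the joining rule described above; `join_post_data` is a hypothetical helper and does no URL encoding.

```shell
#!/bin/sh
# join_post_data PIECE...
# Hypothetical helper: joins its arguments with "&", mirroring how several
# -d options are merged into a single request body.
join_post_data() {
    body=""
    for piece in "$@"; do
        if [ -z "$body" ]; then
            body=$piece
        else
            body="$body&$piece"
        fi
    done
    printf '%s\n' "$body"
}

merged=$(join_post_data 'name=daniel' 'skill=lousy')
echo "$merged"

# Length in bytes of the merged body, i.e. the expected Content-Length.
printf '%s' "$merged" | wc -c | tr -d ' '
```

For the two pieces shown, the body is `name=daniel&skill=lousy` and its length is 23 bytes. The same calculation for 'user=zhang' and 'pwd=123456' gives 21 bytes.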

Example

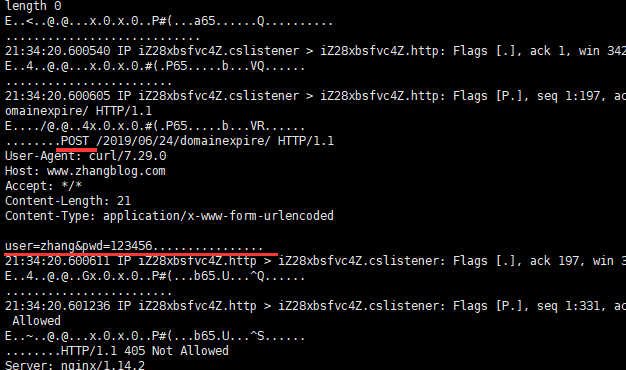

Request information:

[root@iZ28xbsfvc4Z 20190712]# curl -sv --local-port 9000 -d 'user=zhang&pwd=123456' http://www.zhangblog.com/2019/06/24/domainexpire/ | head -n1
* About to connect() to www.zhangblog.com port 80 (#0)
*   Trying 120.27.48.179...
* Local port: 9000
* Connected to www.zhangblog.com (120.27.48.179) port 80 (#0)
> POST /2019/06/24/domainexpire/ HTTP/1.1   # The request method is POST
> User-Agent: curl/7.29.0
> Host: www.zhangblog.com
> Accept: */*
> Content-Length: 21
> Content-Type: application/x-www-form-urlencoded
>
} [data not shown]
* upload completely sent off: 21 out of 21 bytes
< HTTP/1.1 405 Not Allowed
< Server: nginx/1.14.2
< Date: Fri, 12 Jul 2019 13:34:20 GMT
< Content-Type: text/html
< Content-Length: 173
< Connection: keep-alive
<
{ [data not shown]
* Connection #0 to host www.zhangblog.com left intact
<html>

Packet capture information

[root@iZ28xbsfvc4Z tcpdump]# tcpdump -i any port 9000 -A -s 0

--data-ascii <data>

See -d, --data.

--data-binary <data>

(HTTP) Posts the data exactly as specified, with no extra processing whatsoever.

If the data starts with the letter @, the rest should be a file name.

The data is posted in a manner similar to --data-ascii, except that newlines are preserved and no conversions are ever done.

If this option is used several times, the ones following the first append data as described in -d, --data.

--data-urlencode <data>

(HTTP) Posts data, similar to the other --data options, except that it performs URL encoding.

-D, --dump-header <file>

Writes the response headers to the specified file.

If this option is used multiple times, the last option will be used.

This option is handy when you want to store the headers that an HTTP site sends to you.

[root@iZ28xbsfvc4Z 20190703]# curl -D baidu_header.info www.baidu.com
..................
[root@iZ28xbsfvc4Z 20190703]# ll
total 4
-rw-r--r-- 1 root root 400 Jul  3 10:11 baidu_header.info   # Generated header file

A second curl invocation can then read the cookies from the saved headers with the -b, --cookie option. However, the -c, --cookie-jar option is a better way to store cookies.

Common Option 3

--digest

(HTTP) Enables HTTP digest authentication. This is an authentication scheme that prevents the password from being sent over the network in plain text. Combine this option with the normal -u, --user option to set the user name and password.

See also --ntlm, --negotiate and --anyauth for related options.

If this option is used many times, only the first option is used.

-e, --referer <URL>

(HTTP) Sends the "Referer" page information (the page the request came from) to the HTTP server. You can also set it with the -H, --header flag.

If this option is used multiple times, the last option will be used.

curl -e 'https://www.baidu.com' http://www.zhangblog.com/2019/06/24/domainexpire/

-f, --fail

(HTTP) Fails silently (no output at all) on server errors. This is mostly done to let scripts and the like deal better with failed attempts.

Normally, when an HTTP server fails to deliver a document, it returns an HTML document saying so (often also describing why). This flag prevents curl from outputting that document and makes it return error 22 instead.

[root@iZ28xbsfvc4Z 20190713]# curl http://www.zhangblog.com/201912312
<html>
<head><title>404 Not Found</title></head>
<body bgcolor="white">
<center><h1>404 Not Found</h1></center>
<hr><center>nginx/1.14.2</center>
</body>
</html>
[root@iZ28xbsfvc4Z 20190713]# curl -f http://www.zhangblog.com/201912312
curl: (22) The requested URL returned error: 404 Not Found
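Because -f turns an HTTP error into a non-zero exit status, a script can branch on that status instead of parsing HTML. The following sketch covers only the exit codes that appear in this article's listings (22, 28, 51 and 63, as reported by curl 7.29.0). Both function names are hypothetical, and `fetch_quietly` is defined but not called here because it needs network access.

```shell
#!/bin/sh
# describe_curl_exit CODE
# Hypothetical helper: maps the curl exit codes shown in this article to
# short messages. Other codes are reported generically.
describe_curl_exit() {
    case "$1" in
        0)  echo "success" ;;
        22) echo "HTTP error response (reported because of -f)" ;;
        28) echo "operation timed out" ;;
        51) echo "server certificate does not match the host name" ;;
        63) echo "maximum file size exceeded" ;;
        *)  echo "other curl error ($1)" ;;
    esac
}

# fetch_quietly URL FILE
# Hypothetical wrapper: downloads URL to FILE with -f -sS and reports any
# failure on stderr while preserving curl's exit status.
fetch_quietly() {
    curl -f -sS -o "$2" "$1"
    rc=$?
    [ "$rc" -eq 0 ] || echo "fetch failed: $(describe_curl_exit "$rc")" >&2
    return "$rc"
}

describe_curl_exit 22
```

A caller could then write `fetch_quietly "$url" page.html || exit 1` and rely on the message printed to stderr for diagnosis.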

-F, --form <name=content>

(HTTP) Lets curl emulate a filled-in form in which the user has pressed the submit button.

This causes curl to POST the data using the Content-Type multipart/form-data. It also lets you upload binary files and so on.

@filename: attaches the file to the post as a file upload.

<filename: reads the content of a text field from the file.

For example, to send your password file to the server, where "password" is the name of the form field and /etc/passwd is the input:

curl -F password=@/etc/passwd www.mypasswords.com

You can also tell curl which Content-Type to use with 'type=', like this:

curl -F "web=@index.html;type=text/html" url.com
# or
curl -F "name=daniel;type=text/foo" url.com

You can change the file name sent in the upload by setting filename=, as follows:

curl -F "file=@localfile;filename=nameinpost" url.com

The uploaded file name is changed to nameinpost.

If the file name or path contains ',' or ';', it must be enclosed in double quotation marks:

curl -F "file=@\"localfile\";filename=\"nameinpost\"" url.com
# or
curl -F 'file=@"localfile";filename="nameinpost"' url.com

The outermost quotes can be either single or double quotation marks.

This option can be used many times.

Do not use it as follows:

curl -F 'user=zhang&password=pwd' url.com   # This usage is wrong.

--form-string <name=string>

(HTTP) Similar to --form, except that the value string for the named parameter is used literally.

Leading '@' and '<' characters, and the ';type=' string, have no special meaning in the value.

This option is preferred if the string value might accidentally trigger the "@" or "<" features of --form.

-g, --globoff

This option switches off the URL globbing parser. When you set this option, you can specify URLs that contain the characters {}[] without having curl itself interpret them.

Note that these characters are not legal URL content as they stand; they should be encoded according to the URI standard.

-G, --get

With this option, all data specified with -d, --data or --data-binary is used in an HTTP GET request instead of a POST request.

The data is appended to the URL with a '?' separator.

If used in combination with -I, the POST data is instead appended to the URL of a HEAD request.

If this option is used many times, only the first option is used.
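The URL that -G produces can be worked out by hand: the -d pieces are joined with "&" and attached to the URL after a "?". The sketch below models that rule for a URL that has no query string yet. `get_url` is a hypothetical helper, not a curl feature.

```shell
#!/bin/sh
# get_url BASE DATA...
# Hypothetical helper: builds the URL that results from -G by joining the
# data pieces with "&" and appending them to BASE after a "?" separator.
# Assumes BASE does not already contain a query string.
get_url() {
    base=$1
    shift
    query=""
    for piece in "$@"; do
        query="${query:+$query&}$piece"
    done
    printf '%s?%s\n' "$base" "$query"
}

get_url 'http://www.zhangblog.com/2019/06/24/domainexpire/' 'user=zhang' 'pwd=123456'
```

The result is the same URL that can be passed to curl directly in quotes, which is why the two commands in the example that follows produce the same GET request.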

Example

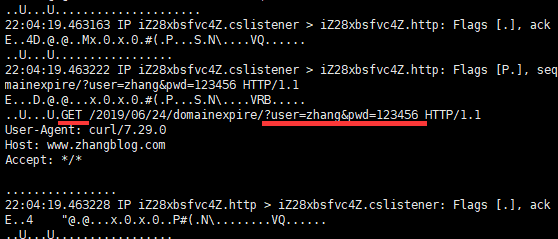

[root@iZ28xbsfvc4Z 20190712]# curl -sv -G --local-port 9000 -d 'user=zhang&pwd=123456' http://www.zhangblog.com/2019/06/24/domainexpire/ | head -n1
# or
[root@iZ28xbsfvc4Z 20190713]# curl -sv --local-port 9000 "http://www.zhangblog.com/2019/06/24/domainexpire/?user=zhang&pwd=123456" | head -n1
* About to connect() to www.zhangblog.com port 80 (#0)
*   Trying 120.27.48.179...
* Local port: 9000
* Connected to www.zhangblog.com (120.27.48.179) port 80 (#0)
> GET /2019/06/24/domainexpire/?user=zhang&pwd=123456 HTTP/1.1   # The request method is GET, and the parameters are appended to the URI
> User-Agent: curl/7.29.0
> Host: www.zhangblog.com
> Accept: */*
>
< HTTP/1.1 200 OK
< Server: nginx/1.14.2
< Date: Fri, 12 Jul 2019 14:04:19 GMT
< Content-Type: text/html
< Content-Length: 51385
< Last-Modified: Tue, 09 Jul 2019 13:55:19 GMT
< Connection: keep-alive
< ETag: "5d249cc7-c8b9"
< Accept-Ranges: bytes
<
{ [data not shown]
* Connection #0 to host www.zhangblog.com left intact
<!DOCTYPE html>

Packet capture information

[root@iZ28xbsfvc4Z tcpdump]# tcpdump -i any port 9000 -A -s 0

-H, --header <header>

(HTTP) The custom request header to be sent to the server.

This option can be used multiple times to add/replace/delete multiple headers.

curl -H 'Connection: keep-alive' -H 'Referer: https://sina.com.cn' -H 'User-Agent: Mozilla/1.0' http://www.zhangblog.com/2019/06/24/domainexpire/

--ignore-content-length

(HTTP) Ignore Content-Length header information.

-i, --include

(HTTP) Includes HTTP header information in the output.

curl -i https://www.baidu.com

-I, --head

(HTTP/FTP/FILE) Fetches the HTTP headers only.

When used on an FTP or FILE URL, curl shows only the file size and the last modification time.

curl -I https://www.baidu.com

-k, --insecure

(SSL) Allows curl to perform "insecure" SSL connections and transfers.

By default, curl tries to secure every SSL connection by verifying it against the installed CA certificate bundle. Connections that fail this check are rejected unless -k, --insecure is used.

Example

[root@iZ28xbsfvc4Z ~]# curl https://140.205.16.113/   # Rejected
curl: (51) Unable to communicate securely with peer: requested domain name does not match the server's certificate.
[root@iZ28xbsfvc4Z ~]#
[root@iZ28xbsfvc4Z ~]# curl -k https://140.205.16.113/   # Allow connections with an untrusted certificate
<!DOCTYPE HTML PUBLIC "-//IETF//DTD HTML 2.0//EN">
<html>
<head><title>403 Forbidden</title></head>
<body bgcolor="white">
<h1>403 Forbidden</h1>
<p>You don't have permission to access the URL on this server.<hr/>Powered by Tengine</body>
</html>

Common Option 4

--keepalive-time <seconds>

Sets how long a connection must stay idle before keepalive probes are sent, and the interval between individual probes. This option has no effect if --no-keepalive is used.

If this option is used multiple times, the last option will be used. If not specified, this option defaults to 60 seconds.

--key <key>

(SSL/SSH) Private key file name. Allows you to provide your private key in this separate file.

For SSH, if not specified, curl tries the following candidates in order: '~/.ssh/id_rsa', '~/.ssh/id_dsa', './id_rsa', './id_dsa'.

If this option is used multiple times, the last option will be used.

--key-type <type>

(SSL) Private key file type. Specifies the type of the private key provided with --key. DER, PEM and ENG are supported. If not specified, PEM is assumed.

If this option is used multiple times, the last option will be used.

-L, --location

(HTTP/HTTPS) Follows redirects.

If the server reports that the requested page has moved to a different location (indicated by a Location: header and a 3XX response code), this option makes curl redo the request at the new location.

If used together with -i, --include or -I, --head, the headers of all requested pages are shown.

[root@iZ28xbsfvc4Z ~]# curl -I -L https://baidu.com/
HTTP/1.1 302 Moved Temporarily   # 302 redirect
Server: bfe/1.0.8.18
Date: Thu, 04 Jul 2019 03:07:15 GMT
Content-Type: text/html
Content-Length: 161
Connection: keep-alive
Location: http://www.baidu.com/

HTTP/1.1 200 OK
Accept-Ranges: bytes
Cache-Control: private, no-cache, no-store, proxy-revalidate, no-transform
Connection: Keep-Alive
Content-Length: 277
Content-Type: text/html
Date: Thu, 04 Jul 2019 03:07:15 GMT
Etag: "575e1f60-115"
Last-Modified: Mon, 13 Jun 2016 02:50:08 GMT
Pragma: no-cache
Server: bfe/1.0.8.18

--limit-rate <speed>

Specifies the maximum transfer rate that curl may use.

This feature is useful if you have a limited pipe and want the transfer not to use all of your bandwidth.

curl --limit-rate 500 http://www.baidu.com/
curl --limit-rate 2k http://www.baidu.com/

Unit: the rate is given in bytes per second unless a suffix is added. Appending "k" or "K" means kilobytes, "m" or "M" means megabytes, and "g" or "G" means gigabytes. For example: 200K, 3m and 1G.
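To compare rate values written with different suffixes, they can be converted to plain bytes per second. The sketch below does this under the assumption that each suffix is a power of 1024; that multiplier is an assumption of this example and is not stated in the text above. `rate_to_bytes` is a hypothetical helper.

```shell
#!/bin/sh
# rate_to_bytes RATE
# Hypothetical helper: converts a --limit-rate style value such as 500, 2k,
# 3m or 1G to bytes per second.
# Assumption: k, m and g are powers of 1024.
rate_to_bytes() {
    number=${1%[kKmMgG]}
    case "$1" in
        *[kK]) echo $((number * 1024)) ;;
        *[mM]) echo $((number * 1024 * 1024)) ;;
        *[gG]) echo $((number * 1024 * 1024 * 1024)) ;;
        *)     echo "$number" ;;
    esac
}

for rate in 500 2k 3m 1G; do
    echo "$rate = $(rate_to_bytes "$rate") bytes/s"
done
```

Under that assumption, 2k corresponds to 2048 bytes per second, so the second command above allows roughly four times the rate of the first.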

The given rate is the average speed calculated throughout the transmission process. This means that curl may use higher transmission speeds in a short time, but over time, it only uses a given rate.

If this option is used multiple times, the last option will be used.

--local-port <num>[-num]

Sets a preferred local port number or port range to use for the connection.

Note that port numbers are by nature a scarce resource and are sometimes busy, so setting this range too narrow can cause unnecessary connection failures.

curl --local-port 9000 http://www.baidu.com/
curl --local-port 9000-9999 http://www.baidu.com/

-m, --max-time <seconds>

The maximum time (in seconds) allowed for the entire operation.

This is useful for preventing batch jobs from hanging for hours because of slow networks or links going down.

See also: --connect-timeout option

[root@iZ28xbsfvc4Z ~]# curl -m 10 --limit-rate 5 http://www.baidu.com/ | head   # Disconnects after 10 seconds
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  2  2381    2    50    0     0      4      0  0:09:55  0:00:10  0:09:45     4
curl: (28) Operation timed out after 10103 milliseconds with 50 out of 2381 bytes received
<!DOCTYPE html>
<!--STATUS OK--><html> <head><met
### or
[root@iZ28xbsfvc4Z ~]# curl -m 10 https://www.zhangXX.com | head   # Disconnects after 10 seconds
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:--  0:00:10 --:--:--     0
curl: (28) Connection timed out after 10001 milliseconds

--max-filesize <bytes>

Specifies the maximum size (in bytes) of the file to download.

If the requested file is larger than this value, the transfer will not start and curl will return exit code 63.

Example

[root@iZ28xbsfvc4Z ~]# curl -I http://www.zhangblog.com/uploads/hexo/00.jpg   # Normal
HTTP/1.1 200 OK
Server: nginx/1.14.2
Date: Thu, 04 Jul 2019 07:24:24 GMT
Content-Type: image/jpeg
Content-Length: 18196
Last-Modified: Mon, 24 Jun 2019 01:43:02 GMT
Connection: keep-alive
ETag: "5d102aa6-4714"
Accept-Ranges: bytes

[root@iZ28xbsfvc4Z ~]# echo $?
0
[root@iZ28xbsfvc4Z ~]#
[root@iZ28xbsfvc4Z ~]#
[root@iZ28xbsfvc4Z ~]# curl --max-filesize 1000 -I http://www.zhangblog.com/uploads/hexo/00.jpg   # Fails because the size limit is exceeded
HTTP/1.1 200 OK
Server: nginx/1.14.2
Date: Thu, 04 Jul 2019 07:24:54 GMT
Content-Type: image/jpeg
curl: (63) Maximum file size exceeded
[root@iZ28xbsfvc4Z ~]#
[root@iZ28xbsfvc4Z ~]# echo $?
63

--max-redirs <num>

Sets the maximum number of redirections to follow.

If -L, --location is also used, this option can be used to prevent curl from following redirections endlessly.

By default, the limit is 50 redirects. Set this option to -1 to make it unlimited.

--no-keepalive

Disables the use of keepalive messages on the TCP connection; curl enables them by default.

Note that this is the negated form of the option name as documented. You can therefore use --keepalive to enforce keepalive.

Common Option 5

-o, --output <file>

Output to a file, not standard output.

If you use {} or [] to fetch multiple documents, you can use '#' followed by a number in the file name. That variable is replaced with the current string for the URL being fetched. For example:

curl http://{one,two}.site.com -o "file_#1.txt"
curl http://{site,host}.host[1-5].com -o "#1_#2"

Example 1

[root@iZ28xbsfvc4Z 20190703]# curl "http://www.zhangblog.com/2019/06/16/hexo{04,05,06}/" -o "file_#1.info"   # Note: the URL must be enclosed in quotes
# or
[root@iZ28xbsfvc4Z 20190703]# curl "http://www.zhangblog.com/2019/06/16/hexo[04-06]/" -o "file_#1.info"   # Note: the URL must be enclosed in quotes
[1/3]: http://www.zhangblog.com/2019/06/16/hexo04/ --> file_04.info
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 97299  100 97299    0     0  1551k      0 --:--:-- --:--:-- --:--:-- 1557k

[2/3]: http://www.zhangblog.com/2019/06/16/hexo05/ --> file_05.info
100 54409  100 54409    0     0   172M      0 --:--:-- --:--:-- --:--:--  172M

[3/3]: http://www.zhangblog.com/2019/06/16/hexo06/ --> file_06.info
100 56608  100 56608    0     0   230M      0 --:--:-- --:--:-- --:--:--  230M
[root@iZ28xbsfvc4Z 20190703]#
[root@iZ28xbsfvc4Z 20190703]# ll
total 212
-rw-r--r-- 1 root root 97299 Jul  4 16:51 file_04.info
-rw-r--r-- 1 root root 54409 Jul  4 16:51 file_05.info
-rw-r--r-- 1 root root 56608 Jul  4 16:51 file_06.info

Example 2

[root@iZ28xbsfvc4Z 20190703]# curl "http://www.{baidu,douban}.com" -o "site_#1.txt"   # Note: the URL must be enclosed in quotes
[1/2]: http://www.baidu.com --> site_baidu.txt
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100  2381  100  2381    0     0  46045      0 --:--:-- --:--:-- --:--:-- 46686

[2/2]: http://www.douban.com --> site_douban.txt
100   162  100   162    0     0   3173      0 --:--:-- --:--:-- --:--:--  3173
[root@iZ28xbsfvc4Z 20190703]#
[root@iZ28xbsfvc4Z 20190703]# ll
total 220
-rw-r--r-- 1 root root 2381 Jul  4 16:53 site_baidu.txt
-rw-r--r-- 1 root root  162 Jul  4 16:53 site_douban.txt
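When a URL contains two glob patterns, #1 and #2 refer to them in order of appearance. The sketch below previews, without any network access, the output names for `curl "http://{site,host}.host[1-5].com" -o "#1_#2"`. The function name is hypothetical, and the iteration order shown (last pattern varying fastest) is an assumption of this example.

```shell
#!/bin/sh
# preview_outputs
# Hypothetical helper: lists the file each expanded URL would be written to
# for   curl "http://{site,host}.host[1-5].com" -o "#1_#2"
# Here #1 is the brace alternative and #2 is the current range value.
preview_outputs() {
    for first in site host; do
        n=1
        while [ "$n" -le 5 ]; do
            echo "http://${first}.host${n}.com --> ${first}_${n}"
            n=$((n + 1))
        done
    done
}

preview_outputs
```

The preview lists ten URL and file name pairs, so every combination of the two patterns gets its own output file.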

-O, --remote-name

Writes the output to a local file named like the remote file. (Only the file part of the remote file is used; the path is cut off.)

The remote file name used for saving is extracted from the given URL and nothing else.

Consequently, the file is saved in the current working directory. If you want the file saved in a different directory, make sure you change the current working directory before invoking curl with -O, --remote-name!

[root@iZ28xbsfvc4Z 20190712]# curl -O https://www.baidu.com   # Incorrect: with -O the URL must name a specific file
curl: Remote file name has no length!
curl: try 'curl --help' or 'curl --manual' for more information
[root@iZ28xbsfvc4Z 20190712]# curl -O https://www.baidu.com/index.html   # Correct: the URL names a specific file
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100  2443  100  2443    0     0  13289      0 --:--:-- --:--:-- --:--:-- 13349

--pass <phrase>

(SSL/SSH) Passphrase for the private key.

If this option is used multiple times, the last option will be used.

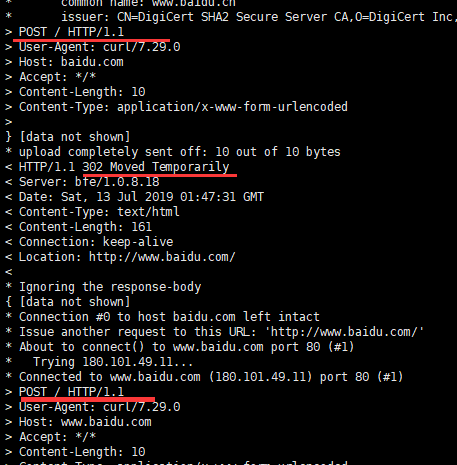

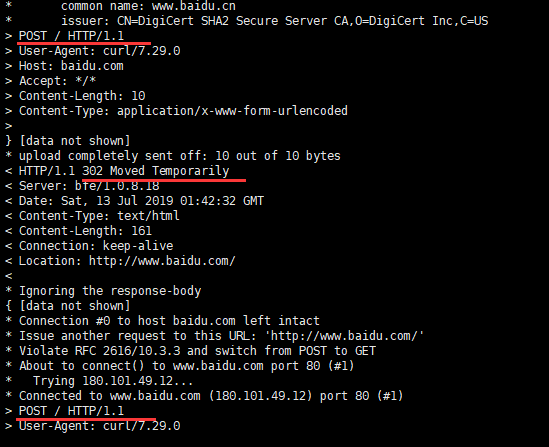

--post301

Tells curl not to convert POST requests into GET requests when following a 301 redirect.

The non-RFC behavior is ubiquitous in web browsers, so curl performs the conversion by default to stay consistent. However, a server may require a POST to remain a POST after the redirection.

This option is meaningful only when -L, --location is used.

--post302

Tells curl not to convert POST requests into GET requests when following a 302 redirect.

The non-RFC behavior is ubiquitous in web browsers, so curl performs the conversion by default to stay consistent. However, a server may require a POST to remain a POST after the redirection.

This option is meaningful only when -L, --location is used.

--post303

Tells curl not to convert POST requests into GET requests when following a 303 redirect.

The non-RFC behavior is ubiquitous in web browsers, so curl performs the conversion by default to stay consistent. However, a server may require a POST to remain a POST after the redirection.

This option is meaningful only when -L, --location is used.

Explanation:

The three options above are meant to keep the original POST request from turning into a GET request during a redirection. There are two ways to achieve this:

1. Use the options above.

2. Use the -X POST option.

Example

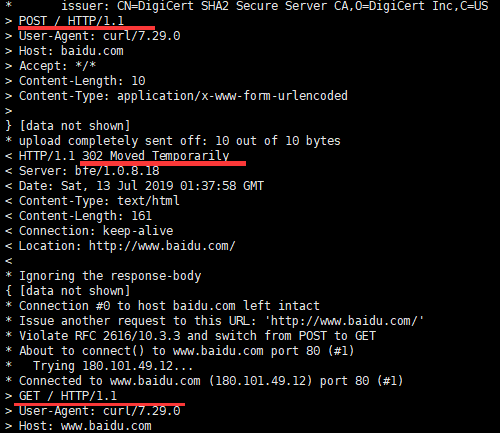

[root@iZ28xbsfvc4Z ~]# curl -Lsv -d 'user=zhang' https://baidu.com | head -n1

The request starts as a POST; after the 302 redirect it becomes a GET.

[root@iZ28xbsfvc4Z ~]# curl -Lsv -d 'user=zhang' --post301 --post302 --post303 https://baidu.com | head -n1

The request is a POST both before and after the redirect, but this takes several options.

[root@iZ28xbsfvc4Z ~]# curl -Lsv -d 'user=zhang' -X POST https://baidu.com | head -n1

The request is a POST both before and after the redirect. This command is recommended.

--pubkey <key>

(SSH) Public key file name. Allows you to provide your public key in this separate file.

If this option is used multiple times, the last option will be used.

-r, --range <range>

(HTTP/FTP/SFTP/FILE) Retrieves a byte range from an HTTP/1.1, FTP or SFTP server or a local file. Ranges can be specified in a number of ways. This is used for segmented downloads.

When a file is large or hard to transfer quickly, downloading it in segments makes the transfer more stable and reliable, and makes it easier to re-fetch only the part that went wrong.

0-499: the first 500 bytes

500-999: the second 500 bytes

-500: the last 500 bytes

9500-: the bytes from offset 9500 onward

0-0,-1: the first and last byte only

500-700,600-799: 300 bytes starting at offset 500

100-199,500-599: two separate 100-byte ranges

Segmented Download

[root@iZ28xbsfvc4Z 20190715]# curl -I http://www.zhangblog.com/uploads/hexo/00.jpg   # View the file size
HTTP/1.1 200 OK
Server: nginx/1.14.2
Date: Mon, 15 Jul 2019 03:23:44 GMT
Content-Type: image/jpeg
Content-Length: 18196   # file size
Last-Modified: Fri, 05 Jul 2019 08:04:58 GMT
Connection: keep-alive
ETag: "5d1f04aa-4714"
Accept-Ranges: bytes
[root@iZ28xbsfvc4Z 20190715]# curl -r 0-499 -o 00-jpg.part1 http://www.zhangblog.com/uploads/hexo/00.jpg
[root@iZ28xbsfvc4Z 20190715]# curl -r 500-999 -o 00-jpg.part2 http://www.zhangblog.com/uploads/hexo/00.jpg
[root@iZ28xbsfvc4Z 20190715]# curl -r 1000- -o 00-jpg.part3 http://www.zhangblog.com/uploads/hexo/00.jpg

View downloaded files

[root@iZ28xbsfvc4Z 20190715]# ll
total 36
-rw-r--r-- 1 root root   500 Jul 15 11:25 00-jpg.part1
-rw-r--r-- 1 root root   500 Jul 15 11:25 00-jpg.part2
-rw-r--r-- 1 root root 17196 Jul 15 11:26 00-jpg.part3

Merge the files

[root@iZ28xbsfvc4Z 20190715]# cat 00-jpg.part1 00-jpg.part2 00-jpg.part3 > 00.jpg
[root@iZ28xbsfvc4Z 20190715]# ll
total 56
-rw-r--r-- 1 root root 18196 Jul 15 11:29 00.jpg
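The segment boundaries used in a segmented download can be computed from the file size instead of being typed by hand. The sketch below splits an 18196-byte file (the Content-Length of 00.jpg) into 8000-byte segments and prints the matching commands without running them. `byte_ranges` is a hypothetical helper and the segment size is arbitrary.

```shell
#!/bin/sh
# byte_ranges TOTAL CHUNK
# Hypothetical helper: prints one "first-last" range per line covering
# TOTAL bytes in CHUNK-sized segments; the final segment may be shorter.
byte_ranges() {
    total=$1
    chunk=$2
    first=0
    while [ "$first" -lt "$total" ]; do
        last=$((first + chunk - 1))
        if [ "$last" -ge "$total" ]; then
            last=$((total - 1))
        fi
        echo "${first}-${last}"
        first=$((last + 1))
    done
}

# Print (not run) the commands for an 18196-byte file in 8000-byte segments.
part=1
for range in $(byte_ranges 18196 8000); do
    echo "curl -r $range -o 00-jpg.part$part http://www.zhangblog.com/uploads/hexo/00.jpg"
    part=$((part + 1))
done
```

The three ranges produced are 0-7999, 8000-15999 and 16000-18195. Concatenating the parts in order with cat restores the original file.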

-R, --remote-time

Makes curl try to get the timestamp of the remote file and, if it is available, give the local file the same timestamp (the modification time).

curl -o nfs1.info -R http://www.zhangblog.com/2019/07/05/nfs1/

--retry <num>

Number of retries in case of transmission problems. Setting the number to 0 prevents curl from retrying (this is the default).

A transient error here means a timeout, an FTP 4xx response code or an HTTP 5xx response code.

When curl is about to retry a transfer, it first waits one second; for each following retry it doubles the waiting time until it reaches 10 minutes, which then is the delay between all remaining retries.
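The retry waiting times follow a simple doubling rule with a cap, which can be tabulated to estimate how long a given retry count may take. The sketch below models the rule exactly as described: start at 1 second, double each time, never exceed 600 seconds. `retry_delays` is a hypothetical helper and does not call curl.

```shell
#!/bin/sh
# retry_delays COUNT
# Hypothetical helper: prints the wait in seconds before each of COUNT
# retries, starting at 1 second, doubling each time, capped at 600 seconds.
retry_delays() {
    count=$1
    delay=1
    i=0
    while [ "$i" -lt "$count" ]; do
        echo "$delay"
        delay=$((delay * 2))
        if [ "$delay" -gt 600 ]; then
            delay=600
        fi
        i=$((i + 1))
    done
}

retry_delays 12 | tr '\n' ' '
echo
```

For 12 retries the waits are 1 2 4 8 16 32 64 128 256 512 600 600 seconds, so the cap is reached at the eleventh retry.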

--retry-delay <seconds>

Sets the time to wait between retries when transfer problems occur. Setting this delay to zero makes curl use the default delay time.

--retry-max-time <seconds>

Sets the maximum total time that retries may take when transfer problems occur. Setting this option to 0 means retries never time out.

Common Option 6

-s, --silent

Silent or quiet mode. The progress meter/bar and error messages are not shown.

Example

[root@iZ28xbsfvc4Z 20190713]# curl https://www.baidu.com | head -n1   # Default progress meter
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100  2443  100  2443    0     0  13346      0 --:--:-- --:--:-- --:--:-- 13349
<!DOCTYPE html>
[root@iZ28xbsfvc4Z 20190713]# curl -s https://www.baidu.com | head -n1
<!DOCTYPE html>

-S, --show-error

When used with -s, makes curl show an error message if it fails.

[root@iZ28xbsfvc4Z 20190713]# curl -s https://140.205.16.113/
[root@iZ28xbsfvc4Z 20190713]#
[root@iZ28xbsfvc4Z 20190713]# curl -sS https://140.205.16.113/
curl: (51) Unable to communicate securely with peer: requested domain name does not match the server's certificate.

--stderr <file>

Redirects error messages to the given file. If the file name is a plain '-', they are written to stdout instead.

If this option is used multiple times, the last option will be used.

[root@iZ28xbsfvc4Z 20190713]# curl --stderr err.info https://140.205.16.113/
[root@iZ28xbsfvc4Z 20190713]# ll
total 92
-rw-r--r-- 1 root root 116 Jul 13 10:19 err.info
[root@iZ28xbsfvc4Z 20190713]# cat err.info
curl: (51) Unable to communicate securely with peer: requested domain name does not match the server's certificate.

-T, --upload-file <file>

This transfers the specified local file to the remote URL. If there is no file part in the specified URL, Curl appends the local file name.

Note: you must end the URL with a trailing / to tell curl that the last path component is a directory and carries no file name. Otherwise curl treats the last directory name as the remote file name to use, which will most likely cause the upload to fail.
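The trailing-slash rule can be illustrated with a small shell function that prints the remote name implied by a given URL. It is only a sketch of the rule as stated above and does not call curl; `upload_target`, `report.txt` and `ftp.example.com` are hypothetical names.

```shell
# upload_target LOCAL_FILE URL: print the remote URL implied by the rule above.
# Hypothetical helper for illustration only; it does not contact any server.
upload_target() {
    local_file=$1
    url=$2
    case $url in
        */) echo "${url}$(basename "$local_file")" ;;  # directory URL: local name is appended
        *)  echo "$url" ;;                             # last component is taken as the remote file name
    esac
}

upload_target report.txt ftp://ftp.example.com/upload/
upload_target report.txt ftp://ftp.example.com/upload
```

The first call prints a URL ending in upload/report.txt. The second prints the URL unchanged, which means "upload" itself would be used as the remote file name.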

When this option is used against an HTTP(S) server, the PUT method is used.

It also supports multiple file uploads, as follows:

curl -T "{file1,file2}" http://www.uploadtothissite.com

or even:

curl -T "img[1-1000].png" ftp://ftp.picturemania.com/upload/

--trace <file>

Write a full debug trace, including all incoming and outgoing data, to the specified file.

This option overrides previous uses of -v, --verbose or --trace-ascii.

If this option is used multiple times, the last option will be used.

curl --trace trace.info https://www.baidu.com

--trace-ascii <file>

Write a full debug trace, including all incoming and outgoing data, to the specified file.

This is very similar to --trace, but leaves out the hexadecimal part and shows only the ASCII part of the dump. The output is smaller and may be easier to read.

This option overrides previous uses of -v, --verbose or --trace.

If this option is used multiple times, the last option will be used.

curl --trace-ascii trace2.info https://www.baidu.com

--trace-time

Prepend a timestamp to each trace or verbose line that curl displays.

curl --trace-ascii trace3.info --trace-time https://www.baidu.com

-v, --verbose

Display detailed operation information. Mainly used for debugging.

Lines beginning with > are header data sent by curl, lines beginning with < are the normally hidden header data received by curl, and lines beginning with * are additional information provided by curl.

    [root@iZ28xbsfvc4Z 20190712]# curl -v https://www.baidu.com
    * About to connect() to www.baidu.com port 443 (#0)
    *   Trying 180.101.49.12...
    * Connected to www.baidu.com (180.101.49.12) port 443 (#0)
    * Initializing NSS with certpath: sql:/etc/pki/nssdb
    *   CAfile: /etc/pki/tls/certs/ca-bundle.crt
      CApath: none
    * SSL connection using TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
    * Server certificate:
    *   subject: CN=baidu.com,O="Beijing Baidu Netcom Science Technology Co., Ltd",OU=service operation department,L=beijing,ST=beijing,C=CN
    *   start date: May 09 01:22:02 2019 GMT
    *   expire date: Jun 25 05:31:02 2020 GMT
    *   common name: baidu.com
    *   issuer: CN=GlobalSign Organization Validation CA - SHA256 - G2,O=GlobalSign nv-sa,C=BE
    > GET / HTTP/1.1
    > User-Agent: curl/7.29.0
    > Host: www.baidu.com
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < Accept-Ranges: bytes
    < Cache-Control: private, no-cache, no-store, proxy-revalidate, no-transform
    < Connection: Keep-Alive
    < Content-Length: 2443
    < Content-Type: text/html
    < Date: Fri, 12 Jul 2019 08:26:23 GMT
    < Etag: "588603eb-98b"
    < Last-Modified: Mon, 23 Jan 2017 13:23:55 GMT
    < Pragma: no-cache
    < Server: bfe/1.0.8.18
    < Set-Cookie: BDORZ=27315; max-age=86400; domain=.baidu.com; path=/
    <
    <!DOCTYPE html>
    ..................   # The rest of the page fetched by curl
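Because every kind of verbose line has its own prefix, the output is easy to filter. The sketch below keeps only the request headers (the > lines). `request_headers` is a hypothetical helper name, and the sample lines fed to it are shortened from the listing above.

```shell
# request_headers: read `curl -v` output on stdin and print only the
# header lines that curl sent, with the leading "> " removed.
# Hypothetical helper for illustration.
request_headers() {
    tr -d '\r' | sed -n 's/^> //p'
}

# Demonstration on a few sample lines instead of a live transfer.
printf '%s\n' \
    '* Connected to www.baidu.com (180.101.49.12) port 443 (#0)' \
    '> GET / HTTP/1.1' \
    '> Host: www.baidu.com' \
    '< HTTP/1.1 200 OK' | request_headers

# Usage against a real server (verbose output goes to stderr, hence 2>&1):
#   curl -sv -o /dev/null https://www.baidu.com 2>&1 | request_headers
```

Changing the pattern to `^< ` would keep the response headers instead.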

-w, --write-out <format>

Defines what to display on stdout after a completed and successful operation.

The following variables are supported; each is written in the format string as %{variable_name}. Refer to the curl documentation for the meaning of each one.

content_type filename_effective ftp_entry_path http_code http_connect local_ip local_port num_connects num_redirects redirect_url remote_ip remote_port size_download size_header size_request size_upload speed_download speed_upload ssl_verify_result time_appconnect time_connect time_namelookup time_pretransfer time_redirect time_starttransfer time_total url_effective

Examples

    [root@iZ28xbsfvc4Z 20190713]# curl -o /dev/null -s -w %{content_type} www.baidu.com   # Output has no trailing newline
    text/html[root@iZ28xbsfvc4Z 20190713]#
    [root@iZ28xbsfvc4Z 20190713]# curl -o /dev/null -s -w %{http_code} www.baidu.com   # Output has no trailing newline
    200[root@iZ28xbsfvc4Z 20190713]#
    [root@iZ28xbsfvc4Z 20190713]# curl -o /dev/null -s -w %{local_port} www.baidu.com   # Output has no trailing newline
    37346[root@iZ28xbsfvc4Z 20190713]#
    [root@iZ28xbsfvc4Z 20190713]#
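Several variables can be combined in one format string, and a `\n` inside the format ends the line so the shell prompt is not glued to the output. A minimal sketch follows; the helper name `transfer_summary` and the choice of variables are assumptions made for illustration.

```shell
# transfer_summary URL: hypothetical helper that discards the body and prints
# the status code plus two timings on a single line.
# The format is single-quoted so the shell leaves %{...} and \n untouched;
# curl itself turns \n into a newline.
transfer_summary() {
    curl -o /dev/null -s \
        -w 'http_code=%{http_code} time_connect=%{time_connect} time_total=%{time_total}\n' \
        "$1"
}

# Usage:
#   transfer_summary www.baidu.com
```

Quoting the format string also avoids surprises in shells that treat braces specially.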

-x, --proxy <[protocol://][user:password@]proxyhost[:port]>

Use the specified HTTP proxy. If no port number is given, port 1080 is assumed.

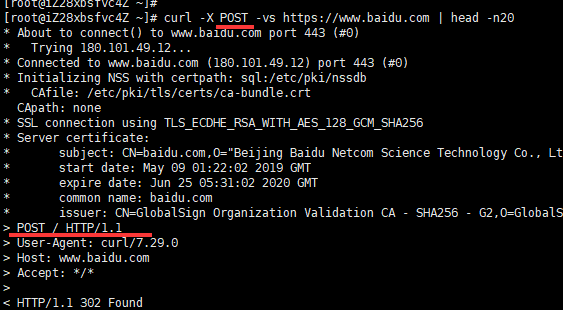

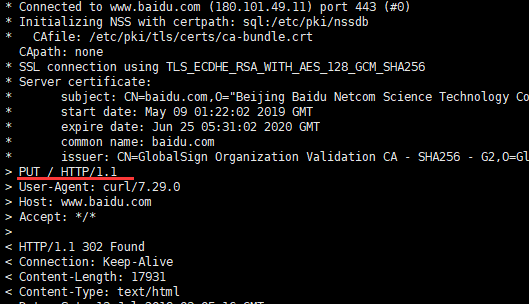

-X, --request <command>

(HTTP) Specifies the request method to use when communicating with the HTTP server. The default is GET.

curl -vs -X POST https://www.baidu.com | head -n1

curl -vs -X PUT https://www.baidu.com | head -n1
