Author: Daoge, a 10+ year embedded development veteran, focusing on C/C++, embedded systems, and Linux.



Other people's experience, our ladder!

Hello, I'm Brother Dao. Today's topic is one of the bottom-half mechanisms of interrupt handling: the work queue.

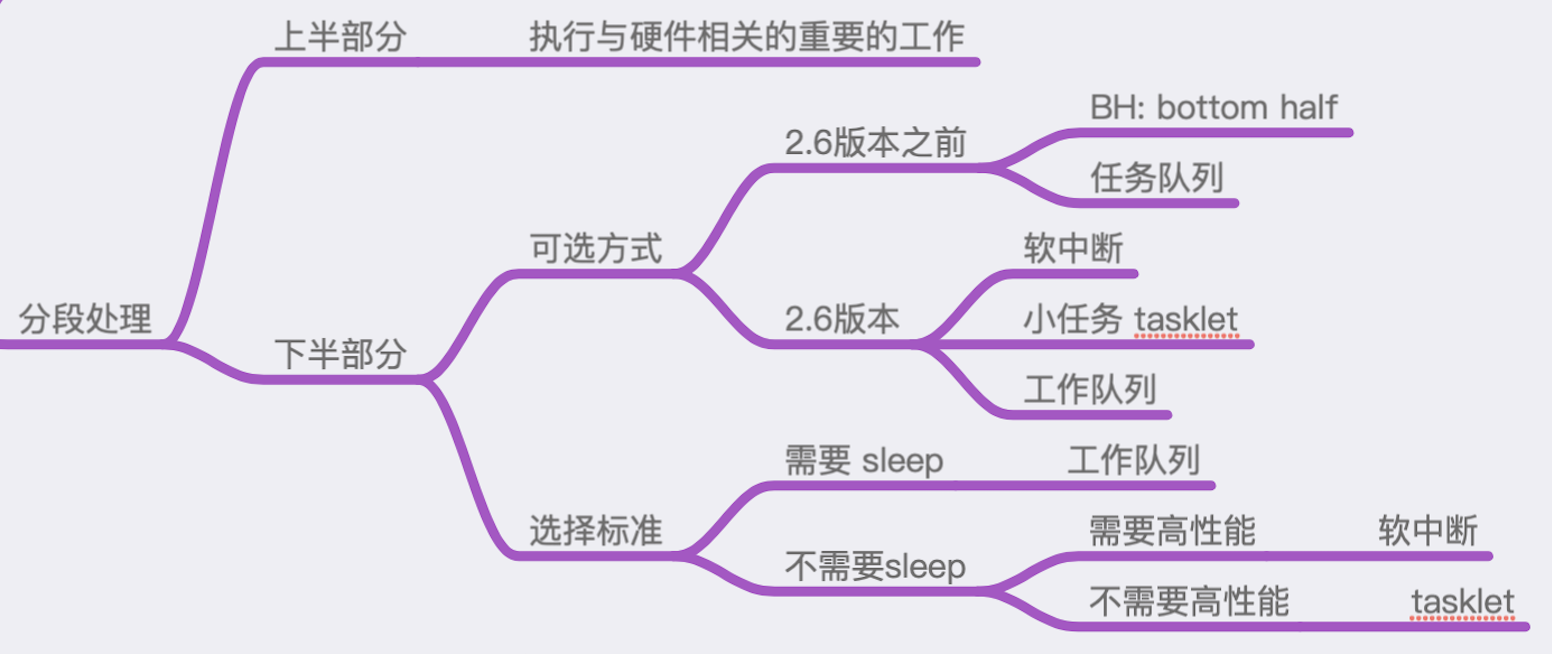

In the opening article of this series on interrupt handling, we posted the following figure:

The figure shows the bottom-half mechanisms of interrupt handling and how to choose among them according to the actual business scenario and its constraints.

As you can see, some of these mechanisms overlap, and some can replace one another.

Because of this, they are used in almost the same way. At least at the level of the API functions, they look very similar.

In this article, we will demonstrate how to use a work queue with real code.

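Characteristics of the work queue

The work queue is one of the most important ways to implement the bottom half of interrupt handling in Linux! Its handlers run in process context, inside kernel worker threads, so unlike softirqs and tasklets they are allowed to sleep.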

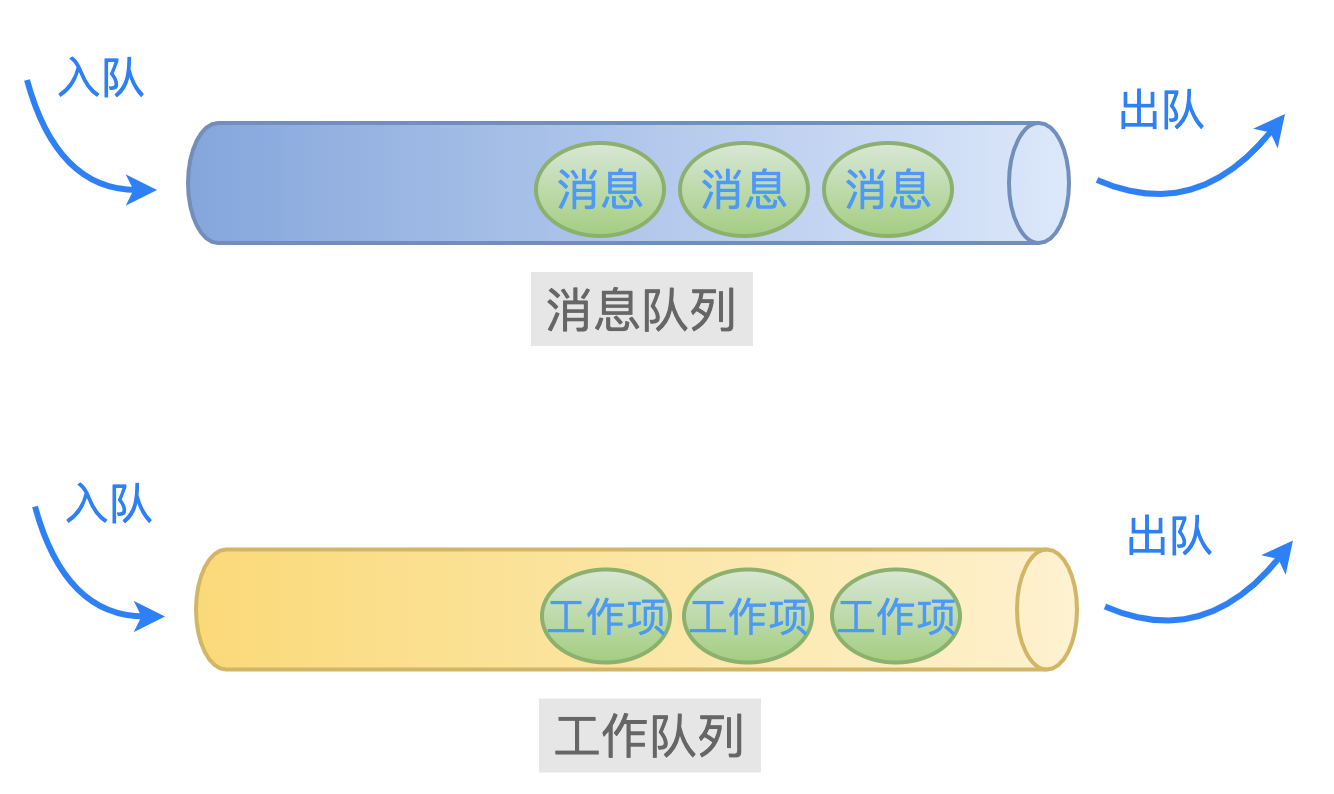

As the name suggests, a work queue is like the message queues commonly used in business-layer code: it holds work items waiting to be processed.

There are two important structures in the work queue mechanism: workqueue_struct and work_struct:

struct workqueue_struct {

struct list_head pwqs; /* WR: all pwqs of this wq */

struct list_head list; /* PR: list of all workqueues */

...

char name[WQ_NAME_LEN]; /* I: workqueue name */

...

/* hot fields used during command issue, aligned to cacheline */

unsigned int flags ____cacheline_aligned; /* WQ: WQ_* flags */

struct pool_workqueue __percpu *cpu_pwqs; /* I: per-cpu pwqs */

struct pool_workqueue __rcu *numa_pwq_tbl[]; /* PWR: unbound pwqs indexed by node */

};

struct work_struct {

atomic_long_t data;

struct list_head entry;

work_func_t func; // Points to the handler function

#ifdef CONFIG_LOCKDEP

struct lockdep_map lockdep_map;

#endif

};

In the kernel, the work items of a work queue are chained together in a linked list (through the entry member of work_struct), waiting for a kernel thread to take them out one by one and process them.

That thread can be one the driver creates itself with kthread_create, or one that the kernel has created in advance.
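The list-plus-handler arrangement can be sketched in user space. The code below is not kernel code: demo_work, demo_queue, demo_queue_work, demo_worker_run and demo_record are hypothetical names, and a single list drained by one function call stands in for the kernel's worker threads. It only illustrates the idea that a caller merely enqueues an item, while the worker later takes items off in order and calls each one's func.

```c
#include <stddef.h>

struct demo_work;
typedef void (*demo_work_func_t)(struct demo_work *work);

/* Hypothetical stand-in for struct work_struct: a list link plus a handler. */
struct demo_work {
    struct demo_work *next;  /* plays the role of work_struct.entry */
    demo_work_func_t func;   /* plays the role of work_struct.func */
};

/* Hypothetical stand-in for a work queue: a singly linked FIFO. */
struct demo_queue {
    struct demo_work *head;
    struct demo_work *tail;
};

/* Append a work item at the tail, so items are served first come, first served. */
static void demo_queue_work(struct demo_queue *q, struct demo_work *w)
{
    w->next = NULL;
    if (q->tail)
        q->tail->next = w;
    else
        q->head = w;
    q->tail = w;
}

/* What the worker thread does: take items off the head one by one and call
 * their handlers. Returns the number of items processed. */
static int demo_worker_run(struct demo_queue *q)
{
    int processed = 0;

    while (q->head) {
        struct demo_work *w = q->head;

        q->head = w->next;
        if (!q->head)
            q->tail = NULL;
        w->next = NULL;
        w->func(w);  /* the handler receives its own work item */
        processed++;
    }
    return processed;
}

/* Example handler: remembers the order in which work items were run. */
static struct demo_work *demo_ran[8];
static int demo_ran_len;

static void demo_record(struct demo_work *w)
{
    if (demo_ran_len < 8)
        demo_ran[demo_ran_len++] = w;
}
```

The kernel replaces this single list with per-CPU worker pools and a managed set of worker threads, but the division of labor is the same: the caller only queues, the worker calls func.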

Here comes a question of trade-offs.

If our handler is simple, there is no need to create a dedicated thread to run it.

There are two reasons:

- Creating a kernel thread is expensive. If the function is very simple and the thread is torn down as soon as it finishes, the cost is not worth it;

- If every driver writer creates kernel threads without restraint, the kernel ends up with a large number of unnecessary threads. Again, this is essentially a matter of resource consumption and execution efficiency.

To avoid this, the kernel creates some work queues and kernel threads for us in advance.

We only need to add our work items to one of these pre-created work queues, and the corresponding kernel thread will take them out and process them.

For example, the following work queues are created by the kernel by default (include/linux/workqueue.h):

/*

* System-wide workqueues which are always present.

*

* system_wq is the one used by schedule[_delayed]_work[_on](). Multi-CPU multi-threaded. There are users which expect relatively short queue flush time. Don't queue works which can run for too long.

*

* system_highpri_wq is similar to system_wq but for work items which require WQ_HIGHPRI.

*

* system_long_wq is similar to system_wq but may host long running works. Queue flushing might take relatively long.

*

* system_unbound_wq is unbound workqueue. Workers are not bound to any specific CPU, not concurrency managed, and all queued works are executed immediately as long as max_active limit is not reached and resources are available.

*

* system_freezable_wq is equivalent to system_wq except that it's freezable.

*

* *_power_efficient_wq are inclined towards saving power and converted into WQ_UNBOUND variants if 'wq_power_efficient' is enabled; otherwise, they are same as their non-power-efficient counterparts - e.g. system_power_efficient_wq is identical to system_wq if 'wq_power_efficient' is disabled. See WQ_POWER_EFFICIENT for more info.

*/

extern struct workqueue_struct *system_wq;

extern struct workqueue_struct *system_highpri_wq;

extern struct workqueue_struct *system_long_wq;

extern struct workqueue_struct *system_unbound_wq;

extern struct workqueue_struct *system_freezable_wq;

extern struct workqueue_struct *system_power_efficient_wq;

extern struct workqueue_struct *system_freezable_power_efficient_wq;

The code that creates these default work queues (kernel/workqueue.c):

int __init workqueue_init_early(void)

{

...

system_wq = alloc_workqueue("events", 0, 0);

system_highpri_wq = alloc_workqueue("events_highpri", WQ_HIGHPRI, 0);

system_long_wq = alloc_workqueue("events_long", 0, 0);

system_unbound_wq = alloc_workqueue("events_unbound", WQ_UNBOUND,

WQ_UNBOUND_MAX_ACTIVE);

system_freezable_wq = alloc_workqueue("events_freezable",

WQ_FREEZABLE, 0);

system_power_efficient_wq = alloc_workqueue("events_power_efficient",

WQ_POWER_EFFICIENT, 0);

system_freezable_power_efficient_wq = alloc_workqueue("events_freezable_power_efficient",

WQ_FREEZABLE | WQ_POWER_EFFICIENT,

0);

...

}

In addition, because system_wq is used so often, the kernel wraps it in a simple helper, schedule_work:

/**

* schedule_work - put work task in global workqueue

* @work: job to be done

*

* Returns %false if @work was already on the kernel-global workqueue and

* %true otherwise.

*

* This puts a job in the kernel-global workqueue if it was not already

* queued and leaves it in the same position on the kernel-global

* workqueue otherwise.

*/

static inline bool schedule_work(struct work_struct *work){

return queue_work(system_wq, work);

}
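The return value of schedule_work follows from a "pending" state kept per work item: an item that is already queued is not queued a second time. Below is a minimal user-space model of that rule, assuming (as in the kernel) that the pending state is cleared just before the handler runs, so the item can be queued again from then on. pending_work, model_schedule_work and model_worker_process are hypothetical names, not kernel APIs.

```c
#include <stdbool.h>

/* Hypothetical model of one work item's "pending" state. */
struct pending_work {
    bool pending;  /* true while the item sits in the queue */
    int runs;      /* how many times the handler has run */
};

/* Models schedule_work()/queue_work(): queue only if not already pending. */
static bool model_schedule_work(struct pending_work *w)
{
    if (w->pending)
        return false;  /* already queued: left in place, not queued twice */
    w->pending = true;
    return true;
}

/* Models a worker picking the item up: pending is cleared, then the handler runs. */
static void model_worker_process(struct pending_work *w)
{
    if (!w->pending)
        return;
    w->pending = false;
    w->runs++;
}
```

In the keyboard driver later in this article, this means that two ESC presses arriving before the worker gets to mywork result in a single call to its handler.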

Of course, everything has its advantages and disadvantages!

The work queues created by the kernel by default are shared by all drivers.

If every driver hands its pending work items to them, a queue can become overcrowded.

Items are served first come, first served, so a work item added later may have to wait a long time while the handlers of earlier items run, and its timeliness cannot be guaranteed.

So there is a question of balance for the system as a whole.

That covers the basics of the work queue. Now let's verify it in practice.
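The timeliness problem is simple arithmetic if we assume the worst case described above: a single worker serving the queue strictly first come, first served. The item at position k cannot start until every item ahead of it has finished, that is at d_0 + ... + d_{k-1}. The real system_wq is concurrency managed and may run items on several workers, so this is a pessimistic model rather than the kernel's exact behavior; fifo_start_time is a hypothetical helper.

```c
#include <stddef.h>

/* Earliest start time of item k in a single-worker FIFO, given the run time
 * of every item queued before it (all values in the same time unit). */
static unsigned long fifo_start_time(const unsigned long *durations, size_t k)
{
    unsigned long t = 0;

    for (size_t i = 0; i < k; i++)
        t += durations[i];
    return t;
}
```

For example, with run times {5, 20, 1} (say, in milliseconds), the third item starts at 25 even though it needs only 1 itself.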

Driver

The procedure for testing interrupt handling in a driver is the same as in the previous articles, so I won't repeat it here.

Below is the complete driver code, followed by the dmesg output.

Create the driver source file and the Makefile:

$ cd tmp/linux-4.15/drivers

$ mkdir my_driver_interrupt_wq

$ cd my_driver_interrupt_wq

$ touch my_driver_interrupt_wq.c

$ touch Makefile

Sample code overview

The test scenario: after the driver module is loaded, whenever it detects that the ESC key on the keyboard has been pressed, it adds a work item to the kernel's default work queue system_wq; we then check whether the handler bound to that work item gets called.
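The ESC check relies on PC keyboard scan codes read from port 0x60 (KBD_DATA_REG). In scan code set 1, pressing ESC produces the "make" code 0x01, and releasing it produces the "break" code 0x81, the same value with bit 7 set. The driver defines KBD_SCANCODE_MASK (0x7f) and KBD_STATUS_MASK (0x80) without using them; this user-space sketch shows how they can split a byte into key number and press/release bit. SCANCODE_ESC, is_key_release and is_esc_press are hypothetical names, and multi-byte (0xE0-prefixed) codes are deliberately ignored.

```c
#include <stdbool.h>

#define KBD_SCANCODE_MASK 0x7f  /* low 7 bits: key number */
#define KBD_STATUS_MASK   0x80  /* bit 7 set: key released (break code) */
#define SCANCODE_ESC      0x01  /* hypothetical name for the ESC key number */

/* True if the byte is a key release (break code). */
static bool is_key_release(unsigned char code)
{
    return (code & KBD_STATUS_MASK) != 0;
}

/* True only for an ESC press, the case the driver reacts to. */
static bool is_esc_press(unsigned char code)
{
    return !is_key_release(code) && (code & KBD_SCANCODE_MASK) == SCANCODE_ESC;
}
```

For single bytes, is_esc_press(code) is true exactly when code == 0x01, so the driver's direct comparison is equivalent; the masks just make the intent explicit.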

#include <linux/kernel.h>

#include <linux/module.h>

#include <linux/interrupt.h>

#include <linux/workqueue.h> // struct work_struct, INIT_WORK, schedule_work, cancel_work_sync

#include <linux/io.h> // inb

static int irq;

static char * devname;

static struct work_struct mywork;

// Receive the parameters passed in when the driver module is loaded

module_param(irq, int, 0644);

module_param(devname, charp, 0644);

// ID of the driver, checked in the interrupt handler to decide whether the interrupt is ours to process

#define MY_DEV_ID 1226

// Driver data structure

struct myirq

{

int devid;

};

struct myirq mydev ={ MY_DEV_ID };

#define KBD_DATA_REG 0x60

#define KBD_STATUS_REG 0x64

#define KBD_SCANCODE_MASK 0x7f

#define KBD_STATUS_MASK 0x80

// Handler bound to the work item; it runs later in a kernel worker thread (process context)

static void mywork_handler(struct work_struct *work)

{

printk("mywork_handler is called. \n");

// do some other things

}

// Interrupt handling function (top half): keep it short and defer the real work

static irqreturn_t myirq_handler(int irq, void * dev)

{

struct myirq *pdev = dev;

unsigned char key_code;

// Check the device id and process the interrupt only when it matches

if (pdev->devid != MY_DEV_ID)

{

return IRQ_NONE;

}

// Read keyboard scan code

key_code = inb(KBD_DATA_REG);

// 0x01 is the scan code of an ESC key press

if (key_code == 0x01)

{

printk("ESC key is pressed! \n");

// Queue the work item on system_wq (mywork was initialized in myirq_init)

schedule_work(&mywork);

}

return IRQ_HANDLED;

}

// Driver module initialization function

static int __init myirq_init(void)

{

int ret;

printk("myirq_init is called. \n");

// Initialize the work item once, before the interrupt handler can queue it

INIT_WORK(&mywork, mywork_handler);

// Register interrupt handler

ret = request_irq(irq, myirq_handler, IRQF_SHARED, devname, &mydev);

if (ret != 0)

{

printk("register irq[%d] handler failed. \n", irq);

return ret;

}

printk("register irq[%d] handler success. \n", irq);

return 0;

}

// Driver module exit function

static void __exit myirq_exit(void)

{

printk("myirq_exit is called. \n");

// Release the interrupt handler first, so no new work can be queued

free_irq(irq, &mydev);

// Then cancel a pending mywork, or wait for a running one, before the module is unloaded

cancel_work_sync(&mywork);

}

MODULE_LICENSE("GPL");

module_init(myirq_init);

module_exit(myirq_exit);

Makefile

ifneq ($(KERNELRELEASE),)

obj-m := my_driver_interrupt_wq.o

else

KERNELDIR ?= /lib/modules/$(shell uname -r)/build

PWD := $(shell pwd)

default:

	$(MAKE) -C $(KERNELDIR) M=$(PWD) modules

clean:

	$(MAKE) -C $(KERNELDIR) M=$(PWD) clean

endif

Compilation and testing

$ make

$ sudo insmod my_driver_interrupt_wq.ko irq=1 devname=mydev

Check whether the driver module is loaded successfully:

$ lsmod | grep my_driver_interrupt_wq

my_driver_interrupt_wq 16384 0

Then look at the output of dmesg:

$ dmesg

...

[ 188.247636] myirq_init is called.

[ 188.247642] register irq[1] handler success.

This shows that the driver initialization function myirq_init was called and the handler for interrupt 1 was registered successfully.

At this point, press ESC on the keyboard.

When the keyboard interrupt arrives, the kernel calls every handler registered on that interrupt line in turn, including our myirq_handler.

When this function detects the ESC key, it does not do the real work itself: it hands the work item mywork (a work_struct bound to mywork_handler) to system_wq, the work queue the kernel created in advance, as shown below:

if (key_code == 0x01)

{

printk("ESC key is pressed! \n");

schedule_work(&mywork);

}

mywork is initialized only once, with INIT_WORK in myirq_init and before request_irq, so it is already valid when the first interrupt arrives; calling INIT_WORK again inside the interrupt handler could reset the item while it is still queued. For the same reason, myirq_exit calls cancel_work_sync after free_irq, so the module is never unloaded while mywork is still pending or running.

Then, when a kernel worker thread takes the work item (mywork) out of the work queue (system_wq) and processes it, the function mywork_handler is called.

Now let's take a look at the output of dmesg:

[ 305.053155] ESC key is pressed!

[ 305.053177] mywork_handler is called.

You can see that the mywork_handler function was called correctly.

Perfect!
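In this demo, mywork_handler ignores its work argument. In real drivers the work_struct is usually embedded in the driver's own structure, and the handler recovers that structure with the kernel's container_of macro. The user-space sketch below defines a simplified container_of with offsetof (the kernel version adds type checking) to show the pointer arithmetic; work_struct_stub, my_kbd_dev and my_kbd_work_handler are hypothetical names.

```c
#include <stddef.h>

/* Simplified user-space version of the kernel's container_of (no type checking). */
#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* Stand-in for struct work_struct so the sketch compiles outside the kernel. */
struct work_struct_stub {
    void (*func)(struct work_struct_stub *work);
};

/* Hypothetical driver structure with the work item embedded in it. */
struct my_kbd_dev {
    int devid;
    int events_handled;
    struct work_struct_stub work;
};

/* Same shape as a work handler: it only receives a pointer to the embedded member. */
static void my_kbd_work_handler(struct work_struct_stub *work)
{
    struct my_kbd_dev *dev = container_of(work, struct my_kbd_dev, work);

    dev->events_handled++;
}
```

This is why the handler signature takes a struct work_struct pointer: it is enough to find all of the driver's per-device state without a global variable.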

------ End ------
