Inodes and directory entries

A file system is a mechanism for organizing and managing files on storage devices, and different ways of organizing them give rise to different file systems. The most important thing to remember is that in Linux everything is a file: not only ordinary files and directories, but also block devices, sockets, pipes, and so on are managed through a unified file system. The Linux file system allocates two data structures for each file, the index node and the directory entry, which record the file's metadata and its place in the directory structure, respectively.

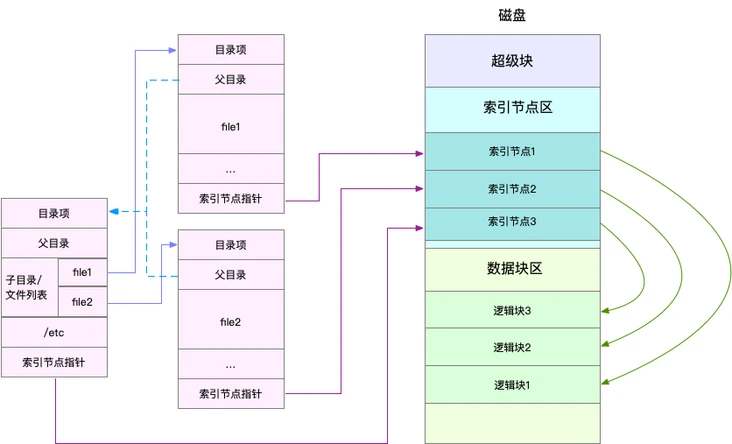

- The index node, or inode for short, records a file's metadata, such as the inode number, file size, access permissions, modification time, and the location of the data. Inodes correspond one-to-one with files. Like file contents, they are persisted to disk, so remember that inodes also take up disk space.

- The directory entry, or dentry for short, records a file's name, a pointer to its inode, and its relationships to other directory entries. Multiple linked directory entries make up the directory structure of the file system. Unlike inodes, however, directory entries are an in-memory data structure maintained by the kernel, which is why they are often called the directory entry cache.
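The metadata kept in an inode can be inspected from user space with `stat`. The following is a minimal sketch, assuming GNU coreutils `stat` (the `-c` format option); the scratch file and the variable names are introduced only for illustration.

```shell
#!/bin/sh
# Create a scratch file so the example does not depend on any existing path.
tmpfile=$(mktemp)
printf 'hello\n' > "$tmpfile"

# %i inode number, %s size in bytes, %A permissions,
# %h hard-link count, %y last modification time
stat -c 'inode=%i size=%s perms=%A links=%h mtime=%y' "$tmpfile"

# Capture two of the fields individually.
inode_no=$(stat -c %i "$tmpfile")
size_bytes=$(stat -c %s "$tmpfile")
echo "inode number: $inode_no, size: $size_bytes bytes"

rm -f "$tmpfile"
```

Note that the file name is not among these fields: the name belongs to the directory entry, not to the inode.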

In other words, the inode is the unique identifier of each file, while directory entries maintain the tree structure of the file system. The relationship between directory entries and inodes is many-to-one: put simply, a file can have multiple names. For example, hard links created for a file correspond to different directory entries, but those entries all point to the same file, so they share the same inode.

Inodes and directory entries record file metadata and the directory relationships between files. But how is the file data itself stored? Is it written directly to the disk? The smallest unit a disk reads or writes is the sector, and a sector is traditionally only 512 B. Reading and writing in such small units every time would be very inefficient. The file system therefore groups consecutive sectors into a logical block and uses the logical block as the smallest unit for managing data. A common logical block size is 4 KB, that is, 8 consecutive sectors.
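The many-to-one relationship between names and inodes described above can be checked with a hard link. This is a small sketch, again assuming GNU `stat`; the directory and file names are hypothetical.

```shell
#!/bin/sh
workdir=$(mktemp -d)
printf 'data\n' > "$workdir/original"

# ln without -s creates a hard link: a second directory entry for the same inode.
ln "$workdir/original" "$workdir/alias"

ino_original=$(stat -c %i "$workdir/original")
ino_alias=$(stat -c %i "$workdir/alias")
link_count=$(stat -c %h "$workdir/original")
echo "original=$ino_original alias=$ino_alias links=$link_count"

# Removing one name only drops a directory entry; the data stays reachable
# through the remaining name.
rm "$workdir/original"
content_after=$(cat "$workdir/alias")
echo "$content_after"

rm -rf "$workdir"
```

Both names report the same inode number, and the link count of 2 reflects the two directory entries that point to it.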

- The directory entry itself is an in-memory cache, while the inode is data stored on disk. As mentioned in the earlier discussion of Buffer and Cache, file contents are cached in the page cache to bridge the performance gap between slow disks and fast CPUs. For the same reason, inodes are also cached in memory to speed up file access.

- When a disk is formatted with a file system, it is divided into three storage areas: the superblock, the inode area, and the data block area. The superblock stores the state of the entire file system, the inode area stores the inodes, and the data block area stores file data.
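Some of this layout is visible on a mounted file system. The sketch below assumes GNU `stat` in file-system mode (`-f`) and uses `/` as the mount point purely for illustration. The 512-byte sector size is the figure used in the text, not a value read from the device, and some file systems may report 0 for the inode totals because they allocate inodes dynamically.

```shell
#!/bin/sh
mount_point=/

# %T file system type, %S fundamental block size, %b total data blocks,
# %c total inodes, %d free inodes
stat -f -c 'type=%T block_size=%S blocks=%b inodes=%c free_inodes=%d' "$mount_point"

block_size=$(stat -f -c %S "$mount_point")

# How many 512-byte sectors make up one logical block (assumed sector size).
sector_size=512
sectors_per_block=$((block_size / sector_size))
echo "sectors per block: $sectors_per_block"
```

With a 4 KB block size this arithmetic gives the 8 sectors per block mentioned earlier.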

The kernel uses the Slab mechanism to manage the caches of directory entries and inodes. /proc/meminfo only gives the overall size of the Slab; to look at individual Slab caches, check /proc/slabinfo. For example, the following command shows the caches for all directory entries and for the inodes of the various file systems:

    [root@VM-4-5-centos lighthouse]# cat /proc/slabinfo | grep -E '^#|dentry|inode'
    # name <active_objs> <num_objs> <objsize> <objperslab> <pagesperslab> : tunables <limit> <batchcount> <sharedfactor> : slabdata <active_slabs> <num_slabs> <sharedavail>
    isofs_inode_cache 46 46 704 23 4 : tunables 0 0 0 : slabdata 2 2 0
    ext4_inode_cache 4644 4644 1176 27 8 : tunables 0 0 0 : slabdata 172 172 0
    jbd2_inode 128 128 64 64 1 : tunables 0 0 0 : slabdata 2 2 0
    mqueue_inode_cache 16 16 1024 16 4 : tunables 0 0 0 : slabdata 1 1 0
    hugetlbfs_inode_cache 24 24 680 12 2 : tunables 0 0 0 : slabdata 2 2 0
    inotify_inode_mark 102 102 80 51 1 : tunables 0 0 0 : slabdata 2 2 0
    sock_inode_cache 253 253 704 23 4 : tunables 0 0 0 : slabdata 11 11 0
    proc_inode_cache 3168 3322 728 22 4 : tunables 0 0 0 : slabdata 151 151 0
    shmem_inode_cache 882 882 768 21 4 : tunables 0 0 0 : slabdata 42 42 0
    inode_cache 14688 14748 656 12 2 : tunables 0 0 0 : slabdata 1229 1229 0
    dentry 27531 27531 192 21 1 : tunables 0 0 0 : slabdata 1311 1311 0
    selinux_inode_security 16626 16626 40 102 1 : tunables 0 0 0 : slabdata 163 163 0

The dentry line represents the directory entry cache, the inode_cache line represents the VFS inode cache, and the rest are the inode caches of the various file systems. In practical performance analysis, slabtop is often used to find the cache types that occupy the most memory:

    [root@VM-4-5-centos lighthouse]# slabtop
    Active / Total Objects (% used) : 223087 / 225417 (99.0%)
    Active / Total Slabs (% used) : 7310 / 7310 (100.0%)
    Active / Total Caches (% used) : 138 / 207 (66.7%)
    Active / Total Size (% used) : 50536.41K / 51570.46K (98.0%)
    Minimum / Average / Maximum Object : 0.01K / 0.23K / 8.00K

    OBJS ACTIVE USE OBJ SIZE SLABS OBJ/SLAB CACHE SIZE NAME
    29295 28879 98% 0.19K 1395 21 5580K dentry
    27150 27150 100% 0.13K 905 30 3620K kernfs_node_cache
    26112 25802 98% 0.03K 204 128 816K kmalloc-32
    23010 23010 100% 0.10K 590 39 2360K buffer_head
    16626 16626 100% 0.04K 163 102 652K selinux_inode_security
    14856 14764 99% 0.64K 1238 12 9904K inode_cache
    9894 9894 100% 0.04K 97 102 388K ext4_extent_status
    6698 6698 100% 0.23K 394 17 1576K vm_area_struct
    5508 5508 100% 1.15K 204 27 6528K ext4_inode_cache
    5120 5120 100% 0.02K 20 256 80K kmalloc-16
    4672 4630 99% 0.06K 73 64 292K kmalloc-64
    4564 4564 100% 0.57K 326 14 2608K radix_tree_node
    4480 4480 100% 0.06K 70 64 280K anon_vma_chain
    4096 4096 100% 0.01K 8 512 32K kmalloc-8
    3570 3570 100% 0.05K 42 85 168K ftrace_event_field
    3168 2967 93% 0.71K 144 22 2304K proc_inode_cache
    3003 2961 98% 0.19K 143 21 572K kmalloc-192
    2898 2422 83% 0.09K 63 46 252K anon_vma
    2368 1944 82% 0.25K 148 16 592K filp
    2080 2075 99% 0.50K 130 16 1040K kmalloc-512
    2016 2016 100% 0.07K 36 56 144K Acpi-Operand
    1760 1760 100% 0.12K 55 32 220K kmalloc-128
    1596 1596 100% 0.09K 38 42 152K kmalloc-96
    1472 1472 100% 0.09K 32 46 128K trace_event_file
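The CACHE SIZE column can also be approximated by hand from /proc/slabinfo as the number of slabs times the pages per slab times the page size. The sketch below works on three rows copied from the /proc/slabinfo output shown earlier, so it needs no root access; the 4 KiB page size is an assumption, and the variable names are illustrative.

```shell
#!/bin/sh
# Sample rows copied from the /proc/slabinfo output shown earlier.
sample='ext4_inode_cache 4644 4644 1176 27 8 : tunables 0 0 0 : slabdata 172 172 0
inode_cache 14688 14748 656 12 2 : tunables 0 0 0 : slabdata 1229 1229 0
dentry 27531 27531 192 21 1 : tunables 0 0 0 : slabdata 1311 1311 0'

page_kb=4   # assumed page size in KiB

# Field 6 is <pagesperslab>, field 15 is <num_slabs>.
# Cache size in KiB = num_slabs * pagesperslab * page size; largest first.
report=$(printf '%s\n' "$sample" |
  awk -v page_kb="$page_kb" '{ printf "%s %dK\n", $1, $15 * $6 * page_kb }' |
  sort -k2,2nr)
echo "$report"
```

For these rows the result is 9832K for inode_cache, 5504K for ext4_inode_cache, and 5244K for dentry. The slabtop figures differ slightly because the two snapshots were taken at different moments, but the same formula reproduces them: 1395 slabs × 1 page × 4K gives the 5580K shown for dentry.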

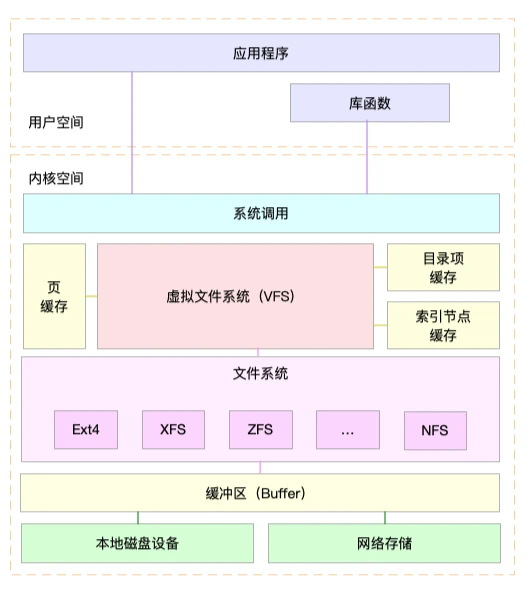

Virtual file system

Directory entries, inodes, logical blocks, and superblocks are the four basic elements of a Linux file system. To support many different file systems, however, the Linux kernel introduces an abstraction layer between user processes and file systems: the Virtual File System (VFS). VFS defines a set of data structures and standard interfaces that all file systems support. User processes and other kernel subsystems therefore only need to interact with the unified interface that VFS provides and need not care about the implementation details of the underlying file systems.
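From user space, the effect of this unified interface is that the same commands work regardless of which file system backs a path. The following is a rough illustration, assuming GNU `stat` and a typical Linux layout; the paths `/proc` and `/dev/shm` may not exist on every machine, so the script skips any that are missing.

```shell
#!/bin/sh
# The same tool reports on every path; only the backing file system differs.
for path in / /proc /dev/shm; do
  [ -e "$path" ] || continue          # skip paths missing on this machine
  fs_type=$(stat -f -c %T "$path")
  echo "$path is backed by: $fs_type"
done

# Reading a kernel-generated file and a regular file uses the same command.
regular_file=$(mktemp)
printf 'regular file\n' > "$regular_file"
regular_content=$(cat "$regular_file")
[ -r /proc/version ] && cat /proc/version
echo "$regular_content"
rm -f "$regular_file"
```

Here `cat` issues the same open and read calls in both cases; VFS routes them to the procfs implementation for /proc/version and to the on-disk or in-memory file system for the temporary file.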