Background

- Read the fucking source code! -- By Lu Xun

- A picture is worth a thousand words. -- By Gorky

Notes:

- Kernel version: 4.14

- ARM64 processor, Cortex-A53, dual core

- Tools used: Source Insight 3.5, Visio

1. General

This article analyzes watermarks.

Simply put, when pages are allocated through the zoned page frame allocator, the number of available free pages is compared with the zone's watermark to decide whether the allocation can proceed.

Watermarks are also used to decide when the kswapd kernel thread sleeps and wakes up to perform memory reclaim and compaction.

Recall the struct zone structure mentioned earlier:

struct zone {

/* Read-mostly fields */

/* zone watermarks, access with *_wmark_pages(zone) macros */

unsigned long watermark[NR_WMARK];

unsigned long nr_reserved_highatomic;

....

};

enum zone_watermarks {

WMARK_MIN,

WMARK_LOW,

WMARK_HIGH,

NR_WMARK

};

#define min_wmark_pages(z) (z->watermark[WMARK_MIN])

#define low_wmark_pages(z) (z->watermark[WMARK_LOW])

#define high_wmark_pages(z) (z->watermark[WMARK_HIGH])

As you can see, there are three watermarks, and they should only be accessed through the dedicated macros.

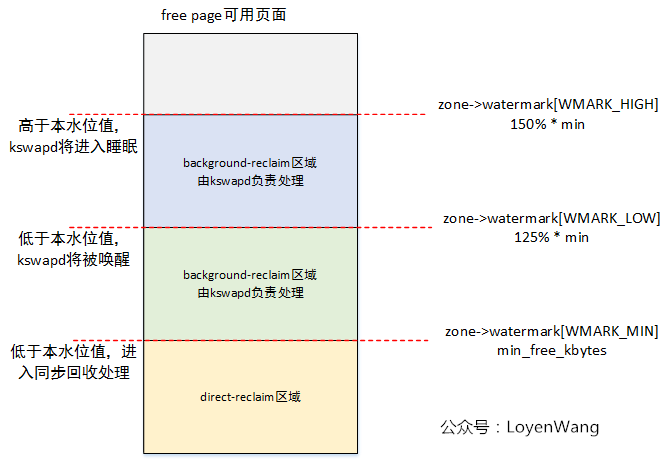

- WMARK_MIN: the lowest point, where memory is insufficient. If the calculated number of available pages falls below this value, the zone fails the watermark check and an ordinary allocation from it cannot proceed;

- WMARK_LOW: by default 125% of WMARK_MIN. When free pages drop to this level, kswapd is woken up. The ratio can be changed through watermark_scale_factor;

- WMARK_HIGH: by default 150% of WMARK_MIN. When free pages reach this level, kswapd goes back to sleep. The ratio can be changed through watermark_scale_factor;
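To make the default ratios concrete, here is a minimal user-space sketch of how the low and high watermarks relate to the min watermark. It is modeled on my reading of __setup_per_zone_wmarks() in the 4.14 source, not copied from it: struct wmarks and calc_wmarks() are hypothetical names, and treating watermark_scale_factor as a fraction in units of 1/10000 (default 10) is an assumption taken from that reading.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical user-space stand-in for zone->watermark[]. */
struct wmarks {
    uint64_t min;
    uint64_t low;
    uint64_t high;
};

/*
 * zone_min_pages: this zone's WMARK_MIN, in pages
 * managed_pages:  pages managed by the zone
 * scale_factor:   plays the role of watermark_scale_factor
 *                 (assumed unit: 1/10000 of managed_pages)
 */
static struct wmarks calc_wmarks(uint64_t zone_min_pages,
                                 uint64_t managed_pages,
                                 uint64_t scale_factor)
{
    struct wmarks w;
    uint64_t quarter, scaled, gap;

    /* Guard against overflow in the multiplication below. */
    assert(scale_factor == 0 || managed_pages <= UINT64_MAX / scale_factor);

    quarter = zone_min_pages / 4;
    scaled = managed_pages * scale_factor / 10000;

    /* The distance between watermarks is the larger of the two values. */
    gap = quarter > scaled ? quarter : scaled;

    w.min = zone_min_pages;
    w.low = w.min + gap;       /* 125% of min when gap == min / 4 */
    w.high = w.min + 2 * gap;  /* 150% of min when gap == min / 4 */
    return w;
}
```

With zone_min_pages = 1000, managed_pages = 100000 and scale_factor = 10, the gap is 250 pages, so low and high come out as 1250 and 1500, that is 125% and 150% of min. A larger scale factor only widens the gap once managed_pages * scale_factor / 10000 exceeds min / 4.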

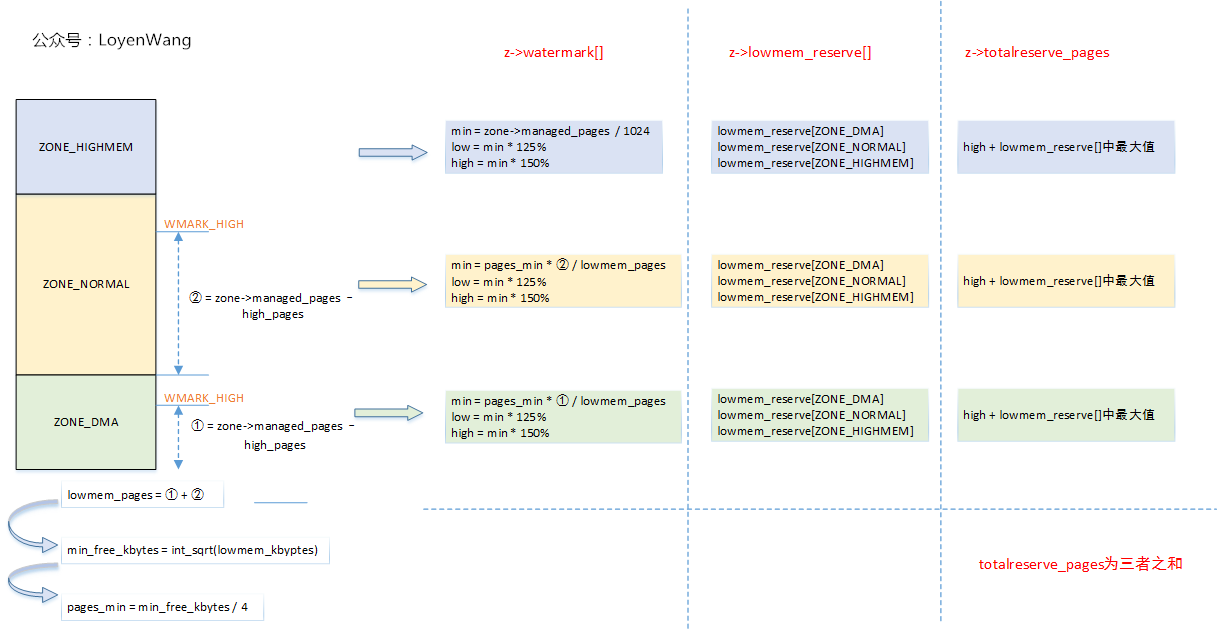

First, a picture:

The details are analyzed further below.

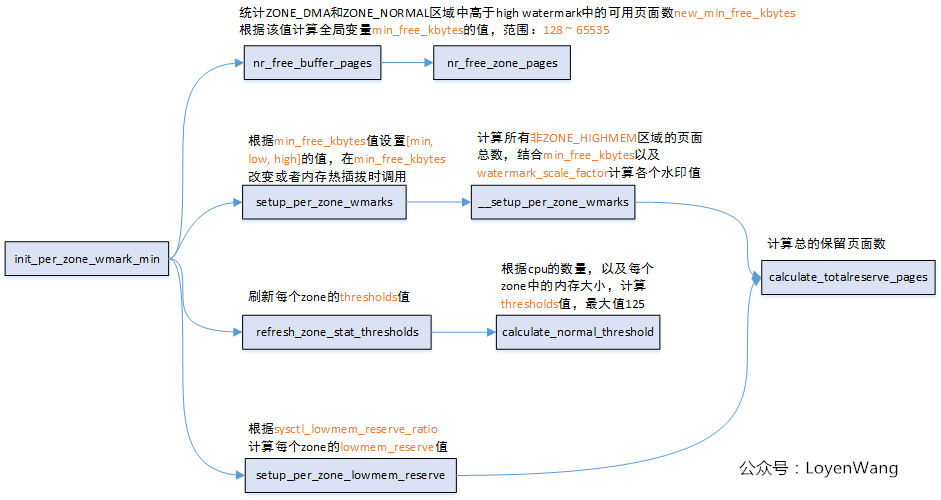

2. watermark initialization

Let's look at the functions called during initialization:

- nr_free_buffer_pages: counts the available pages in ZONE_DMA and ZONE_NORMAL, computed per zone as managed_pages - high_pages;

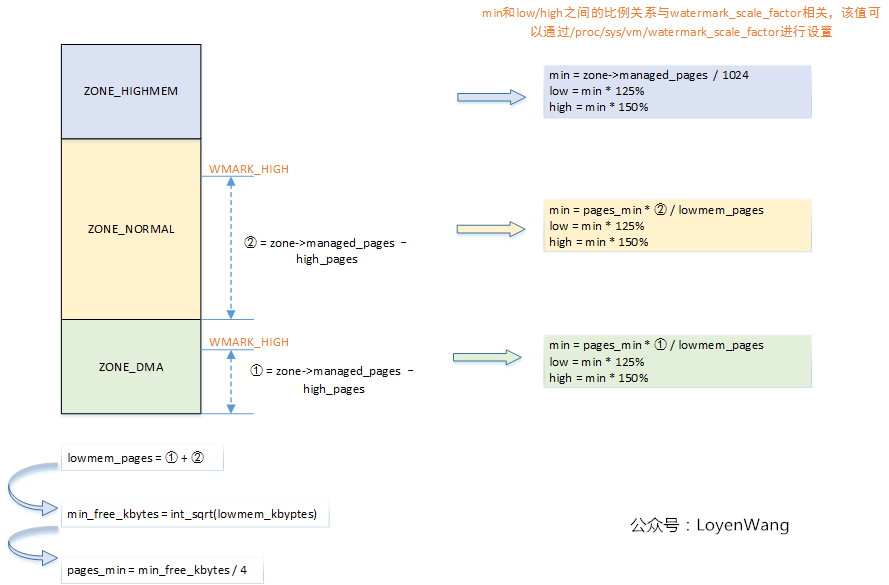

- setup_per_zone_wmarks: calculates the watermark values from min_free_kbytes. A picture makes this clear and easy to understand:

- refresh_zone_stat_thresholds:

First, let's review struct pglist_data and struct zone:

typedef struct pglist_data {

...

struct per_cpu_nodestat __percpu *per_cpu_nodestats;

...

} pg_data_t;

struct per_cpu_nodestat {

s8 stat_threshold;

s8 vm_node_stat_diff[NR_VM_NODE_STAT_ITEMS];

};

struct zone {

...

struct per_cpu_pageset __percpu *pageset;

...

};

struct per_cpu_pageset {

struct per_cpu_pages pcp;

#ifdef CONFIG_NUMA

s8 expire;

u16 vm_numa_stat_diff[NR_VM_NUMA_STAT_ITEMS];

#endif

#ifdef CONFIG_SMP

s8 stat_threshold;

s8 vm_stat_diff[NR_VM_ZONE_STAT_ITEMS];

#endif

};

From the data structures we can see that both nodes and zones have a per-CPU structure for storing statistics. refresh_zone_stat_thresholds works on these two structures and updates their stat_threshold fields; the specific calculation is not covered here. The function also calculates percpu_drift_mark, which is needed for the watermark check. The threshold is used to decide when to trigger a certain behavior, such as memory compaction.

-

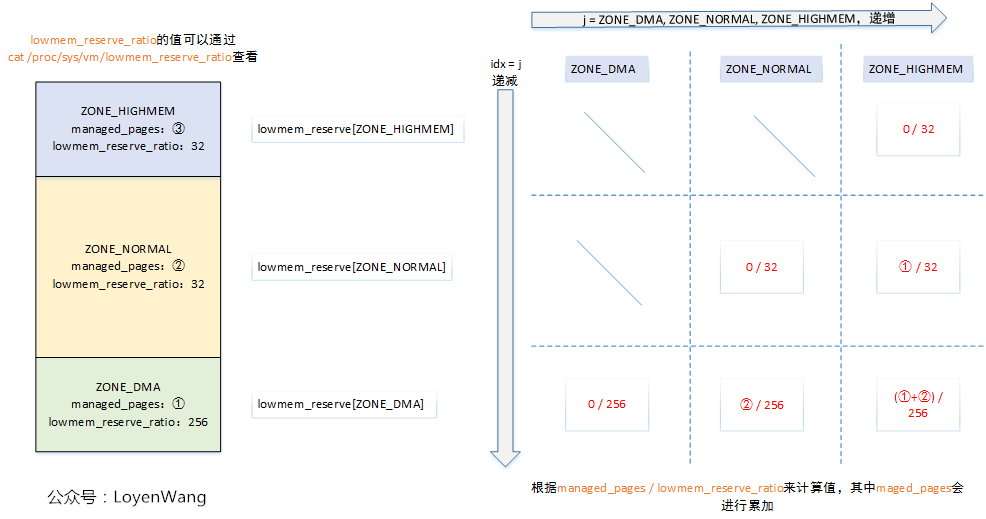

setup_per_zone_lowmem_reserve:

Set the lowmem for each zone_ Reserve size, and the implementation logic in the code is shown in the following figure.

-

calculate_totalreserve_pages:

Calculate the reserved pages of each zone and the total reserved pages of the system, in which high watermark will be regarded as the reserved page. As shown in the figure:

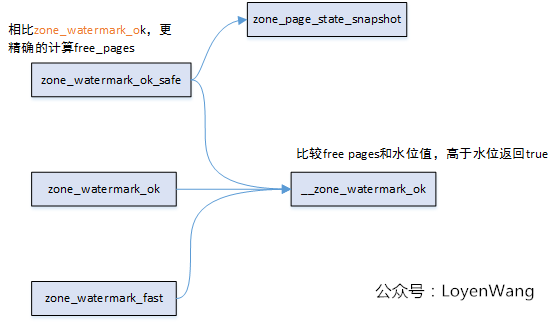
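The last two steps can also be sketched in plain C. The following is a simplified user-space model based on the logic I recall from mm/page_alloc.c in 4.14, so treat it as an illustration rather than the kernel's code: struct zone_model, NR_ZONES, setup_lowmem_reserve() and calc_totalreserve() are hypothetical names, a single node is assumed, and the ratio array stands in for sysctl_lowmem_reserve_ratio.

```c
#include <assert.h>

#define NR_ZONES 3  /* hypothetical: three zones, lowest index = lowest zone */

/* Hypothetical, trimmed-down stand-in for struct zone. */
struct zone_model {
    unsigned long managed_pages;
    unsigned long high_wmark;               /* WMARK_HIGH, in pages */
    unsigned long lowmem_reserve[NR_ZONES]; /* must start zeroed */
};

/*
 * For every zone j, each lower zone idx reserves a share of the pages
 * found in the zones above it, so that allocations that could have
 * used zone j do not exhaust the lower zone.
 */
static void setup_lowmem_reserve(struct zone_model z[NR_ZONES],
                                 const unsigned long ratio[NR_ZONES])
{
    for (int j = 0; j < NR_ZONES; j++) {
        unsigned long pages = z[j].managed_pages;

        z[j].lowmem_reserve[j] = 0;
        for (int idx = j - 1; idx >= 0; idx--) {
            /* The kernel clamps ratios below 1; this sketch just asserts. */
            assert(ratio[idx] >= 1);
            z[idx].lowmem_reserve[j] = pages / ratio[idx];
            pages += z[idx].managed_pages;
        }
    }
}

/*
 * Per zone: largest lowmem_reserve entry plus the high watermark,
 * capped at managed_pages. The system total is the sum over zones.
 */
static unsigned long calc_totalreserve(const struct zone_model z[NR_ZONES])
{
    unsigned long total = 0;

    for (int i = 0; i < NR_ZONES; i++) {
        unsigned long max = 0;

        for (int j = i; j < NR_ZONES; j++)
            if (z[i].lowmem_reserve[j] > max)
                max = z[i].lowmem_reserve[j];

        max += z[i].high_wmark;
        if (max > z[i].managed_pages)
            max = z[i].managed_pages;
        total += max;
    }
    return total;
}
```

For example, with managed_pages of 1000, 4000 and 2000, high watermarks of 30, 120 and 60, and ratios {10, 4, 4}, the lowest zone ends up with lowmem_reserve[2] = 600 and the total reserve is 630 + 620 + 60 = 1310 pages.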

3. watermark judgment

As usual, let's first look at the function call graph:

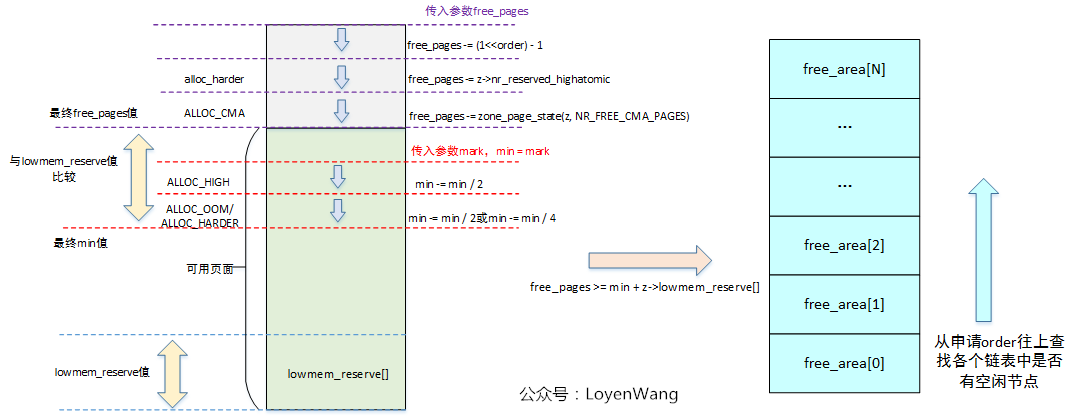

- __zone_watermark_ok:

This is the key function of the watermark check. As the call relationships in the figure show, every path ends up in it. Let's illustrate the overall logic with a picture:

In the figure above, the left side checks whether there are enough free pages, and the right side queries free_area[] directly to determine whether the allocation can finally be satisfied.

- zone_watermark_ok: calls __zone_watermark_ok directly, with no other logic.

- zone_watermark_fast:

As the name suggests, this is a fast check. The fast path applies when order = 0: if the condition is met it returns true directly; otherwise it calls __zone_watermark_ok.

Here is the code, which makes it clear:

static inline bool zone_watermark_fast(struct zone *z, unsigned int order,

unsigned long mark, int classzone_idx, unsigned int alloc_flags)

{

long free_pages = zone_page_state(z, NR_FREE_PAGES);

long cma_pages = 0;

#ifdef CONFIG_CMA

/* If allocation can't use CMA areas don't use free CMA pages */

if (!(alloc_flags & ALLOC_CMA))

cma_pages = zone_page_state(z, NR_FREE_CMA_PAGES);

#endif

/*

* Fast check for order-0 only. If this fails then the reserves

* need to be calculated. There is a corner case where the check

* passes but only the high-order atomic reserve are free. If

* the caller is !atomic then it'll uselessly search the free

* list. That corner case is then slower but it is harmless.

*/

if (!order && (free_pages - cma_pages) > mark + z->lowmem_reserve[classzone_idx])

return true;

return __zone_watermark_ok(z, order, mark, classzone_idx, alloc_flags,

free_pages);

}

- zone_watermark_ok_safe:

zone_watermark_ok_safe mainly adds a call to zone_page_state_snapshot to calculate free_pages. This calculation is more accurate than reading zone_page_state(z, NR_FREE_PAGES) directly.

bool zone_watermark_ok_safe(struct zone *z, unsigned int order,

unsigned long mark, int classzone_idx)

{

long free_pages = zone_page_state(z, NR_FREE_PAGES);

if (z->percpu_drift_mark && free_pages < z->percpu_drift_mark)

free_pages = zone_page_state_snapshot(z, NR_FREE_PAGES);

return __zone_watermark_ok(z, order, mark, classzone_idx, 0,

free_pages);

}

percpu_drift_mark is set in the refresh_zone_stat_thresholds function, which was discussed above.
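The two-stage logic of __zone_watermark_ok described earlier (enough free pages first, then a look at free_area[]) can be sketched as a small user-space model. This is a simplification under stated assumptions, not the kernel code: struct wm_zone, the MODEL_* flags and watermark_ok() are hypothetical names, CMA and the OOM case are left out, and the per-migratetype free lists are reduced to a per-order count of usable free blocks.

```c
#include <stdbool.h>

#define MODEL_MAX_ORDER 11  /* assumption: mirrors the common MAX_ORDER of 11 */

/* Hypothetical flag bits; the kernel's ALLOC_* values differ. */
#define MODEL_ALLOC_HIGH   0x1u
#define MODEL_ALLOC_HARDER 0x2u

/* Hypothetical, trimmed-down stand-in for struct zone. */
struct wm_zone {
    unsigned long lowmem_reserve;           /* already picked for classzone_idx */
    unsigned long nr_reserved_highatomic;
    unsigned long nr_free[MODEL_MAX_ORDER]; /* usable free blocks per order */
};

static bool watermark_ok(const struct wm_zone *z, unsigned int order,
                         unsigned long mark, unsigned int flags,
                         long free_pages)
{
    long min = (long)mark;

    /* Account for the request itself; the result may go negative. */
    free_pages -= (1L << order) - 1;

    /* Privileged callers are allowed to dip below the watermark. */
    if (flags & MODEL_ALLOC_HIGH)
        min -= min / 2;
    if (flags & MODEL_ALLOC_HARDER)
        min -= min / 4;
    else
        free_pages -= (long)z->nr_reserved_highatomic;

    /* Stage 1: are there enough free pages at all? */
    if (free_pages <= min + (long)z->lowmem_reserve)
        return false;

    /* An order-0 request needs nothing more. */
    if (order == 0)
        return true;

    /* Stage 2: is there a free block of the requested order or larger? */
    for (unsigned int o = order; o < MODEL_MAX_ORDER; o++)
        if (z->nr_free[o] > 0)
            return true;

    return false;
}
```

In this model a zone with 1000 free pages, all of them order-0 blocks, passes an order-0 check against mark = 200 and lowmem_reserve = 100, but fails an order-2 check because stage 2 finds no block of order 2 or higher.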

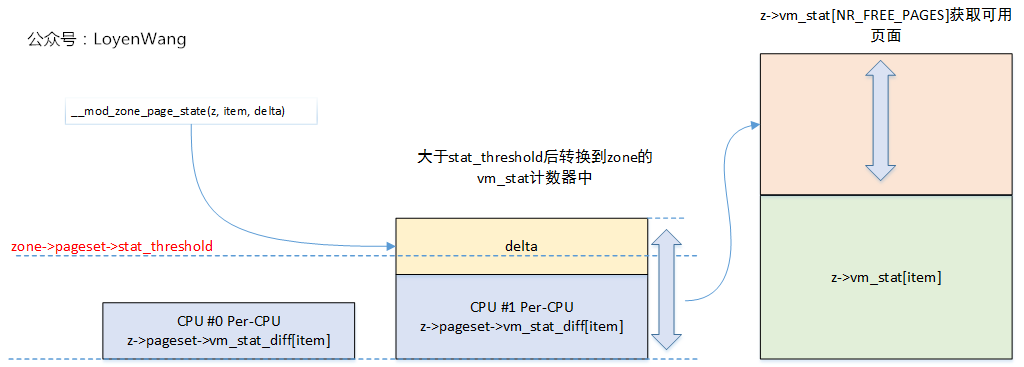

Each zone maintains three fields for page statistics, as follows:

struct zone {

...

struct per_cpu_pageset __percpu *pageset;

...

/*

* When free pages are below this point, additional steps are taken

* when reading the number of free pages to avoid per-cpu counter

* drift allowing watermarks to be breached

*/

unsigned long percpu_drift_mark;

...

/* Zone statistics */

atomic_long_t vm_stat[NR_VM_ZONE_STAT_ITEMS];

};

In memory management, the kernel reads the number of free pages and compares it with the watermark value. To get an accurate free-page count, both vm_stat[] and the __percpu *pageset counters have to be read. Doing that on every read would hurt efficiency, so percpu_drift_mark is set: only when the free-page count falls below this value is the more accurate calculation triggered, which preserves performance.

When a __percpu *pageset counter is updated and its value exceeds stat_threshold, it is folded into vm_stat[], as shown below:

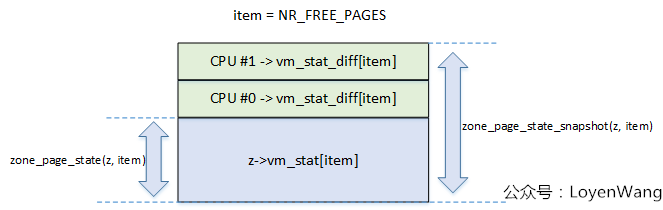

zone_watermark_ok_safe calls zone_page_state_snapshot. How it differs from zone_page_state is shown in the following figure:

That's the end of the watermark analysis. Done!
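In code terms, the threshold fold and the difference between the two readers can be sketched as follows. This is a user-space model of the idea, assuming the behavior I recall from mm/vmstat.c and include/linux/vmstat.h in 4.14: struct counter_model, counter_mod(), counter_read() and counter_read_snapshot() are hypothetical names standing in for the per-CPU update path, zone_page_state and zone_page_state_snapshot, and no locking or real per-CPU storage is modeled.

```c
#include <assert.h>

#define MODEL_NR_CPUS 2  /* dual core, like the platform used in this article */

/* Hypothetical model of one zone counter such as NR_FREE_PAGES. */
struct counter_model {
    long global;                     /* role of zone->vm_stat[item] */
    signed char diff[MODEL_NR_CPUS]; /* role of pageset->vm_stat_diff[item] */
    signed char threshold;           /* role of pageset->stat_threshold */
};

/*
 * Update path: accumulate into the per-CPU delta and fold it into the
 * global counter only once it crosses the threshold.
 */
static void counter_mod(struct counter_model *c, int cpu, long delta)
{
    long x;

    assert(cpu >= 0 && cpu < MODEL_NR_CPUS);
    x = c->diff[cpu] + delta;
    if (x > c->threshold || x < -c->threshold) {
        c->global += x;
        x = 0;
    }
    c->diff[cpu] = (signed char)x;
}

/* Cheap read: global counter only, so it can be off by the unfolded deltas. */
static unsigned long counter_read(const struct counter_model *c)
{
    long x = c->global;

    return x < 0 ? 0 : (unsigned long)x;
}

/* Accurate read: global counter plus every CPU's pending delta. */
static unsigned long counter_read_snapshot(const struct counter_model *c)
{
    long x = c->global;

    for (int cpu = 0; cpu < MODEL_NR_CPUS; cpu++)
        x += c->diff[cpu];

    return x < 0 ? 0 : (unsigned long)x;
}
```

Starting from global = 1000 with threshold = 10, subtracting 8 on CPU 0 and 9 on CPU 1 leaves the cheap read at 1000 while the snapshot read reports 983. That gap is the drift that percpu_drift_mark protects against when free pages get close to the watermarks.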