Environment information:

dx-hadoop57.dx:

- CPU: 40 cores

- Operating system: CentOS 6.7

- Deployed services: DataNode, NodeManager, and Impala.

1, Preface:

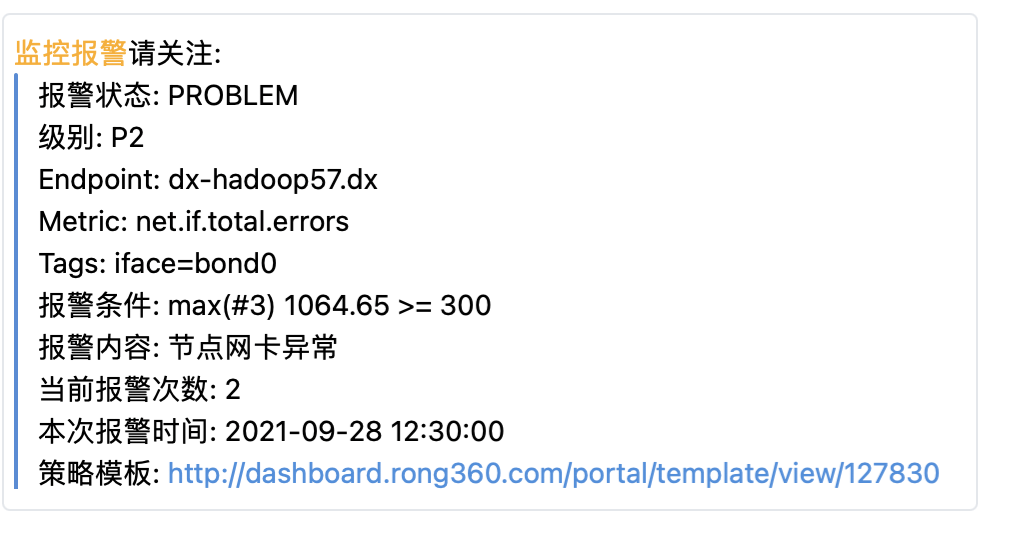

A network card on one node had previously reported errors. As a result, Presto tasks failed, HDFS reads slowed down, and YARN task execution slowed down.

We therefore began monitoring the net.if.total.errors metric on all nodes. After a while, similar alarms arrived from other nodes.

Assuming it was the same problem as before, I asked the operations (OP) team to switch the network card again. After the switch, no alarms arrived for a while, but a new alarm was received 4-5 hours later.

We then stopped the NodeManager and Impala services running on the node and kept only the DataNode service.

Even so, the network card errors did not ease, and we kept receiving many alarms. Monitoring also showed that the average data write time on this node was longer than on other nodes.

2, Research:

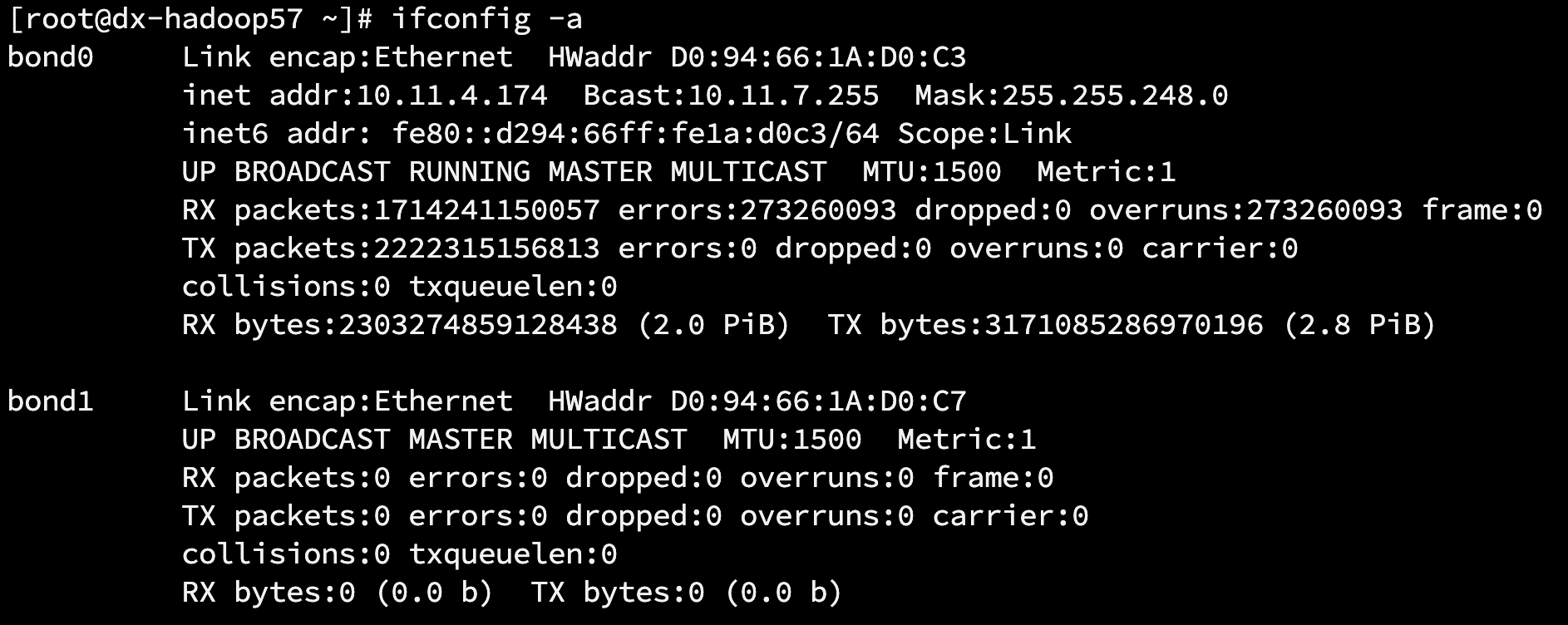

View network card information

When using the ifconfig command to view the network card information, the receive (RX) and transmit (TX) statistics include dropped and overruns fields. Both look like packet loss, but what is the difference between them?

ifconfig -a

View results:

The specific explanations are as follows:

- dropped indicates that the packet had already entered the network card's receive FIFO queue and the system had begun handling the interrupt to copy it from the FIFO queue into system memory, but the packet was then lost for a system reason (such as insufficient memory). In other words, the packet was dropped by the Linux system.

- overruns indicates that the packet was discarded before it could enter the network card's receive FIFO queue, because the FIFO was full at that moment. Why was the FIFO full? The system was too busy to respond to the network card's interrupts in time, so packets already in the network card were not copied to system memory quickly enough. Once the FIFO is full, subsequent packets cannot enter. In other words, the packet was dropped by the network card hardware.

Therefore, when overruns is non-zero, the CPU load and the CPU interrupt distribution should be checked.
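
To watch the two counters without reading the whole ifconfig output, they can be pulled directly from /proc/net/dev, where the RX "drop" and "fifo" columns correspond to the dropped and overruns values that ifconfig prints. The following is only a minimal sketch: the function name rx_loss_counters and its optional file argument are made up for illustration and are not part of any tool used in this post.

```shell
#!/bin/bash
# Hypothetical helper: print RX dropped and RX overruns (fifo) for one interface.
# The second argument defaults to /proc/net/dev; it exists only so the
# function can be tried against a saved copy of that file.
rx_loss_counters() {
    local iface="$1"
    local file="${2:-/proc/net/dev}"
    awk -v ifc="$iface" '
        {
            line = $0
            sub(/^[ \t]+/, "", line)          # strip leading padding
            n = index(line, ":")
            if (n == 0) next                  # header lines have no colon
            name = substr(line, 1, n - 1)
            if (name != ifc) next
            # RX fields: bytes packets errs drop fifo frame compressed multicast
            split(substr(line, n + 1), f, " ")
            printf "%s rx_dropped=%s rx_overruns=%s\n", name, f[4], f[5]
        }' "$file"
}
```

Calling rx_loss_counters eth0 twice, a few seconds apart, shows whether the overruns counter is still growing, since the values are cumulative.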

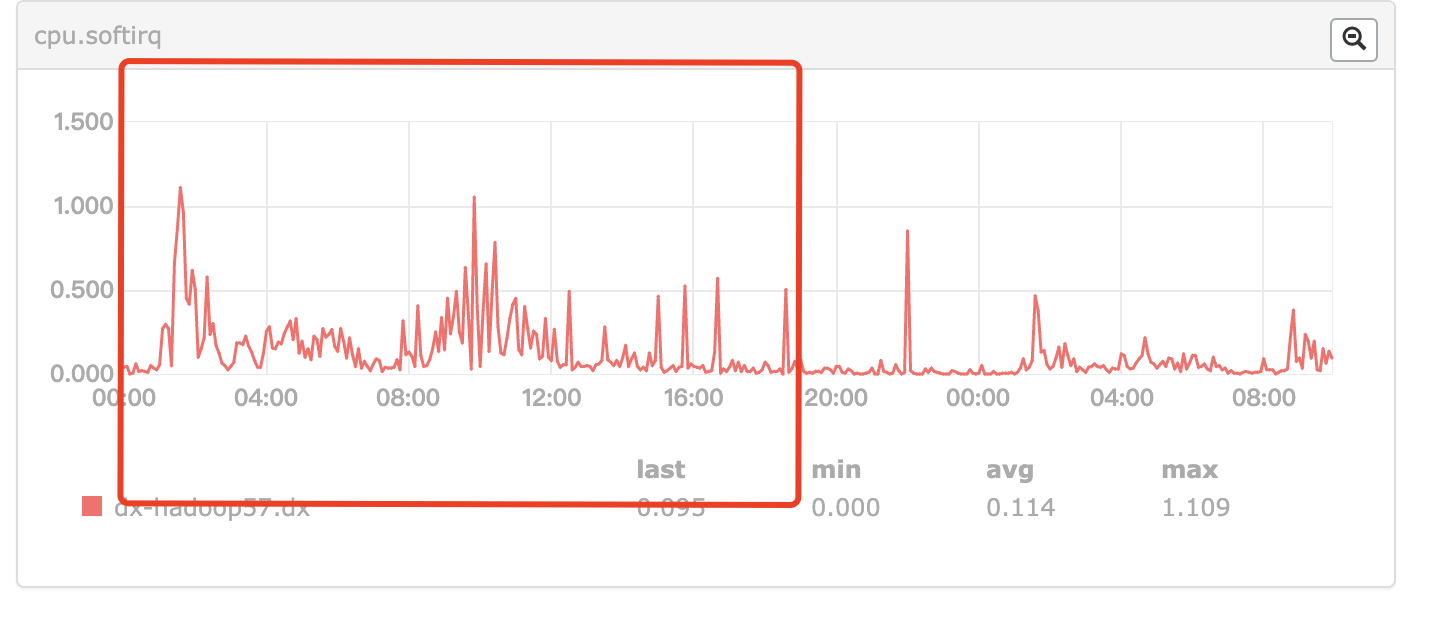

View cpu soft interrupt

The highlighted area in the figure below shows the comparison before and after optimization.

Checking CPU idle time showed that individual CPUs were fully loaded, and soft interrupt usage was also higher than on other nodes. So we decided to optimize the binding of the network card's soft interrupts.
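
The same check can be made from the command line. mpstat -P ALL 1 prints a %soft column per CPU, and /proc/softirqs holds the raw NET_RX counters per CPU. The sketch below reads the latter; the function name net_rx_per_cpu and its optional file argument are assumptions for illustration only.

```shell
#!/bin/bash
# Hypothetical helper: print the cumulative NET_RX soft interrupt count per CPU.
# The argument defaults to /proc/softirqs; a saved copy can be passed instead.
net_rx_per_cpu() {
    local file="${1:-/proc/softirqs}"
    awk '
        # First line is the header: CPU0 CPU1 ...
        NR == 1 { for (i = 1; i <= NF; i++) cpu[i] = $i; next }
        # The NET_RX row has one counter per CPU after the label
        $1 == "NET_RX:" { for (i = 2; i <= NF; i++) print cpu[i - 1], $i }
    ' "$file"
}
```

If one CPU's counter is orders of magnitude larger than the rest, receive processing is concentrated on that core. The counters are totals since boot, so comparing two samples gives a better picture of the current rate.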

3, Multi-queue network card

As the name suggests, a multi-queue network card has gone from one queue per card to multiple queues per card. Multi-queue was originally introduced to solve network IO QoS (quality of service) problems. Later, as network IO bandwidth kept increasing, a single CPU core could no longer keep up with the network card. The most obvious symptom is that a single CPU core cannot handle the large number of packet requests (soft interrupts) from the network card, which results in heavy packet loss.

In fact, when the network card receives a packet, it generates an interrupt to notify the kernel that a new packet has arrived. The kernel then calls the interrupt handler, which copies the packet from the network card's cache into memory. Because the network card's cache is limited in size, subsequent packets are discarded on cache overflow if the data is not copied out in time, so this work must be completed immediately.

The remaining work of processing the packets is handed over to soft interrupts. Heavily loaded network cards are major consumers of soft interrupts and easily become bottlenecks. With a multi-queue network card driver, however, each queue can be bound to a different CPU core through its interrupt, so that several cores share the network card's load. This is how multi-queue network cards are applied.

Network card soft interrupt imbalance:

- Soft interrupts are concentrated on one CPU core (check the %soft column in mpstat; usually cpu0).

- The network card has fewer hardware interrupt queues than there are CPU cores, so queues cannot be bound to cores one-to-one. As a result, only a few CPU cores show %soft activity and CPU usage is unbalanced.

Therefore, if the network traffic is large, soft interrupts need to be evenly distributed to each core to avoid a single CPU core becoming a bottleneck.
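
One way to see how the queues are currently spread is to look at /proc/interrupts, which lists every interrupt vector with a per-CPU counter. The sketch below reports, for each vector that mentions the interface, which CPU has handled the most interrupts. The function name irq_busiest_cpu and the optional file argument are hypothetical, and the queue naming in the last column depends on the driver.

```shell
#!/bin/bash
# Hypothetical helper: for each IRQ line that mentions the interface,
# report the CPU with the highest interrupt count.
# The second argument defaults to /proc/interrupts.
irq_busiest_cpu() {
    local iface="$1"
    local file="${2:-/proc/interrupts}"
    awk -v ifc="$iface" '
        # Header line: remember the CPU column names
        NR == 1 { ncpu = NF; for (i = 1; i <= NF; i++) cpu[i] = $i; next }
        index($0, ifc) > 0 {
            max = -1; best = ""
            for (i = 2; i <= ncpu + 1; i++)
                if ($i + 0 > max) { max = $i + 0; best = cpu[i - 1] }
            irq = $1
            sub(/:$/, "", irq)
            printf "IRQ %s (%s): busiest %s with %d interrupts\n", irq, $NF, best, max
        }' "$file"
}
```

If every line reports the same CPU, the queues are all being serviced by one core. The number of lines printed is also the number of vectors available for binding, which can be compared with the core count.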

4, SMP IRQ affinity

Starting with the Linux 2.4 kernel, a technique for binding specific interrupts to specified CPUs was introduced. It is called SMP IRQ affinity.

Principle:

When a hardware device (such as a disk controller or Ethernet card) needs the CPU's attention, it raises an interrupt. To prevent multiple devices from sending the same interrupt, Linux uses an interrupt request (IRQ) system in which each device in the computer is assigned its own interrupt number, which ensures that its interrupt requests are unique.

Starting with the 2.4 kernel, Linux has been able to assign specific interrupts to specified processors (or processor groups). This is called SMP IRQ affinity. It controls how the system responds to various hardware events and lets you limit or redistribute the server's workload so that the server works more efficiently. Take network card interrupts as an example. When SMP IRQ affinity is not set, all network card interrupts are typically handled by CPU0. CPU0 then becomes heavily loaded and cannot process network packets quickly and efficiently, which creates a bottleneck.

With SMP IRQ affinity, the network card's interrupts can be spread across multiple CPUs, which distributes the CPU load and improves packet processing speed.
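
The binding itself is done by writing a hexadecimal CPU bitmask to /proc/irq/<IRQ>/smp_affinity, with bit N standing for CPU N and, on machines with more than 32 CPUs, comma-separated 32-bit groups. The sketch below shows how such a mask can be computed for a single core, following the same approach as the set_affinity function in the script in section 5. The function name cpu_to_mask is made up for this example.

```shell
#!/bin/bash
# Hypothetical helper: build the smp_affinity mask that selects one CPU core.
# Cores 0-31 fit in one hex word; each further block of 32 cores appends
# one ",00000000" group on the right.
cpu_to_mask() {
    local core mask groups
    core=$1
    mask=$(printf '%X' $(( 1 << (core % 32) )))
    groups=$(( core / 32 ))
    while [ "$groups" -gt 0 ]; do
        mask="$mask,00000000"
        groups=$(( groups - 1 ))
    done
    echo "$mask"
}
```

For example, cpu_to_mask 3 gives 8, and cpu_to_mask 33 gives 2,00000000. As root, the result would then be written to the smp_affinity file of the chosen IRQ; the IRQ numbers differ per machine and can be read from /proc/interrupts.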

Prerequisites:

- A system with multiple CPUs

- A Linux kernel version 2.4 or later

5, Solution:

Script content:

#!/bin/bash

#

# Copyright (c) 2014, Intel Corporation

#

# Redistribution and use in source and binary forms, with or without

# modification, are permitted provided that the following conditions are met:

#

# * Redistributions of source code must retain the above copyright notice,

# this list of conditions and the following disclaimer.

# * Redistributions in binary form must reproduce the above copyright

# notice, this list of conditions and the following disclaimer in the

# documentation and/or other materials provided with the distribution.

# * Neither the name of Intel Corporation nor the names of its contributors

# may be used to endorse or promote products derived from this software

# without specific prior written permission.

#

# THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

# AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

# IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

# DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE

# FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

# DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

# SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

# CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

# OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

# OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

#

# Affinitize interrupts to cores

#

# typical usage is (as root):

# set_irq_affinity -x local eth1 <eth2> <eth3>

#

# to get help:

# set_irq_affinity

usage()

{

echo

echo "Usage: $0 [-x|-X] {all|local|remote|one|custom} [ethX] <[ethY]>"

echo " options: -x Configure XPS as well as smp_affinity"

echo " options: -X Disable XPS but set smp_affinity"

echo " options: {remote|one} can be followed by a specific node number"

echo " Ex: $0 local eth0"

echo " Ex: $0 remote 1 eth0"

echo " Ex: $0 custom eth0 eth1"

echo " Ex: $0 0-7,16-23 eth0"

echo

exit 1

}

usageX()

{

echo "options -x and -X cannot both be specified, pick one"

exit 1

}

if [ "$1" == "-x" ]; then

XPS_ENA=1

shift

fi

if [ "$1" == "-X" ]; then

if [ -n "$XPS_ENA" ]; then

usageX

fi

XPS_DIS=2

shift

fi

if [ "$1" == -x ]; then

usageX

fi

if [ -n "$XPS_ENA" ] && [ -n "$XPS_DIS" ]; then

usageX

fi

if [ -z "$XPS_ENA" ]; then

XPS_ENA=$XPS_DIS

fi

num='^[0-9]+$'

# Vars

AFF=$1

shift

case "$AFF" in

remote) [[ $1 =~ $num ]] && rnode=$1 && shift ;;

one) [[ $1 =~ $num ]] && cnt=$1 && shift ;;

all) ;;

local) ;;

custom) ;;

[0-9]*) ;;

-h|--help) usage ;;

"") usage ;;

*) IFACES=$AFF && AFF=all ;; # Backwards compat mode

esac

# append the interfaces listed to the string with spaces

while [ "$#" -ne "0" ] ; do

IFACES+=" $1"

shift

done

# for now the user must specify interfaces

if [ -z "$IFACES" ]; then

usage

exit 1

fi

# support functions

set_affinity()

{

VEC=$core

if [ $VEC -ge 32 ]

then

MASK_FILL=""

MASK_ZERO="00000000"

let "IDX = $VEC / 32"

for ((i=1; i<=$IDX;i++))

do

MASK_FILL="${MASK_FILL},${MASK_ZERO}"

done

let "VEC -= 32 * $IDX"

MASK_TMP=$((1<<$VEC))

MASK=$(printf "%X%s" $MASK_TMP $MASK_FILL)

else

MASK_TMP=$((1<<$VEC))

MASK=$(printf "%X" $MASK_TMP)

fi

printf "%s" $MASK > /proc/irq/$IRQ/smp_affinity

printf "%s %d %s -> /proc/irq/$IRQ/smp_affinity\n" $IFACE $core $MASK

case "$XPS_ENA" in

1)

printf "%s %d %s -> /sys/class/net/%s/queues/tx-%d/xps_cpus\n" $IFACE $core $MASK $IFACE $((n-1))

printf "%s" $MASK > /sys/class/net/$IFACE/queues/tx-$((n-1))/xps_cpus

;;

2)

MASK=0

printf "%s %d %s -> /sys/class/net/%s/queues/tx-%d/xps_cpus\n" $IFACE $core $MASK $IFACE $((n-1))

printf "%s" $MASK > /sys/class/net/$IFACE/queues/tx-$((n-1))/xps_cpus

;;

*)

esac

}

# Allow usage of , or -

#

parse_range () {

RANGE=${@//,/ }

RANGE=${RANGE//-/..}

LIST=""

for r in $RANGE; do

# eval lets us use vars in {#..#} range

[[ $r =~ '..' ]] && r="$(eval echo {$r})"

LIST+=" $r"

done

echo $LIST

}

# Affinitize interrupts

#

setaff()

{

CORES=$(parse_range $CORES)

ncores=$(echo $CORES | wc -w)

n=1

# this script only supports interrupt vectors in pairs,

# modification would be required to support a single Tx or Rx queue

# per interrupt vector

queues="${IFACE}-.*TxRx"

irqs=$(grep "$queues" /proc/interrupts | cut -f1 -d:)

[ -z "$irqs" ] && irqs=$(grep $IFACE /proc/interrupts | cut -f1 -d:)

[ -z "$irqs" ] && irqs=$(for i in `ls -Ux /sys/class/net/$IFACE/device/msi_irqs` ;\

do grep "$i:.*TxRx" /proc/interrupts | grep -v fdir | cut -f 1 -d : ;\

done)

[ -z "$irqs" ] && echo "Error: Could not find interrupts for $IFACE"

echo "IFACE CORE MASK -> FILE"

echo "======================="

for IRQ in $irqs; do

[ "$n" -gt "$ncores" ] && n=1

j=1

# much faster than calling cut for each

for i in $CORES; do

[ $((j++)) -ge $n ] && break

done

core=$i

set_affinity

((n++))

done

}

# now the actual useful bits of code

# these next 2 lines would allow script to auto-determine interfaces

#[ -z "$IFACES" ] && IFACES=$(ls /sys/class/net)

#[ -z "$IFACES" ] && echo "Error: No interfaces up" && exit 1

# echo IFACES is $IFACES

CORES=$(</sys/devices/system/cpu/online)

[ "$CORES" ] || CORES=$(grep ^proc /proc/cpuinfo | cut -f2 -d:)

# Core list for each node from sysfs

node_dir=/sys/devices/system/node

for i in $(ls -d $node_dir/node*); do

i=${i/*node/}

corelist[$i]=$(<$node_dir/node${i}/cpulist)

done

for IFACE in $IFACES; do

# echo $IFACE being modified

dev_dir=/sys/class/net/$IFACE/device

[ -e $dev_dir/numa_node ] && node=$(<$dev_dir/numa_node)

[ "$node" ] && [ "$node" -gt 0 ] || node=0

case "$AFF" in

local)

CORES=${corelist[$node]}

;;

remote)

[ "$rnode" ] || { [ $node -eq 0 ] && rnode=1 || rnode=0; }

CORES=${corelist[$rnode]}

;;

one)

[ -n "$cnt" ] || cnt=0

CORES=$cnt

;;

all)

CORES=$CORES

;;

custom)

echo -n "Input cores for $IFACE (ex. 0-7,15-23): "

read CORES

;;

[0-9]*)

CORES=$AFF

;;

*)

usage

exit 1

;;

esac

# call the worker function

setaff

done

# check for irqbalance running

IRQBALANCE_ON=`ps ax | grep -v grep | grep -q irqbalance; echo $?`

if [ "$IRQBALANCE_ON" == "0" ] ; then

echo " WARNING: irqbalance is running and will"

echo " likely override this script's affinitization."

echo " Please stop the irqbalance service and/or execute"

echo " 'killall irqbalance'"

fi

Execute the script (as root):

bash set_irq.sh local eth0
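
After the script has run, the result can be checked by reading the smp_affinity file of each IRQ that belongs to the interface. The following is a minimal sketch under the assumption that the interface's vectors can be found by name in /proc/interrupts, as the script itself does. The function name show_iface_affinity and the optional root-directory argument are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: print the current affinity mask of every IRQ whose
# /proc/interrupts entry mentions the interface.
# The second argument defaults to /proc and only exists for trying the
# function against a copied directory tree.
show_iface_affinity() {
    local iface="$1"
    local root="${2:-/proc}"
    local irq
    for irq in $(grep -F -- "$iface" "$root/interrupts" | cut -f1 -d:); do
        printf 'IRQ %s -> %s\n' "$irq" "$(cat "$root/irq/$irq/smp_affinity")"
    done
}
```

Running show_iface_affinity eth0 should list a different mask for each queue once the binding has taken effect. If the masks revert after a while, the irqbalance service mentioned in the script's warning is a likely cause.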