1, Random method

Full random: use the system's random number generator to pick an index between 0 and the size of the back-end server list, and send the request to the server at that index. By the law of large numbers, as the number of client calls grows, the calls are spread more and more evenly across the back-end servers, approaching the result of round robin.

Code implementation:

```java
import java.util.List;
import java.util.Random;

// Back-end server list used by the list-based examples
class Servers {
    public List<String> list = List.of(
            "192.168.1.1",
            "192.168.1.2",
            "192.168.1.3");
}

public class FullRandom {
    static Servers servers = new Servers();
    static Random random = new Random();

    public static String go() {
        var number = random.nextInt(servers.list.size()); // random index in [0, list.size())
        return servers.list.get(number); // return the server at that index
    }

    public static void main(String[] args) {
        for (var i = 0; i < 10; i++) {
            System.out.println(go());
        }
    }
}
```

With enough requests, the distribution becomes more and more even. Full random is the simplest load balancing algorithm, but its drawback is obvious. Servers differ in hardware and processing capacity. We want powerful servers to handle more requests and weaker servers to handle fewer, which leads to weighted random.
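To see the "more and more even" claim in practice, the sketch below counts how often full random picks each server, first for a small number of requests and then for a large one. `RandomDistribution.count` is a hypothetical helper that is not part of the original code. It uses a fixed seed only so that runs are repeatable. With 30 requests the counts are usually visibly uneven. With 30,000 requests, each server should receive close to a third.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Hypothetical helper: counts how often full random picks each server.
class RandomDistribution {
    static Map<String, Integer> count(List<String> servers, int requests, long seed) {
        Random random = new Random(seed); // fixed seed so the run is reproducible
        Map<String, Integer> hits = new LinkedHashMap<>();
        for (String s : servers) {
            hits.put(s, 0);
        }
        for (int i = 0; i < requests; i++) {
            String picked = servers.get(random.nextInt(servers.size()));
            hits.merge(picked, 1, Integer::sum); // add one hit for the picked server
        }
        return hits;
    }

    public static void main(String[] args) {
        List<String> servers = List.of("192.168.1.1", "192.168.1.2", "192.168.1.3");
        for (int n : new int[] {30, 30_000}) {
            System.out.println(n + " requests: " + count(servers, n, 42L));
        }
    }
}
```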

Weighted random: the random algorithm is still used, but each server is assigned a weight. A server with a larger weight is more likely to be chosen, and a server with a smaller weight is less likely to be chosen.

Method 1: add each server's IP address to a list as many times as its weight, then pick a random element from that list.

Code implementation:

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Map;
import java.util.Random;

class Servers {
    // Key: server IP; value: the weight of that server
    public HashMap<String, Integer> map = new HashMap<>(Map.of(
            "192.168.1.1", 2,
            "192.168.1.2", 7,
            "192.168.1.3", 1));
}

public class WeightRandom {
    static Servers servers = new Servers();
    static Random random = new Random();

    public static String go() {
        var ipList = new ArrayList<String>();
        for (var item : servers.map.entrySet()) {
            for (var i = 0; i < item.getValue(); i++) {
                ipList.add(item.getKey()); // add each IP as many times as its weight
            }
        }
        // The total weight equals ipList.size(), so every slot is equally likely
        int allWeight = servers.map.values().stream().mapToInt(a -> a).sum();
        var number = random.nextInt(allWeight);
        return ipList.get(number); // pick a random slot from the expanded list
    }

    public static void main(String[] args) {
        for (var i = 0; i < 15; i++) {
            System.out.println(go());
        }
    }
}
```

Advantage of this method: it is simple.

Disadvantage: when there are many servers or the weights are large, the expanded list takes up a lot of memory.

Method 2:

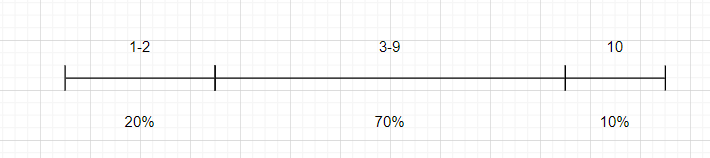

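Suppose server A has weight 2, server B has weight 7 and server C has weight 1. As shown in the figure, lay the weights end to end on a line of length 2 + 7 + 1 = 10. A covers [0, 2), B covers [2, 9) and C covers [9, 10).

Draw a random number in [0, 10) and walk through the segments. Subtract each server's weight until the remaining number is smaller than the current server's weight. For example, if the random number is 4, it falls in B's segment, so server B is selected.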

Code implementation:

```java
import java.util.Random;

public class WeightRandom {
    static Servers servers = new Servers(); // the weighted Servers class above
    static Random random = new Random();

    public static String go() {
        int allWeight = servers.map.values().stream().mapToInt(a -> a).sum();
        var number = random.nextInt(allWeight); // in [0, allWeight)
        for (var item : servers.map.entrySet()) {
            // Strictly greater: number lies inside this server's segment
            if (item.getValue() > number) {
                return item.getKey();
            }
            number -= item.getValue(); // move past this server's segment
        }
        return ""; // unreachable when all weights are non-negative
    }

    public static void main(String[] args) {
        for (var i = 0; i < 15; i++) {
            System.out.println(go());
        }
    }
}
```

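Advantage: memory friendly. No expanded list is built, and only the weights themselves are stored. The cost is a linear scan over the servers for each request.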
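Method 2 still scans the servers linearly on every request. One common refinement is sketched below, assuming the weights do not change at runtime. It precomputes the cumulative weights once and binary-searches them, so each pick costs O(log n). The class name `PrefixSumWeightRandom` and its `pick`/`next` methods are illustrative and not part of the original code.

```java
import java.util.List;
import java.util.Random;

// Hypothetical variant of method 2: cumulative weights + binary search.
class PrefixSumWeightRandom {
    private final List<String> servers;
    private final int[] cumulative; // cumulative[i] = w0 + w1 + ... + wi
    private final Random random = new Random();

    PrefixSumWeightRandom(List<String> servers, List<Integer> weights) {
        if (servers.isEmpty() || servers.size() != weights.size()) {
            throw new IllegalArgumentException("need one weight per server");
        }
        this.servers = List.copyOf(servers);
        this.cumulative = new int[weights.size()];
        int sum = 0;
        for (int i = 0; i < weights.size(); i++) {
            if (weights.get(i) < 0) throw new IllegalArgumentException("negative weight");
            sum += weights.get(i);
            cumulative[i] = sum;
        }
        if (sum <= 0) throw new IllegalArgumentException("total weight must be positive");
    }

    // Maps r in [0, total) to the first server whose cumulative weight exceeds r.
    // Zero-weight servers are never returned, because an earlier index always matches first.
    String pick(int r) {
        int lo = 0, hi = cumulative.length - 1;
        while (lo < hi) {
            int mid = (lo + hi) >>> 1;
            if (cumulative[mid] > r) {
                hi = mid;
            } else {
                lo = mid + 1;
            }
        }
        return servers.get(lo);
    }

    String next() {
        return pick(random.nextInt(cumulative[cumulative.length - 1]));
    }

    public static void main(String[] args) {
        var lb = new PrefixSumWeightRandom(
                List.of("192.168.1.1", "192.168.1.2", "192.168.1.3"), List.of(2, 7, 1));
        for (int i = 0; i < 15; i++) {
            System.out.println(lb.next());
        }
    }
}
```

With weights 2, 7 and 1 the cumulative array is [2, 9, 10], so `pick(4)` returns the second server, matching the worked example above.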

2, Round robin

Full round robin: requests are handed to the back-end servers in turn. Every server is treated equally, regardless of its actual number of connections or current system load.

Code implementation:

```java
public class FullRound {
    static Servers servers = new Servers(); // the list-based Servers class
    static int index;

    public static String go() {
        if (index == servers.list.size()) {
            index = 0; // reached the end of the list; wrap around to 0
        }
        return servers.list.get(index++); // hand out servers in order
    }

    public static void main(String[] args) {
        for (var i = 0; i < 15; i++) {
            System.out.println(go());
        }
    }
}
```
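`FullRound` keeps its position in a plain static `int`, which is not safe when several threads call `go()` at once. Two threads can read the same `index`, and racing increments can push it past the end of the list, after which `servers.list.get` throws. Below is a minimal thread-safe sketch, assuming concurrent callers. It keeps the counter in an `AtomicInteger` and maps it onto the list with `Math.floorMod`. `AtomicRoundRobin` is a hypothetical name.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical thread-safe variant of FullRound.
class AtomicRoundRobin {
    private final List<String> servers;
    private final AtomicInteger counter = new AtomicInteger();

    AtomicRoundRobin(List<String> servers) {
        this.servers = List.copyOf(servers);
    }

    String next() {
        // getAndIncrement is atomic, so each caller gets its own ticket;
        // floorMod keeps the index non-negative even after the int overflows
        int i = Math.floorMod(counter.getAndIncrement(), servers.size());
        return servers.get(i);
    }

    public static void main(String[] args) {
        var rr = new AtomicRoundRobin(List.of("192.168.1.1", "192.168.1.2", "192.168.1.3"));
        for (int i = 0; i < 6; i++) {
            System.out.println(rr.next());
        }
    }
}
```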

Weighted round robin:

Back-end servers may differ in machine configuration and current load, so they can withstand different amounts of traffic. Machines with a higher configuration and lower load get higher weights so that they handle more requests. Machines with a lower configuration and higher load get lower weights to reduce their load. Weighted round robin handles this well: it distributes requests to the back end in order and in proportion to the weights.

Code implementation:

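```java
public class WeightRound {
    static Servers servers = new Servers(); // the weighted Servers class
    static int index;

    public static String go() {
        int allWeight = servers.map.values().stream().mapToInt(a -> a).sum();
        // Position within the current cycle of allWeight requests;
        // floorMod keeps it non-negative even if index overflows
        int number = Math.floorMod(index++, allWeight);
        for (var item : servers.map.entrySet()) {
            if (item.getValue() > number) {
                return item.getKey(); // number falls in this server's segment
            }
            number -= item.getValue();
        }
        return ""; // unreachable when all weights are non-negative
    }

    public static void main(String[] args) {
        for (var i = 0; i < 15; i++) {
            System.out.println(go());
        }
    }
}
```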
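The `WeightRound` above hands out requests in bursts. Within each cycle of 10 requests, the server with weight 7 receives seven requests in a row. The order of the bursts depends on the `HashMap` iteration order. A well-known alternative is the smooth weighted round robin scheme popularized by Nginx, sketched below. On every pick, each server's current weight grows by its configured weight. The server with the largest current weight wins, and the winner's current weight is then reduced by the total. The class name and constructor shape are assumptions made for illustration.

```java
import java.util.Arrays;
import java.util.List;

// Sketch of smooth weighted round robin (the interleaving scheme popularized by Nginx).
class SmoothWeightRound {
    private final List<String> servers;
    private final int[] weights;
    private final int[] current; // running "current weight" per server
    private final int total;

    SmoothWeightRound(List<String> servers, List<Integer> weights) {
        if (servers.isEmpty() || servers.size() != weights.size()) {
            throw new IllegalArgumentException("need one weight per server");
        }
        this.servers = List.copyOf(servers);
        this.weights = weights.stream().mapToInt(Integer::intValue).toArray();
        this.current = new int[this.weights.length];
        this.total = Arrays.stream(this.weights).sum();
        if (total <= 0) throw new IllegalArgumentException("total weight must be positive");
    }

    synchronized String next() {
        int best = 0;
        for (int i = 0; i < weights.length; i++) {
            current[i] += weights[i];          // every server gains its own weight
            if (current[i] > current[best]) {  // strict '>' lets the earliest server win ties
                best = i;
            }
        }
        current[best] -= total;                // the winner pays back the total weight
        return servers.get(best);
    }

    public static void main(String[] args) {
        var swrr = new SmoothWeightRound(
                List.of("192.168.1.1", "192.168.1.2", "192.168.1.3"), List.of(2, 7, 1));
        for (int i = 0; i < 10; i++) {
            System.out.println(swrr.next());
        }
    }
}
```

With servers A, B and C weighted 2, 7 and 1, the first ten picks are B, A, B, B, B, C, B, A, B, B. Each cycle keeps the same 2/7/1 split, but A and C are interleaved with B instead of waiting behind a run of seven.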

3, Source address hash

Source address hashing computes a hash value from the client's IP address and takes it modulo the size of the server list. The result is the index of the server that this client accesses. As long as the back-end server list stays unchanged, clients with the same IP address are always mapped to the same back-end server. This solves the problem of session sharing under load balancing.
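Here is a minimal sketch of the plain modulo scheme just described, assuming the client IP is available as a string. `String.hashCode()` can be negative, so `Math.floorMod` keeps the index in range. `SourceHash.go` is a hypothetical helper, separate from the ring-based code later in this section.

```java
import java.util.List;

// Hypothetical plain source-address hash: hash(client IP) mod server count.
class SourceHash {
    static String go(List<String> servers, String clientIp) {
        // hashCode() may be negative; floorMod keeps the index in [0, size)
        int index = Math.floorMod(clientIp.hashCode(), servers.size());
        return servers.get(index);
    }

    public static void main(String[] args) {
        List<String> servers = List.of("192.168.1.1", "192.168.1.2", "192.168.1.3");
        for (String ip : List.of("10.0.0.1", "10.0.0.2", "10.0.0.1")) {
            System.out.println(ip + " -> " + go(servers, ip)); // same IP, same server
        }
    }
}
```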

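The weakness of plain modulo hashing is that adding or removing a server changes the divisor, so most clients are remapped to a different server. The figures below use consistent hashing, which limits that remapping. Let's see how it works.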

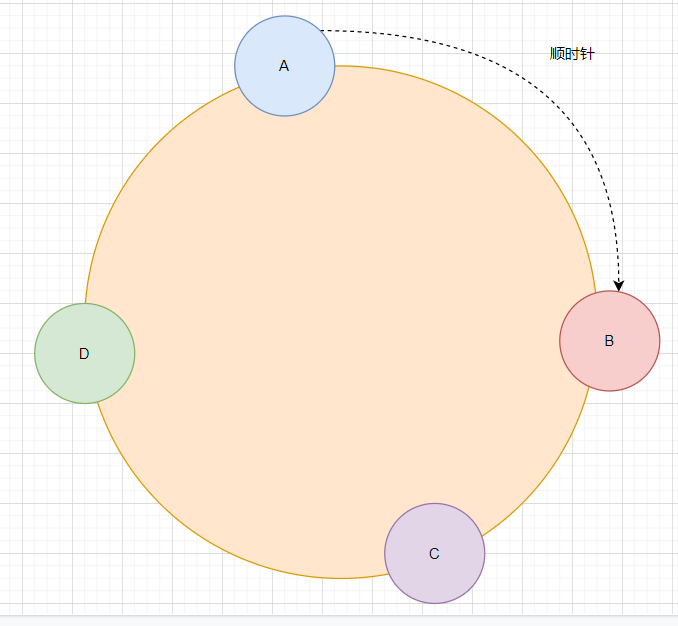

As shown in the figure, imagine the circle as being made up of countless points. When a request arrives, it is handed to the first server found by moving clockwise from the request's position. For example, if the request falls between A and B, server B handles it.

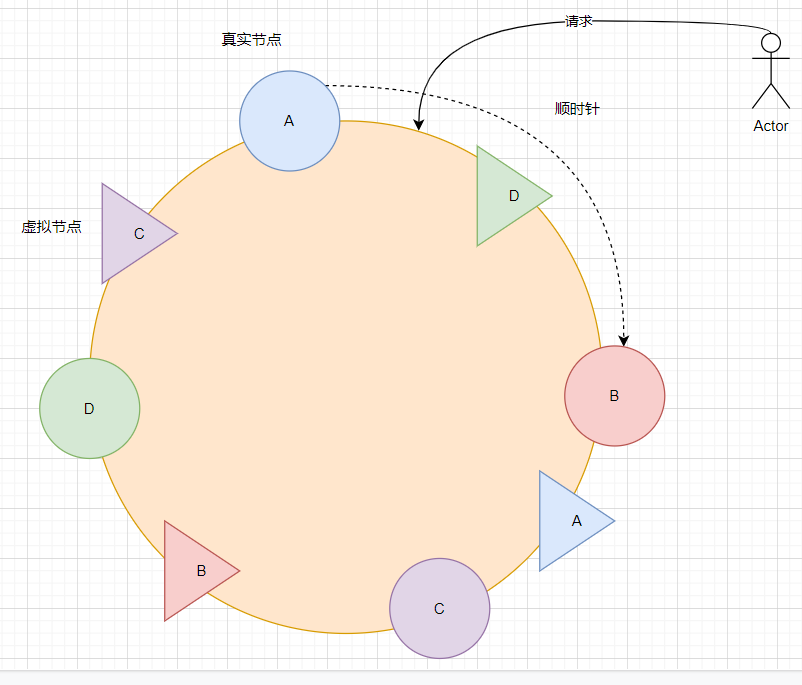

If server C goes down, server D's range becomes larger. D then carries too much load while server B is relatively idle. This situation is called hash skew. The fix is to insert virtual nodes between the real servers, as shown in the figure:

In the figure, triangles are virtual nodes and circles are real nodes. For the request shown, the clockwise rule hands it to virtual node D, and the real server D ultimately processes it. Spreading each server's virtual nodes around the ring in this way reduces the chance that one server is overloaded while another sits idle.

Code implementation:

```java
import java.util.SortedMap;
import java.util.TreeMap;

public class ConsistentHash {
    private static String go(String client) {
        int nodeCount = 20; // virtual nodes per real server
        TreeMap<Integer, String> treeMap = new TreeMap<>();
        for (String s : new Servers().list) {
            for (int i = 0; i < nodeCount; i++) {
                // each virtual node's hash maps back to its real server s
                treeMap.put((s + "--The server---" + i).hashCode(), s);
            }
        }
        int clientHash = client.hashCode();
        // all virtual nodes at or clockwise after the client's position
        SortedMap<Integer, String> subMap = treeMap.tailMap(clientHash);
        Integer firstHash;
        if (!subMap.isEmpty()) {
            firstHash = subMap.firstKey();
        } else {
            firstHash = treeMap.firstKey(); // past the last node: wrap around the ring
        }
        return treeMap.get(firstHash);
    }

    public static void main(String[] args) {
        System.out.println(go("It's a nice day today"));
        System.out.println(go("192.168.5.258"));
        System.out.println(go("0"));
        System.out.println(go("-110000"));
        System.out.println(go("it 's raining and blowing hard"));
    }
}
```
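The code above rebuilds the whole ring on every call. It also uses the raw `String.hashCode()`, which gives nearby values to keys such as `ip--The server---0` and `ip--The server---1`, so one server's virtual nodes tend to cluster. The sketch below is a variant built on two stated assumptions. First, the ring is built once and updated when servers are added or removed. Second, keys are hashed with `String.hashCode()` followed by the MurmurHash3 32-bit finalizer to spread them around the ring. `ConsistentHashRing` and its methods are illustrative names.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical consistent-hash ring that is built once and supports removal.
class ConsistentHashRing {
    private final TreeMap<Integer, String> ring = new TreeMap<>();
    private final int virtualNodes;

    ConsistentHashRing(List<String> servers, int virtualNodes) {
        this.virtualNodes = virtualNodes;
        for (String s : servers) {
            addServer(s);
        }
    }

    // Assumption: hashCode() mixed with the MurmurHash3 fmix32 finalizer
    static int hash(String key) {
        int h = key.hashCode();
        h ^= h >>> 16;
        h *= 0x85ebca6b;
        h ^= h >>> 13;
        h *= 0xc2b2ae35;
        h ^= h >>> 16;
        return h;
    }

    void addServer(String server) {
        for (int i = 0; i < virtualNodes; i++) {
            ring.put(hash(server + "#" + i), server);
        }
    }

    void removeServer(String server) {
        for (int i = 0; i < virtualNodes; i++) {
            ring.remove(hash(server + "#" + i), server); // only drop nodes this server owns
        }
    }

    String route(String client) {
        if (ring.isEmpty()) throw new IllegalStateException("no servers");
        // first node clockwise from the client's hash, wrapping to the start of the ring
        Map.Entry<Integer, String> e = ring.ceilingEntry(hash(client));
        return (e != null ? e : ring.firstEntry()).getValue();
    }

    public static void main(String[] args) {
        var ring = new ConsistentHashRing(
                List.of("192.168.1.1", "192.168.1.2", "192.168.1.3"), 100);
        List<String> clients = List.of("10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4");
        for (String c : clients) System.out.println(c + " -> " + ring.route(c));
        ring.removeServer("192.168.1.2");
        for (String c : clients) System.out.println(c + " -> " + ring.route(c));
    }
}
```

Removing a server only moves the clients that were mapped to its virtual nodes. Every other client keeps its server, which is the property that plain modulo hashing lacks.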

4, Least connections method

The least connections algorithm is more flexible and adaptive. Back-end servers have different configurations, so they process requests at different speeds. Based on the current connection state of each back-end server, the algorithm dynamically picks the server with the fewest outstanding connections to handle the current request. This makes the best possible use of the back-end servers and spreads the load across them sensibly.

For example, if server A has 100 outstanding requests, server B has 5 and server C has only 3, server C is clearly the one to choose. This approach is more principled. Unfortunately, the toy setup in this article has no real connections, so a true least connections algorithm cannot be simulated here.
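The idea can still be sketched in-process by counting connections by hand, with the caller reporting when each request starts and finishes. The sketch below assumes exactly that. `LeastConnections`, `acquire` and `release` are hypothetical names, and a real load balancer would take the counts from its actual connections.

```java
import java.util.List;

// Hypothetical least-connections balancer with hand-maintained counters.
class LeastConnections {
    private final List<String> servers;
    private final int[] active; // active connections per server

    LeastConnections(List<String> servers) {
        this.servers = List.copyOf(servers);
        this.active = new int[servers.size()];
    }

    // Pick the server with the fewest active connections (earliest wins ties) and count the new one.
    synchronized String acquire() {
        int best = 0;
        for (int i = 1; i < active.length; i++) {
            if (active[i] < active[best]) {
                best = i;
            }
        }
        active[best]++;
        return servers.get(best);
    }

    // Call when a request on this server finishes.
    synchronized void release(String server) {
        int i = servers.indexOf(server);
        if (i < 0 || active[i] == 0) throw new IllegalArgumentException("unknown or idle server: " + server);
        active[i]--;
    }

    public static void main(String[] args) {
        var lb = new LeastConnections(List.of("192.168.1.1", "192.168.1.2", "192.168.1.3"));
        System.out.println(lb.acquire()); // 192.168.1.1 (all idle, earliest wins)
        String busy = lb.acquire();       // 192.168.1.2
        System.out.println(busy);
        System.out.println(lb.acquire()); // 192.168.1.3
        lb.release(busy);                 // 192.168.1.2 finishes its request
        System.out.println(lb.acquire()); // 192.168.1.2 again: now the least loaded
    }
}
```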

References: https://juejin.cn/post/6844903793012768781


https://www.pdai.tech/md/algorithm/alg-domain-load-balance.html#%E9%9A%8F%E6%9C%BA%E6%B3%95random