This article records the design and implementation of LocalMQ, a message queue with persistence that I built for the Aliyun middleware competition a month ago. Note that LocalMQ borrows the core Broker design of RocketMQ, and its earliest source code was a modification of RocketMQ's. References and other material on message queues are collected here, and the source code is in the LocalMQ repository. My skills are limited, and because of my graduation trip I have not yet had time to optimize the code, so this article may contain mistakes and omissions; corrections are welcome.

LocalMQ: Building RocketMQ-like high-performance message queues from scratch

Intuitively, a message queue is like a reservoir: it decouples producers from consumers and smooths out the differences in computational load and timing between them. Today's mainstream message queues include the well-known Kafka, RabbitMQ and RocketMQ. In my implementation, LocalMQ, three versions were built in turn, from simple to complex: MemoryMessageQueue, EmbeddedMessageQueue and LocalMessageQueue. Note that all three versions use the so-called pull mode, in which consumers actively request messages from the queue in order to consume them. Many functional and performance test cases are provided in the wx.demo.* package.

```java
// First, download the code from https://parg.co/beX
// Then change the parent class of DefaultProducer:
//   to test MemoryMessageQueue, extend MemoryProducer;
//   to test EmbeddedMessageQueue, extend EmbeddedProducer;
//   by default, LocalMessageQueue is tested.
// Note that the same change must be made to DefaultPullConsumer.
public class DefaultProducer extends LocalProducer { ... }
```

```shell
# Run the test cases with mvn (the project can also be opened in Eclipse or IntelliJ)
mvn clean package -U assembly:assembly -Dmaven.test.skip=true
java -Xmx2048m -Xms2048m -cp open-messaging-wx.demo-1.0.jar wx.demo.benchmark.ProducerBenchmark
```

The simplest version, MemoryMessageQueue, stores message data in memory, grouped by topic. Its main structure is as follows:

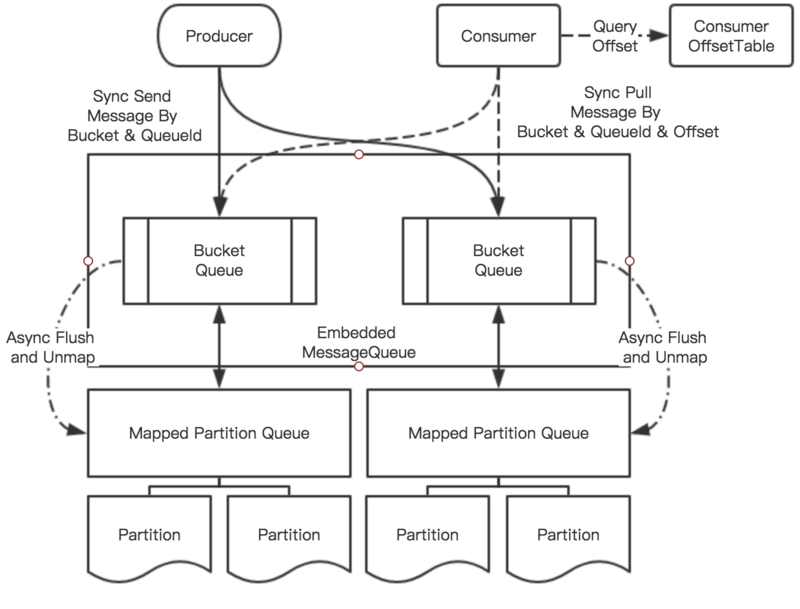

MemoryMessageQueue provides synchronous message submission and pull operations. It caches all messages on the heap in a HashMap and keeps a separate in-memory queueOffsets table that records each queue's consumption offset within every topic. Whereas MemoryMessageQueue cannot persist messages, EmbeddedMessageQueue is a slightly more complex queue that supports persistence to disk. EmbeddedMessageQueue builds MappedPartitionQueue on top of the MappedByteBuffer provided by Java NIO. Each MappedPartitionQueue corresponds to multiple physical files on disk but presents them to the upper layer as a single logical file. The EmbeddedMessageQueue structure is shown in the following figure:

In EmbeddedMessageQueue, producers synchronously submit messages to a BucketQueue, and each Bucket can be regarded as a Topic or Queue. EmbeddedMessageQueue also contains an asynchronous thread that periodically flushes data from the MappedPartitionQueue to disk. EmbeddedMessageQueue assumes that once a BucketQueue is assigned to a Consumer, that Consumer occupies it and consumes all of its cached messages; each Consumer keeps an independent Consumer Offset Table that records its consumption progress in the queue. The drawbacks of EmbeddedMessageQueue are:

Mixed processing and flag bits: EmbeddedMessageQueue provides only the simplest message serialization model and cannot record additional message attributes.

Timing of persistence to disk: EmbeddedMessageQueue uses only one level of cache and persists a file only when its Partition is full.

Post-processing of appended messages: EmbeddedMessageQueue writes messages directly into the MappedPartitionQueue of a BucketQueue, so it cannot index or filter messages dynamically after they are written, and it is hard to extend.

Intermittent pulling is not handled: EmbeddedMessageQueue assumes that a Consumer processes all messages of a single Partition in a BucketQueue in one go, so it records its progress only as a file-level offset. If only part of a Partition has been pulled, the next pull still starts at the head of the next file.

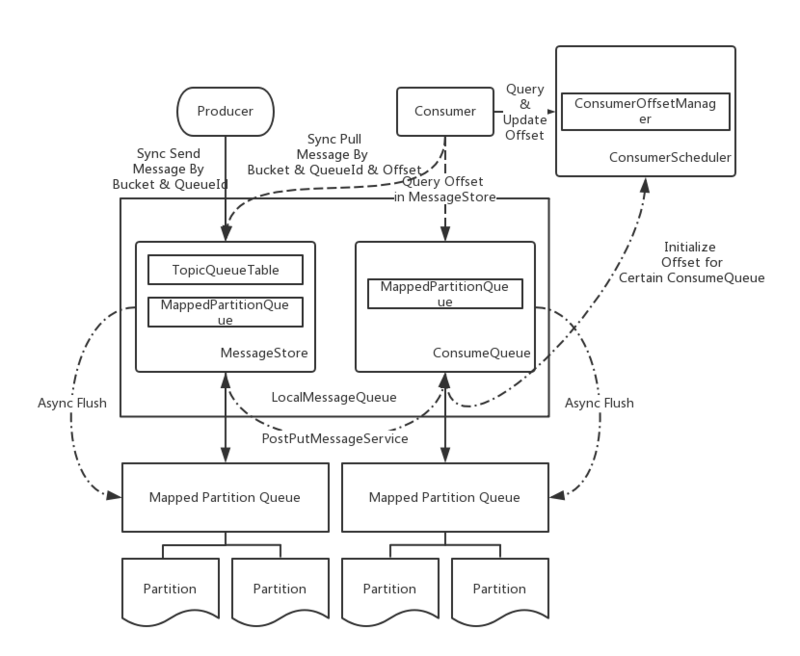

In EmbeddedMessageQueue, each Producer thread persists its messages to its own files. In LocalMessageQueue, messages are first written to the MessageStore and then processed a second time by PostPutMessageService. The structure of LocalMessageQueue is as follows:

The biggest change in LocalMessageQueue is that all messages are stored in a single, independent MessageStore (similar to the CommitLog in RocketMQ) and then divided into separate ConsumeQueues by Topic-queueId. The queueId is determined by the corresponding Producer's exclusive number, and each Consumer is assigned a ConsumeQueue that it occupies exclusively (similar to the ConsumeQueue in RocketMQ), which ensures that the messages produced by one Producer are consumed by one dedicated Consumer. LocalMessageQueue also uses MappedPartitionQueue as the underlying file system abstraction, and it has an independent Consumer Offset Manager that tracks each consumer's consumption progress, which makes recovery after failures easier.
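To make the split between MessageStore and ConsumeQueue concrete, here is a minimal in-memory sketch. It assumes String bodies, an in-memory byte array in place of the mapped CommitLog files, and synchronous dispatch (LocalMQ dispatches asynchronously); the class `CentralStoreSketch` and its methods are hypothetical and not part of LocalMQ.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical sketch: every message body goes into one shared store (the CommitLog role),
 * while each "topic-queueId" key keeps only (offset, size) entries that point into it
 * (the ConsumeQueue role).
 */
final class CentralStoreSketch {
    private final ByteArrayOutputStream store = new ByteArrayOutputStream();
    private final Map<String, List<int[]>> consumeQueues = new HashMap<>();

    synchronized void put(String topic, int queueId, String body) {
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        int offset = store.size();                 // physical offset in the shared store
        store.write(bytes, 0, bytes.length);       // append the body to the shared store
        consumeQueues.computeIfAbsent(topic + "-" + queueId, k -> new ArrayList<>())
                     .add(new int[] {offset, bytes.length}); // index entry only
    }

    /** Reads the n-th message of a consume queue by following its index entry. */
    synchronized String get(String topic, int queueId, int n) {
        List<int[]> cq = consumeQueues.get(topic + "-" + queueId);
        if (cq == null || n >= cq.size()) {
            return null;
        }
        int[] entry = cq.get(n);
        byte[] all = store.toByteArray();          // copies the store; fine for a sketch
        return new String(Arrays.copyOfRange(all, entry[0], entry[0] + entry[1]), StandardCharsets.UTF_8);
    }

    synchronized int storeSize() {
        return store.size();
    }
}
```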

Design outline

Sequential consumption

This section draws on Principles and Practice of the Distributed Open Messaging System (RocketMQ).

An important feature of messaging products is ordering: messages should be consumed in the same order in which they were sent. With multiple senders, guaranteeing a global order is expensive, so it is enough to guarantee the order of each sender. For example, if P1 sends M11, M12, M13 and P2 sends M21, M22, M23, consumers only need to preserve the order of M11, M12, M13 and the order of M21, M22, M23. Therefore the consumption order M11, M21, M12, M13, M22, M23 is correct, and so is M11, M21, M22, M12, M13, M23, whereas M11, M13, M21, M22, M23, M12 is wrong because M12 and M13 are reversed. If a producer produces two messages, M1 and M2, the most intuitive way to guarantee their order is an acknowledgment mechanism similar to TCP's:

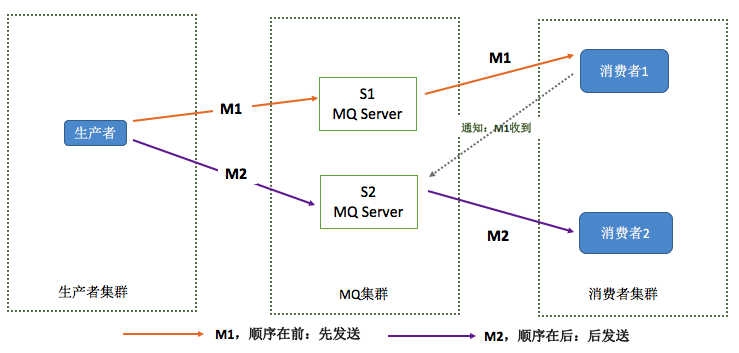

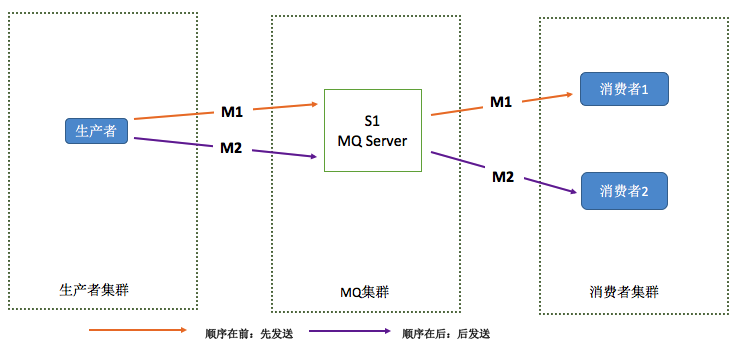
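The per-sender ordering rule from the P1/P2 example can be checked mechanically. The sketch below is illustrative only: it assumes message ids of the form used above (`M`, then a producer digit, then a sequence number), checks only the relative order within each producer, and does not detect missing messages. `PerProducerOrderCheck` is a hypothetical name.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical checker for per-producer (not global) ordering. */
final class PerProducerOrderCheck {
    static boolean preservesPerProducerOrder(List<String> consumed) {
        // Last sequence number seen for each producer
        Map<Character, Integer> lastSeq = new HashMap<>();
        for (String id : consumed) {
            char producer = id.charAt(1);                 // "M12" -> producer '1'
            int seq = Integer.parseInt(id.substring(2));  // "M12" -> sequence 2
            Integer prev = lastSeq.get(producer);
            if (prev != null && seq <= prev) {
                return false; // this producer's messages were reordered (or duplicated)
            }
            lastSeq.put(producer, seq);
        }
        return true;
    }
}
```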

However, in the acknowledgment model, if M1 and M2 are sent to two different message servers, we cannot control when each of them reaches its server; M2 may already have been delivered to consumers before M1 even arrives at its server. To solve this problem, an improved approach sends both M1 and M2 to a single message server, which then delivers them to consumers on a first-come, first-consumed basis:

However, in practice, M2 may still be consumed before M1 if, because of network delay or other problems, M1 takes longer in transit than M2. Therefore, strict message ordering requires a one-to-one correspondence among producers, message servers and consumers. In LocalMQ, messages are first assigned to a unique Topic-queueId queue according to their producer, and each consumption queue is held exclusively by only one consumer at a time. If a consumer is interrupted unexpectedly before it finishes consuming the queue, the queue is not reassigned during a retention window; once the window has expired, the queue is assigned to a new consumer, and even if the original consumer recovers, it can no longer pull the messages in that queue.
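A minimal sketch of exclusive queue ownership with a retention window, under stated assumptions: time is passed in explicitly so the behaviour is deterministic, a consumer renews its ownership each time it calls `tryAcquire`, and `QueueLeaseTable` and its methods are hypothetical rather than LocalMQ's actual API.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical lease table: one owner per queue, reassignable only after the retention window. */
final class QueueLeaseTable {
    private static final class Lease {
        final String consumerId;
        long lastRenewMillis;

        Lease(String consumerId, long nowMillis) {
            this.consumerId = consumerId;
            this.lastRenewMillis = nowMillis;
        }
    }

    private final long retentionMillis;
    private final Map<String, Lease> leases = new HashMap<>();

    QueueLeaseTable(long retentionMillis) {
        this.retentionMillis = retentionMillis;
    }

    /** Acquire or renew a queue; returns true if consumerId owns it afterwards. */
    synchronized boolean tryAcquire(String queueKey, String consumerId, long nowMillis) {
        Lease lease = leases.get(queueKey);
        if (lease == null || nowMillis - lease.lastRenewMillis > retentionMillis) {
            // Free, or the previous owner's retention window has expired: (re)assign
            leases.put(queueKey, new Lease(consumerId, nowMillis));
            return true;
        }
        if (lease.consumerId.equals(consumerId)) {
            lease.lastRenewMillis = nowMillis; // the owner renews its lease
            return true;
        }
        return false; // still reserved for another consumer
    }

    /** A consumer may pull only while it is the recorded owner of the queue. */
    synchronized boolean canPull(String queueKey, String consumerId) {
        Lease lease = leases.get(queueKey);
        return lease != null && lease.consumerId.equals(consumerId);
    }
}
```

In this sketch a crashed consumer simply stops renewing; another consumer gets the queue only after `retentionMillis` has passed, and from then on the original consumer's `canPull` returns false.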

Data storage

LocalMQ currently uses file-system-based persistent storage, implemented mainly in MappedPartition and MappedPartitionQueue; both classes are described in detail below. This section discusses the file layout of the stored data. For LocalMessageQueue, the layout is as follows:

```
messageStore
-- MapFile1
-- MapFile2
consumeQueue
-- Topic1
---- queueId1
------ MapFile1
------ MapFile2
---- queueId2
------ MapFile1
------ MapFile2
-- Queue1
---- queueId1
------ MapFile1
------ MapFile2
---- queueId2
------ MapFile1
------ MapFile2
```

LocalMessageQueue uses a unified message storage scheme, so the actual content of every message is stored in the messageStore directory, while consumeQueue stores the message index, i.e. each message's offset in the message store. LocalMQ uses MappedPartitionQueue to manage what is logically a single file and automatically splits it into multiple physically independent MappedFiles according to the configured single-file size. Each MappedPartition is named after its offset, i.e. the global offset of the file's first byte; pos/position consistently denotes the local offset within a single file, and index denotes the position of a file within its folder.
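The naming and addressing rules above can be sketched as follows. The 20-digit zero-padded file name is an assumption borrowed from RocketMQ (the article does not show `FSExtra.offset2FileName`); it keeps lexicographic and numeric order identical and round-trips through `Long.parseLong`, which is how `MappedPartition.init` recovers `fileFromOffset`. `PartitionAddressing` and its helpers are hypothetical.

```java
/**
 * Hypothetical helpers for a layout in which every file has the same size
 * and is named after the global offset of its first byte.
 */
final class PartitionAddressing {
    /** Assumed naming scheme: zero-padded to 20 digits. */
    static String offset2FileName(long offset) {
        return String.format("%020d", offset);
    }

    /** index: position of the file inside its folder */
    static int fileIndex(long globalOffset, int fileSize) {
        return (int) (globalOffset / fileSize);
    }

    /** pos: local offset inside that file */
    static int position(long globalOffset, int fileSize) {
        return (int) (globalOffset % fileSize);
    }

    /** Global offset of the first byte of the file containing globalOffset (its name) */
    static long fileFromOffset(long globalOffset, int fileSize) {
        return globalOffset - globalOffset % fileSize;
    }
}
```

For example, with 1024-byte files, global offset 2500 lives in the file at index 2, named after offset 2048, at local position 452.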

Performance optimization

While writing LocalMQ, I found that streamlining the execution flow, avoiding repeated computation and extra variables, and choosing an appropriate concurrency strategy have a large impact on the results. For example, after I switched from a spin lock to a reentrant lock, local test TPS rose by about 5%. I also measured the share of time spent in each stage of the producer's work. Construction (including serialization of message attributes) and sending (writing to the MappedFileQueue, without a secondary cache) are both synchronous, and together they take the largest share of time:

```
[2017-06-01 12:13:21,802] INFO: construction time: 0.471270, transmission time: 0.428567, persistence time: 0.100163
[2017-06-01 12:25:31,275] INFO: Construct time-consuming ratio: 0.275170, send time-consuming ratio: 0.573520, persistence time-consuming ratio: 0.151309
```

Code-level optimization

While implementing LocalMQ, what struck me most was how much the performance of different code implementing the same function can vary. Avoid redundant variable declarations and object creation, extra memory allocation and the garbage collection it causes, and redundant execution steps. In addition, choose appropriate data structures wherever possible; for example, in some places I switched from ArrayList to LinkedList and from ConcurrentHashMap to HashMap.

Asynchronous IO

Use asynchronous IO and flush sequentially: I found that having multiple threads flush concurrently performed worse than having a single thread flush sequentially.

Concurrency control

Minimize the scope of lock control.

Optimize for concurrent computation: put all time-consuming computation in the concurrently running Producers.

Use locks appropriately: measured in TPS, the reentrant lock performed nearly five times better than the spin lock.
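The first two points can be combined as in the sketch below: each producer thread serializes its message without holding any lock, and only the short critical section that reserves a position and copies bytes into the shared buffer runs under a `ReentrantLock`. `SharedLogSketch` is a hypothetical class, and the sketch makes no claim about the TPS numbers above.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.locks.ReentrantLock;

/** Hypothetical shared log illustrating a minimal lock scope. */
final class SharedLogSketch {
    private final ByteBuffer buffer;
    private final ReentrantLock putLock = new ReentrantLock();

    SharedLogSketch(int capacity) {
        this.buffer = ByteBuffer.allocate(capacity);
    }

    /** Serialization: done concurrently by each producer thread, outside any lock. */
    static byte[] encode(String body) {
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        ByteBuffer buf = ByteBuffer.allocate(4 + bytes.length);
        buf.putInt(bytes.length).put(bytes); // length prefix + body
        return buf.array();
    }

    /** Only reserving the position and copying are serialized. Returns the start offset, or -1 if full. */
    long append(byte[] encoded) {
        putLock.lock();
        try {
            if (buffer.remaining() < encoded.length) {
                return -1;
            }
            long start = buffer.position();
            buffer.put(encoded);
            return start;
        } finally {
            putLock.unlock();
        }
    }

    int writtenBytes() {
        putLock.lock();
        try {
            return buffer.position();
        } finally {
            putLock.unlock();
        }
    }
}
```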

MemoryMessageQueue

Source code reference Here

MemoryMessageQueue is the simplest implementation, but its code reflects the basic flow of a message queue. First, the producer creates a message and sends it to the message queue:

```java
// Create a message
BytesMessage message = messageFactory.createBytesMessageToTopic(topic, body);
// Send the message
messageQueue.putMessage(topic, message);
```

In the putMessage function, messages are stored in memory:

```java
// Stores all messages, grouped by bucket
private Map<String, ArrayList<Message>> messageBuckets = new HashMap<>();

// Add a message
public synchronized PutMessageResult putMessage(String bucket, Message message) {
    if (!messageBuckets.containsKey(bucket)) {
        messageBuckets.put(bucket, new ArrayList<>(1024));
    }
    ArrayList<Message> bucketList = messageBuckets.get(bucket);
    bucketList.add(message);
    return new PutMessageResult(PutMessageStatus.PUT_OK, null);
}
```

The Consumer pulls and consumes messages according to the specified Bucket and queueId, polling in round-robin order when it needs to pull from multiple Buckets:

```java
// Use round robin
int checkNum = 0;
while (++checkNum <= bucketList.size()) {
    String bucket = bucketList.get((++lastIndex) % (bucketList.size()));
    Message message = messageQueue.pullMessage(queue, bucket);
    if (message != null) {
        return message;
    }
}
```

The pullMessage function of MemoryMessageQueue first checks whether the target Bucket exists, and then uses the pull offset recorded in the built-in queueOffsets to decide whether everything has already been pulled. If not, it returns the message and updates the local offset:

```java
private Map<String, HashMap<String, Integer>> queueOffsets = new HashMap<>();
...
public synchronized Message pullMessage(String queue, String bucket) {
    ...
    ArrayList<Message> bucketList = messageBuckets.get(bucket);
    if (bucketList == null) {
        return null;
    }
    HashMap<String, Integer> offsetMap = queueOffsets.get(queue);
    if (offsetMap == null) {
        offsetMap = new HashMap<>();
        queueOffsets.put(queue, offsetMap);
    }
    int offset = offsetMap.getOrDefault(bucket, 0);
    if (offset >= bucketList.size()) {
        return null;
    }
    Message message = bucketList.get(offset);
    offsetMap.put(bucket, ++offset);
    ...
}
```
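Putting the pieces together, a self-contained version of this flow might look like the following. It is a sketch, not the LocalMQ source: messages are plain Strings instead of `Message`/`BytesMessage`, and `MemoryQueueSketch` and `RoundRobinConsumerSketch` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Messages per bucket on the heap, plus a per-queue, per-bucket consumption offset. */
final class MemoryQueueSketch {
    private final Map<String, List<String>> messageBuckets = new HashMap<>();
    private final Map<String, Map<String, Integer>> queueOffsets = new HashMap<>();

    synchronized void putMessage(String bucket, String message) {
        messageBuckets.computeIfAbsent(bucket, b -> new ArrayList<>(1024)).add(message);
    }

    /** Returns the next unconsumed message of the bucket for this queue, or null. */
    synchronized String pullMessage(String queue, String bucket) {
        List<String> bucketList = messageBuckets.get(bucket);
        if (bucketList == null) {
            return null;
        }
        Map<String, Integer> offsetMap = queueOffsets.computeIfAbsent(queue, q -> new HashMap<>());
        int offset = offsetMap.getOrDefault(bucket, 0);
        if (offset >= bucketList.size()) {
            return null; // everything in this bucket was already pulled by this queue
        }
        offsetMap.put(bucket, offset + 1);
        return bucketList.get(offset);
    }
}

/** Consumer side: round robin over the subscribed buckets. */
final class RoundRobinConsumerSketch {
    private final MemoryQueueSketch messageQueue;
    private final String queue;
    private final List<String> bucketList;
    private int lastIndex = -1;

    RoundRobinConsumerSketch(MemoryQueueSketch messageQueue, String queue, List<String> bucketList) {
        this.messageQueue = messageQueue;
        this.queue = queue;
        this.bucketList = bucketList;
    }

    String poll() {
        for (int checkNum = 0; checkNum < bucketList.size(); checkNum++) {
            lastIndex = (lastIndex + 1) % bucketList.size();
            String message = messageQueue.pullMessage(queue, bucketList.get(lastIndex));
            if (message != null) {
                return message;
            }
        }
        return null; // every subscribed bucket is drained
    }
}
```

Because offsets are kept per queue, a second consumer queue reading the same bucket starts again from its first message.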

EmbeddedMessageQueue

Source code reference Here

EmbeddedMessageQueue introduces support for message persistence. This section also discusses message serialization and the underlying MappedPartitionQueue implementation.

Message serialization

The message format defined in EmbeddedMessageQueue is as follows:

| No. | Field | Description | Length (bytes) |
|---|---|---|---|
| 1 | TOTALSIZE | Message size | 4 |
| 2 | MAGICCODE | Magic code of the message | 4 |
| 3 | BODY | The first four bytes store the body length, followed by bodyLength bytes of body content | 4 + bodyLength |
| 4 | headers* | The first two bytes (short) store the header length, followed by headersLength bytes of header data | 2 + headersLength |
| 5 | properties* | The first two bytes (short) store the properties length, followed by propertiesLength bytes of property data | 2 + propertiesLength |

EmbeddedMessageSerializer, which inherits from MessageSerializer, is the main class responsible for message persistence. It provides a function for calculating the message length:

```java
/**
 * Calculate the length of a message. Note that headersByteArray and
 * propertiesByteArray are converted when the message is sent.
 *
 * @param message
 * @param headersByteArray
 * @param propertiesByteArray
 * @return
 */
public static int calMsgLength(DefaultBytesMessage message, byte[] headersByteArray, byte[] propertiesByteArray) {
    // Message body
    byte[] body = message.getBody();
    int bodyLength = body == null ? 0 : body.length;
    // Header length
    short headersLength = (short) headersByteArray.length;
    // Properties length
    short propertiesLength = (short) propertiesByteArray.length;
    // Total length of the message
    return calMsgLength(bodyLength, headersLength, propertiesLength);
}
```
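The three-argument `calMsgLength(bodyLength, headersLength, propertiesLength)` overload is not shown in the article. Assuming it simply follows the format table above, it would add up the fixed fields and the length-prefixed variable fields; `EmbeddedLengthSketch` is a hypothetical holder class.

```java
/** Hypothetical reconstruction of the three-argument overload from the format table. */
final class EmbeddedLengthSketch {
    static int calMsgLength(int bodyLength, short headersLength, short propertiesLength) {
        return 4                       // 1 TOTALSIZE
             + 4                       // 2 MAGICCODE
             + 4 + bodyLength          // 3 BODY: int length prefix + content
             + 2 + headersLength       // 4 HEADERS: short length prefix + data
             + 2 + propertiesLength;   // 5 PROPERTIES: short length prefix + data
    }
}
```

For a 10-byte body, 5 bytes of headers and 3 bytes of properties this gives 16 + 10 + 5 + 3 = 34 bytes.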

The encode function of EmbeddedMessageEncoder performs the actual message serialization:

```java
/**
 * Encode a message.
 *
 * @param message             message object
 * @param msgStoreItemMemory  internal buffer handle
 * @param msgLen              precomputed message length
 * @param headersByteArray    serialized message headers
 * @param propertiesByteArray serialized message properties
 */
public static final void encode(
        DefaultBytesMessage message,
        final ByteBuffer msgStoreItemMemory,
        int msgLen,
        byte[] headersByteArray,
        byte[] propertiesByteArray
) {
    // Message body
    byte[] body = message.getBody();
    int bodyLength = body == null ? 0 : body.length;
    // Header length
    short headersLength = (short) headersByteArray.length;
    // Properties length
    short propertiesLength = (short) propertiesByteArray.length;
    // Initialize the storage space
    resetByteBuffer(msgStoreItemMemory, msgLen);
    // 1 TOTALSIZE
    msgStoreItemMemory.putInt(msgLen);
    // 2 MAGICCODE
    msgStoreItemMemory.putInt(MESSAGE_MAGIC_CODE);
    // 3 BODY
    msgStoreItemMemory.putInt(bodyLength);
    if (bodyLength > 0)
        msgStoreItemMemory.put(message.getBody());
    // 4 HEADERS
    msgStoreItemMemory.putShort((short) headersLength);
    if (headersLength > 0)
        msgStoreItemMemory.put(headersByteArray);
    // 5 PROPERTIES
    msgStoreItemMemory.putShort((short) propertiesLength);
    if (propertiesLength > 0)
        msgStoreItemMemory.put(propertiesByteArray);
}
```

The corresponding deserialization is performed by EmbeddedMessageDecoder, which reads data from a ByteBuffer:

```java
/**
 * Deserialize a message object from the input ByteBuffer.
 *
 * @return the decoded message, or null if a blank or illegal magic code is found
 */
public static DefaultBytesMessage readMessageFromByteBuffer(ByteBuffer byteBuffer) {
    // 1 TOTAL SIZE
    int totalSize = byteBuffer.getInt();
    // 2 MAGIC CODE
    int magicCode = byteBuffer.getInt();
    switch (magicCode) {
        case MESSAGE_MAGIC_CODE:
            break;
        case BLANK_MAGIC_CODE:
            return null;
        default:
            // log.warning("found a illegal magic code 0x" + Integer.toHexString(magicCode));
            return null;
    }
    byte[] bytesContent = new byte[totalSize];
    // 3 BODY
    int bodyLen = byteBuffer.getInt();
    byte[] body = new byte[bodyLen];
    if (bodyLen > 0) {
        // Read the message body content
        byteBuffer.get(body, 0, bodyLen);
    }
    // 4 HEADERS
    short headersLength = byteBuffer.getShort();
    KeyValue headers = null;
    if (headersLength > 0) {
        byteBuffer.get(bytesContent, 0, headersLength);
        String headersStr = new String(bytesContent, 0, headersLength, EmbeddedMessageDecoder.CHARSET_UTF8);
        headers = string2KeyValue(headersStr);
    }
    // 5 PROPERTIES
    // Get the properties size
    short propertiesLength = byteBuffer.getShort();
    KeyValue properties = null;
    if (propertiesLength > 0) {
        byteBuffer.get(bytesContent, 0, propertiesLength);
        String propertiesStr = new String(bytesContent, 0, propertiesLength, EmbeddedMessageDecoder.CHARSET_UTF8);
        properties = string2KeyValue(propertiesStr);
    }
    // Return the decoded message
    return new DefaultBytesMessage(
            totalSize,
            headers,
            properties,
            body
    );
}
```
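To see the layout end to end, here is a self-contained round trip over a heap `ByteBuffer`. It is a simplified sketch: headers and properties are kept as raw bytes instead of being converted with `string2KeyValue`, the two magic-code values are made up, and `EmbeddedCodecSketch` is a hypothetical name.

```java
import java.nio.ByteBuffer;

/**
 * Round-trip sketch of the layout:
 * TOTALSIZE | MAGICCODE | int bodyLen + BODY | short headersLen + HEADERS | short propsLen + PROPERTIES
 */
final class EmbeddedCodecSketch {
    static final int MESSAGE_MAGIC_CODE = 0x4C4D5131; // hypothetical value
    static final int BLANK_MAGIC_CODE = 0x424C4E4B;   // hypothetical value

    static final class Decoded {
        final byte[] body;
        final byte[] headers;
        final byte[] properties;

        Decoded(byte[] body, byte[] headers, byte[] properties) {
            this.body = body;
            this.headers = headers;
            this.properties = properties;
        }
    }

    static void encode(ByteBuffer out, byte[] body, byte[] headers, byte[] properties) {
        int msgLen = 4 + 4 + 4 + body.length + 2 + headers.length + 2 + properties.length;
        out.putInt(msgLen);                                       // 1 TOTALSIZE
        out.putInt(MESSAGE_MAGIC_CODE);                           // 2 MAGICCODE
        out.putInt(body.length).put(body);                        // 3 BODY
        out.putShort((short) headers.length).put(headers);        // 4 HEADERS
        out.putShort((short) properties.length).put(properties);  // 5 PROPERTIES
    }

    /** Returns null on a blank or unknown magic code, mirroring readMessageFromByteBuffer. */
    static Decoded decode(ByteBuffer in) {
        in.getInt(); // TOTALSIZE: not needed to decode the remaining fields
        int magicCode = in.getInt();
        if (magicCode != MESSAGE_MAGIC_CODE) {
            return null;
        }
        byte[] body = new byte[in.getInt()];
        in.get(body);
        byte[] headers = new byte[in.getShort()];
        in.get(headers);
        byte[] properties = new byte[in.getShort()];
        in.get(properties);
        return new Decoded(body, headers, properties);
    }
}
```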

Message Writing

In EmbeddedMessageQueue, messages are actually written by BucketQueue's putMessage/putMessages functions, where each BucketQueue is uniquely identified by its Topic-queueId. Taking batch writing as an example, we first get the latest available MappedPartition from the MappedPartitionQueue contained in the BucketQueue:

```java
mappedPartition = this.mappedPartitionQueue.getLastMappedFileOrCreate(0);
```

We then call MappedPartition's appendMessages method, described below; here we discuss how each possible result of the append is handled. If the append succeeds, success is returned directly. If the MappedPartition does not have enough space left for the next message, MappedPartitionQueue must create a new MappedPartition, and the list of messages still to be written is recomputed:

```java
...
// Append the messages to the corresponding MappedPartition.
// Note: after the append, each message's offset in the MessageStore and its QueueOffset are written back into the message.
result = mappedPartition.appendMessages(messages, this.appendMessageCallback);
// Act on the result of the append
switch (result.getStatus()) {
    case PUT_OK:
        break;
    case END_OF_FILE:
        this.messageQueue.getFlushAndUnmapPartitionService().putPartition(mappedPartition);
        // The end of the file was reached, so create a new file
        mappedPartition = this.mappedPartitionQueue.getLastMappedFileOrCreate(0);
        if (null == mappedPartition) {
            // XXX: warn and notify me
            log.warning("Establish MappedPartition error, topic: " + messages.get(0).getTopicOrQueueName());
            beginTimeInLock = 0;
            return new PutMessageResult(PutMessageStatus.CREATE_MAPEDFILE_FAILED, result);
        }
        // Otherwise, append again:
        // get the number of messages already appended from the result
        int appendedMessageNum = result.getAppendedMessageNum();
        // Temporary list for the remaining messages
        ArrayList<DefaultBytesMessage> leftMessages = new ArrayList<>();
        // Add all messages that have not been appended yet
        for (int i = appendedMessageNum; i < messages.size(); i++) {
            leftMessages.add(messages.get(i));
        }
        result = mappedPartition.appendMessages(leftMessages, this.appendMessageCallback);
        break;
    case MESSAGE_SIZE_EXCEEDED:
    case PROPERTIES_SIZE_EXCEEDED:
        beginTimeInLock = 0;
        return new PutMessageResult(PutMessageStatus.MESSAGE_ILLEGAL, result);
    case UNKNOWN_ERROR:
        beginTimeInLock = 0;
        return new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result);
    default:
        beginTimeInLock = 0;
        return new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result);
}
...
```
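The END_OF_FILE branch can be reduced to the following self-contained sketch, in which fixed-size heap `ByteBuffer`s stand in for `MappedPartition`s and a batch is split across partitions when the current one fills up. The class and method names are hypothetical; unlike the code above, which retries once in a fresh partition, this loop keeps rolling until the whole batch is written.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical batch append that rolls over to a new partition on END_OF_FILE. */
final class RollingBatchAppendSketch {
    private final int partitionSize;
    final List<ByteBuffer> partitions = new ArrayList<>();

    RollingBatchAppendSketch(int partitionSize) {
        this.partitionSize = partitionSize;
    }

    /** Appends messages[from..] that fit; returns how many were appended (like getAppendedMessageNum). */
    private static int appendMessages(ByteBuffer partition, List<byte[]> messages, int from) {
        int appended = 0;
        for (int i = from; i < messages.size(); i++) {
            if (partition.remaining() < messages.get(i).length) {
                break; // END_OF_FILE: this message does not fit
            }
            partition.put(messages.get(i));
            appended++;
        }
        return appended;
    }

    void putMessages(List<byte[]> messages) {
        for (byte[] m : messages) {
            if (m.length > partitionSize) {
                // Corresponds to MESSAGE_SIZE_EXCEEDED; also guarantees the loop below terminates
                throw new IllegalArgumentException("message larger than a partition");
            }
        }
        if (partitions.isEmpty()) {
            partitions.add(ByteBuffer.allocate(partitionSize));
        }
        int done = 0;
        while (done < messages.size()) {
            done += appendMessages(partitions.get(partitions.size() - 1), messages, done);
            if (done < messages.size()) {
                // Full: hand the old partition to flushing (omitted here) and create a new one
                partitions.add(ByteBuffer.allocate(partitionSize));
            }
        }
    }
}
```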

Logical File Storage

MappedPartition

A MappedPartition physically maps a single file and is initialized with the file name and file size:

```java
/**
 * Initialize a memory-mapped file.
 *
 * @param fileName file name
 * @param fileSize file size
 * @throws IOException if an error occurs while opening the file
 */
private void init(final String fileName, final int fileSize) throws IOException {
    ...
    // Get the global offset of this file from its name
    this.fileFromOffset = Long.parseLong(this.file.getName());
    ...
    // Open the file
    this.fileChannel = new RandomAccessFile(this.file, "rw").getChannel();
    // Map the file into memory
    this.mappedByteBuffer = this.fileChannel.map(FileChannel.MapMode.READ_WRITE, 0, fileSize);
}
```

Initialization opens the file mapping. Later, when a message or other content is written, the supplied encoding callback (the message serialization wrapper described above) encodes the object into a byte stream and writes it:

```java
public AppendMessageResult appendMessage(final DefaultBytesMessage message, final AppendMessageCallback cb) {
    ...
    // Get the current write position
    int currentPos = this.wrotePosition.get();
    // If the file is still writable
    if (currentPos < this.fileSize) {
        // Get the actual write handle
        ByteBuffer byteBuffer = this.mappedByteBuffer.slice();
        // Move to the current write position
        byteBuffer.position(currentPos);
        // Holds the result of the write
        AppendMessageResult result = null;
        // Perform the actual write in the callback
        result = cb.doAppend(this.getFileFromOffset(), byteBuffer, this.fileSize - currentPos, message);
        this.wrotePosition.addAndGet(result.getWroteBytes());
        this.storeTimestamp = result.getStoreTimestamp();
        return result;
    }
    ...
}
```
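Combining `init` and `appendMessage`, a minimal MappedPartition-like class might look like this. It is a sketch under simplifying assumptions: it writes raw byte arrays instead of using an `AppendMessageCallback`, it assumes a single writer per partition (for example, callers holding the put lock), it omits unmapping and reference counting, and `MappedPartitionSketch` is a hypothetical name.

```java
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical single-file partition: name = global start offset, whole file mapped READ_WRITE. */
final class MappedPartitionSketch implements AutoCloseable {
    final long fileFromOffset;
    final int fileSize;
    private final FileChannel fileChannel;
    private final MappedByteBuffer mappedByteBuffer;
    private final AtomicInteger wrotePosition = new AtomicInteger(0);

    MappedPartitionSketch(File file, int fileSize) {
        this.fileSize = fileSize;
        this.fileFromOffset = Long.parseLong(file.getName()); // global offset from the file name
        try {
            this.fileChannel = new RandomAccessFile(file, "rw").getChannel();
            // READ_WRITE mapping grows the file to fileSize if it is shorter
            this.mappedByteBuffer = fileChannel.map(FileChannel.MapMode.READ_WRITE, 0, fileSize);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Appends data; returns the global offset it was written at, or -1 if it does not fit (END_OF_FILE). */
    long append(byte[] data) {
        int currentPos = wrotePosition.get();
        if (currentPos + data.length > fileSize) {
            return -1;
        }
        ByteBuffer byteBuffer = mappedByteBuffer.slice(); // independent position, shared content
        byteBuffer.position(currentPos);
        byteBuffer.put(data);
        wrotePosition.addAndGet(data.length);
        return fileFromOffset + currentPos;
    }

    /** Forces the mapped pages to disk. */
    void flush() {
        mappedByteBuffer.force();
    }

    int getWrotePosition() {
        return wrotePosition.get();
    }

    @Override
    public void close() {
        try {
            fileChannel.close();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```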

MappedPartitionQueue

MappedPartitionQueue manages multiple physical mapped files. Its constructor is as follows:

```java
// Stores all mapped files
private final CopyOnWriteArrayList<MappedPartition> mappedPartitions = new CopyOnWriteArrayList<MappedPartition>();
...
/**
 * Default constructor
 *
 * @param storePath                      storage directory, either the MessageStore directory or a ConsumeQueue directory
 * @param mappedFileSize
 * @param allocateMappedPartitionService
 */
public MappedPartitionQueue(final String storePath, int mappedFileSize,
                            AllocateMappedPartitionService allocateMappedPartitionService) {
    this.storePath = storePath;
    this.mappedFileSize = mappedFileSize;
    this.allocateMappedPartitionService = allocateMappedPartitionService;
}
```

Take the load function as an example of the loading process:

```java
/**
 * Load the sequence of memory-mapped files.
 *
 * @return
 */
public boolean load() {
    // Storage directory
    File dir = new File(this.storePath);
    // List all files in the directory
    File[] files = dir.listFiles();
    // Load only if the directory contains files
    if (files != null) {
        // Sort the files by name
        Arrays.sort(files);
        // Traverse all files
        for (File file : files) {
            // Stop loading at the first file whose length does not match the configured size
            if (file.length() != this.mappedFileSize) {
                log.warning(file + "\t" + file.length() + " length not matched message store config value, ignore it");
                return true;
            }
            // Otherwise, load the file
            try {
                // Actually open and map the file
                MappedPartition mappedPartition = new MappedPartition(file.getPath(), mappedFileSize);
                // Set the file pointers to the end of the file
                mappedPartition.setWrotePosition(this.mappedFileSize);
                mappedPartition.setFlushedPosition(this.mappedFileSize);
                // Add the file to the mappedPartitions list
                this.mappedPartitions.add(mappedPartition);
                // log.info("load " + file.getPath() + " OK");
            } catch (IOException e) {
                log.warning("load file " + file + " error");
                return false;
            }
        }
    }
    return true;
}
```

Asynchronous file pre-creation

For performance reasons, MappedPartitionQueue also creates files in advance: in getLastMappedFileOrCreate, when an allocateMappedPartitionService is present, that asynchronous service is used to pre-create files:

```java
/**
 * Find the last file, creating one from the start offset if necessary.
 *
 * @param startOffset
 * @return
 */
public MappedPartition getLastMappedFileOrCreate(final long startOffset) {
    ...
    // Create a file if necessary
    if (createOffset != -1) {
        // Path and name of the next file
        String nextFilePath = this.storePath + File.separator + FSExtra.offset2FileName(createOffset);
        // Path and name of the file after that
        String nextNextFilePath = this.storePath + File.separator + FSExtra.offset2FileName(createOffset + this.mappedFileSize);
        // Handle of the mapped file to be created
        MappedPartition mappedPartition = null;
        // Check whether a mapped-file creation service is available
        if (this.allocateMappedPartitionService != null) {
            // Create the file through the service (which handles preheating)
            mappedPartition = this.allocateMappedPartitionService.putRequestAndReturnMappedFile(nextFilePath,
                    nextNextFilePath, this.mappedFileSize);
        } else {
            // Otherwise, create it directly
            try {
                mappedPartition = new MappedPartition(nextFilePath, this.mappedFileSize);
            } catch (IOException e) {
                log.warning("create mappedPartition exception");
            }
        }
        ...
        return mappedPartition;
    }
    return mappedPartitionLast;
}
```

Here, AllocateMappedPartitionService continuously executes file-creation requests:

```java
@Override
public void run() {
    ...
    // Keep executing file allocation requests
    while (!this.isStopped() && this.mmapOperation()) {
    }
    ...
}

/**
 * Loop that pre-allocates mapped files.
 *
 * @Exception returns false only when interrupted by an external thread
 */
private boolean mmapOperation() {
    ...
    // Perform the operation
    try {
        // Take the next request
        req = this.requestQueue.take();
        // Get the matching request from the request table
        AllocateRequest expectedRequest = this.requestTable.get(req.getFilePath());
        ...
        // Check whether the mapped file has already been created
        if (req.getMappedPartition() == null) {
            // Record the creation start time
            long beginTime = System.currentTimeMillis();
            // Build the memory-mapped file object
            MappedPartition mappedPartition = new MappedPartition(req.getFilePath(), req.getFileSize());
            ...
            // Warm up the file (MessageStore files only)
            if (mappedPartition.getFileSize() >= mapedFileSizeCommitLog && isWarmMappedFileEnable) {
                mappedPartition.warmMappedFile();
            }
            // Write the created object back into the request
            req.setMappedPartition(mappedPartition);
            // Clear the exception flag
            this.hasException = false;
            // Mark success
            isSuccess = true;
        }
    ...
}
```
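The pre-creation idea, asking for the next file while also queueing the file after it, can be sketched with a single background thread. This is an illustration under assumptions, not LocalMQ's `AllocateMappedPartitionService`: files are only created and sized with `RandomAccessFile.setLength`, without mapping or warm-up, and `PreAllocatorSketch` is a hypothetical name.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Hypothetical pre-allocator: the caller waits only for the next file; the one after it is made in the background. */
final class PreAllocatorSketch implements AutoCloseable {
    private final ExecutorService worker = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "allocate-partition");
        t.setDaemon(true);
        return t;
    });
    // One pending or finished request per file path, playing the role of requestTable
    private final ConcurrentHashMap<String, CompletableFuture<String>> requestTable = new ConcurrentHashMap<>();

    private CompletableFuture<String> submit(String path, int fileSize) {
        return requestTable.computeIfAbsent(path, p -> CompletableFuture.supplyAsync(() -> {
            try (RandomAccessFile raf = new RandomAccessFile(p, "rw")) {
                raf.setLength(fileSize); // create the file at its full size
                return p;
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        }, worker));
    }

    /** Queue both files, then block only until the next one is ready. */
    String putRequestAndReturnFile(String nextFilePath, String nextNextFilePath, int fileSize) {
        CompletableFuture<String> next = submit(nextFilePath, fileSize);
        submit(nextNextFilePath, fileSize); // pre-created while the caller fills the next file
        String path = next.join();
        requestTable.remove(nextFilePath); // the caller now owns this file
        return path;
    }

    @Override
    public void close() {
        worker.shutdown();
    }
}
```

On the second call, the requested file is usually already finished, so the caller does not pay the creation cost on the write path.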

Asynchronous Flush

EmbeddedMessageQueue also contains a FlushAndUnmapPartitionService that asynchronously flushes files and unmaps files that are no longer needed. The core code of the service is as follows:

```java
private final ConcurrentLinkedQueue<MappedPartition> mappedPartitions = new ConcurrentLinkedQueue<>();
...
@Override
public void run() {
    while (!this.isStopped()) {
        int interval = 100;
        try {
            if (!this.mappedPartitions.isEmpty()) {
                long startTime = now();
                // Take the next MappedPartition to process
                MappedPartition mappedPartition = this.mappedPartitions.poll();
                // Write the current content to disk
                mappedPartition.flush(0);
                // Release space that is no longer needed
                mappedPartition.cleanup();
                long past = now() - startTime;
                // EmbeddedProducer.flushEclipseTime.addAndGet(past);
                if (past > 500) {
                    log.info("Flush data to disk and unmap MappedPartition costs " + past + " ms:" + mappedPartition.getFileName());
                }
            } else {
                // Nothing to flush: wait, then check again
                this.waitForRunning(interval);
            }
        } catch (Throwable e) {
            log.warning(this.getServiceName() + " service has exception. ");
        }
    }
}
```

A MappedPartition is added to mappedPartitions when appending a message returns END_OF_FILE, as described above.

LocalMessageQueue

Source code reference Here

Message Storage

LocalMessageQueue adopts a centralized message storage scheme. Its putMessage/putMessages functions actually call the message-writing function of the built-in MessageStore object:

```java
// Submit through the MessageStore
PutMessageResult result = this.messageStore.putMessage(message);
```

MessageStore is the central storage for all actual messages. LocalMessageQueue supports more complex message attributes:

| No. | Field | Description | Length (bytes) |
|---|---|---|---|
| 1 | TOTALSIZE | Message size | 4 |
| 2 | MAGICCODE | Magic code of the message | 4 |
| 3 | BODYCRC | CRC of the message body, used for verification on restart | 4 |
| 4 | QUEUEID | Queue ID | 4 |
| 5 | QUEUEOFFSET | Auto-incremented; not the actual offset in the consume queue, but the message's sequence number in this queue (so it reflects the number of messages in the queue). To find the entry in the consume queue, use QUEUEOFFSET × 12 as the offset address | 8 |
| 6 | PHYSICALOFFSET | Physical start offset of the message in the commitLog | 8 |
| 7 | STORETIMESTAMP | Storage timestamp | 8 |
| 8 | BODY | The first four bytes store the body length, followed by bodyLength bytes of body content | 4 + bodyLength |
| 9 | TOPICORQUEUENAME | The first byte stores the topic name length, followed by topicOrQueueNameLength bytes of topic name | 1 + topicOrQueueNameLength |
| 10 | headers* | The first two bytes (short) store the header length, followed by headersLength bytes of header data | 2 + headersLength |
| 11 | properties* | The first two bytes (short) store the properties length, followed by propertiesLength bytes of property data | 2 + propertiesLength |
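Summing the table gives 49 fixed bytes per record plus the variable-length parts. The sketch below computes that length and a BODYCRC value; using `java.util.zip.CRC32` truncated to an `int` is an assumption, since the article does not name the CRC function, and `LocalRecordLengthSketch` is a hypothetical name.

```java
import java.util.zip.CRC32;

/** Hypothetical helpers derived from the LocalMessageQueue format table. */
final class LocalRecordLengthSketch {
    static int calMsgLength(int bodyLength, int topicLength, int headersLength, int propertiesLength) {
        return 4                        // 1 TOTALSIZE
             + 4                        // 2 MAGICCODE
             + 4                        // 3 BODYCRC
             + 4                        // 4 QUEUEID
             + 8                        // 5 QUEUEOFFSET
             + 8                        // 6 PHYSICALOFFSET
             + 8                        // 7 STORETIMESTAMP
             + 4 + bodyLength           // 8 BODY: int length prefix + content
             + 1 + topicLength          // 9 TOPICORQUEUENAME: 1-byte length prefix + name
             + 2 + headersLength        // 10 headers: short length prefix + data
             + 2 + propertiesLength;    // 11 properties: short length prefix + data
    }

    /** Assumed BODYCRC: CRC-32 of the body, truncated to 4 bytes. */
    static int bodyCrc(byte[] body) {
        CRC32 crc = new CRC32();
        crc.update(body, 0, body.length);
        return (int) crc.getValue();
    }
}
```

For example, a 5-byte body in topic "TOPIC_1" (7 bytes) with no headers or properties takes 49 + 5 + 7 = 61 bytes.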

The MappedPartitionQueue initialized in its constructor is a group of fixed-size mapped files (1 GB per file by default):

```java
// Construct the mapped file queue
this.mappedPartitionQueue = new MappedPartitionQueue(
        ((LocalMessageQueueConfig) this.messageStore.getMessageQueueConfig()).getStorePathCommitLog(),
        mapedFileSizeCommitLog,
        messageStore.getAllocateMappedPartitionService(),
        this.flushMessageStoreService
);
```

Building ConsumeQueue

Unlike EmbeddedMessageQueue, LocalMessageQueue does not write directly to storage partitioned by Topic-queueId when a message is first submitted; instead, it relies on the built-in PostPutMessageService:

```java
/**
 * Post-put processing of messages
 */
private void doReput() {
    for (boolean doNext = true; this.isCommitLogAvailable() && doNext; ) {
        ...
        // Read the data starting at the current offset
        SelectMappedBufferResult result = this.messageStore.getMessageStore().getData(reputFromOffset);
        // Stop if there is no data
        if (result == null) {
            doNext = false;
            continue;
        }
        try {
            // Start position of the current data
            this.reputFromOffset = result.getStartOffset();
            // Read all messages sequentially
            for (int readSize = 0; readSize < result.getSize() && doNext; ) {
                // Read the message at the current position
                PostPutMessageRequest postPutMessageRequest = checkMessageAndReturnSize(result.getByteBuffer());
                int size = postPutMessageRequest.getMsgSize();
                readSpendTime.addAndGet(now() - startTime);
                startTime = now();
                // On success
                if (postPutMessageRequest.isSuccess()) {
                    if (size > 0) {
                        // Write the message position into its ConsumeQueue
                        this.messageStore.putMessagePositionInfo(postPutMessageRequest);
                        // Advance the read position
                        this.reputFromOffset += size;
                        readSize += size;
                    } else if (size == 0) {
                        // End of the file: roll over to the next file
                        this.reputFromOffset = this.messageStore.getMessageStore().rollNextFile(this.reputFromOffset);
                        readSize = result.getSize();
                    }
                    putSpendTime.addAndGet(now() - startTime);
                } else if (!postPutMessageRequest.isSuccess()) {
                    ...
                }
            }
        } finally {
            result.release();
        }
    }
}
```
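Stripped of timing and error handling, the reput loop reduces to the sketch below. The record layout (`[int totalSize][int queueId][payload]`, with a size of 0 marking the unused tail) is a simplification chosen for illustration, not LocalMQ's real format, and `ReputSketch` is hypothetical.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical reput loop: scan the store from reputFromOffset and index each record by queueId. */
final class ReputSketch {
    // queueId -> list of {commitLogOffset, size}
    final Map<Integer, List<long[]>> consumeQueues = new HashMap<>();
    long reputFromOffset = 0;

    void doReput(ByteBuffer store) {
        while (reputFromOffset + 8 <= store.limit()) {
            int pos = (int) reputFromOffset;
            int size = store.getInt(pos);          // absolute read: the buffer position is untouched
            if (size == 0) {
                break; // unused tail; a real store would roll to the next file here
            }
            int queueId = store.getInt(pos + 4);
            consumeQueues.computeIfAbsent(queueId, q -> new ArrayList<>())
                         .add(new long[] {reputFromOffset, size});
            reputFromOffset += size;               // next call resumes where this one stopped
        }
    }
}
```

Because `reputFromOffset` survives between calls, running `doReput` again after new records are appended indexes only the new records.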

The actual ConsumeQueue is created in the putMessagePositionInfo function:

```java
/**
 * Description: place the location of the message in its ConsumeQueue
 *
 * @param postPutMessageRequest
 */
public void putMessagePositionInfo(PostPutMessageRequest postPutMessageRequest) {
    // Find or create the ConsumeQueue
    ConsumeQueue cq = this.findConsumeQueue(postPutMessageRequest.getTopic(), postPutMessageRequest.getQueueId());
    // Write the message position at the right place in the ConsumeQueue
    cq.putMessagePositionInfoWrapper(postPutMessageRequest.getCommitLogOffset(),
            postPutMessageRequest.getMsgSize(),
            postPutMessageRequest.getConsumeQueueOffset());
}

/**
 * Description: find the ConsumeQueue by topic and queueId, creating it if it does not exist
 *
 * @param topic
 * @param queueId
 * @return
 */
public ConsumeQueue findConsumeQueue(String topic, int queueId) {
    ConcurrentHashMap<Integer, ConsumeQueue> map = consumeQueueTable.get(topic);
    ...
    // Look up the queueId under this topic
    ConsumeQueue logic = map.get(queueId);
    // If it is absent, create a new ConsumeQueue
    if (null == logic) {
        ConsumeQueue newLogic = new ConsumeQueue(
                topic,   // topic
                queueId, // queueId
                LocalMessageQueueConfig.mapedFileSizeConsumeQueue, // mapped file size
                this);
        ConsumeQueue oldLogic = map.putIfAbsent(queueId, newLogic);
        ...
    }
    return logic;
}
```
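The `...` in `findConsumeQueue` hides how a race between two threads creating the same queue is resolved. A common get-or-create idiom built on `ConcurrentHashMap.putIfAbsent` looks like the sketch below; the generic `QueueTable` class and its factory are hypothetical stand-ins for `consumeQueueTable` and the `ConsumeQueue` constructor, and this is not necessarily what the elided code does.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.function.BiFunction;

final class QueueTable<Q> {
    private final ConcurrentMap<String, ConcurrentMap<Integer, Q>> table = new ConcurrentHashMap<>();
    private final BiFunction<String, Integer, Q> factory; // stands in for new ConsumeQueue(...)

    QueueTable(BiFunction<String, Integer, Q> factory) {
        this.factory = factory;
    }

    Q findOrCreate(String topic, int queueId) {
        ConcurrentMap<Integer, Q> map = table.get(topic);
        if (map == null) {
            ConcurrentMap<Integer, Q> newMap = new ConcurrentHashMap<>();
            ConcurrentMap<Integer, Q> oldMap = table.putIfAbsent(topic, newMap);
            map = (oldMap != null) ? oldMap : newMap; // keep whichever map won the race
        }
        Q queue = map.get(queueId);
        if (queue == null) {
            Q created = factory.apply(topic, queueId);
            Q existing = map.putIfAbsent(queueId, created);
            // If another thread inserted first, use its instance and drop ours
            queue = (existing != null) ? existing : created;
        }
        return queue;
    }
}
```

The losing thread's instance is simply discarded, so this idiom assumes construction has no side effects worth undoing; `ConcurrentHashMap.computeIfAbsent` is an alternative that runs the factory at most once per key.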

The ConsumeQueue constructor sets up the memory-mapped files for that queue:

```java
/**
 * Description: main constructor
 *
 * @param topic
 * @param queueId
 * @param mappedFileSize
 * @param localMessageStore
 */
public ConsumeQueue(
        final String topic,
        final int queueId,
        final int mappedFileSize,
        final LocalMessageQueue localMessageStore) {
    ...
    // Directory of the current queue: storePath/topic/queueId
    String queueDir = this.storePath + File.separator + topic + File.separator + queueId;
    // Initialize the memory-mapped file queue
    this.mappedPartitionQueue = new MappedPartitionQueue(queueDir, mappedFileSize, null);
    this.byteBufferIndex = ByteBuffer.allocate(CQ_STORE_UNIT_SIZE);
}
```

The file format of ConsumeQueue is relatively simple:

```java
// Layout of a single entry in a ConsumeQueue file
// 1 | MessageStore Offset | long | 8 bytes
// 2 | Size                | int  | 4 bytes
```
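Because every entry has the same size, the n-th entry of a ConsumeQueue sits at byte position n × entry size, which is what lets the read path jump straight to a logical offset. A minimal sketch, assuming an entry of exactly 8 + 4 = 12 bytes with no padding (the actual value of `CQ_STORE_UNIT_SIZE` is not shown here) and a single in-memory buffer standing in for the mapped files; `CqEntry` is hypothetical.

```java
import java.nio.ByteBuffer;

final class CqEntry {
    // Assumed entry layout: 8-byte commit-log offset, then 4-byte message size, no padding
    static final int UNIT = 8 + 4;

    static void write(ByteBuffer file, int logicalIndex, long commitLogOffset, int size) {
        int pos = logicalIndex * UNIT; // fixed-size entries make the position a direct computation
        file.putLong(pos, commitLogOffset);
        file.putInt(pos + 8, size);
    }

    static long commitLogOffsetAt(ByteBuffer file, int logicalIndex) {
        return file.getLong(logicalIndex * UNIT);
    }

    static int sizeAt(ByteBuffer file, int logicalIndex) {
        return file.getInt(logicalIndex * UNIT + 8);
    }
}
```

This fixed stride is also why `batchPoll` can request `mapedFileSizeConsumeQueue / ConsumeQueue.CQ_STORE_UNIT_SIZE` messages: that is exactly the number of entries one ConsumeQueue file can hold.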

Message pulling

LocalPullConsumer pulls messages with a batch-pull mechanism: it pulls multiple messages from LocalMessageQueue into a local buffer in one go and then serves them from that buffer for processing (on the assumption that processing is time-consuming). In the batch-pull function, we first check whether the ConsumeQueue for the consumer's current topic and queue number contains data, then obtain the read offset and lock (occupy) that queue:

```java
/**
 * Description: batch-fetch messages. Note that this is only a prefetch; the read offset
 * is corrected only when the consumer actually takes the messages.
 */
private void batchPoll() {
    // Prefetch from the LocalMessageQueue
    LocalMessageQueue localMessageStore = (LocalMessageQueue) this.messageQueue;
    // Name of the bucket to fetch from next
    String bucket = bucketList.get((lastIndex) % (bucketList.size()));
    // First, get the offset of the queue to fetch from (and lock the queue)
    long offsetInQueue = localMessageStore.getConsumerScheduler()
            .queryOffsetAndLock("127.0.0.1:" + this.refId, bucket, this.getQueueId());
    // If this queueId is already occupied, move on to the next topic
    if (offsetInQueue == -2) {
        // Mark the current bucket as finished
        this.isFinishedTable.put(bucket, true);
        // Reset lastIndex or refOffset, and queueId
        this.resetLastIndexOrRefOffsetWhenNotFound();
    } else {
        // With a valid queue offset, try to retrieve messages
        consumerOffsetTable.put(bucket, new AtomicLong(offsetInQueue));
        // Request as many messages as one ConsumeQueue file can index, which effectively reads
        // the whole file at once. Note that this count is also bounded by the size of a single file.
        GetMessageResult getMessageResult = localMessageStore.getMessage(bucket, this.getQueueId(),
                this.consumerOffsetTable.get(bucket).get() + 1,
                mapedFileSizeConsumeQueue / ConsumeQueue.CQ_STORE_UNIT_SIZE);
        // If no data is found, switch to the next bucket
        if (getMessageResult.getStatus() != GetMessageStatus.FOUND) {
            // Mark the current bucket as finished
            this.isFinishedTable.put(bucket, true);
            this.resetLastIndexOrRefOffsetWhenNotFound();
        } else {
            // A consumer being killed abruptly is not handled, so the remote offset is updated right away
            localMessageStore.getConsumerScheduler().updateOffset("127.0.0.1:" + this.refId, bucket,
                    this.getQueueId(),
                    consumerOffsetTable.get(bucket).addAndGet(getMessageResult.getMessageCount()));
            // Read all the messages from the file system at once
            ArrayList<DefaultBytesMessage> messages = readMessagesFromGetMessageResult(getMessageResult);
            // Add the messages to the local queue
            this.messages.addAll(messages);
            // Only after this fetch succeeds do we move on to the next bucket
            lastIndex++;
        }
    }
}
```
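The offset bookkeeping in `batchPoll` is easy to misread: the stored value is the index of the last entry already taken (`-1` when nothing has been consumed), so a fetch starts at `stored + 1` and the new stored value is `stored + messageCount`. The sketch below isolates that arithmetic together with a local buffer that hands messages out one at a time. `PrefetchingConsumer` and its `Fetcher` interface are hypothetical; bucket rotation and queue locking are omitted.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;

final class PrefetchingConsumer<M> {
    /** Hypothetical store call: up to max messages starting at logical index start. */
    interface Fetcher<T> {
        List<T> fetch(long start, int max);
    }

    private final Deque<M> buffer = new ArrayDeque<>();
    private final Fetcher<M> fetcher;
    private final int batchSize;
    // Index of the last ConsumeQueue entry already fetched; -1 means nothing consumed yet
    private long lastConsumed;

    PrefetchingConsumer(Fetcher<M> fetcher, int batchSize, long storedOffset) {
        this.fetcher = fetcher;
        this.batchSize = batchSize;
        this.lastConsumed = storedOffset;
    }

    /** Returns the next message, fetching a new batch when the buffer is empty; null if none. */
    M poll() {
        if (buffer.isEmpty()) {
            List<M> batch = fetcher.fetch(lastConsumed + 1, batchSize);
            lastConsumed += batch.size(); // commit progress right after the grab, as batchPoll does
            buffer.addAll(batch);
        }
        return buffer.poll();
    }

    long committedOffset() {
        return lastConsumed;
    }
}
```

Committing right after the grab, as the original comment notes, means messages still sitting in the local buffer are lost if the consumer dies; committing after processing would trade that for possible redelivery.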

Consumer scheduling

ConsumerScheduler provides the core consumer-scheduling logic. Its built-in ConsumerOffsetManager holds two core tables:

```java
// In-memory consumption progress: topic -> queueId -> offset
private ConcurrentHashMap<String/* topic */, ConcurrentHashMap<Integer/* queueId */, Long>> offsetTable =
        new ConcurrentHashMap<String, ConcurrentHashMap<Integer, Long>>(512);

// Which consumer occupies each queue under a topic: topic -> queueId -> refId
private ConcurrentHashMap<String/* topic */, ConcurrentHashMap<Integer/* queueId */, String/* refId */>> queueIdOccupiedByConsumerTable =
        new ConcurrentHashMap<String, ConcurrentHashMap<Integer, String>>(512);
```

These record, respectively, the consumption progress of each ConsumeQueue and which consumer occupies it. ConsumerOffsetManager also supports JSON-based persistence, which ConsumerScheduler runs automatically and periodically through a ScheduledExecutorService. During message submission, LocalMessageQueue automatically calls the updateOffset function to initialize the offset of a ConsumeQueue (this is also used during recovery):

```java
public void updateOffset(final String topic, final int queueId, final long offset) {
    this.consumerOffsetManager.commitOffset("Broker Inner", topic, queueId, offset);
}
```
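The periodic JSON persistence of the offset table can be sketched with a `ScheduledExecutorService` and a hand-rolled serializer for the topic → queueId → offset map. This is an illustration under assumptions, not ConsumerOffsetManager's actual code: `OffsetPersister` is hypothetical, the `sink` callback stands in for writing a file, and topic names are assumed not to need JSON escaping.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

final class OffsetPersister {
    private OffsetPersister() {}

    // Serializes topic -> queueId -> offset as JSON; keys are sorted for stable output
    static String toJson(Map<String, ? extends Map<Integer, Long>> table) {
        StringBuilder sb = new StringBuilder("{");
        String sep = "";
        for (Map.Entry<String, Map<Integer, Long>> topic
                : new TreeMap<String, Map<Integer, Long>>(table).entrySet()) {
            sb.append(sep).append('"').append(topic.getKey()).append("\":{");
            String innerSep = "";
            for (Map.Entry<Integer, Long> q : new TreeMap<>(topic.getValue()).entrySet()) {
                sb.append(innerSep).append('"').append(q.getKey()).append("\":").append(q.getValue());
                innerSep = ",";
            }
            sb.append('}');
            sep = ",";
        }
        return sb.append('}').toString();
    }

    // Snapshots the table every periodMillis; the caller must shut the executor down
    static ScheduledExecutorService startPeriodicPersist(Map<String, ? extends Map<Integer, Long>> table,
                                                         long periodMillis, Consumer<String> sink) {
        ScheduledExecutorService executor = Executors.newSingleThreadScheduledExecutor();
        executor.scheduleAtFixedRate(() -> sink.accept(toJson(table)),
                periodMillis, periodMillis, TimeUnit.MILLISECONDS);
        return executor;
    }
}
```

Copying each level into a `TreeMap` gives a stable snapshot order; iterating a `ConcurrentHashMap` while consumers update it is safe, though the snapshot is only weakly consistent.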

When a consumer pulls for the first time, it calls the queryOffsetAndLock function to check whether a ConsumeQueue can be pulled from and to obtain its offset:

```java
/**
 * Description: query the offset of a ConsumeQueue and lock the queue for this consumer
 *
 * @param clientHostAndPort
 * @param topic
 * @param queueId
 * @return
 */
public long queryOffsetAndLock(final String clientHostAndPort, final String topic, final int queueId) {
    String key = topic;
    // First, check whether this Topic-queueId is already occupied
    if (this.queueIdOccupiedByConsumerTable.containsKey(topic)) {
        ...
    }
    // If it is not occupied, claim it now
    ConcurrentHashMap<Integer, String> consumerQueueIdMap = this.queueIdOccupiedByConsumerTable.get(key);
    ...
    // Look up the actual offset
    ConcurrentHashMap<Integer, Long> map = this.offsetTable.get(key);
    if (null != map) {
        Long offset = map.get(queueId);
        if (offset != null) return offset;
    }
    // The default return value is -1
    return -1;
}
```

After the pull finishes, the consumer calls the updateOffset function to record its progress.
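Since the occupancy branch of `queryOffsetAndLock` is elided, here is one way the check-and-claim step could be made atomic, as a hypothetical sketch: `putIfAbsent` claims a queue for a client in a single step, another client then receives `-2` (the value `batchPoll` treats as "occupied"), and a queue with no recorded progress returns `-1`. The class name and the simplified `updateOffset` signature are assumptions, and releasing a claim is not shown.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

final class OccupancySketch {
    static final long OCCUPIED = -2L;  // value batchPoll treats as "queue taken"
    static final long NOT_FOUND = -1L; // no progress recorded yet

    // topic -> queueId -> owning client
    private final ConcurrentMap<String, ConcurrentMap<Integer, String>> owners = new ConcurrentHashMap<>();
    // topic -> queueId -> last consumed offset
    private final ConcurrentMap<String, ConcurrentMap<Integer, Long>> offsets = new ConcurrentHashMap<>();

    long queryOffsetAndLock(String clientId, String topic, int queueId) {
        // putIfAbsent checks and claims in one atomic step
        String owner = owners.computeIfAbsent(topic, k -> new ConcurrentHashMap<>())
                .putIfAbsent(queueId, clientId);
        if (owner != null && !owner.equals(clientId)) {
            return OCCUPIED;
        }
        ConcurrentMap<Integer, Long> byQueue = offsets.get(topic);
        Long offset = (byQueue == null) ? null : byQueue.get(queueId);
        return (offset == null) ? NOT_FOUND : offset;
    }

    void updateOffset(String topic, int queueId, long offset) {
        offsets.computeIfAbsent(topic, k -> new ConcurrentHashMap<>()).put(queueId, offset);
    }
}
```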

Message Reading

After a consumer obtains a valid pull offset through the ConsumerScheduler, it reads the actual messages from LocalMessageQueue:

```java
/**
 * Description: consumer interface for reading data from storage
 *
 * @param topic
 * @param queueId
 * @param offset     index at which the next fetch starts
 * @param maxMsgNums
 * @return
 */
public GetMessageResult getMessage(final String topic, final int queueId, final long offset, final int maxMsgNums) {
    ...
    // Find the consumer queue for this topic and queueId
    ConsumeQueue consumeQueue = findConsumeQueue(topic, queueId);
    // Make sure the ConsumeQueue exists
    if (consumeQueue != null) {
        // Smallest logical offset currently held in this ConsumeQueue
        minOffset = consumeQueue.getMinOffsetInQueue();
        // The largest offset is exclusive: it is the index the next message will get
        maxOffset = consumeQueue.getMaxOffsetInQueue();
        // If maxOffset is zero, no message is available
        if (maxOffset == 0) {
            status = GetMessageStatus.NO_MESSAGE_IN_QUEUE;
            nextBeginOffset = 0;
        } else if (offset < minOffset) {
            status = GetMessageStatus.OFFSET_TOO_SMALL;
            nextBeginOffset = minOffset;
        } else if (offset == maxOffset) {
            status = GetMessageStatus.OFFSET_OVERFLOW_ONE;
            nextBeginOffset = offset;
        } else if (offset > maxOffset) {
            status = GetMessageStatus.OFFSET_OVERFLOW_BADLY;
            if (0 == minOffset) {
                nextBeginOffset = minOffset;
            } else {
                nextBeginOffset = maxOffset;
            }
        } else {
            // Get the ConsumeQueue index buffer starting at offset
            SelectMappedBufferResult bufferConsumeQueue = consumeQueue.getIndexBuffer(offset);
            if (bufferConsumeQueue != null) {
                try {
                    status = GetMessageStatus.NO_MATCHED_MESSAGE;
                    long nextPhyFileStartOffset = Long.MIN_VALUE;
                    long maxPhyOffsetPulling = 0;
                    int i = 0;
                    // Upper bound, in bytes, on how much of the index is scanned in one call
                    final int maxFilterMessageCount = Math.max(16000, maxMsgNums * ConsumeQueue.CQ_STORE_UNIT_SIZE);
                    // Iterate over the message pointers in the ConsumeQueue
                    for (; i < bufferConsumeQueue.getSize() && i < maxFilterMessageCount; i += ConsumeQueue.CQ_STORE_UNIT_SIZE) {
                        long offsetPy = bufferConsumeQueue.getByteBuffer().getLong();
                        int sizePy = bufferConsumeQueue.getByteBuffer().getInt();
                        maxPhyOffsetPulling = offsetPy;
                        if (nextPhyFileStartOffset != Long.MIN_VALUE) {
                            if (offsetPy < nextPhyFileStartOffset) continue;
                        }
                        boolean isInDisk = checkInDiskByCommitOffset(offsetPy, maxOffsetPy);
                        if (isTheBatchFull(sizePy, maxMsgNums, getResult.getBufferTotalSize(), getResult.getMessageCount(), isInDisk)) {
                            break;
                        }
                        // Fetch the message from the MessageStore
                        SelectMappedBufferResult selectResult = this.messageStore.getMessage(offsetPy, sizePy);
                        // If nothing is found, roll over to the next file and continue
                        if (null == selectResult) {
                            if (getResult.getBufferTotalSize() == 0) {
                                status = GetMessageStatus.MESSAGE_WAS_REMOVING;
                            }
                            nextPhyFileStartOffset = this.messageStore.rollNextFile(offsetPy);
                            continue;
                        }
                        // Otherwise, add it to the result
                        getResult.addMessage(selectResult);
                        status = GetMessageStatus.FOUND;
                        nextPhyFileStartOffset = Long.MIN_VALUE;
                    }
                    nextBeginOffset = offset + (i / ConsumeQueue.CQ_STORE_UNIT_SIZE);
                    long diff = maxOffsetPy - maxPhyOffsetPulling;
                    // Memory budget derived from accessMessageInMemoryMaxRatio
                    long memory = (long) (getTotalPhysicalMemorySize() * (LocalMessageQueueConfig.accessMessageInMemoryMaxRatio / 100.0));
                    getResult.setSuggestPullingFromSlave(diff > memory);
                } finally {
                    bufferConsumeQueue.release();
                }
            } else {
                status = GetMessageStatus.OFFSET_FOUND_NULL;
                nextBeginOffset = consumeQueue.rollNextFile(offset);
                log.warning("consumer request topic: " + topic + ", offset: " + offset + ", minOffset: " + minOffset
                        + ", maxOffset: " + maxOffset + ", but access logic queue failed.");
            }
        }
    } else {
        ...
    }
    ...
}
```
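The offset checks at the top of `getMessage` form a small pure function of `offset`, `minOffset`, and the exclusive `maxOffset`. The sketch below restates only that classification so it can be tested in isolation. The `Status` enum is a hypothetical subset of `GetMessageStatus`, with `READABLE` standing for the branch that goes on to scan index entries, and `nextBegin` mirrors `offset + (i / ConsumeQueue.CQ_STORE_UNIT_SIZE)`, where `i` counts index bytes scanned.

```java
final class OffsetCheck {
    enum Status { NO_MESSAGE_IN_QUEUE, OFFSET_TOO_SMALL, OFFSET_OVERFLOW_ONE, OFFSET_OVERFLOW_BADLY, READABLE }

    static final class Result {
        final Status status;
        final long nextBeginOffset;

        Result(Status status, long nextBeginOffset) {
            this.status = status;
            this.nextBeginOffset = nextBeginOffset;
        }
    }

    // maxOffset is exclusive: the index the next written entry will get
    static Result classify(long offset, long minOffset, long maxOffset) {
        if (maxOffset == 0) return new Result(Status.NO_MESSAGE_IN_QUEUE, 0);
        if (offset < minOffset) return new Result(Status.OFFSET_TOO_SMALL, minOffset);
        if (offset == maxOffset) return new Result(Status.OFFSET_OVERFLOW_ONE, offset);
        if (offset > maxOffset) {
            return new Result(Status.OFFSET_OVERFLOW_BADLY, minOffset == 0 ? minOffset : maxOffset);
        }
        // minOffset <= offset < maxOffset: the caller scans entries; nextBeginOffset is provisional
        return new Result(Status.READABLE, offset);
    }

    // Next logical offset after scanning indexBytesScanned bytes of fixed-size entries
    static long nextBegin(long offset, int indexBytesScanned, int unitSize) {
        return offset + (indexBytesScanned / unitSize);
    }
}
```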

Note that getMessage returns only the raw storage of each message in the MessageStore (mapped buffer slices), not deserialized messages. To read the actual messages, use the readMessagesFromGetMessageResult function:

```java
/**
 * Description: read all messages out of a GetMessageResult
 *
 * @param getMessageResult
 * @return
 */
public static ArrayList<DefaultBytesMessage> readMessagesFromGetMessageResult(final GetMessageResult getMessageResult) {
    ArrayList<DefaultBytesMessage> messages = new ArrayList<>();
    try {
        // Deserialize each raw message buffer
        List<ByteBuffer> messageBufferList = getMessageResult.getMessageBufferList();
        for (ByteBuffer bb : messageBufferList) {
            messages.add(readMessageFromByteBuffer(bb));
        }
    } finally {
        // Always release the mapped buffers
        getMessageResult.release();
    }
    return messages;
}

/**
 * Description: deserialize a message object from the given ByteBuffer
 *
 * @return the deserialized message, or null for a blank (end-of-file) record or an illegal magic code
 */
public static DefaultBytesMessage readMessageFromByteBuffer(java.nio.ByteBuffer byteBuffer) {
    // 1 TOTAL SIZE
    int totalSize = byteBuffer.getInt();
    // 2 MAGIC CODE
    int magicCode = byteBuffer.getInt();
    switch (magicCode) {
        case MESSAGE_MAGIC_CODE:
            break;
        case BLANK_MAGIC_CODE:
            return null;
        default:
            log.warning("found an illegal magic code 0x" + Integer.toHexString(magicCode));
            return null;
    }
    byte[] bytesContent = new byte[totalSize];
    ...
}
```
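The magic-code dispatch in `readMessageFromByteBuffer` can be exercised end to end with a toy encoder. A minimal sketch, assuming a hypothetical layout of `[int totalSize][int magicCode][UTF-8 body]` in which `totalSize` covers the whole record; the magic values and the `MessageCodec` class are made up for illustration, and the real message format carries more fields than a string body.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

final class MessageCodec {
    // Hypothetical magic values chosen for this sketch only
    static final int MESSAGE_MAGIC_CODE = 0x4D534731; // "MSG1"
    static final int BLANK_MAGIC_CODE = 0x424C4E4B;   // "BLNK"
    static final int HEADER_SIZE = 8;                 // totalSize + magicCode

    static ByteBuffer encode(String body) {
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        ByteBuffer bb = ByteBuffer.allocate(HEADER_SIZE + bytes.length);
        bb.putInt(HEADER_SIZE + bytes.length).putInt(MESSAGE_MAGIC_CODE).put(bytes);
        bb.flip(); // switch to read mode
        return bb;
    }

    // Blank padding record of the given size (size >= HEADER_SIZE)
    static ByteBuffer blank(int size) {
        ByteBuffer bb = ByteBuffer.allocate(size);
        bb.putInt(size).putInt(BLANK_MAGIC_CODE);
        bb.rewind(); // back to position 0; limit stays at capacity
        return bb;
    }

    // Returns the body, or null for blank padding, an unknown magic code, or a bad size
    static String decode(ByteBuffer bb) {
        int totalSize = bb.getInt();
        int magicCode = bb.getInt();
        switch (magicCode) {
            case MESSAGE_MAGIC_CODE:
                break;
            case BLANK_MAGIC_CODE:
                return null; // end-of-file padding
            default:
                return null; // corrupt or unknown record
        }
        if (totalSize < HEADER_SIZE || totalSize - HEADER_SIZE > bb.remaining()) {
            return null;
        }
        byte[] body = new byte[totalSize - HEADER_SIZE];
        bb.get(body);
        return new String(body, StandardCharsets.UTF_8);
    }
}
```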

Epilogue

Around the Dragon Boat Festival I stopped coding, thinking I could finish writing this document within the week. Unfortunately, the graduation trip and graduation party pushed it back to July, and it was finally finished in a hurry. I have to admit this was also a case of my own advanced-stage procrastination.