In practice, we sometimes need to import and search RSS data. Many real-world microservices publish their data as RSS feeds, popular review websites being one example. So is there a way to import this data into Elasticsearch and search it? The answer is the Logstash RSS input plugin. In this article, I walk through an example.

First, we need an RSS feed. Elastic's official blog at elastic.co/blog provides one. Open that page and click the RSS link at the top:

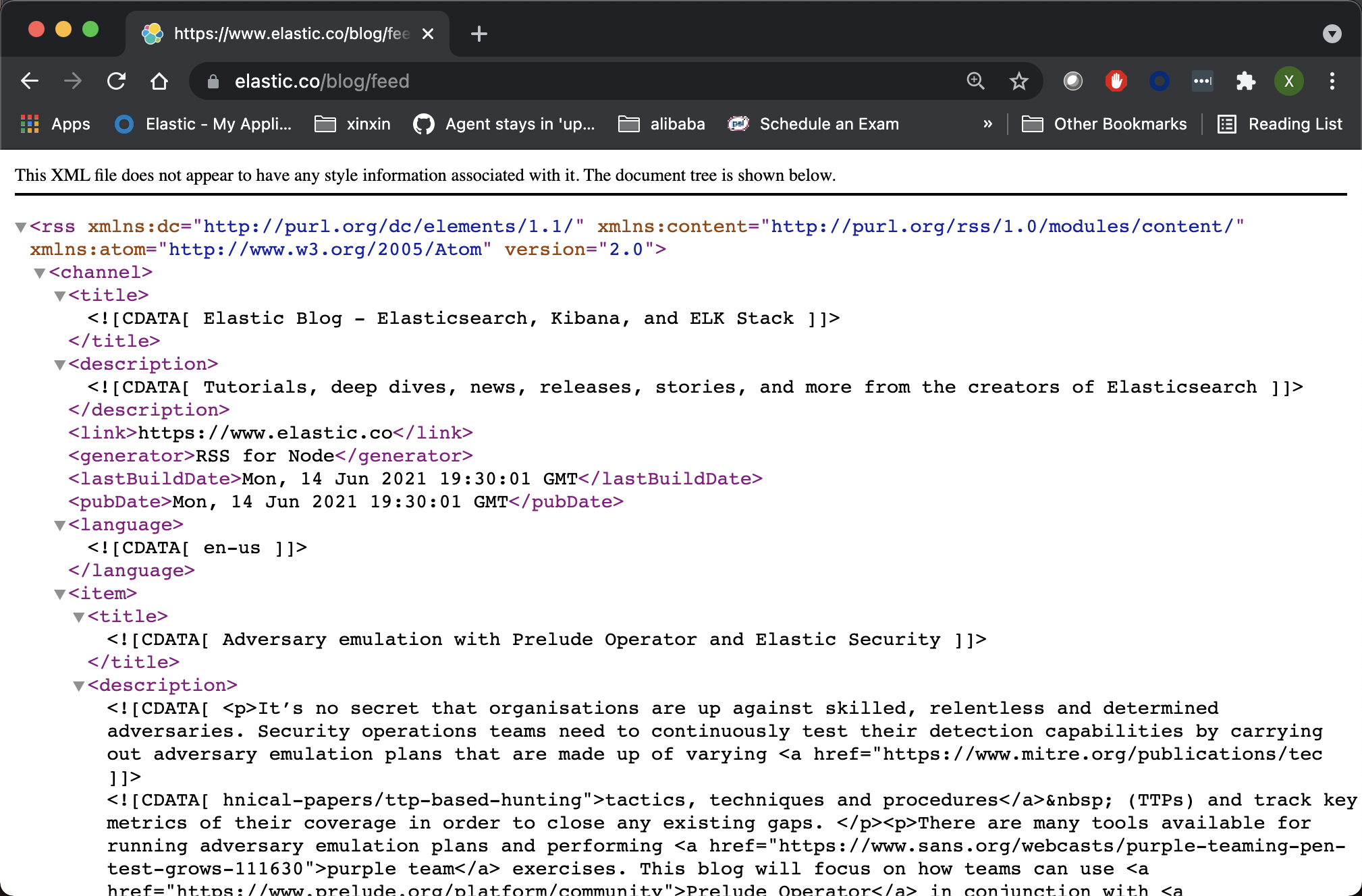

Above, we can see the RSS response. It contains the title, description, and other fields for each entry. We can use the RSS input plugin to extract them.
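To make the shape of such a response concrete, here is a minimal Python sketch that parses a small RSS 2.0 document with the standard library. The sample XML, its titles and links, and the function name `parse_rss_items` are made up for illustration; the real Elastic feed carries more fields and may be structured differently.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample in RSS 2.0 shape; not copied from the real Elastic feed.
SAMPLE_RSS = """<rss version="2.0">
  <channel>
    <title>Example blog</title>
    <item>
      <title>First post</title>
      <link>https://example.com/blog/first-post</link>
      <description>&lt;p&gt;Hello &lt;b&gt;world&lt;/b&gt;&lt;/p&gt;</description>
      <pubDate>Mon, 01 Mar 2021 10:00:00 GMT</pubDate>
    </item>
    <item>
      <title>Second post</title>
      <link>https://example.com/blog/second-post</link>
      <description>Plain text summary</description>
      <pubDate>Tue, 02 Mar 2021 10:00:00 GMT</pubDate>
    </item>
  </channel>
</rss>
"""


def parse_rss_items(xml_text):
    """Return one dict per <item> with the fields discussed in the article."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        items.append({
            "title": item.findtext("title", default=""),
            "link": item.findtext("link", default=""),
            # The XML parser decodes entities, so this holds raw HTML.
            "description": item.findtext("description", default=""),
            "published": item.findtext("pubDate", default=""),
        })
    return items


for entry in parse_rss_items(SAMPLE_RSS):
    print(entry["title"], entry["link"])
```

Note that the description arrives as HTML, which is why the pipeline later in this article strips tags before indexing a plain-text copy.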

Install

Since the RSS input plugin is not bundled with Logstash by default, we must install it manually. To see which input plugins are currently installed, run the following command:

./bin/logstash-plugin list --group input

The above command shows:

logstash-input-azure_event_hubs
logstash-input-beats
└── logstash-input-elastic_agent (alias)
logstash-input-couchdb_changes
logstash-input-elasticsearch
logstash-input-exec
logstash-input-file
logstash-input-ganglia
logstash-input-gelf
logstash-input-generator
logstash-input-graphite
logstash-input-heartbeat
logstash-input-http
logstash-input-http_poller
logstash-input-imap
logstash-input-jms
logstash-input-pipe
logstash-input-redis
logstash-input-rss
logstash-input-s3
logstash-input-snmp
logstash-input-snmptrap
logstash-input-sqs
logstash-input-stdin
logstash-input-syslog
logstash-input-tcp
logstash-input-twitter
logstash-input-udp
logstash-input-unix

If logstash-input-rss does not appear in your list, as is the case on a default installation, install it with the following command:

./bin/logstash-plugin install logstash-input-rss

$ ./bin/logstash-plugin install logstash-input-rss
Using JAVA_HOME defined java: /Library/Java/JavaVirtualMachines/jdk-15.0.2.jdk/Contents/Home
WARNING, using JAVA_HOME while Logstash distribution comes with a bundled JDK
Validating logstash-input-rss
Installing logstash-input-rss
Installation successful

Once the installation succeeds, run the list command above again, and logstash-input-rss will appear in the output.

Import RSS feed data

Next, we use the RSS input plugin installed above to import the data from https://www.elastic.co/blog/feed. First, create the following configuration file in the root directory of the Logstash installation:

logstash.conf

input {
  rss {
    url => "https://www.elastic.co/blog/feed"
    interval => 120
    tags => ["rss", "elastic"]
  }
}

filter {
  mutate {
    rename => [ "message", "blog_html" ]
    copy => { "blog_html" => "blog_text" }
    copy => { "published" => "@timestamp" }
  }
  mutate {
    gsub => [
      "blog_text", "<.*?>", "",
      "blog_text", "[\n\t]", " "
    ]
    remove_field => [ "published", "author" ]
  }
}

output {
  stdout {
    codec => rubydebug
  }
  elasticsearch {
    hosts => [ "localhost:9200" ]
    index => "elastic_blog"
  }
}

In the input section above, we define an rss input whose url is https://www.elastic.co/blog/feed. The interval is 120 seconds, so the feed is polled every two minutes. We also add tags to make the data easier to search later. In the filter section, the first mutate block renames message to blog_html, copies blog_html to blog_text, and copies published to @timestamp. The second mutate block uses gsub to replace everything enclosed in < > with "", which removes the HTML tags, and to replace newline and tab characters (\n and \t) with a space. Finally, we remove the published and author fields, although removing the author field is not strictly necessary.
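To see what the two gsub rules do to a piece of HTML, the following Python sketch applies the same two patterns with the `re` module. It only mirrors the patterns from the configuration; Logstash evaluates them with Ruby's regular expression engine, so treat this as an approximation. The function name `strip_html` and the sample string are hypothetical.

```python
import re


def strip_html(blog_html):
    """Apply the two gsub patterns from the pipeline, in the same order."""
    # Rule 1: drop everything from "<" to the nearest ">" (non-greedy match).
    text = re.sub(r"<.*?>", "", blog_html)
    # Rule 2: replace each newline or tab character with a single space.
    text = re.sub(r"[\n\t]", " ", text)
    return text


sample = "<p>Hello\n<b>world</b></p>"
print(strip_html(sample))
```

Because `.` does not match a newline by default, a tag that is split across two lines would survive the first rule. That is a limitation of this simple pattern, not of the plugin.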

Run the following command in the Logstash installation directory:

./bin/logstash -f logstash.conf

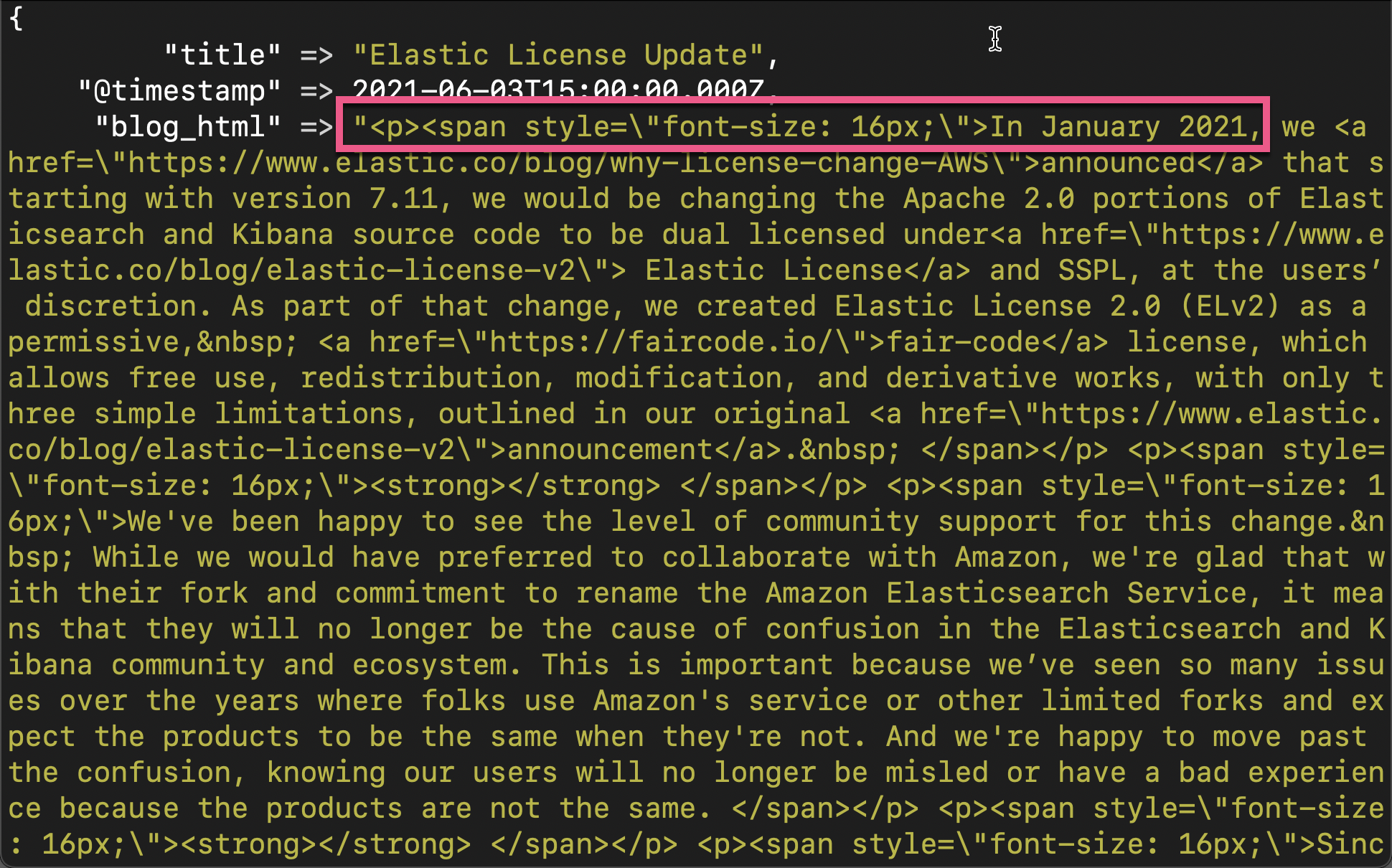

We can see in the console:

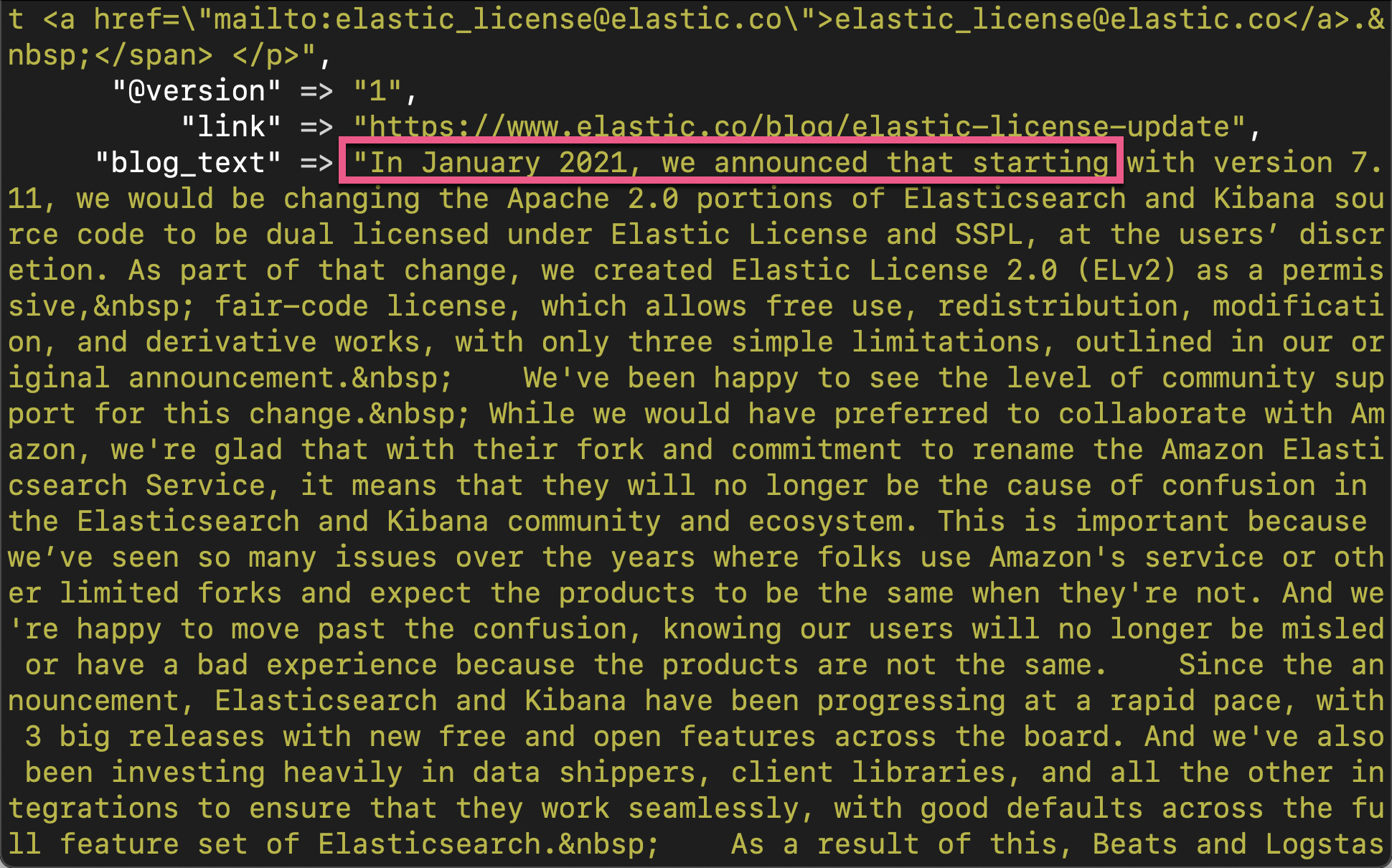

We can see that the < > tags have been removed from blog_text.

We can find the elastic_blog index in Elasticsearch:

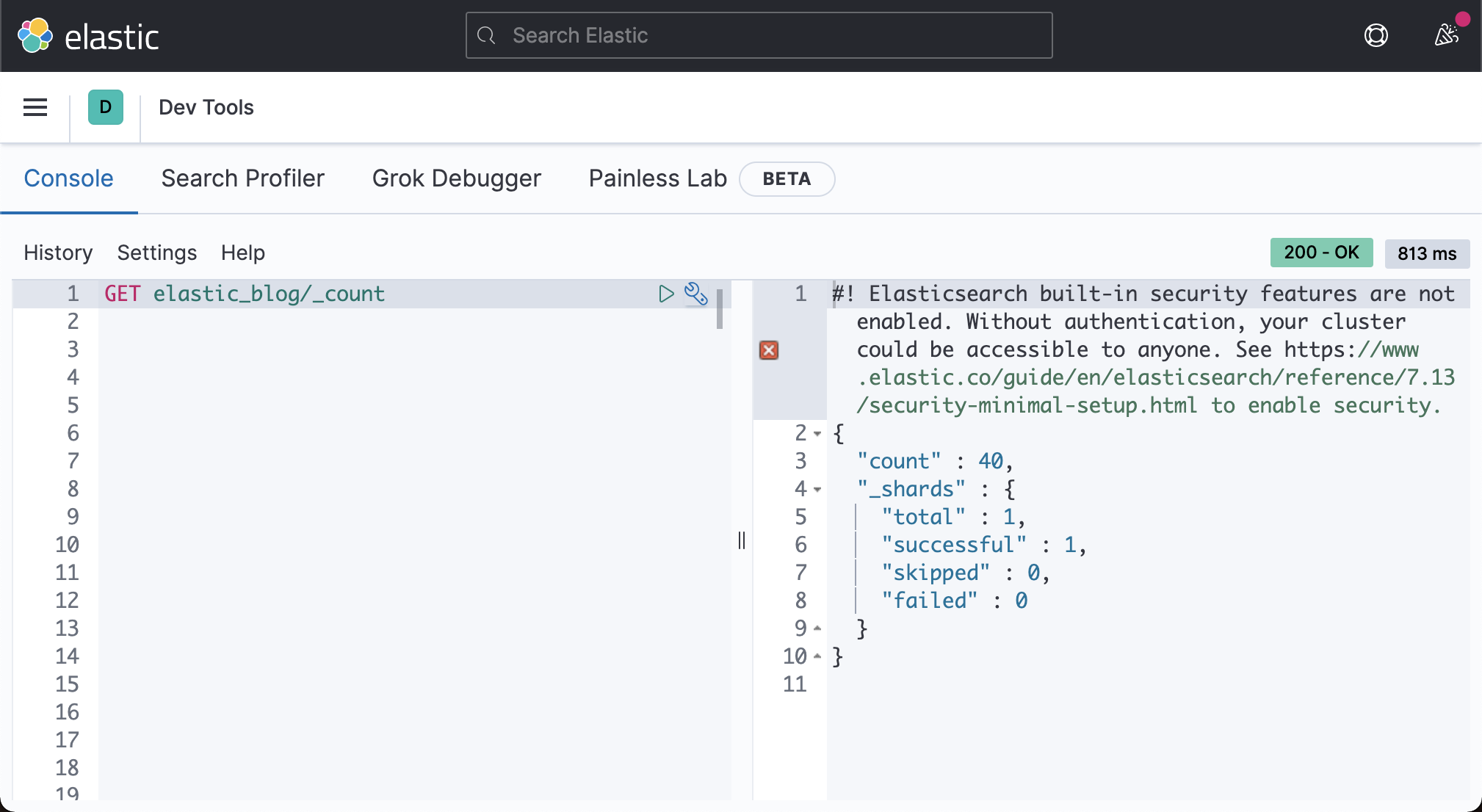

GET elastic_blog/_count

{

"count" : 20,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

}

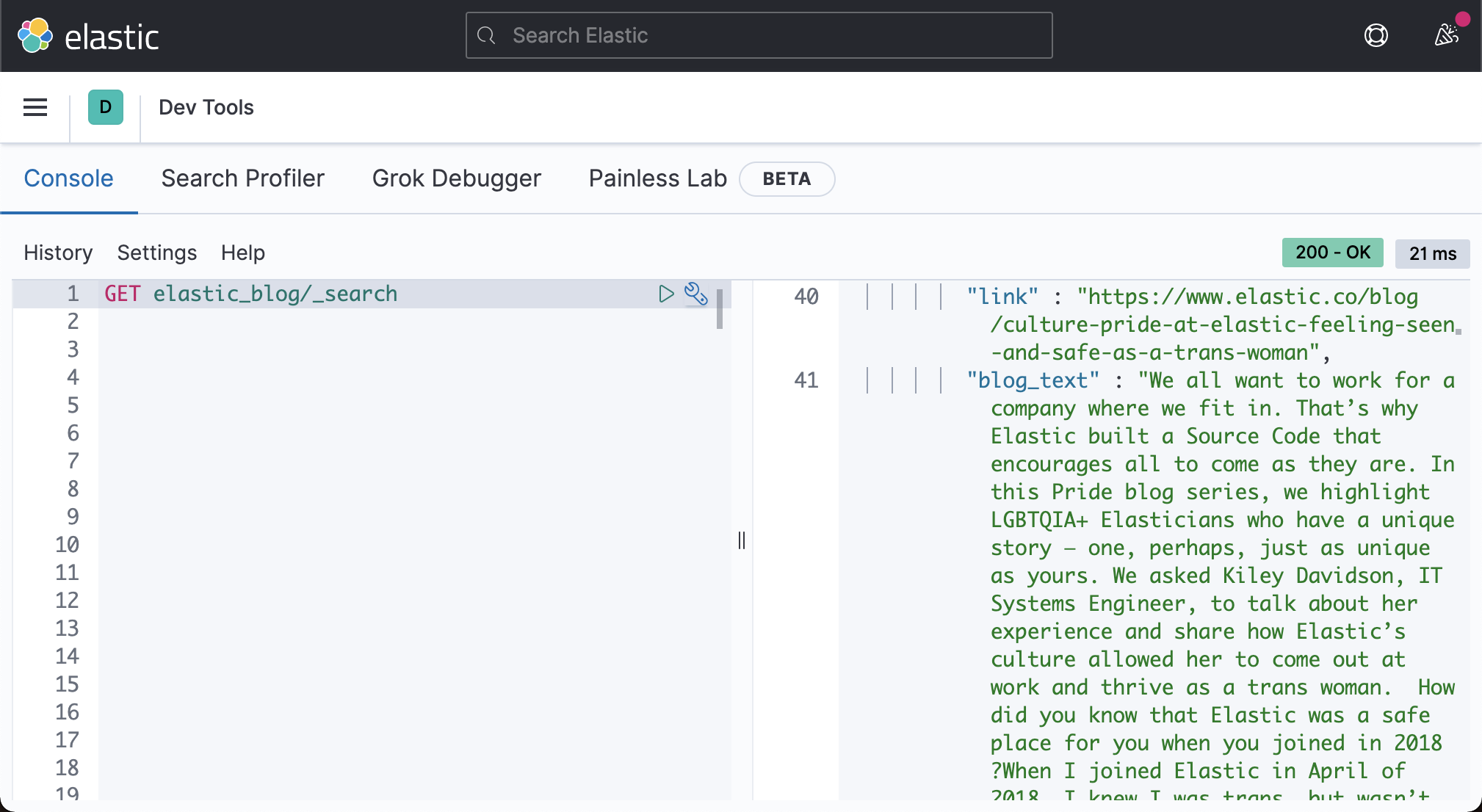

}We can even search it:

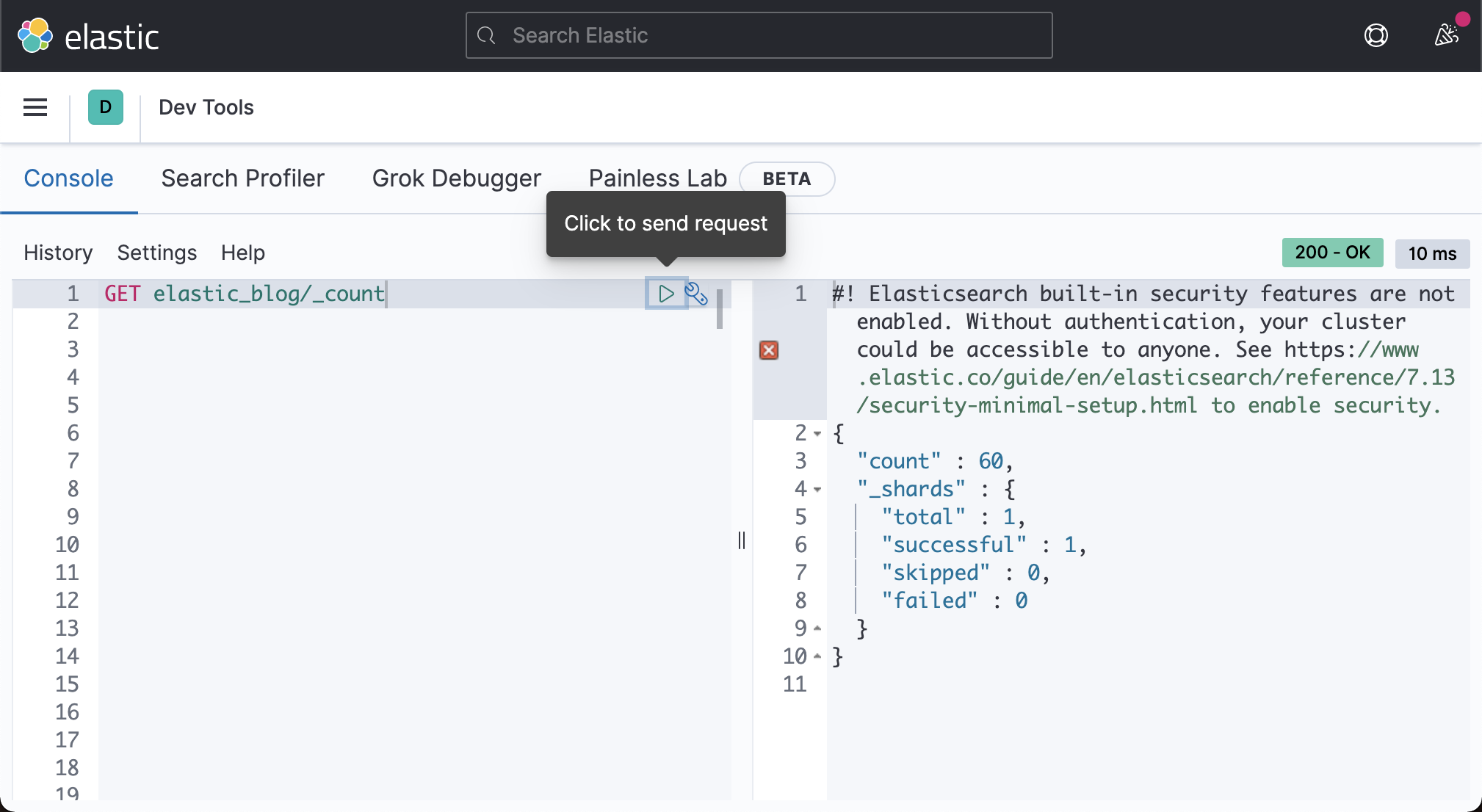
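For readers who prefer to query from code, here is a hedged Python sketch that builds a `match` query against the blog_text field using only the standard library. The search term, the function names, and the assumption that Elasticsearch answers on http://localhost:9200 without authentication are mine; the query in the screenshot above may differ.

```python
import json
import urllib.request


def build_search_request(term, host="http://localhost:9200", index="elastic_blog"):
    """Build a POST request for a full-text match query on blog_text."""
    body = {"query": {"match": {"blog_text": term}}}
    return urllib.request.Request(
        url=f"{host}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def search(term):
    """Send the request and return the list of hits (needs a running cluster)."""
    with urllib.request.urlopen(build_search_request(term)) as response:
        return json.loads(response.read())["hits"]["hits"]


# Building the request does not touch the network, so this runs anywhere.
request = build_search_request("kibana")
print(request.full_url)
print(request.data.decode("utf-8"))
```

Calling `search("kibana")` only works while the cluster from this walkthrough is running; a secured cluster would also need credentials and HTTPS.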

After another two minutes, we check the imported data again.

This time, the count is 40, twice the initial 20. This is because Logstash re-reads the feed every two minutes, and since the pipeline sets no document ID, Elasticsearch indexes every entry again as a new document. If we wait another two minutes, the count becomes 60.
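The arithmetic behind the growing count can be simulated in a few lines of Python. This is an in-memory illustration and not how Logstash or Elasticsearch work internally: dictionaries stand in for an index, `poll_feed` is a hypothetical stand-in for one read of a 20-entry feed, and the example.com links are invented. It contrasts a fresh random ID per document with an ID derived from each entry's link.

```python
import hashlib
import uuid


def poll_feed():
    """Stand-in for one poll: the same 20 entries every time (made-up links)."""
    return [{"link": f"https://example.com/blog/post-{n}"} for n in range(20)]


def index_with_auto_ids(index, entries):
    # A fresh random ID per document, so repeated entries pile up.
    for entry in entries:
        index[uuid.uuid4().hex] = entry


def index_with_link_ids(index, entries):
    # A deterministic ID from the link, so a repeated entry overwrites itself.
    for entry in entries:
        doc_id = hashlib.sha256(entry["link"].encode("utf-8")).hexdigest()
        index[doc_id] = entry


auto_index, stable_index = {}, {}
for _ in range(3):
    entries = poll_feed()
    index_with_auto_ids(auto_index, entries)
    index_with_link_ids(stable_index, entries)
    print(len(auto_index), len(stable_index))
```

With random IDs the simulated count grows by 20 on each poll, matching the 20, 40, 60 sequence observed above, while the link-derived IDs keep it at 20. In a real pipeline, a comparable effect is commonly achieved by setting the elasticsearch output's document_id option to a value derived from the entry, which is not covered in this article.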

That wraps up today's exercise. We described how to use the Logstash RSS input plugin to import RSS feed data into Elasticsearch. I hope it helps in your work.