1, Clusters and distributed systems

1. Cluster

1.1 What a cluster is

Cluster: a system composed of multiple hosts that is presented to users as a single whole.

Problem:

In Internet applications, sites place ever higher demands on hardware performance, response speed, service stability and data reliability, and a single server can no longer meet them.

Solutions:

Scale Up: vertical scaling. Replace the server with a more powerful computer that runs the same services.

Scale Out: horizontal scaling. Add more machines and run multiple instances of the service in parallel. This introduces the problem of scheduling and distributing requests among the machines, which is what a cluster addresses.

1.2 Three types of cluster

LB (Load Balancing): the cluster consists of multiple hosts, and each host handles only part of the incoming requests.

Goal: improve the responsiveness of the application system. It should handle as many requests as possible with as little latency as possible, giving high overall concurrency and load capacity.

How the load is distributed depends on the scheduling (shunting) algorithm of the master node, i.e. the load scheduler.

HA (High Availability): avoids a SPOF (Single Point Of Failure).

Goal: improve the reliability of the application system and minimize interruptions. This keeps the service continuous and provides high-availability fault tolerance.

HA works in either duplex (active-active) or master-slave (active-standby) mode.

HPC (High Performance Computing)

Goal: increase the CPU computing speed of the application system and expand its hardware resources and analysis capability. The result is computing performance comparable to large-scale machines and supercomputers.

High performance relies on "distributed computing" and "parallel computing". Dedicated hardware and software pool the CPUs, memory and other resources of many servers, delivering computing power that otherwise only large machines and supercomputers have.

1.3 SLA

SLA (Service Level Agreement): an agreement, recognized by both sides, between a service provider and its users. It guarantees the performance and availability of a service at a certain cost, and this cost is usually the main factor driving the quality of the service provided. Availability targets are conventionally stated as "three nines" (99.9%) or "four nines" (99.99%). If the agreed level is not reached, a series of penalties applies, so the main goal of operations and maintenance is to meet this service level.
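The bash sketch below makes "three nines" and "four nines" concrete by converting a number of nines into the downtime an SLA allows per year. It assumes a 365-day year and uses integer arithmetic, so fractions of a second are dropped. The function name downtime_budget is only illustrative.

```bash
#!/usr/bin/env bash
# Allowed downtime per year for an availability target written as "nines"
# (3 -> 99.9%, 4 -> 99.99%). Assumes a 365-day year; bash arithmetic is
# integer-only, so fractional seconds are truncated.
downtime_budget() {
    local nines=$1
    local year=$((365 * 24 * 3600))   # 31536000 seconds in a 365-day year
    echo $((year / 10 ** nines))      # unavailable fraction is 1 / 10^nines
}

for n in 3 4; do
    s=$(downtime_budget "$n")
    echo "$n nines: $s seconds/year (about $((s / 60)) minutes)"
done
# 3 nines: 31536 seconds/year (about 525 minutes)
# 4 nines: 3153 seconds/year (about 52 minutes)
```

So three nines allow roughly 8.8 hours of downtime a year, and four nines less than an hour.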

1.4 Scheduling (shunting) algorithms

Round Robin (rr): distributes incoming requests to the cluster nodes in turn. Every server is treated equally, regardless of its actual number of connections or system load.
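Here is a minimal bash sketch of round robin that uses this article's two web server RIPs as the pool. The function and variable names are hypothetical. It only shows the selection order, not how the LVS kernel scheduler is implemented. The pick is returned in a global variable rather than printed, because calling the function inside $( ) would run it in a subshell and lose the updated position.

```bash
#!/usr/bin/env bash
# Round robin: hand out servers strictly in turn, ignoring their load.
servers=(192.168.47.102 192.168.47.103)   # example RIPs from this article
rr_index=0                                # position of the next server

rr_next() {
    RR_PICK=${servers[rr_index]}
    rr_index=$(( (rr_index + 1) % ${#servers[@]} ))
}

for req in 1 2 3 4; do
    rr_next
    echo "request $req -> $RR_PICK"
done
# request 1 -> 192.168.47.102
# request 2 -> 192.168.47.103
# request 3 -> 192.168.47.102
# request 4 -> 192.168.47.103
```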

Weighted Round Robin (wrr): distributes requests according to the weight assigned to each node on the scheduler. Nodes with higher weights are given tasks first and receive more requests, so higher-performance nodes carry more of the load.
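One common way to implement weighted round robin is the "smooth" variant used by nginx's upstream module, sketched below in bash. LVS's own wrr scheduler may order the picks differently. What both approaches share is that, over a full cycle, each node gets requests in proportion to its weight. The 3:1 weights are made-up values for illustration.

```bash
#!/usr/bin/env bash
# Smooth weighted round robin. Each round, every server's running score
# grows by its weight; the highest score wins and is then reduced by the
# total weight, which spreads a heavy server's picks out over the cycle.
servers=(192.168.47.102 192.168.47.103)
weights=(3 1)      # hypothetical: .102 assumed 3x as capable as .103
current=(0 0)      # running score per server

wrr_next() {
    local i best=-1 total=0
    for i in "${!servers[@]}"; do
        current[i]=$(( current[i] + weights[i] ))
        total=$(( total + weights[i] ))
        if (( best < 0 )); then
            best=$i
        elif (( current[i] > current[best] )); then
            best=$i
        fi
    done
    current[best]=$(( current[best] - total ))
    WRR_PICK=${servers[best]}
}

for req in 1 2 3 4; do
    wrr_next
    echo "request $req -> $WRR_PICK"
done
# request 1 -> 192.168.47.102
# request 2 -> 192.168.47.102
# request 3 -> 192.168.47.103
# request 4 -> 192.168.47.102
```

After one full cycle (total weight 4) every score is back to 0, so the same 3:1 pattern repeats.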

Least Connections (lc): assigns each new request to the real server with the fewest established connections. When all server nodes have similar performance, this balances the load well.
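In bash, the least-connections choice reduces to a minimum search over per-server connection counts. The counts below are sample numbers, since a real scheduler reads them from its own connection table. The function name lc_pick is hypothetical. On a tie, the first server in the list wins.

```bash
#!/usr/bin/env bash
# Least connections: choose the server with the fewest active connections.
# The counts are sample numbers; a real scheduler reads them from its
# connection table.
servers=(192.168.47.102 192.168.47.103)
active=(12 7)

lc_pick() {
    local i best=0
    for i in "${!servers[@]}"; do
        if (( active[i] < active[best] )); then
            best=$i
        fi
    done
    echo "${servers[best]}"
}

lc_pick   # prints 192.168.47.103 (7 < 12)
```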

Weighted Least Connections (wlc): used when server performance differs greatly. Each node is given a weight, and the scheduler sends new connections to the node with the lowest ratio of active connections to weight. As a result, nodes with higher weights carry a proportionally larger share of the active connections.
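The sketch below shows the weighted variant in bash. It is simplified: only active connections are counted, whereas LVS's own wlc scheduler also factors in inactive connections. The weights, the counts and the name wlc_pick are hypothetical. A weight of 0 is treated as "node paused", matching ipvsadm's -w 0 semantics.

```bash
#!/usr/bin/env bash
# Weighted least connections (simplified: active connections only).
# Pick the server with the smallest active/weight ratio. Ratios are
# compared by cross-multiplying, since bash arithmetic has no fractions.
servers=(192.168.47.102 192.168.47.103)
weights=(3 1)     # hypothetical weights
active=(12 5)     # sample active-connection counts

wlc_pick() {
    local i best=-1
    for i in "${!servers[@]}"; do
        (( weights[i] > 0 )) || continue        # weight 0 = node paused
        if (( best < 0 )); then
            best=$i
        elif (( active[i] * weights[best] < active[best] * weights[i] )); then
            best=$i
        fi
    done
    (( best >= 0 )) || return 1                 # every node is paused
    echo "${servers[best]}"
}

wlc_pick   # prints 192.168.47.102: 12/3 = 4 beats 5/1 = 5
```

Plain least connections would have chosen .103 here (5 < 12). The weight is what shifts the choice to the more powerful node.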

IP_Hash: computes a hash of the request's source IP address to choose the backend server. Requests from the same IP are therefore always handled by the same server, so the client's request context can be stored on that server.

url_hash: distributes requests by a hash of the requested URL, so each URL is always directed to the same backend server. This is most effective when the backend servers are caches. (I have not studied this one in detail.)
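IP_Hash and url_hash both come down to hashing a key and taking the result modulo the number of backends. The bash sketch below uses cksum (the POSIX CRC checksum) as a stand-in hash function. nginx's ip_hash and URL hashing use their own hash functions, so the server chosen for a given key will differ from nginx's. The property that matters is the same: one key always lands on one server. The function name hash_pick is hypothetical.

```bash
#!/usr/bin/env bash
# Hash-based selection: same key -> same backend while the pool is
# unchanged. The key is the client IP (IP_Hash) or the URL (url_hash).
servers=(192.168.47.102 192.168.47.103)

hash_pick() {
    local key=$1 h n=${#servers[@]}
    # cksum prints "<crc> <byte count>"; keep only the CRC.
    h=$(printf '%s' "$key" | cksum | cut -d ' ' -f 1)
    echo "${servers[h % n]}"
}

hash_pick 12.0.0.100     # client IP as the key
hash_pick /index.html    # request URL as the key
```

With plain modulo, adding or removing a backend remaps most keys to different servers. Consistent hashing is the usual remedy when that matters, for example with cache servers.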

fair: instead of the rotation used by the built-in load balancing, balances load intelligently based on page size and load time. Requests are allocated according to the backend servers' response times, and servers with shorter response times are chosen first.

2. Distributed

Distributed storage: Ceph, GlusterFS, FastDFS, MogileFS

Distributed computing: Hadoop, Spark

Common distributed applications:

Distributed application services: the application is split by function into microservices (a single application divided into a set of small services that coordinate and cooperate to deliver the final service to users)

Distributed static resources: static resources are placed on different storage clusters

Distributed data and storage: a key-value caching system is used

Distributed computing: distributed computing is used for special services, such as Hadoop clusters

Cluster: the same business system is deployed on multiple servers. In a cluster, every server implements the same functions, with the same data and code.

Distributed: a business is split into multiple sub-services, or consists of different services, deployed on multiple servers. In a distributed system, each server implements different functions with different data and code, and together the servers make up the complete business.

2, Introduction to Linux Virtual Server

1. Introduction to LVS

LVS (Linux Virtual Server): a load scheduler integrated into the Linux kernel.

2. Working principle of LVS

The VS forwards each request to an RS according to the request's destination IP, protocol and port, choosing the RS with its scheduling algorithm. LVS is a kernel-level function: it works in the INPUT chain and "processes" the traffic sent to INPUT.

3. LVS cluster terminology

VS: Virtual Server, also called Director Server (DS), Dispatcher (scheduler) or Load Balancer (the LVS server)

RS: Real Server (LVS), also called upstream server (nginx) or backend server (HAProxy)

CIP: Client IP

VIP: Virtual Server IP, the VS's external (Internet-facing) IP

DIP: Director IP, the VS's internal (intranet) IP

RIP: Real Server IP

Access path: CIP <-> VIP == DIP <-> RIP

3, LVS working modes and related commands

1. Working modes of an LVS cluster

LVS-NAT: rewrites the destination IP of request messages (multi-target DNAT)

LVS-DR: re-encapsulates the request with a new MAC address (direct routing)

LVS-TUN: tunnel mode

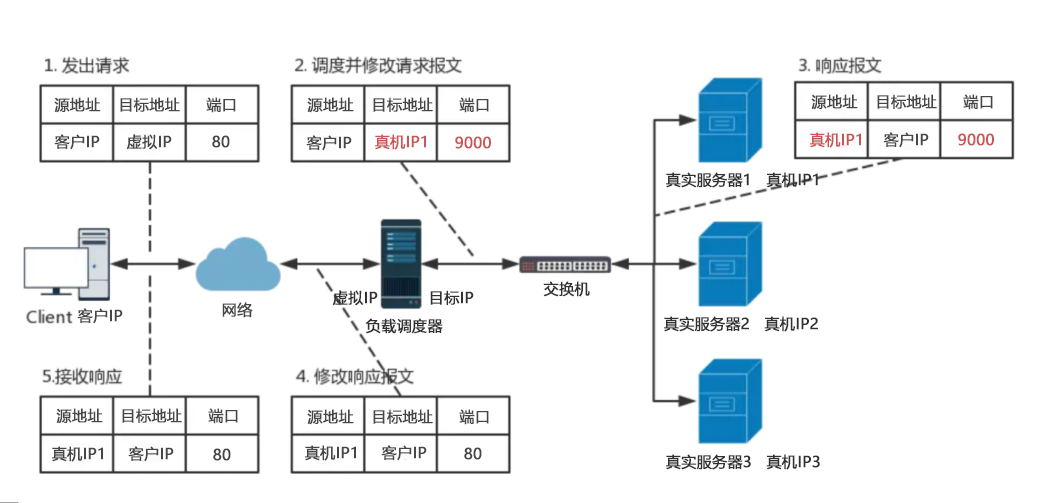

1.1 NAT mode of LVS

LVS-NAT: in essence, multi-target DNAT. Forwarding is implemented by rewriting the destination address and destination port of the request message to the RIP and PORT of a selected RS.

(1) RIP and DIP should be on the same IP network and use private addresses; the RS's gateway must point to the DIP

(2) Both request and response messages must be forwarded through the Director, which can easily become the system bottleneck

(3) Port mapping is supported: the destination PORT of the request message can be changed

(4) The VS must be a Linux system; the RS can run any OS

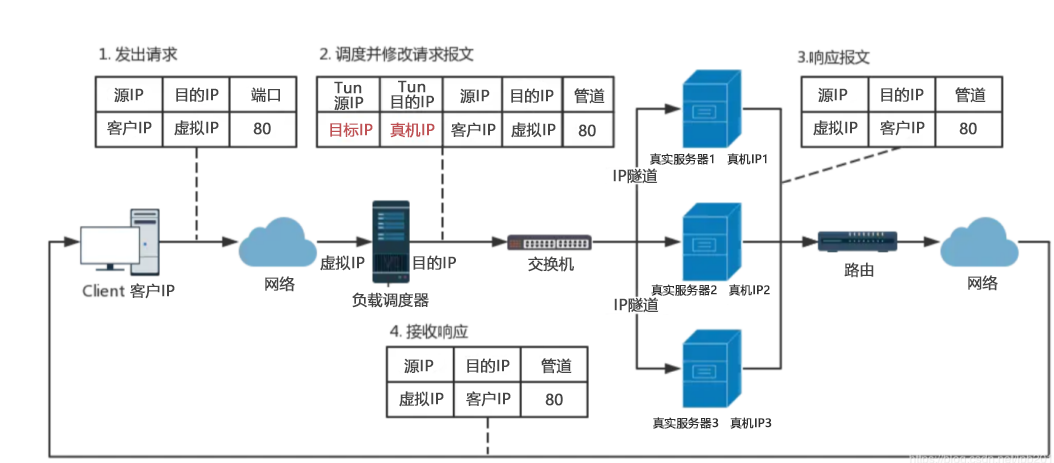

1.2 IP tunnel (TUN) mode

- RIP and DIP need not be on the same physical network. The RS's gateway generally cannot point to the DIP, and the RIP can communicate with the public network, so cluster nodes can be spread across the Internet. DIP, VIP and RIP can all be public addresses.
- The VIP must be configured on the RealServer's tunnel interface. This lets the RealServer receive packets forwarded from the DIP and use the VIP as the source IP of its responses.
- The Director forwards requests to the RealServer through a tunnel. The outer IP header has source DIP and destination RIP. The RealServer builds its response from the inner IP header, so the response has source VIP and destination CIP.
- Requests pass through the Director, but responses do not; the RealServer sends them back to the client itself.
- Port mapping is not supported.
- The RS's OS must support IP tunneling.

1.3 Direct routing (DR) mode

Direct Routing (DR mode for short) uses a semi-open network structure similar to TUN mode. The difference is that the nodes are not scattered across different sites; they sit on the same physical network as the scheduler.

The load scheduler connects to each node server over the local network, so no dedicated IP tunnel is needed. DR is the LVS default mode and the most widely used. The Director forwards a request by re-encapsulating it with a new MAC header. The source MAC is the MAC of the interface holding the DIP, and the destination MAC is the MAC of the interface holding the RIP of the selected RS. The source IP/PORT and destination IP/PORT remain unchanged.
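The three forwarding modes described above differ mainly in two ways: which header the Director rewrites, and which path the reply takes. The toy bash function below prints that summary for each mode. CIP, VIP, DIP, RIP and the MAC labels are symbolic placeholders, and lvs_path is a hypothetical name that is not part of any LVS tool.

```bash
#!/usr/bin/env bash
# Toy summary of the three LVS forwarding modes: what the Director sends
# to the chosen real server, and which path the reply takes.
# CIP, VIP, DIP, RIP and the MAC labels are symbolic placeholders.
lvs_path() {
    case "$1" in
        nat)
            echo "to RS: IP CIP -> RIP (Director rewrote destination VIP:port to RIP:port)"
            echo "reply: RIP -> CIP back through the Director, which restores source VIP" ;;
        tun)
            echo "to RS: outer IP DIP -> RIP wrapping inner IP CIP -> VIP"
            echo "reply: VIP -> CIP sent by the RS itself, bypassing the Director" ;;
        dr)
            echo "to RS: MAC DIP_MAC -> RS_MAC, IP CIP -> VIP left unchanged"
            echo "reply: VIP -> CIP sent by the RS itself, bypassing the Director" ;;
        *)
            echo "unknown mode: $1" >&2
            return 1 ;;
    esac
}

for mode in nat tun dr; do
    echo "== $mode"
    lvs_path "$mode"
done
```

The reply path explains the trade-off. In NAT mode every response crosses the Director, whereas in TUN and DR modes only requests do.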

4, ipvsadm tool

-A: add a virtual server

-D: delete an entire virtual server

-s: specify the scheduling algorithm (round robin: rr, weighted round robin: wrr, least connections: lc, weighted least connections: wlc; wlc is the default)

-a: add a real server (node server)

-d: delete a real server (node)

-t: specify the VIP address and TCP port

-r: specify the RIP address and TCP port

-m: use NAT cluster mode

-g: use DR mode

-i: use TUN mode

-w: set the weight (a weight of 0 pauses the node)

-p 60: persistence; connections from the same client keep going to the same real server for 60 seconds

-l: list the LVS virtual server table (all entries by default)

-n: show addresses and ports numerically; often combined with -l, as in ipvsadm -ln
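The options above combine in a fixed pattern: one -A line for the virtual service, then one -a line per real server. The bash helper below (lvs_rules, a hypothetical name) only prints those ipvsadm commands and does not run them, so the output can be reviewed before it is applied. It uses only -A, -a, -t, -s, -r, -m/-g/-i and -w from the list above. The real-server argument format RIP:PORT[:WEIGHT] is an assumption of this sketch, not ipvsadm syntax.

```bash
#!/usr/bin/env bash
# Print (do not execute) ipvsadm commands for one TCP virtual service.
# Usage: lvs_rules VIP:PORT SCHEDULER MODE RIP:PORT[:WEIGHT]...
#   MODE: m = NAT, g = DR, i = TUN; WEIGHT defaults to 1.
lvs_rules() {
    local vs=$1 sched=$2 mode=$3 rs ip port w
    case "$mode" in
        m|g|i) ;;
        *) echo "mode must be m, g or i" >&2; return 1 ;;
    esac
    shift 3
    echo "ipvsadm -A -t $vs -s $sched"
    for rs in "$@"; do
        IFS=: read -r ip port w <<< "$rs"   # split RIP:PORT[:WEIGHT]
        echo "ipvsadm -a -t $vs -r $ip:$port -$mode -w ${w:-1}"
    done
}

# The NAT deployment's addresses, but with weighted round robin:
lvs_rules 12.0.0.1:80 wrr m 192.168.47.102:80:3 192.168.47.103:80
# ipvsadm -A -t 12.0.0.1:80 -s wrr
# ipvsadm -a -t 12.0.0.1:80 -r 192.168.47.102:80 -m -w 3
# ipvsadm -a -t 12.0.0.1:80 -r 192.168.47.103:80 -m -w 1
```

To apply the rules, review the output and then pipe it to a root shell (for example `lvs_rules ... | sh`). A persistence timeout such as -p 60 would go on the -A line.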

5, Deploying LVS load balancing in NAT mode

Load scheduler: two NICs; internal NIC ens33: 192.168.47.100, external NIC ens37: 12.0.0.1

Web server pool (two servers): 192.168.47.102, 192.168.47.103

NFS shared storage server: 192.168.47.104

Client: 12.0.0.100

Deploy shared storage (NFS server: 192.168.47.104)

Turn off the firewall

[root@localhost ~]# systemctl stop firewalld
[root@localhost ~]# setenforce 0

Install the NFS services

[root@localhost ~]# yum install -y nfs-utils rpcbind

Start the services

[root@localhost ~]# systemctl start rpcbind
[root@localhost ~]# systemctl start nfs

Create the site directories and index pages

[root@localhost ~]# cd /opt/
[root@localhost opt]# mkdir wwd benet
[root@localhost opt]# chmod 777 wwd/ benet/
[root@localhost opt]# echo "this is wwd" > /opt/wwd/index.html
[root@localhost opt]# echo "this is benet" > /opt/benet/index.html

Configure the shares

[root@localhost opt]# vim /etc/exports
/opt/wwd 192.168.47.0/24(rw,sync)
/opt/benet 192.168.47.0/24(rw,sync)

Publish the shares

[root@localhost opt]# exportfs -rv
exporting 192.168.47.0/24:/opt/benet
exporting 192.168.47.0/24:/opt/wwd

Restart the service

[root@localhost opt]# systemctl restart nfs

Node servers

First node (192.168.47.102)

Turn off the firewall

[root@localhost ~]# systemctl stop firewalld
[root@localhost ~]# setenforce 0

Check the NFS exports

[root@localhost ~]# showmount -e 192.168.47.104
Export list for 192.168.47.104:
/opt/benet 192.168.47.0/24
/opt/wwd 192.168.47.0/24

Install the httpd service

[root@localhost ~]# yum install -y httpd

Mount the site, start httpd, and point the gateway at the DIP

[root@localhost ~]# mount 192.168.47.104:/opt/wwd /var/www/html/
[root@localhost ~]# cat /var/www/html/index.html
this is wwd
[root@localhost ~]# systemctl start httpd
[root@localhost ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33
IPADDR=192.168.47.102
NETMASK=255.255.255.0
GATEWAY=192.168.47.100

Restart the network

[root@localhost ~]# systemctl restart network

Second node (192.168.47.103)

Turn off the firewall

[root@localhost ~]# systemctl stop firewalld
[root@localhost ~]# setenforce 0

Check the NFS exports

[root@localhost ~]# showmount -e 192.168.47.104
Export list for 192.168.47.104:
/opt/benet 192.168.47.0/24
/opt/wwd 192.168.47.0/24

Install the httpd service

[root@localhost ~]# yum install -y httpd

Mount the site, start httpd, and point the gateway at the DIP

[root@localhost ~]# mount 192.168.47.104:/opt/benet /var/www/html/
[root@localhost ~]# cat /var/www/html/index.html
this is benet
[root@localhost ~]# systemctl start httpd
[root@localhost ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33
IPADDR=192.168.47.103
NETMASK=255.255.255.0
GATEWAY=192.168.47.100

Restart the network

[root@localhost ~]# systemctl restart network

Load scheduler (director)

Turn off the firewall and create a configuration file for the second NIC

[root@localhost ~]# systemctl stop firewalld
[root@localhost ~]# setenforce 0
[root@localhost ~]# cd /etc/sysconfig/network-scripts/
[root@localhost network-scripts]# cp ifcfg-ens33 ifcfg-ens37

Edit both NIC configurations (addresses as planned above), remove the gateway, and restart the network

[root@localhost network-scripts]# vim ifcfg-ens33
[root@localhost network-scripts]# vim ifcfg-ens37
[root@localhost network-scripts]# systemctl restart network

Enable IP forwarding

[root@localhost network-scripts]# vim /etc/sysctl.conf
net.ipv4.ip_forward = 1
[root@localhost network-scripts]# sysctl -p
net.ipv4.ip_forward = 1

Firewall rules

View the nat table

[root@localhost network-scripts]# iptables -nL -t nat

Flush the rules (iptables -F flushes the filter table; iptables -t nat -F flushes the nat table)

[root@localhost network-scripts]# iptables -F

Add an SNAT rule for traffic from the internal network leaving through ens37

[root@localhost network-scripts]# iptables -t nat -A POSTROUTING -s 192.168.47.0/24 -o ens37 -j SNAT --to 12.0.0.1

View the nat table again

[root@localhost network-scripts]# iptables -nL -t nat
Chain PREROUTING (policy ACCEPT)
target     prot opt source               destination

Chain INPUT (policy ACCEPT)
target     prot opt source               destination

Chain OUTPUT (policy ACCEPT)
target     prot opt source               destination

Chain POSTROUTING (policy ACCEPT)
target     prot opt source               destination
SNAT       all  --  192.168.47.0/24      0.0.0.0/0            to:12.0.0.1

Load the ip_vs kernel module

[root@localhost network-scripts]# cat /proc/net/ip_vs
cat: /proc/net/ip_vs: No such file or directory
[root@localhost network-scripts]# modprobe ip_vs
[root@localhost network-scripts]# cat /proc/net/ip_vs
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn

Install ipvsadm

[root@localhost network-scripts]# yum install ipvsadm* -y

Save the rule file and start the ipvsadm service

[root@localhost network-scripts]# ipvsadm-save >/etc/sysconfig/ipvsadm
[root@localhost network-scripts]# systemctl start ipvsadm.service

Clear all existing rules

[root@localhost network-scripts]# ipvsadm -C

Create the virtual server on the external IP (VIP) with round-robin scheduling (-s rr)

[root@localhost network-scripts]# ipvsadm -A -t 12.0.0.1:80 -s rr

Then add the real servers to it: -r gives the real server address, -m selects NAT mode

[root@localhost network-scripts]# ipvsadm -a -t 12.0.0.1:80 -r 192.168.47.102:80 -m
[root@localhost network-scripts]# ipvsadm -a -t 12.0.0.1:80 -r 192.168.47.103:80 -m

List the rules

[root@localhost network-scripts]# ipvsadm
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  12.0.0.1:http rr
  -> 192.168.47.102:http          Masq    1      0          0
  -> 192.168.47.103:http          Masq    1      0          0

List the rules with numeric addresses and ports

[root@localhost network-scripts]# ipvsadm -ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  12.0.0.1:80 rr
  -> 192.168.47.102:80            Masq    1      0          0
  -> 192.168.47.103:80            Masq    1      0          0

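Client

Accessing http://12.0.0.1 from the client (12.0.0.100) succeeds.

Subsequent requests are distributed to the two real servers in turn (round robin), so the responses switch between "this is wwd" and "this is benet".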