Introduction

In today's information-driven IT era, enterprises increasingly depend on computer systems and services for production, business operations, sales and support, daily management and other functions. As a result, demand for high-availability technology, which keeps computer systems and network services running continuously and without interruption, has grown sharply.

1, Detailed explanation of LVS-DR mode

1. DR mode features

- The Director Server and the Real Servers must be on the same physical network.

- Real Servers can use private or public addresses. If a public address is used, the RIP can be reached directly from the Internet.

- The Director Server acts as the entry point to the cluster, but not as its gateway.

- All request packets pass through the Director Server, but reply packets do not; the Real Servers send them to the client directly.

- The gateway of a Real Server must not point to the Director Server's IP; in other words, packets sent by a Real Server must not pass through the Director Server.

- The VIP is configured on the lo interface of each Real Server.

2. Packet flow analysis

In the same LAN:

- The client sends a request to the VIP, and the load balancer receives it.

- The load balancer selects a back-end Real Server using its scheduling algorithm. It neither modifies nor encapsulates the IP packet; it only rewrites the MAC address of the frame to the MAC address of the chosen Real Server and sends the frame out on the LAN.

- The Real Server receives the frame, unpacks it and finds that the destination IP (the VIP, bound to its lo interface in advance) is local, so it processes the request.

- The Real Server then builds the response with the VIP as its source IP and sends it out through its physical NIC directly to the client. The client believes it has received normal service and does not know which server handled it. If the client is on a different network segment, the response travels back to it through the router.
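To make the MAC rewrite in the second step concrete, here is a toy model, not real packet handling. A frame is written as four space-separated fields `src_mac dst_mac src_ip dst_ip`; that format, the helper names `dr_forward` and `rs_reply`, and the MAC addresses in the example are all hypothetical. It shows that the Director only touches the Ethernet header, so the IP header reaching the Real Server is still client IP to VIP, and that the Real Server answers from the VIP without going back through the Director.

```shell
#!/usr/bin/env bash
# Toy model of LVS-DR forwarding. A "frame" is the string
# "src_mac dst_mac src_ip dst_ip" (hypothetical format for illustration).

# Director: the frame arrived addressed to the Director's own MAC (old dst_mac).
# It re-sends the frame from that MAC to the chosen Real Server's MAC and
# leaves the IP header (client IP -> VIP) unchanged.
dr_forward() {
  local frame="$1" rs_mac="$2" dst_mac src_ip dst_ip
  read -r _ dst_mac src_ip dst_ip <<< "$frame"
  printf '%s %s %s %s\n' "$dst_mac" "$rs_mac" "$src_ip" "$dst_ip"
}

# Real Server: it replies from the VIP (the original destination IP) to the
# client IP and hands the frame to the next hop (the client itself on the
# same LAN, otherwise the router), bypassing the Director entirely.
rs_reply() {
  local frame="$1" next_hop_mac="$2" dst_mac src_ip dst_ip
  read -r _ dst_mac src_ip dst_ip <<< "$frame"
  printf '%s %s %s %s\n' "$dst_mac" "$next_hop_mac" "$dst_ip" "$src_ip"
}

# Example (hypothetical MACs; 192.168.8.10 is the client, .100 the VIP):
# f=$(dr_forward "aa:aa:aa:aa:aa:10 dd:dd:dd:dd:dd:13 192.168.8.10 192.168.8.100" ee:ee:ee:ee:ee:15)
# rs_reply "$f" aa:aa:aa:aa:aa:10
```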

3. ARP problems in DR mode

- ① In an LVS-DR load-balancing cluster, the load balancer and every node server are configured with the same VIP address.

- ② Having the same IP address on several hosts in one LAN inevitably confuses ARP communication between the servers.

When an ARP request for the VIP is broadcast to the LVS-DR cluster, the load balancer and the node servers all receive it, because they are connected to the same network.

Only the front-end load balancer should answer; the node servers must not respond to these ARP broadcasts.

- ③ The node servers are therefore configured so that they do not answer ARP requests for the VIP:

Use the virtual interface lo:0 to host the VIP address.

Set the kernel parameter arp_ignore=1: the system answers an ARP request only if the target IP address is configured on the interface that received the request. Because the VIP lives on lo, ARP requests for it arriving on ens33 are ignored.

- ④ The reply from a Real Server (source IP = VIP) is forwarded by the router, so before encapsulating it the Real Server first needs the router's MAC address.

- ⑤ When sending that ARP request, Linux by default uses the source IP address of the IP packet (the VIP) as the sender IP address in the ARP request, instead of the IP address of the sending interface such as ens33.

- ⑥ On receiving this ARP request, the router updates its ARP table entry for the VIP.

- ⑦ The VIP entry, which used to map to the Director's MAC address, now maps to the Real Server's MAC address.

- ⑧ Following this entry, the router forwards new requests for the VIP to that Real Server, so the Director's VIP effectively stops working.

Solution: on the node servers, set the kernel parameter arp_announce=2: the system does not use the source address of the IP packet as the sender address of the ARP request, but selects the IP address of the sending interface instead.

Settings that solve both ARP problems (on each node server):

```
# Append to /etc/sysctl.conf, then apply with: sysctl -p
net.ipv4.conf.lo.arp_ignore = 1
net.ipv4.conf.lo.arp_announce = 2
net.ipv4.conf.all.arp_ignore = 1
net.ipv4.conf.all.arp_announce = 2
```
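Before running `sysctl -p`, it can help to confirm that the file really contains the four settings above. The following is a minimal sketch; the helper name `check_arp_conf` is hypothetical. It takes the last assignment of each key and skips whole-line comments; because sysctl.conf(5) only recognizes comment lines that start with `#` or `;`, a value followed by a trailing comment is reported as wrong.

```shell
#!/usr/bin/env bash
# Check that a sysctl.conf-style file sets the four Real Server ARP parameters.
# Prints each missing or wrong key; returns non-zero if any problem is found.
check_arp_conf() {
  local file="$1" rc=0 pair key want have
  for pair in lo.arp_ignore=1 lo.arp_announce=2 all.arp_ignore=1 all.arp_announce=2; do
    key="net.ipv4.conf.${pair%%=*}"
    want="${pair#*=}"
    # Last assignment wins; lines starting with '#' or ';' are comments.
    have=$(awk -F'=' -v k="$key" '
      /^[ \t]*[#;]/ { next }
      {
        name = $1; val = $2
        gsub(/[ \t]/, "", name)
        gsub(/^[ \t]+|[ \t]+$/, "", val)
        if (name == k) v = val
      }
      END { print v }
    ' "$file")
    if [ "$have" != "$want" ]; then
      echo "missing or wrong: $key (want $want, found '${have:-unset}')"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on a Real Server:
# check_arp_conf /etc/sysctl.conf && sysctl -p
```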

2, Keepalived

1. Overview

Keepalived was designed to build highly available LVS load-balancing clusters. Beyond dual-machine hot standby, it can create virtual servers and manage the server pool itself, doing the job of the ipvsadm tool.

2. Advantages

Keepalived makes building an LVS cluster simpler and easier. Its main advantages are:

- Hot-standby switching for the LVS load scheduler, which improves availability;

- Automatic failover;

- Health checks of the node servers;

- It judges the availability of the LVS load scheduler and the node servers. When the master fails, service switches to the backup node in time so the business keeps running. When the master recovers, it rejoins the cluster and, thanks to its higher priority, takes the service back.

3. How Keepalived works

- Keepalived uses the VRRP hot-standby protocol to provide multi-machine hot standby for Linux servers.

- VRRP (Virtual Router Redundancy Protocol) is a redundancy solution originally designed for routers.

- Several routers form a hot-standby group and provide service through a shared virtual IP address.

- Within each group only one router, the master, provides service at any given time, while the others stay redundant. If the active router fails, the remaining routers automatically take over the virtual IP address according to their configured priorities and continue to provide service.
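The priority rule in the last point can be modelled in a few lines. This is a simplified sketch, not Keepalived code: the input format `name priority up` and the helper name `vrrp_master` are hypothetical, and real VRRP additionally breaks priority ties in favour of the higher IP address, which is omitted here. Because Keepalived preempts by default, a recovered master with the higher priority wins the election again, which matches the behaviour in the advantages list above.

```shell
#!/usr/bin/env bash
# Simplified VRRP election: among nodes that are up, the one with the highest
# priority becomes MASTER. Input on stdin, one node per line:
#   "name priority up"   (up = 1 or 0; hypothetical format)
# Tie-breaking by IP address, as done by real VRRP, is left out.
vrrp_master() {
  awk '$3 == 1 && (!found || $2 + 0 > best) { best = $2 + 0; name = $1; found = 1 }
       END { if (found) print name }'
}

# Examples (lvs_02 with priority 100 is an assumed backup node):
# printf 'lvs_01 110 1\nlvs_02 100 1\n' | vrrp_master   # prints lvs_01
# printf 'lvs_01 110 0\nlvs_02 100 1\n' | vrrp_master   # prints lvs_02
```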

SNMP is a protocol for managing servers, switches, routers and other devices over the network.

Keepalived can expose its state, including health-check status, through SNMP.

Monitoring systems can likewise use SNMP to collect data from the servers they monitor.

3, LVS-DR+Keepalived deployment

1. Case environment

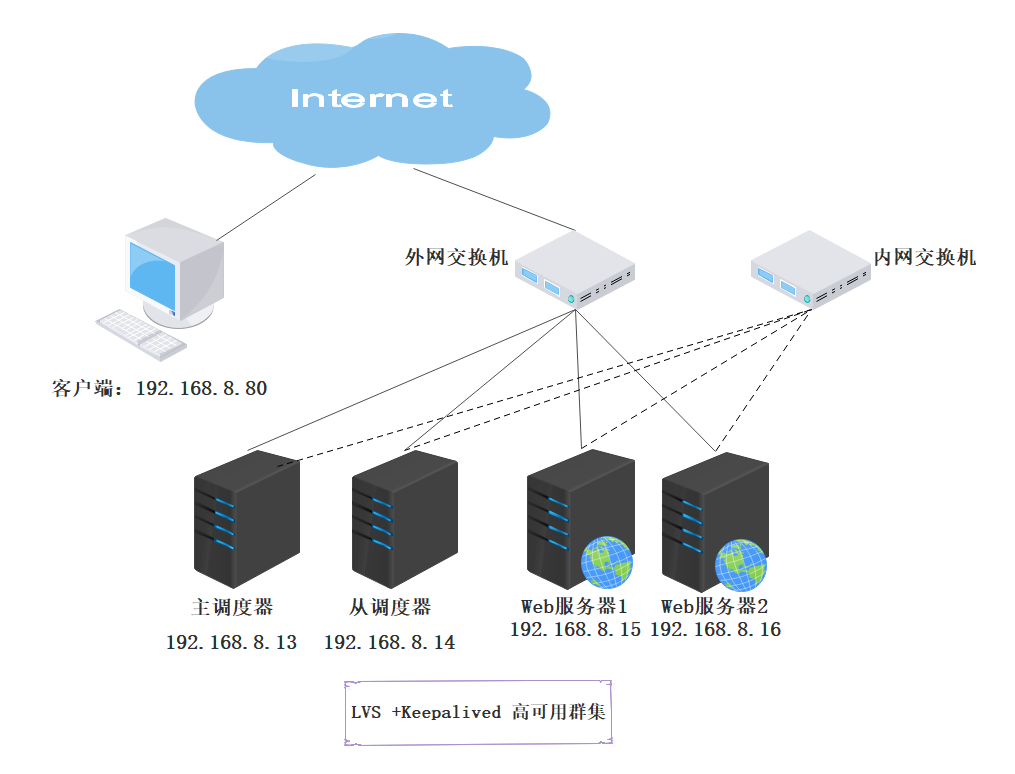

| Server | IP address | Software |
|---|---|---|
| Primary load scheduler (Director1) | ens33: 192.168.8.13 | ipvsadm, keepalived (hot standby) |
| Backup load scheduler (Director2) | ens33: 192.168.8.14 | ipvsadm, keepalived |
| Web server 1 | ens33: 192.168.8.15; lo:0 (VIP): 192.168.8.100 | httpd |
| Web server 2 | ens33: 192.168.8.16; lo:0 (VIP): 192.168.8.100 | httpd |
| CentOS client | 192.168.8.10 | web browser |
| CentOS client | 192.168.8.17 | web browser |

- Topology: see the diagram above.

2. Configuration of LVS primary and standby servers

```shell
# On both Directors: stop the firewall and SELinux for this lab, install ipvsadm
systemctl stop firewalld.service
setenforce 0
yum -y install ipvsadm
modprobe ip_vs            # load the ip_vs kernel module
cat /proc/net/ip_vs       # show ip_vs version information

# LVS1 (192.168.8.13) and LVS2 (192.168.8.14) are configured identically:
# bind the VIP to the sub-interface ens33:0
cd /etc/sysconfig/network-scripts/
cp -p ifcfg-ens33 ifcfg-ens33:0
cat > ifcfg-ens33:0 <<'EOF'
DEVICE=ens33:0
ONBOOT=yes
IPADDR=192.168.8.100
NETMASK=255.255.255.255
EOF
ifup ens33:0
ifconfig ens33:0
systemctl restart network

# Kernel parameters. sysctl.conf only treats whole lines starting with # or ;
# as comments, so every comment goes on its own line.
cat >> /etc/sysctl.conf <<'EOF'
# Turn off IP forwarding
net.ipv4.ip_forward = 0
# Turn off ICMP redirects (all, default and ens33)
net.ipv4.conf.all.send_redirects = 0
net.ipv4.conf.default.send_redirects = 0
net.ipv4.conf.ens33.send_redirects = 0
EOF
sysctl -p

# The ipvsadm service restores rules from this file, so create it first
ipvsadm-save > /etc/sysconfig/ipvsadm
systemctl start ipvsadm
systemctl status ipvsadm

# Rule script
cat > /opt/dd.sh <<'EOF'
#!/bin/bash
ipvsadm -C                                              # clear all existing rules
ipvsadm -A -t 192.168.8.100:80 -s rr                    # virtual service on the VIP, round robin
ipvsadm -a -t 192.168.8.100:80 -r 192.168.8.15:80 -g    # -g = direct routing (DR)
ipvsadm -a -t 192.168.8.100:80 -r 192.168.8.16:80 -g
ipvsadm                                                 # list the rules
ipvsadm -Lnc                                            # list current connections
EOF
```
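The rule script above hard-codes two Real Servers. As a sketch, the same commands can be generated for any list of Real Servers and reviewed before they are run; `gen_dr_rules` is a hypothetical helper, and it only prints commands. In DR mode the packet is not rewritten, so the Real Server port is kept equal to the virtual service port.

```shell
#!/usr/bin/env bash
# Print (not run) the ipvsadm commands for a DR-mode virtual service.
# Usage: gen_dr_rules VIP PORT SCHEDULER RIP [RIP...]
gen_dr_rules() {
  local vip="$1" port="$2" sched="$3" rip
  shift 3
  echo "ipvsadm -C"
  echo "ipvsadm -A -t ${vip}:${port} -s ${sched}"
  for rip in "$@"; do
    # -g selects direct routing; DR cannot remap ports, so the Real Server
    # listens on the same port as the virtual service.
    echo "ipvsadm -a -t ${vip}:${port} -r ${rip}:${port} -g"
  done
}

# Review first, then pipe to a root shell:
# gen_dr_rules 192.168.8.100 80 rr 192.168.8.15 192.168.8.16
# gen_dr_rules 192.168.8.100 80 rr 192.168.8.15 192.168.8.16 | bash
```

Once the rules are loaded, `ipvsadm-save > /etc/sysconfig/ipvsadm` stores them in the file the ipvsadm service reads at start-up.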

3. Web server configuration

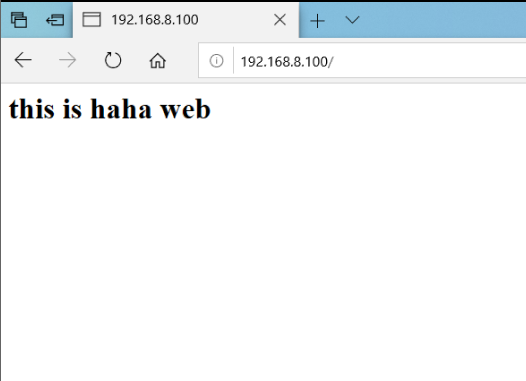

```shell
# Web1 and Web2 are configured identically.
# Bind the VIP to lo:0 with a /32 mask
cd /etc/sysconfig/network-scripts/
cat > ifcfg-lo:0 <<'EOF'
DEVICE=lo:0
IPADDR=192.168.8.100
NETMASK=255.255.255.255
ONBOOT=yes
EOF
ifup lo:0
# Host route so that traffic for the VIP stays on lo:0
route add -host 192.168.8.100 dev lo:0
route -n
# Re-add the route at boot (rc.local must be executable on CentOS 7)
echo "/sbin/route add -host 192.168.8.100 dev lo:0" >> /etc/rc.local
chmod +x /etc/rc.d/rc.local

yum -y install httpd
systemctl start httpd

# Adjust the kernel's ARP parameters so the VIP's MAC address is not
# updated on other hosts, avoiding the conflict
cat >> /etc/sysctl.conf <<'EOF'
# lo interface
net.ipv4.conf.lo.arp_ignore = 1
net.ipv4.conf.lo.arp_announce = 2
# all interfaces
net.ipv4.conf.all.arp_ignore = 1
net.ipv4.conf.all.arp_announce = 2
EOF
sysctl -p

# Web1 test page
cat > /var/www/html/index.html <<'EOF'
<html>
<body>
<meta http-equiv="Content-Type" content="text/html;charset=utf-8">
<h1>this is haha web</h1>
</body>
</html>
EOF

# Web2 test page
cat > /var/www/html/index.html <<'EOF'
<html>
<body>
<meta http-equiv="Content-Type" content="text/html;charset=utf-8">
<h1>this is hehe web</h1>
</body>
</html>
EOF
```
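Editing /etc/sysctl.conf changes nothing until `sysctl -p` runs, so it is worth checking the live kernel values on each web server. This sketch reads them from /proc; `check_rs_kernel` is a hypothetical helper, and the directory argument exists only so the function can be tried against a copy of the tree.

```shell
#!/usr/bin/env bash
# Verify the live ARP parameters on a Real Server.
# Optional argument: conf directory (default /proc/sys/net/ipv4/conf).
check_rs_kernel() {
  local root="${1:-/proc/sys/net/ipv4/conf}" rc=0 iface item param want have
  for iface in lo all; do
    for item in arp_ignore=1 arp_announce=2; do
      param="${item%%=*}"
      want="${item#*=}"
      have=$(cat "$root/$iface/$param" 2>/dev/null)
      if [ "$have" != "$want" ]; then
        echo "$iface/$param is '${have:-unreadable}', expected $want"
        rc=1
      fi
    done
  done
  return "$rc"
}

# On Web1 / Web2:
# check_rs_kernel && echo "ARP parameters are active"
```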

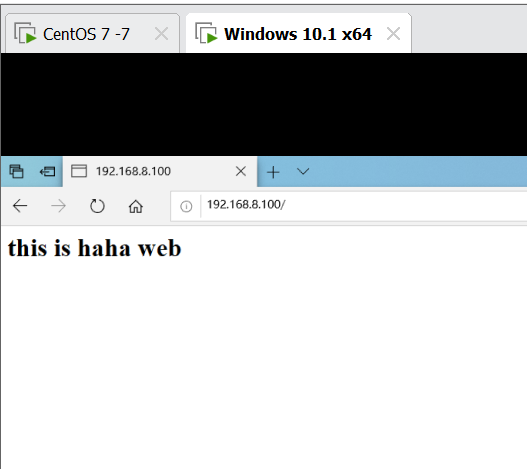

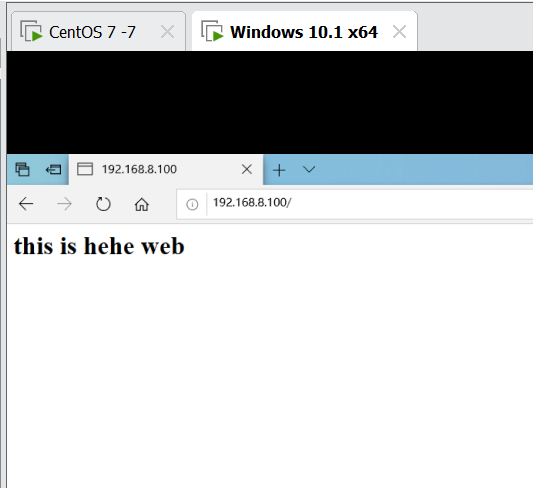

4. Test access from the client

Load the rules on Director1 with the script (`sh -x` traces each command):

```
[root@diretor1 /etc/sysconfig/network-scripts]#sh -x /opt/dd.sh
+ ipvsadm -C
+ ipvsadm -A -t 192.168.8.100:80 -s rr
+ ipvsadm -a -t 192.168.8.100:80 -r 192.168.8.15:80 -g
+ ipvsadm -a -t 192.168.8.100:80 -r 192.168.8.16:80 -g
+ ipvsadm
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  diretor1:http rr
  -> 192.168.8.15:http            Route   1      0          0
  -> 192.168.8.16:http            Route   1      0          0
```

- Visit http://192.168.8.100 from a client and refresh the page to see the effect of round-robin scheduling.
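To check the distribution from a shell instead of a browser, the responses can be counted by their test page. A minimal sketch: `tally_pages` is a hypothetical helper that extracts the `<h1>` text of the test pages from step 3 and prints how often each one answered; the commented loop assumes curl is installed on the client.

```shell
#!/usr/bin/env bash
# Read HTML on stdin, extract the <h1> text of each response and print
# "count page", most frequent first.
tally_pages() {
  sed -n 's:.*<h1>\(.*\)</h1>.*:\1:p' | sort | uniq -c | sort -rn
}

# From the client (each curl call opens a new connection):
# for i in $(seq 10); do curl -s http://192.168.8.100/; done | tally_pages
```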

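5. Configure Keepalived

- Install Keepalived on both LVS servers and back up the default configuration:

```shell
yum -y install keepalived
cd /etc/keepalived/
cp keepalived.conf keepalived.conf.bak
vim keepalived.conf
```

The configuration below is for the master (LVS1). On the backup (LVS2), use the same file but give it a different router_id, set state to BACKUP and use a lower priority.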

```
global_defs {                     # global parameters
    router_id lvs_01              # must be unique for each node in the group
}

vrrp_instance vi_1 {              # VRRP hot-standby instance
    state MASTER                  # MASTER on the primary, BACKUP on the standby
    interface ens33               # physical interface that carries the VIP
    virtual_router_id 51          # must be identical on all nodes of the group
    priority 110                  # higher value = higher priority
    advert_int 1                  # VRRP advertisement interval, in seconds
    authentication {              # VRRP authentication (PASS is plain text, not encryption)
        auth_type PASS
        auth_pass 6666
    }
    virtual_ipaddress {           # cluster VIP
        192.168.8.100
    }
}

# Virtual server (VIP and port) and its pool of web servers
virtual_server 192.168.8.100 80 {
    lb_algo rr                    # scheduling algorithm: round robin
    lb_kind DR                    # forwarding mode: direct routing
    persistence_timeout 6         # keep sending a client to the same Real Server for 6 s
    protocol TCP                  # the service uses TCP

    real_server 192.168.8.15 80 { # first web node
        weight 1                  # node weight
        TCP_CHECK {
            connect_port 80       # port to probe
            connect_timeout 3     # connection timeout, in seconds
            nb_get_retry 3        # number of retries
            delay_before_retry 3  # seconds between retries
        }
    }

    real_server 192.168.8.16 80 { # second web node
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
```
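Since the master and backup files differ only in router_id, state and priority, the backup file can be derived from the master's instead of being edited by hand. This is a sketch: `make_backup_conf` is a hypothetical helper, and the backup values `lvs_02` and `100` are assumptions (any unique router_id and any priority below 110 would do). It compares whole first and second fields, so other lines and trailing comments are left untouched.

```shell
#!/usr/bin/env bash
# Print a backup keepalived.conf derived from the master's file ($1).
# Only router_id, state and priority change; backup values are assumptions.
make_backup_conf() {
  awk '
    $1 == "router_id" && $2 == "lvs_01" { sub(/lvs_01/, "lvs_02") }
    $1 == "state"     && $2 == "MASTER" { sub(/MASTER/, "BACKUP") }
    $1 == "priority"  && $2 == "110"    { sub(/110/, "100") }
    { print }
  ' "$1"
}

# On LVS1: make_backup_conf /etc/keepalived/keepalived.conf > /tmp/keepalived.conf
# then copy /tmp/keepalived.conf to /etc/keepalived/ on LVS2.
```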

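Start Keepalived on both LVS servers and check the VIP:

```shell
systemctl start keepalived
systemctl status keepalived
ip a          # view the virtual addresses; the VIP should be on the master
```

If the virtual server rules do not show up, run the rule script on both LVS servers and list the rules:

```shell
sh -x /opt/dd.sh
ipvsadm -Ln
```

Keepalived adds the VIP itself (virtual_ipaddress). If the static ens33:0 address from step 2 is still up, both Directors hold the VIP at the same time, which causes the same kind of ARP conflict described under "ARP problem in DR mode" above; in that case bring it down with `ifdown ens33:0` and set ONBOOT=no in ifcfg-ens33:0.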
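To see at a glance which Director currently owns the VIP, the `ip` output can be filtered. A minimal sketch; `has_vip` is a hypothetical helper that reads the one-line-per-address format of `ip -o -4 addr show`, and the trailing slash in the pattern keeps 192.168.8.10 from matching 192.168.8.100.

```shell
#!/usr/bin/env bash
# Succeed if the VIP given as $1 appears in `ip -o -4 addr show` output on stdin.
has_vip() {
  grep -qF " inet $1/"
}

# On each Director:
# ip -o -4 addr show | has_vip 192.168.8.100 && echo "VIP is here" || echo "VIP is not here"
```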

- Browser access

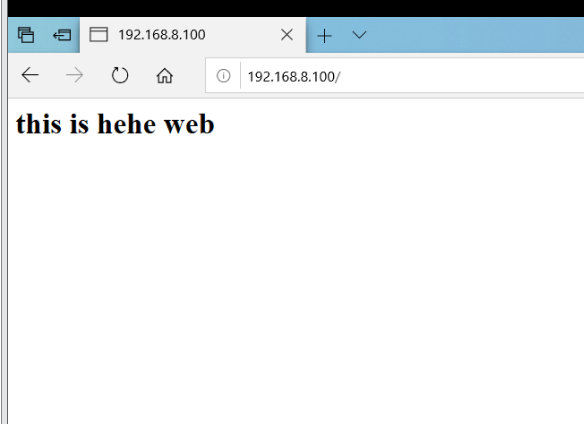

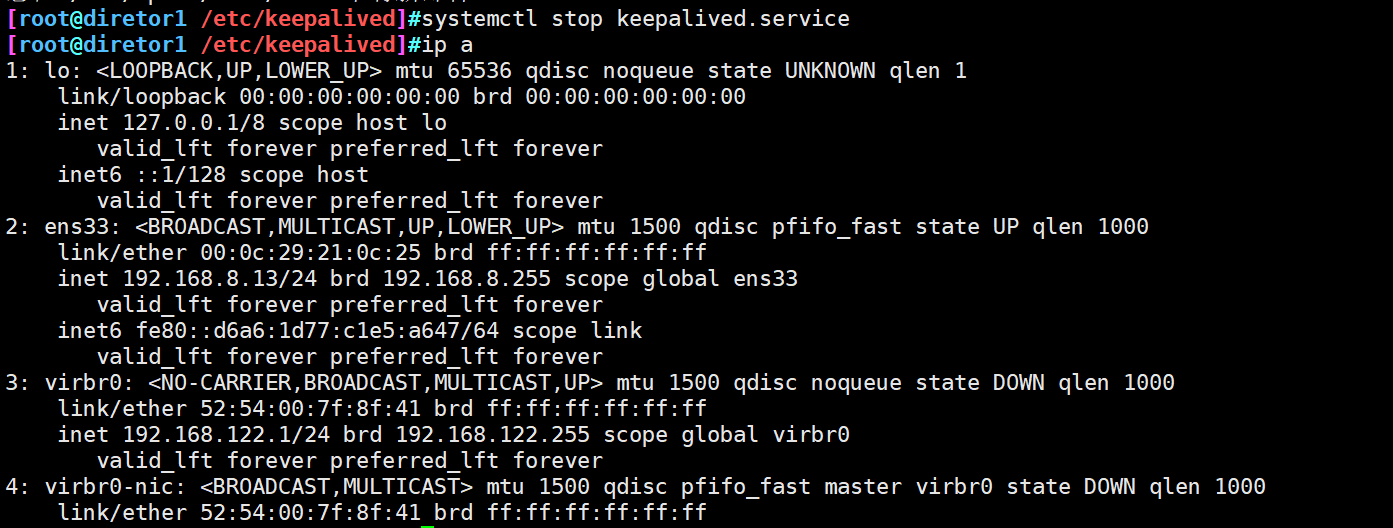

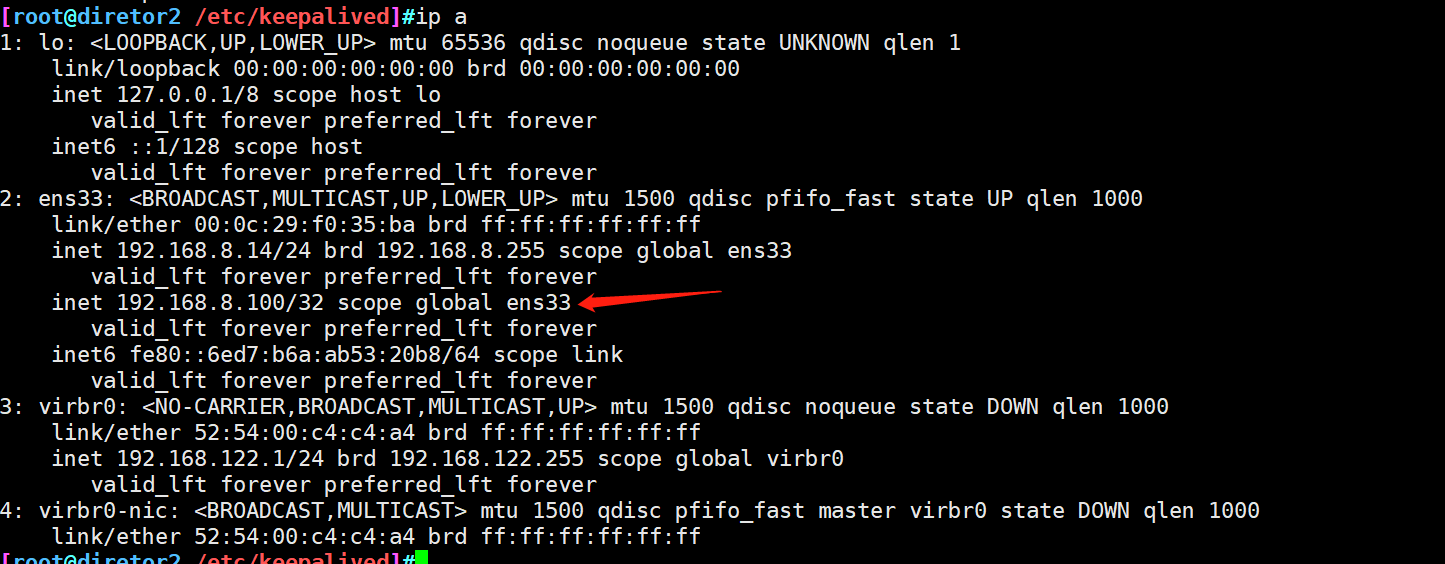

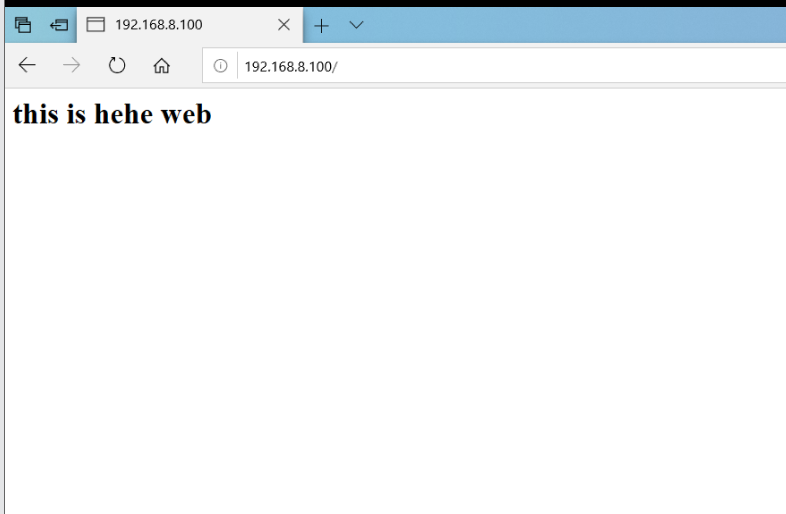

6. Simulate a master failure

```shell
systemctl stop keepalived    # on LVS1: simulate the master going down
ip a                         # LVS1 no longer holds the VIP
```

- The VIP floats to LVS2.

- The page is still reachable from the browser.
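Keepalived also logs its VRRP state changes, which helps confirm that LVS2 really took over. This sketch assumes the log lines contain the phrase `Entering MASTER STATE` or `Entering BACKUP STATE`; the exact wording can differ between Keepalived versions, and `vrrp_state` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print the most recent VRRP state found in Keepalived log lines on stdin.
# Assumes the "Entering <STATE> STATE" wording.
vrrp_state() {
  grep -o 'Entering [[:upper:]]* STATE' | tail -n 1 | awk '{print $2}'
}

# On LVS2 after stopping Keepalived on LVS1; it should report MASTER once
# the VIP has moved:
# journalctl -u keepalived --no-pager | vrrp_state
```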

Summary

- Keepalived was designed mainly for LVS clusters and provides failover and health checking. Outside LVS clusters, it can also provide multi-machine hot standby.

- Keepalived's configuration file is keepalived.conf. The primary and backup configurations differ mainly in the router name (router_id), the hot-standby state and the priority.

- The floating address (VIP) is assigned by Keepalived according to each node's hot-standby state, so it does not need to be configured manually. The LVS server pool is likewise predefined in keepalived.conf, so there is no need to run the ipvsadm tool manually.

- Combining LVS and Keepalived yields a highly available load-balancing server cluster.