1, Overview

DR mode, also called Direct Routing

DR mode uses a semi-open network structure similar to that of TUN mode, except that the node servers and the scheduler sit on the same physical network.

The load scheduler connects to each node server over the local network, so there is no need to establish a dedicated IP tunnel.

The scheduler serves only as the access portal; response data does not pass back through it. After a user's request enters the scheduler, the scheduler forwards it to a node server, and once the node server has processed it, the node server responds to the client directly over the network. So that traffic addressed to the cluster is accepted, both the Director Server and the Real Servers must be configured with the VIP address.

2, Workflow

- The client sends a request to the load balancer (the VIP), and the packet reaches the kernel space.

- The kernel sees that the destination IP of the packet is a local VIP. IPVS (IP Virtual Server) then checks whether the requested service is a cluster service and, if so, re-encapsulates the packet: the source MAC is changed to the load balancer's MAC address and the destination MAC to the MAC address of the Real Server selected by the load-balancing algorithm. The source IP and destination IP remain unchanged. The packet is then sent to that Real Server.

- When the request arrives at the Real Server and its destination MAC is the server's own MAC address, the server accepts it. It then builds the response (source IP = VIP, destination IP = CIP), passes it from the lo interface to the physical network card, and sends it out.

- The response travels back to the client through the switch and router; the client cannot tell which server handled it.
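The MAC-only rewrite described in these steps can be illustrated with a toy bash model. It is a simulation, not how IPVS works internally: it assumes a "frame" is just four space-separated fields (source MAC, destination MAC, source IP, destination IP), and the MAC addresses and the function names `dr_forward` and `rs_reply` are hypothetical.

```bash
#!/usr/bin/env bash
# Toy model of LVS-DR forwarding (illustration only, not IPVS internals).
# A "frame" is four space-separated fields: src_mac dst_mac src_ip dst_ip.
# The MAC addresses used here are hypothetical example values.

DIRECTOR_MAC="00:0c:29:00:00:fe"

# dr_forward FRAME RS_MAC
# Director step: rewrite only the MAC addresses; keep CIP -> VIP as-is.
dr_forward() {
    local src_mac dst_mac src_ip dst_ip
    read -r src_mac dst_mac src_ip dst_ip <<< "$1"
    echo "$DIRECTOR_MAC $2 $src_ip $dst_ip"
}

# rs_reply FRAME
# Real Server step: answer from the VIP (held on lo:0) straight to the client.
rs_reply() {
    local src_mac dst_mac src_ip dst_ip
    read -r src_mac dst_mac src_ip dst_ip <<< "$1"
    echo "src_ip=$dst_ip dst_ip=$src_ip"
}

# Client 192.168.100.200 -> VIP 192.168.100.100, forwarded to a Real Server.
request="00:0c:29:00:00:c8 $DIRECTOR_MAC 192.168.100.200 192.168.100.100"
forwarded=$(dr_forward "$request" "00:0c:29:00:00:0a")
echo "to real server: $forwarded"
echo "reply:          $(rs_reply "$forwarded")"
```

The point of the model is that the IP pair never changes on the way in, which is exactly why each Real Server must own the VIP itself in order to accept the packet and answer from it.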

Characteristics of LVS-DR mode

Director Server and Real Server must be on the same physical network.

A Real Server can use a private or a public address. If a public address is used, the RIP can be accessed directly from the Internet.

The Director Server acts as the access portal of the cluster, but not as its gateway.

All request messages pass through the Director Server, but reply messages do not.

The gateway of a Real Server must not point to the Director Server's IP; that is, reply packets must not be routed through the Director Server.

The VIP address is configured on the lo interface of each Real Server.
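Two of these rules (same network, Real Server gateway not pointing at the Director) can be checked against an address plan before deployment. The sketch below is hypothetical: it approximates "same physical network" as "same /24 subnet", which is only a rough proxy for sharing a Layer 2 segment, and the function names and the router address 192.168.100.1 are assumptions made for illustration.

```bash
#!/usr/bin/env bash
# Pre-deployment sanity check for an LVS-DR address plan (hypothetical helper).
# Assumption: "same physical network" is approximated as "same /24 subnet".

# same_subnet24 IP1 IP2 -> exit status 0 if the first three octets match
same_subnet24() {
    [ "${1%.*}" = "${2%.*}" ]
}

# check_dr_plan DIP RIP RS_GATEWAY
check_dr_plan() {
    local dip=$1 rip=$2 gw=$3
    if ! same_subnet24 "$dip" "$rip"; then
        echo "FAIL: $rip is not in the same /24 as the director ($dip)"
        return 1
    fi
    if [ "$gw" = "$dip" ]; then
        echo "FAIL: the gateway of $rip points at the director ($dip)"
        return 1
    fi
    echo "OK: $rip (gateway $gw)"
}

# Director 192.168.100.254; 192.168.100.1 is an assumed router address.
for rip in 192.168.100.10 192.168.100.11; do
    check_dr_plan 192.168.100.254 "$rip" 192.168.100.1
done
```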

ARP problems in LVS-DR

- Question one

In an LVS-DR load balancing cluster, the load balancer and the node servers are all configured with the same VIP address. Having the same IP address on several hosts in one LAN inevitably disrupts ARP communication among the servers.

When an ARP broadcast for the VIP is sent into the LVS-DR cluster, both the load balancer and the node servers receive it, because they are connected to the same network.

Only the front-end load balancer should respond; the node servers must not respond to these ARP broadcasts.

Solution:

Configure the node servers so that they do not respond to ARP requests for the VIP:

Use the virtual interface lo:0 to host the VIP address.

Set the kernel parameter arp_ignore=1: the system replies only to ARP requests whose target IP is a local address configured on the interface that received the request.
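The effect of arp_ignore can be modelled as a simple yes/no decision. The function below is a simplified, hypothetical sketch that covers only the values 0 and 1 (the kernel accepts more); it assumes the node server's ens33 holds the RIP 192.168.100.10 and lo:0 holds the VIP.

```bash
#!/usr/bin/env bash
# Simplified model of net.ipv4.conf.*.arp_ignore (values 0 and 1 only).

# should_reply ARP_IGNORE TARGET_IP "RX_IFACE_ADDRS" "ALL_LOCAL_ADDRS"
# Exit status 0 means "send an ARP reply", 1 means "stay silent".
should_reply() {
    local mode=$1 target=$2 candidates addr
    case $mode in
        0) candidates=$4 ;;   # any address configured on any local interface
        1) candidates=$3 ;;   # only addresses on the interface that got the request
        *) echo "arp_ignore=$mode is not modelled here" >&2; return 2 ;;
    esac
    for addr in $candidates; do   # unquoted on purpose: split the address list
        [ "$addr" = "$target" ] && return 0
    done
    return 1
}

# Node server: ens33 holds the RIP, lo:0 holds the VIP.
ENS33_ADDRS="192.168.100.10"
ALL_ADDRS="192.168.100.10 127.0.0.1 192.168.100.100"

for mode in 0 1; do
    if should_reply "$mode" 192.168.100.100 "$ENS33_ADDRS" "$ALL_ADDRS"; then
        echo "arp_ignore=$mode: node answers ARP for the VIP"
    else
        echo "arp_ignore=$mode: node ignores ARP for the VIP"
    fi
done
```

Because the ARP request for the VIP arrives on ens33 while the VIP lives on lo:0, mode 1 keeps the node silent for the VIP but still lets it answer for its own RIP.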

- Question two

Reply messages from a Real Server (source IP = VIP) are forwarded by the router. To encapsulate such a reply, the Real Server must first obtain the router's MAC address. When sending the ARP request, Linux by default uses the source IP address of the IP packet (the VIP) as the source IP address in the ARP request, rather than the IP address of the sending interface. When the router receives this ARP request, it updates its ARP table, and the entry for the VIP, which pointed to the Director's MAC address, is overwritten with the Real Server's MAC address.

From then on, the router forwards new requests for the VIP to that Real Server according to its ARP table, so the Director's VIP stops working.

Solution:

Configure the node servers with the kernel parameter arp_announce=2: the system no longer uses the IP packet's source address as the source address of its ARP requests, but selects the IP address of the sending interface instead.
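The router-side effect of question two can be simulated as well. In this hypothetical bash sketch the router's ARP cache is an associative array (bash 4+), the router simply learns the sender IP and MAC of any ARP request it receives, and only arp_announce values 0 and 2 are modelled; labels such as DIRECTOR_MAC and RS1_MAC are placeholders. Mode 2 is simplified to "use the interface's own address", whereas the kernel picks the best local address on the sending interface.

```bash
#!/usr/bin/env bash
# Toy simulation of "question two": how the router's ARP cache can be
# hijacked by a Real Server. Requires bash 4+ for associative arrays.
# Only arp_announce values 0 and 2 are modelled; MAC labels are placeholders.

VIP=192.168.100.100
declare -A router_arp=([$VIP]=DIRECTOR_MAC)   # router cache: IP -> MAC

# arp_sender_ip ARP_ANNOUNCE PACKET_SRC_IP IFACE_IP
arp_sender_ip() {
    case $1 in
        0) echo "$2" ;;   # default: reuse the IP packet's source (the VIP)
        2) echo "$3" ;;   # use the sending interface's own address (the RIP)
        *) echo "arp_announce=$1 is not modelled here" >&2; return 2 ;;
    esac
}

# rs_asks_for_router ARP_ANNOUNCE RS_MAC RIP
# The Real Server replies from the VIP and ARPs for the router's MAC;
# the router caches the sender IP/MAC found in that request.
rs_asks_for_router() {
    local sender
    sender=$(arp_sender_ip "$1" "$VIP" "$3") || return
    router_arp[$sender]=$2
}

rs_asks_for_router 2 RS1_MAC 192.168.100.10
echo "arp_announce=2: VIP -> ${router_arp[$VIP]}"   # still the Director
rs_asks_for_router 0 RS1_MAC 192.168.100.10
echo "arp_announce=0: VIP -> ${router_arp[$VIP]}"   # hijacked by the Real Server
```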


3, Configuration

Prepare four servers and one client

Load balancer: 192.168.100.254

Node servers: 192.168.100.10; 192.168.100.11

NFS server: 192.168.23.12

VIP: 192.168.100.100

Client: 192.168.100.200

Configure the load balancer first

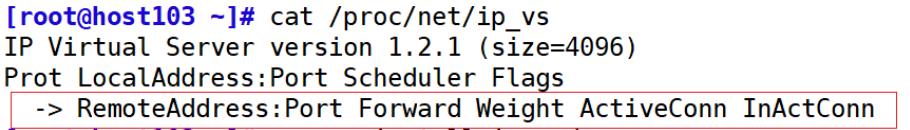

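systemctl stop firewalld

systemctl disable firewalld

setenforce 0   # switch SELinux to permissive mode for this session

modprobe ip_vs   # load the IPVS kernel module

cat /proc/net/ip_vs   # confirm that IPVS is available

yum -y install ipvsadm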
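If the setup is scripted, the modprobe step can be made conditional. The helper below is a hypothetical sketch: it only checks whether a module name appears at the start of a line in /proc/modules (the same file lsmod reads); the optional file argument exists only so it can be tried against a sample listing.

```bash
#!/usr/bin/env bash
# Check whether a kernel module appears in a /proc/modules-style listing.
# The file argument defaults to /proc/modules; it is a parameter only so
# the helper can be exercised against a sample file.

# module_loaded NAME [MODULES_FILE]
module_loaded() {
    local name=$1 file=${2:-/proc/modules}
    # Each line starts with the module name followed by a space.
    grep -q "^${name} " "$file" 2>/dev/null
}
```

On the load balancer this could be used as `module_loaded ip_vs || modprobe ip_vs`.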

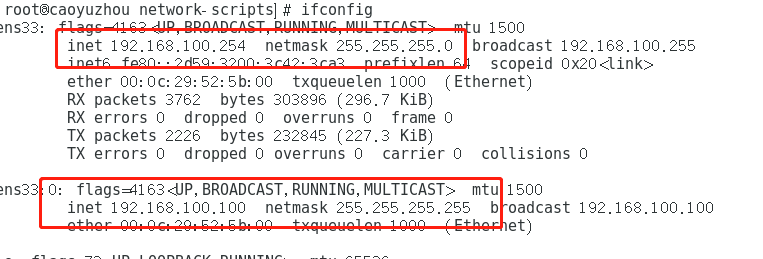

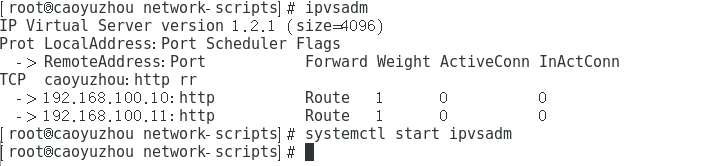

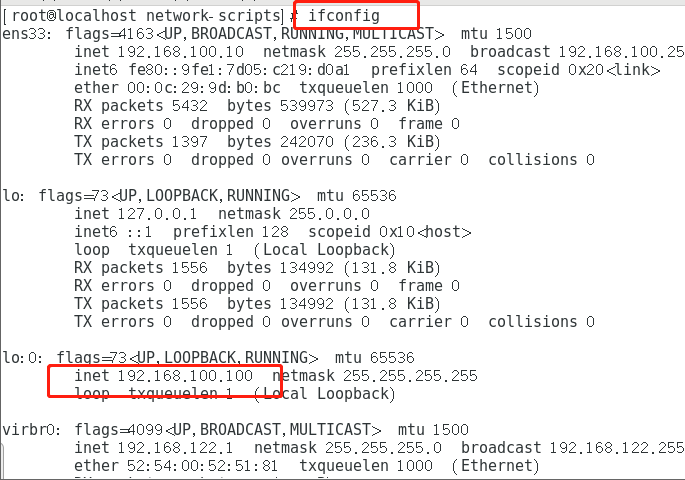

Configure the VIP address (192.168.100.100) on the virtual interface ens33:0

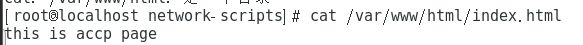

cd /etc/sysconfig/network-scripts

cp ifcfg-ens33{,:0}

vim ifcfg-ens33:0

NAME=ens33:0

DEVICE=ens33:0

ONBOOT=yes

IPADDR=192.168.100.100

NETMASK=255.255.255.255

Then start the interface:

ifup ens33:0
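For repeatable setups, the same minimal file can be generated instead of edited by hand. This is a hypothetical helper, not part of the original procedure: unlike `cp ifcfg-ens33{,:0}`, it writes only the five keys listed above, so any other keys that ifcfg-ens33 may contain (such as BOOTPROTO) are not carried over.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a minimal ifcfg file for a VIP alias.
# Unlike "cp ifcfg-ens33{,:0}", it writes only the keys edited above, so
# anything else present in ifcfg-ens33 is not carried over.

# write_vip_ifcfg DIR IFACE VIP
write_vip_ifcfg() {
    local dir=$1 iface=$2 vip=$3
    cat > "$dir/ifcfg-$iface" <<EOF
NAME=$iface
DEVICE=$iface
ONBOOT=yes
IPADDR=$vip
NETMASK=255.255.255.255
EOF
}

# Dry run into a scratch directory instead of /etc/sysconfig/network-scripts.
demo_dir=$(mktemp -d)
write_vip_ifcfg "$demo_dir" ens33:0 192.168.100.100
cat "$demo_dir/ifcfg-ens33:0"
```

On the director itself the call would be `write_vip_ifcfg /etc/sysconfig/network-scripts ens33:0 192.168.100.100` followed by `ifup ens33:0`.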

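Then adjust the kernel parameters under /proc: the Director does not need to route traffic for other hosts, so IP forwarding is turned off, and ICMP redirects are disabled so that the Director does not send redirect messages to hosts on the shared segment.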

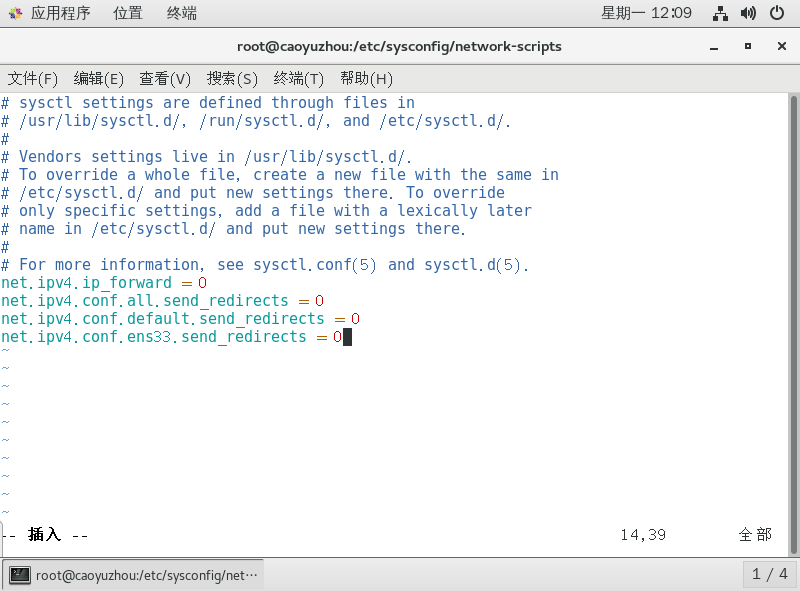

vim /etc/sysctl.conf

net.ipv4.ip_forward = 0

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

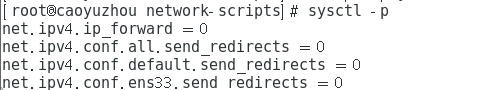

sysctl -p # load kernel parameters
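Before running `sysctl -p`, it can help to confirm the file really contains the intended values. The helper below is a hypothetical sketch: it skips lines starting with `#` or `;`, ignores whitespace, and takes the last assignment of each key in the file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify that a sysctl.conf-style file sets each
# KEY to the expected VALUE (the last assignment in the file wins here).

# check_sysctl_file FILE KEY=VALUE...
check_sysctl_file() {
    local file=$1 pair key want got rc=0
    shift
    for pair in "$@"; do
        key=${pair%%=*}
        want=${pair#*=}
        # Skip comment lines, strip blanks, keep the last value of KEY.
        got=$(awk -F'=' -v k="$key" '
            /^[ \t]*[#;]/ { next }
            { gsub(/[ \t]/, "") }
            $1 == k { v = $2 }
            END { print v }' "$file")
        if [ "$got" != "$want" ]; then
            echo "MISMATCH $key: expected $want, found ${got:-<unset>}"
            rc=1
        fi
    done
    return "$rc"
}
```

For the Director this would be called as `check_sysctl_file /etc/sysctl.conf net.ipv4.ip_forward=0 net.ipv4.conf.all.send_redirects=0 net.ipv4.conf.default.send_redirects=0 net.ipv4.conf.ens33.send_redirects=0`; the values active in the running kernel can be read with `sysctl -n <key>`.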

Then configure the load distribution policy with ipvsadm:

ipvsadm-save > /etc/sysconfig/ipvsadm   # without this file, an error will be reported

ipvsadm -C   # clear existing rules

ipvsadm -A -t 192.168.100.100:80 -s rr   # add the virtual server 192.168.100.100:80 with round-robin (rr) scheduling

ipvsadm -a -t 192.168.100.100:80 -r 192.168.100.10:80 -g   # add real server (RIP) 192.168.100.10:80 in DR mode (-g)

ipvsadm -a -t 192.168.100.100:80 -r 192.168.100.11:80 -g   # add real server (RIP) 192.168.100.11:80 in DR mode (-g)

ipvsadm   # view node status
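The rotation that `-s rr` produces can be pictured with a tiny loop. This is a toy illustration under simplifying assumptions, not ipvsadm code: real IPVS schedules each new connection and keeps connection state, while this sketch only prints the order in which the two real servers would be chosen.

```bash
#!/usr/bin/env bash
# Toy illustration of round-robin ("-s rr") scheduling: new connections go
# to the real servers in turn. Real IPVS schedules per connection and keeps
# more state; this only shows the rotation order.

# rr_schedule N RIP...
rr_schedule() {
    local n=$1 i
    shift
    local rips=("$@")
    local count=${#rips[@]}
    (( count > 0 )) || return 1   # nothing to schedule onto
    for ((i = 0; i < n; i++)); do
        echo "connection $((i + 1)) -> ${rips[i % count]}"
    done
}

rr_schedule 4 192.168.100.10:80 192.168.100.11:80
```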

Then deploy shared storage on the NFS server.

See the previous article for details

Configure the node servers (192.168.100.10 and 192.168.100.11):

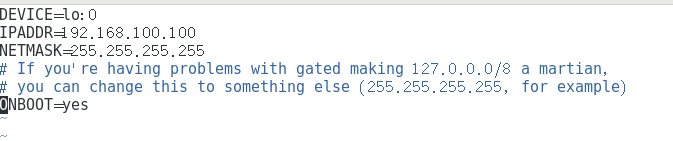

cd /etc/sysconfig/network-scripts

cp ifcfg-lo{,:0}

vim ifcfg-lo:0

DEVICE=lo:0

IPADDR=192.168.100.100

NETMASK=255.255.255.255

ONBOOT=yes

ifup lo:0

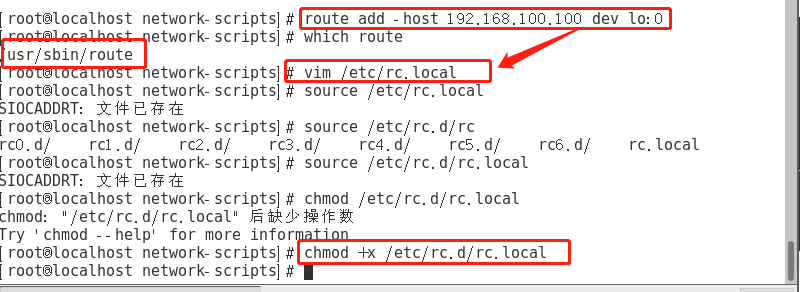

route add -host 192.168.100.100 dev lo:0   # add a host route for the VIP via lo:0

which route   # check the path of the route command: /usr/sbin/route

vim /etc/rc.local   # write the route into the startup script so it survives a reboot:

/usr/sbin/route add -host 192.168.100.100 dev lo:0

chmod +x /etc/rc.d/rc.local
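When the node setup is re-run, editing rc.local by hand can leave duplicate route lines. The helper below is a hypothetical sketch that appends a line only if an identical line is not already present; it is tried against a scratch file here rather than /etc/rc.d/rc.local.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a line to a startup script only once, so that
# re-running the setup does not add duplicate route commands to rc.local.

# add_line_once FILE LINE
add_line_once() {
    local file=$1 line=$2
    touch "$file"
    # -x: whole-line match, -F: fixed string, -q: no output
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Dry run against a scratch file instead of /etc/rc.d/rc.local.
demo_rc=$(mktemp)
add_line_once "$demo_rc" "/usr/sbin/route add -host 192.168.100.100 dev lo:0"
add_line_once "$demo_rc" "/usr/sbin/route add -host 192.168.100.100 dev lo:0"
cat "$demo_rc"   # the route line appears once
```

On a node server the target file would be /etc/rc.d/rc.local, which still needs `chmod +x` to run at boot.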

Then adjust the kernel ARP parameters.

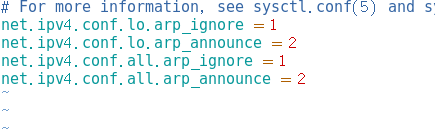

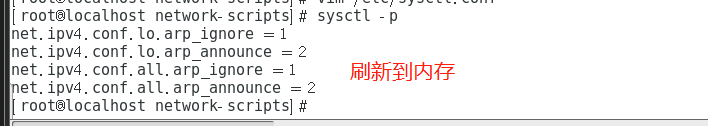

vim /etc/sysctl.conf

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2

sysctl -p   # load the settings into the running kernel

arp_ignore = 1 makes the server reply only to ARP requests whose target IP address is configured on the network card that received the request, so it stays silent for the VIP held on lo. arp_announce = 2 makes it always use the best local address of the sending network card as the source of its ARP requests, instead of the IP packet's source address. The arp_ignore and arp_announce parameters exist under all, default, lo, eth0 and so on, one set per network card. When the value under all and the value for a specific network card differ, the larger value takes effect. Generally, you only need to set the parameters for all and for the specific network card.
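To confirm which value is actually in effect on a node, the "larger value wins" rule can be applied to the live values under /proc/sys/net/ipv4/conf. This is a hypothetical helper; it assumes the node's network card is named ens33, and the optional root directory argument exists only so it can be tested against sample files.

```bash
#!/usr/bin/env bash
# Print the arp_ignore / arp_announce value the kernel applies on an
# interface: the larger of conf/all/<param> and conf/<iface>/<param>.
# The root directory is a parameter only so the helper can be tested.

# effective_arp_param PARAM IFACE [CONF_ROOT]
effective_arp_param() {
    local param=$1 iface=$2 root=${3:-/proc/sys/net/ipv4/conf}
    local all_v iface_v
    all_v=$(< "$root/all/$param") || return
    iface_v=$(< "$root/$iface/$param") || return
    echo $(( all_v > iface_v ? all_v : iface_v ))
}
```

After `sysctl -p` on a node, `effective_arp_param arp_ignore ens33` and `effective_arp_param arp_announce ens33` should report at least 1 and 2 respectively.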

Mount the shared directory (refer to the previous NAT-mode article for details)

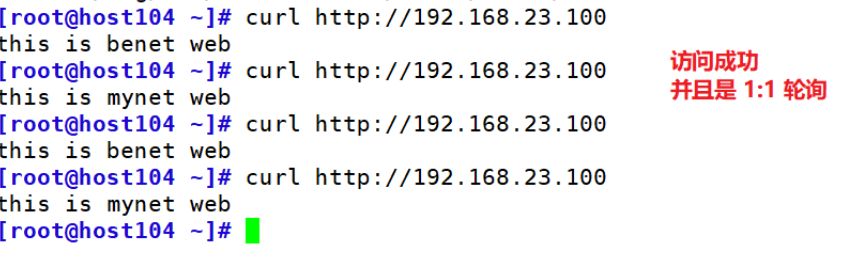

Test: