Hadoop RPC is divided into four parts:

- Serialization layer: converts structured objects into byte streams, either for transmission over the network or for writing to persistent storage. In an RPC framework, it is mainly used to turn the parameters of a request, and the response, into byte streams that can travel between machines.

- Function call layer: locates the function to be called and executes it. Hadoop RPC implements function calls with Java reflection and dynamic proxies.

- Network transport layer: describes how messages are transmitted between Client and Server. Hadoop RPC uses TCP/IP sockets.

- Server-side processing framework: can be abstracted as a network I/O model, which describes how the client and the server exchange information. Its design directly determines how many concurrent requests the server can handle. Hadoop RPC uses an event-driven I/O model based on the Reactor design pattern.
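To make the serialization layer concrete, here is a minimal sketch that mirrors the two-method contract of Hadoop's org.apache.hadoop.io.Writable interface, write(DataOutput) and readFields(DataInput). It assumes a local stand-in interface so it runs without Hadoop on the classpath; WritableLike, CallRecord and SerializationDemo are hypothetical names, not Hadoop classes.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;

class SerializationDemo {
  // Sender side: serialize an object into the bytes that would go on the wire.
  static byte[] toBytes(WritableLike w) throws IOException {
    ByteArrayOutputStream buffer = new ByteArrayOutputStream();
    try (DataOutputStream out = new DataOutputStream(buffer)) {
      w.write(out);
    }
    return buffer.toByteArray();
  }

  // Receiver side: create an empty object and fill it from the bytes.
  static CallRecord fromBytes(byte[] bytes) throws IOException {
    CallRecord record = new CallRecord();
    record.readFields(new DataInputStream(new ByteArrayInputStream(bytes)));
    return record;
  }

  public static void main(String[] args) throws IOException {
    byte[] wire = toBytes(new CallRecord("ping", 42L));
    CallRecord copy = fromBytes(wire);
    // prints "ping 42 (14 bytes)": 2-byte length + 4 chars for the string, 8 for the long
    System.out.println(copy.methodName + " " + copy.argument + " (" + wire.length + " bytes)");
  }
}

// Hypothetical stand-in for Hadoop's Writable contract: an object writes its
// own fields to a binary stream and reads them back in the same order.
interface WritableLike {
  void write(DataOutput out) throws IOException;
  void readFields(DataInput in) throws IOException;
}

// Hypothetical request record: a method name plus one long argument.
class CallRecord implements WritableLike {
  String methodName = "";
  long argument;

  CallRecord() {}  // no-arg constructor so the receiver can create an empty instance

  CallRecord(String methodName, long argument) {
    this.methodName = methodName;
    this.argument = argument;
  }

  @Override
  public void write(DataOutput out) throws IOException {
    out.writeUTF(methodName);  // 2-byte length prefix + modified UTF-8 bytes
    out.writeLong(argument);   // 8 bytes, big-endian
  }

  @Override
  public void readFields(DataInput in) throws IOException {
    methodName = in.readUTF();
    argument = in.readLong();
  }
}
```

The receiver must read fields in exactly the order the sender wrote them; the byte stream carries no field names, which keeps it compact.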

Analysis of the Hadoop RPC framework

Basic concepts of RPC

RPC usually follows the client/server model: the requester is the client and the service provider is the server. A typical RPC framework consists of the following parts:

- Communication module: implements the request-response protocol and does not otherwise process the data packets. A request-response protocol can be implemented synchronously or asynchronously. In synchronous mode, the client blocks until the server's response arrives. In asynchronous mode, the client does not block and wait; the server notifies it when the response is ready. In high-concurrency scenarios, asynchronous mode can reduce access latency and improve bandwidth utilization.

- Stub program: can be regarded as a proxy that makes remote function calls transparent to user programs. On the client side, it encodes the request and sends it to the server through the network module, then decodes the result when the server's response arrives. On the server side, it decodes the parameters in the request message, calls the corresponding service procedure, and encodes the return value into the response.

- Scheduler: receives request messages from the communication module and, based on the identifier each message carries, selects a Stub program to process it. When the volume of concurrent client requests is large, a thread pool is generally used to improve processing efficiency.

- Client program / service procedure: the sender and the handler of a request, respectively. In a stand-alone environment, the client program can call the service procedure directly as a local function. In a distributed environment, however, network communication must be handled, so the communication module and Stub programs are added (to keep the function call transparent).
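The synchronous and asynchronous modes and the thread-pool scheduler described above can be sketched with the JDK's CompletableFuture. This is a simplified, in-process sketch, assuming a plain Function stands in for each Stub program and no real network is involved; Scheduler, register, dispatch and callSync are hypothetical names, not Hadoop APIs.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Function;

class SchedulerDemo {
  // Hypothetical scheduler: picks a stub by identifier and runs it on a thread pool.
  static class Scheduler implements AutoCloseable {
    private final Map<String, Function<String, String>> stubs = new ConcurrentHashMap<>();
    private final ExecutorService pool;

    Scheduler(int threads) {
      pool = Executors.newFixedThreadPool(threads);
    }

    void register(String id, Function<String, String> stub) {
      stubs.put(id, stub);
    }

    // Returns immediately; the future completes once a pool thread has run the stub.
    CompletableFuture<String> dispatch(String id, String request) {
      Function<String, String> stub = stubs.get(id);
      if (stub == null) {
        CompletableFuture<String> failed = new CompletableFuture<>();
        failed.completeExceptionally(new IllegalArgumentException("no stub for " + id));
        return failed;
      }
      return CompletableFuture.supplyAsync(() -> stub.apply(request), pool);
    }

    @Override
    public void close() {
      pool.shutdown();  // lets queued requests finish, then stops the threads
    }
  }

  // Synchronous mode: the caller blocks until the response arrives.
  static String callSync(Scheduler scheduler, String id, String request) {
    return scheduler.dispatch(id, request).join();
  }

  public static void main(String[] args) {
    try (Scheduler scheduler = new Scheduler(4)) {
      scheduler.register("upper", String::toUpperCase);

      System.out.println(callSync(scheduler, "upper", "sync call"));  // SYNC CALL

      // Asynchronous mode: the caller registers a callback instead of blocking;
      // the callback runs as soon as the response is ready.
      CompletableFuture<Void> notified = scheduler.dispatch("upper", "async call")
          .thenAccept(reply -> System.out.println("notified: " + reply));
      // ... the caller is free to do other work here ...
      notified.join();  // only so the demo does not exit before the callback runs
    }
  }
}
```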

Steps from sending an RPC request to obtaining the result:

- The client program locally calls the Stub program generated by the system.

- The Stub program packs the function call information into a message in the format required by the network communication module, and hands it to the communication module, which sends it to the remote server.

- After receiving the message, the remote server passes it to the corresponding Stub program.

- The Stub program unpacks the message, converts it into the form required by the called procedure, and calls the corresponding function.

- The called function executes with the received parameters and returns the result to the Stub program.

- The Stub program packs the result into a message and sends it back, layer by layer, through the network communication module to the client program.
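The steps above can be traced inside one JVM. In this minimal sketch a byte array stands in for the network, the client-side Stub is a java.lang.reflect.Proxy (the JDK dynamic-proxy mechanism mentioned for the function call layer), and the server-side Stub decodes the message and invokes the target through reflection. Calculator, ServerStub, encodeCall and clientProxy are hypothetical names, and only long arguments and results are supported to keep the message format short.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;

class StubFlowDemo {
  // Hypothetical service interface known to both client and server.
  interface Calculator {
    long add(long a, long b);
  }

  // The service procedure that actually does the work on the server.
  static class CalculatorImpl implements Calculator {
    public long add(long a, long b) {
      return a + b;
    }
  }

  // Server stub: unpacks the message, calls the target by reflection, packs the result.
  static class ServerStub {
    private final Object target;

    ServerStub(Object target) {
      this.target = target;
    }

    byte[] handle(byte[] request) throws Exception {
      DataInputStream in = new DataInputStream(new ByteArrayInputStream(request));
      String methodName = in.readUTF();
      int argc = in.readInt();
      Class<?>[] types = new Class<?>[argc];
      Object[] args = new Object[argc];
      for (int i = 0; i < argc; i++) {
        types[i] = long.class;  // this sketch only supports long parameters
        args[i] = in.readLong();
      }
      Method method = target.getClass().getMethod(methodName, types);
      long result = (Long) method.invoke(target, args);
      ByteArrayOutputStream buffer = new ByteArrayOutputStream();
      new DataOutputStream(buffer).writeLong(result);
      return buffer.toByteArray();
    }
  }

  // Client stub, part 1: pack the method name and arguments into a message.
  static byte[] encodeCall(Method method, Object[] args) throws IOException {
    ByteArrayOutputStream buffer = new ByteArrayOutputStream();
    DataOutputStream out = new DataOutputStream(buffer);
    out.writeUTF(method.getName());
    int argc = (args == null) ? 0 : args.length;
    out.writeInt(argc);
    for (int i = 0; i < argc; i++) {
      out.writeLong((Long) args[i]);
    }
    return buffer.toByteArray();
  }

  // Client stub, part 2: a dynamic proxy that makes the remote call look local.
  static Calculator clientProxy(ServerStub server) {
    return (Calculator) Proxy.newProxyInstance(
        Calculator.class.getClassLoader(),
        new Class<?>[] {Calculator.class},
        (proxy, method, args) -> {
          byte[] request = encodeCall(method, args);  // pack the call into a message
          byte[] response = server.handle(request);   // "network" hop: the server stub runs it
          return new DataInputStream(new ByteArrayInputStream(response)).readLong();  // unpack
        });
  }

  public static void main(String[] args) {
    Calculator calc = clientProxy(new ServerStub(new CalculatorImpl()));
    System.out.println(calc.add(2, 3));  // looks like a local call; prints 5
  }
}
```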

Hadoop RPC basic framework

Hadoop RPC usage

Two interfaces

- public static VersionedProtocol getProxy()/waitForProxy(): constructs a client proxy object that sends RPC requests to the server.

- public static Server getServer(): constructs a server object for a protocol instance; the server processes requests sent by clients.

Steps for using Hadoop RPC:

- Step 1: define the RPC protocol. The RPC protocol is the communication interface between client and server; it defines the services the server provides.

```java
/**
 * Superclass of all protocols that use Hadoop RPC.
 * Subclasses of this interface are also supposed to have
 * a static final long versionID field.
 */
public interface VersionedProtocol {
  // Method declarations (such as getProtocolVersion) omitted.
}
```

All custom RPC interfaces in Hadoop must extend the VersionedProtocol interface, which describes the protocol's version information.

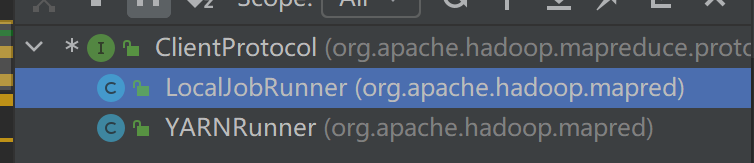
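Because versionID is a static field with a fixed name, each side can read it through reflection and compare versions before talking. The sketch below assumes a local stand-in for VersionedProtocol and a hypothetical PingProtocol; it approximates, in simplified form, the kind of check Hadoop RPC performs when it creates a client proxy (rejecting a server whose version differs from the client's), but VersionCheckDemo, versionOf and checkVersion are not Hadoop methods.

```java
import java.lang.reflect.Field;

class VersionCheckDemo {
  // Local stand-in for Hadoop's VersionedProtocol (no Hadoop dependency).
  interface VersionedProtocol {}

  // Hypothetical protocol that follows the versionID convention.
  interface PingProtocol extends VersionedProtocol {
    long versionID = 3L;  // interface fields are implicitly public static final
    String ping(String message);
  }

  // Read the static versionID field through reflection.
  static long versionOf(Class<? extends VersionedProtocol> protocol) {
    try {
      Field field = protocol.getField("versionID");
      return field.getLong(null);  // null receiver: the field is static
    } catch (NoSuchFieldException | IllegalAccessException e) {
      throw new IllegalArgumentException(protocol.getName() + " has no public versionID", e);
    }
  }

  // Refuse to talk when client and server speak different protocol versions.
  static void checkVersion(Class<? extends VersionedProtocol> protocol, long serverVersion) {
    long clientVersion = versionOf(protocol);
    if (clientVersion != serverVersion) {
      throw new IllegalStateException("Protocol " + protocol.getSimpleName()
          + " version mismatch: client=" + clientVersion + ", server=" + serverVersion);
    }
  }

  public static void main(String[] args) {
    System.out.println(versionOf(PingProtocol.class));  // 3
    checkVersion(PingProtocol.class, 3L);                // same version: no exception
    try {
      checkVersion(PingProtocol.class, 2L);
    } catch (IllegalStateException e) {
      System.out.println(e.getMessage());
    }
  }
}
```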

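For example, look at ClientProtocol, the MapReduce client protocol, which extends VersionedProtocol and records its version history in a comment. Note that the history runs to version 38 while the declared versionID is 37L; it is the versionID constant, not the comment, that Hadoop RPC reads when checking versions.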

```java
public interface ClientProtocol extends VersionedProtocol {
  /*
   * Changing the versionID to 2L since the getTaskCompletionEvents method has
   * changed.
   * Changed to 4 since killTask(String,boolean) is added
   * Version 4: added jobtracker state to ClusterStatus
   * Version 5: max_tasks in ClusterStatus is replaced by
   *            max_map_tasks and max_reduce_tasks for HADOOP-1274
   * Version 6: change the counters representation for HADOOP-2248
   * Version 7: added getAllJobs for HADOOP-2487
   * Version 8: change {job|task}id's to use corresponding objects rather than strings.
   * Version 9: change the counter representation for HADOOP-1915
   * Version 10: added getSystemDir for HADOOP-3135
   * Version 11: changed JobProfile to include the queue name for HADOOP-3698
   * Version 12: Added getCleanupTaskReports and
   *             cleanupProgress to JobStatus as part of HADOOP-3150
   * Version 13: Added getJobQueueInfos and getJobQueueInfo(queue name)
   *             and getAllJobs(queue) as a part of HADOOP-3930
   * Version 14: Added setPriority for HADOOP-4124
   * Version 15: Added KILLED status to JobStatus as part of HADOOP-3924
   * Version 16: Added getSetupTaskReports and
   *             setupProgress to JobStatus as part of HADOOP-4261
   * Version 17: getClusterStatus returns the amount of memory used by
   *             the server. HADOOP-4435
   * Version 18: Added blacklisted trackers to the ClusterStatus
   *             for HADOOP-4305
   * Version 19: Modified TaskReport to have TIP status and modified the
   *             method getClusterStatus() to take a boolean argument
   *             for HADOOP-4807
   * Version 20: Modified ClusterStatus to have the tasktracker expiry
   *             interval for HADOOP-4939
   * Version 21: Modified TaskID to be aware of the new TaskTypes
   * Version 22: Added method getQueueAclsForCurrentUser to get queue acls info
   *             for a user
   * Version 23: Modified the JobQueueInfo class to include queue state.
   *             Part of HADOOP-5913.
   * Version 24: Modified ClusterStatus to include BlackListInfo class which
   *             encapsulates reasons and report for blacklisted node.
   * Version 25: Added fields to JobStatus for HADOOP-817.
   * Version 26: Added properties to JobQueueInfo as part of MAPREDUCE-861.
   *             added new api's getRootQueues and
   *             getChildQueues(String queueName)
   * Version 27: Changed protocol to use new api objects. And the protocol is
   *             renamed from JobSubmissionProtocol to ClientProtocol.
   * Version 28: Added getJobHistoryDir() as part of MAPREDUCE-975.
   * Version 29: Added reservedSlots, runningTasks and totalJobSubmissions
   *             to ClusterMetrics as part of MAPREDUCE-1048.
   * Version 30: Job submission files are uploaded to a staging area under
   *             user home dir. JobTracker reads the required files from the
   *             staging area using user credentials passed via the rpc.
   * Version 31: Added TokenStorage to submitJob
   * Version 32: Added delegation tokens (add, renew, cancel)
   * Version 33: Added JobACLs to JobStatus as part of MAPREDUCE-1307
   * Version 34: Modified submitJob to use Credentials instead of TokenStorage.
   * Version 35: Added the method getQueueAdmins(queueName) as part of
   *             MAPREDUCE-1664.
   * Version 36: Added the method getJobTrackerStatus() as part of
   *             MAPREDUCE-2337.
   * Version 37: More efficient serialization format for framework counters
   *             (MAPREDUCE-901)
   * Version 38: Added getLogFilePath(JobID, TaskAttemptID) as part of
   *             MAPREDUCE-3146
   */
  public static final long versionID = 37L;

  // ... protocol methods omitted ...
}
```

- Step 2: implement the RPC protocol. A Hadoop RPC protocol is usually a Java interface, and the user must write a class that implements it.

For example, Hadoop itself provides implementation classes for ClientProtocol.

- Step 3: construct and start the RPC server. Use the static method getServer() to construct an RPC Server, then call its start() method to start it.

```java
// The fifth argument (false) is the verbose flag; when true, each call is logged.
server = RPC.getServer(new ClientProtocolImpl(), serverHost, serverPort, numHandlers, false, conf);
server.start();
```

serverHost and serverPort are the server's host and listening port, and numHandlers is the number of server-side threads that process requests. At this point the server is listening and waiting for client requests.
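To see what numHandlers controls, here is a simplified sketch of a server whose calls wait in a queue until one of a fixed number of handler threads picks them up. It assumes an in-process BlockingQueue instead of sockets; Hadoop's ipc Server is also organized around a call queue drained by handler threads, but CallQueueServer, Call and submit are hypothetical names, not its API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.function.Supplier;

class CallQueueServer {
  // A queued call: the work to run and a future the caller waits on for the reply.
  static final class Call {
    final Supplier<Object> work;
    final CompletableFuture<Object> reply = new CompletableFuture<>();

    Call(Supplier<Object> work) {
      this.work = work;
    }
  }

  private final BlockingQueue<Call> callQueue;
  private final List<Thread> handlers = new ArrayList<>();

  CallQueueServer(int numHandlers, int queueCapacity) {
    callQueue = new ArrayBlockingQueue<>(queueCapacity);
    for (int i = 0; i < numHandlers; i++) {
      Thread handler = new Thread(this::handleCalls, "handler-" + i);
      handler.setDaemon(true);  // do not keep the JVM alive once main returns
      handlers.add(handler);
    }
  }

  void start() {
    handlers.forEach(Thread::start);
  }

  void stop() {
    handlers.forEach(Thread::interrupt);
  }

  // Stand-in for the listener: enqueue a call; blocks if the queue is full.
  CompletableFuture<Object> submit(Supplier<Object> work) throws InterruptedException {
    Call call = new Call(work);
    callQueue.put(call);
    return call.reply;
  }

  // Body of every handler thread: take a call, run it, complete its reply.
  private void handleCalls() {
    try {
      while (true) {
        Call call = callQueue.take();  // waits until a call is available
        try {
          call.reply.complete(call.work.get());
        } catch (RuntimeException e) {
          call.reply.completeExceptionally(e);
        }
      }
    } catch (InterruptedException e) {
      // stop() interrupted this thread: leave the loop and let the thread end
    }
  }

  public static void main(String[] args) throws Exception {
    CallQueueServer server = new CallQueueServer(3, 100);  // numHandlers = 3
    server.start();
    List<CompletableFuture<Object>> replies = new ArrayList<>();
    for (int i = 1; i <= 6; i++) {
      final int n = i;
      replies.add(server.submit(() -> Thread.currentThread().getName() + ": " + n + "^2 = " + (n * n)));
    }
    for (CompletableFuture<Object> reply : replies) {
      System.out.println(reply.get());  // handler names vary from run to run
    }
    server.stop();
  }
}
```

Raising numHandlers lets more calls run at once, while the queue capacity bounds how many calls can wait; once the queue is full, submit blocks the caller.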

- Step 4: construct the RPC client and send RPC requests. Use the static method getProxy() to construct a client proxy object, then call remote methods directly through that proxy.
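Step 4 boils down to a proxy object that turns each method call into a network request. The following self-contained sketch shows the idea over a real TCP socket on the loopback interface, assuming a hypothetical EchoProtocol with a single String method and a made-up wire format (method name, then one UTF string). startServer and getProxy here are illustrative helpers, not Hadoop's RPC.getServer()/RPC.getProxy() signatures.

```java
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

class SocketRpcDemo {
  // Hypothetical protocol shared by client and server.
  interface EchoProtocol {
    String echo(String message);
  }

  // Server: listen on a free loopback port and answer one call per connection.
  static ServerSocket startServer(EchoProtocol impl) throws IOException {
    ServerSocket listener = new ServerSocket(0, 50, InetAddress.getLoopbackAddress());
    Thread acceptor = new Thread(() -> {
      while (!listener.isClosed()) {
        try (Socket socket = listener.accept()) {
          DataInputStream in = new DataInputStream(socket.getInputStream());
          DataOutputStream out = new DataOutputStream(socket.getOutputStream());
          String methodName = in.readUTF();
          String argument = in.readUTF();
          Method method = EchoProtocol.class.getMethod(methodName, String.class);
          out.writeUTF((String) method.invoke(impl, argument));  // dispatch by reflection
          out.flush();
        } catch (Exception e) {
          // listener closed or malformed request: drop this connection and continue
        }
      }
    });
    acceptor.setDaemon(true);
    acceptor.start();
    return listener;
  }

  // Client: a dynamic proxy that sends each call over a fresh TCP connection.
  static EchoProtocol getProxy(int port) {
    return (EchoProtocol) Proxy.newProxyInstance(
        EchoProtocol.class.getClassLoader(),
        new Class<?>[] {EchoProtocol.class},
        (proxy, method, args) -> {
          try (Socket socket = new Socket(InetAddress.getLoopbackAddress(), port)) {
            DataOutputStream out = new DataOutputStream(socket.getOutputStream());
            DataInputStream in = new DataInputStream(socket.getInputStream());
            out.writeUTF(method.getName());
            out.writeUTF((String) args[0]);
            out.flush();
            return in.readUTF();  // blocks until the server replies
          }
        });
  }

  public static void main(String[] args) throws IOException {
    try (ServerSocket server = startServer(message -> "echo: " + message)) {
      EchoProtocol client = getProxy(server.getLocalPort());
      System.out.println(client.echo("hello"));  // prints "echo: hello"
    }
  }
}
```

The server here handles connections one at a time on a single thread, and the client opens a new connection per call. Hadoop RPC instead keeps client connections open and uses the Reactor-based listener and handler threads described earlier, so this sketch only illustrates the proxy-plus-reflection round trip.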

To be continued.

This part is still rather vague; I will come back to it when I have a concrete application scenario.