win 10 anaconda pycharm

Project address: https://github.com/matterport/Mask_RCNN

1. Environment configuration

Reference https://blog.csdn.net/hesongzefairy/article/details/104702119

2. Data set preparation

Create folder dataset

The pic folder contains the original pictures

Then label the picture with labelme, and save the marked json file in the json folder

Then use the labelme provided by labelme_json_to_dataset generates JSON folders. Each JSON folder contains five sub files, and the pick-up folder is stored in labelme_json folder

Statement for batch generation of json folder

#!/usr/bin/env python3

# -*- coding: utf-8 -*-

import os

if __name__ == '__main__':

json_dir = "D:\Desktop\summer\Mask_RCNN-master\dataset\json" #Store the json file marked by labelme

for name in os.listdir(json_dir):

#print(name)

json_path = os.path.join(json_dir,name)

os.system(str("labelme_json_to_dataset " + json_path))

print("success json to dataset: ",json_path)Then label me_ Label in JSON Move PNG to CV2_ In the mask folder (many tutorials on the Internet have the process of processing label.png (16 to 8 bits). This is mainly due to the previous label me version. My 4.5.7 is an OK label me_ json_ to_ Label generated by dataset Png itself is 8 bits, which can be directly used for training)

Label Png unified replication to CV2_ The mask folder uses the following program

from PIL import Image

import numpy as np

import os

n=5 #n is Number of json files

for i in range(n):

# open_path='E:/data_image/new_file/L_train/labelme_json/'+'L'+format(str(i), '0>1s')+'_json'+'/label.png'#File address

open_path = "D:\Desktop\summer\Mask_RCNN-master\dataset\labelme_json/"+format(str(i+1), '0>1s')+'_json'+'/label.png'

# try:

# f=open(open_path)

# f.close()

# except FileNotFoundError:

# continue

img1=Image.open(open_path)#Open image

print(img1)

save_path='D:\Desktop\summer\Mask_RCNN-master\dataset\cv2.mask/'#Save address

# img1.show()

# img=Image.fromarray(np.uint8(img1))#16 bits to 8 bits

img=img1

img.save(os.path.join(save_path,str(i+1)+'.png')) #Save in png format

At this point, the preparation of the dataset is completed

3. Training

The program used for training is in samples/shapes/train_shpes.py file, I directly refer to the online process

# -*- coding: utf-8 -*-

import os

import sys

import random

import math

import re

import time

import numpy as np

import cv2

import matplotlib

import matplotlib.pyplot as plt

import tensorflow as tf

from mrcnn.config import Config

#import utils

from mrcnn import model as modellib,utils

from mrcnn import visualize

import yaml

from mrcnn.model import log

from PIL import Image

import tensorflow as tf

from keras import backend as K

config = tf.ConfigProto()

config.gpu_options.allow_growth=True

sess = tf.Session(config=config)

K.set_session(sess)

#os.environ["CUDA_VISIBLE_DEVICES"] = "0"

# Root directory of the project

ROOT_DIR = os.getcwd()

#ROOT_DIR = os.path.abspath("../")

# Directory to save logs and trained model

MODEL_DIR = os.path.join(ROOT_DIR, "logs")

iter_num=0

# Local path to trained weights file

COCO_MODEL_PATH = os.path.join(ROOT_DIR, "mask_rcnn_coco.h5")

# Download COCO trained weights from Releases if needed

if not os.path.exists(COCO_MODEL_PATH):

utils.download_trained_weights(COCO_MODEL_PATH)

class ShapesConfig(Config):

"""Configuration for training on the toy shapes dataset.

Derives from the base Config class and overrides values specific

to the toy shapes dataset.

"""

# Give the configuration a recognizable name

NAME = "shapes"

# Train on 1 GPU and 8 images per GPU. We can put multiple images on each

# GPU because the images are small. Batch size is 8 (GPUs * images/GPU).

GPU_COUNT = 1

IMAGES_PER_GPU = 1

# Number of classes (including background)

NUM_CLASSES = 1 + 1 # background + 3 shapes

# Use small images for faster training. Set the limits of the small side

# the large side, and that determines the image shape.

IMAGE_MIN_DIM = 128

IMAGE_MAX_DIM = 128

# Use smaller anchors because our image and objects are small

RPN_ANCHOR_SCALES = (8 * 6, 16 * 6, 32 * 6, 64 * 6, 128 * 6) # anchor side in pixels

# Reduce training ROIs per image because the images are small and have

# few objects. Aim to allow ROI sampling to pick 33% positive ROIs.

TRAIN_ROIS_PER_IMAGE = 100

# Use a small epoch since the data is simple

STEPS_PER_EPOCH = 100

# use small validation steps since the epoch is small

VALIDATION_STEPS = 50

config = ShapesConfig()

config.display()

class DrugDataset(utils.Dataset):

# Get the number of instances (objects) in the figure

def get_obj_index(self, image):

n = np.max(image)

return n

# Parse the yaml file obtained in labelme to obtain the instance label corresponding to each layer of the mask

def from_yaml_get_class(self, image_id):

info = self.image_info[image_id]

with open(info['yaml_path']) as f:

temp = yaml.load(f.read(),Loader=yaml.FullLoader)

labels = temp['label_names']

del labels[0]

return labels

# Rewrite draw_mask

def draw_mask(self, num_obj, mask, image,image_id):

#print("draw_mask-->",image_id)

#print("self.image_info",self.image_info)

info = self.image_info[image_id]

#print("info-->",info)

#print("info[width]----->",info['width'],"-info[height]--->",info['height'])

for index in range(num_obj):

for i in range(info['width']):

for j in range(info['height']):

#print("image_id-->",image_id,"-i--->",i,"-j--->",j)

#print("info[width]----->",info['width'],"-info[height]--->",info['height'])

at_pixel = image.getpixel((i, j))

if at_pixel == index + 1:

mask[j, i, index] = 1

return mask

# Rewrite load_shapes, which contains their own categories

# And in self image_ Path and mask are added to info information_ path ,yaml_path

# yaml_pathdataset_root_path = "/tongue_dateset/"

# img_floder = dataset_root_path + "rgb"

# mask_floder = dataset_root_path + "mask"

# dataset_root_path = "/tongue_dateset/"

def load_shapes(self, count, img_floder, mask_floder, imglist, dataset_root_path):

"""Generate the requested number of synthetic images.

count: number of images to generate.

height, width: the size of the generated images.

"""

# Add classes

self.add_class("shapes", 1, "car1")

# self.add_class("shapes", 2, "b")

# self.add_class("shapes", 3, "c")

# self.add_class("shapes", 4, "e")

for i in range(count):

# Get picture width and height

filestr = imglist[i].split(".")[0]

#print(imglist[i],"-->",cv_img.shape[1],"--->",cv_img.shape[0])

#print("id-->", i, " imglist[", i, "]-->", imglist[i],"filestr-->",filestr)

# filestr = filestr.split("_")[1]

mask_path = mask_floder + "/" + filestr + ".png"

yaml_path = dataset_root_path + "labelme_json/" + filestr + "_json/info.yaml"

print(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")

cv_img = cv2.imread(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")

self.add_image("shapes", image_id=i, path=img_floder + "/" + imglist[i],

width=cv_img.shape[1], height=cv_img.shape[0], mask_path=mask_path, yaml_path=yaml_path)

# Rewrite load_mask

def load_mask(self, image_id):

"""Generate instance masks for shapes of the given image ID.

"""

global iter_num

print("image_id",image_id)

info = self.image_info[image_id]

count = 1 # number of object

img = Image.open(info['mask_path'])

num_obj = self.get_obj_index(img)

mask = np.zeros([info['height'], info['width'], num_obj], dtype=np.uint8)

mask = self.draw_mask(num_obj, mask, img,image_id)

occlusion = np.logical_not(mask[:, :, -1]).astype(np.uint8)

for i in range(count - 2, -1, -1):

mask[:, :, i] = mask[:, :, i] * occlusion

occlusion = np.logical_and(occlusion, np.logical_not(mask[:, :, i]))

labels = []

labels = self.from_yaml_get_class(image_id)

labels_form = []

for i in range(len(labels)):

if labels[i].find("car1") != -1:

labels_form.append("car1")

# elif labels[i].find("b") != -1:

# labels_form.append("b")

# elif labels[i].find("c") != -1:

# labels_form.append("c")

# elif labels[i].find("e") != -1:

# labels_form.append("e")

class_ids = np.array([self.class_names.index(s) for s in labels_form])

return mask, class_ids.astype(np.int32)

def get_ax(rows=1, cols=1, size=8):

"""Return a Matplotlib Axes array to be used in

all visualizations in the notebook. Provide a

central point to control graph sizes.

Change the default size attribute to control the size

of rendered images

"""

_, ax = plt.subplots(rows, cols, figsize=(size * cols, size * rows))

return ax

#Basic settings

dataset_root_path="dataset/"

img_floder = dataset_root_path + "pic"

mask_floder = dataset_root_path + "cv2_mask"

#yaml_floder = dataset_root_path

imglist = os.listdir(img_floder)

count = len(imglist)

#train and val dataset preparation

dataset_train = DrugDataset()

dataset_train.load_shapes(count, img_floder, mask_floder, imglist,dataset_root_path)

dataset_train.prepare()

#print("dataset_train-->",dataset_train._image_ids)

dataset_val = DrugDataset()

# dataset_val.load_shapes(7, img_floder, mask_floder, imglist,dataset_root_path)

dataset_val.load_shapes(3, img_floder, mask_floder, imglist,dataset_root_path)

dataset_val.prepare()

#print("dataset_val-->",dataset_val._image_ids)

# Load and display random samples

#image_ids = np.random.choice(dataset_train.image_ids, 4)

#for image_id in image_ids:

# image = dataset_train.load_image(image_id)

# mask, class_ids = dataset_train.load_mask(image_id)

# visualize.display_top_masks(image, mask, class_ids, dataset_train.class_names)

# Create model in training mode

model = modellib.MaskRCNN(mode="training", config=config,

model_dir=MODEL_DIR)

# Which weights to start with?

init_with = "coco" # imagenet, coco, or last

if init_with == "imagenet":

model.load_weights(model.get_imagenet_weights(), by_name=True)

elif init_with == "coco":

# Load weights trained on MS COCO, but skip layers that

# are different due to the different number of classes

# See README for instructions to download the COCO weights

model.load_weights(COCO_MODEL_PATH, by_name=True,

exclude=["mrcnn_class_logits", "mrcnn_bbox_fc",

"mrcnn_bbox", "mrcnn_mask"])

elif init_with == "last":

# Load the last model you trained and continue training

model.load_weights(model.find_last()[1], by_name=True)

# Train the head branches

# Passing layers="heads" freezes all layers except the head

# layers. You can also pass a regular expression to select

# which layers to train by name pattern.

model.train(dataset_train, dataset_val,

learning_rate=config.LEARNING_RATE,

epochs=10,

layers='heads')

# Fine tune all layers

# Passing layers="all" trains all layers. You can also

# pass a regular expression to select which layers to

# train by name pattern.

model.train(dataset_train, dataset_val,

learning_rate=config.LEARNING_RATE / 10,

epochs=30,

layers="all")Several parts need to be modified:

① You need to add fields, otherwise there will be an error

import tensorflow as tf from keras import backend as K config = tf.ConfigProto() config.gpu_options.allow_growth=True sess = tf.Session(config=config) K.set_session(sess)

② Modify num_classes = 1 + the number of categories defined by myself. The bottom two parameters seem to be set according to my own input image. I set the minimum value without understanding it

NUM_CLASSES = 1 + 1 # background + 3 shapes

# Use small images for faster training. Set the limits of the small side

# the large side, and that determines the image shape.

IMAGE_MIN_DIM = 128

IMAGE_MAX_DIM = 128

③ Modify defined categories

# Add classes

self.add_class("shapes", 1, "car1")

# self.add_class("shapes", 2, "b")

# self.add_class("shapes", 3, "c")

# self.add_class("shapes", 4, "e")load_ The category in the mask should also be changed

for i in range(len(labels)):

if labels[i].find("car1") != -1:

labels_form.append("car1")

# elif labels[i].find("b") != -1:

# labels_form.append("b")

# elif labels[i].find("c") != -1:

# labels_form.append("c")

# elif labels[i].find("e") != -1:

# labels_form.append("e")④ Modify the file path in the basic category

#Basic settings dataset_root_path="dataset/" img_floder = dataset_root_path + "pic" mask_floder = dataset_root_path + "cv2_mask" #yaml_floder = dataset_root_path imglist = os.listdir(img_floder) count = len(imglist)

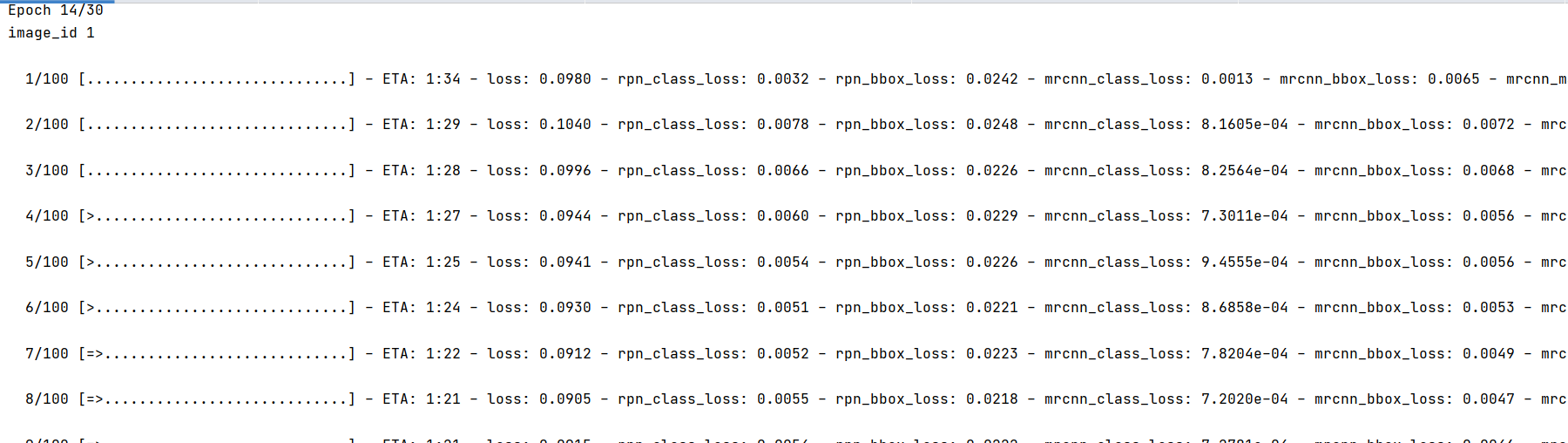

Then run it

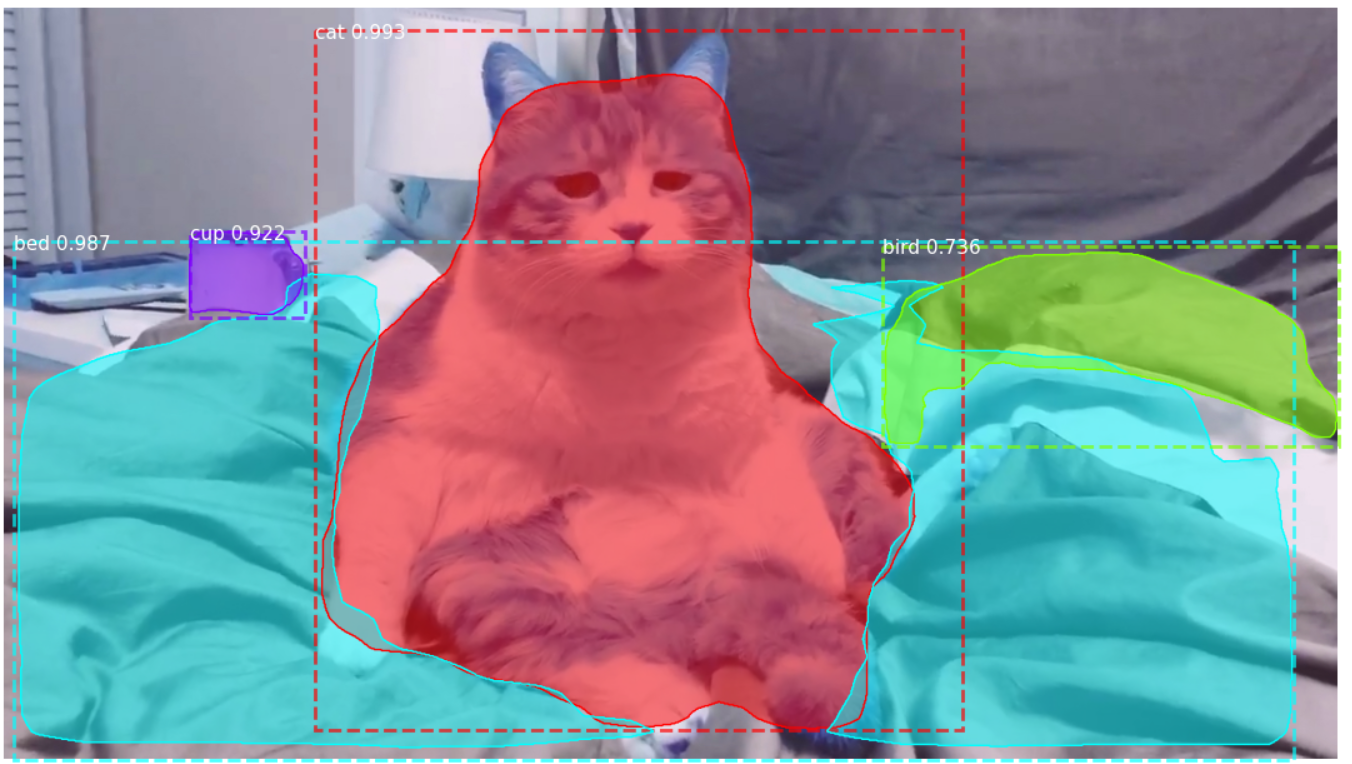

About testing, you can directly demo Ipynb to py file, and then modify the weight file and image path code

Modify weight file path here

# Load weights trained on MS-COCO model.load_weights(COCO_MODEL_PATH, by_name=True)

Modify the path of the picture to be predicted here

# Load a random image from the images folder # file_names = next(os.walk(IMAGE_DIR))[2] # image = skimage.io.imread(os.path.join(IMAGE_DIR, random.choice(file_names))) import cv2 image = cv2.imread(r"D:\Desktop\ririzhu.jpg")

reference resources:

https://blog.csdn.net/hesongzefairy/article/details/104702119

mask_rcnn trains its own data set - an apple - blog Garden (cnblogs.com)