TL;DR

Networking knowledge is broad and varied, and much of it lives in the operating system kernel. I did not start out working on networking, and my kernel knowledge is also weak. But it is exactly these unfamiliar topics that are tempting, and since my current work is increasingly network-related, I am seizing the opportunity to learn.

This article takes the Kubernetes LoadBalancer Service as its starting point, uses MetalLB to implement a load balancer for the cluster, and picks up some networking knowledge while exploring how it works.

MetalLB covers a lot of ground, so this article introduces only the simpler, easier parts, step by step. A follow-up article is planned (the rest is too complex and will wait until I understand it :D).

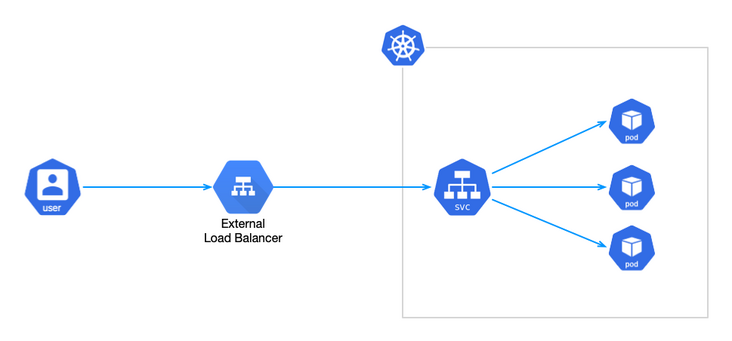

LoadBalancer type Service

Because a Pod's IP address in Kubernetes is not fixed and changes after a restart, it cannot be used as a stable communication address. Kubernetes provides the Service resource to solve this problem and to expose workloads.

A Service gives a group of Pods a single DNS name and virtual IP, along with load balancing. Pods are grouped by labeling them: when a Service is defined, it declares a label selector that associates the Service with the matching group of Pods.
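To make the label-selector idea concrete, here is a minimal Python sketch of equality-based matching. It is only an illustration, not Kubernetes code: the function names `matches` and `select_pods` and the Pod names are hypothetical. The `app` label mirrors what `kubectl create deployment` sets on the Pods it creates.

```python
def matches(selector, labels):
    """Return True when every key/value pair in the selector also appears in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector, pods):
    """Return the names of the Pods whose labels satisfy the selector."""
    return [name for name, labels in pods.items() if matches(selector, labels)]


# Hypothetical Pods: two replicas of one Deployment and one Pod of another.
pods = {
    "nginx-7c5d": {"app": "nginx"},
    "nginx-9f2a": {"app": "nginx"},
    "nginx2-1b3c": {"app": "nginx2"},
}

selected = select_pods({"app": "nginx"}, pods)
print(selected)  # ['nginx-7c5d', 'nginx-9f2a']
```

A Pod may carry extra labels; it is still selected as long as every pair in the selector matches.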

Depending on the usage scenario, Services come in four types: ClusterIP, NodePort, LoadBalancer and ExternalName. The default is ClusterIP. We won't introduce them in detail here. If you are interested, see the official Service documentation.

Except for today's protagonist LoadBalancer, the other three types are commonly used. The official explanation of LoadBalancer is:

Expose services externally using the load balancer of the cloud provider. The external load balancer can route traffic to the automatically created NodePort service and ClusterIP service.

When you see the words "provided by the cloud provider", you often flinch. Sometimes you need a LoadBalancer to verify an externally exposed Service (although, apart from layer-7 Ingress, a NodePort Service would also do), but Kubernetes itself does not ship an implementation. MetalLB, introduced below, is a good choice.

MetalLB introduction

MetalLB is a load balancer implementation for bare-metal Kubernetes clusters, using standard routing protocols.

Note: MetalLB is still in beta.

As mentioned earlier, Kubernetes itself does not provide an implementation of LoadBalancer. Cloud vendors provide their own implementations, but when a cluster is not running in one of those cloud environments, a LoadBalancer Service stays in the Pending state indefinitely (see the Demo below).

MetalLB provides two functions:

- Address assignment: when a LoadBalancer Service is created, MetalLB assigns it an IP address. The address is taken from a preconfigured IP address pool. When the Service is deleted, the assigned address returns to the pool.

- External announcement: after the IP address is allocated, the network outside the cluster needs to learn that the address exists. MetalLB uses standard protocols for this: ARP, NDP or BGP.
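The address-assignment half can be sketched in a few lines of Python. This is a toy model under stated assumptions, not MetalLB's allocator: the class name `AddressPool` and the "lowest free address first" policy are hypothetical, and the range is the one used in the Demo below.

```python
import ipaddress


class AddressPool:
    """Toy address pool: hands out the lowest free address and takes it back on release."""

    def __init__(self, ip_range):
        # Parse a "start-end" range such as "192.168.1.30-192.168.1.49".
        start_text, end_text = ip_range.split("-")
        start = int(ipaddress.IPv4Address(start_text))
        end = int(ipaddress.IPv4Address(end_text))
        self.free = [ipaddress.IPv4Address(n) for n in range(start, end + 1)]
        self.assigned = {}  # service name -> address

    def allocate(self, service):
        """Assign an address to the service, or return None if the pool is exhausted."""
        if service in self.assigned:
            return self.assigned[service]
        if not self.free:
            return None  # the Service would stay Pending
        address = self.free.pop(0)
        self.assigned[service] = address
        return address

    def release(self, service):
        """Return the service's address to the pool when the Service is deleted."""
        address = self.assigned.pop(service, None)
        if address is not None:
            self.free.append(address)
            self.free.sort()


pool = AddressPool("192.168.1.30-192.168.1.49")
first = pool.allocate("default/nginx-lb")
second = pool.allocate("default/nginx2-lb")
print(first, second, len(pool.free))  # 192.168.1.30 192.168.1.31 18

pool.release("default/nginx-lb")
print(pool.free[0])  # 192.168.1.30 is available again
```

The range holds 20 addresses, so at most 20 LoadBalancer Services can hold an address at the same time in this model.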

There are two announcement modes. The first is Layer 2 mode, which uses ARP (IPv4) or NDP (IPv6); the second is BGP.

Today we focus on the simpler Layer 2 mode, which, as the name suggests, works at OSI layer 2.

How it works is analyzed after the Demo. If you can't wait, jump straight to the end.

Runtime

MetalLB runs with two workloads:

- Controller: a Deployment that watches for Service changes and allocates / reclaims IP addresses.

- Speaker: a DaemonSet that announces the IP addresses of Services to the outside.

Demo

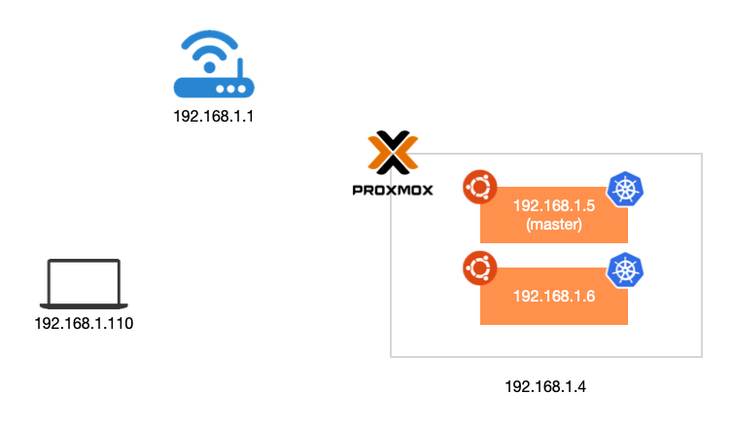

Before installing, a word on the network environment: Kubernetes is installed with K3s in a virtual machine on Proxmox.

Install K3s

When installing K3s, disable its default servicelb with --disable servicelb.

According to the K3s documentation, K3s by default uses the Traefik Ingress controller and the Klipper Service load balancer to expose services.

curl -sfL https://get.k3s.io | sh -s - --disable traefik --disable servicelb --write-kubeconfig-mode 644 --write-kubeconfig ~/.kube/config

Create workload

Using the nginx image, create two workloads:

kubectl create deploy nginx --image nginx:latest --port 80 -n default
kubectl create deploy nginx2 --image nginx:latest --port 80 -n default

Create a Service for each of the two Deployments, choosing LoadBalancer as the type:

kubectl expose deployment nginx --name nginx-lb --port 8080 --target-port 80 --type LoadBalancer -n default
kubectl expose deployment nginx2 --name nginx2-lb --port 8080 --target-port 80 --type LoadBalancer -n default

Check the Services and you will find that their external IP is stuck at pending. This is because we disabled the LoadBalancer implementation when installing K3s:

kubectl get svc -n default
NAME         TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
kubernetes   ClusterIP      10.43.0.1       <none>        443/TCP          14m
nginx-lb     LoadBalancer   10.43.108.233   <pending>     8080:31655/TCP   35s
nginx2-lb    LoadBalancer   10.43.26.30     <pending>     8080:31274/TCP   16s

This is where MetalLB comes in.

Install MetalLB

Install using the officially provided manifests. At the time of writing, the latest version is 0.12.1. Other installation methods are also available, such as Helm, Kustomize or the MetalLB Operator.

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/namespace.yaml
kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/metallb.yaml

kubectl get po -n metallb-system
NAME                          READY   STATUS    RESTARTS   AGE
speaker-98t5t                 1/1     Running   0          22s
controller-66445f859d-gt9tn   1/1     Running   0          22s

At this point the LoadBalancer Services are still Pending. Why? MetalLB needs to assign IP addresses to the Services, but addresses do not come out of thin air: an address pool has to be provided in advance.

Here we use Layer 2 mode and provide an IP range through a ConfigMap:

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.1.30-192.168.1.49

At this time, check the status of the Services again. You can see that MetalLB has assigned IP addresses 192.168.1.30 and 192.168.1.31 to the two Services:

kubectl get svc -n default
NAME         TYPE           CLUSTER-IP      EXTERNAL-IP    PORT(S)          AGE
kubernetes   ClusterIP      10.43.0.1       <none>         443/TCP          28m
nginx-lb     LoadBalancer   10.43.201.249   192.168.1.30   8080:30089/TCP   14m
nginx2-lb    LoadBalancer   10.43.152.236   192.168.1.31   8080:31878/TCP   14m

You can test with the following requests:

curl -I 192.168.1.30:8080
HTTP/1.1 200 OK
Server: nginx/1.21.6
Date: Wed, 02 Mar 2022 15:31:15 GMT
Content-Type: text/html
Content-Length: 615
Last-Modified: Tue, 25 Jan 2022 15:03:52 GMT
Connection: keep-alive
ETag: "61f01158-267"
Accept-Ranges: bytes

curl -I 192.168.1.31:8080
HTTP/1.1 200 OK
Server: nginx/1.21.6
Date: Wed, 02 Mar 2022 15:31:18 GMT
Content-Type: text/html
Content-Length: 615
Last-Modified: Tue, 25 Jan 2022 15:03:52 GMT
Connection: keep-alive
ETag: "61f01158-267"
Accept-Ranges: bytes

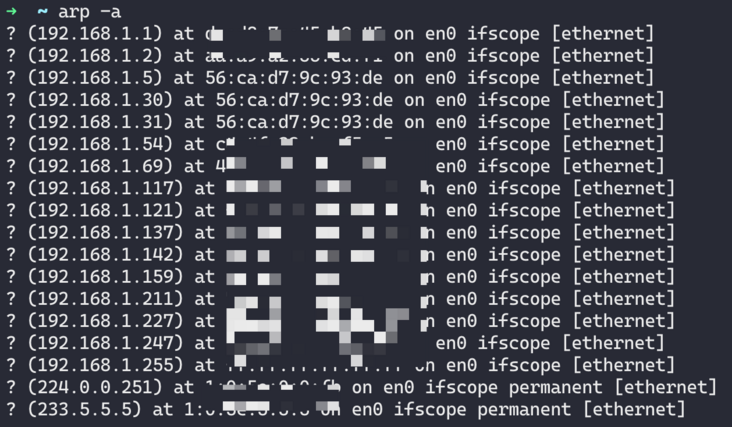

On macOS, run arp -a locally and look through the ARP table for the two IP addresses and their MAC addresses. Both IP addresses map to the same network card as the virtual machine's own IP address. That is, three IPs are bound to en0 of the virtual machine:

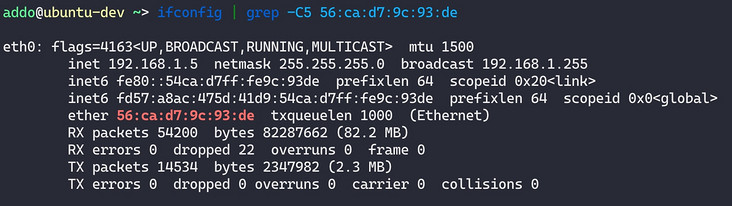

On the virtual machine (the node), check the network card (here you can only see the IP bound by the system itself):

How Layer 2 works

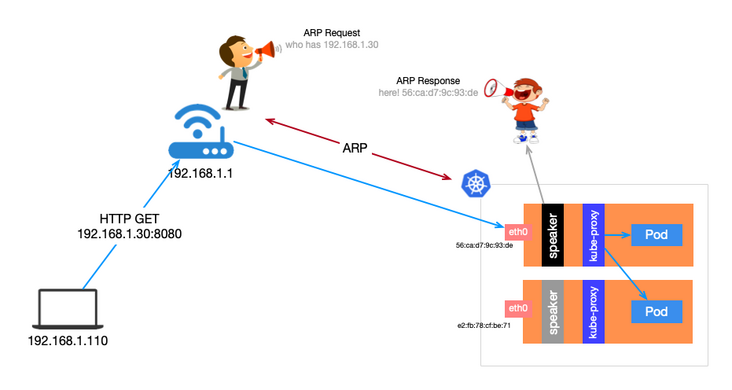

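In Layer 2 mode the Speaker workload is a DaemonSet, so one Pod is scheduled on each node. The Speaker Pods first elect a Leader. The Leader takes the LoadBalancer-type Services and answers for their assigned IP addresses with the MAC address of its host's network card, so from the outside the addresses look as if they were bound to that card. In other words, in a single-node cluster like this demo, the IP addresses of all LoadBalancer-type Services point at the network card of the same node at the same time. (As far as I understand, MetalLB picks the leader per Service IP, so on a multi-node cluster different Services can land on different nodes.)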
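As a rough illustration of how every Speaker could arrive at the same leader without a central coordinator, the Python sketch below has each node rank the known nodes by a hash and pick the first one. Everything here is an assumption made for illustration: the function name `elect_leader`, the node names and the hashing scheme are hypothetical, and MetalLB's real election logic may differ.

```python
import hashlib


def elect_leader(nodes, service_ip):
    """Pick a leader deterministically: rank nodes by a hash of node name and service IP."""
    if not nodes:
        return None

    def rank(node):
        return hashlib.sha256(f"{node}#{service_ip}".encode()).hexdigest()

    return min(nodes, key=rank)


# Hypothetical three-node cluster.
nodes = ["node-a", "node-b", "node-c"]
leader = elect_leader(nodes, "192.168.1.30")

# Every speaker runs the same computation on the same inputs, so all of them
# agree on the result regardless of the order in which they list the nodes.
assert leader == elect_leader(list(reversed(nodes)), "192.168.1.30")

# Failover: once the leader drops out of the node list, the survivors pick a new one.
survivors = [node for node in nodes if node != leader]
new_leader = elect_leader(survivors, "192.168.1.30")
print(leader, new_leader)
```

Because the service IP is part of the hash input, different Services may end up with different leaders in this sketch; hashing the node name alone would give one leader for everything.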

When an external host wants to send a request to a Service in the cluster, it first has to determine the MAC address of the target host's network card (for the reason, see the Address Resolution Protocol article on Wikipedia). It does this by sending an ARP request, and the Leader node responds with its MAC address. The external host caches the answer in its local ARP table and reads it directly from there next time.
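The ARP exchange described above can be simulated in a few lines of Python. This is a simplified model, not a real network stack: the `Host` and `Client` classes, the MAC addresses and the node IP 192.168.1.10 are all hypothetical; only the two Service IPs come from the Demo.

```python
class Host:
    """A machine on the LAN with one MAC address and the IPs it answers ARP for."""

    def __init__(self, mac, ips):
        self.mac = mac
        self.ips = set(ips)

    def handle_arp_request(self, target_ip):
        # Reply with our MAC only if we answer for the requested IP.
        return self.mac if target_ip in self.ips else None


class Client:
    """An external host that resolves IPs to MACs and caches the answers."""

    def __init__(self, lan):
        self.lan = lan
        self.arp_table = {}
        self.broadcasts = 0  # number of ARP requests actually sent

    def resolve(self, ip):
        if ip in self.arp_table:
            return self.arp_table[ip]  # served from the local ARP table
        self.broadcasts += 1  # "who has <ip>?" goes to every host on the LAN
        for host in self.lan:
            mac = host.handle_arp_request(ip)
            if mac is not None:
                self.arp_table[ip] = mac
                return mac
        return None


# The Leader node answers for its own IP and for both Service IPs.
leader_node = Host("aa:bb:cc:dd:ee:01", ["192.168.1.10", "192.168.1.30", "192.168.1.31"])
other_host = Host("aa:bb:cc:dd:ee:02", ["192.168.1.20"])
client = Client([other_host, leader_node])

print(client.resolve("192.168.1.30"))  # first lookup sends an ARP request
print(client.resolve("192.168.1.30"))  # second lookup is served from the ARP table
print(client.resolve("192.168.1.31"))  # a different Service IP, same MAC
print(client.broadcasts)  # 2
```

Both Service IPs resolve to the Leader's MAC address, which matches what arp -a showed in the Demo.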

After the request reaches the node, the node forwards it to a target Pod through kube-proxy, which does the load balancing. Therefore, if the Service is backed by multiple Pods, the request may hop to another host.

Advantages and disadvantages

The advantage is obvious: it is easy to set up (by comparison, BGP mode requires a router that supports BGP). As in the author's environment, the only requirement is that the IP address pool and the cluster are on the same network segment.

Of course, the disadvantages are just as obvious. The bandwidth of the Leader node becomes a bottleneck; at the same time, availability is poor and failover takes 10 seconds (each speaker process has a 10s cycle).

References

- Address resolution protocol
