There are some problems in the traditional master-slave architecture in the previous article (for example, single point of failure, etc.), which will be solved by adding new content in this blog.

1, MHA concept

- MHA (MasterHigh Availability) is a set of excellent software for failover and master-slave replication in MySQL high availability environment.

- The emergence of MHA is to solve the problem of MySQL single point.

- During MySQL failover, MHA can automatically complete the failover operation within 0-30 seconds.

- MHA can ensure the consistency of data to the greatest extent in the process of failover, so as to achieve high availability in the real sense.

1. Composition of MHA

- MHA Node

- The MHA Node runs on each MySQL server.

- MHA Manager (management node)

- MHA Manager can be deployed on an independent machine to manage multiple master slave clusters; It can also be deployed on a slave node.

- The MHA node in the cluster will be detected regularly. When the master fails, it can automatically promote the slave of the latest data to the new master, and then point all other slave to the new master again. The entire failover process is completely transparent to the application.

2. Characteristics of MHA

- In the process of automatic failover, MHA tries to save binary logs from the down main server to ensure no data loss to the greatest extent

- Using semi synchronous replication can greatly reduce the risk of data loss. If only one slave has received the latest binary log, MHA can apply the latest binary log to all other slave servers, so it can ensure the data consistency of all nodes

- At present, MHA supports a master-slave architecture with at least three services, i.e. one master and two slaves

2, Build MySQL+MHA

To install mysql database: Edit and install MySQL shell script

mha4mysql-manager-0.57.tar.gz

mha4mysql-node-0.57.tar.gz

Link: Related installation package

Extraction code: 1234

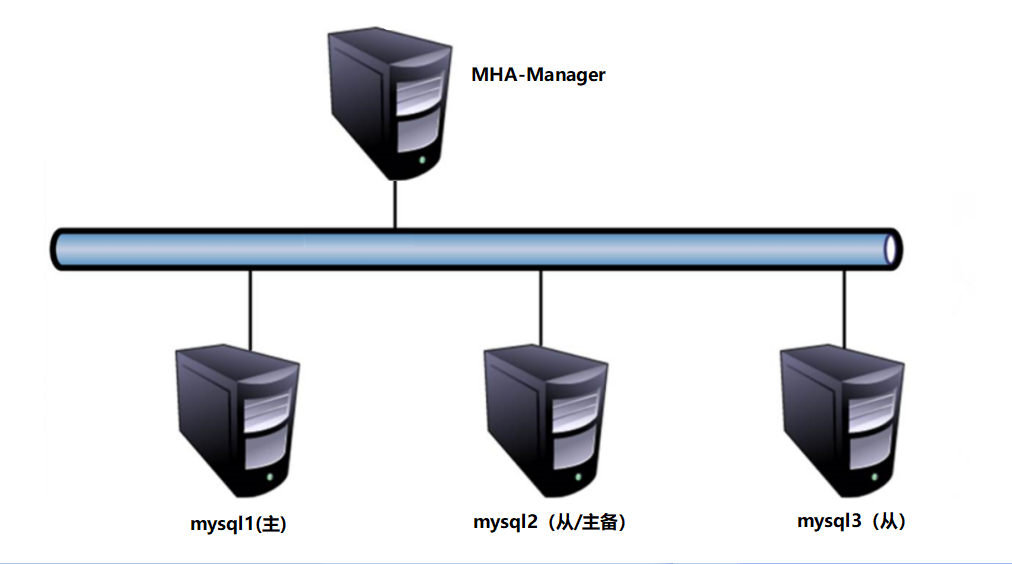

Experimental ideas

1. MHA architecture

- Database installation

- One master and two slaves

- MHA construction

2. Fault simulation

- Simulated main library failure

- The alternative primary library becomes the primary library

- The original fault master database is restored and rejoined to MHA as a slave database

Experimental preparation

| host | operating system | IP address | Required components |

|---|---|---|---|

| MHAmanager | CentOS7 | 192.168.163.10 | MHAnode component, MHAmanager component |

| mysql1 | CentOS7 | 192.168.163.11 | mysql-boost-5.7.20.tar.gz, MHAnode components |

| mysql2 | CentOS7 | 192.168.163.12 | mysql-boost-5.7.20.tar.gz, MHAnode components |

| mysql3 | CentOS7 | 192.168.163.13 | mysql-boost-5.7.20.tar.gz, MHAnode components |

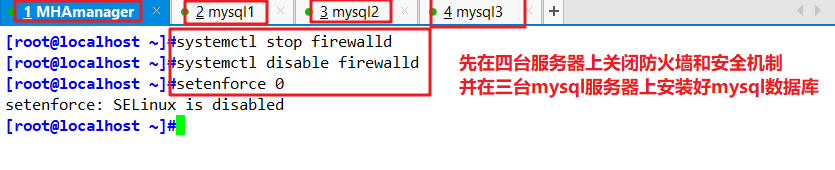

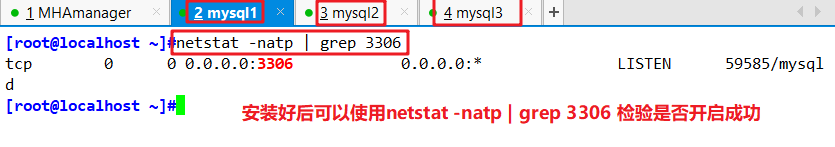

- Now install MySQL on the three MySQL hosts. You can use the script in the link above to install mysql

- Turn off firewalls and security

systemctl stop firewalld systemctl disable firewalld setenforce 0

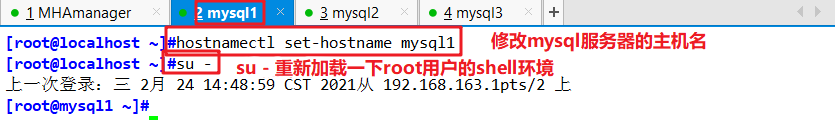

1,modify mysql Hostname of the node

mysql1(192.168.163.11)

hostnamectl set-hostname mysql1 su -

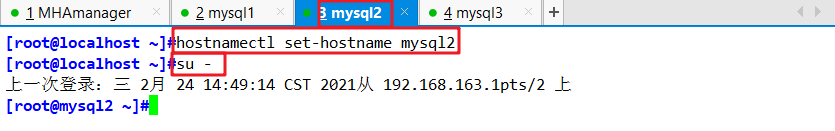

mysql2(192.168.163.12)

hostnamectl set-hostname mysql2 su -

mysql3(192.168.163.13)

hostnamectl set-hostname mysql3 su -

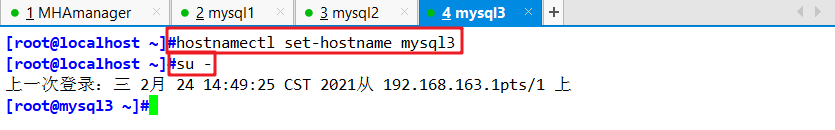

2. Modify the main configuration file / etc / my. Of the three MySQL servers CNF and create a command soft link

mysql1(192.168.163.11)

vim /etc/my.cnf [mysqld] server-id = 11 log_bin = master-bin log-slave-updates = true

systemctl restart mysqld

ln -s /usr/local/mysql/bin/mysql /usr/sbin/

ln -s /usr/local/mysql/bin/mysqlbinlog /usr/sbin/

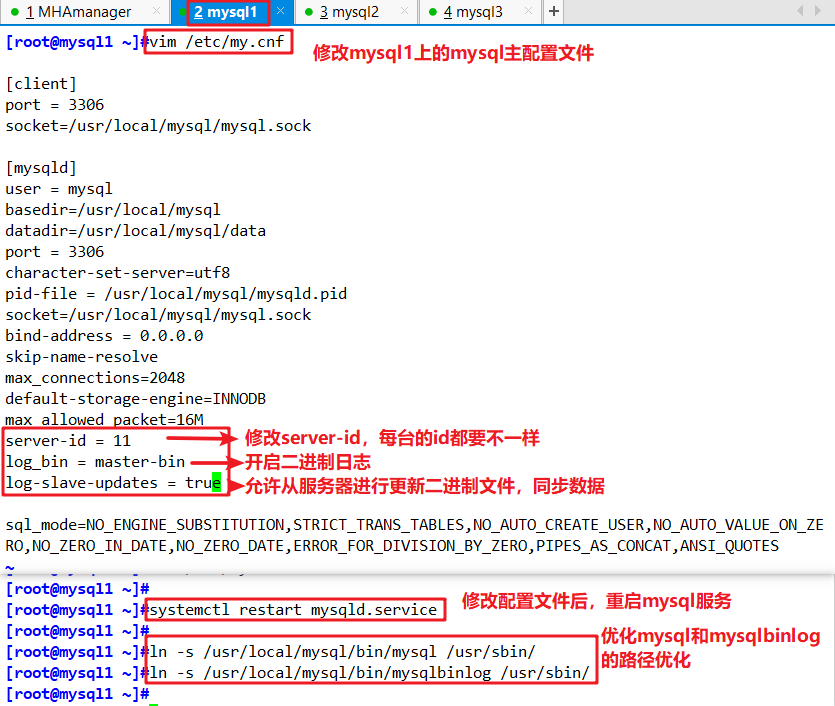

mysql2(192.168.163.12)

mysql3(192.168.163.13)

vim /etc/my.cnf server-id = 12 #If server id = 13, mysql3 is 13. The server IDs of the three servers cannot be the same log_bin = master-bin relay-log = relay-log-bin relay-log-index = slave-relay-bin.index

systemctl restart mysqld

ln -s /usr/local/mysql/bin/mysql /usr/sbin/

ln -s /usr/local/mysql/bin/mysqlbinlog /usr/sbin/

3. Configure MySQL with one master and two slaves

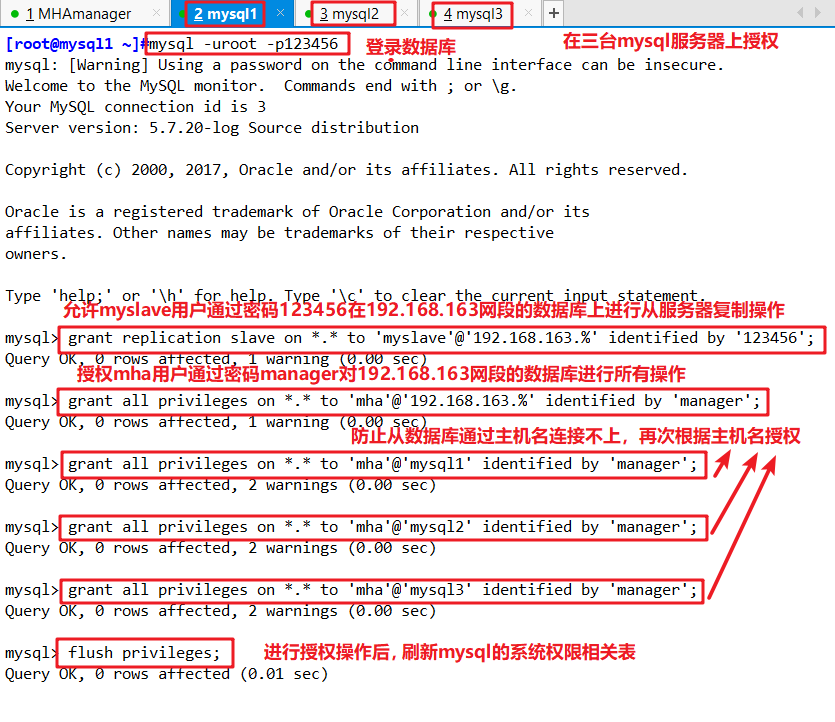

(1) All MySQL servers are authorized to use mysql

mysql1(192.168.163.11)

mysql2(192.168.163.12)

mysql3(192.168.163.13)

mysql -uroot -p123456 grant replication slave on *.* to 'myslave'@'192.168.163.%' identified by '123456'; grant all privileges on *.* to 'mha'@'192.168.163.%' identified by 'manager'; grant all privileges on *.* to 'mha'@'mysql1' identified by 'manager'; grant all privileges on *.* to 'mha'@'mysql2' identified by 'manager'; grant all privileges on *.* to 'mha'@'mysql3' identified by 'manager'; flush privileges;

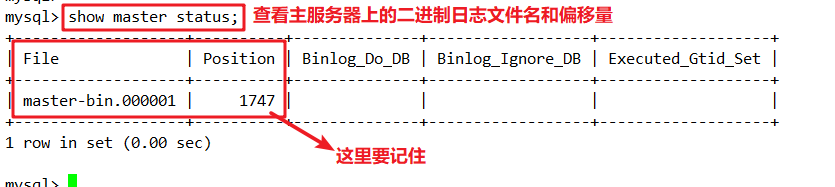

(2) View binaries and synchronization points in the Master node

mysql1(192.168.163.11)

show master status; #Everyone's binary file name or offset may be different. Remember your own

(3) Perform synchronization operations at Slave1 and Slave2 nodes

mysql2(192.168.163.12)

mysql3(192.168.163.13)

change master to master_host='192.168.163.11',master_user='myslave',master_password='123456',master_log_file='master-bin.000001',master_log_pos=1747;

start slave;

show slave status\G

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

#General slave_ IO_ Possibility of running: No:

#The network is blocked

#my.cnf configuration problem

#Incorrect password, file name and pos offset

#The firewall is not turned off

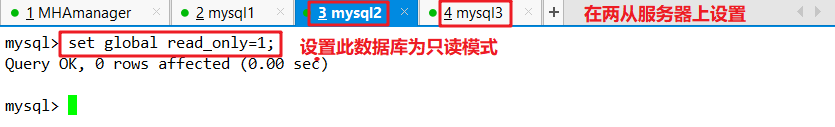

(4) Slave1 and Slave2 nodes are set to read-only mode

mysql2(192.168.163.12)

mysql3(192.168.163.13)

set global read_only=1; #Change back to read / write state set global read_only=0;

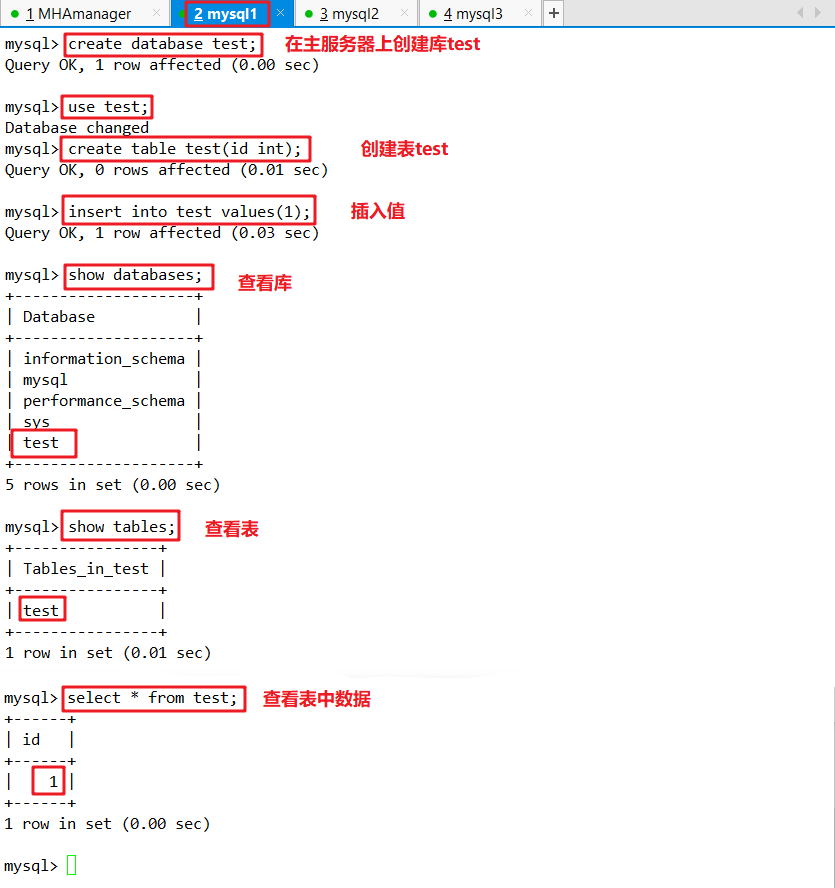

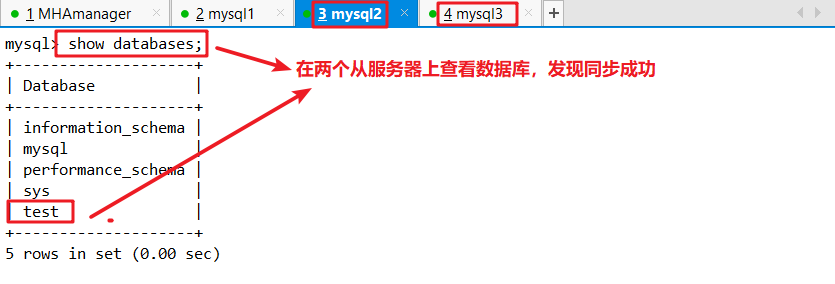

(5) Master slave replication verification

mysql1(192.168.163.11)

Create Library

create database test; use test; create table test(id int); insert into test values(1);

mysql2(192.168.163.12)

mysql3(192.168.163.13)

Query Library validation

show databases;

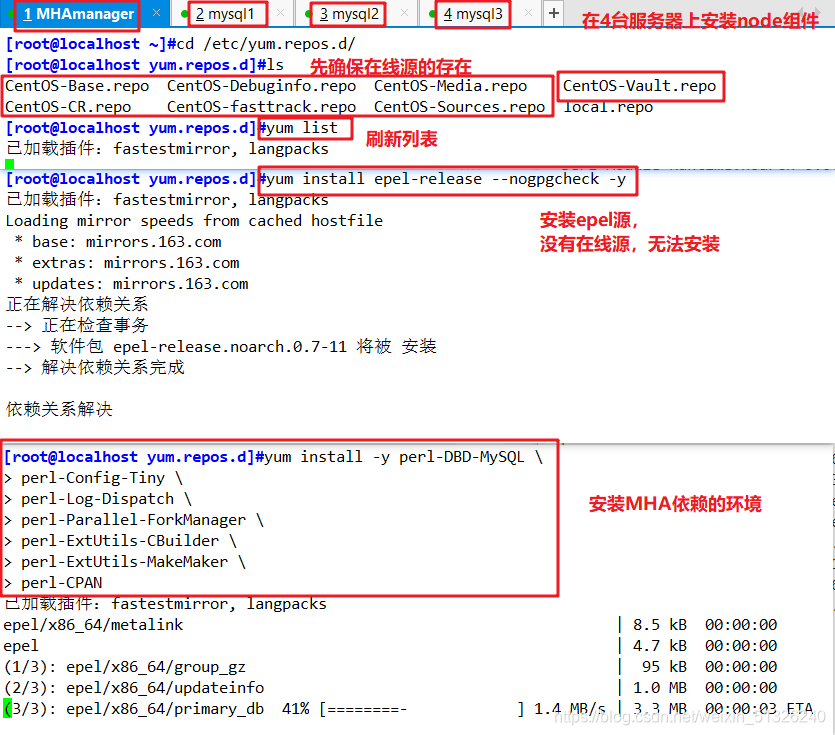

4. Install MHA software

(1) MHA dependent environments are installed on all servers

MHAmanager(192.168.163.10)

mysql1(192.168.163.11)

mysql2(192.168.163.12)

mysql3(192.168.163.13)

- First install the epel source, which needs to be installed online

- Then install the node component on all servers

#Install online source mv /etc/yum.repos.d/repos.bak/CentOS-* /etc/yum.repos.d/ yum list

yum install epel-release --nogpgcheck -y

yum install -y perl-DBD-MySQL

perl-Config-Tiny

perl-Log-Dispatch

perl-Parallel-ForkManager

perl-ExtUtils-CBuilder

perl-ExtUtils-MakeMaker

perl-CPAN

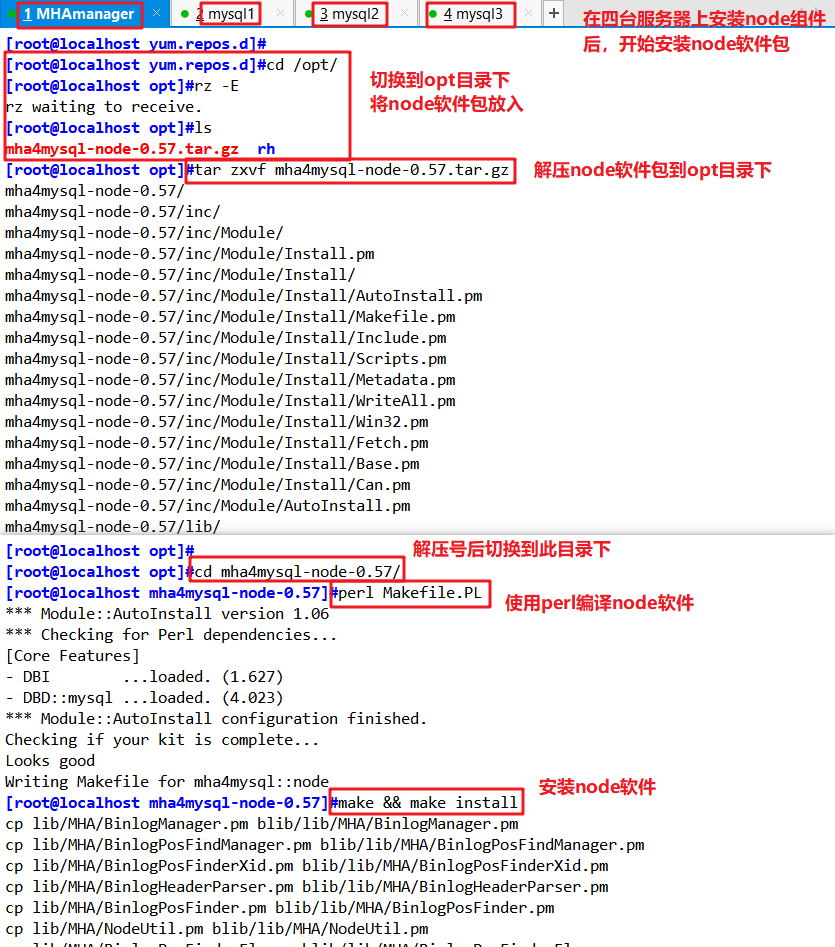

(2) Install MHA node package

MHAmanager(192.168.163.10)

mysql1(192.168.163.11)

mysql2(192.168.163.12)

mysql3(192.168.163.13)

- The version of each operating system is different. Here, centos7 4. Version 0.57 must be selected.

- The node component must be installed on all servers first, and then the manager component must be installed on the MHA manager node, because the manager depends on the node component.

#Package mha4mysql-node-0.57 tar. GZ into / opt directory cd /opt tar zxvf mha4mysql-node-0.57.tar.gz cd mha4mysql-node-0.57 perl Makefile.PL make && make install

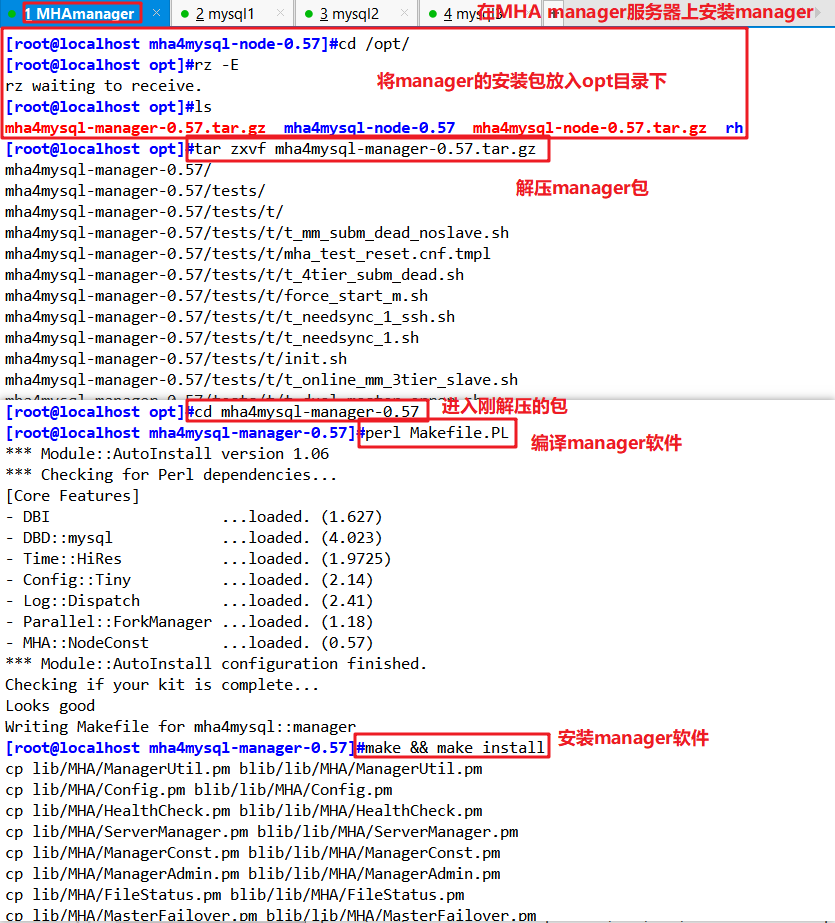

(3) Install the manager component on the MHA manager node

MHAmanager(192.168.163.10)

#Package mha4mysql-manager-0.57 tar. GZ into / opt directory cd /opt tar zxvf mha4mysql-manager-0.57.tar.gz cd mha4mysql-manager-0.57 perl Makefile.PL make && make install

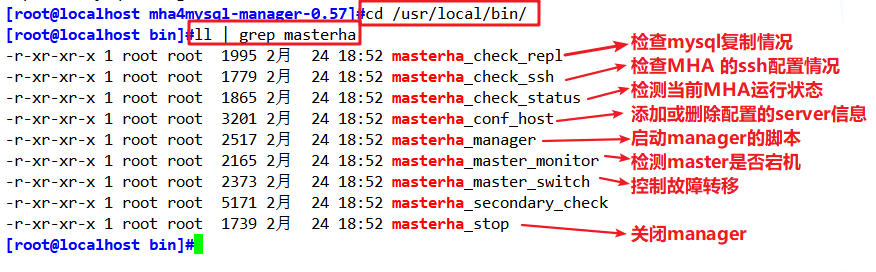

- After the manager component is installed, several tools will be generated under / usr/local/bin

It mainly includes the following:

| assembly | effect |

|---|---|

| masterha_check_ssh | Check the SSH configuration of MHA |

| masterha_check_repl | Check MySQL replication status |

| masterha_manger | Script to start manager |

| masterha_check_status | Detect the current MHA operation status |

| masterha_master_monitor | Check whether the master is down |

| masterha_master_switch | Control failover (automatic or manual) |

| masterha_conf_host | Add or delete configured server information |

| masterha_stop | Close manager |

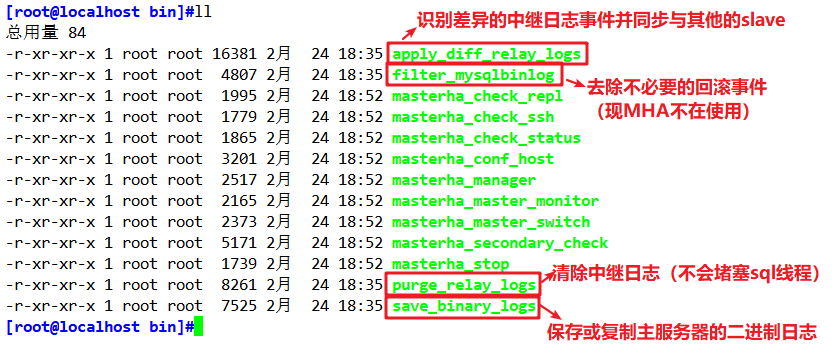

- After the node component is installed, several scripts will also be generated under / usr/local/bin (these tools are usually triggered by MHAManager scripts without human operation)

The main contents are as follows:

| script | effect |

|---|---|

| save_binary_logs | Save and copy binary logs of master |

| apply_diff_relay_logs | Identify the different relay log events and apply the different events to other slave s |

| filter_mysqlbinlog | Remove unnecessary ROLLBACK events (MHA no longer uses this tool) |

| purge_relay_logs | Clear relay log (does not block SQL thread) |

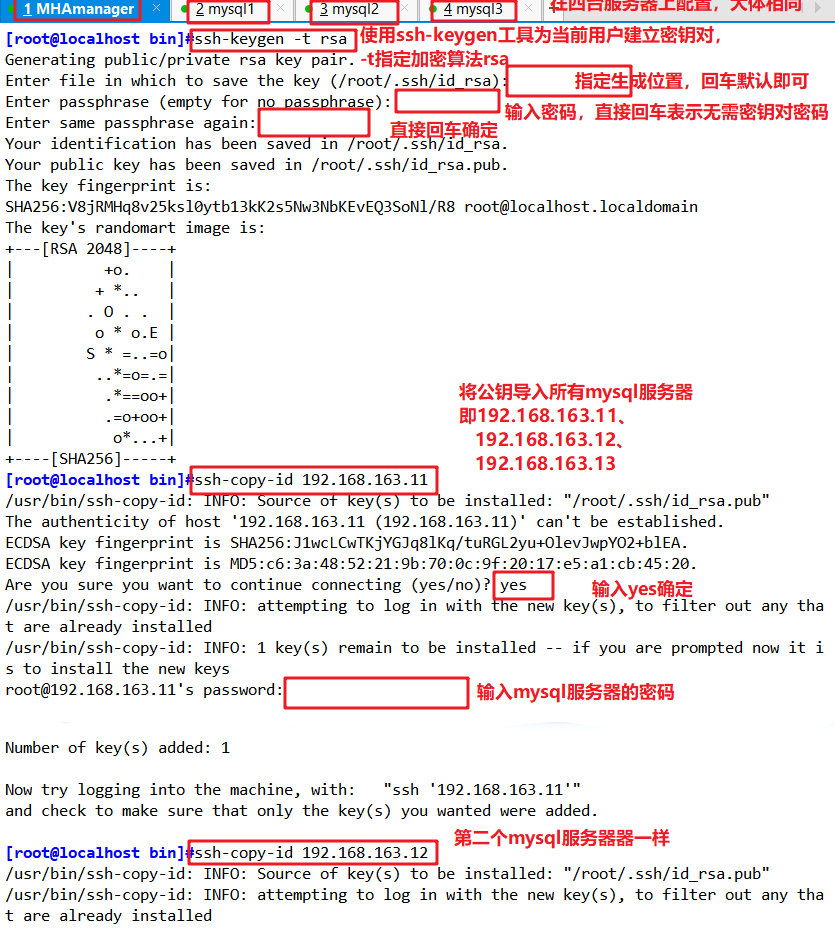

5. Configure password less authentication on all servers

(1) Configure password less authentication to all database nodes on the manager node

MHAmanager(192.168.163.10)

ssh-keygen -t rsa #Press enter all the way ssh-copy-id 192.168.163.11 ssh-copy-id 192.168.163.12 ssh-copy-id 192.168.163.13

- The three mysql servers are the same. They take up too much space and don't map.

(2) Configure password less authentication SSH keygen - t RSA to the database nodes mysql2 and mysql3 on mysql1

mysql1(192.168.163.11)

ssh-keygen -t rsa ssh-copy-id 192.168.163.12 ssh-copy-id 192.168.163.13

(3) Configure password free authentication to database nodes mysql1 and mysql3 on mysql2

mysql2(192.168.163.12)

ssh-keygen -t rsa ssh-copy-id 192.168.163.11 ssh-copy-id 192.168.163.13

(4) Configure password free authentication to the database nodes mysql1 and mysql2 on mysql3

mysql3(192.168.163.13)

ssh-keygen -t rsa ssh-copy-id 192.168.163.11 ssh-copy-id 192.168.163.12

6. Configure MHA on the manager node

MHAmanager(192.168.163.10)

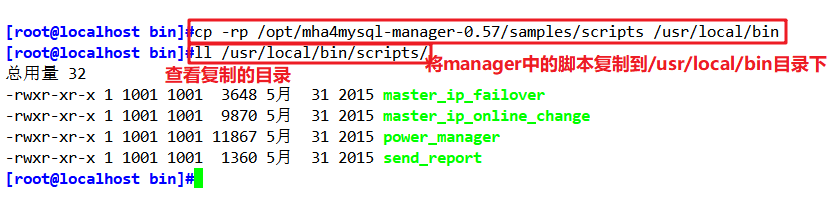

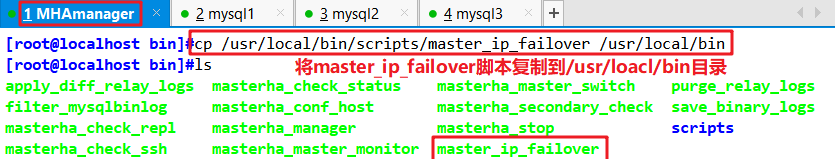

(1) On the manager node, copy the relevant scripts to the / usr/local/bin directory

cp -rp /opt/mha4mysql-manager-0.57/samples/scripts /usr/local/bin

#After copying, there will be four execution files

ll /usr/local/bin/scripts/

| file name | effect |

|---|---|

| master_ip_failover | Script of VIP management during automatic switching |

| master_ip_online_change | Management of vip during online switching |

| power_manager | Script to shut down the host after failure |

| send_report | Script for sending alarms after failover |

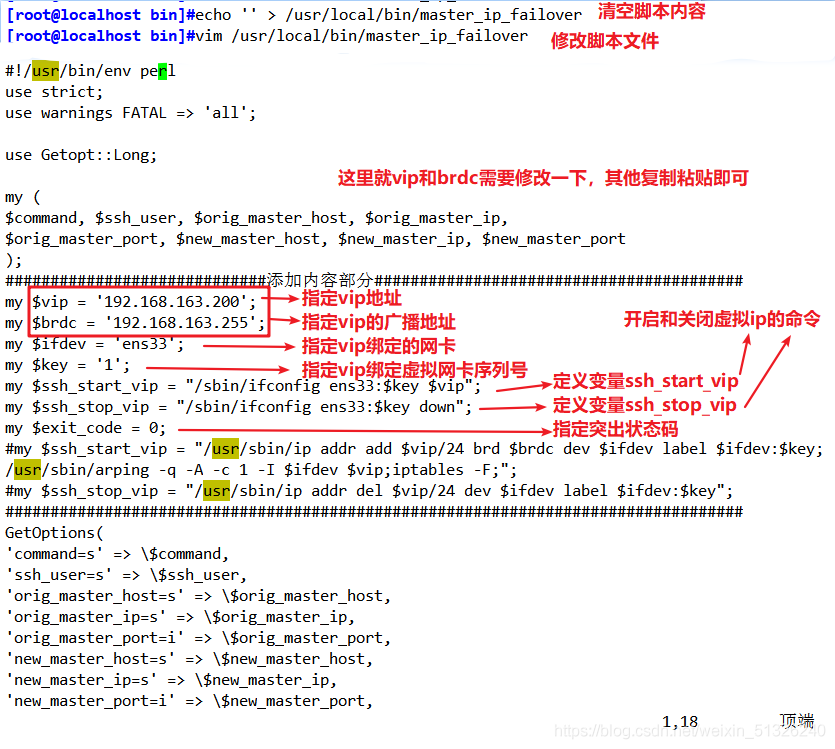

(2) Copy the above script of VIP management during automatic switching to / usr/local/bin directory. Here, use master_ip_failover scripts to manage VIPs and failover

cp /usr/local/bin/scripts/master_ip_failover /usr/local/bin

#Empty the original content first

echo '' > /usr/local/bin/master_ip_failover

#Directly copy and modify vip related parameters

vim /usr/local/bin/master_ip_failover

#!/usr/bin/env perl

use strict;

use warnings FATAL => 'all';

use Getopt::Long;

my (

$command, $ssh_user, $orig_master_host, $orig_master_ip,

$orig_master_port, $new_master_host, $new_master_ip, $new_master_port

);

#############################Add content section#########################################

my $vip = '192.168.163.200'; #Specify the address of the vip

my $brdc = '192.168.163.255'; #Specify the broadcast address of vip

my $ifdev = 'ens33'; #Specify the network card bound by vip

my $key = '1'; #Specify the serial number of virtual network card bound by vip

my $ssh_start_vip = "/sbin/ifconfig ens33:$key $vip"; #Represents that the value of this variable is ifconfig ens33:1 192.168.163.200

my $ssh_stop_vip = "/sbin/ifconfig ens33:$key down"; #Indicates that the value of this variable is ifconfig ens33:1 down

my $exit_code = 0; #Specifies that the exit status code is 0

#my $ssh_start_vip = "/usr/sbin/ip addr add $vip/24 brd $brdc dev $ifdev label $ifdev:$key;/usr/sbin/arping -q -A -c 1 -I $ifdev $vip;iptables -F;";

#my $ssh_stop_vip = "/usr/sbin/ip addr del $vip/24 dev $ifdev label $ifdev:$key";

##################################################################################

GetOptions(

'command=s' => \$command,

'ssh_user=s' => \$ssh_user,

'orig_master_host=s' => \$orig_master_host,

'orig_master_ip=s' => \$orig_master_ip,

'orig_master_port=i' => \$orig_master_port,

'new_master_host=s' => \$new_master_host,

'new_master_ip=s' => \$new_master_ip,

'new_master_port=i' => \$new_master_port,

);

exit &main();

sub main {

print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";

if ( $command eq "stop" || $command eq "stopssh" ) {

my $exit_code = 1;

eval {

print "Disabling the VIP on old master: $orig_master_host \n";

&stop_vip();

$exit_code = 0;

};

if ($@) {

warn "Got Error: $@\n";

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "start" ) {

my $exit_code = 10;

eval {

print "Enabling the VIP - $vip on the new master - $new_master_host \n";

&start_vip();

$exit_code = 0;

};

if ($@) {

warn $@;

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "status" ) {

print "Checking the Status of the script.. OK \n";

exit 0;

}

else {

&usage();

exit 1;

}

}

sub start_vip() {

`ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;

}

## A simple system call that disable the VIP on the old_master

sub stop_vip() {

`ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;

}

sub usage {

print

"Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

}

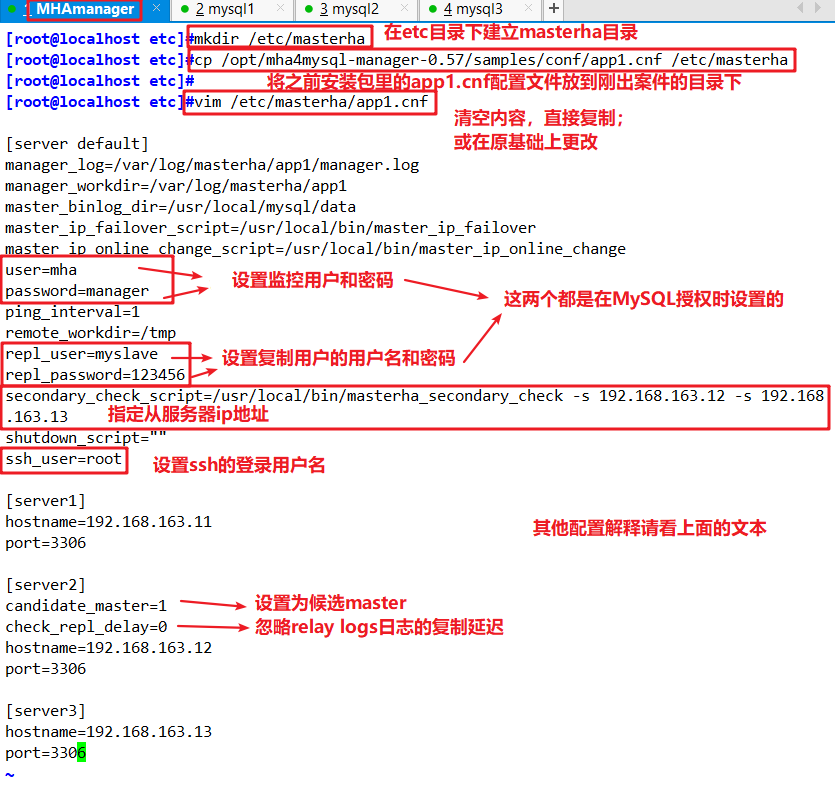

(3)establish MHA Software directory and copy the configuration file, which is used here app1.cnf Configuration files to manage mysql Node server

mkdir /etc/masterha

cp /opt/mha4mysql-manager-0.57/samples/conf/app1.cnf /etc/masterha

vim /etc/masterha/app1.cnf

[server default]

manager_log=/var/log/masterha/app1/manager.log

manager_workdir=/var/log/masterha/app1

master_binlog_dir=/usr/local/mysql/data

master_ip_failover_script=/usr/local/bin/master_ip_failover

master_ip_online_change_script=/usr/local/bin/master_ip_online_change

user=mha

password=manager

ping_interval=1

remote_workdir=/tmp

repl_user=myslave

repl_password=123456

secondary_check_script=/usr/local/bin/masterha_secondary_check -s 192.168.163.12 -s 192.168.163.13

shutdown_script=""

ssh_user=root

[server1]

hostname=192.168.163.11

port=3306

[server2]

candidate_master=1

check_repl_delay=0

hostname=192.168.163.12

port=3306

[server3]

hostname=192.168.163.13

port=3306

#--------------------------Profile interpretation--------------------------------------------------------------------------

[server default]

manager_log=/var/log/masterha/app1/manager.log #manager log

manager_workdir=/var/log/masterha/app1.log #manager working directory

master_binlog_dir=/usr/local/mysql/data/ #The location where the master saves binlog. The path here should be consistent with the path of binlog configured in the master so that MHA can find it

master_ip_failover_script=/usr/local/bin/master_ip_failover #The switching script when setting automatic failover, that is, the script above

master_ip_online_change_script=/usr/local/bin/master_ip_online_change #Set the switching script for manual switching

user=mha #Set monitoring user root

password=manager #Set the password of the root user in mysql, which is the password of the monitoring user created in the previous article

ping_interval=1 #Set the monitoring master database. The interval between sending ping packets is 1 second, which is 3 seconds by default. When there is no response after three attempts, the failover will be carried out automatically

remote_workdir=/tmp #Set the save location of binlog when remote mysql switches

repl_user=myslave #Set the user of the replication user

repl_password=123456 #Set the password of the replication user

report_script=/usr/local/send_report #Set the script of the alarm sent after switching

secondary_check_script=/usr/local/bin/masterha_secondary_check -s 192.168.163.12 -s 192.168.163.13 #Specifies the IP address of the slave server to check

shutdown_script="" #Set the script to close the failed host after the failure occurs (the main function of this script is to close the host to prevent brain crack, which is not used here)

ssh_user=root #Set the login user name of ssh

[server1]

hostname=192.168.163.11

port=3306

[server2]

hostname=192.168.163.12

port=3306

candidate_master=1

#Set as the candidate master. After setting this parameter, the slave database will be promoted to the master database after the master-slave switch occurs, even if the master database is not the latest slave in the cluster

check_repl_delay=0

#By default, if a slave lags behind the master by more than 100M of relay logs, MHA will not choose the slave as a new master, because the recovery of the slave takes a long time; By setting check_repl_delay=0, MHA triggers the switch. When selecting a new master, the replication delay will be ignored. This parameter is set to candidate_ The host with master = 1 is very useful because the candidate master must be a new master in the process of switching

[server3]

hostname=192.168.163.13

port=3306

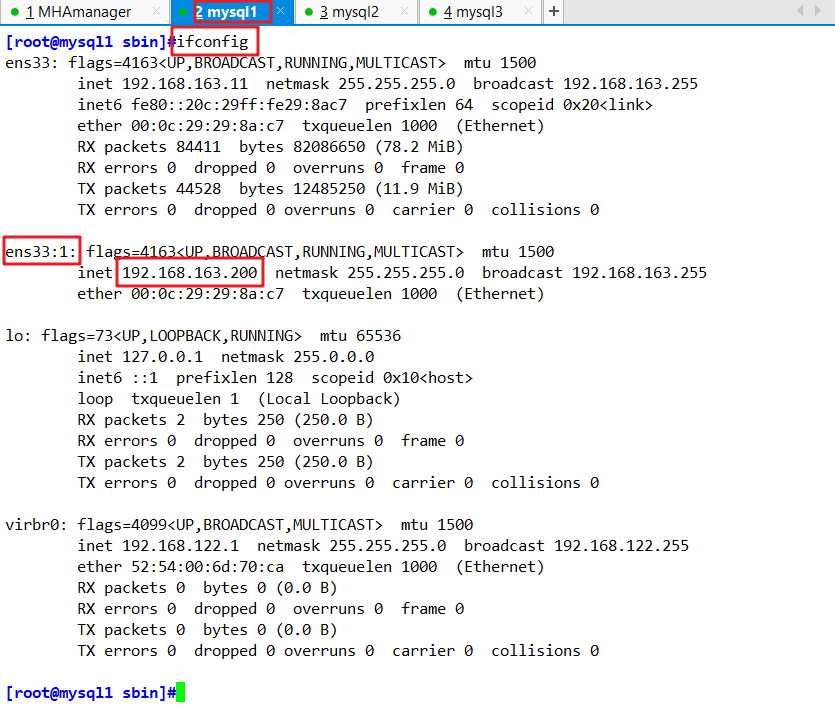

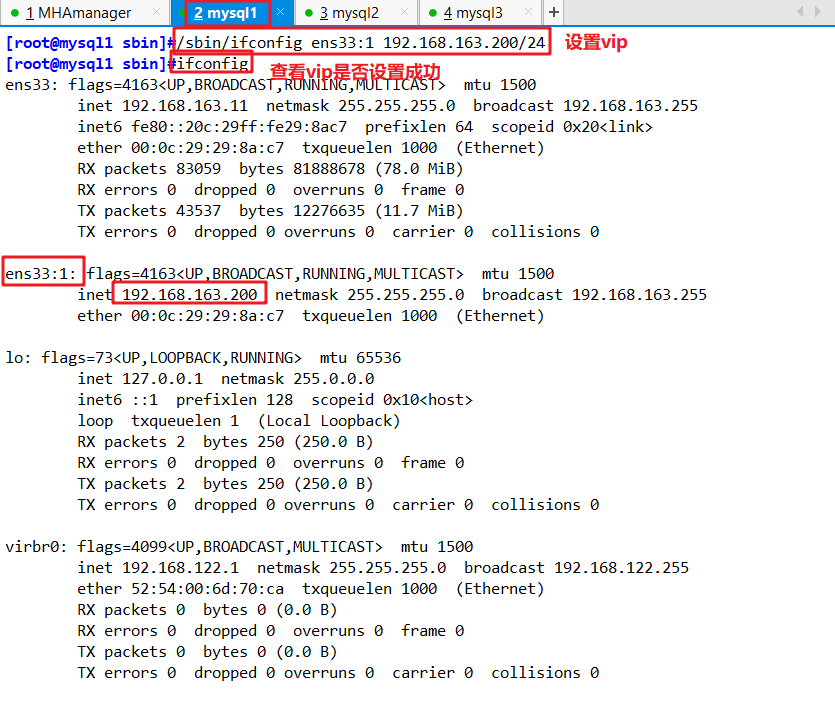

7,The first configuration needs to be in Master Manually turn on virtual on node IP

Master(192.168.163.11)

/sbin/ifconfig ens33:1 192.168.163.200/24

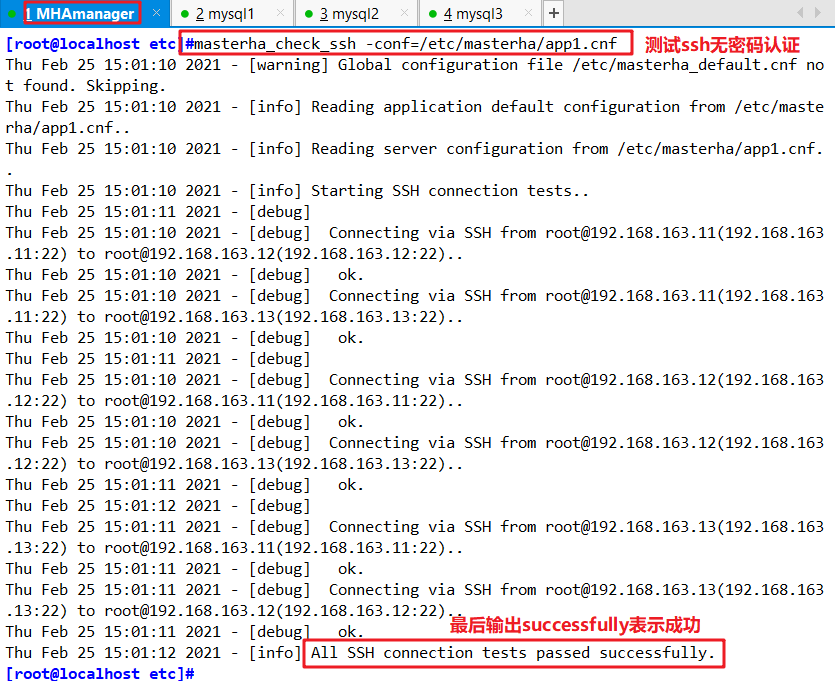

8,stay manager Test on node ssh No password authentication

MHAmanager(192.168.163.10)

masterha_check_ssh -conf=/etc/masterha/app1.cnf

#If it is normal, it will finally output successfully;

#If it fails, you can go to the place where the server has no password authentication to see if there is a problem

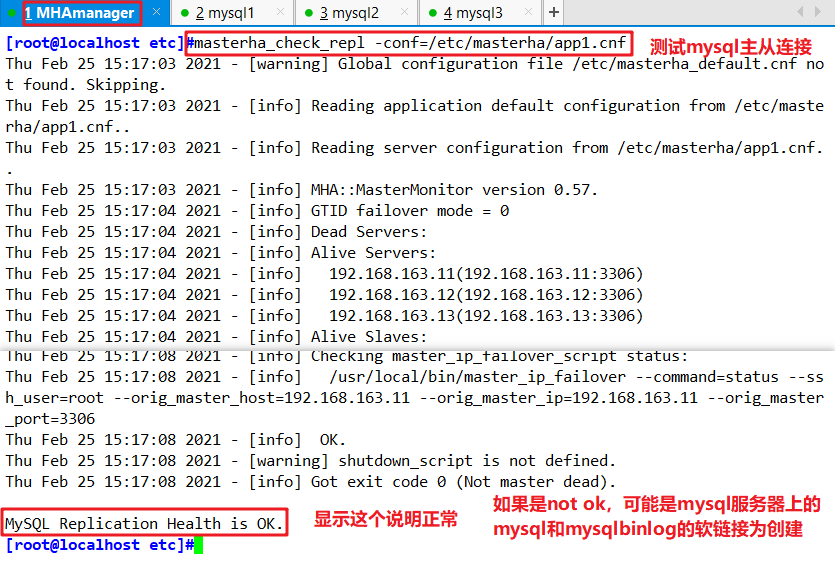

9,stay manager Test on node mysql Master-slave connection

MHAmanager(192.168.163.10)

masterha_check_repl -conf=/etc/masterha/app1.cnf

#Finally, MySQL Replication Health is OK appears, indicating that it is normal;

#MySQL Replication Health is NOT OK appears! , you can see if there are fewer soft links on the MySQL server -- > location of this article: 2. Modify the main configuration file of the three MySQL servers / etc / my CNF and create a command soft link

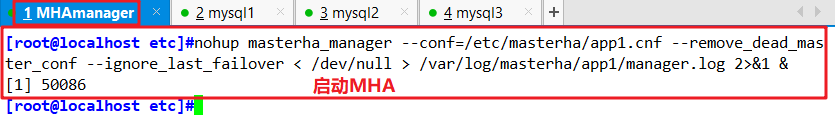

10,stay manager Start on node MHA

MHAmanager(192.168.163.10)

nohup masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/masterha/app1/manager.log 2>&1 &

#------------------------Component interpretation----------------------------------------------------------------------------------

--remove_dead_master_conf: This parameter represents the of the old master database after master-slave switching ip Will be removed from the configuration file.

--manger_log: Log storage location.

--ignore_last_failover: By default, if MHA If continuous downtime is detected and the interval between two outages is less than 8 hours, it will not be Failover, The reason for this restriction is to avoid ping-pong effect. This parameter represents ignoring the last MHA Files generated by trigger switching. By default, MHA After switching, the log directory will be recorded in the log directory, that is, the log set above app1.failover.complete File. If it is found that the file exists in this directory during the next switching, the switching will not be allowed unless the file is deleted after the first switching. For convenience, it is set to--ignore_last_failover.

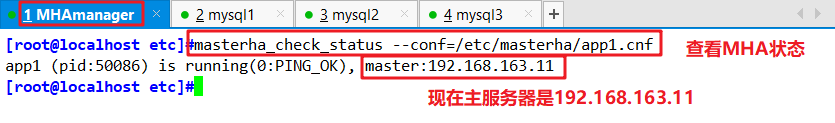

11,View related status

MHAmanager(192.168.163.10)

- Viewing the MHA status, you can see that the current master is Mysql1 node.

masterha_check_status --conf=/etc/masterha/app1.cnf

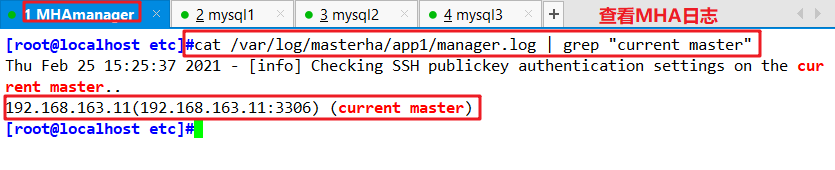

MHAmanager(192.168.163.10)

- View the current log with MH 11.168.master

cat /var/log/masterha/app1/manager.log | grep "current master"

mysql1(192.168.163.11)

- Check the VIP address of Mysql1 and check whether the VIP address 192.168.163.200 of Mysql1 exists. This VIP address will not disappear because the manager node stops MHA service.

ifconfig

Add: to close manager Service, you can use the following command.

masterha_stop --conf=/etc/masterha/app1.cnf Or it can be used directly kill process ID Close by.3, Fault simulation

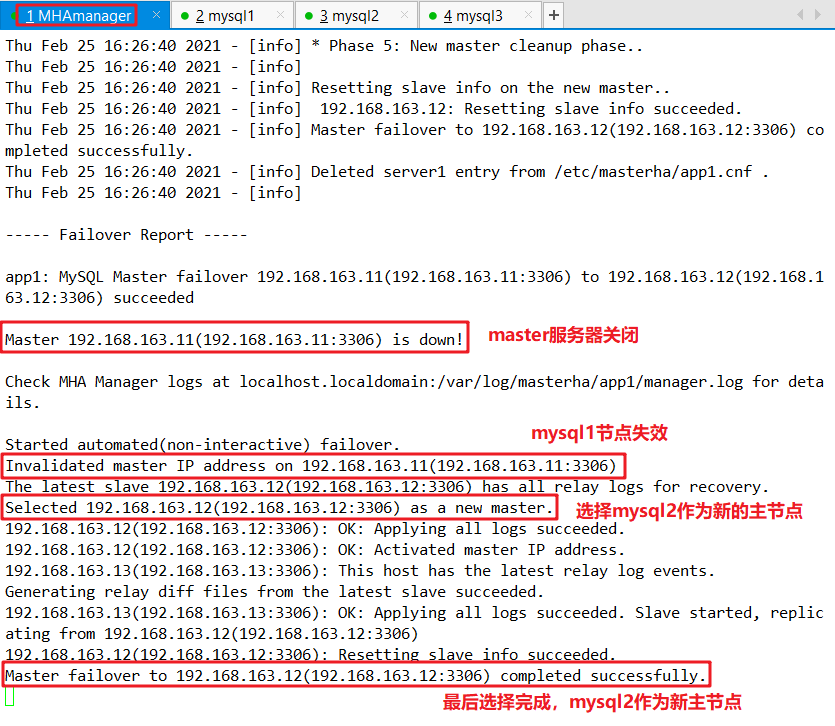

1,fault simulation

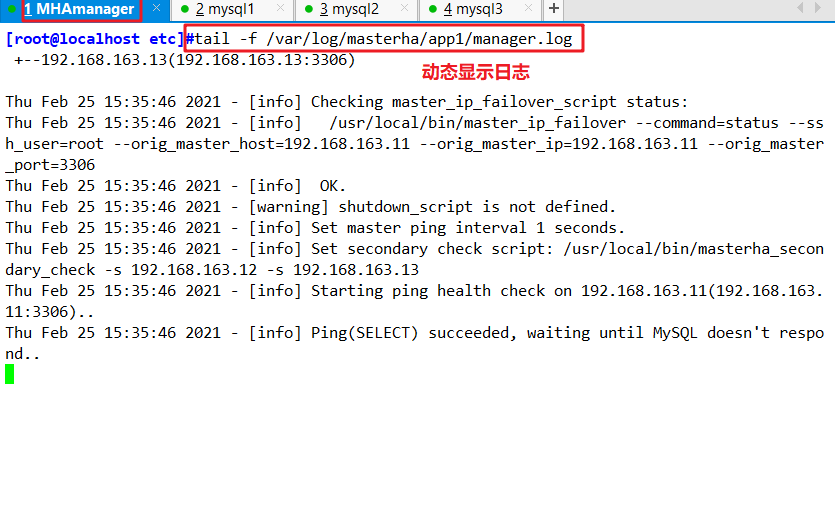

(1)stay manager Monitoring observation logging on node

MHAmanager(192.168.163.10)tail -f /var/log/masterha/app1/manager.log

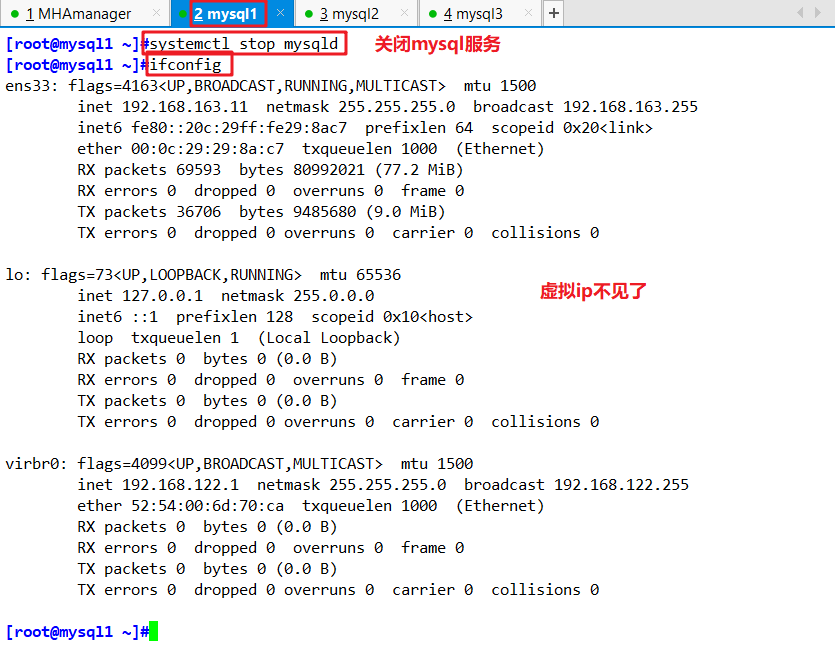

(2)stay Master node Mysql1 Upper stop mysql service

mysql1(192.168.163.11)systemctl stop mysqld or pkill -9 mysqlAfter normal automatic switching once, MHA The process exits. HMA It will be modified automatically app1.cnf File content, will be down mysql1 Node deletion.

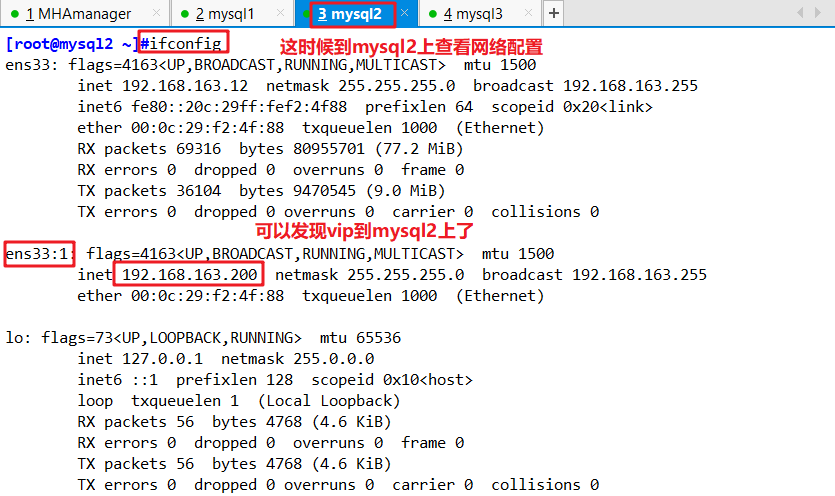

(3)see mysql2 Take over VIP

mysql2(192.168.163.12)ifconfig

(4)go back to manager Monitoring observation logging on node

MHAmanager(192.168.163.10)

Algorithm of failover alternative master database:

1,It is generally judged that the slave library is from( position/GTID)Judging the pros and cons, there are differences in data, which is closest to master of slave,Become an alternative master.

2,When the data is consistent, select the alternative main library according to the order of the configuration file.

3,Set weighted( candidate_master=1),Force an alternate master by weight.

(1)By default, if one slave backward master 100M of relay logs If there is weight, it will fail.

(2)If check_repl_delay=0 If so, even if it lags behind a lot of logs, it is forced to choose it as the alternative master.2,Troubleshooting steps

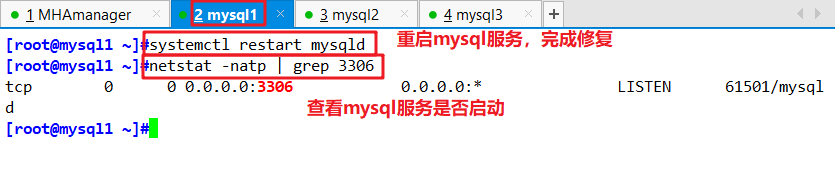

(1)repair mysql

mysql1(192.168.163.11)systemctl restart mysqld

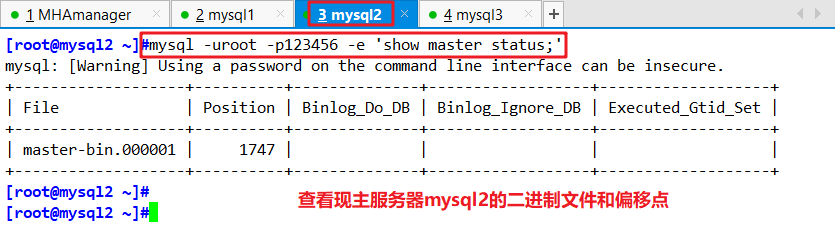

(2)Repair master-slave

- Check binary files and synchronization points on the current main database server Mysql2

mysql2(192.168.163.12)

mysql -uroot -p123456 -e 'show master status;' #Execute show master status in the database;

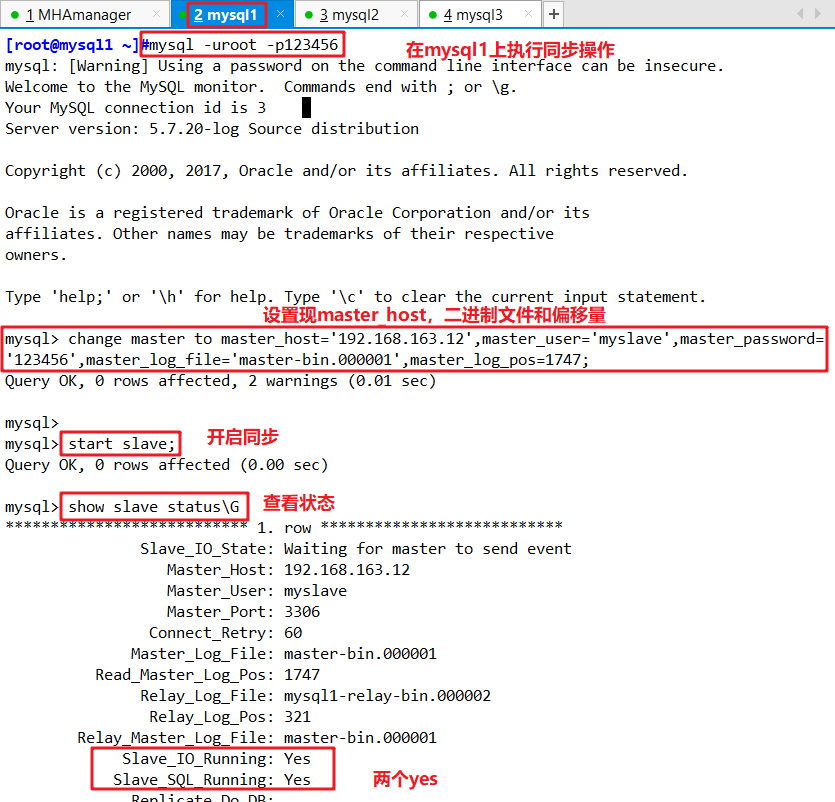

- Perform synchronization on the original master database server mysql1

mysql1(192.168.163.11)

change master to master_host='192.168.163.12',master_user='myslave',master_password='123456',master_log_file='master-bin.000001',master_log_pos=1747; start slave; show slave status\G

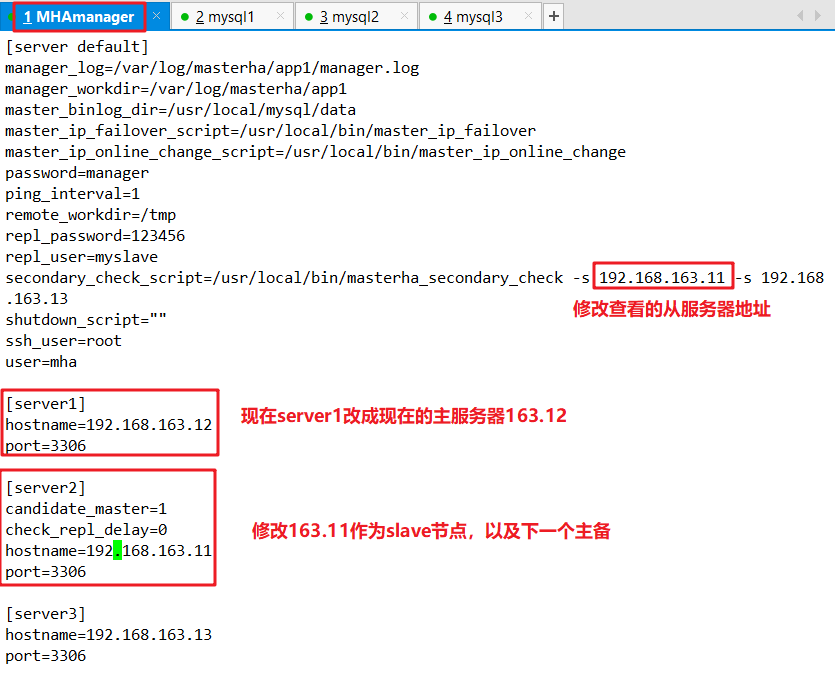

(3)stay manager Modify profile on node app1.cnf

MHAmanager(192.168.163.10)

- Add this record again, because it will disappear automatically when it detects failure

vim /etc/masterha/app1.cnf ...... secondary_check_script=/usr/local/bin/masterha_secondary_check -s 192.168.163.11 -s 192.168.163.13 ...... [server1] hostname=192.168.163.12 port=3306 [server2] candidate_master=1 check_repl_delay=0 hostname=192.168.163.11 port=3306 [server3] hostname=192.168.163.13 port=3306

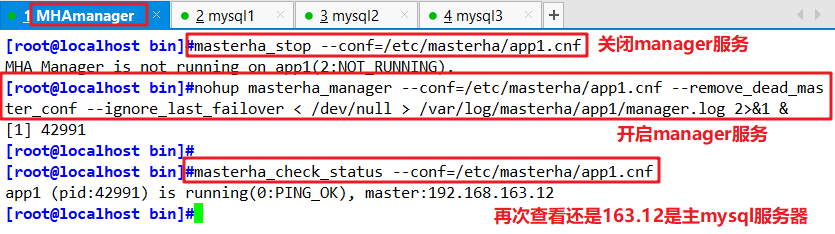

(4)stay manager Start on node MHA

MHAmanager(192.168.163.10)masterha_stop --conf=/etc/masterha/app1.cnf nohup masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/masterha/app1/manager.log 2>&1 & masterha_check_status --conf=/etc/masterha/app1.cnf