Preface:

Servers running the same service are combined to be called clusters

Centralized clusters: master, slave,

MHA is used to resolve cluster master downtime.

MMM Cluster Architecture: There are two master servers

Overview of MHA

- DeNA youshimaton (now a Facebook company) in Japan

- A set of excellent high-availability software for failover and master-slave promotion in MySQL high-availability environment

Composition of MHA - MHA Manager (Management Node)

- MHA Node (Data Node)

MHA features - During automatic failover, MHA tries to save binary logs from the downtime master server to maximize data loss

- Using MySQL 5.5 semi-synchronous replication can significantly reduce the risk of data loss

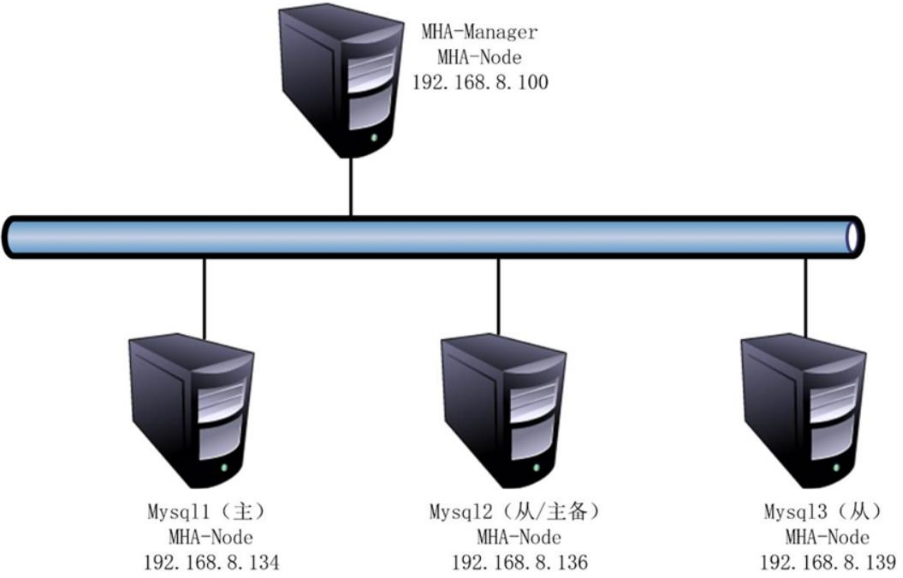

Case Topology

Experimental environment

centos7 version

MHA version 0.57

mysql version 5.6.36

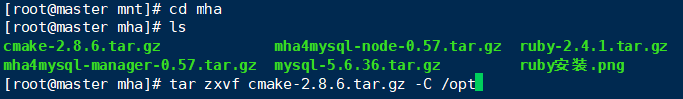

cmake version 2.8.6

MHA: 192.168.191.132

master:192.168.191.160

slave1:192.168.191.161

slave2:192.168.191.162

Set up MySQL master-slave replication environment

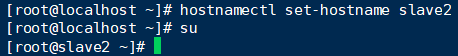

1. Modify hostname to distinguish servers

The installation and configuration of the three mysql servers are consistent, so take master for example

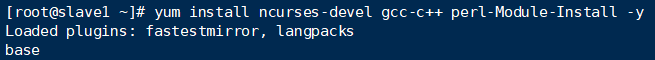

Install compile-dependent environments

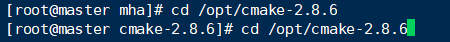

Install the cmake compiler

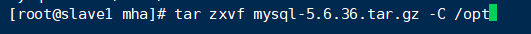

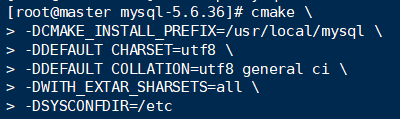

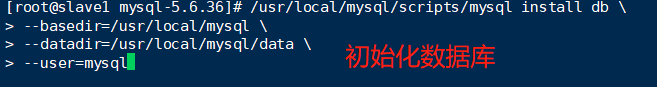

Install mysql database

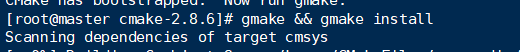

Next is the make && make install process

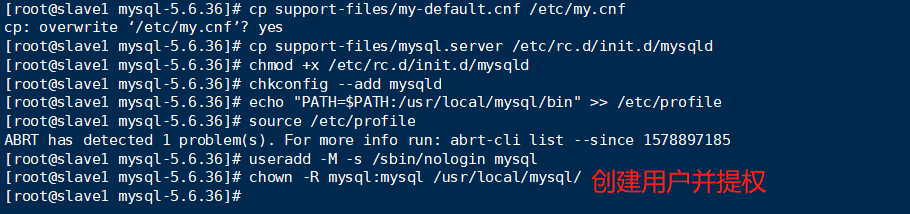

Optimize mysql database path, create mysql user, initialize database

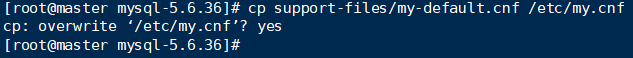

Under vim/etc/my.cnf:

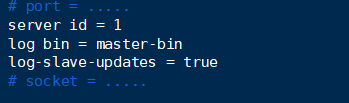

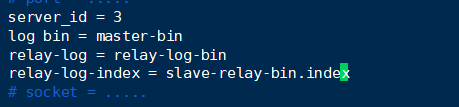

Modify master master master profile for master server

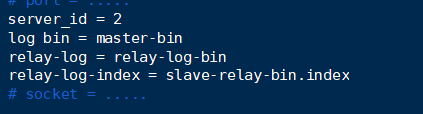

Modify slave1 master profile from server

Modify slave2 master profile from server

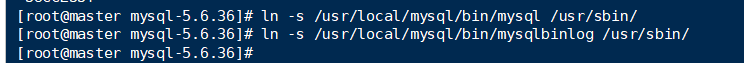

Two soft connections between master and slave servers for easy operation

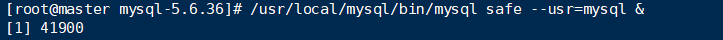

Start MySQL from Master-Slave Server

Create master-slave synchronization user myslavemysql> grant replication slave on *.* to 'myslave'@'192.168.191.%' identified by '123'; Query OK, 0 rows affected (0.00 sec)

Grant all databases mha users permission to operate on the database

mysql> grant all privileges on *.* to 'mha'@'192.168.191.%' identified by 'manager'; Query OK, 0 rows affected (0.00 sec) mysql> grant all privileges on *.* to 'mha'@'master' identified by 'manager'; Query OK, 0 rows affected (0.00 sec) mysql> grant all privileges on *.* to 'mha'@'slave1' identified by 'manager'; Query OK, 0 rows affected (0.00 sec) mysql> grant all privileges on *.* to 'mha'@'slave2' identified by 'manager'; Query OK, 0 rows affected (0.00 sec) #Refresh Database mysql> flush privileges; Query OK, 0 rows affected (0.00 sec)

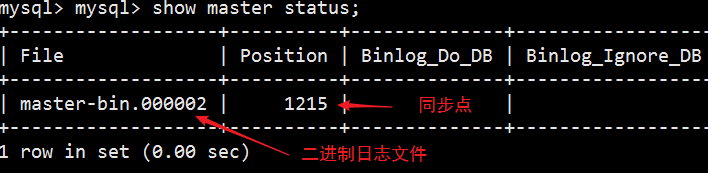

View on the mysql master server as a forbidden file and synchronization point. Do not add anything to the database at this time, as this will cause

Perform synchronization from the servermysql> change master to master_host='192.168.191.160',master_user='myslave',master_password='123',master_log_file='master-bin.000002',master_log_pos=12215; Query OK, 0 rows affected, 2 warnings (0.00 sec) mysql> start slave; Query OK, 0 rows affected (0.01 sec) mysql> show slave status\G; ···Omit some content Slave_IO_Running: Yes //If this is Slave_IO_Running: Connecting, check to see if the firewall on the primary server is turned off Slave_SQL_Running: Yes ···Omit some contentSet two slave servers to read-only mode

mysql> set global read_only=1; Query OK, 0 rows affected (0.00 sec)

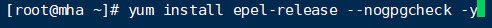

Install Node components (all servers, version 0.57)

Install perl environment

MHA is different for each operating system version, centos7.4 must select version 0.57, the node component must be installed on all servers, and the manager component must be installed on the MHA-manager node, because the manager depends on the node component

Install Node Components[root@master ~]# tar zxvf /abc/mha/mha4mysql-node-0.57.tar.gz [root@master ~]# cd mha4mysql-node-0.57/ [root@master mha4mysql-node-0.57]# perl Makefile.PL [root@master mha4mysql-node-0.57]# make [root@master mha4mysql-node-0.57]# make install

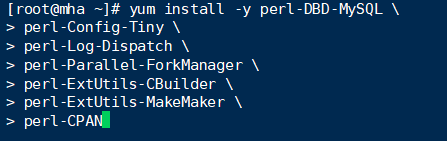

Generate several script tools under / usr/local/bin/after node installation (these tools are usually triggered by MHA Manager scripts and do not require human action)

save_binary_logs: Save and copy master's binaries

apply_diff_relay_logs: Identify differences in relay log events and apply their differences to other slave s

filter_mysqlbinlog: Remove unnecessary ROLLBACK rollback events (MHA no longer uses this tool)

purge_relay_logs: Clears relay logs (does not block SQL threads)

Install the Manager component (version 0.57 on the manager node)[root@manager ~]# tar zxvf /abc/mha/mha4mysql-manager-0.57.tar.gz [root@manager ~]# cd mha4mysql-manager-0.57/ [root@manager mha4mysql-manager-0.57]# perl Makefile.PL [root@manager mha4mysql-manager-0.57]# make [root@manager mha4mysql-manager-0.57]# make install

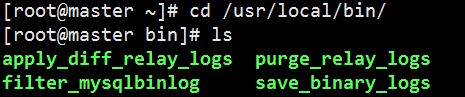

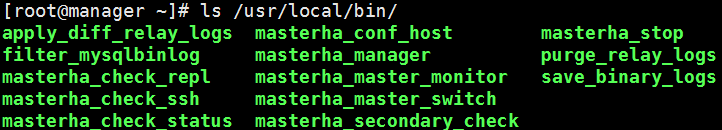

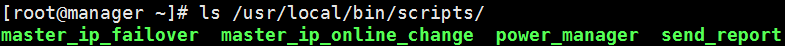

Several script tools are generated below/usr/local/bin/after manager is installed

masterha_check_ssh: Check the SHH configuration of MHA

masterha_check_repl: Check MySQL replication status

masterha_manager: Script to start Manager

masterha_check_status: Detect the current MHA running state

masterha_master_monitor: Detect if master is down

masterha_master_switch: Control failover (automatic or manual)

masterha_conf_host: Add or remove configuration server information

masterha_stop: Turn off manager

Configure Password-Free Authentication

Tools: ssh-keygen, ssh-copy-id

(1) Configure password-free authentication for all data nodes on manager[root@manager ~]# ssh-keygen -t rsa #Enter at all times [root@manager ~]# ssh-copy-id 192.168.191.160 [root@manager ~]# ssh-copy-id 192.168.191.161 [root@manager ~]# ssh-copy-id 192.168.191.162

(2) Password-free authentication configured on master to database nodes slave1 and slave 2

#Enter at all times [root@master ~]# ssh-copy-id 192.168.191.161 [root@master ~]# ssh-copy-id 192.168.191.162

(3) Password-free authentication configured to database nodes master and slave2 on slave1

[root@slave1 ~]# ssh-keygen -t rsa #Enter at all times [root@slave1 ~]# ssh-copy-id 192.168.191.160 [root@slave1 ~]# ssh-copy-id 192.168.191.162

(4) Password-free authentication configured to database nodes master and slave1 on slave2

[root@slave2 ~]# ssh-keygen -t rsa #Enter at all times [root@slave2 ~]# ssh-copy-id 192.168.191.160 [root@slave2 ~]# ssh-copy-id 192.168.191.161

Configure MHA (on the manager node)

Copy the script to the /usr/local/bin directory[root@manager ~]# cp -ra /root/mha4mysql-manager-0.57/samples/scripts /usr/local/bin

Four execution files are copied

master_ip_failover: script for VIP management when switching automatically

master_ip_online_change: vip management during online switching

power_manager: A script to shut down the host after a failure occurs

send_report: A script that sends an alert after failover

(2) Copy the above automatic switch VIP management scripts to/usr/local/bin directory and use scripts to manage VIP[root@manager ~]# cp /usr/local/bin/scripts/master_ip_failover /usr/local/bin

Modify master_ip_failover script (delete original content, rewrite)

[root@manager ~]# vim /usr/local/bin/master_ip_failover #!/usr/bin/env perl use strict; use warnings FATAL => 'all'; use Getopt::Long; my ( $command, $ssh_user, $orig_master_host, $orig_master_ip, $orig_master_port, $new_master_host, $new_master_ip, $new_master_port ); #############################Add Content Section######################################### #Set Drift IP my $vip = '192.168.191.200'; my $brdc = '192.168.191.255'; my $ifdev = 'ens33'; my $key = '1'; my $ssh_start_vip = "/sbin/ifconfig ens33:$key $vip"; my $ssh_stop_vip = "/sbin/ifconfig ens33:$key down"; my $exit_code = 0; #my $ssh_start_vip = "/usr/sbin/ip addr add $vip/24 brd $brdc dev $ifdev label $ifdev:$key;/usr/sbin/arping -q -A -c 1 -I $ifdev $vip;iptables -F;"; #my $ssh_stop_vip = "/usr/sbin/ip addr del $vip/24 dev $ifdev label $ifdev:$key"; ################################################################################## GetOptions( 'command=s' => \$command, 'ssh_user=s' => \$ssh_user, 'orig_master_host=s' => \$orig_master_host, 'orig_master_ip=s' => \$orig_master_ip, 'orig_master_port=i' => \$orig_master_port, 'new_master_host=s' => \$new_master_host, 'new_master_ip=s' => \$new_master_ip, 'new_master_port=i' => \$new_master_port, ); exit &main(); sub main { print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n"; if ( $command eq "stop" || $command eq "stopssh" ) { my $exit_code = 1; eval { print "Disabling the VIP on old master: $orig_master_host \n"; &stop_vip(); $exit_code = 0; }; if ($@) { warn "Got Error: $@\n"; exit $exit_code; } exit $exit_code; } elsif ( $command eq "start" ) { my $exit_code = 10; eval { print "Enabling the VIP - $vip on the new master - $new_master_host \n"; &start_vip(); $exit_code = 0; }; if ($@) { warn $@; exit $exit_code; } exit $exit_code; } elsif ( $command eq "status" ) { print "Checking the Status of the script.. OK \n"; exit 0; } else { &usage(); exit 1; } } sub start_vip() { `ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`; } # A simple system call that disable the VIP on the old_master sub stop_vip() { `ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`; } sub usage { print "Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n"; }Note: First configuration requires manual turning on virtual IP on master

[root@master ~]# /sbin/ifconfig ens33:1 192.168.191.200/24

(4) Create MHA software catalog and copy configuration file

[root@manager ~]# mkdir /etc/masterha [root@manager ~]# cp /root/mha4mysql-manager-0.57/samples/conf/app1.cnf /etc/masterha/ [root@manager ~]# vim /etc/masterha/app1.cnf [server default] #manager Profile manager_log=/var/log/masterha/app1/manager.log #manager log manager_workdir=/var/log/masterha/app1 #Master saves the binlog in the same location as the bilog configured in master master_binlog_dir=/home/mysql #Set the switch script for automatic failover.That's the script above master_ip_failover_script=/usr/local/bin/master_ip_failover #Set switch script for manual switch master_ip_online_change_script=/usr/local/bin/master_ip_online_change #This password is the one you created earlier to monitor users password=manager ping_interval=1 remote_workdir=/tmp #Set Replication User Password repl_password=123 #Set up users for replication users repl_user=myslave #Set script to alert when a switch occurs secondary_check_script=/usr/local/bin/masterha_secondary_check -s 192.168.150.158 -s 192.168.150.244 #Set Up Failure Shutdown Failure Script Host shutdown_script="" #Set ssh login user name ssh_user=root #Set up monitoring users user=mha [server1] hostname=192.168.150.240 port=3306 [server2] #Set as candidate master, if this parameter is set, the sender master slave will be upgraded from the library to the master library after the sender slave switch candidate_master=1 #By default, MHA will not choose a slave as the new master if it falls behind master's relay logs of 100M check_repl_delay=0 hostname=192.168.150.158 port=3306 [server3] hostname=192.168.150.244 port=3306

test

SSH Free Interactive Logon (manager node)[root@manager ~]# masterha_check_ssh -conf=/etc/masterha/app1.cnf ···Omit some content #Output success if normal Sun Jan 12 19:19:11 2020 - [info] All SSH connection tests passed successfully.

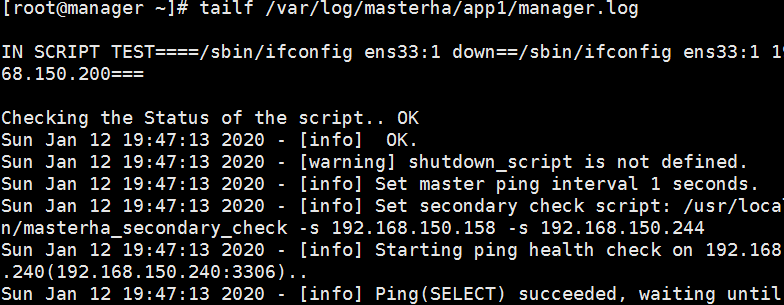

Start MHA to see MHA status

#Start MHA and run in the background [root@manager ~]# nohup masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/masterha/app1/manager.log 2>&1 & [1] 13525 #Looking at the MHA status, you can see that the current master is a mysql node [root@manager ~]# masterha_check_status --conf=/etc/masterha/app1.cnf app1 (pid:13525) is running(0:PING_OK), master:192.168.191.200

- - remove_dead_master_conf: This parameter indicates that the old ip will be removed from the configuration file when a master-slave switch occurs

- - ignore_last_failover: By default, failover will not occur if MHA detects a successive downtime with less than 8 hours of downtime interval. This restriction is to avoid ping-pong effect, which means ignoring files generated after the last MHA triggered the switch. By default, MHA switches to the log directory, which is aboveSet the appl.failover.complete file, which will not be allowed to trigger a switch if it is found to exist in the directory next time you switch, unless you receive the deletion after the first switch. For convenience, set this to -ignore_last_failover

Start monitoring observation logging on manager

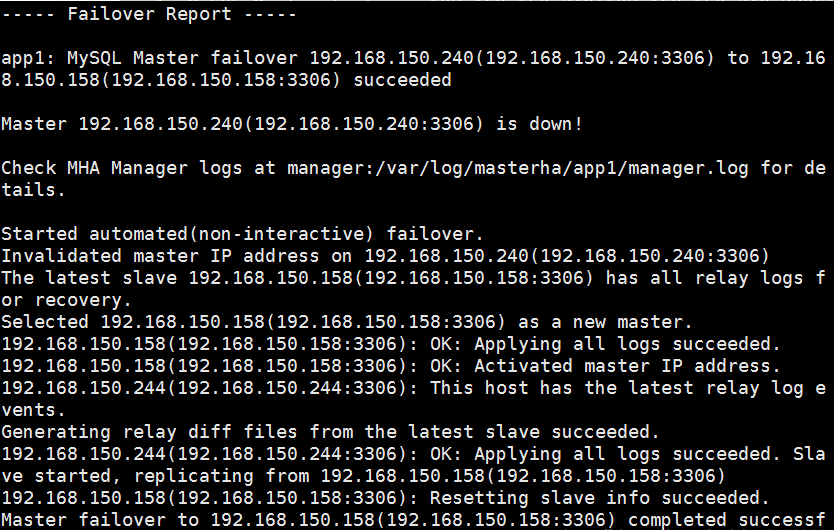

Analog failure

Execute Stop mysql Service on master of Primary Library[root@master ~]# pkill -9 mysqld

manager detects that the primary server is down and switches the standby primary server to the primary server

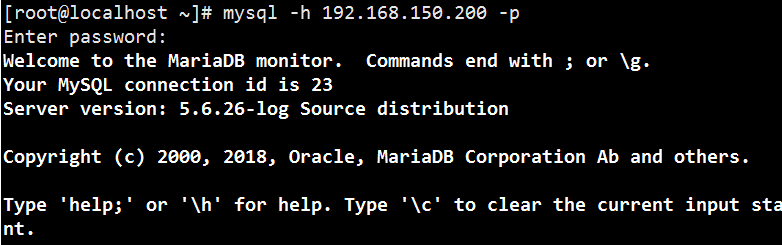

View IP address on slave1[root@slave1 ~]# ifconfig ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500 inet 192.168.191.160 netmask 255.255.255.0 broadcast 192.168.191.255 ··· #Virtual IP address translation to alternate primary server ens33:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500 inet 192.168.191.200 netmask 255.255.255.0 broadcast 192.168.191.255 ···At this time, the client can log in through the virtual IP address, or log in.

The MHA high availability configuration and failover experiments are complete.