9.1 Migrate the data of the original CM node to the new node

9.1.1 Back up the original CM node data

- Back up the monitoring data and management information of CM. The data directories are:

    /var/lib/cloudera-host-monitor
    /var/lib/cloudera-service-monitor
    /var/lib/cloudera-scm-server
    /var/lib/cloudera-scm-eventserver
    /var/lib/cloudera-scm-headlamp

- Note: compress the directories with the backup command before transferring them, so that the ownership and permissions of the directories do not change

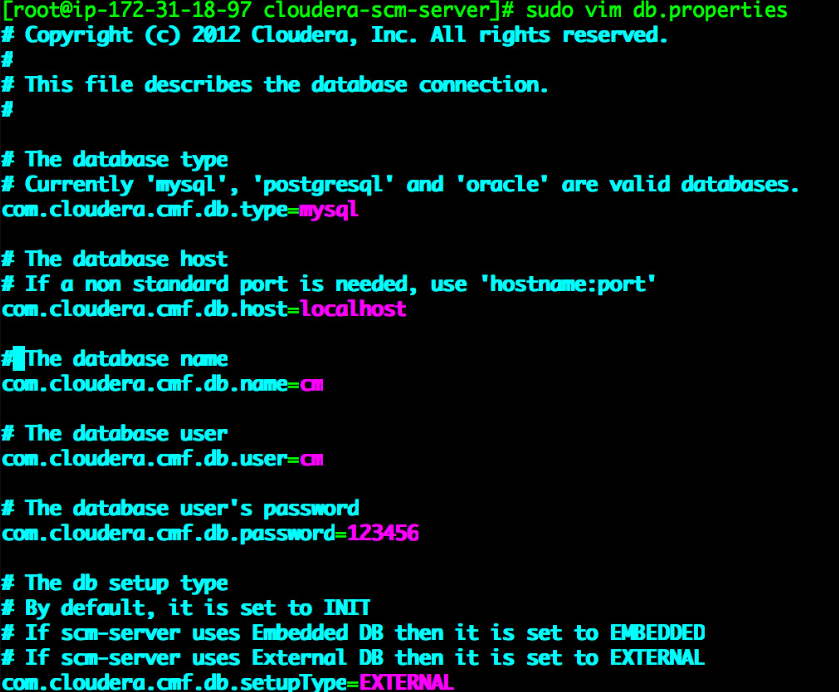
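The backup described above can be sketched as a small shell function. This is a minimal sketch, not the exact command from the screenshot: the function name `backup_cm_dirs` and the output location are assumptions made for illustration. Each directory is written to its own archive, with paths stored relative to the parent directory so that the archive can later be unpacked with `-C /var/lib/`.

```bash
#!/usr/bin/env bash
# Sketch: archive each CM data directory into its own .tar.gz.
# backup_cm_dirs is a hypothetical helper name, not a Cloudera tool.
backup_cm_dirs() {
  local src_root="$1"   # parent directory of the CM data, e.g. /var/lib
  local dest_dir="$2"   # directory that receives the archives (assumed location)
  local name
  mkdir -p "$dest_dir" || return 1
  for name in cloudera-host-monitor cloudera-service-monitor \
              cloudera-scm-server cloudera-scm-eventserver \
              cloudera-scm-headlamp; do
    if [ ! -d "$src_root/$name" ]; then
      echo "skipping missing directory: $src_root/$name" >&2
      continue
    fi
    # -C stores paths relative to src_root; tar records owner and mode
    # of every entry inside the archive.
    tar -czf "$dest_dir/$name.tar.gz" -C "$src_root" "$name" || return 1
  done
}

# Example, run as root on the original CM node (destination path is a placeholder):
#   backup_cm_dirs /var/lib /path/to/cmbak
```

Transferring the archives rather than the raw directories is what keeps ownership and permissions intact, because they travel inside the tar file instead of depending on the copy tool.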

9.1.2 Modify the CM database configuration

- Modify the database configuration file of the new CM, /etc/cloudera-scm-server/db.properties. The content of the configuration file is:

    [root@ip-172-31-18-97 cloudera-scm-server]# sudo vim db.properties
    # Copyright (c) 2012 Cloudera, Inc. All rights reserved.
    #
    # This file describes the database connection.
    #
    # The database type
    # Currently 'mysql', 'postgresql' and 'oracle' are valid databases.
    com.cloudera.cmf.db.type=mysql
    # The database host
    # If a non standard port is needed, use 'hostname:port'
    com.cloudera.cmf.db.host=localhost
    # The database name
    com.cloudera.cmf.db.name=cm
    # The database user
    com.cloudera.cmf.db.user=cm
    # The database user's password
    com.cloudera.cmf.db.password=123456
    # The db setup type
    # By default, it is set to INIT
    # If scm-server uses Embedded DB then it is set to EMBEDDED
    # If scm-server uses External DB then it is set to EXTERNAL
    com.cloudera.cmf.db.setupType=EXTERNAL

- Change the values marked in red to match your own environment

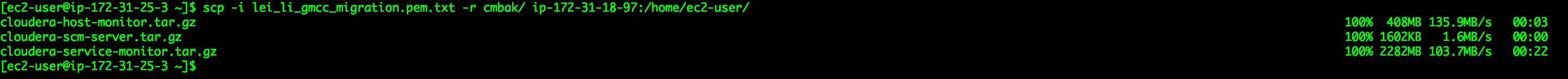
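Instead of editing db.properties by hand, the same change can be scripted. The following is a minimal sketch under stated assumptions: `set_db_property` is a hypothetical helper, and the host name in the usage comment is a placeholder. It replaces the first-column `key=value` line if the key exists and appends it otherwise, leaving comment lines untouched.

```bash
#!/usr/bin/env bash
# Sketch: set one key in a Java-style properties file.
# set_db_property is a hypothetical helper name.
set_db_property() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)" || return 1
  # Key and value are passed through the environment so that awk does
  # not interpret backslashes or other special characters in them.
  KEY="$key" VALUE="$value" awk '
    BEGIN { k = ENVIRON["KEY"]; v = ENVIRON["VALUE"] }
    index($0, k "=") == 1 { print k "=" v; found = 1; next }
    { print }
    END { if (!found) print k "=" v }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Write back in place so the file keeps its owner and mode.
  cat "$tmp" > "$file" && rm -f "$tmp"
}

# Example (placeholder host name, run as root):
#   set_db_property /etc/cloudera-scm-server/db.properties \
#       com.cloudera.cmf.db.host db01.example.com
```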

9.1.3 Import the CM backup data into the new node

- Copy the data backed up on the original CM node to the new CM node

- Restore the backup data to the corresponding directories with the following commands

    [ec2-user@ip-172-31-18-97 cmbak]$ sudo tar -zxvf cloudera-host-monitor.tar.gz -C /var/lib/
    [ec2-user@ip-172-31-18-97 cmbak]$ sudo tar -zxvf cloudera-service-monitor.tar.gz -C /var/lib/
    [ec2-user@ip-172-31-18-97 cmbak]$ sudo tar -zxvf cloudera-scm-server.tar.gz -C /var/lib/
    [ec2-user@ip-172-31-18-97 cmbak]$ sudo tar -zxvf cloudera-scm-eventserver.tar.gz -C /var/lib/
    [ec2-user@ip-172-31-18-97 cmbak]$ sudo tar -zxvf cloudera-scm-headlamp.tar.gz -C /var/lib/

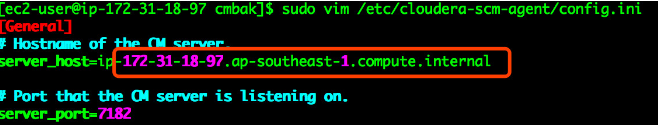
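The five restore commands follow one pattern, so they can be wrapped in a loop. This is a sketch only: `restore_cm_archives` is a hypothetical helper name, and it assumes the archives are named `cloudera-*.tar.gz` as in the commands above.

```bash
#!/usr/bin/env bash
# Sketch: unpack every cloudera-*.tar.gz found in a backup directory.
# restore_cm_archives is a hypothetical helper name.
restore_cm_archives() {
  local backup_dir="$1"    # directory holding the archives, e.g. cmbak
  local target_root="$2"   # extraction root, e.g. /var/lib
  local archive
  for archive in "$backup_dir"/cloudera-*.tar.gz; do
    # If nothing matches, the glob stays unexpanded and the file does not exist.
    [ -e "$archive" ] || { echo "no archives found in $backup_dir" >&2; return 1; }
    # -p restores the permission bits recorded in the archive; when run as
    # root, GNU tar also restores the recorded owner by default.
    tar -xzpf "$archive" -C "$target_root" || return 1
  done
}

# Example, run as root on the new CM node:
#   restore_cm_archives ./cmbak /var/lib
```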

9.1.4 Point all nodes in the cluster to the new CM Server

- On all nodes of the cluster, edit /etc/cloudera-scm-agent/config.ini and set the server_host value to the hostname of the new CM node
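Because this edit has to be repeated on every node, scripting it reduces the chance of a typo. A minimal sketch, assuming the file contains a line of the form `server_host=...`; `set_server_host` is a hypothetical helper name and the host name in the usage comment is a placeholder.

```bash
#!/usr/bin/env bash
# Sketch: rewrite the server_host line of an agent config.ini.
# set_server_host is a hypothetical helper name.
set_server_host() {
  local file="$1" new_host="$2" tmp rc
  # Accept only characters that can appear in a hostname, so the value
  # is safe to splice into the sed expression below.
  case "$new_host" in
    ''|*[!A-Za-z0-9.-]*) echo "invalid hostname: $new_host" >&2; return 1 ;;
  esac
  grep -q '^server_host=' "$file" || {
    echo "server_host not found in $file" >&2; return 1; }
  tmp="$(mktemp)" || return 1
  sed "s/^server_host=.*/server_host=$new_host/" "$file" > "$tmp" \
    && cat "$tmp" > "$file"   # write back in place, keeping owner and mode
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Example, run as root on each cluster node (placeholder host name):
#   set_server_host /etc/cloudera-scm-agent/config.ini new-cm.example.com
```

The agent reads config.ini at startup, so the change usually takes effect only after the cloudera-scm-agent service is restarted on that node.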

9.1.5 Migrate the CM Service roles of the original CM node to the new node

- Start the cloudera-scm-server and cloudera-scm-agent services on the new CM node

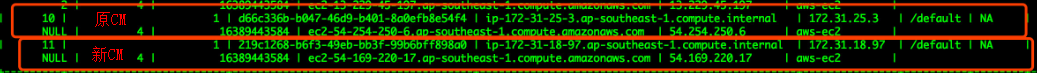

    [ec2-user@ip-172-31-18-97 253back]# sudo systemctl start cloudera-scm-server
    [ec2-user@ip-172-31-18-97 253back]# sudo systemctl start cloudera-scm-agent

- Note: after the cloudera-scm-agent service is started on the new CM node, the node's information is added to the HOSTS table of the CM database. Look up the HOST_ID that corresponds to the new CM node there

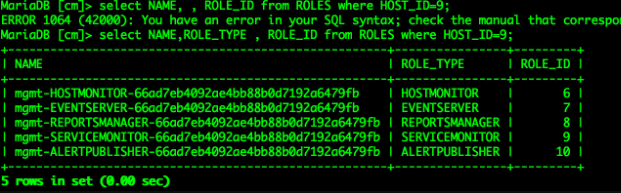

- Log in to the MySQL database and look up the host information of the new CM node in the HOSTS table of the Cloudera Manager database

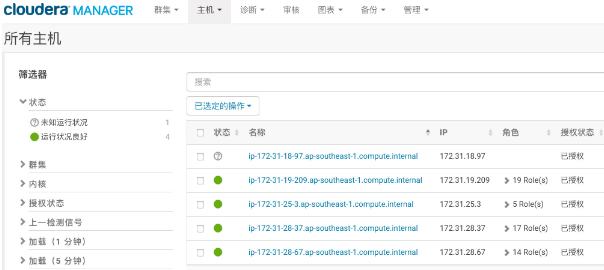

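- Before the migration, the CM management interface shows that the new CM node does not have any roles

- Use the following SQL statement to migrate the roles of the old CM node to the new CM node. In this example, 11 is the HOST_ID of the new CM node; substitute the value found in your own HOSTS table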

update ROLES set HOST_ID=11 where NAME like 'mgmt%';

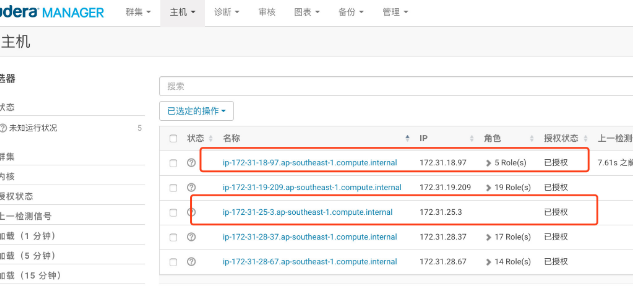
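Since the HOST_ID differs between environments, it can help to generate the statement from a validated value rather than typing it. The following is a sketch only: the function names are hypothetical, and the database name and user `cm` in the usage comment are taken from the db.properties example, so adjust them to your setup.

```bash
#!/usr/bin/env bash
# Sketch: build the SQL used in this step from a validated HOST_ID.
# Both function names are hypothetical helpers.

# Prints a query that lists the management roles and their current host.
role_preview_sql() {
  printf "SELECT NAME, HOST_ID FROM ROLES WHERE NAME LIKE 'mgmt%%';\n"
}

# Prints the UPDATE statement for the given numeric HOST_ID.
role_migration_sql() {
  local host_id="$1"
  # Reject anything that is not a plain non-negative integer.
  case "$host_id" in
    ''|*[!0-9]*) echo "HOST_ID must be a number" >&2; return 1 ;;
  esac
  printf "UPDATE ROLES SET HOST_ID=%s WHERE NAME LIKE 'mgmt%%';\n" "$host_id"
}

# Example (assumes the mysql client, database "cm" and user "cm"):
#   role_preview_sql      | mysql -u cm -p cm
#   role_migration_sql 11 | mysql -u cm -p cm
```

Running the preview query before and after the update is a cheap way to confirm that only the `mgmt` roles were moved.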

- After the update, the roles of the original CM node have been migrated to the new CM node

- Remove the original CM node from the cluster through the CM management interface

- Delete the original CM node

- Because Kerberos is configured in the cluster, the Kerberos server configuration needs to be updated. If Kerberos was not migrated, skip this step

- Start the Cloudera Management Service through the CM management interface

- If the database was migrated, update the database configuration of Hive, Hue, and Oozie accordingly. If no database migration was done, skip this step

- Restart the cluster after making the above modifications

9.2 Cluster service verification after migration

- Management interface of the original CM, showing the historical monitoring data

- Log in to the CM management platform and check that the cluster status is normal

- After the migration, the historical monitoring data of the cluster can still be viewed normally

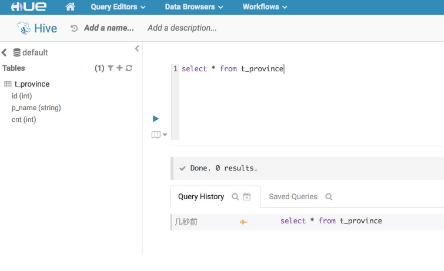

- Hue access and operation are normal

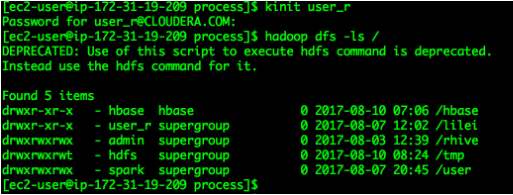

- HDFS access and operation are normal

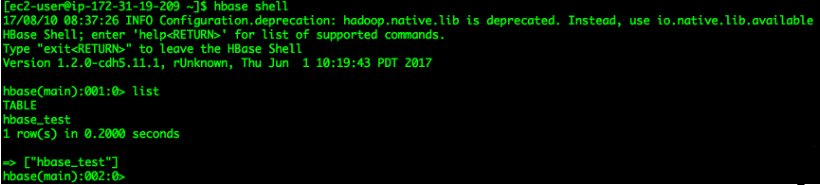

- HBase operations through Hue and the HBase shell work normally


9.3 Common problems and solutions

9.3.1 FAQ 1

- The problem is caused by abnormal communication between cloudera-scm-agent and supervisord.

- Solution:

- Kill the supervisord process on the node that raises the alarm, then restart the agent service

    [root@ip-172-31-28-37 cloudera-scm-agent]# ps -ef |grep supervisord
    root 26910 1 0 07:02 ? 00:00:00 /usr/lib64/cmf/agent/build/env/bin/python /usr/lib64/cmf/agent/build/env/bin/supervisord
    root 28806 28748 0 07:03 pts/0 00:00:00 grep --color=auto supervisord
    [root@ip-172-31-28-37 cloudera-scm-agent]# kill -9 26910
    [root@ip-172-31-28-37 cloudera-scm-agent]# systemctl restart cloudera-scm-agent
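When several nodes raise the same alarm, picking the PID out of the `ps -ef` output by eye gets tedious. A minimal sketch of a filter that does it, assuming the standard `ps -ef` column layout (PID in column 2, command starting at column 8); `supervisord_pids` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: read `ps -ef` output on stdin and print the PID of every
# process whose command line names supervisord, skipping the grep itself.
# supervisord_pids is a hypothetical helper name.
supervisord_pids() {
  awk '
    $8 != "grep" {
      # Fields 8..NF hold the command and its arguments.
      for (i = 8; i <= NF; i++) {
        if ($i ~ /(^|\/)supervisord$/) { print $2; next }
      }
    }
  '
}

# Example, run as root on the affected node:
#   for pid in $(ps -ef | supervisord_pids); do kill -9 "$pid"; done
#   systemctl restart cloudera-scm-agent
```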

9.3.2 FAQ 2

- The /opt/cloudera/csd directory was not migrated during the CM migration, which causes problems.

- Solution:

- Copy the /opt/cloudera/csd directory on the original CM node to the same directory on the new CM node

- Restart the cloudera-scm-server service

[ec2-user@ip-172-31-18-97 253back]# sudo systemctl start cloudera-scm-server

9.3.3 FAQ 3

- Service Monitor fails to start and reports an exception

- Cause: files in the /var/lib/cloudera-service-monitor directory went missing during the CM migration

- Solution:

- Overwrite the /var/lib/cloudera-service-monitor directory on the new CM node with the backed-up data

9.3.4 FAQ 4

- After the migration is complete and the cluster is started, the NameNode and ResourceManager services that are configured for high availability do not show their active and standby nodes correctly, and the HDFS summary information is not displayed correctly

- Cause: the cluster is configured with Kerberos, and no keytab was generated for the new CM node

- Solution:

- Stop all services on the CM node, then generate the keytab for the host

9.4 Summary

- Migrating CM without stopping the cluster services requires the following conditions:

- The hostname and IP address of the new CM node must be the same as those of the old CM node;

- If the database needs to be migrated, the hostname and IP address of the new database must be the same as those of the original database, and the data of the original database must be imported into the new database;

- If the Kerberos MIT KDC needs to be migrated, the hostname and IP address of the new MIT KDC node must be the same as those of the old MIT KDC node, and the data of the old MIT KDC database must be imported into the new MIT KDC database;

- Note: if only the CM node is migrated (the first condition), the Hadoop cluster services do not need to be restarted and existing jobs are not affected. If the database or the MIT KDC is also migrated (the second or third condition), cluster jobs are temporarily affected, but the Hadoop cluster services still do not need to be restarted.
