1. Distributed file system

A distributed file system is a file system whose physical storage resources are not necessarily attached to the local node; instead, they are connected to the node over a network.

It gathers shared folders scattered across different computers on a LAN into a single folder (a virtual shared folder).

To access these shared folders, users only need to open the virtual shared folder, where they can see every shared folder linked to it. Users cannot tell that the shared files are actually spread across different computers.

The advantages of a distributed file system are centralized access, simplified operation, data disaster recovery, and improved file access performance.

2. Introduction to MooseFS

MooseFS is a fault-tolerant distributed file system. It can spread data across several different physical media while presenting users with a single access interface. To users it behaves like any other Unix-like file system and supports:

- a hierarchical file structure (directory tree);
- POSIX file attributes (permissions, last access time, modification time);
- special files (block devices, character devices, pipes, and sockets);
- symbolic links (a file name pointing to a target file) and hard links (different file names referring to the same data);
- access control to the file system based on IP address and/or password.

MooseFS has the following characteristics:

- high reliability: multiple copies of the data can be stored in different places;
- scalability: computers or disks can be added dynamically to increase the capacity and throughput of the system;
- high controllability: the system can be configured with how long deleted files are retained before they are removed for good;
- traceability: it can generate snapshots of files for different operations on them (write/access).

3. Architecture of MooseFS

Four modules

Metadata server (Master): manages the file system and maintains the metadata of the whole system.

Metadata log server (Metalogger): backs up the Master server's changelog files, which are named changelog_ml.*.mfs. If the Master's data is lost or damaged, these files can be retrieved from the metalogger to repair it.

Data storage server (Chunk Server): the server that actually stores the data. Files are split into chunks when stored, and the chunks are replicated between chunk servers. The more chunk servers there are, the larger the usable capacity, the higher the reliability, and the better the performance.

Client: mounts the MFS file system much like an NFS share, and is used the same way.

How it works

Metadata is kept in the master server's memory and also saved to its disk (as a binary file that is updated periodically plus log files that grow continuously). The binary file and the log files are also synchronized to the metalogger servers.

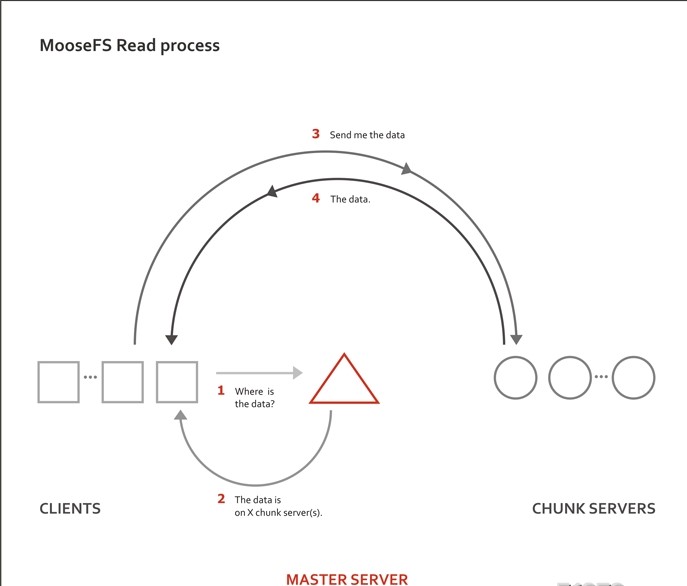

MFS read process

(1) The client sends a read request to the metadata server.
(2) The metadata server tells the client where the data is stored (the IP address of the chunk server and the chunk number). A large file is split into chunks that are stored on different chunk servers.
(3) The client requests the data from that chunk server.
(4) The chunk server sends the data to the client.
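Step (2) is resolved per chunk. As a minimal sketch, assuming the 64 MB (64 MiB) maximum chunk size mentioned in the experiments section, the chunk that holds a given byte offset follows from integer division. `chunk_index` and `chunk_offset` are hypothetical helper names used for illustration, not MooseFS commands.

```bash
# Assumption: MooseFS chunks hold at most 64 MiB = 67108864 bytes.
CHUNK_SIZE=$((64 * 1024 * 1024))

# chunk_index OFFSET (hypothetical helper) -> zero-based number of the chunk
# that holds byte OFFSET of a file
chunk_index() {
  echo $(( $1 / CHUNK_SIZE ))
}

# chunk_offset OFFSET (hypothetical helper) -> position of byte OFFSET
# inside that chunk
chunk_offset() {
  echo $(( $1 % CHUNK_SIZE ))
}

echo "chunk $(chunk_index 100000000), offset $(chunk_offset 100000000)"
```

Running it prints `chunk 1, offset 32891136`: byte 100,000,000 lies in the second chunk, so that is the chunk whose location the client has to ask the master for.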

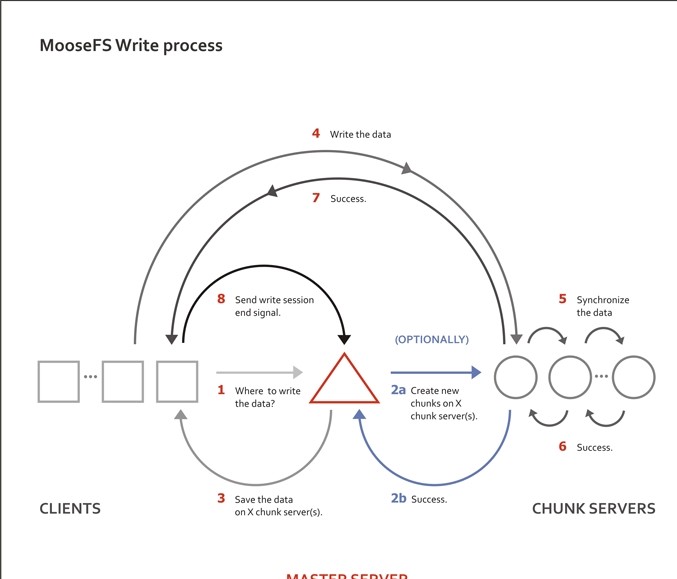

MFS write process

Writing covers both creating new files and modifying existing ones:

(1) The client sends a write request to the metadata server.
(2) The metadata server interacts with the chunk servers: when the required chunks do not exist yet, it has some of the chunk servers create new chunks, and each chunk server reports back to the metadata server once the chunk has been created successfully.
(3) The metadata server tells the client which chunks on which chunk servers it may write to (the number of copies is maintained by the master).
(4) The client writes the data to the specified chunk server.
(5) That chunk server synchronizes the data with the other chunk servers as required; once synchronization succeeds, it tells the client that the write succeeded.
(6) The client tells the metadata server that the write is complete.
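A write that crosses a chunk boundary involves more than one chunk, and each of those chunks ends up on as many chunk servers as the goal requires. The sketch below assumes 64 MiB chunks; `chunks_touched` and `replica_writes` are hypothetical helpers that only do the arithmetic, they do not talk to MooseFS.

```bash
# Assumption: MooseFS chunks hold at most 64 MiB.
CHUNK_SIZE=$((64 * 1024 * 1024))

# chunks_touched OFFSET LENGTH (hypothetical helper) -> space-separated
# indexes of the chunks that a write of LENGTH bytes at byte OFFSET falls into
chunks_touched() {
  local offset=$1 length=$2 first last i out=""
  (( length > 0 )) || { echo ""; return; }   # empty write touches nothing
  first=$(( offset / CHUNK_SIZE ))
  last=$(( (offset + length - 1) / CHUNK_SIZE ))
  for (( i = first; i <= last; i++ )); do
    out+="$i "
  done
  echo "${out% }"
}

# replica_writes OFFSET LENGTH GOAL (hypothetical helper) -> number of chunk
# copies written once every touched chunk is replicated up to GOAL copies
replica_writes() {
  local n=0 c
  for c in $(chunks_touched "$1" "$2"); do
    n=$(( n + 1 ))
  done
  echo $(( n * $3 ))
}
```

For example, a 10 MiB write starting at 60 MiB (`chunks_touched 62914560 10485760`) touches chunks `0 1`, so with goal 2 four chunk copies are written.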

Raw read/write speed obviously depends on the performance of the hard disks, the capacity and topology of the network, and the rest of the hardware used.

The higher the throughput of the disks and the network, the better the performance of the whole system.

4. Experimental environment

MFS deployment

Deploying the master

Official installation documentation

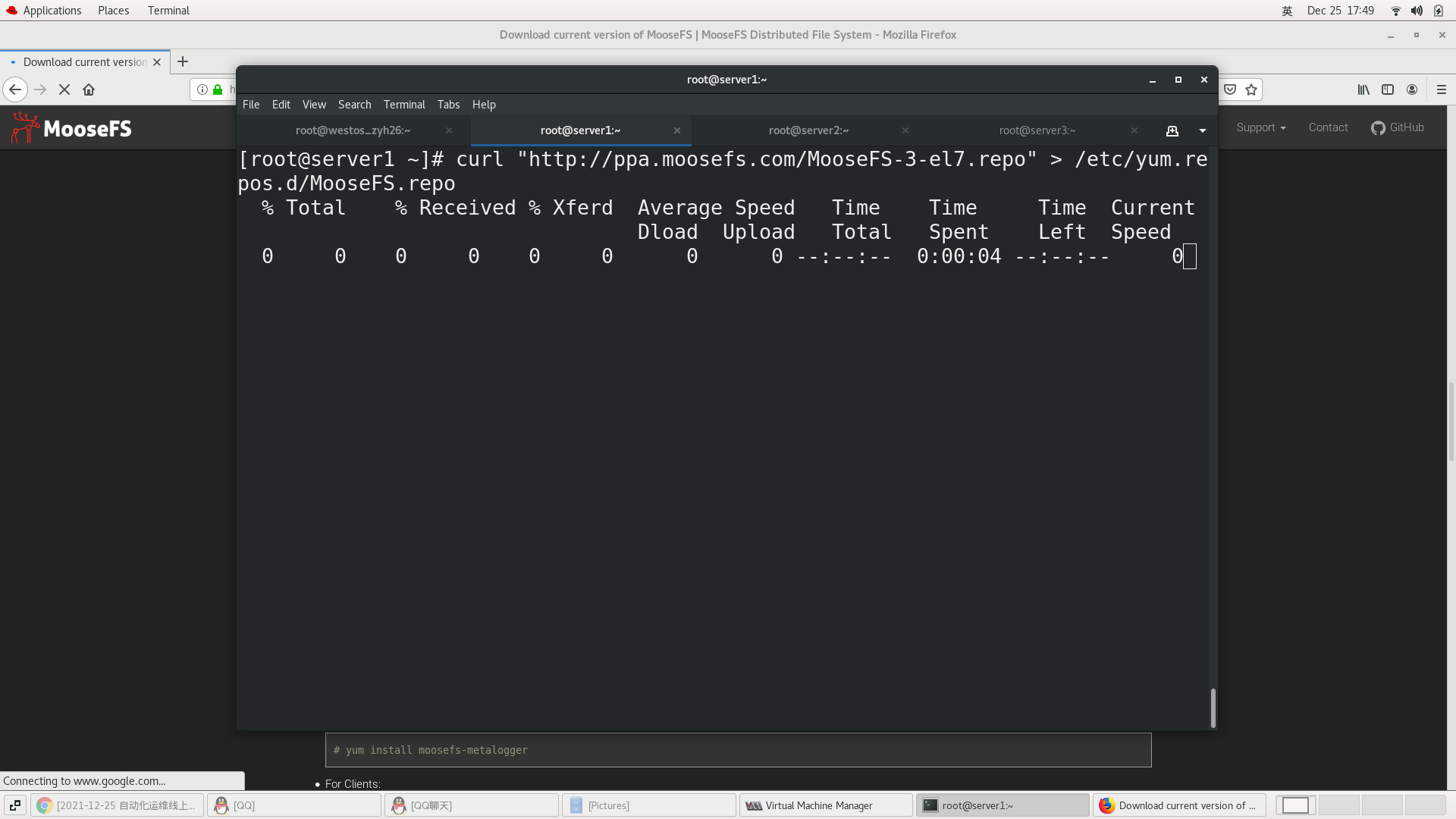

First, make sure the virtual machines can reach the Internet by running the following command on the physical host:

iptables -t nat -I POSTROUTING -s 172.25.26.0/24 -j MASQUERADE
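The MASQUERADE rule only has an effect if the physical host actually forwards packets between the VM network and its uplink. A small sketch of that check, assuming a Linux host; `ip_forward_enabled` is a hypothetical helper, while `sysctl -w net.ipv4.ip_forward=1` is the standard way to switch forwarding on until the next reboot.

```bash
# ip_forward_enabled [FILE] (hypothetical helper): succeed if IPv4 forwarding
# is on. FILE defaults to the kernel's live setting; it is a parameter only
# so the check can be exercised against a test file.
ip_forward_enabled() {
  local f=${1:-/proc/sys/net/ipv4/ip_forward}
  [[ -r $f && $(<"$f") == 1 ]]
}

if ! ip_forward_enabled; then
  echo "IPv4 forwarding is off; enable it with: sysctl -w net.ipv4.ip_forward=1" >&2
fi
```

To make the setting survive reboots, put `net.ipv4.ip_forward = 1` in a file under /etc/sysctl.d/.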

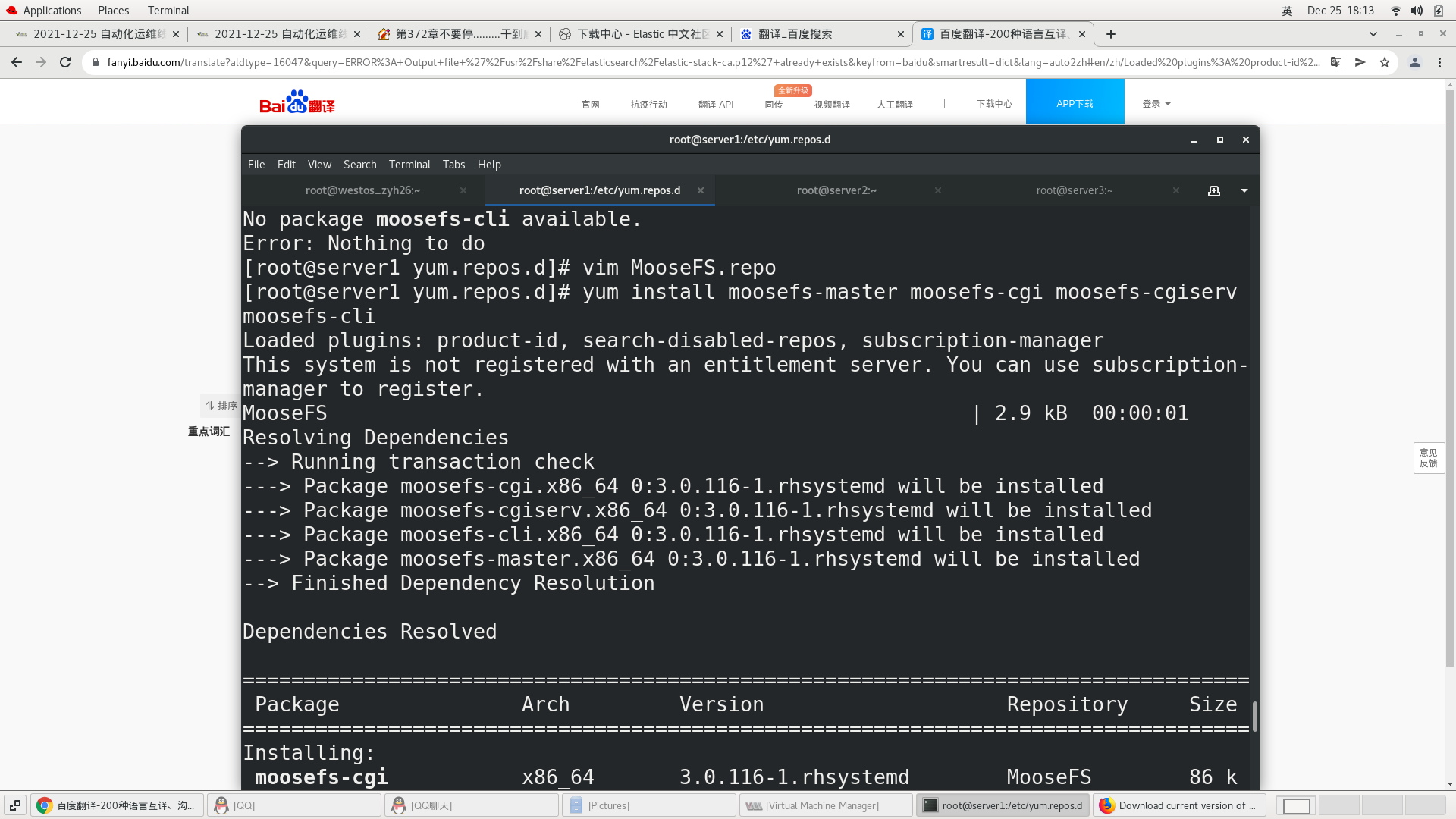

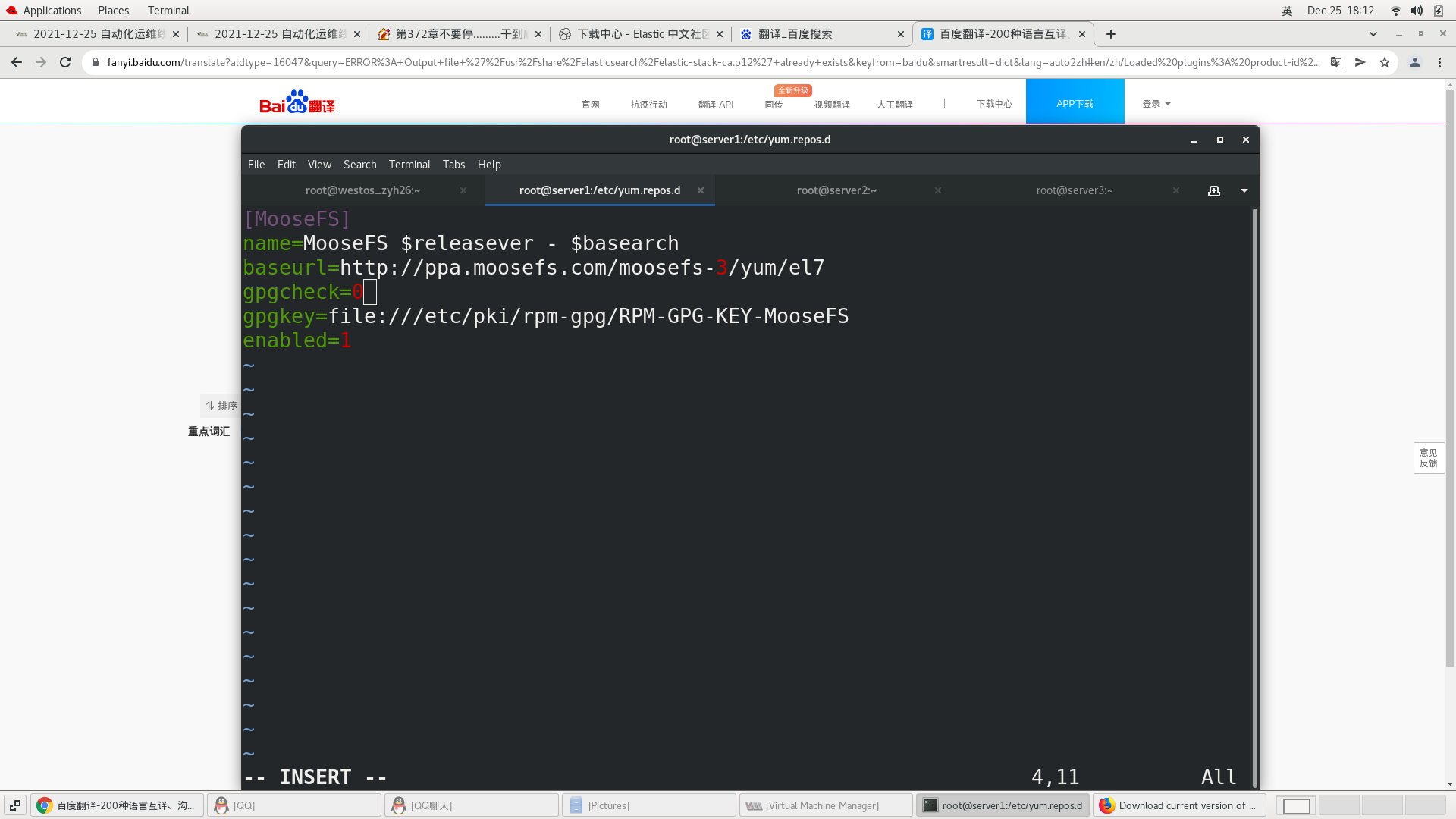

curl "http://ppa.moosefs.com/MooseFS-3-el7.repo" > /etc/yum.repos.d/MooseFS.repo   ##Download the repo file
cd /etc/yum.repos.d/
vim MooseFS.repo
cat MooseFS.repo
[MooseFS]
name=MooseFS $releasever - $basearch
baseurl=http://ppa.moosefs.com/moosefs-3/yum/el7
gpgcheck=0
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-MooseFS
enabled=1
yum install -y moosefs-master   ##Install the MFS master
yum install -y moosefs-cgi moosefs-cgiserv moosefs-cli   ##Install the web monitor (CGI) and the CLI tools

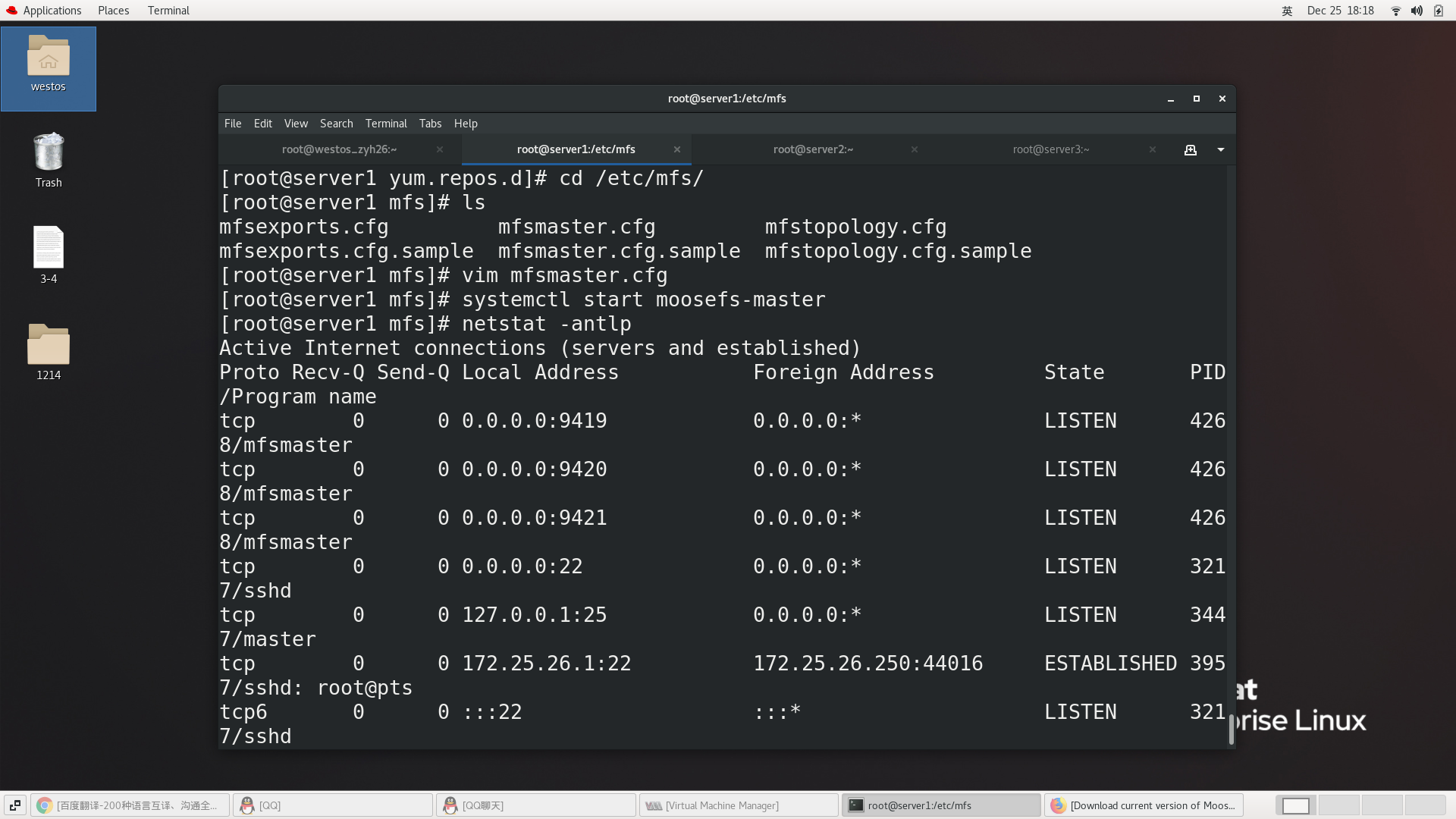

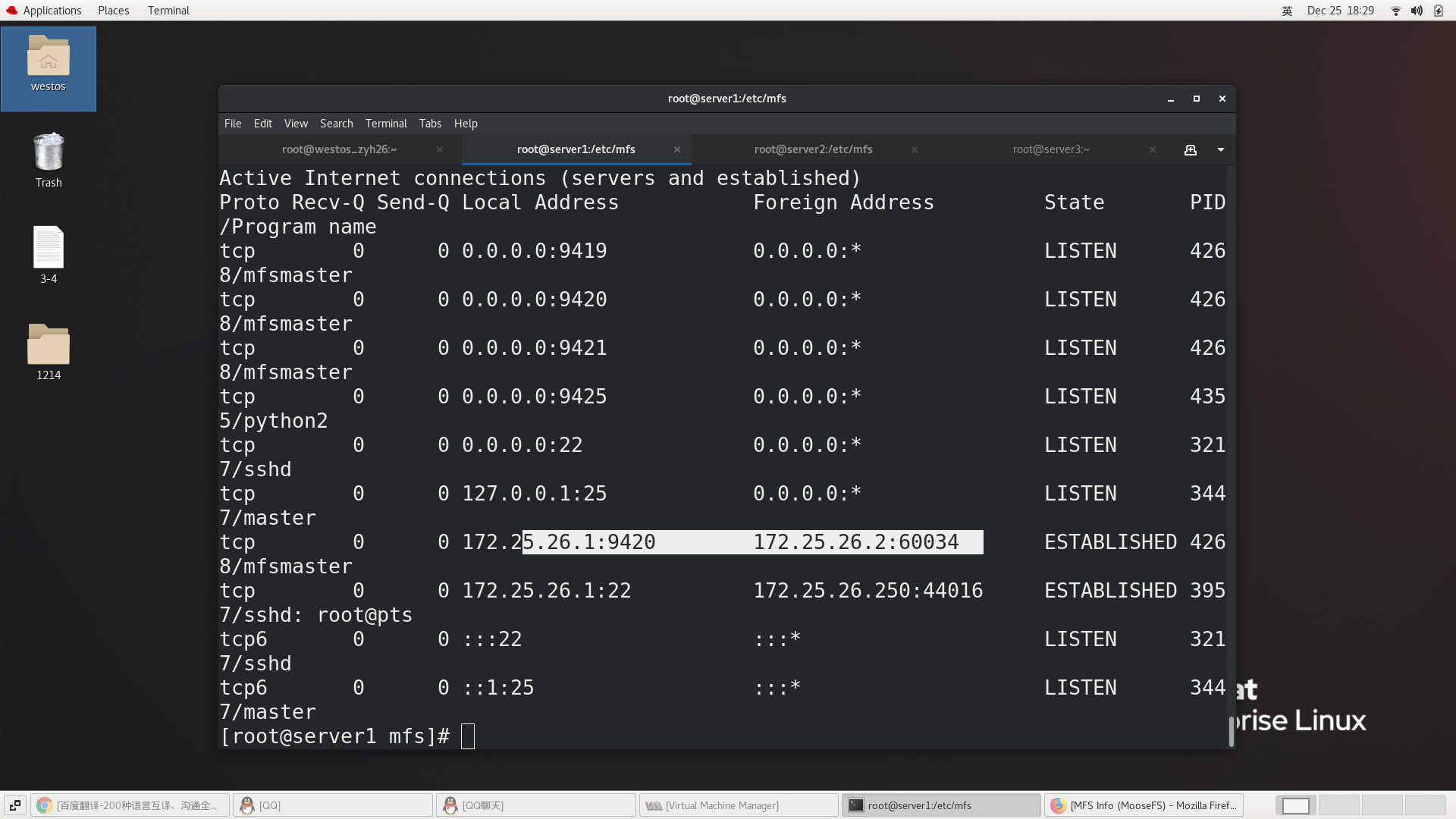

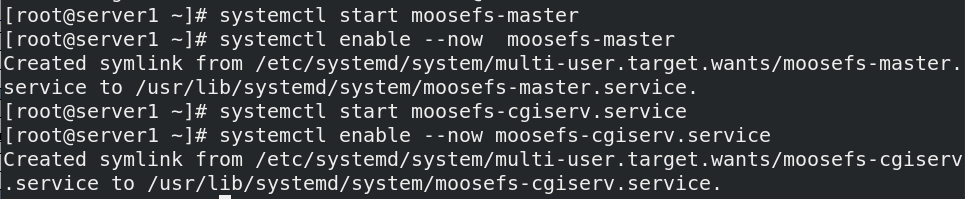

systemctl start moosefs-master   ##Start the master
vim /etc/hosts   ##Name resolution is required
172.25.26.1 server1 mfsmaster
systemctl start moosefs-cgiserv.service   ##Web monitoring
netstat -antlp

The master-side ports are:

- 9419: the port the metalogger uses to communicate with the metadata server; if the master goes down, the master service can be taken over using the metalogger's logs
- 9420: the port chunk servers connect to
- 9421: the port clients connect to when mounting
- 9425: the web interface port for monitoring every node
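Instead of reading the whole `netstat -antlp` listing by eye, the port check can be scripted. This is a sketch: `port_role` and `check_mfs_ports` are hypothetical helpers that parse the text output of `netstat -antlp` or `ss -ltn` and simply look for a LISTEN line containing each port listed above.

```bash
# port_role PORT (hypothetical helper) -> MooseFS component behind the port
port_role() {
  case $1 in
    9419) echo metalogger ;;
    9420) echo chunkserver ;;
    9421) echo client ;;
    9425) echo cgiserv ;;
    *)    echo unknown ;;
  esac
}

# check_mfs_ports (hypothetical helper): read `netstat -antlp` or `ss -ltn`
# output on stdin and report whether each master-side port is listening.
check_mfs_ports() {
  local listing port state
  listing=$(cat)
  for port in 9419 9420 9421 9425; do
    # the port must follow ':' or '.' and be followed by whitespace,
    # and the same line must be in LISTEN state
    if grep -E "[:.]${port}[[:space:]]" <<<"$listing" | grep -q LISTEN; then
      state=listening
    else
      state=missing
    fi
    echo "$port $(port_role "$port") $state"
  done
}
```

Usage on the master: `netstat -antlp | check_mfs_ports`. Port 9425 only shows up once moosefs-cgiserv is running.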

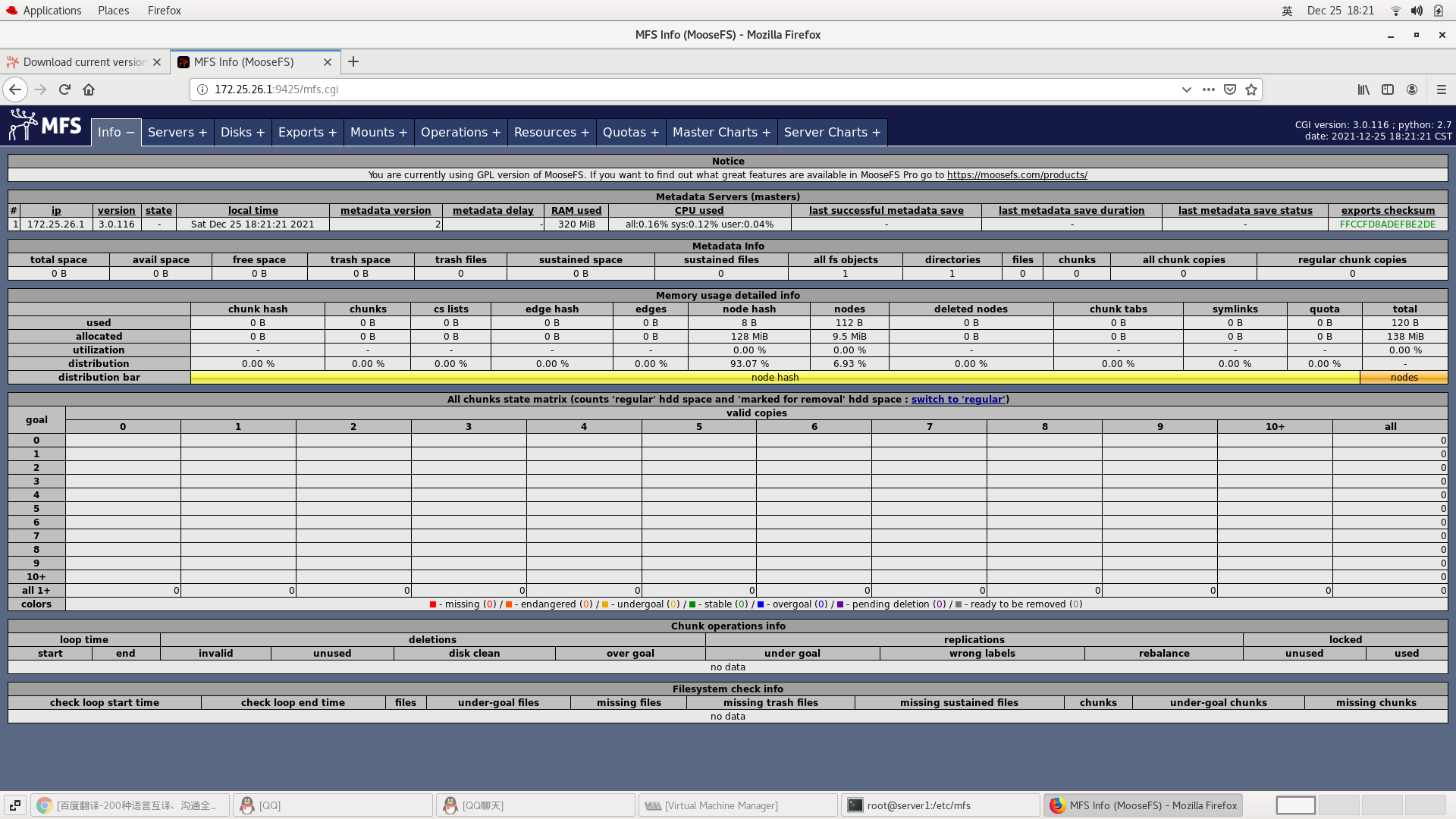

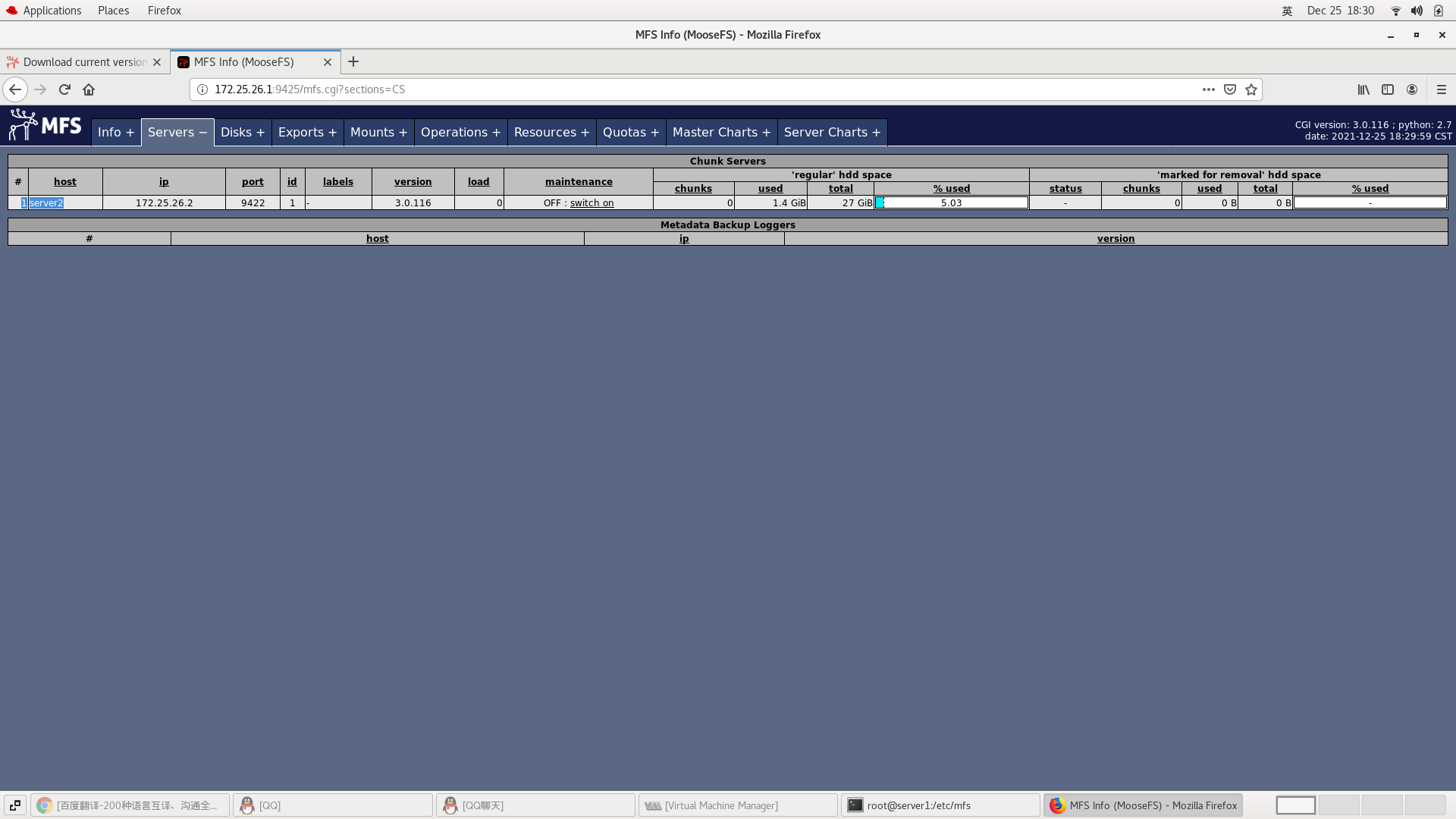

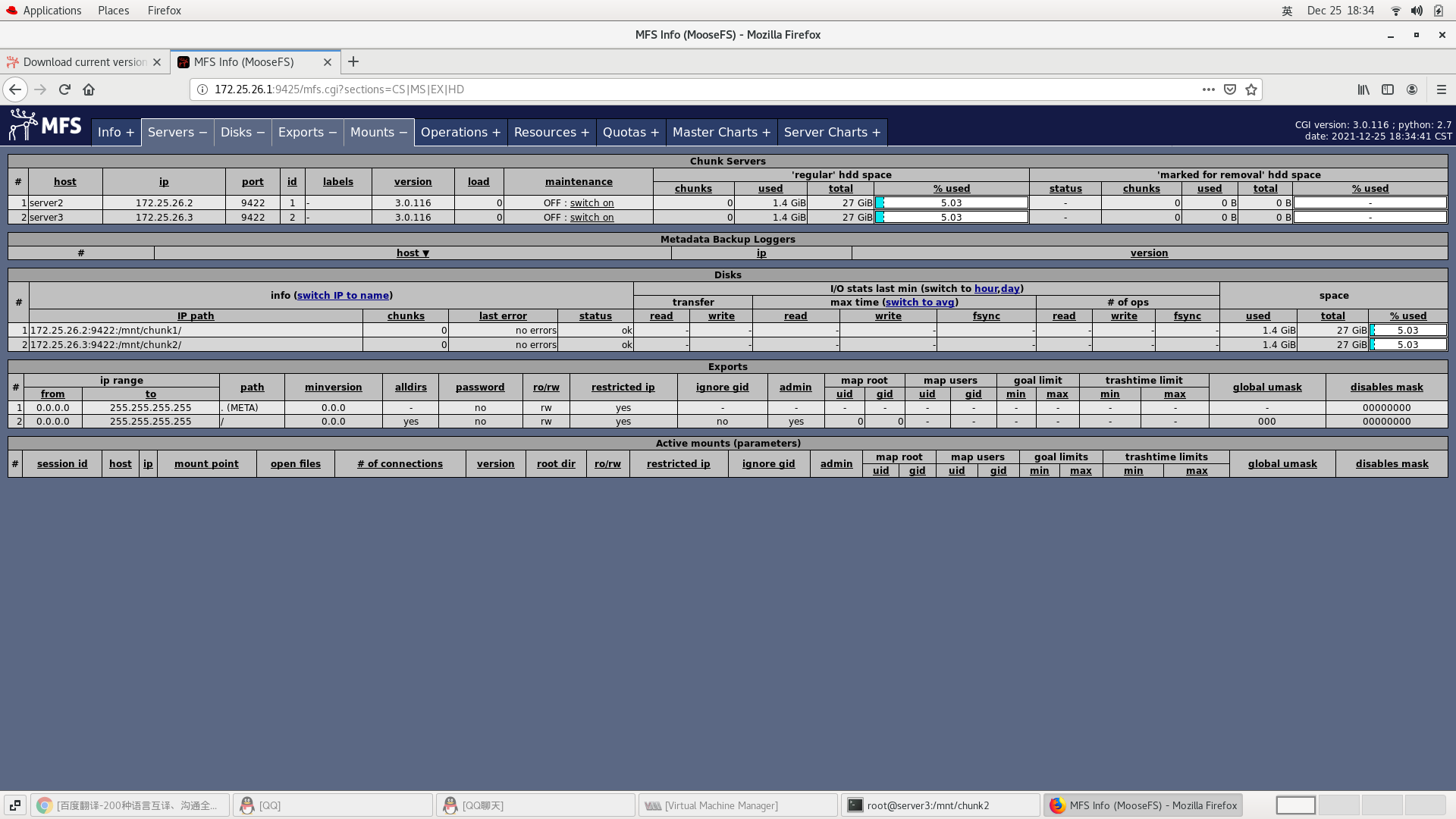

Visit http://172.25.26.1:9425/mfs.cgi to open the MFS CGI monitor.

Chunk server (slave) deployment

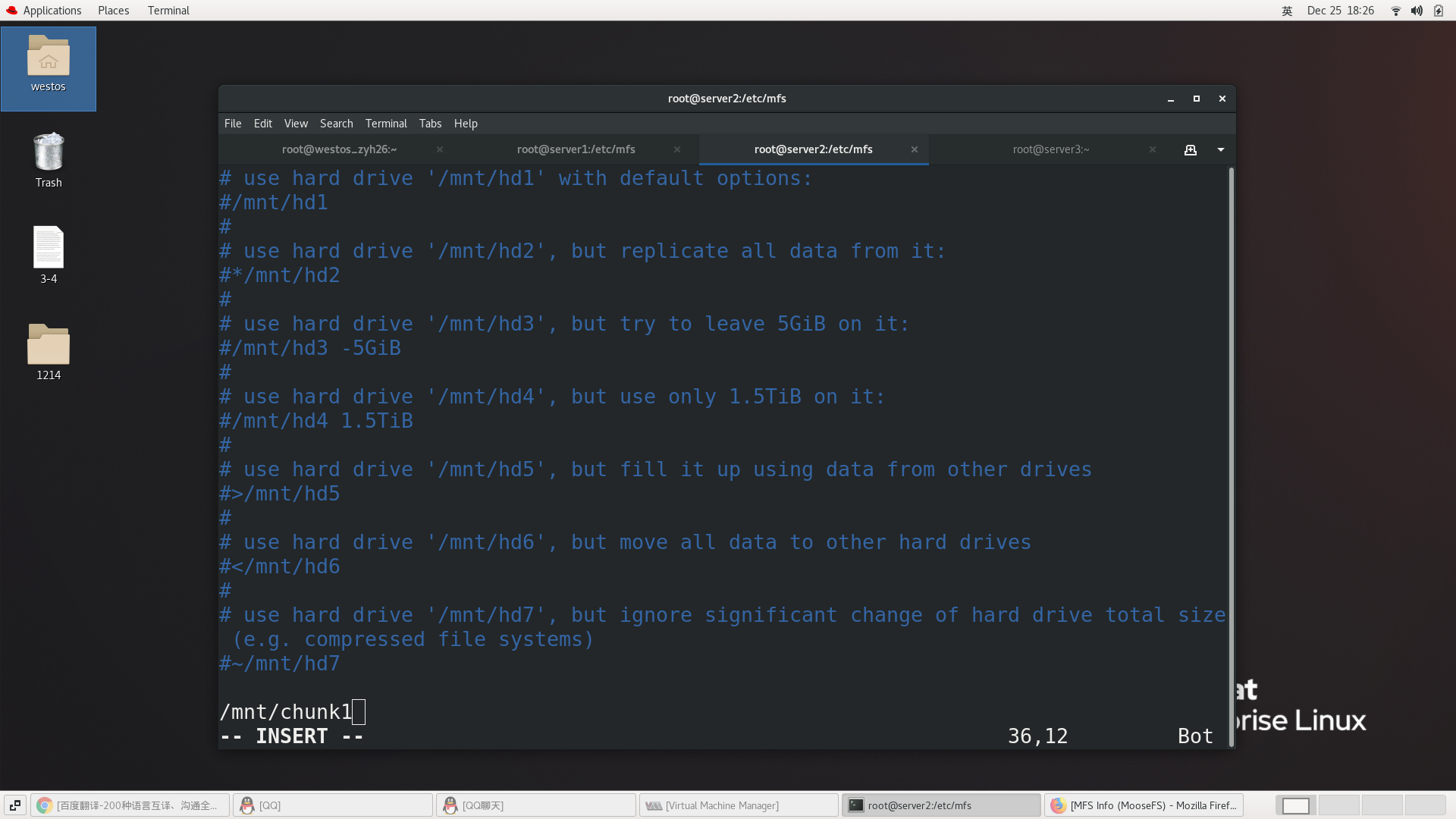

Note: /mnt/chunk1 and /mnt/chunk2 are the storage directories for MFS. Each can simply be a separate directory on the machine, but ideally it should be a dedicated hard disk or a RAID volume; at a minimum, use a separate partition.

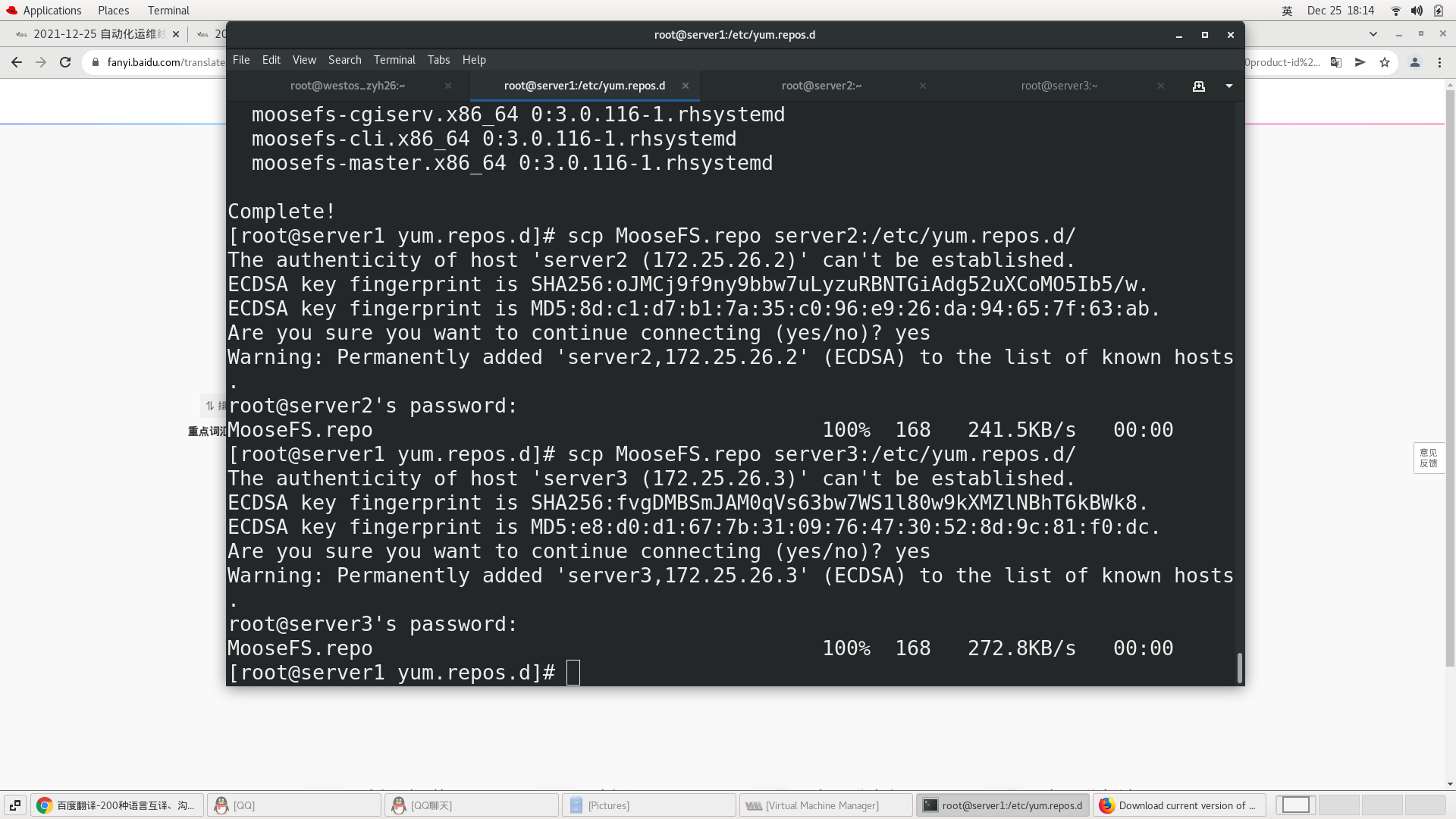

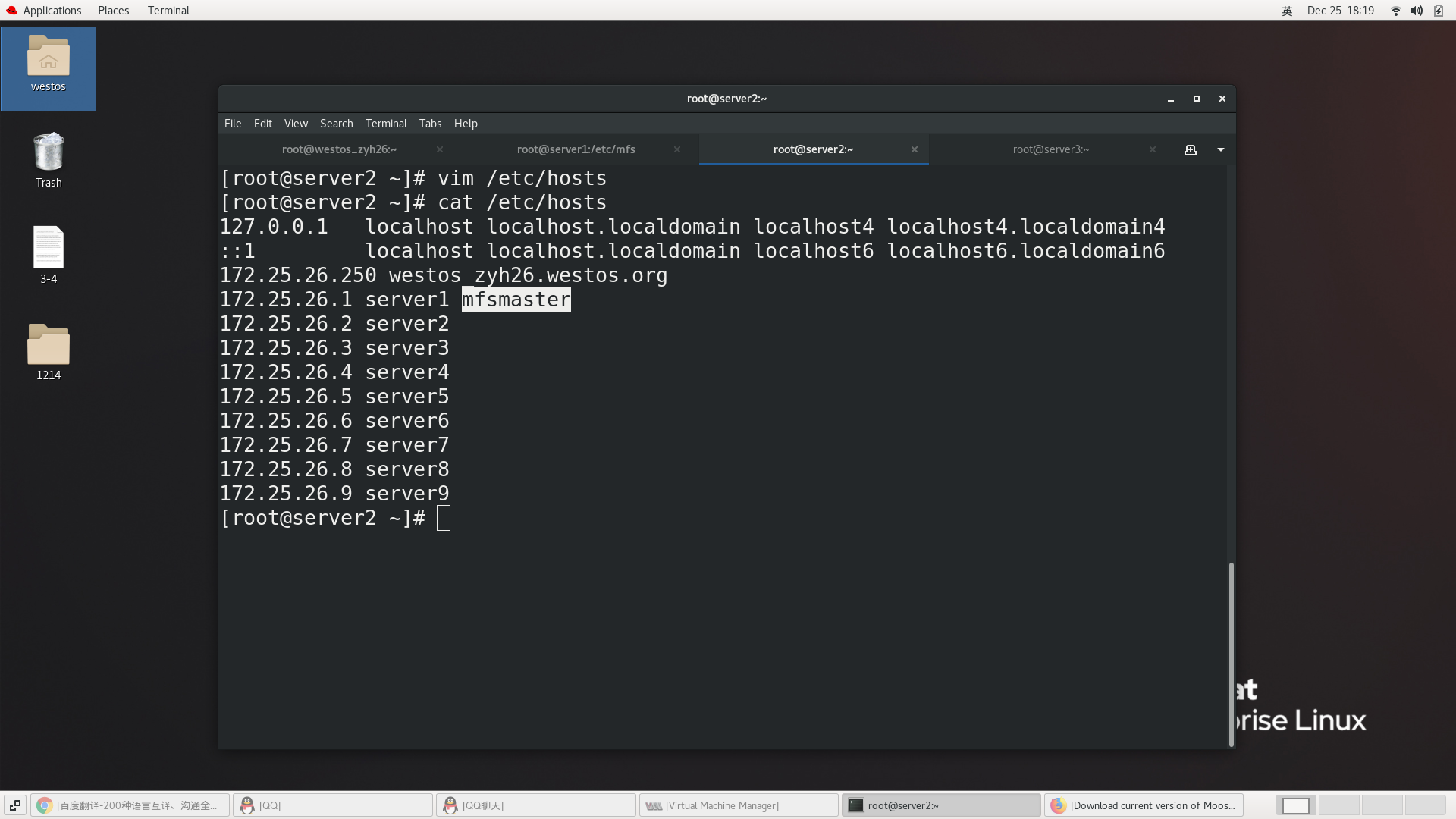

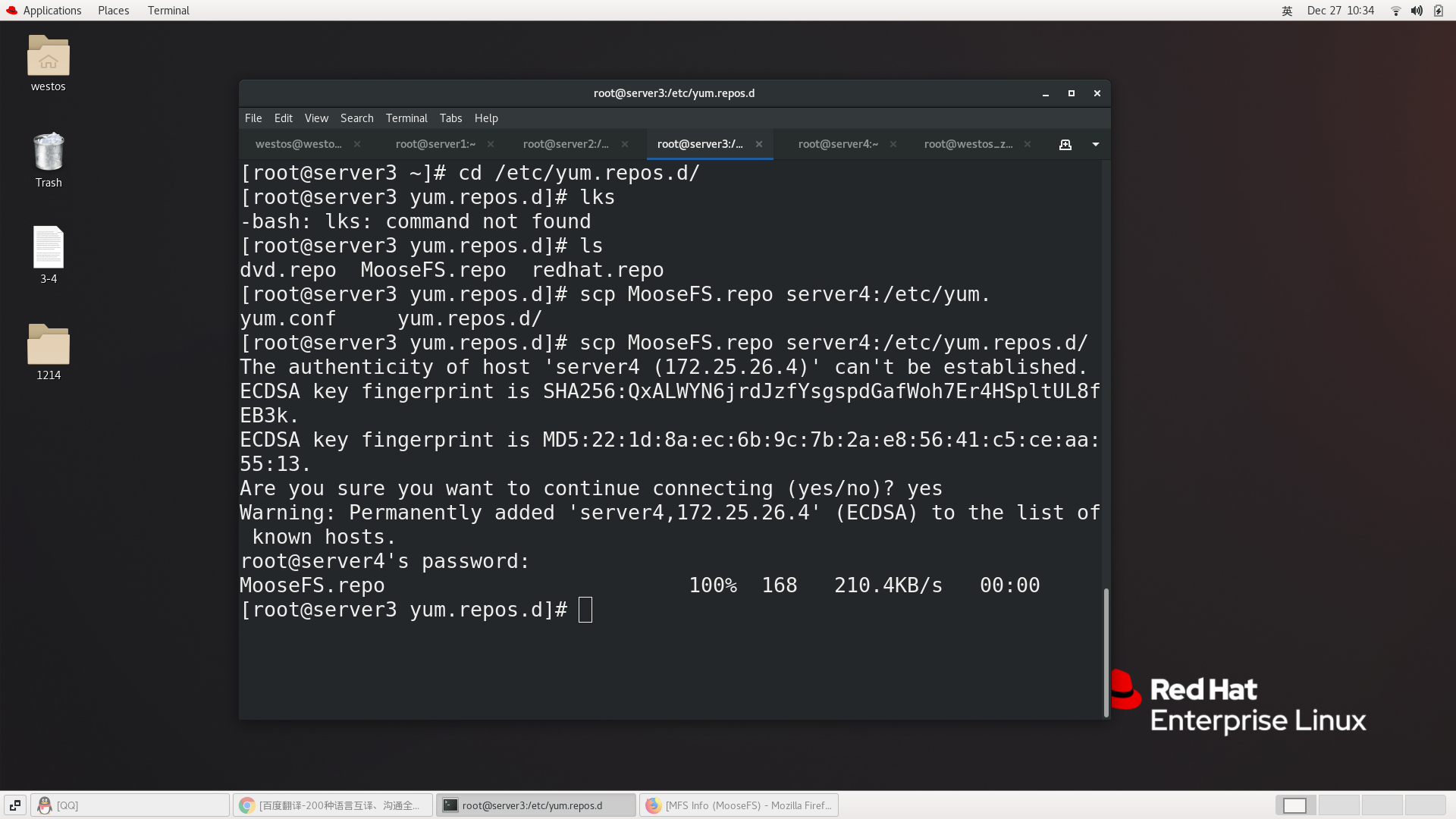

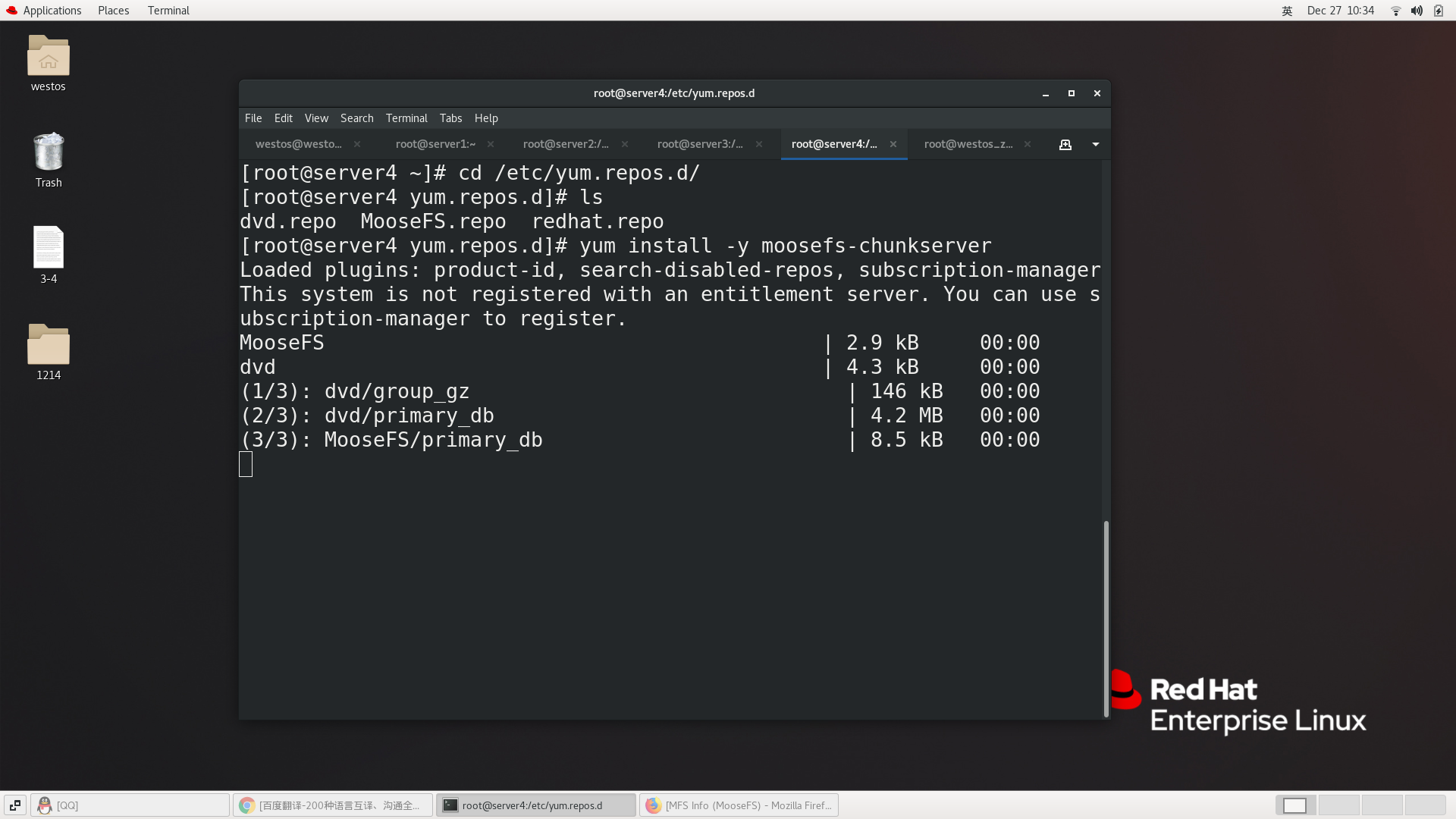

server2

[root@server1 yum.repos.d]# scp MooseFS.repo server2:/etc/yum.repos.d/
ssh: Could not resolve hostname server2: Name or service not known
lost connection
[root@server1 yum.repos.d]# scp MooseFS.repo demo2:/etc/yum.repos.d/
[root@server1 yum.repos.d]# scp MooseFS.repo demo3:/etc/yum.repos.d/
[root@server2 ~]# yum install moosefs-chunkserver   ##Install the chunk server on both slave nodes
[root@server3 ~]# yum install moosefs-chunkserver   ##Install the chunk server
[root@server3 ~]# vim /etc/hosts   ##Add name resolution

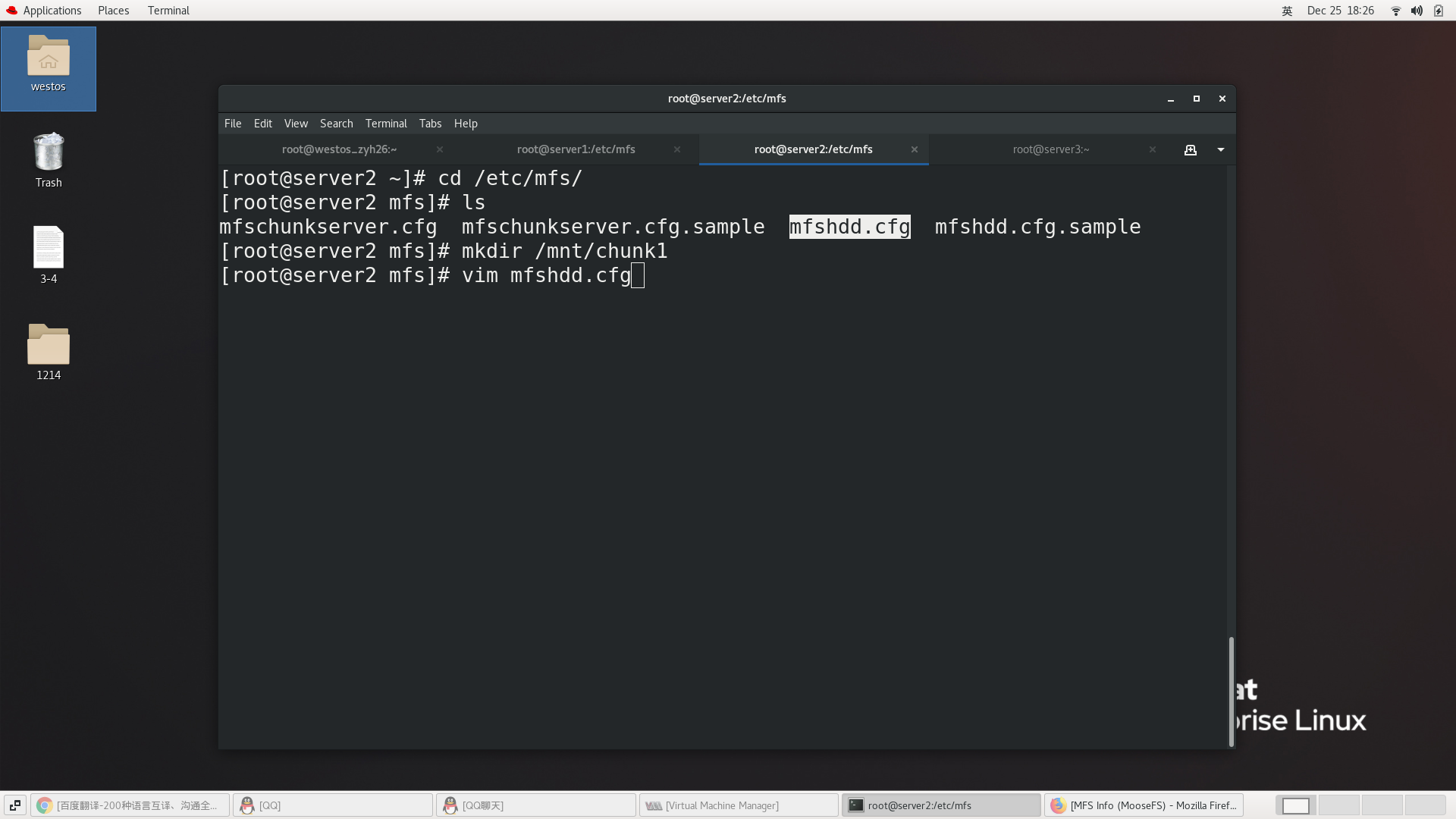

On server2, add a virtual disk to use as distributed storage:
[root@server2 mfs]# fdisk /dev/vdb   ##Create a single partition (the defaults are fine)
[root@server2 mfs]# mkfs.xfs /dev/vdb1   ##Format
[root@server2 mfs]# mkdir /mnt/chunk1
[root@server2 mfs]# mount /dev/vdb1 /mnt/chunk1/   ##Mount
[root@server2 mfs]# blkid
/dev/vdb1: UUID="e94e65fa-35cd-4a40-9ef8-fa6ef42ef128" TYPE="xfs"
[root@server2 mfs]# vim /etc/fstab
[root@server2 mfs]# cat /etc/fstab   ##Permanent mount
UUID="e94e65fa-35cd-4a40-9ef8-fa6ef42ef128" /mnt/chunk1 xfs defaults 0 0
[root@server2 mfs]# mount -a   ##Re-read /etc/fstab
[root@server2 mfs]# id mfs   ##Check the mfs user before setting ownership
uid=997(mfs) gid=995(mfs) groups=995(mfs)
[root@server2 mfs]# chown mfs.mfs /mnt/chunk1/
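The fstab line above is copied by hand from the `blkid` output. A sketch that derives it instead; `fstab_entry` is a hypothetical helper that parses the `UUID="…"` and `TYPE="…"` fields of one `blkid` line. (`blkid -s UUID -o value /dev/vdb1` is a real alternative that prints only the UUID.)

```bash
# fstab_entry MOUNTPOINT (hypothetical helper): read one line of
# `blkid DEVICE` output on stdin and print an /etc/fstab entry that mounts
# the filesystem by UUID with default options.
fstab_entry() {
  local mountpoint=$1 line uuid fstype
  IFS= read -r line
  uuid=$(sed -n 's/.* UUID="\([^"]*\)".*/\1/p' <<<"$line")
  fstype=$(sed -n 's/.* TYPE="\([^"]*\)".*/\1/p' <<<"$line")
  if [[ -z $uuid || -z $fstype ]]; then
    echo "could not parse blkid output" >&2
    return 1
  fi
  echo "UUID=$uuid $mountpoint $fstype defaults 0 0"
}
```

Usage on server2: `blkid /dev/vdb1 | fstab_entry /mnt/chunk1 >> /etc/fstab`, then `mount -a` to check the entry before rebooting.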

Start the service:
[root@server2 mfs]# systemctl start moosefs-chunkserver
Check whether server2 has connected to the master on server1:
[root@demo2 ~]# cd /etc/mfs/
[root@demo2 mfs]# ls
mfschunkserver.cfg mfschunkserver.cfg.sample mfshdd.cfg mfshdd.cfg.sample
[root@demo2 mfs]# vim mfshdd.cfg   ##Set the storage directory
[root@demo2 mfs]# grep -v ^# mfshdd.cfg
/mnt/chunk1
[root@demo2 mfs]# systemctl restart moosefs-chunkserver   ##Restart the service
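A chunk server cannot use a directory listed in mfshdd.cfg that is missing or not writable, so a quick sanity check before restarting can save a round trip to the CGI monitor. This is a sketch: `check_hdd_cfg` is a hypothetical helper that only understands plain paths, comment lines, and blank lines, not any other syntax mfshdd.cfg may accept.

```bash
# check_hdd_cfg CFG (hypothetical helper): for every storage path listed in
# an mfshdd.cfg-style file, check that it is an existing directory writable
# by the current user. Comment and blank lines are skipped.
check_hdd_cfg() {
  local cfg=$1 line status=0
  local comment_re='^[[:space:]]*#'
  while IFS= read -r line || [[ -n $line ]]; do
    [[ -z ${line//[[:space:]]/} ]] && continue   # blank line
    [[ $line =~ $comment_re ]] && continue       # comment line
    if [[ -d $line && -w $line ]]; then
      echo "ok: $line"
    else
      echo "problem: $line (missing or not writable)" >&2
      status=1
    fi
  done < "$cfg"
  return "$status"
}
```

Because root can write almost anywhere, run it as the mfs user (for example through `sudo -u mfs bash`) to see the directories the way the chunk server does.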

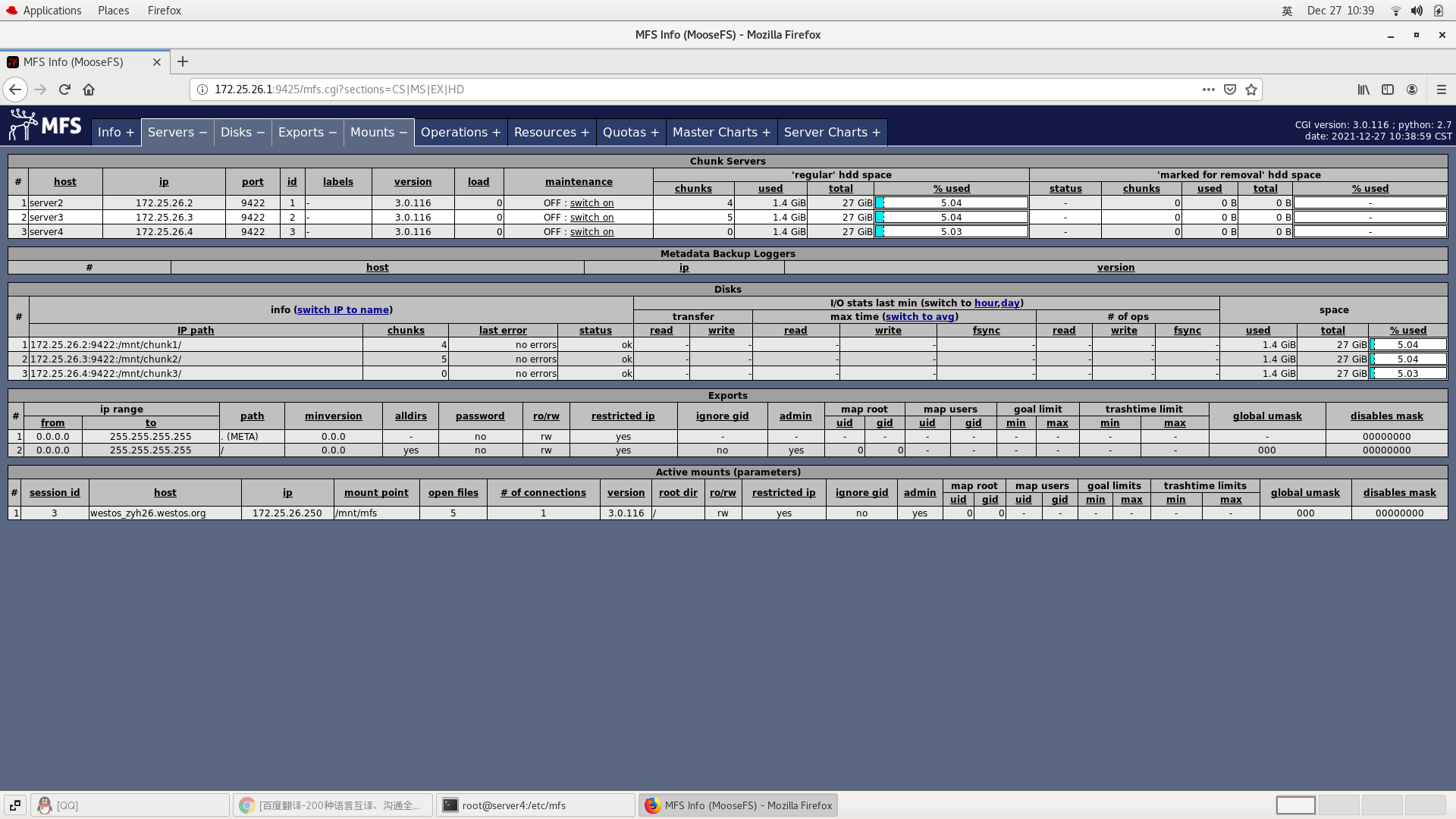

View status

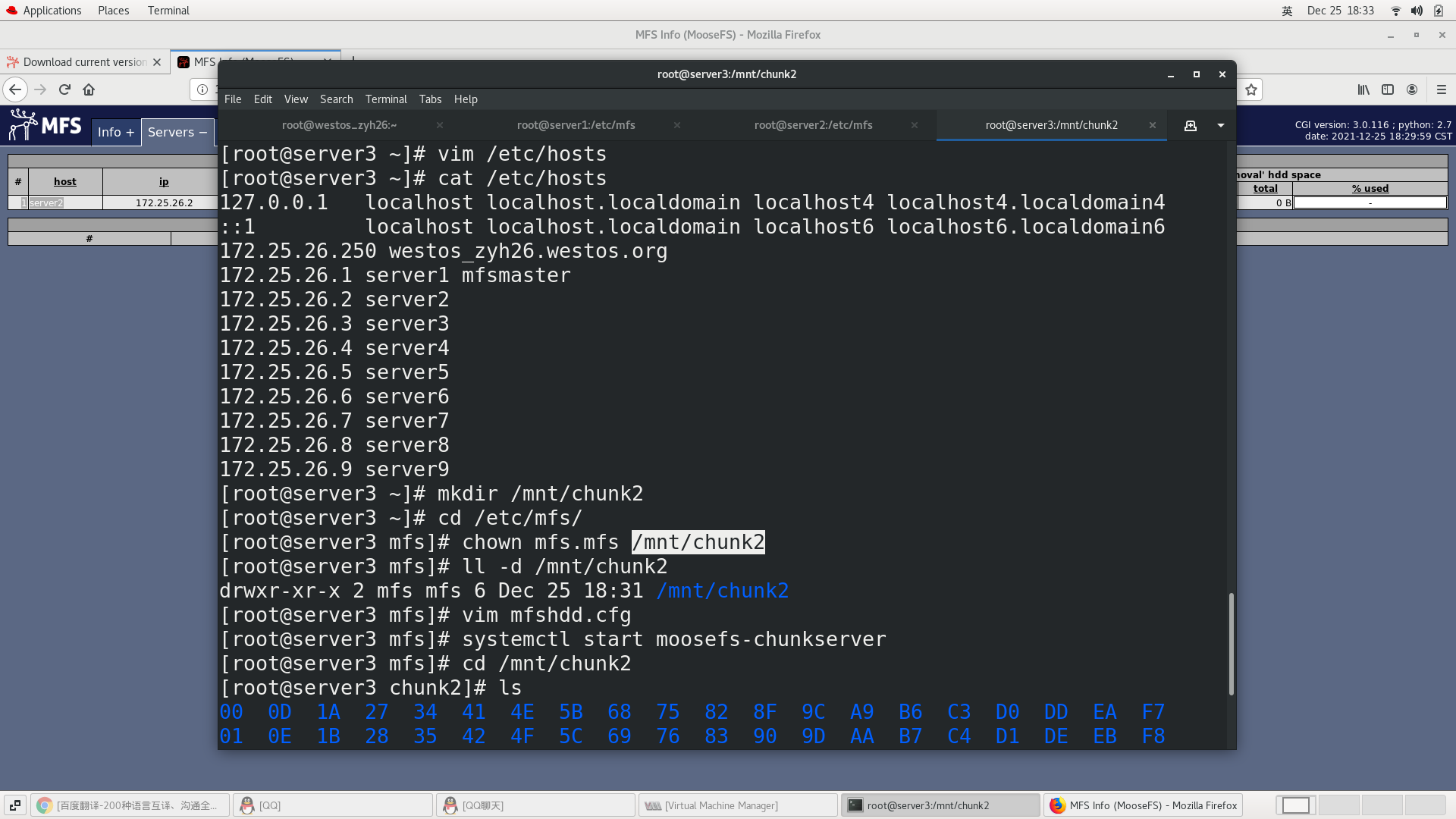

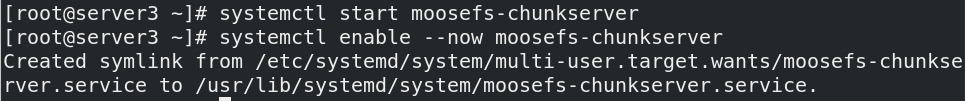

server3

[root@server3 ~]# cd /etc/mfs/
[root@server3 mfs]# ls
mfschunkserver.cfg mfschunkserver.cfg.sample mfshdd.cfg mfshdd.cfg.sample
[root@server3 mfs]# mkdir /mnt/chunk2
[root@server3 mfs]# vim mfshdd.cfg
[root@server3 mfs]# grep -v ^# mfshdd.cfg
/mnt/chunk2
[root@server3 mfs]# chown mfs.mfs /mnt/chunk2
[root@server3 mfs]# df -h /
Filesystem             Size  Used Avail Use% Mounted on
/dev/mapper/rhel-root   17G  1.2G   16G   7% /
[root@server3 mfs]# systemctl start moosefs-chunkserver

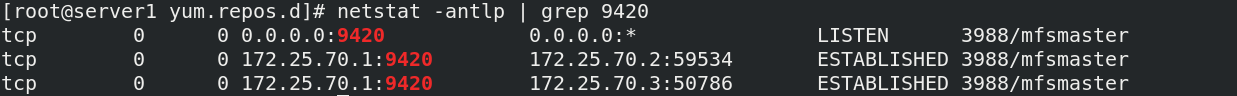

Check port 9420 on the master to confirm that server2 and server3 are connected.

On the master, CPU load comes from the size of the files in the distributed system, while memory load comes from the number of files.
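To put the memory point into numbers, here is a back-of-the-envelope sketch. The figure of roughly 300 MiB of master RAM per million files is an assumed rule of thumb, not something measured in this lab; check the MooseFS hardware recommendations for your version, which is why it is a parameter of the hypothetical `estimate_master_ram_mib` helper.

```bash
# estimate_master_ram_mib FILES [MIB_PER_MILLION] (hypothetical helper):
# rough master RAM estimate in MiB, rounded up.
# MIB_PER_MILLION defaults to an ASSUMED ~300 MiB per million files.
estimate_master_ram_mib() {
  local files=$1 per_million=${2:-300}
  echo $(( (files * per_million + 999999) / 1000000 ))
}

estimate_master_ram_mib 25000000   # 25 million files
```

Under the default assumption this prints 7500, i.e. about 7.3 GiB of RAM for 25 million files, which is why the master's memory rather than its disk usually limits how many files a cluster can hold.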

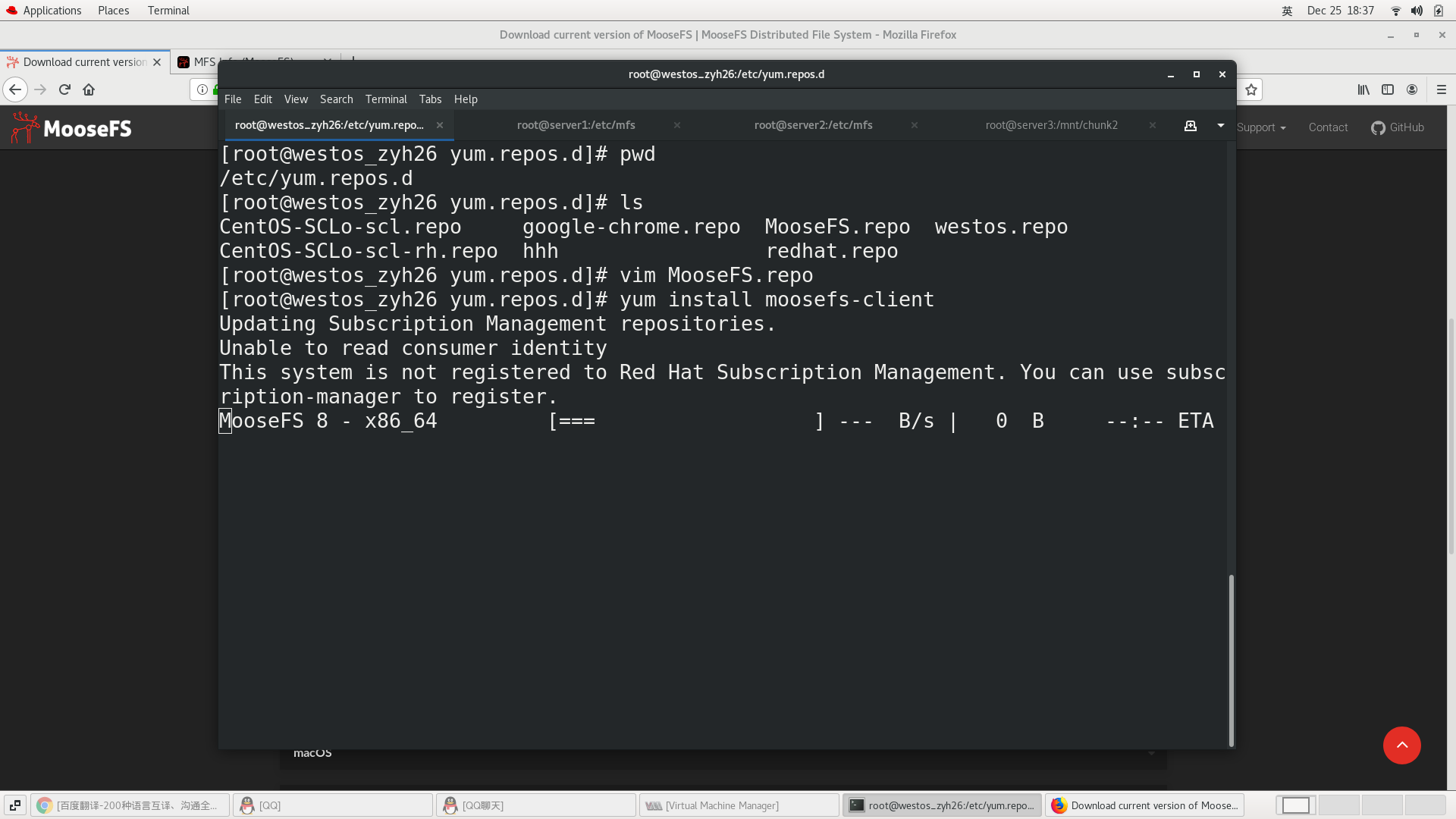

Client Deployment (real machine as client)

[root@westos ~]# cd /etc/yum.repos.d/

[root@westos yum.repos.d]# ls

aliyun.repo google-chrome.repo redhat.repo rhel8.2.repo

[root@westos yum.repos.d]# curl "http://ppa.moosefs.com/MooseFS-3-el8.repo" > /etc/yum.repos.d/MooseFS.repo

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 168 100 168 0 0 93 0 0:00:01 0:00:01 --:--:-- 93

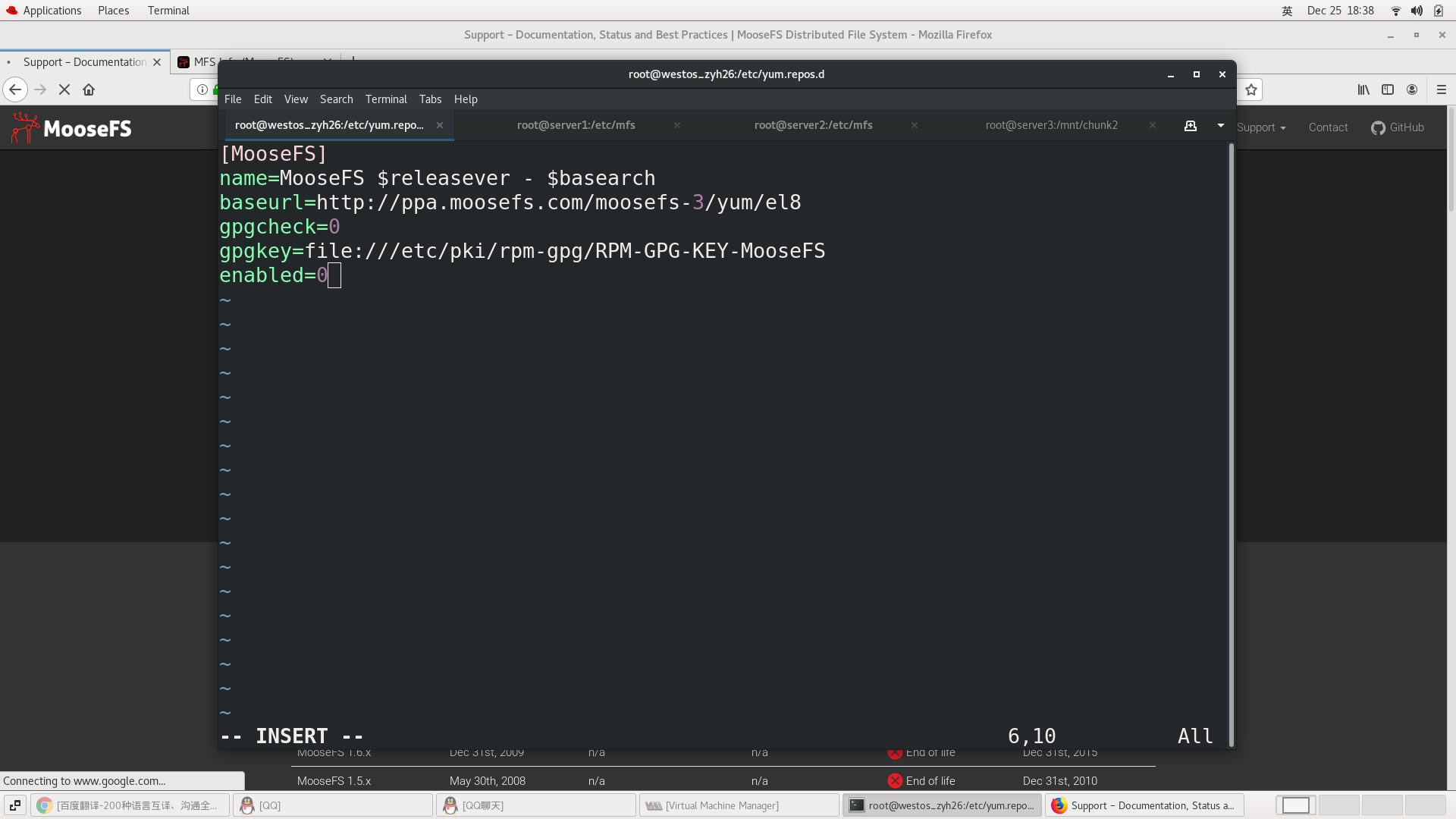

[root@westos yum.repos.d]# vim MooseFS.repo

[root@westos yum.repos.d]# cat MooseFS.repo

[MooseFS]

name=MooseFS $releasever - $basearch

baseurl=http://ppa.moosefs.com/moosefs-3/yum/el8

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-MooseFS

enabled=1

[root@westos yum.repos.d]# yum install moosefs-client

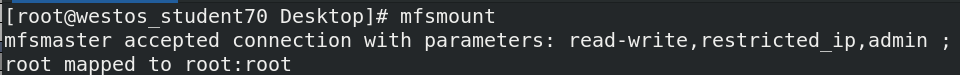

[root@westos ~]# cd /etc/mfs/
[root@westos mfs]# ls
mfsmount.cfg mfsmount.cfg.sample
[root@westos mfs]# vim mfsmount.cfg
[root@westos mfs]# grep -v ^# mfsmount.cfg
/mnt/mfs   ##The mount point; this directory must be empty
[root@westos mfs]# vim /etc/hosts   ##Add name resolution
[root@westos ~]# mkdir /mnt/mfs
[root@westos ~]# mfsmount
mfsmaster accepted connection with parameters: read-write,restricted_ip,admin ; root mapped to root:root
[root@westos ~]# ll /etc/mfs/mfsmount.cfg
-rw-r--r--. 1 root root 400 Mar 19 11:29 /etc/mfs/mfsmount.cfg
[root@westos ~]# df   ##Check whether /mnt/mfs is mounted
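Instead of eyeballing `df`, a script can check /proc/mounts. This sketch assumes that mfsmount, being a FUSE client, is listed there with a filesystem type beginning with `fuse`; `is_mounted_fuse` is a hypothetical helper. util-linux's `mountpoint -q /mnt/mfs` is a simpler check that only confirms that something is mounted at that path.

```bash
# is_mounted_fuse MOUNTPOINT [MOUNTS_FILE] (hypothetical helper): succeed if
# MOUNTPOINT appears in the mounts table with a FUSE filesystem type.
# ASSUMPTION: mfsmount entries have an fstype starting with "fuse".
is_mounted_fuse() {
  local mnt=$1 mounts=${2:-/proc/mounts}
  # /proc/mounts fields: device, mount point, fstype, options, dump, pass
  awk -v m="$mnt" '$2 == m && $3 ~ /^fuse/ { found = 1 } END { exit !found }' "$mounts"
}
```

Usage on the client: `is_mounted_fuse /mnt/mfs && echo "MFS is mounted"`.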

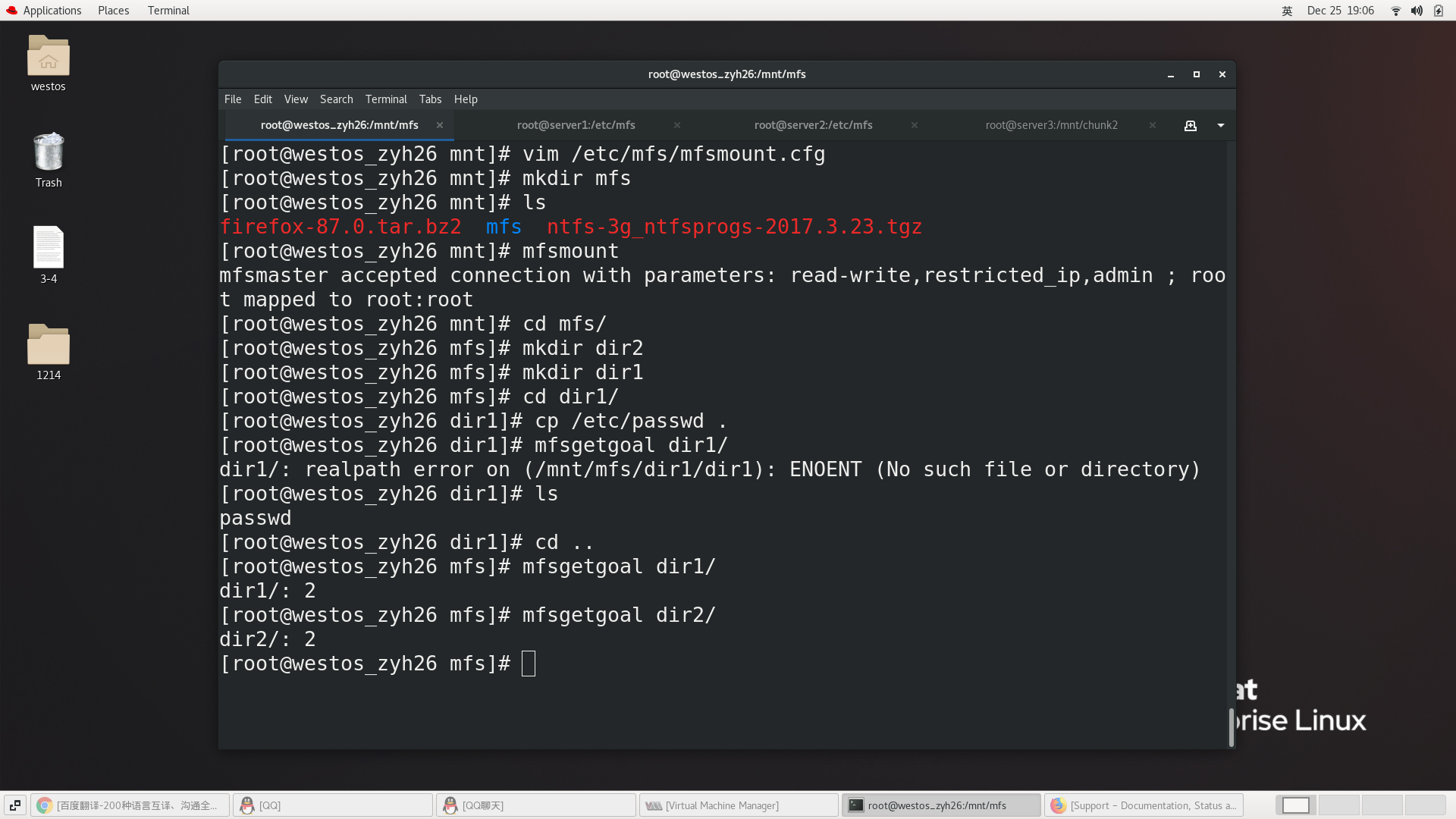

[root@westos mfs]# mkdir dir1 dir2

[root@westos mfs]# mfsgetgoal dir1

dir1: 2

[root@westos mfs]# mfsgetgoal dir2

dir2: 2

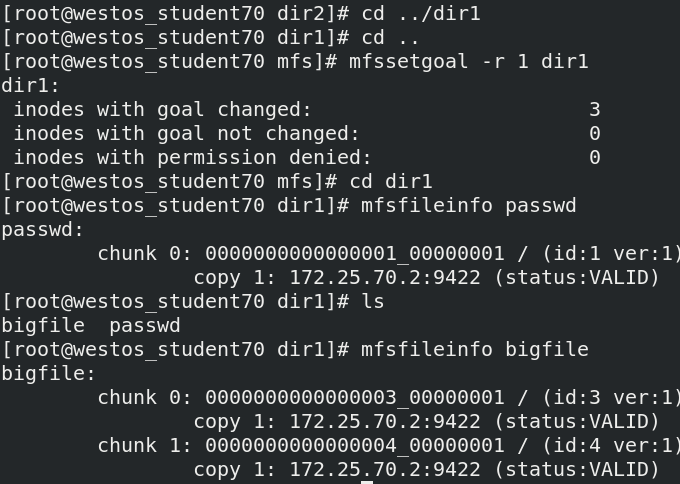

[root@westos mfs]# mfssetgoal -r 1 dir1

dir1:

inodes with goal changed: 1

inodes with goal not changed: 0

inodes with permission denied: 0

[root@westos mfs]# mfsgetgoal dir1

dir1: 1
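The goal is the number of copies MooseFS keeps of every chunk, so it multiplies the raw space a file consumes across the chunk servers. A rough sketch, assuming 64 MiB chunks and ignoring on-disk overhead; `chunk_count` and `goal_usage` are hypothetical helpers.

```bash
# Assumption: MooseFS chunks hold at most 64 MiB.
CHUNK_SIZE=$((64 * 1024 * 1024))

# chunk_count SIZE (hypothetical helper) -> chunks needed for SIZE bytes
chunk_count() {
  echo $(( ($1 + CHUNK_SIZE - 1) / CHUNK_SIZE ))
}

# goal_usage SIZE GOAL (hypothetical helper) -> chunks, chunk copies, and raw
# bytes stored across all chunk servers (on-disk overhead ignored)
goal_usage() {
  local size=$1 goal=$2 chunks
  chunks=$(chunk_count "$size")
  echo "$chunks chunks, $(( chunks * goal )) chunk copies, $(( size * goal )) bytes raw"
}
```

For a 100 MiB file, `goal_usage 104857600 2` gives `2 chunks, 4 chunk copies, 209715200 bytes raw`; after `mfssetgoal -r 1` the same file needs only 2 chunk copies.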

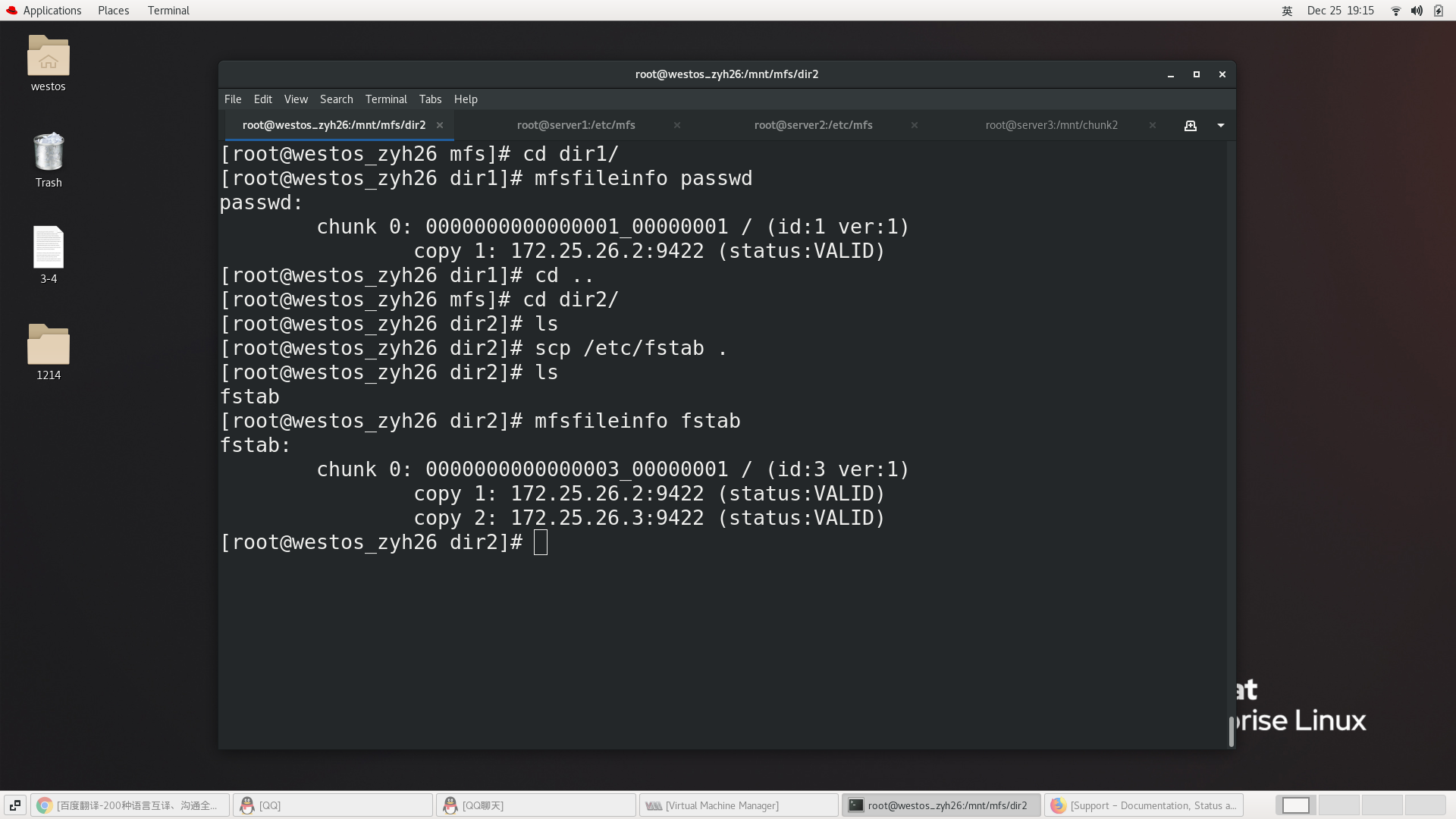

[root@westos mfs]# cd dir1/

[root@westos dir1]# cp /etc/passwd .

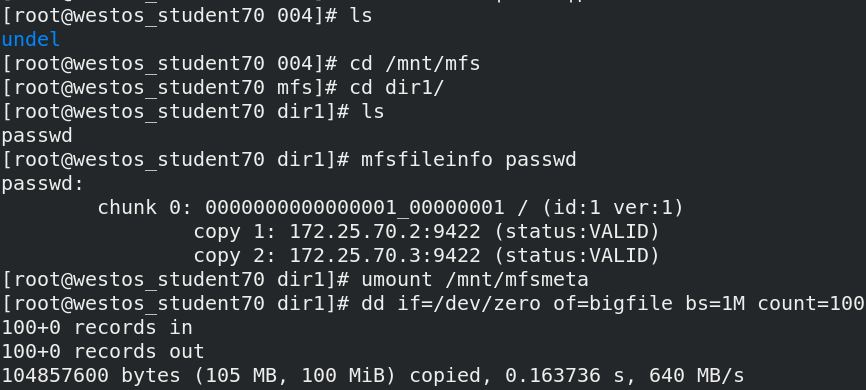

[root@westos dir1]# mfsfileinfo passwd

passwd:

chunk 0: 0000000000000001_00000001 / (id:1 ver:1)

copy 1: 172.25.26.2:9422 (status:VALID)

copy 2: 172.25.26.3:9422 (status:VALID)

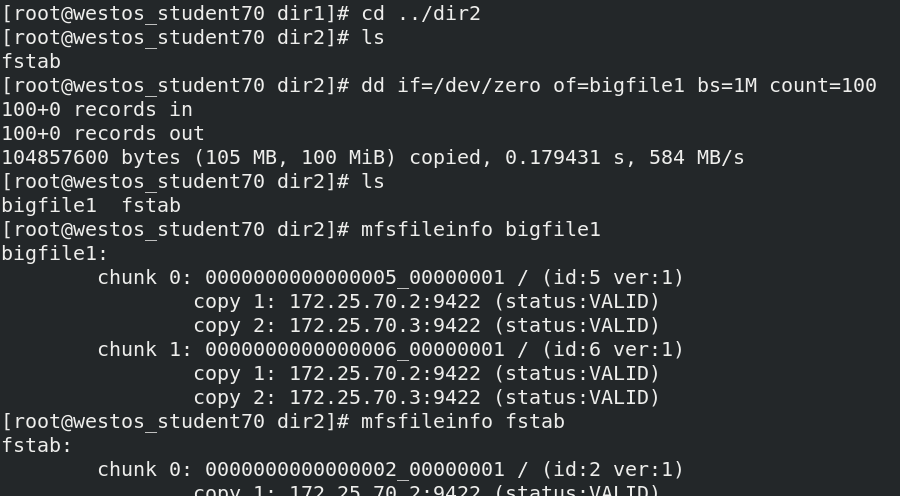

[root@westos dir1]# cd ../dir2/
[root@westos dir2]# cp /etc/fstab .
[root@westos dir2]# ls
fstab
[root@westos dir2]# mfsfileinfo fstab
fstab:
chunk 0: 0000000000000002_00000001 / (id:2 ver:1)
copy 1: 172.25.26.2:9422 (status:VALID)
copy 2: 172.25.26.3:9422 (status:VALID)
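To compare the number of valid copies with the goal without reading the output line by line, the `mfsfileinfo` text can be summarized per chunk. This is a sketch; `count_valid_copies` is a hypothetical helper that relies on the `chunk N:` and `copy N: ... (status:VALID)` lines shown above.

```bash
# count_valid_copies (hypothetical helper): read `mfsfileinfo FILE` output on
# stdin and print, for each chunk, how many copies report status:VALID.
count_valid_copies() {
  awk '
    /^[[:space:]]*chunk [0-9]+:/ {
      chunk = $2; sub(/:$/, "", chunk)     # "0:" -> "0"
      order[++n] = chunk; valid[chunk] = 0
    }
    /copy [0-9]+:.*status:VALID/ { valid[chunk]++ }
    END { for (i = 1; i <= n; i++) print "chunk " order[i] ": " valid[order[i]] " valid" }
  '
}
```

Usage: `mfsfileinfo fstab | count_valid_copies`, then compare the result with `mfsgetgoal fstab`.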

5. Experiment

Basic experiment

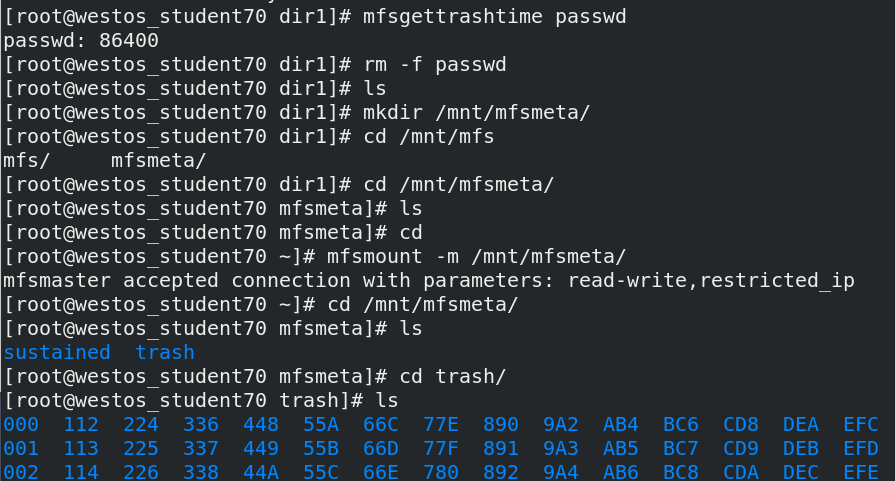

File recovery

Mount the file system with mfsmount

Simulate deletion of passwd

mfsgettrashtime fstab   ##How long (in seconds) a deleted file can still be restored
fstab: 86400

Here the trash time is 86400 seconds, i.e. 24 hours.
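Trash times are reported in seconds, which is hard to read for larger values. A small sketch that converts them; `format_seconds` and `trashtime_report` are hypothetical helpers, and the parser only handles the single-file `name: seconds` form shown above (file names without spaces). The value itself is changed with `mfssettrashtime SECONDS FILE`.

```bash
# format_seconds SECONDS (hypothetical helper) -> "Xd Yh Zm Ws"
format_seconds() {
  local s=$1
  printf '%dd %dh %dm %ds\n' $(( s / 86400 )) $(( s % 86400 / 3600 )) \
    $(( s % 3600 / 60 )) $(( s % 60 ))
}

# trashtime_report (hypothetical helper): read `mfsgettrashtime FILE` output
# ("name: seconds") on stdin and print it in days/hours/minutes/seconds.
trashtime_report() {
  local name secs
  while read -r name secs; do
    echo "${name%:} $(format_seconds "$secs")"
  done
}
```

Usage: `mfsgettrashtime fstab | trashtime_report` prints `fstab 1d 0h 0m 0s` for the output shown above.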

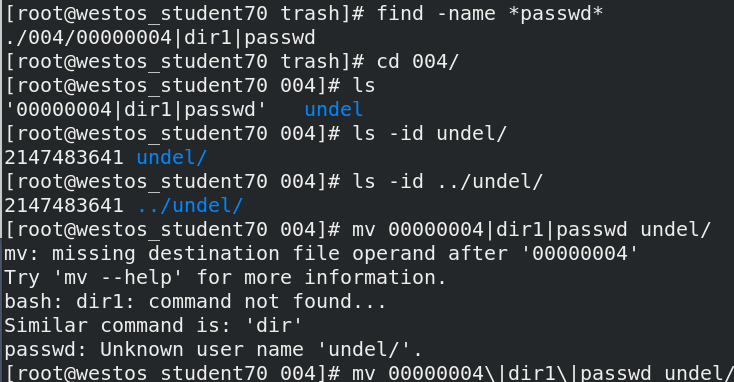

Find the deleted file in the trash (recycle bin) and recover it.

Space allocation for copies

Each chunk holds at most 64 MiB of data.

mfssetgoal -r 1 dir1

The number of copies (goal) is changed to 1.

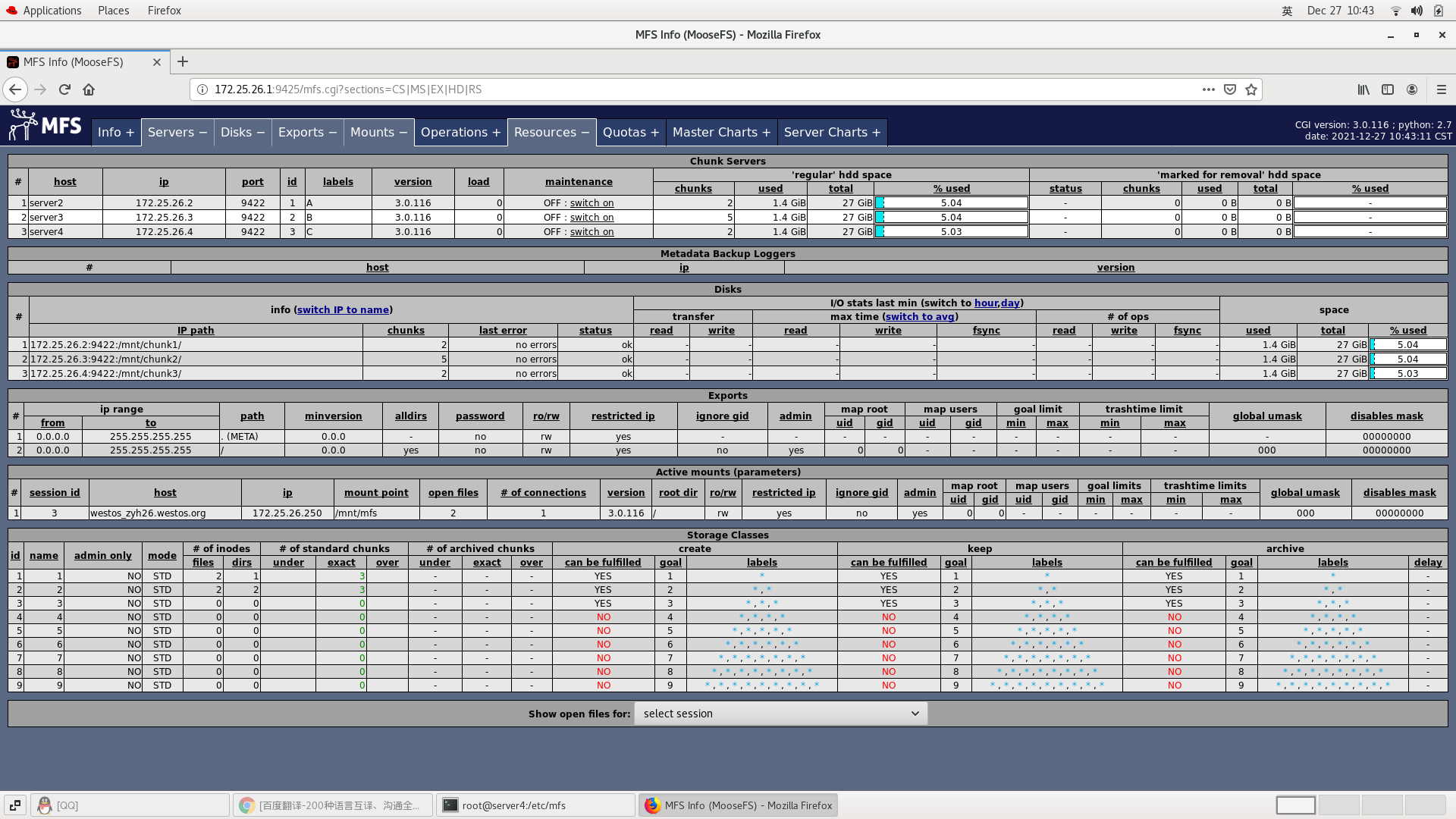

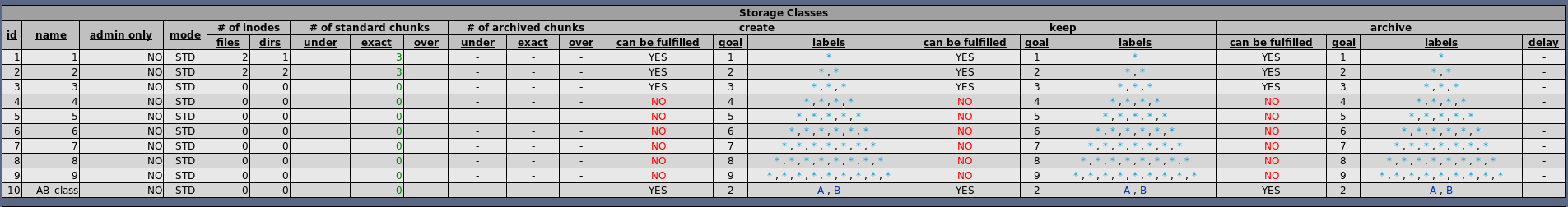

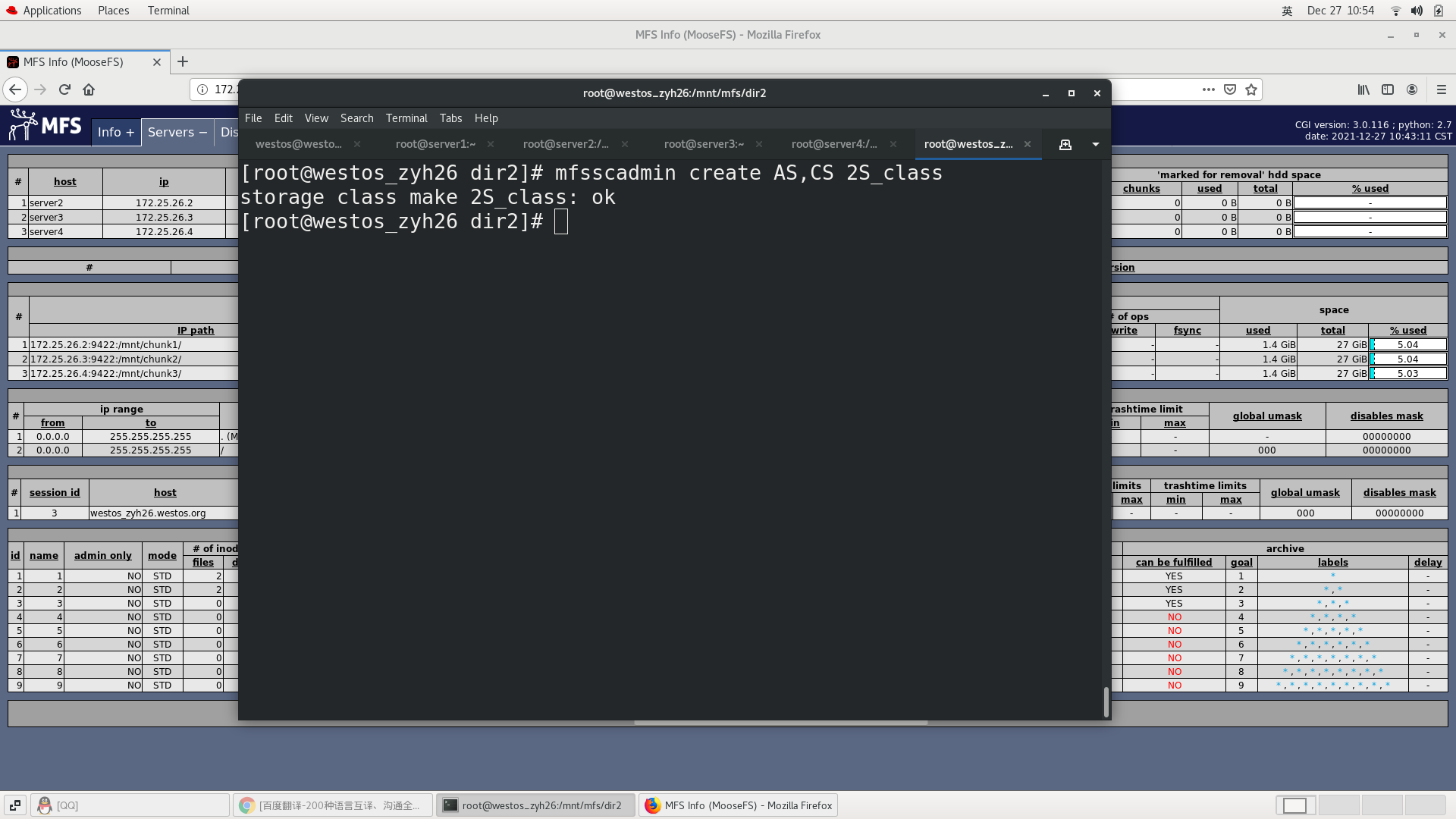

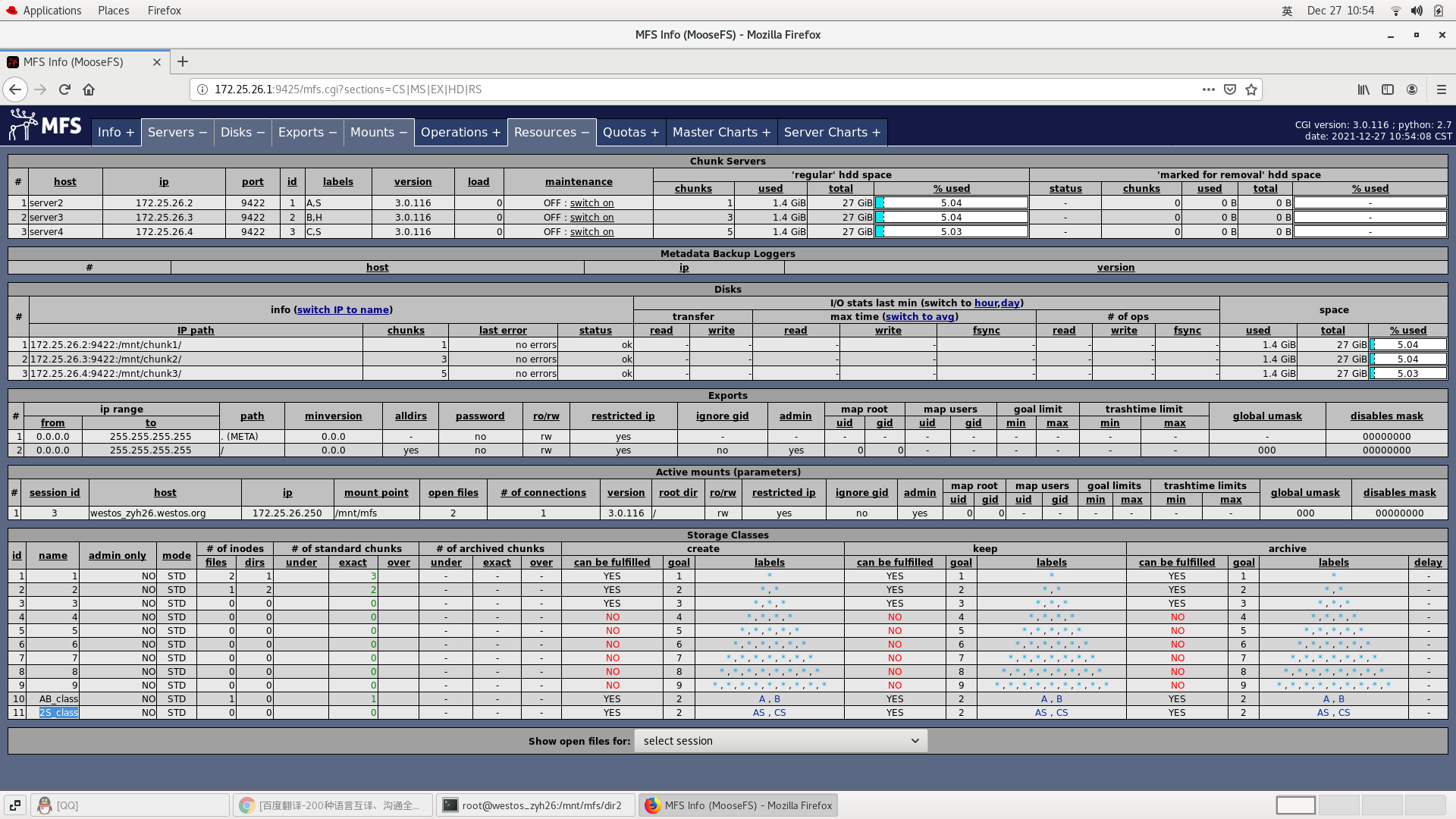

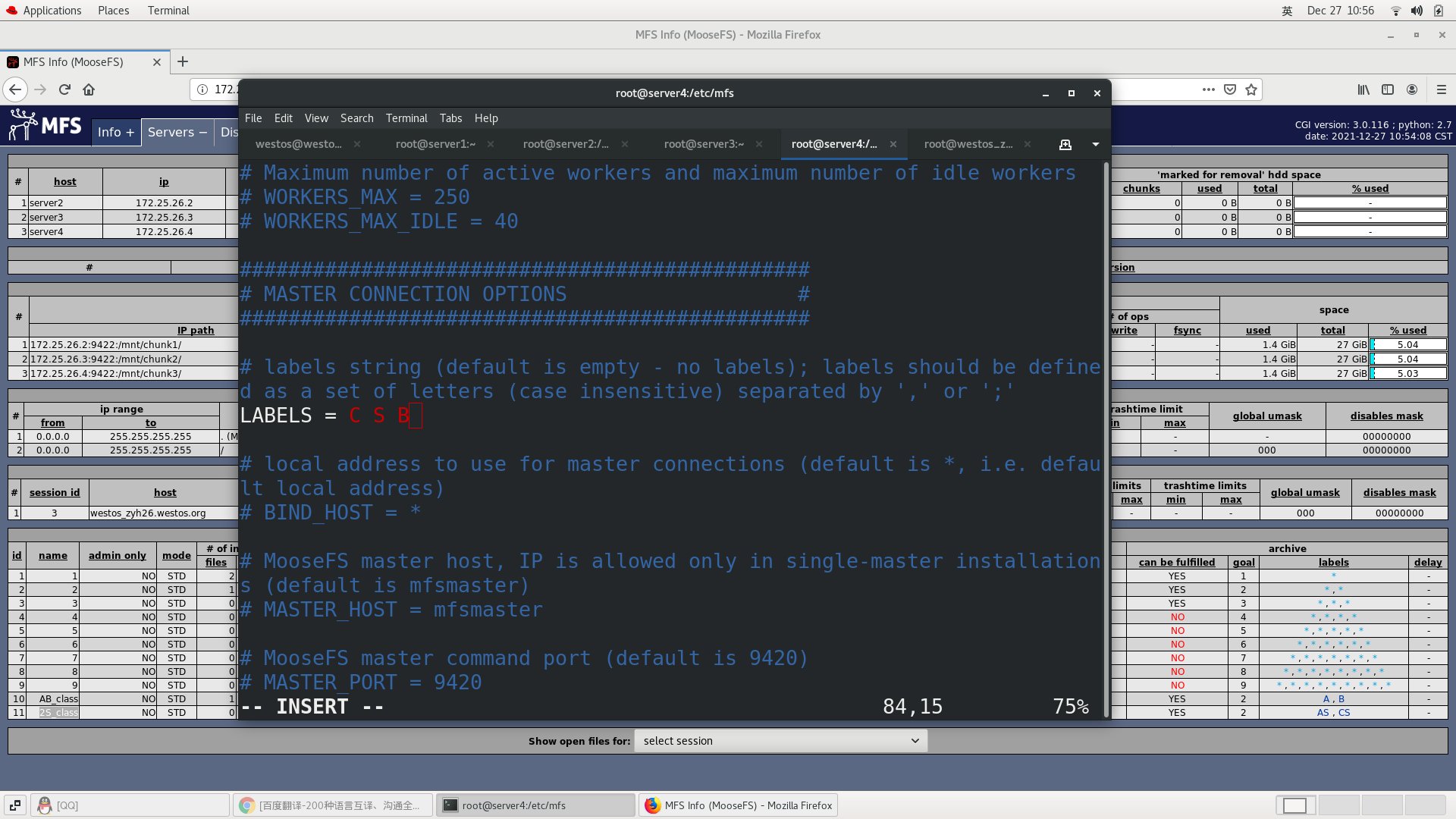

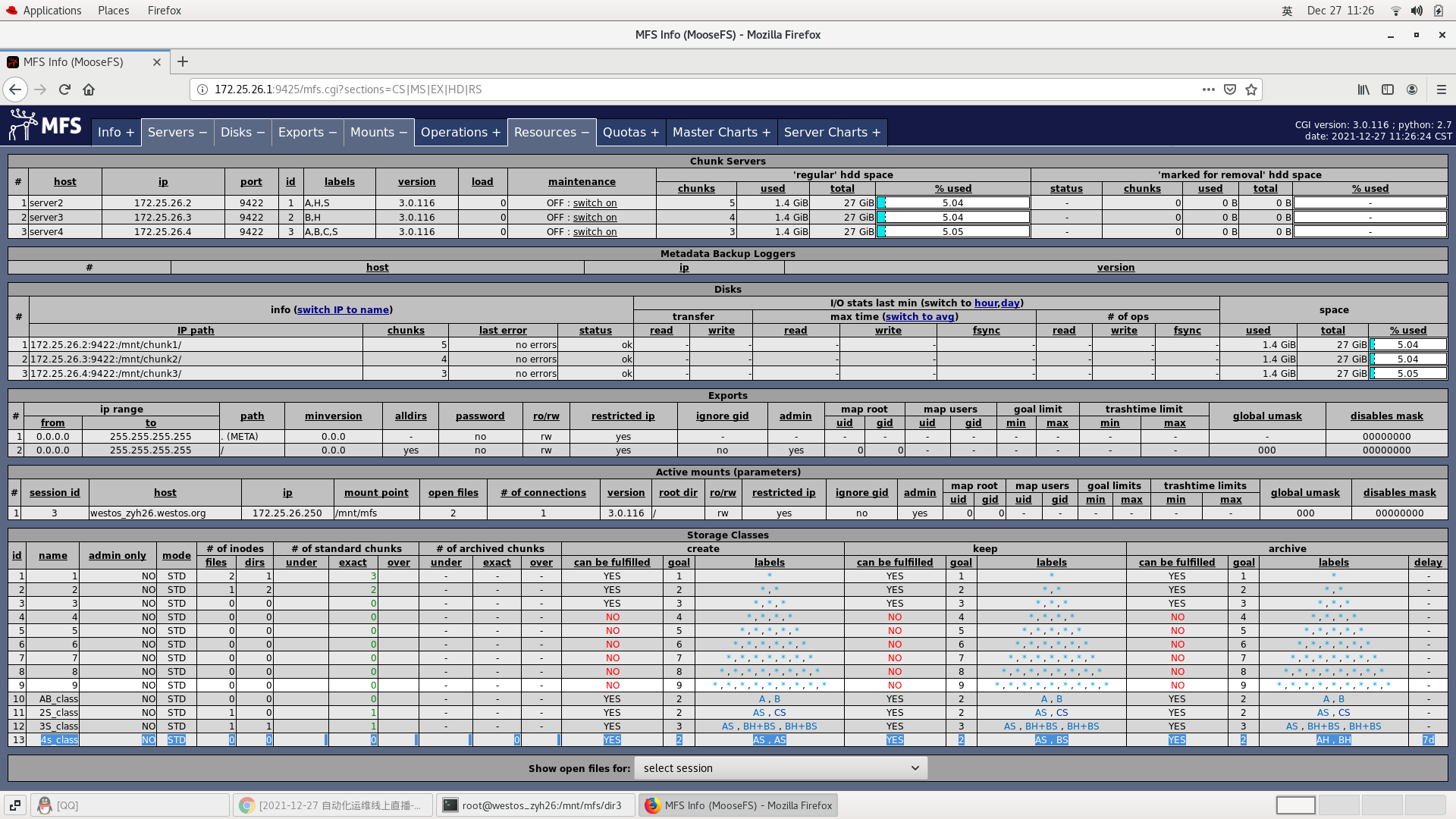

Using storage class labels to control where data is stored

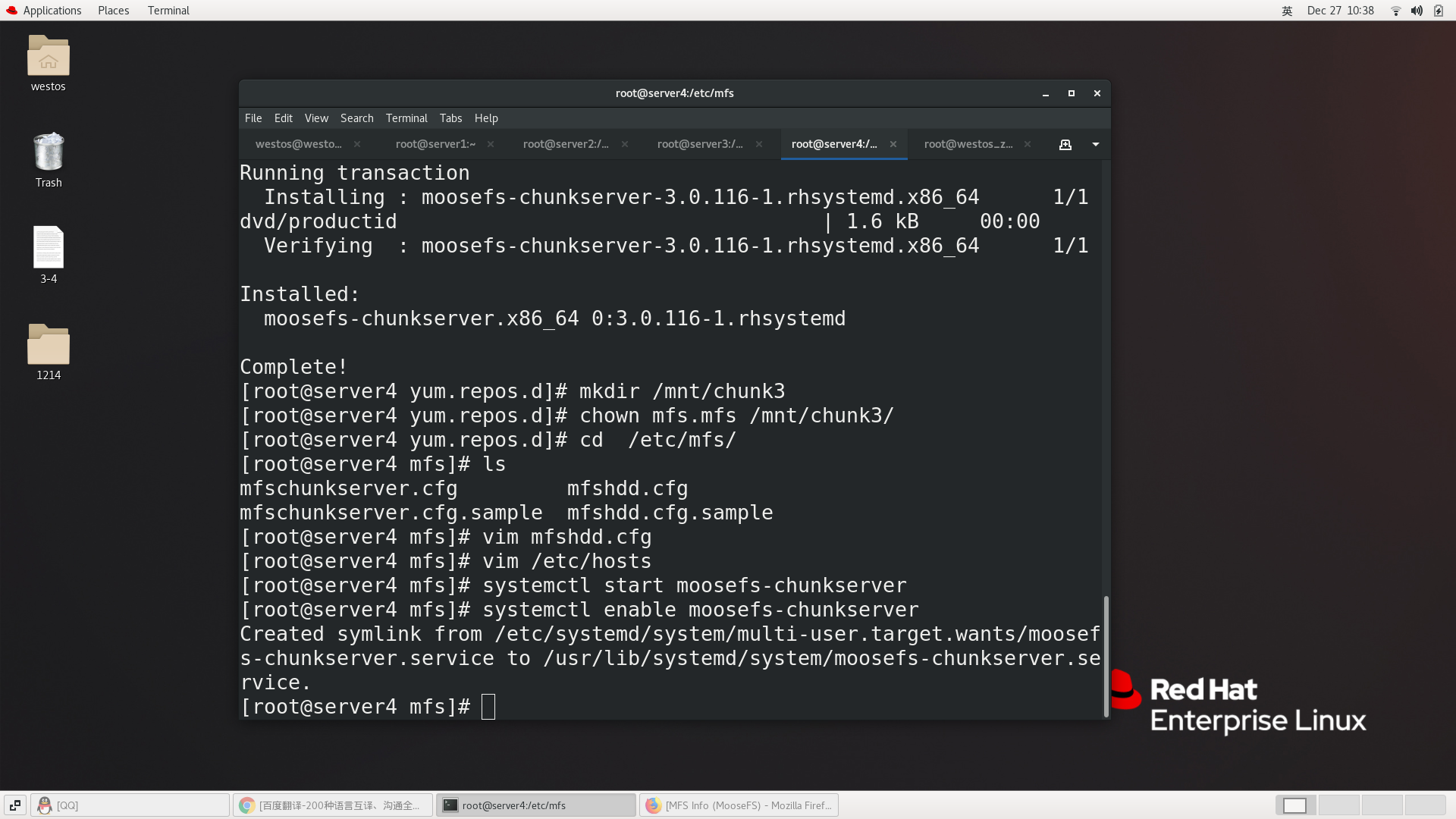

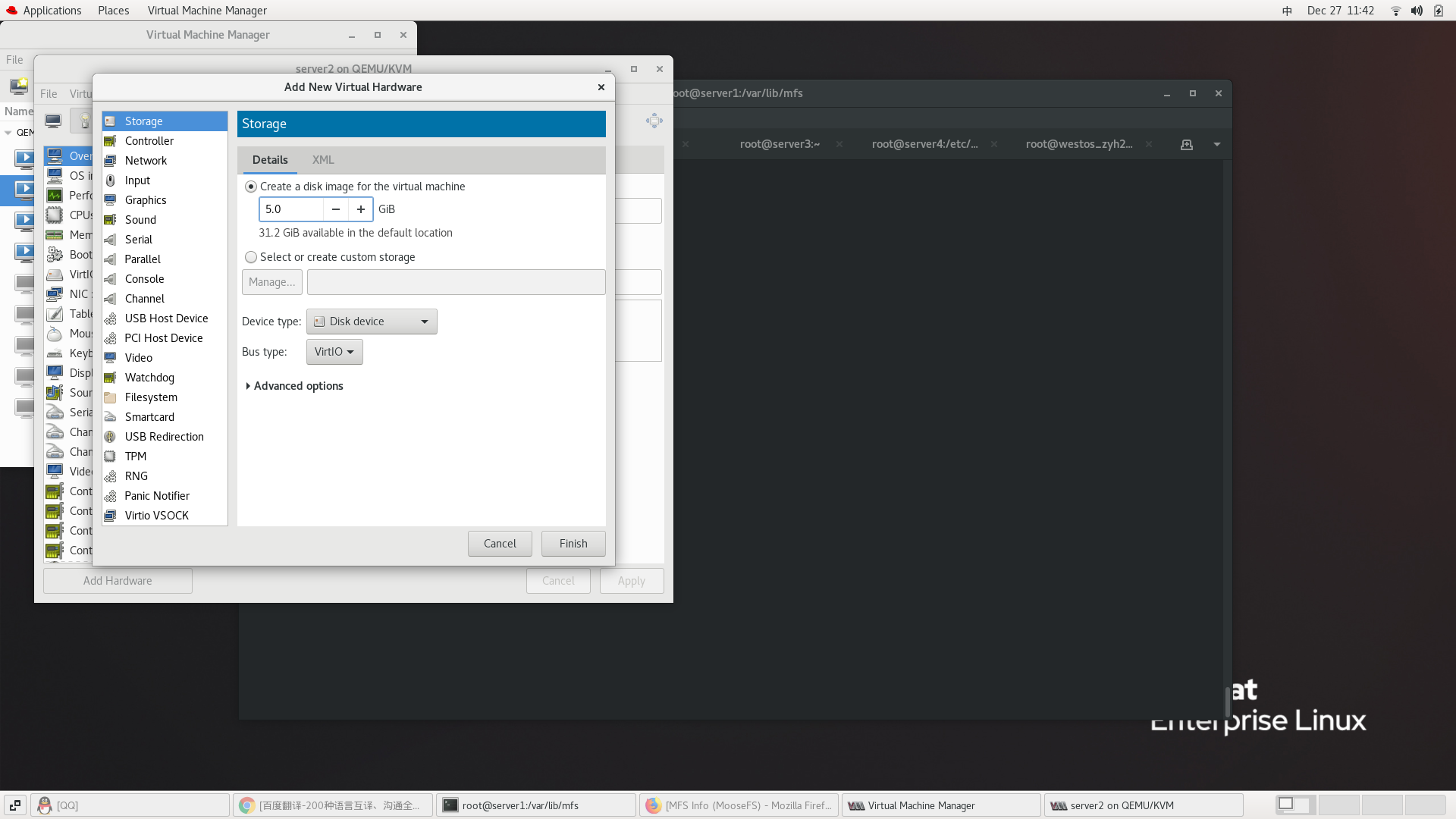

Create a chunk3

Status of labels

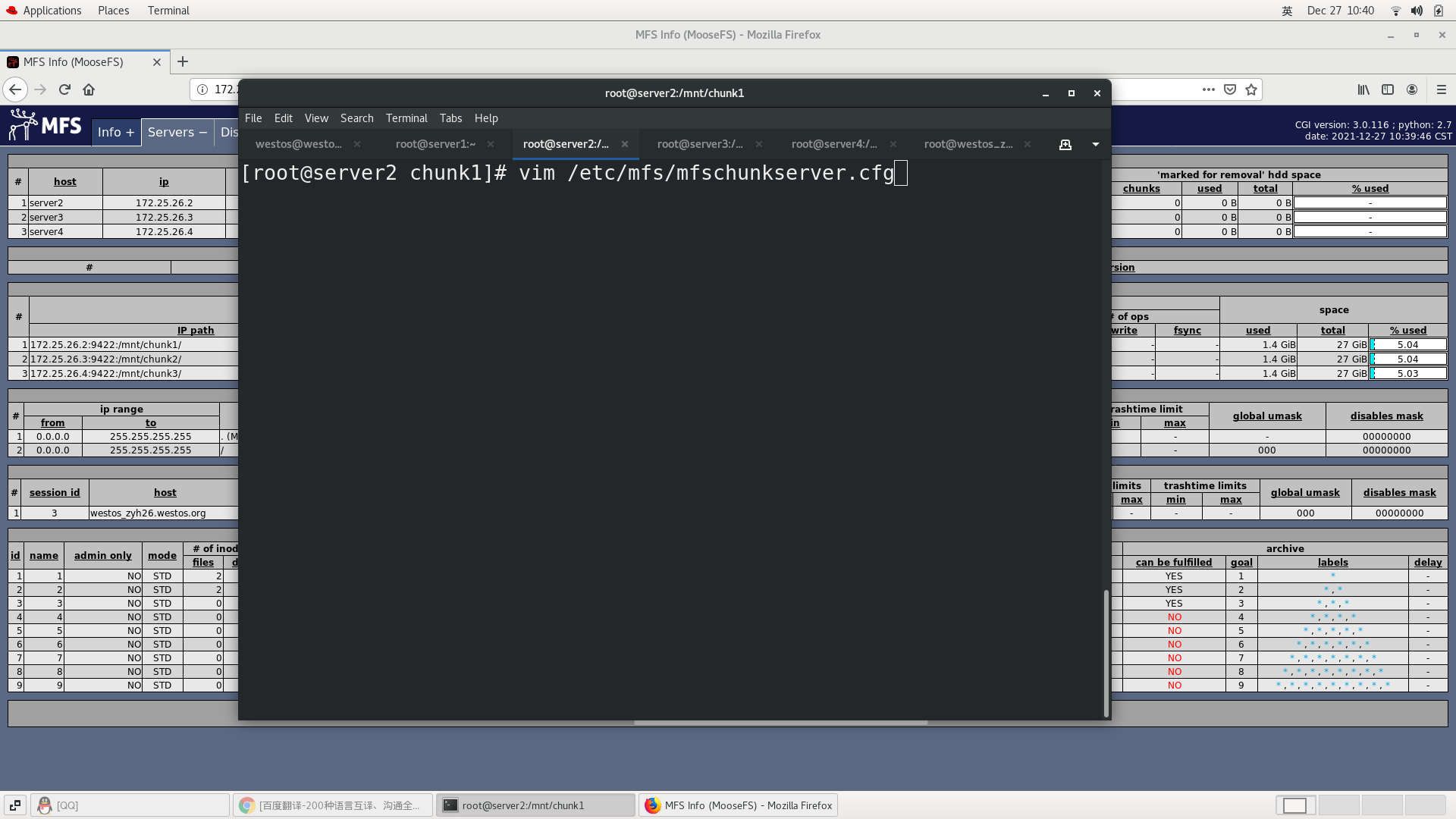

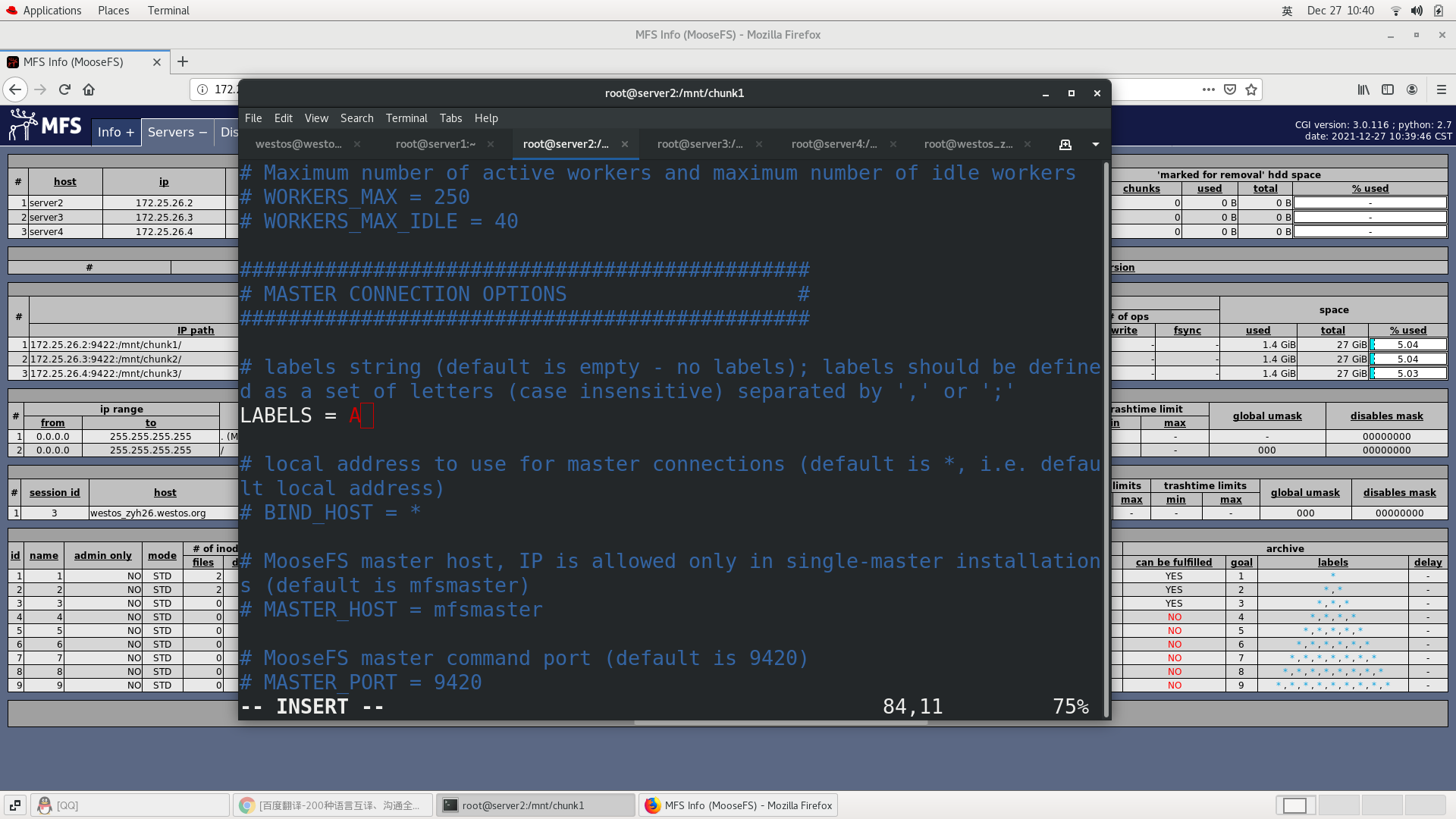

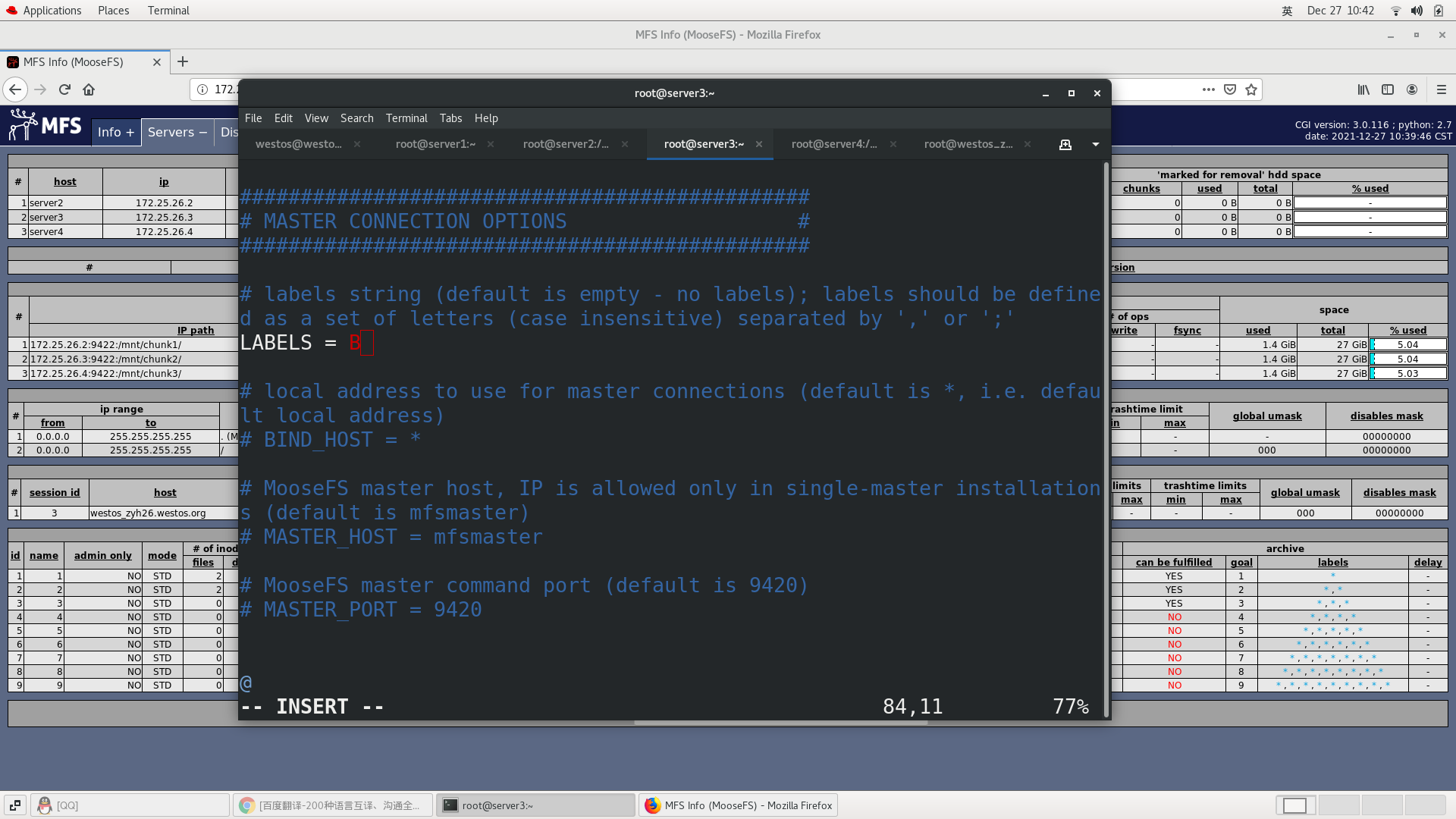

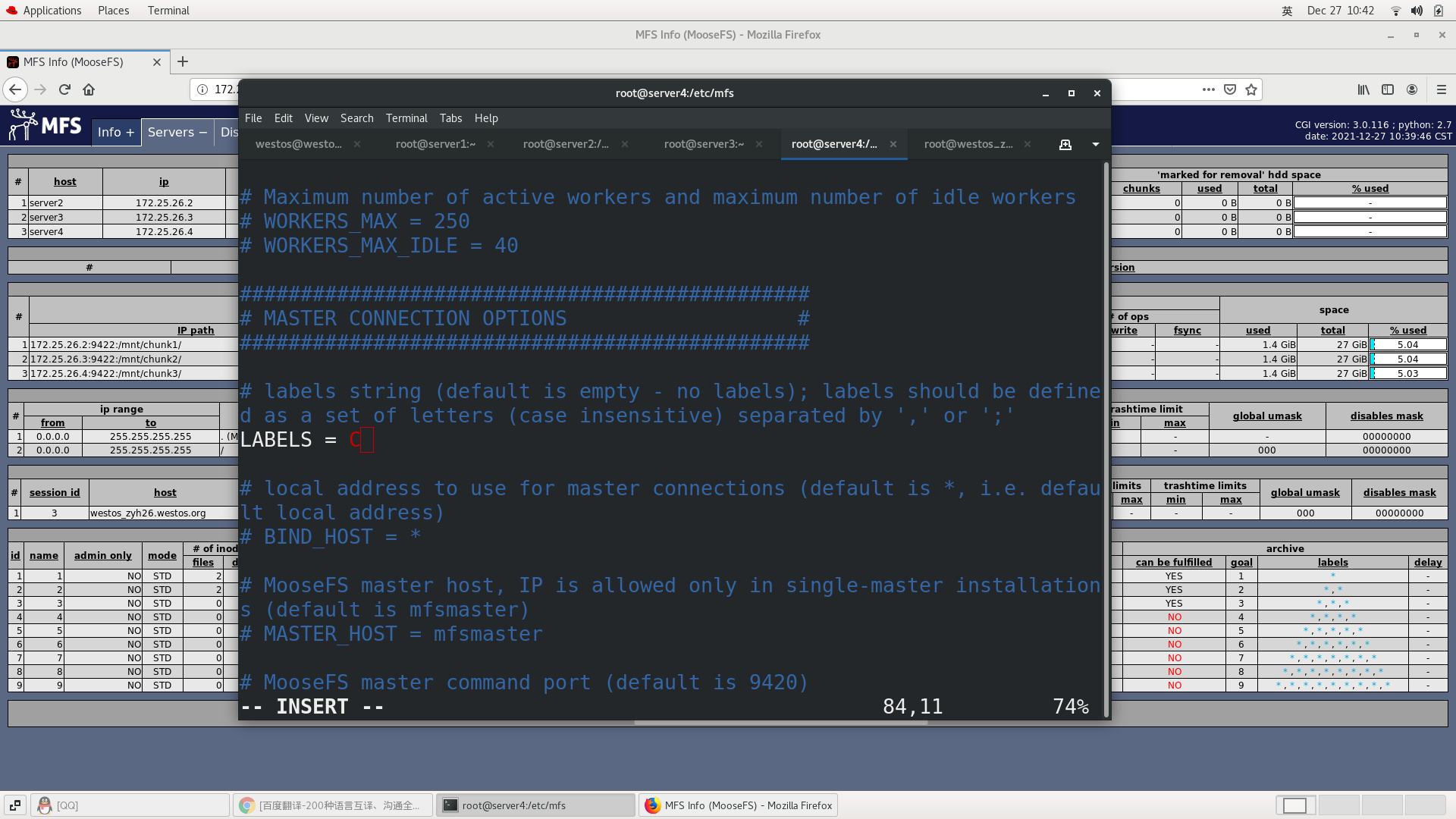

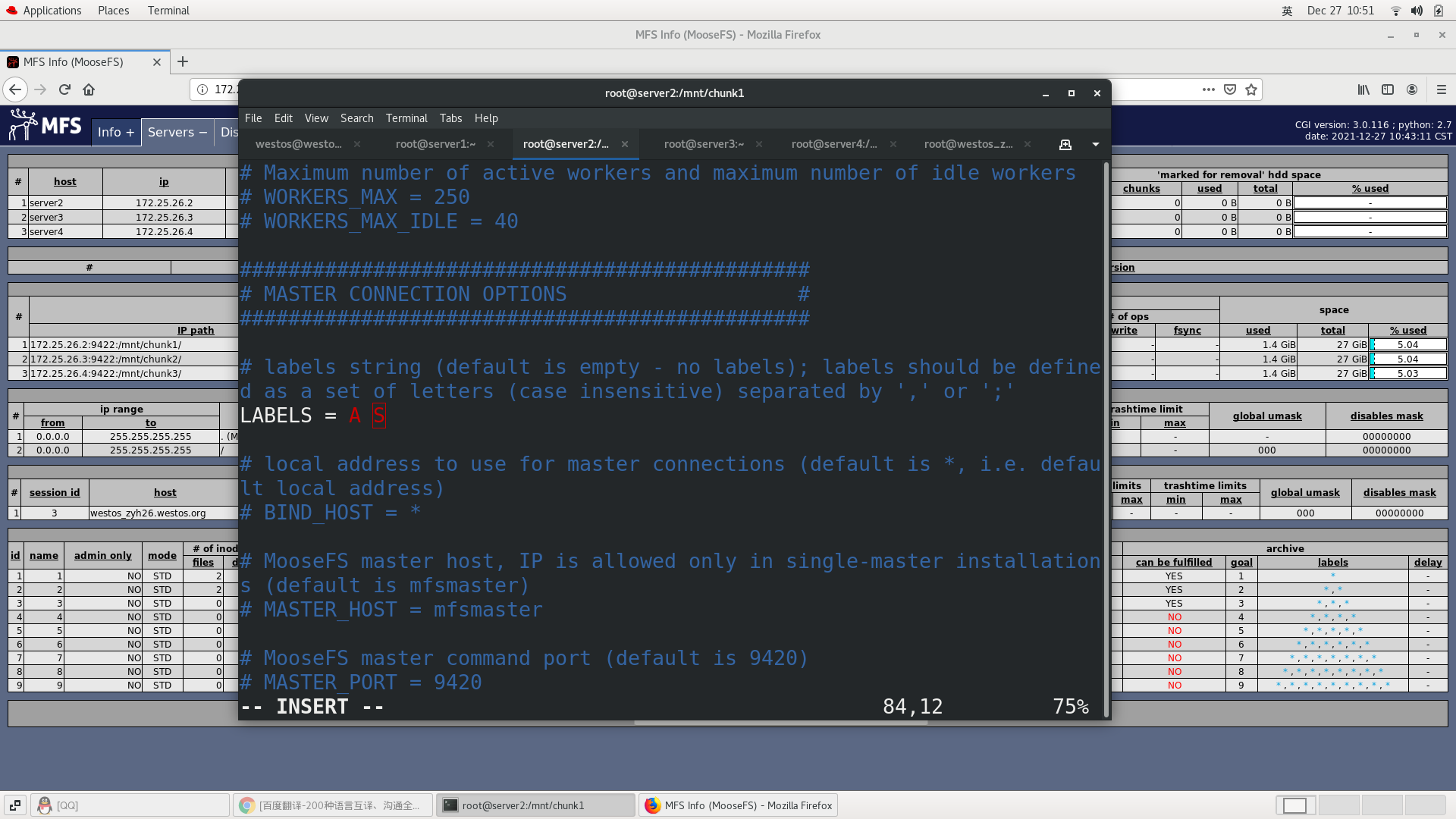

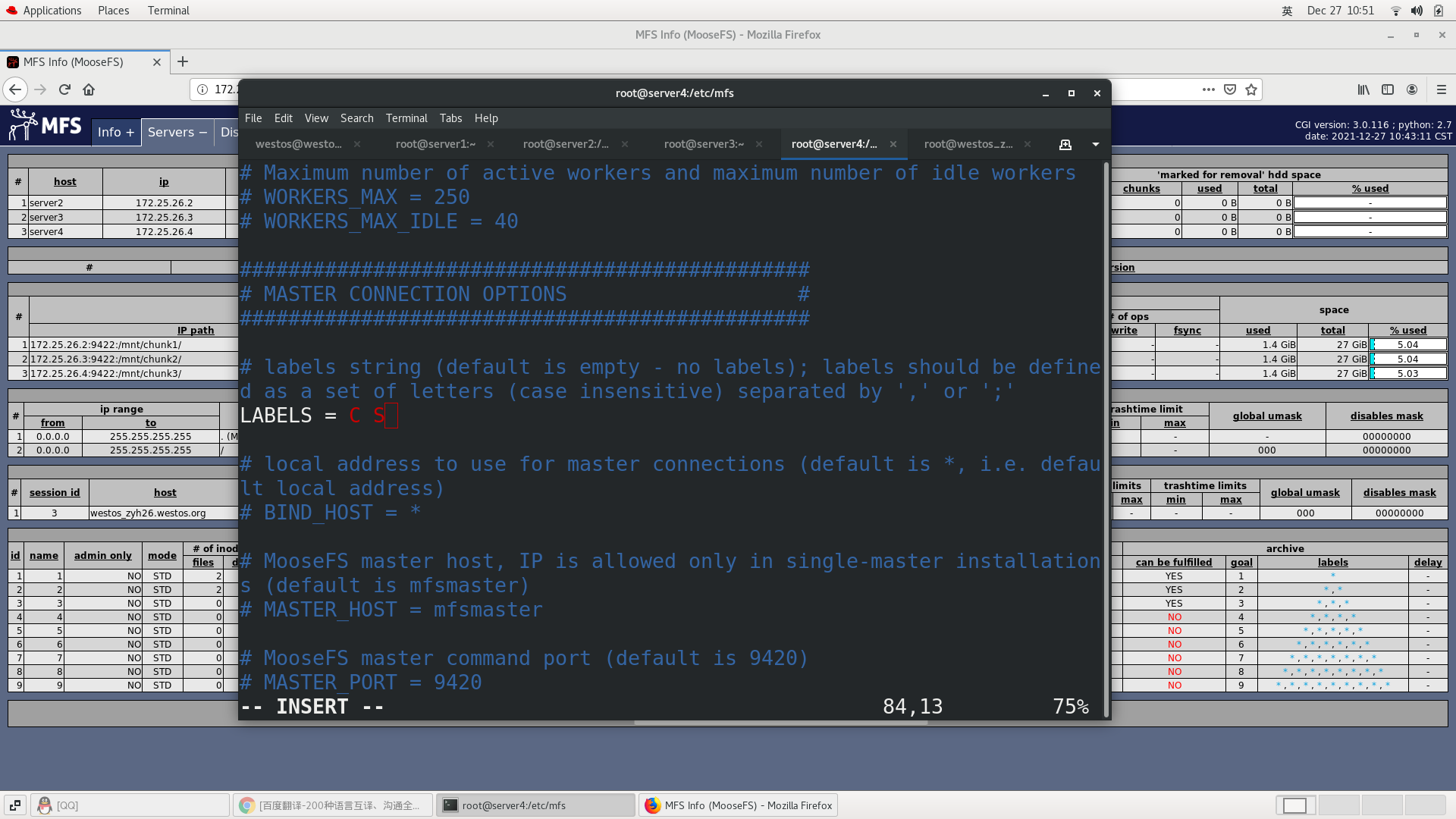

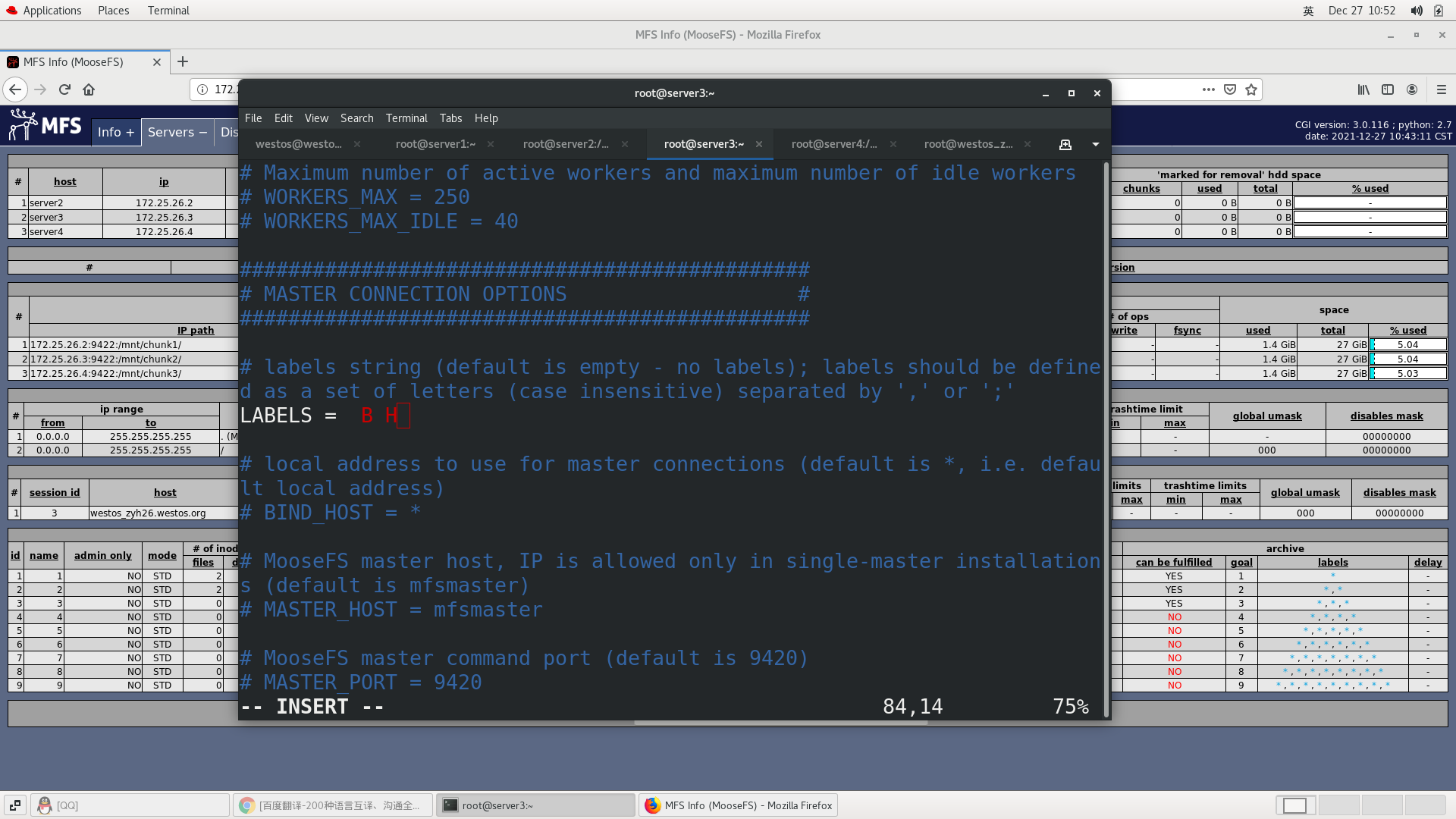

Set label A on server2:
[root@server2 mfs]# pwd
/etc/mfs
[root@server2 mfs]# ls
mfschunkserver.cfg mfschunkserver.cfg.sample mfshdd.cfg mfshdd.cfg.sample
[root@server2 mfs]# vim mfschunkserver.cfg   ##Set label A (LABELS = A)
[root@server2 mfs]# systemctl reload moosefs-chunkserver.service
Set label B on server3:
[root@server3 ~]# cd /etc/mfs/
[root@server3 mfs]# ls
mfschunkserver.cfg mfschunkserver.cfg.sample mfshdd.cfg mfshdd.cfg.sample
[root@server3 mfs]# vim mfschunkserver.cfg   ##Set label B (LABELS = B)
[root@server3 mfs]# systemctl reload moosefs-chunkserver.service
Set label A on server4:
[root@server4 ~]# cd /etc/mfs/
[root@server4 mfs]# ls
mfschunkserver.cfg mfschunkserver.cfg.sample mfshdd.cfg mfshdd.cfg.sample
[root@server4 mfs]# vim mfschunkserver.cfg   ##Set label A (LABELS = A)
[root@server4 mfs]# systemctl reload moosefs-chunkserver.service
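When several chunk servers need labels, editing each mfschunkserver.cfg in vim gets repetitive. A sketch of a scripted edit, assuming the label is set by a `LABELS = ...` line as in MooseFS 3 chunk server configs and that GNU sed is available (RHEL); `set_chunk_labels` is a hypothetical helper and expects plain label letters.

```bash
# set_chunk_labels CFG LABELS (hypothetical helper): set "LABELS = <LABELS>"
# in a chunk server config file, replacing an existing (possibly
# commented-out) LABELS line, or appending one if there is none.
# Assumes GNU sed (-i) and labels made of plain letters.
set_chunk_labels() {
  local cfg=$1 labels=$2
  local re='^[[:space:]]*#?[[:space:]]*LABELS[[:space:]]*='
  if grep -Eq "$re" "$cfg"; then
    sed -i -E "s/${re}.*/LABELS = ${labels}/" "$cfg"
  else
    printf 'LABELS = %s\n' "$labels" >> "$cfg"
  fi
}
```

Usage on server3: `set_chunk_labels /etc/mfs/mfschunkserver.cfg B && systemctl reload moosefs-chunkserver.service`.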

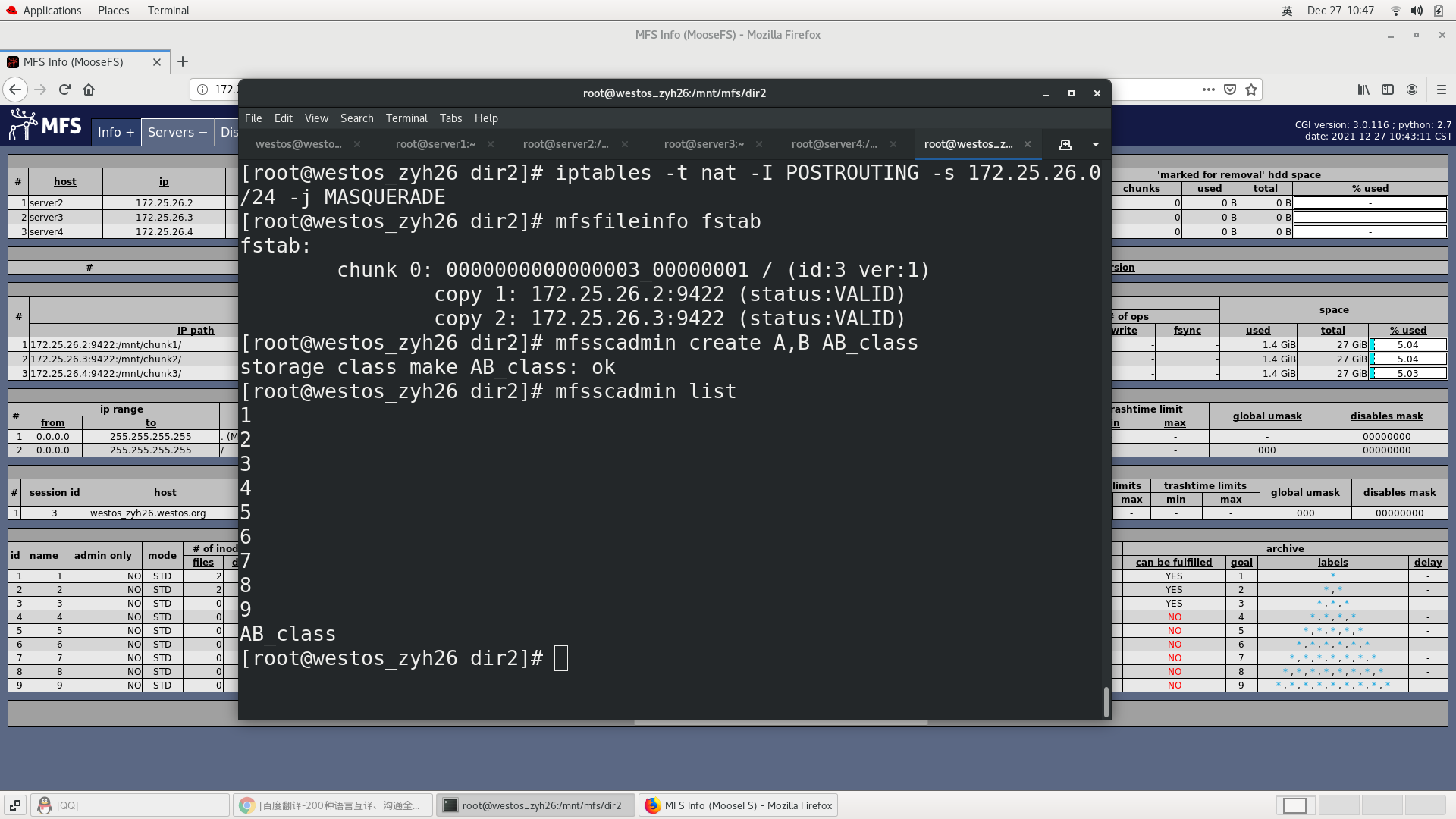

[root@westos ~]# cd /mnt/mfs
[root@westos mfs]# mfsscadmin create A,B ab_class   ##Create a storage class: one copy on a server labeled A, one on a server labeled B
[root@westos mfs]# cd dir2/
[root@westos dir2]# ls
bigfile fstab
[root@westos dir2]# mfssetsclass ab_class fstab   ##Assign the storage class to fstab
fstab: storage class: 'ab_class'
[root@westos dir2]# mfsfileinfo fstab
fstab:
chunk 0: 0000000000000002_00000001 / (id:2 ver:1)
copy 1: 172.25.70.2:9422 (status:VALID)
copy 2: 172.25.70.3:9422 (status:VALID)

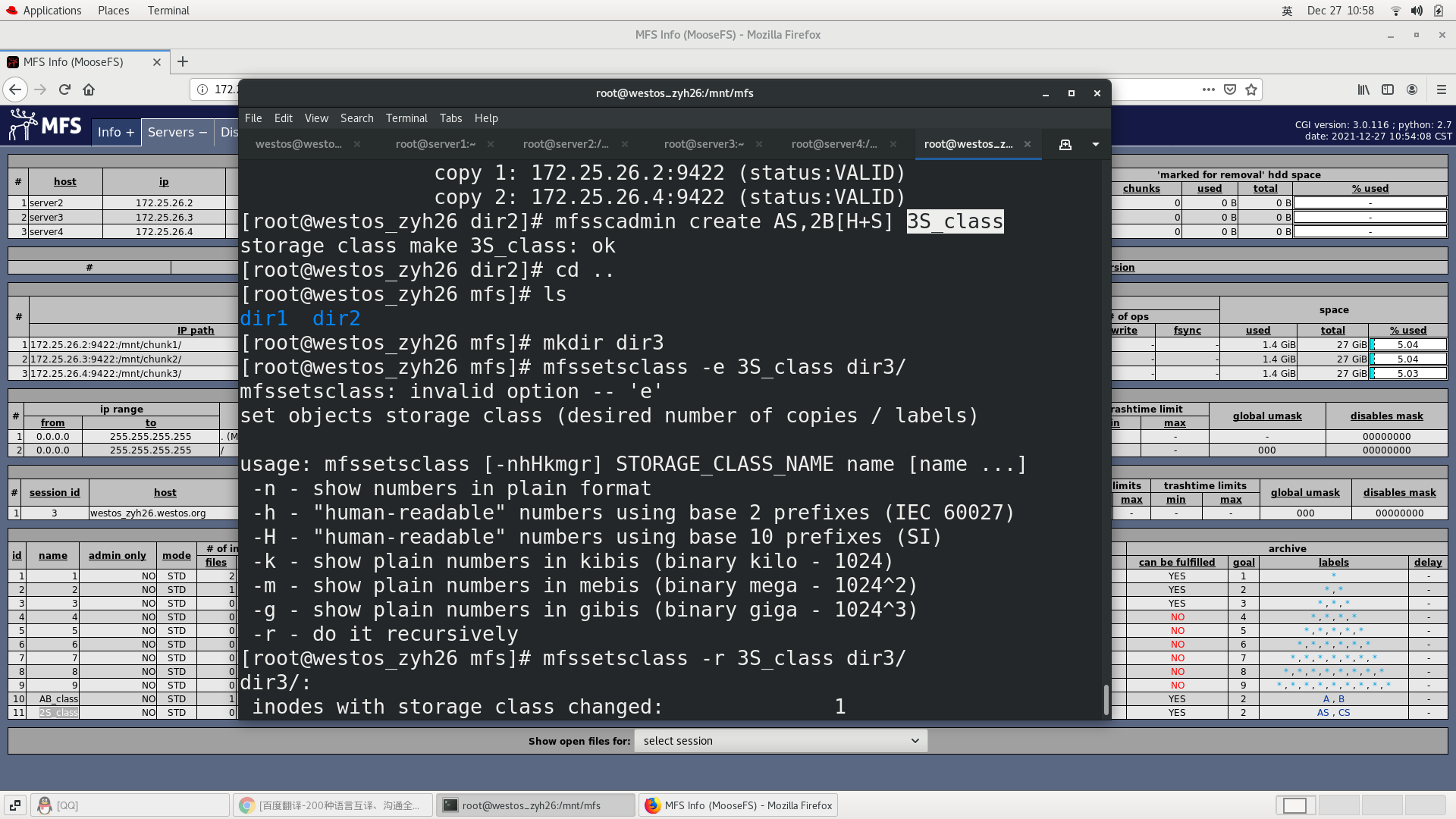

Experiment 2

Experiment 3

Experiment 4

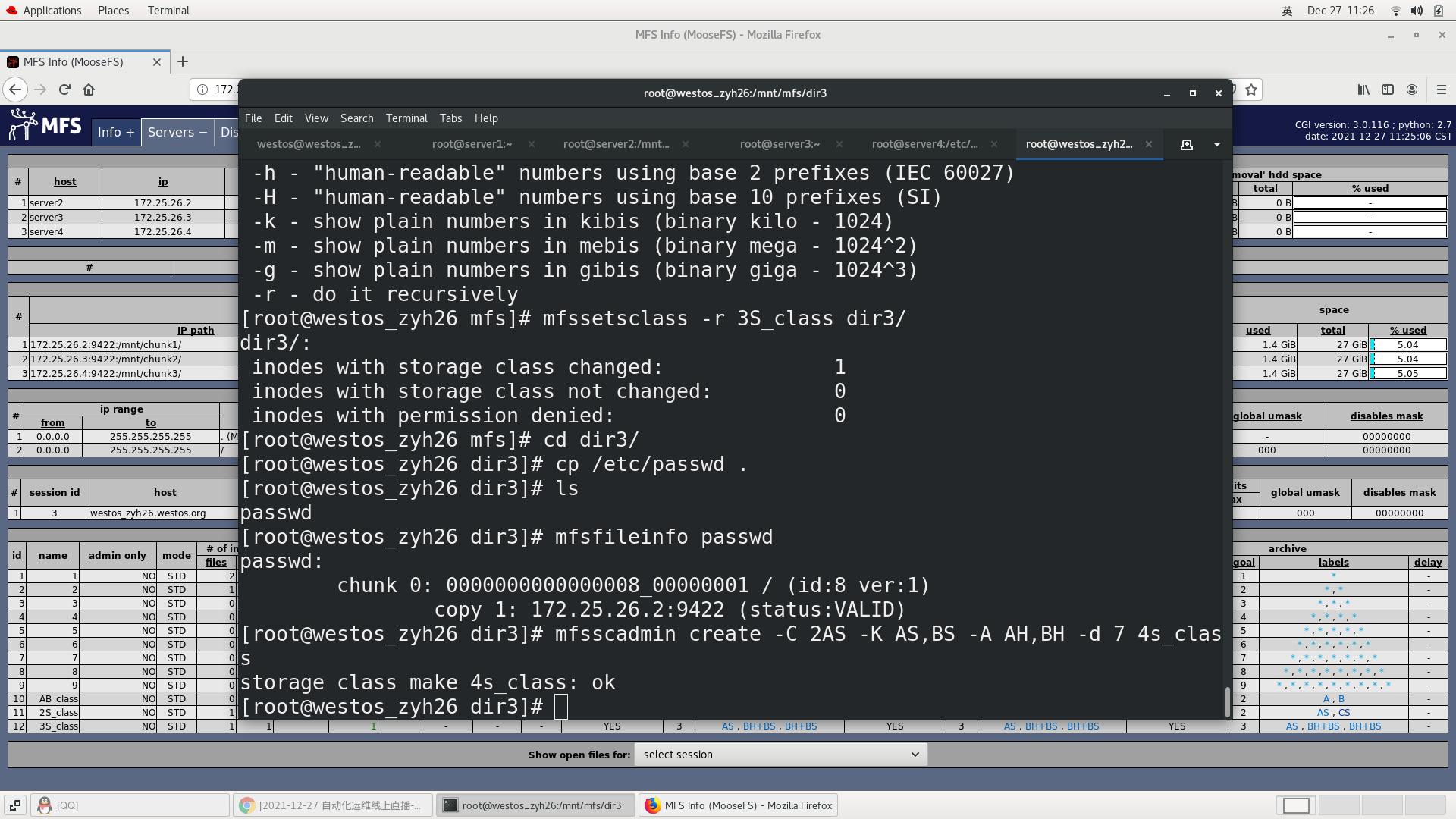

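mfsscadmin create -C 2AS -K AS,BS -A AH,BH -d 7 4S_class

Here -C sets the labels used when chunks are created, -K the labels used to keep them afterwards, -A the labels used once they are archived, and -d the number of days after which chunks switch to archive mode.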
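As I read the MooseFS storage-class syntax, each letter is a chunk server label, adjacent letters such as `AS` mean a server carrying both labels, commas separate the copies, and a leading number repeats an expression. The sketch below expands such an expression into one label set per copy to make that reading concrete. `expand_labels` is a hypothetical helper; it does not interpret wildcards or OR/AND operators.

```bash
# expand_labels EXPR (hypothetical helper): expand a simplified label
# expression into one label set per copy, space separated.
# Handles only an optional leading copy count and comma-separated parts.
expand_labels() {
  local expr=$1 part count labels i
  local re='^([0-9]+)(.*)$'
  local -a parts out=()
  IFS=',' read -r -a parts <<<"$expr"
  for part in "${parts[@]}"; do
    if [[ $part =~ $re ]]; then
      count=${BASH_REMATCH[1]}
      labels=${BASH_REMATCH[2]}
    else
      count=1
      labels=$part
    fi
    for (( i = 0; i < count; i++ )); do
      out+=("$labels")
    done
  done
  echo "${out[*]}"
}
```

Applied to the class above: `expand_labels 2AS` gives `AS AS` (two copies on servers labeled A and S at creation), `expand_labels AS,BS` gives `AS BS` for keep mode, and `expand_labels AH,BH` gives `AH BH` for archive mode.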

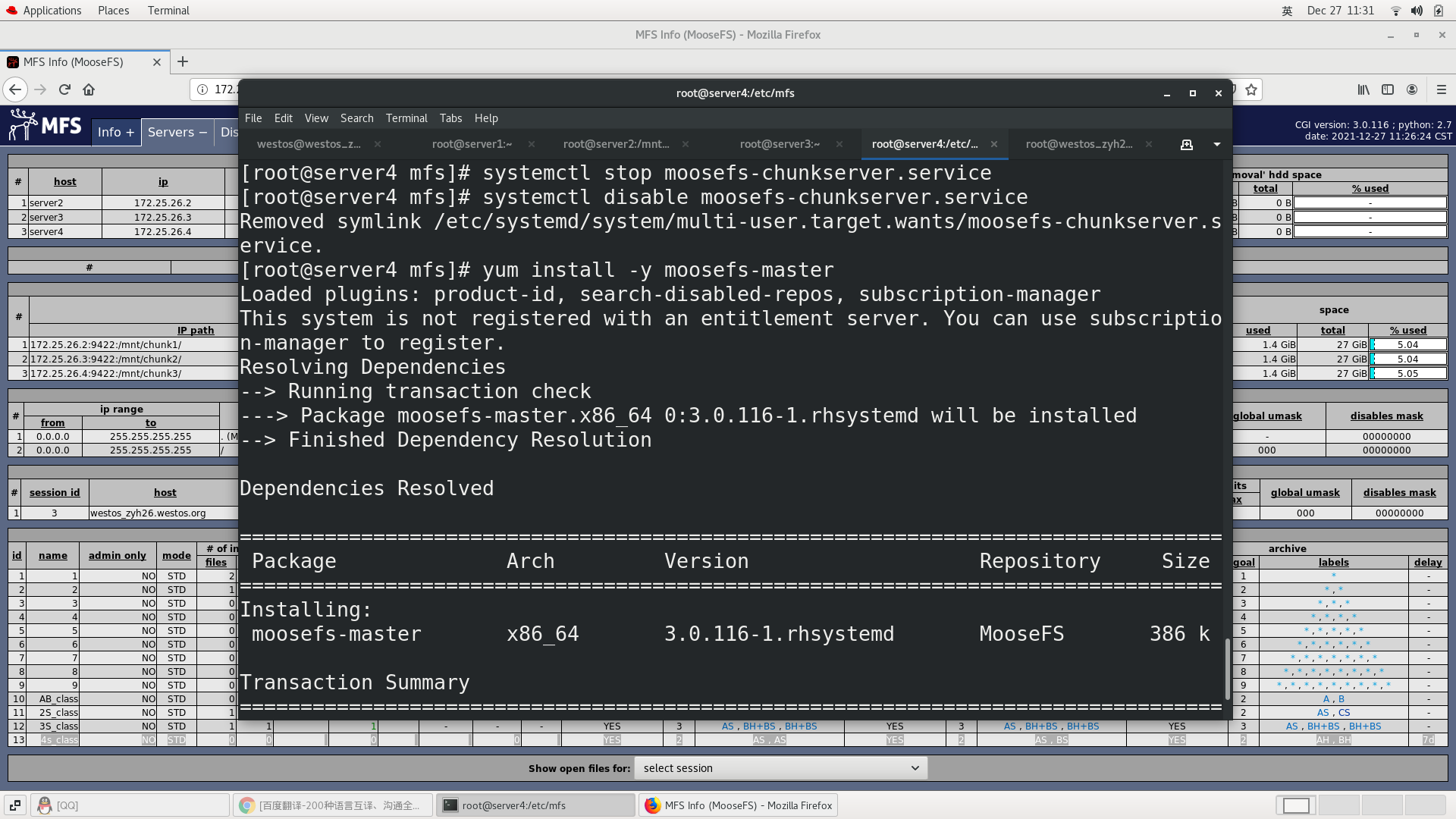

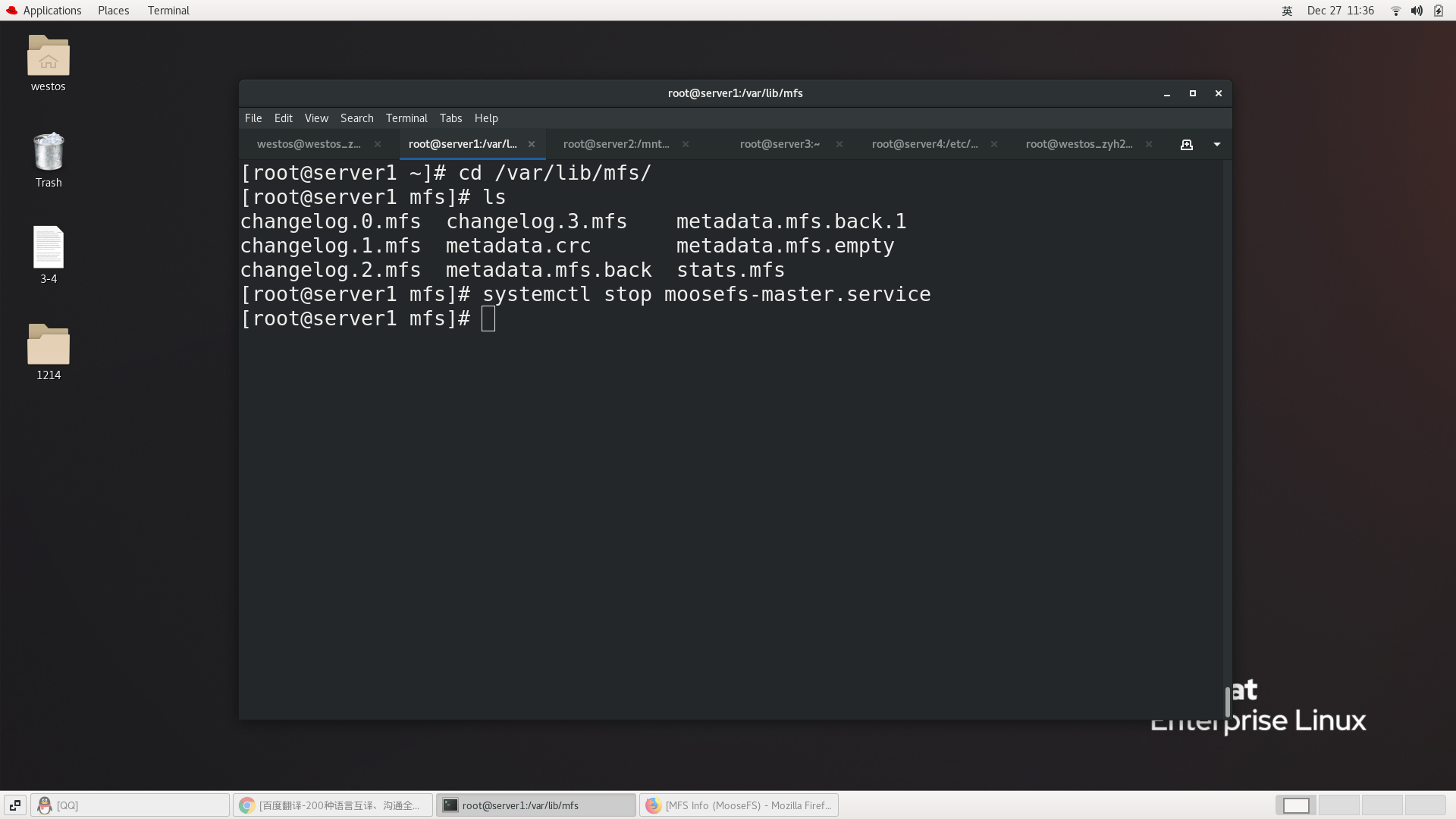

6. MFS high-availability cluster

Stop the moosefs-chunkserver service on server4.

Use server4 as the standby master.

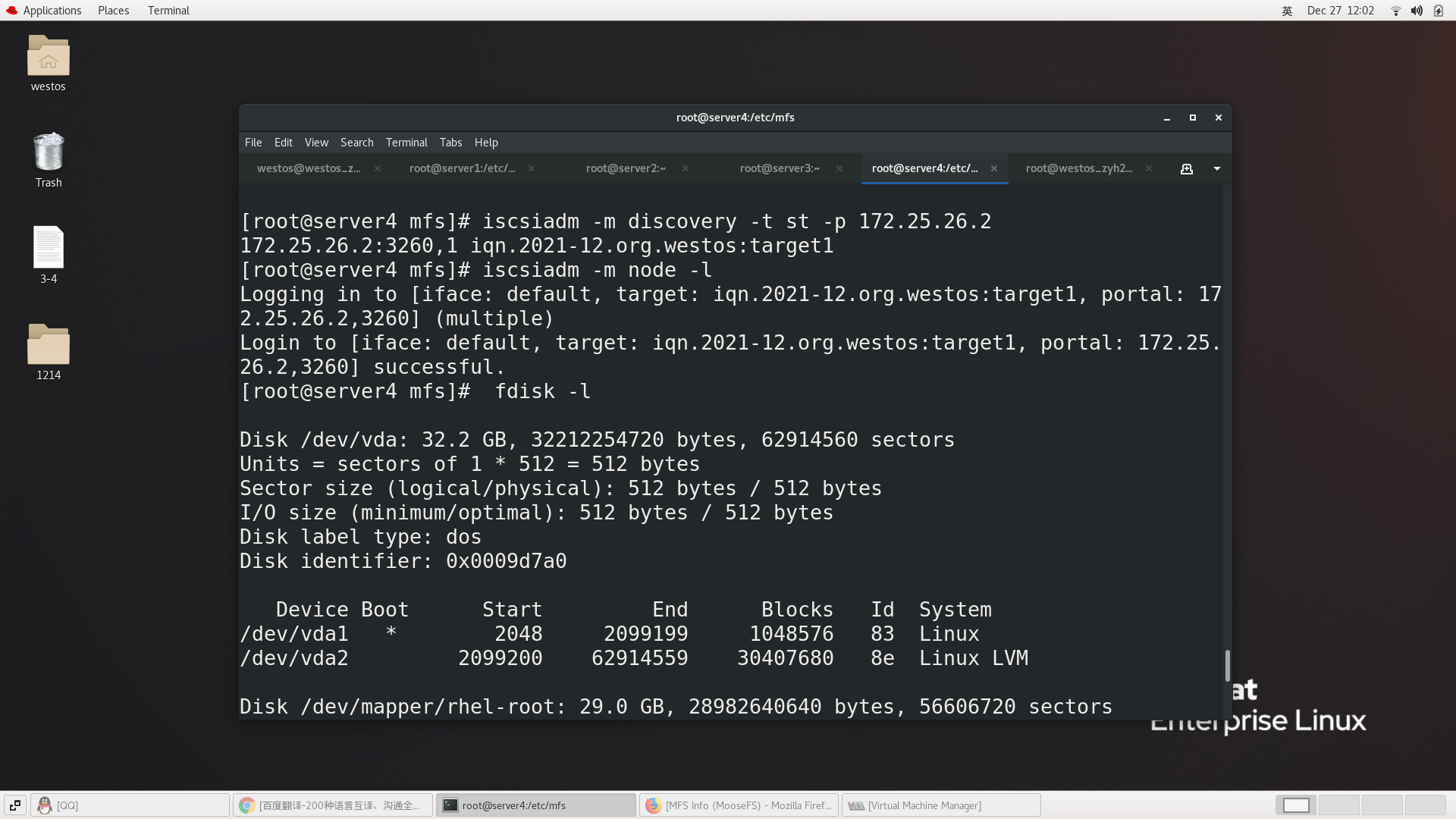

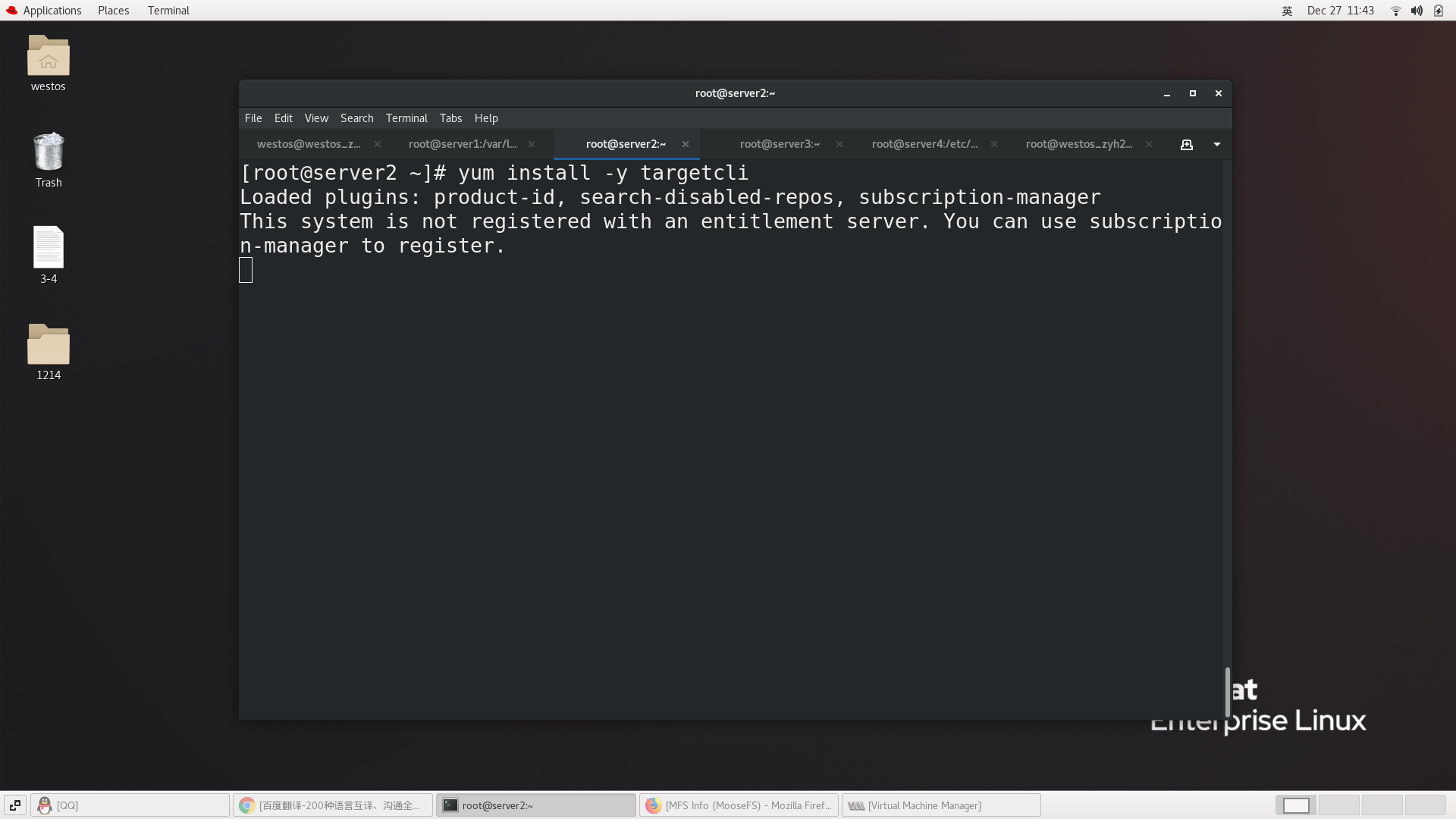

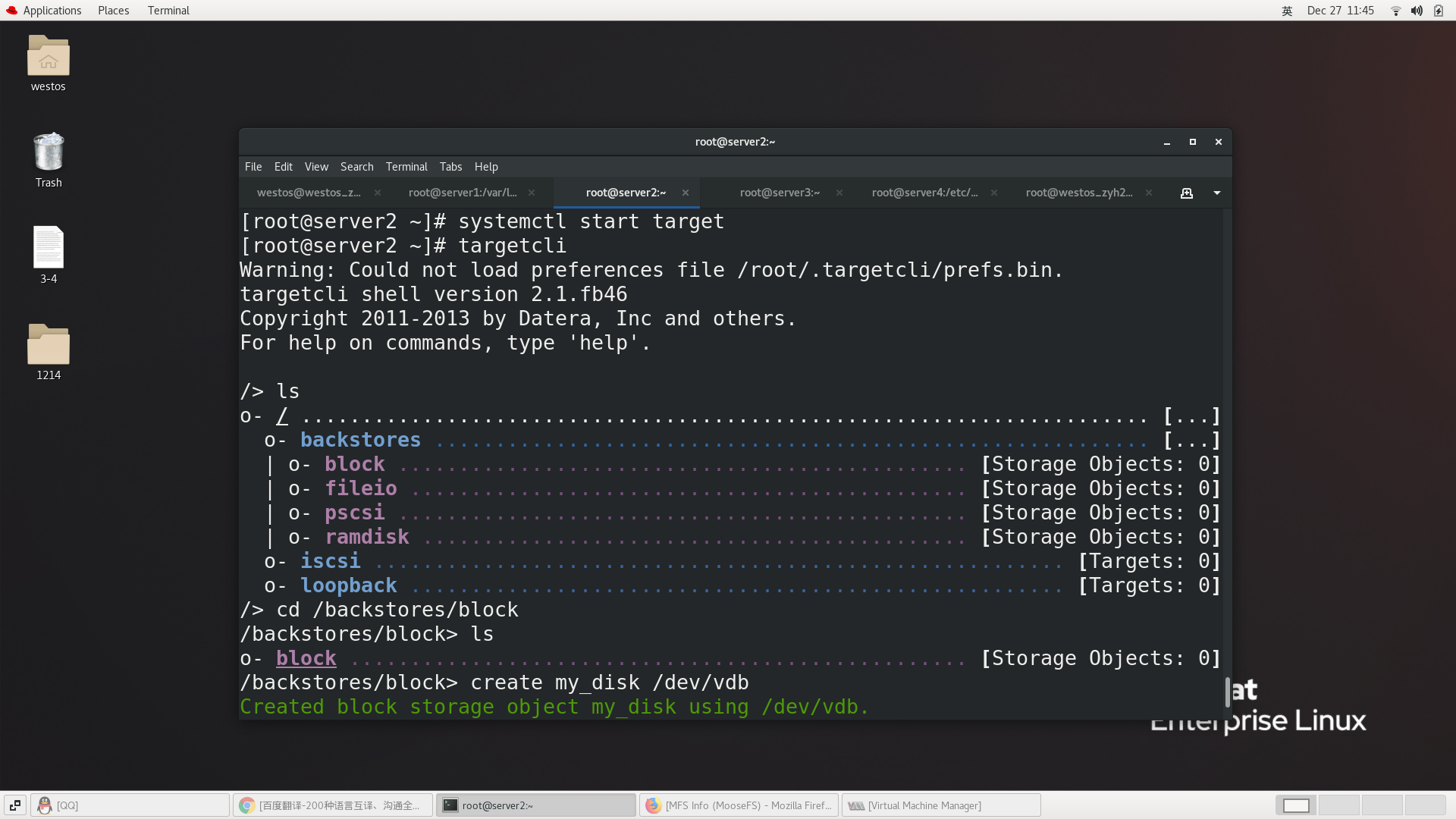

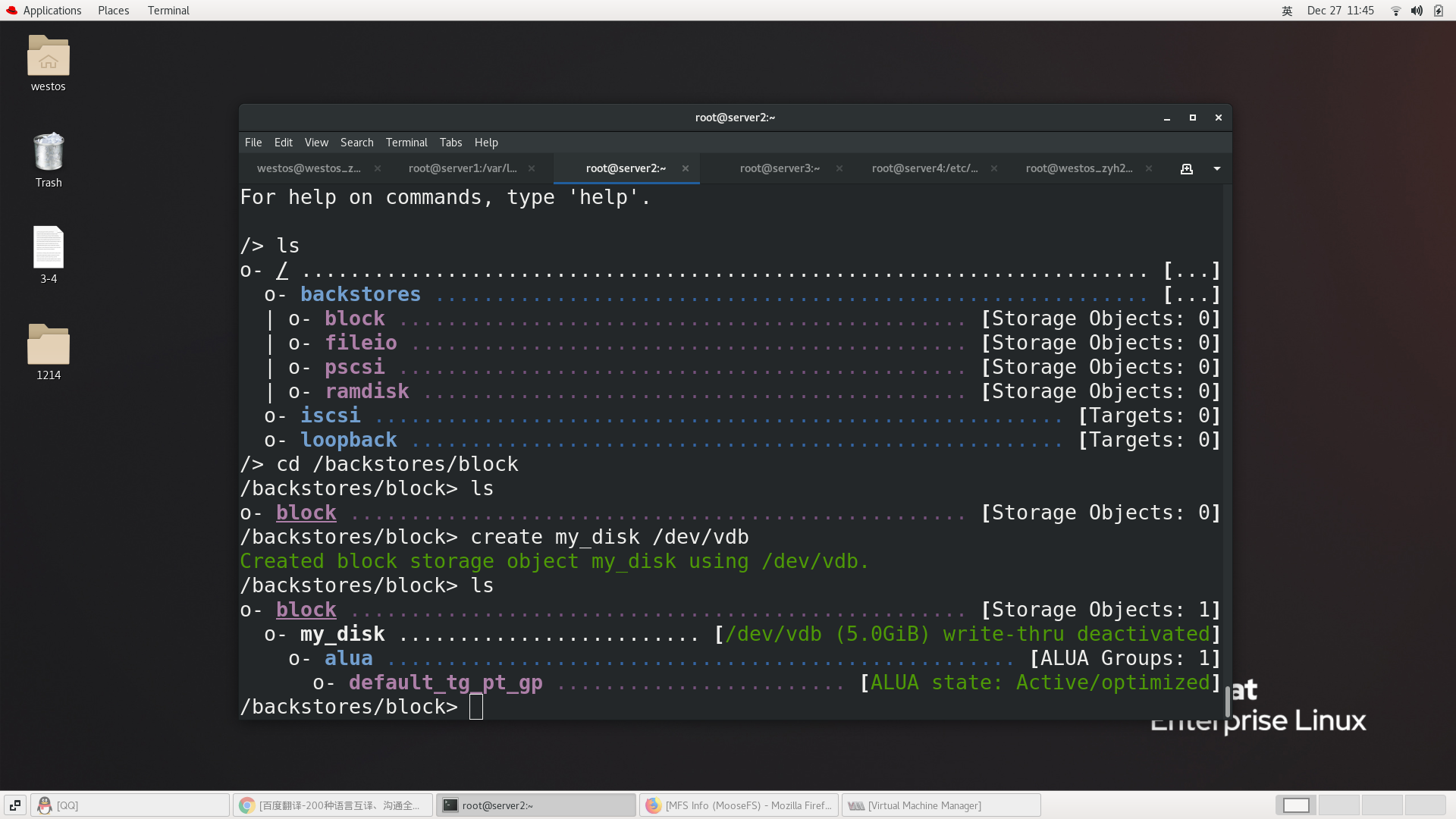

Configure the iSCSI target on server2

[root@server2 ~]# yum install targetcli -y   ##Install the target software
[root@server2 ~]# systemctl start target.service   ##Start target
[root@server2 ~]# targetcli
/> ls
o- / ......................................................... [...]
  o- backstores .............................................. [...]
  | o- block .................................. [Storage Objects: 0]
  | o- fileio ................................. [Storage Objects: 0]
  | o- pscsi .................................. [Storage Objects: 0]
  | o- ramdisk ................................ [Storage Objects: 0]
  o- iscsi ............................................ [Targets: 0]
  o- loopback ......................................... [Targets: 0]
/> cd backstores/block
/backstores/block> ls
o- block ...................................... [Storage Objects: 0]
/backstores/block> create my_disk /dev/vdc
Created block storage object my_disk using /dev/vdc.
/backstores/block> cd /iscsi
/iscsi> create iqn.2021-12.org.westos:target1
Created target iqn.2021-12.org.westos:target1.
Created TPG 1.
Global pref auto_add_default_portal=true
Created default portal listening on all IPs (0.0.0.0), port 3260.
/iscsi> cd iqn.2021-12.org.westos:target1/
/iscsi/iqn.20...estos:target1> cd tpg1/luns
/iscsi/iqn.20...et1/tpg1/luns> create /backstores/block/my_disk
Created LUN 0.
/iscsi/iqn.20...et1/tpg1/luns> cd ../acls
/iscsi/iqn.20...et1/tpg1/acls> create iqn.2021-12.org.westos:client
Created Node ACL for iqn.2021-12.org.westos:client
Created mapped LUN 0.
/iscsi/iqn.20...et1/tpg1/acls>
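The interactive session can also be run as one-shot `targetcli PATH COMMAND ...` invocations, which is easier to repeat on a rebuilt lab machine. This is a sketch that reuses the names from the session above; `run` and `setup_target` are hypothetical helpers, and `DRY_RUN=1` only prints the commands.

```bash
# Names taken from the interactive session above.
TARGET_IQN=iqn.2021-12.org.westos:target1
CLIENT_IQN=iqn.2021-12.org.westos:client
BACKING_DEV=/dev/vdc

# run (hypothetical helper): print the command when DRY_RUN=1, else execute it
run() {
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# setup_target (hypothetical helper): same steps as the targetcli session
setup_target() {
  run targetcli /backstores/block create my_disk "$BACKING_DEV"
  run targetcli /iscsi create "$TARGET_IQN"   # also creates TPG 1 and the 0.0.0.0:3260 portal
  run targetcli "/iscsi/$TARGET_IQN/tpg1/luns" create /backstores/block/my_disk
  run targetcli "/iscsi/$TARGET_IQN/tpg1/acls" create "$CLIENT_IQN"
  run targetcli saveconfig   # persist the configuration across reboots
}
```

Run `DRY_RUN=1 setup_target` first to review the commands, then `setup_target` as root on server2.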

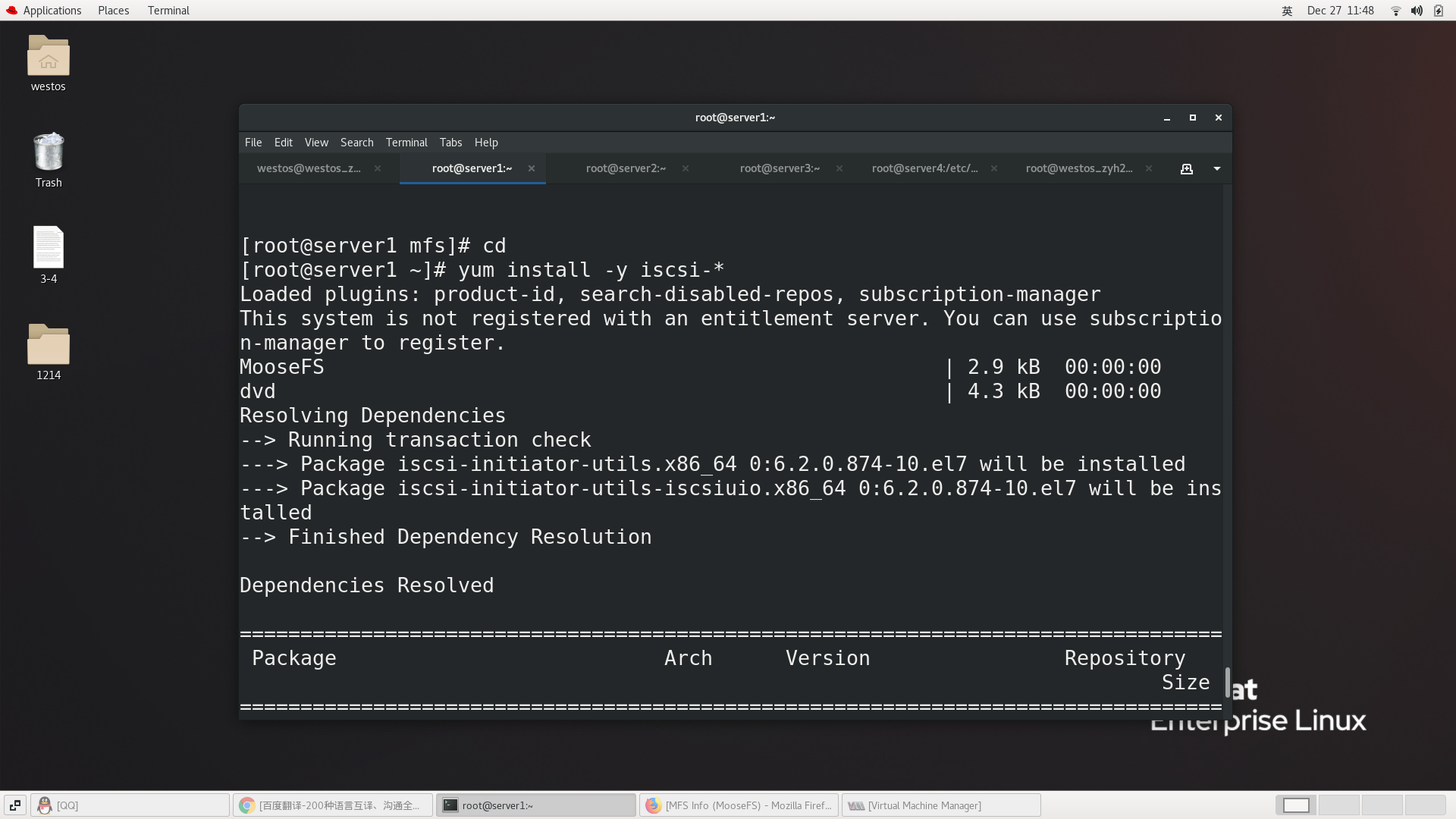

Configure server1

server1 and server4 are configured the same way.

[root@server1 ~]# yum install -y iscsi-* ##Installing iscsi

[root@server5 ~]# yum install -y iscsi-* ##Installing iscsi

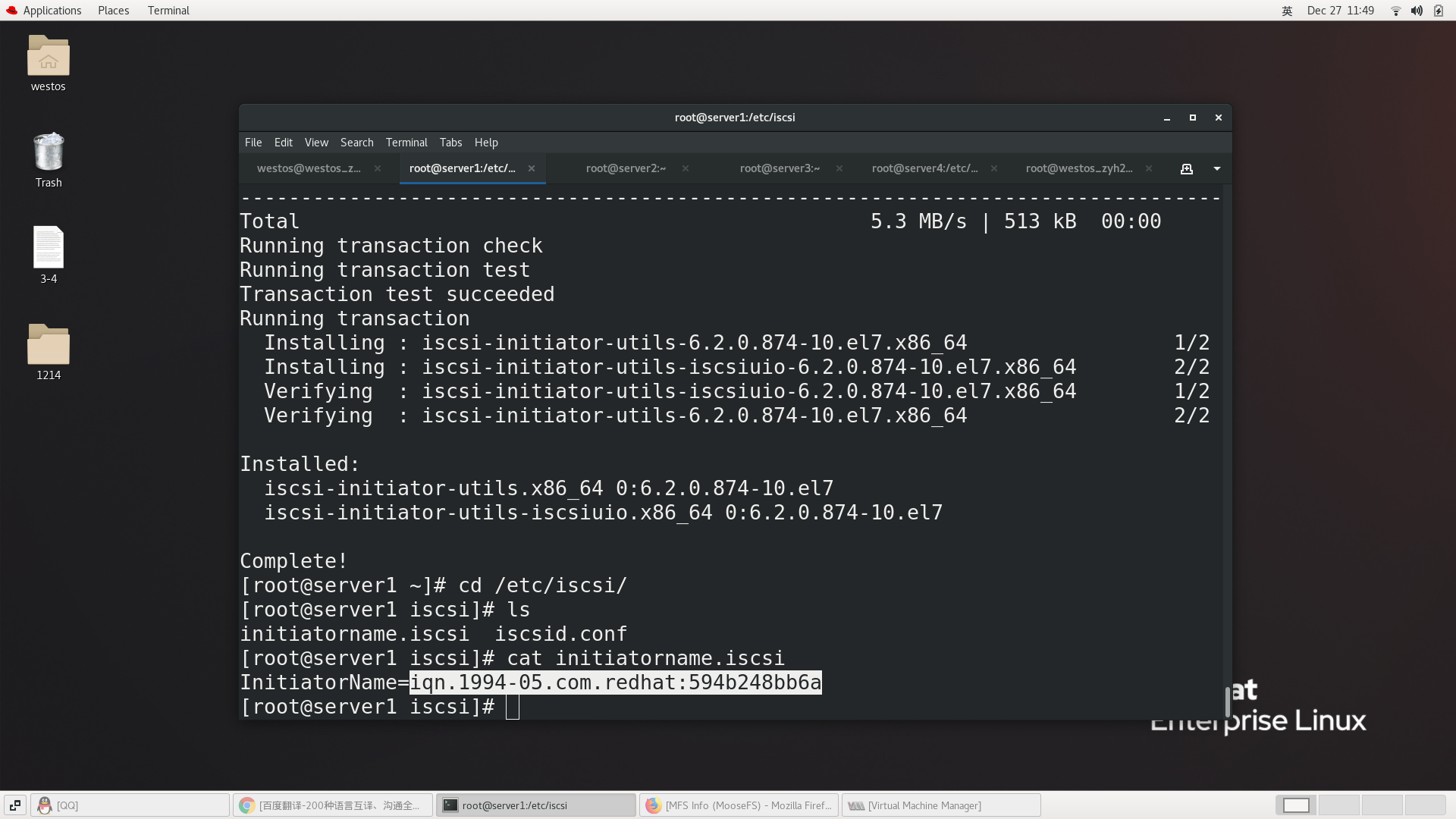

[root@server1 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.1994-05.com.redhat:347c8bed1ada

[root@server1 ~]# vim /etc/iscsi/initiatorname.iscsi

[root@server1 ~]# cat /etc/iscsi/initiatorname.iscsi

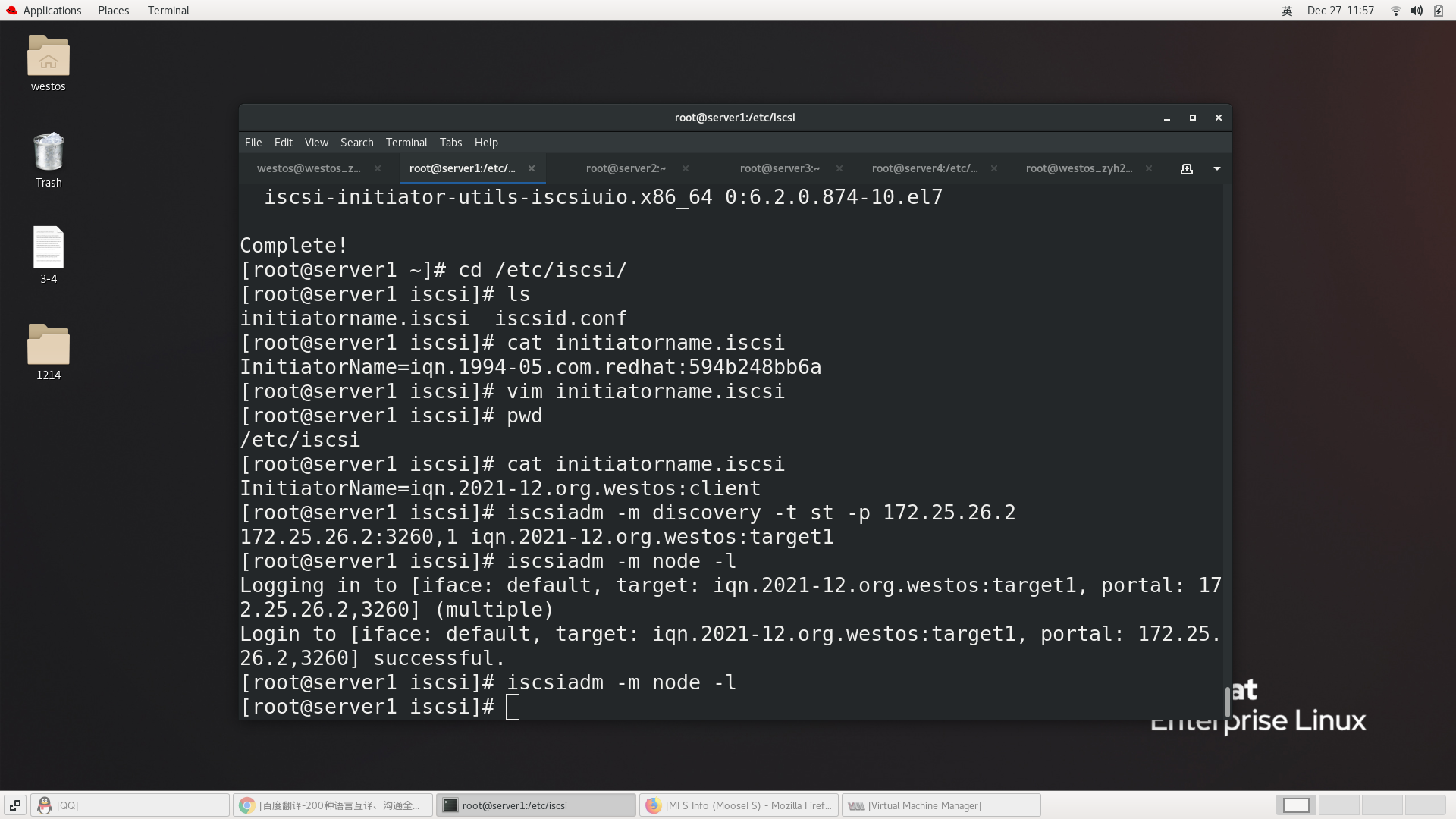

InitiatorName=iqn.2021-12.org.westos:client

[root@server1 ~]# systemctl status iscsi

● iscsi.service - Login and scanning of iSCSI devices

Loaded: loaded (/usr/lib/systemd/system/iscsi.service; enabled; vendor preset: disabled)

Active: inactive (dead)

Docs: man:iscsid(8)

man:iscsiadm(8)

[root@server1 ~]# systemctl is-enabled iscsi

enabled

[root@server1 ~]# systemctl is-enabled iscsid

disabled

[root@server1 ~]# iscsiadm -m discovery -t st -p 172.25.70.2   ##Discover the targets on the target host

172.25.70.2:3260,1 iqn.2021-12.org.westos:target1

[root@server1 ~]# iscsiadm -m node -l   ##Log in to the discovered targets

[root@server1 ~]# cd /var/lib/iscsi/ ##Enter iscsi folder

[root@server1 iscsi]# ls

ifaces isns nodes send_targets slp static

[root@server1 iscsi]# cd nodes/   ##Discovered target nodes

[root@server1 nodes]# ls

iqn.2021-12.org.westos:target1
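`iscsiadm -m node -l` logs in to every node recorded on the machine. To log in to only the targets offered by one portal, the IQNs can be taken from the discovery output. This is a sketch; `target_iqns` and `login_target` are hypothetical helpers around the standard `iscsiadm -m node -T IQN -p PORTAL -l` form.

```bash
# target_iqns (hypothetical helper): read `iscsiadm -m discovery -t st -p PORTAL`
# output on stdin (lines like "172.25.70.2:3260,1 iqn....") and print only
# the target IQNs.
target_iqns() {
  awk '$2 ~ /^iqn\./ { print $2 }'
}

# login_target PORTAL IQN (hypothetical helper): log in to one specific target
login_target() {
  iscsiadm -m node -T "$2" -p "$1" -l
}
```

Usage on server1:
`iscsiadm -m discovery -t st -p 172.25.70.2 | target_iqns | while read -r iqn; do login_target 172.25.70.2 "$iqn"; done`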

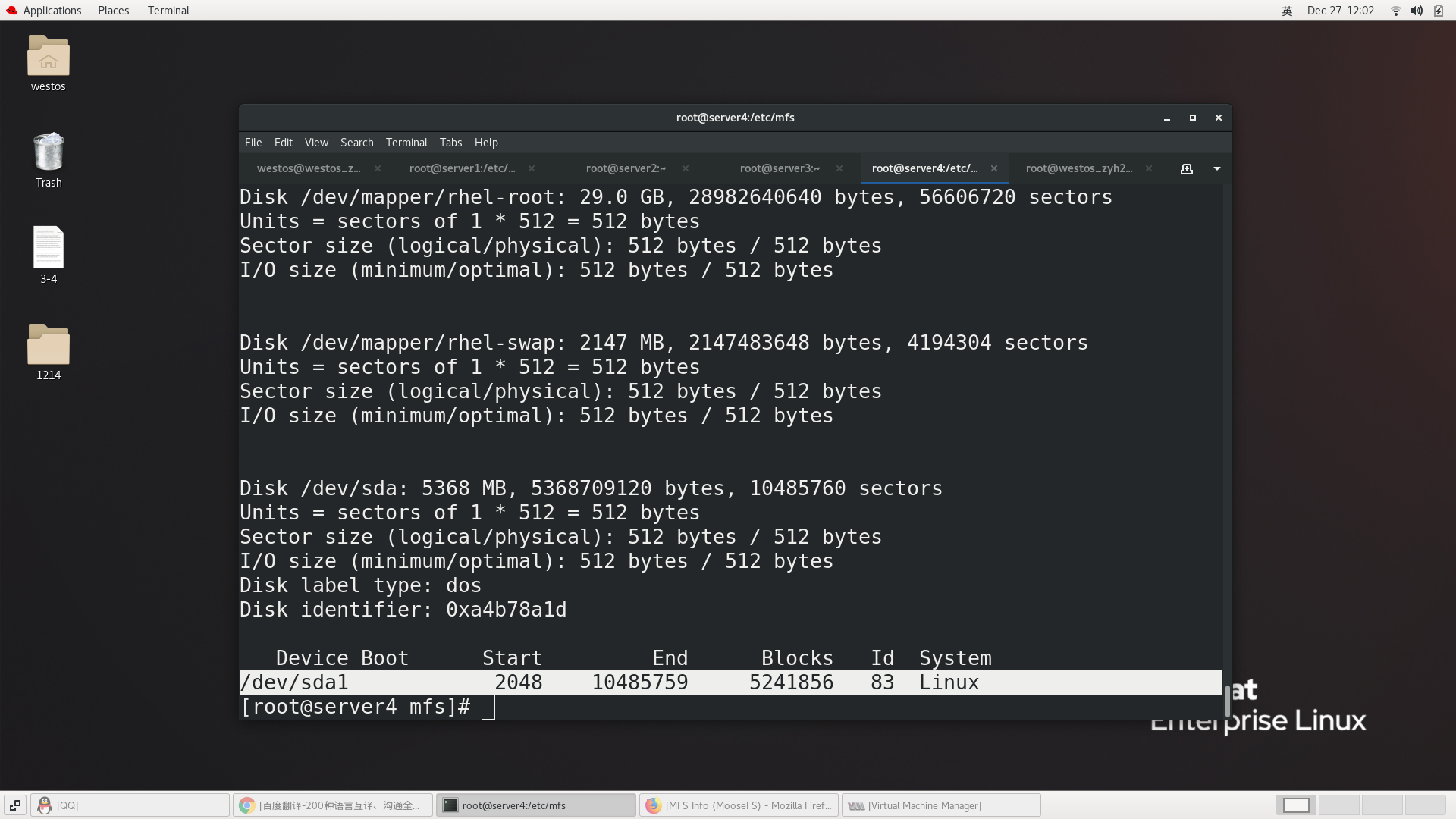

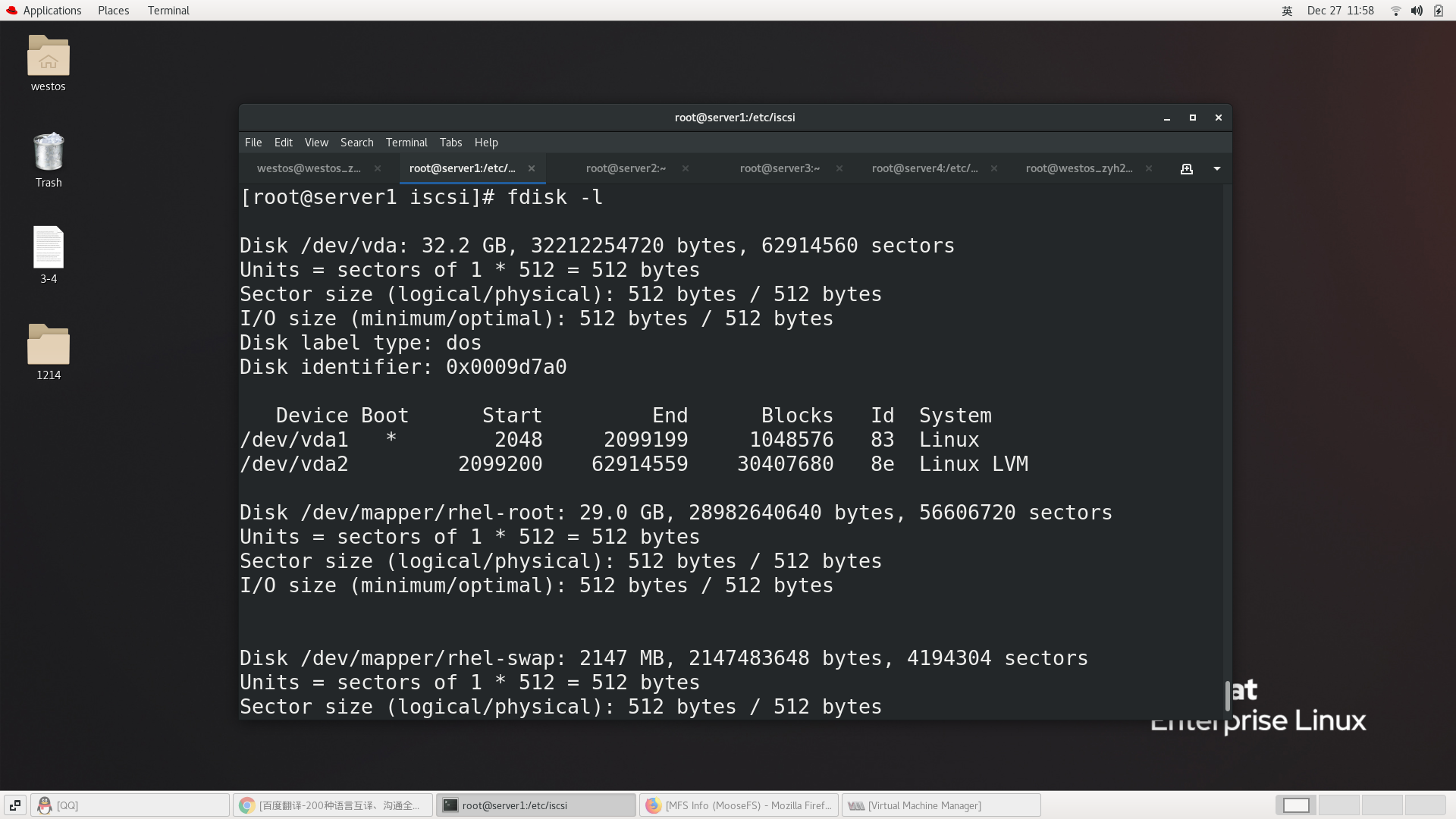

[root@server1 nodes]# fdisk -l   ##Check whether the shared disk is visible

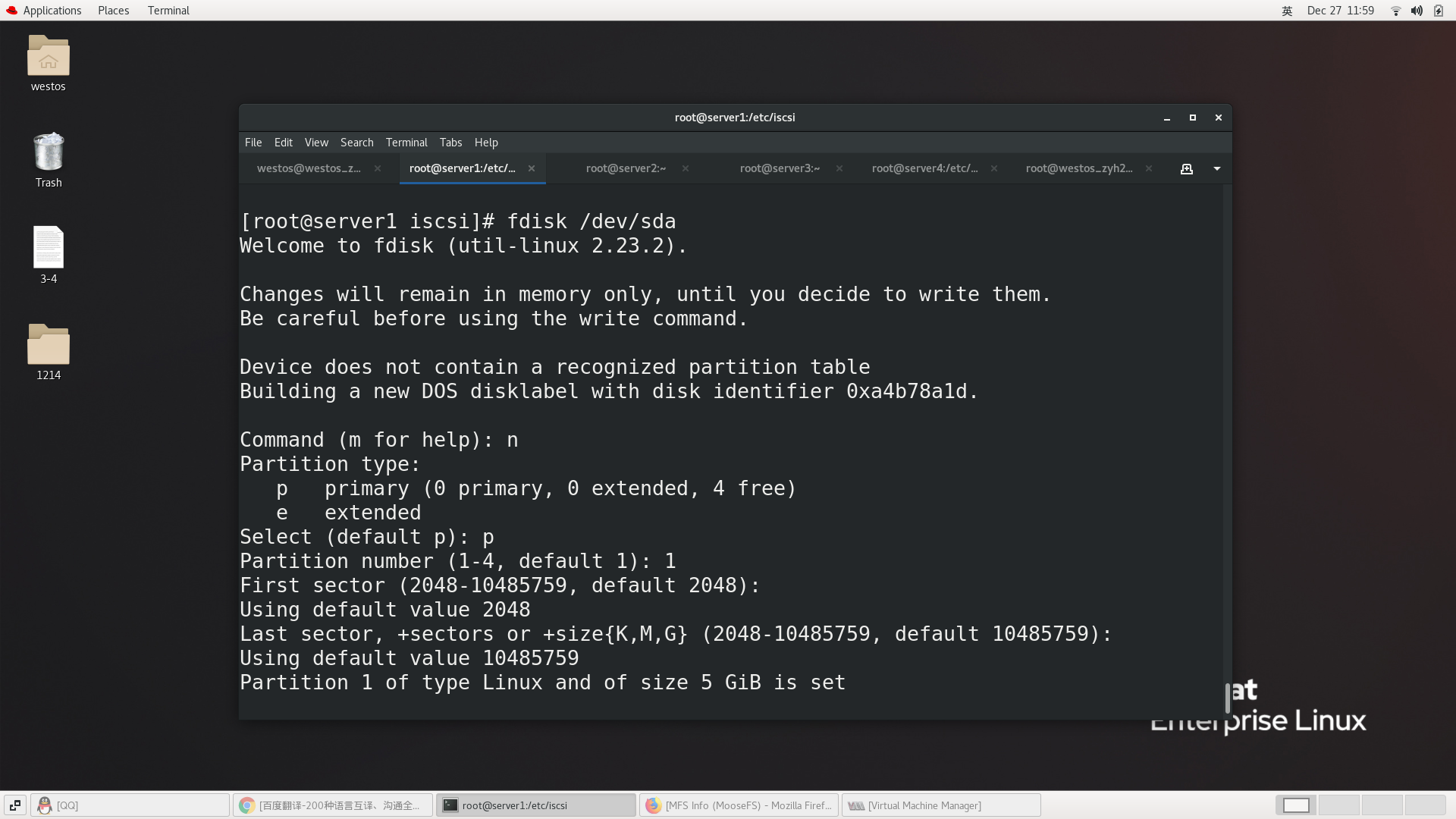

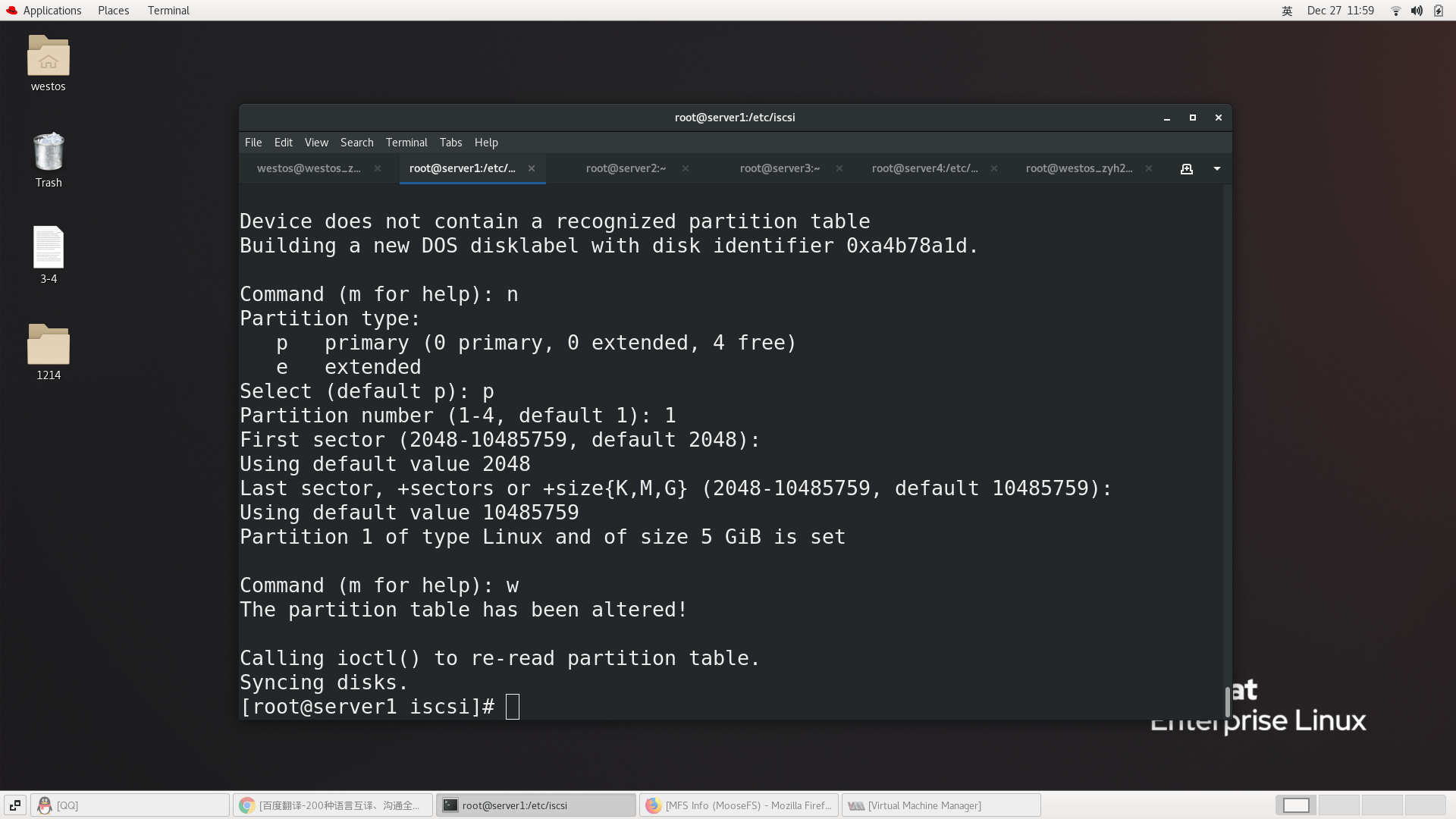

[root@server1 nodes]# fdisk /dev/sda   ##Create a single partition on the shared disk

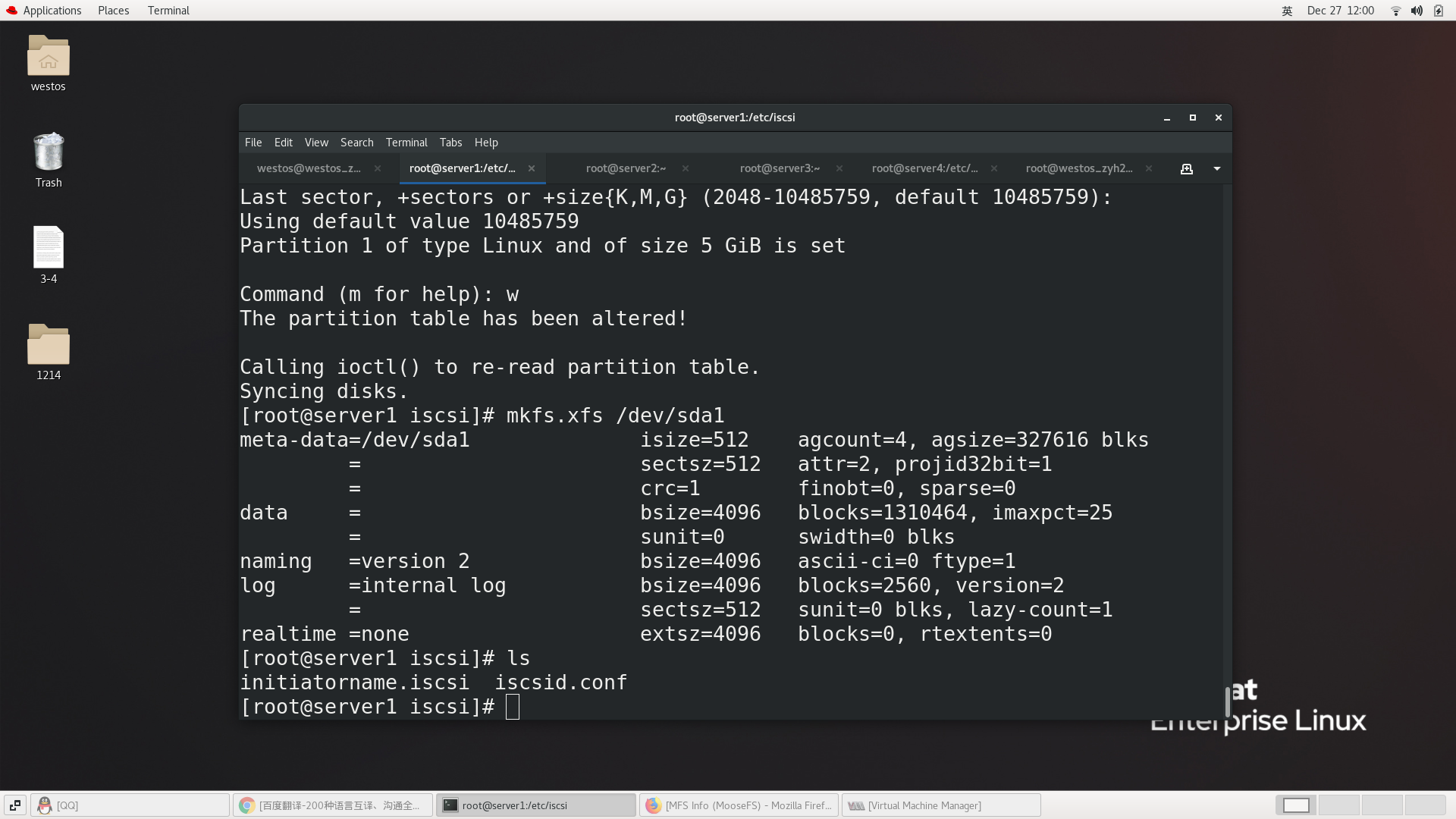

[root@server1 nodes]# cat /proc/partitions ##View disk information

major minor #blocks name

252 0 20971520 vda

252 1 1048576 vda1

252 2 19921920 vda2

253 0 17821696 dm-0

253 1 2097152 dm-1

8 0 10485760 sda

8 1 10484736 sda1

[root@server1 nodes]# partprobe

[root@server1 nodes]# mkfs.ext4 /dev/sda1 ##format

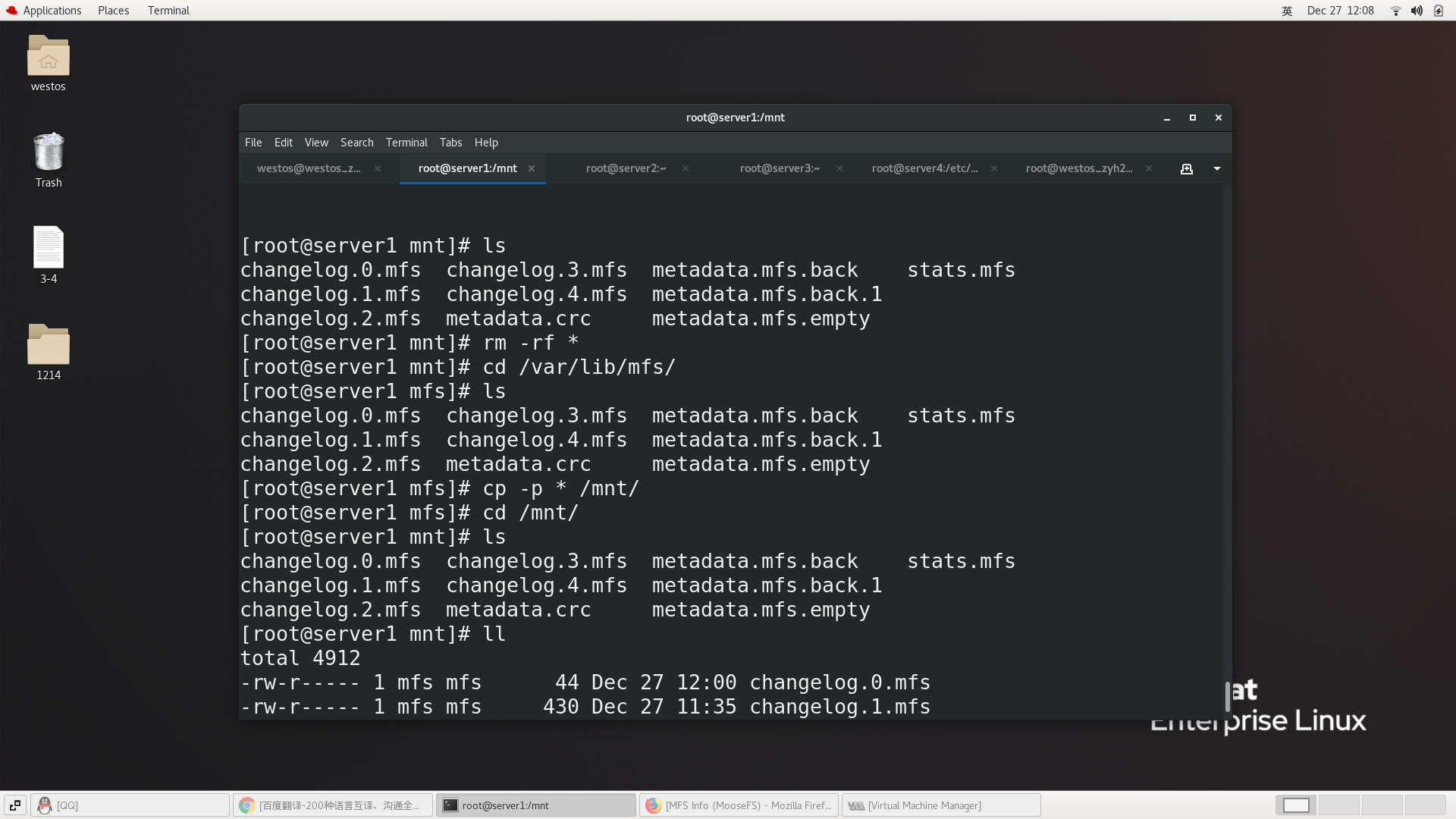

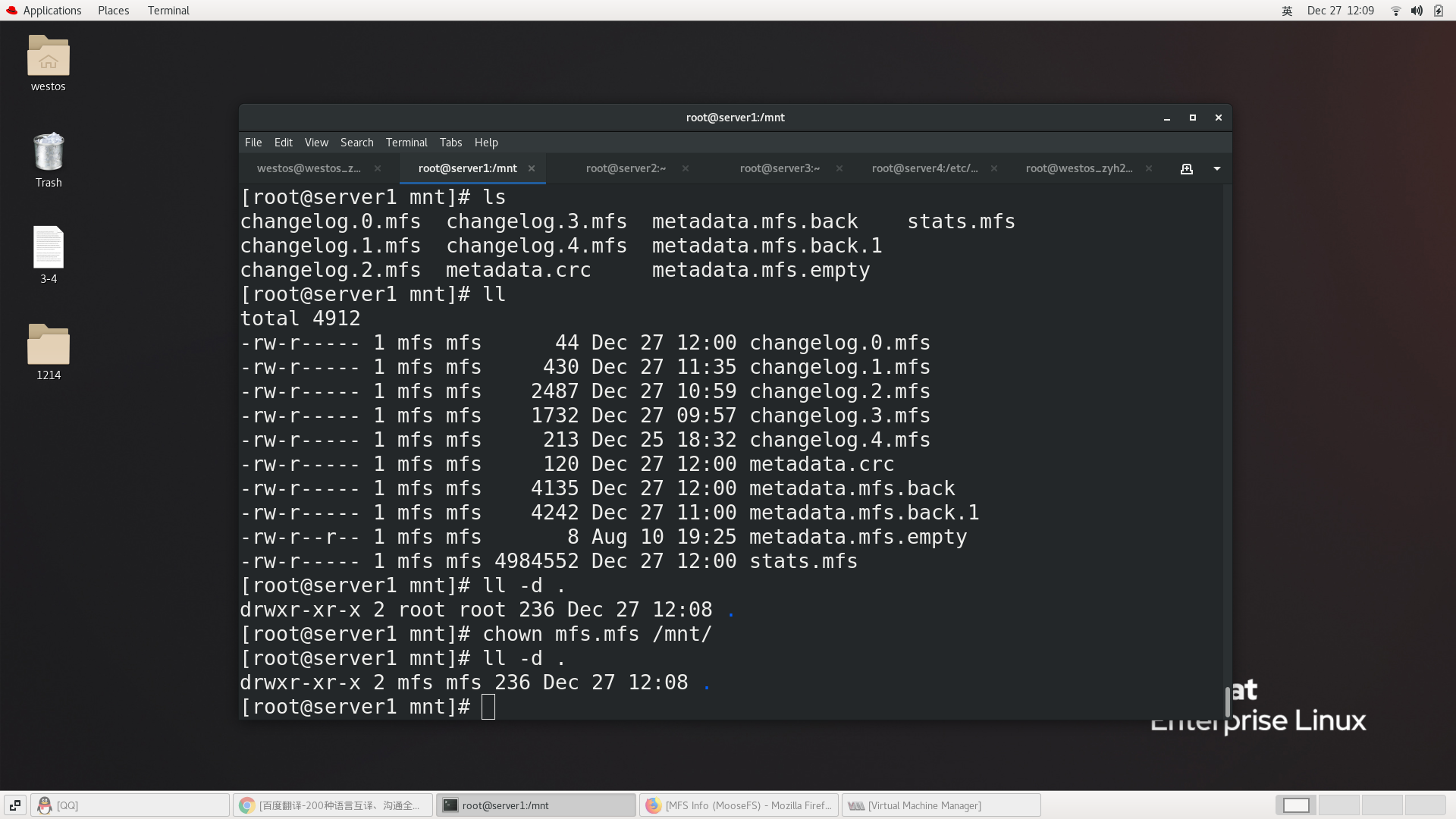

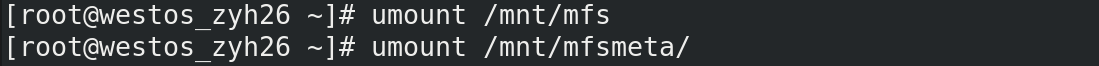

Synchronizing the metadata files

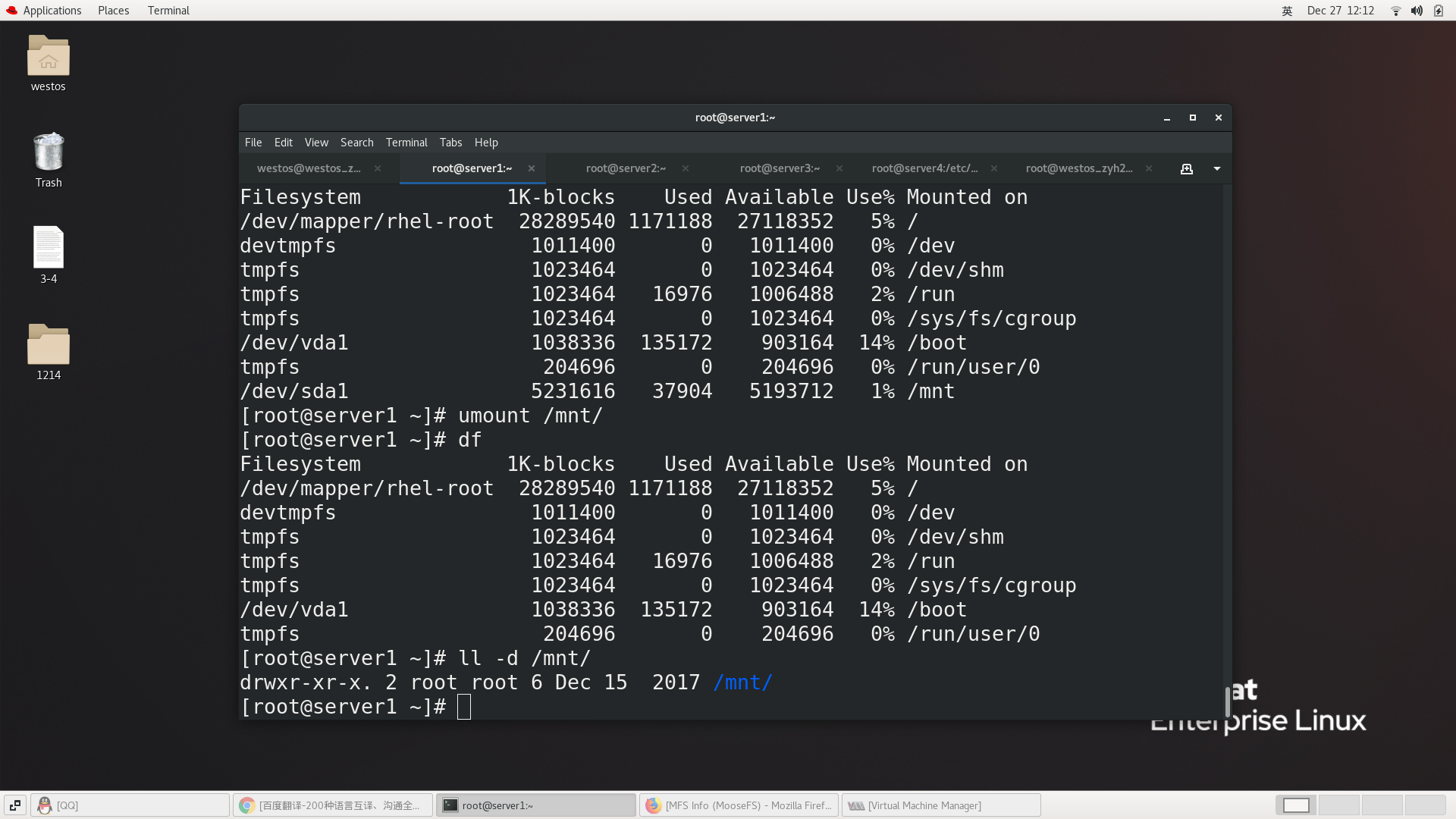

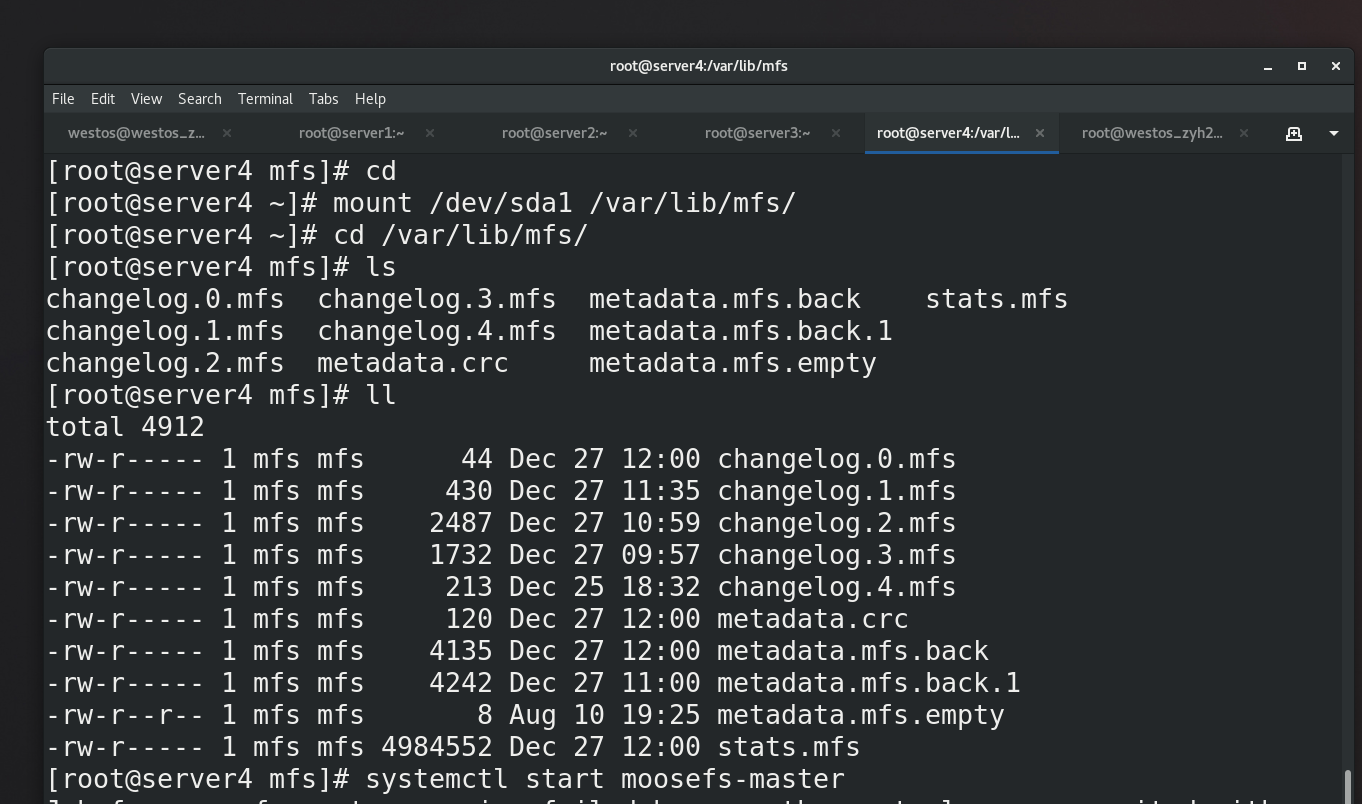

On server1:
[root@server1 ~]# mount /dev/sda1 /mnt/
[root@server1 ~]# df
/dev/sda1 10189076 36888 9611568 1% /mnt
[root@server1 ~]# ll -d /mnt/
drwxr-xr-x 3 root root 4096 Mar 19 04:06 /mnt/
[root@server1 ~]# id mfs
uid=997(mfs) gid=995(mfs) groups=995(mfs)
[root@server1 ~]# chown mfs.mfs /mnt/
[root@server1 ~]# ll -d /mnt/
drwxr-xr-x 3 mfs mfs 4096 Mar 19 04:06 /mnt/
[root@server1 ~]# cd /var/lib/mfs/
[root@server1 mfs]# ll
total 4908
-rw-r----- 1 mfs mfs     404 Mar 19 03:27 changelog.1.mfs
-rw-r----- 1 mfs mfs    1092 Mar 19 01:43 changelog.3.mfs
-rw-r----- 1 mfs mfs     679 Mar 19 00:53 changelog.4.mfs
-rw-r----- 1 mfs mfs    1864 Mar 18 23:59 changelog.5.mfs
-rw-r----- 1 mfs mfs     261 Mar 18 22:17 changelog.6.mfs
-rw-r----- 1 mfs mfs      45 Mar 18 21:49 changelog.7.mfs
-rw-r----- 1 mfs mfs     120 Mar 19 04:00 metadata.crc
-rw-r----- 1 mfs mfs    3569 Mar 19 04:00 metadata.mfs.back
-rw-r----- 1 mfs mfs    4001 Mar 19 03:00 metadata.mfs.back.1
-rwxr--r-- 1 mfs mfs       8 Oct  8 07:55 metadata.mfs.empty
-rw-r----- 1 mfs mfs 4984552 Mar 19 04:00 stats.mfs
[root@server1 mfs]# cp -p * /mnt/
[root@server1 mfs]# cd /mnt/
[root@server1 mnt]# ls
changelog.1.mfs  changelog.5.mfs  lost+found         metadata.mfs.back.1
changelog.3.mfs  changelog.6.mfs  metadata.crc       metadata.mfs.empty
changelog.4.mfs  changelog.7.mfs  metadata.mfs.back  stats.mfs
[root@server1 mnt]# cd
[root@server1 ~]# umount /mnt/   ##Unmount
[root@server1 ~]# mount /dev/sda1 /var/lib/mfs/
[root@server1 ~]# cd /var/lib/mfs/
[root@server1 mfs]# ls
changelog.1.mfs  changelog.5.mfs  lost+found         metadata.mfs.back.1
changelog.3.mfs  changelog.6.mfs  metadata.crc       metadata.mfs.empty
changelog.4.mfs  changelog.7.mfs  metadata.mfs.back  stats.mfs
[root@server1 mfs]# cd
[root@server1 ~]# umount /var/lib/mfs/
On server5:
[root@server5 ~]# cd /var/lib/mfs/   ##The contents differ from server1
[root@server5 mfs]# ls
chunkserverid.mfs csstats.mfs metadata.mfs metadata.mfs.empty
[root@server5 ~]# mount /dev/sda1 /var/lib/mfs/   ##Mount first; the master service must be stopped before it is started here, otherwise it has to be started again with -a. If an error occurs, run mfsmaster -a first
[root@server5 mfs]# systemctl status moosefs-master.service
[root@server5 mfs]# vim /usr/lib/systemd/system/moosefs-master.service   ##Edit the unit file
[root@server5 mfs]# cat /usr/lib/systemd/system/moosefs-master.service   ##Make the same change on server1
ExecStart=/usr/sbin/mfsmaster -a
[root@server5 mfs]# systemctl daemon-reload
[root@server5 mfs]# systemctl start moosefs-master
[root@server5 mfs]# systemctl stop moosefs-master   ##metadata.mfs is a required file
[root@server5 mfs]# ls
changelog.1.mfs  changelog.5.mfs  lost+found     metadata.mfs.back.1
changelog.3.mfs  changelog.6.mfs  metadata.crc   metadata.mfs.empty
changelog.4.mfs  changelog.7.mfs  metadata.mfs   stats.mfs
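Editing /usr/lib/systemd/system/moosefs-master.service directly works, but a package update may overwrite the file. A common alternative is a systemd drop-in, which is what `systemctl edit moosefs-master` creates. The sketch below writes one under a given root directory; `write_master_dropin` is a hypothetical helper, and it assumes the packaged unit starts mfsmaster in its default daemonizing mode, so that only ExecStart needs to change.

```bash
# write_master_dropin ROOT (hypothetical helper): create
# ROOT/moosefs-master.service.d/override.conf so that moosefs-master starts
# with `mfsmaster -a` (restore metadata automatically on start).
# ASSUMPTION: the packaged unit's other settings (e.g. Type=) stay valid.
write_master_dropin() {
  local dir="$1/moosefs-master.service.d"
  mkdir -p "$dir"
  cat > "$dir/override.conf" <<'EOF'
[Service]
# An empty ExecStart= clears the command from the packaged unit first
ExecStart=
ExecStart=/usr/sbin/mfsmaster -a
EOF
}
```

Usage on both server1 and server5: `write_master_dropin /etc/systemd/system && systemctl daemon-reload`.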