Installation of mpi4py

We will use the MPI for Python package, mpi4py. If you have a clean geo_scipy environment, as described in Ryan's Python installation instructions on this website, you should be able to install it with conda without any problems. First, open a terminal and activate geo_scipy:

```bash
source activate geo_scipy
```

(or you can launch it from the Anaconda application)

Then install mpi4py:

```bash
conda install mpi4py
```
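To confirm that the package was installed into the active environment, a quick check along these lines may help. It is a minimal sketch: the helper name check_mpi4py is hypothetical. It assumes only that importing mpi4py.MPI succeeds once the package and its MPI library are present. MPI.Get_version() reports the version of the MPI standard that the underlying library supports.

```python
import importlib.util


def check_mpi4py():
    """Hypothetical helper: return the MPI standard version as a
    (version, subversion) tuple if mpi4py is installed, else None."""
    if importlib.util.find_spec("mpi4py") is None:
        return None  # not installed in the active environment
    from mpi4py import MPI  # importing mpi4py.MPI initializes MPI
    return MPI.Get_version()


if __name__ == "__main__":
    version = check_mpi4py()
    if version is None:
        print("mpi4py not found; is geo_scipy activated?")
    else:
        print("mpi4py OK, MPI standard version %d.%d" % version)
```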

What is mpi4py?

MPI for Python provides MPI bindings for the Python language, allowing programmers to take advantage of multiprocessor computing systems. mpi4py is built on top of the MPI-1/2 specifications and provides an object-oriented interface that closely follows the MPI-2 C++ bindings.

mpi4py documentation

The documentation of mpi4py can be found here: https://mpi4py.scipy.org/

However, it is still a work in progress, and much of it assumes that you are already familiar with the MPI standard. Therefore, you may also need to consult the MPI standard documentation.

The MPI documentation covers only the C and Fortran bindings, but translating them to Python syntax is straightforward, and in most cases the Python version is much simpler than the equivalent C or Fortran statements.

Another useful place to look for help is mpi4py's API reference:

https://mpi4py.scipy.org/docs/apiref/mpi4py.MPI-module.html

In particular, the Class Comm section lists all the methods you can use with the communicator object:

https://mpi4py.scipy.org/docs/apiref/mpi4py.MPI.Comm-class.html

## Running Python scripts using MPI

Python programs that use MPI commands must be started with an MPI launcher, which is provided by the mpirun command. On some systems this command is called mpiexec instead. mpi4py appears to include both.

To make sure your environment is set up correctly, use the Unix command which to check that mpirun is found in the geo_scipy environment in your anaconda directory:

```
$ which mpirun
/anaconda/envs/geo_scipy/bin/mpirun
```
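If you prefer to run the same check from Python (for example, inside a setup script), the standard library's shutil.which performs the same PATH lookup as the Unix which command. The sketch below uses a hypothetical helper name and looks for both launcher names, mpirun and mpiexec. A result of None for both suggests that the environment is not activated.

```python
import shutil


def find_mpi_launchers():
    """Hypothetical helper: map each common MPI launcher name to its
    full path on PATH, or None if it is not found."""
    return {name: shutil.which(name) for name in ("mpirun", "mpiexec")}


if __name__ == "__main__":
    for name, path in find_mpi_launchers().items():
        print(name, "->", path if path else "not found")
```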

You can run an MPI Python script with mpirun using the following command:

```bash
mpirun -n 4 python script.py
```

Here, -n 4 tells MPI to use four processes, which is the number of cores on my laptop. Then we tell MPI to run the Python script named script.py.

If you are running this on a desktop computer, set the -n argument to the number of cores on your system or to the maximum number of processes your job needs, whichever is smaller. On a large cluster, you would instead specify the number of cores your program needs, or the maximum number of cores available on that cluster.
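The desktop rule of thumb above (the number of cores or the number of processes the job needs, whichever is smaller) is easy to compute. This is a minimal sketch with hypothetical function names, and script.py is a placeholder. It assumes os.cpu_count() is a fair stand-in for the number of cores. Note that os.cpu_count() counts logical CPUs, which can exceed physical cores on hyperthreaded machines. On a cluster, the scheduler's allocation is what matters, so treat this as a desktop convenience only.

```python
import os


def choose_num_procs(max_needed):
    """Hypothetical helper: the smaller of the logical CPU count and
    the maximum number of processes the job can use."""
    cores = os.cpu_count() or 1  # cpu_count() may return None
    return max(1, min(cores, max_needed))


def mpirun_command(script, max_needed):
    """Build the mpirun argument list for the given script."""
    return ["mpirun", "-n", str(choose_num_procs(max_needed)), "python", script]


if __name__ == "__main__":
    print(" ".join(mpirun_command("script.py", max_needed=4)))
```

The returned list can be printed, as here, or passed to subprocess.run if you want to launch the job from Python.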

Communicators and ranks

Our first MPI for Python example simply imports MPI from the mpi4py package, creates a communicator, and gets the rank of each process:

```python
from mpi4py import MPI

comm = MPI.COMM_WORLD
rank = comm.Get_rank()

print('My rank is ', rank)
```

Save this file as comm.py and run it:

```bash
mpirun -n 4 python comm.py
```

Here we used the default communicator, named MPI.COMM_WORLD, which consists of all the processors. For many MPI codes, this is the main communicator you will need. However, you can also create custom communicators from subsets of the processors in MPI.COMM_WORLD. See the documentation for more information.
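As an illustration of a custom communicator: mpi4py's comm.Split(color, key) divides a communicator so that processes passing the same color end up in the same new communicator. Within it, they are ranked by key, with ties broken by their original rank. The sketch below is only a serial model of that grouping rule, so it runs without MPI, and its function name is hypothetical. It ignores the special MPI.UNDEFINED color. With mpi4py itself, the call would look like sub = comm.Split(color=rank % 2, key=rank).

```python
def split_groups(size, color_of, key_of=lambda r: r):
    """Serial model of comm.Split: for each color, list the original
    ranks in the order of their new ranks (index 0 = new rank 0)."""
    groups = {}
    for r in range(size):
        groups.setdefault(color_of(r), []).append(r)
    # Within a color, new ranks follow the key; ties keep the old rank order.
    return {c: sorted(ranks, key=lambda r: (key_of(r), r))
            for c, ranks in groups.items()}


if __name__ == "__main__":
    # Even and odd world ranks form two sub-communicators when size=4.
    print(split_groups(4, color_of=lambda r: r % 2))  # {0: [0, 2], 1: [1, 3]}
```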

Point-to-point communication

Now we'll look at how to pass data from one process to another. Here is a very simple example, where we pass a dictionary from process 0 to process 1:

```python
from mpi4py import MPI

comm = MPI.COMM_WORLD
rank = comm.Get_rank()

if rank == 0:
    data = {'a': 7, 'b': 3.14}
    comm.send(data, dest=1)
elif rank == 1:
    data = comm.recv(source=0)
    print('On process 1, data is ', data)
```

Here we send a dictionary, but you can also send an integer with similar code:

```python
from mpi4py import MPI

comm = MPI.COMM_WORLD
rank = comm.Get_rank()

if rank == 0:
    idata = 1
    comm.send(idata, dest=1)
elif rank == 1:
    idata = comm.recv(source=0)
    print('On process 1, data is ', idata)
```

Notice that comm.send and comm.recv use a lowercase s and r. These lowercase methods can communicate generic Python objects.
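The lowercase methods work by pickling the object on the sending rank and unpickling it on the receiving rank. As a result, the receiver gets an equal but independent copy, and anything you send must be picklable. The round trip below imitates that serially with the standard pickle module. It is a sketch of the idea, not mpi4py's internal code path.

```python
import pickle

data = {'a': 7, 'b': 3.14}

# Conceptually what comm.send does: serialize the object to bytes...
payload = pickle.dumps(data)
# ...and what comm.recv does on the other rank: rebuild the object.
received = pickle.loads(payload)

print(type(payload).__name__)        # bytes
print(received == data, received is data)  # True False
```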

Now let's try a more complex example, where we send a numpy array:

```python
from mpi4py import MPI
import numpy as np

comm = MPI.COMM_WORLD
rank = comm.Get_rank()

if rank == 0:
    # in real code, this section might
    # read in data parameters from a file
    numData = 10
    comm.send(numData, dest=1)

    data = np.linspace(0.0, 3.14, numData)
    comm.Send(data, dest=1)

elif rank == 1:
    numData = comm.recv(source=0)
    print('Number of data to receive: ', numData)

    data = np.empty(numData, dtype='d')  # allocate space to receive the array
    comm.Recv(data, source=0)

    print('data received: ', data)
```

Notice that comm.Send and comm.Recv use an uppercase S and R for sending and receiving numpy arrays. The uppercase methods communicate buffer-like objects such as numpy arrays directly, without pickling, which is typically much faster for large arrays.
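Send and Recv move the array's raw memory, and mpi4py infers the MPI datatype from each buffer. The receive buffer should therefore be allocated with the same dtype and length as the array being sent. The serial sketch below imitates the byte copy with tobytes and frombuffer. It shows that np.linspace produces float64 values (the same as dtype 'd'), and that reading the same bytes as a different dtype gives meaningless numbers. It models the idea only and does not call MPI.

```python
import numpy as np

numData = 10
sent = np.linspace(0.0, 3.14, numData)
print(sent.dtype)  # float64, i.e. dtype 'd'

raw = sent.tobytes()  # roughly the payload Send transfers: 8 bytes per value

# Matching buffer, as in the example: np.empty(numData, dtype='d')
good = np.empty(numData, dtype='d')
good[:] = np.frombuffer(raw, dtype='d')

# Mismatched dtype: the same 80 bytes read as float32 give 20 unrelated values
bad = np.frombuffer(raw, dtype='f')

print(np.array_equal(good, sent), bad.size)  # True 20
```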

Collective communication

Broadcasting:

Broadcast takes a variable and sends an exact copy of it to all processes in a communicator. Here are some examples:

Broadcasting a dictionary:

```python
from mpi4py import MPI

comm = MPI.COMM_WORLD
rank = comm.Get_rank()

if rank == 0:
    data = {'key1': [1, 2, 3],
            'key2': ('abc', 'xyz')}
else:
    data = None

data = comm.bcast(data, root=0)
print('Rank: ', rank, ', data: ', data)
```

You can broadcast a numpy array using the Bcast method (note the capital B again). Here, we modify the earlier point-to-point code to broadcast the array data to all processes in the communicator (not just from process 0 to process 1):

```python
from mpi4py import MPI
import numpy as np

comm = MPI.COMM_WORLD
rank = comm.Get_rank()

if rank == 0:
    # create a data array on process 0
    # in real code, this section might
    # read in data parameters from a file
    numData = 10
    data = np.linspace(0.0, 3.14, numData)
else:
    numData = None

# broadcast numData and allocate array on other ranks:
numData = comm.bcast(numData, root=0)
if rank != 0:
    data = np.empty(numData, dtype='d')

comm.Bcast(data, root=0)  # broadcast the array from rank 0 to all others

print('Rank: ', rank, ', data received: ', data)
```

Scattering:

Scatter takes an array and distributes contiguous sections of it across the ranks of a communicator. The image below, from http://mpitutorial.com, illustrates the difference between broadcast and scatter.
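The difference can also be sketched serially. Assume 4 ranks and an 8-element array on the root. With broadcast, every rank ends up with the whole array. With scatter, rank r receives only the r-th contiguous chunk. The helper names below are hypothetical, and no MPI is involved.

```python
import numpy as np


def broadcast_view(data, size):
    """What each rank holds after bcast/Bcast from the root: a full copy."""
    return [data.copy() for _ in range(size)]


def scatter_view(data, size):
    """What each rank holds after Scatter: one equal, contiguous chunk.
    Scatter sends the same count to every rank, so this model requires
    the array length to be a multiple of size."""
    assert data.size % size == 0, "array length must divide evenly"
    n = data.size // size
    return [data[r*n:(r+1)*n].copy() for r in range(size)]


if __name__ == "__main__":
    data = np.arange(8.0)
    pairs = zip(broadcast_view(data, 4), scatter_view(data, 4))
    for r, (b, s) in enumerate(pairs):
        print('rank', r, 'bcast:', b, 'scatter:', s)
```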

Now let's try an example.

```python
from mpi4py import MPI
import numpy as np

comm = MPI.COMM_WORLD
size = comm.Get_size()  # new: gives number of ranks in comm
rank = comm.Get_rank()

numDataPerRank = 10
data = None
if rank == 0:
    data = np.linspace(1, size*numDataPerRank, numDataPerRank*size)
    # when size=4 (using -n 4), data = [1.0:40.0]

recvbuf = np.empty(numDataPerRank, dtype='d')  # allocate space for recvbuf
comm.Scatter(data, recvbuf, root=0)

print('Rank: ', rank, ', recvbuf received: ', recvbuf)
```

In this example, the process with rank 0 creates the array data. Since this is just a toy example, we made a simple linspace array, but in research code the data might be read in from a file or generated by a previous part of the workflow. The data are then distributed to all ranks (including rank 0) using comm.Scatter. Note that we must first initialize (allocate) the receive buffer array recvbuf on every rank.

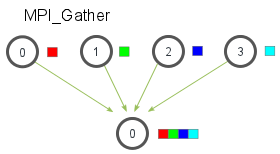
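Scatter sends the same number of elements to every rank, so the example relies on the array length being a multiple of the number of ranks. When it is not, MPI's Scatterv takes explicit per-rank counts and displacements (starting offsets). The helper below (a hypothetical name) computes one common layout, which gives the first n % size ranks one extra element. In mpi4py, these lists would then be passed roughly as comm.Scatterv([data, counts, displs, MPI.DOUBLE], recvbuf, root=0). Each rank would allocate a recvbuf of length counts[rank]. Treat that call as a sketch to check against the mpi4py API reference.

```python
def scatterv_layout(n, size):
    """Hypothetical helper: per-rank counts and starting offsets
    (displacements) for splitting n elements over `size` ranks."""
    base, extra = divmod(n, size)
    counts = [base + 1 if r < extra else base for r in range(size)]
    displs = [0] * size
    for r in range(1, size):
        displs[r] = displs[r - 1] + counts[r - 1]  # chunks are contiguous
    return counts, displs


if __name__ == "__main__":
    print(scatterv_layout(10, 4))  # ([3, 3, 2, 2], [0, 3, 6, 8])
```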

Gathering:

Gather is the inverse of scatter. It takes subsets of an array that are distributed across the ranks and gathers them back into the full array. The image below, from http://mpitutorial.com, illustrates this graphically.

For example, here is the reverse of the scatter example above:

```python
from mpi4py import MPI
import numpy as np

comm = MPI.COMM_WORLD
size = comm.Get_size()
rank = comm.Get_rank()

numDataPerRank = 10
sendbuf = np.linspace(rank*numDataPerRank+1, (rank+1)*numDataPerRank, numDataPerRank)
print('Rank: ', rank, ', sendbuf: ', sendbuf)

recvbuf = None
if rank == 0:
    recvbuf = np.empty(numDataPerRank*size, dtype='d')

comm.Gather(sendbuf, recvbuf, root=0)

if rank == 0:
    print('Rank: ', rank, ', recvbuf received: ', recvbuf)
```

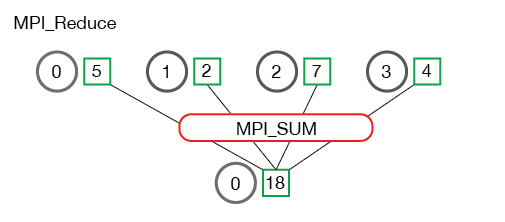
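Gather places each rank's sendbuf into recvbuf in rank order. The gathered array in the example should therefore equal the data array from the scatter example. The serial check below needs only numpy. It rebuilds each rank's sendbuf with the same formula and concatenates the pieces in rank order. The function name is hypothetical.

```python
import numpy as np


def gather_model(size, numDataPerRank=10):
    """Serial model of the Gather example: concatenate every rank's
    sendbuf in rank order, as rank 0's recvbuf receives them."""
    parts = [np.linspace(rank*numDataPerRank + 1, (rank + 1)*numDataPerRank,
                         numDataPerRank) for rank in range(size)]
    return np.concatenate(parts)


if __name__ == "__main__":
    full = gather_model(4)
    # Same values as the scatter example's data array with size=4
    print(np.allclose(full, np.linspace(1, 40, 40)))  # True
```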

Reduction:

The MPI_Reduce operation takes values from an array on each process and combines them into a single result on the root process. Doing this by hand would mean sending the data from each process to the root process with a somewhat complicated set of commands, and then having the root process perform the reduction. Fortunately, MPI_Reduce does all of this with a single command.

For numpy arrays, the syntax is:

```python
comm.Reduce(send_data, recv_data, op=<operation>, root=0)
```

Here, send_data is the data sent from all the processes on the communicator, and recv_data is the array on the root process that will receive the result. Note that the send and receive arrays have the same size. All copies of the data in send_data are reduced according to the specified op. Some common operations are listed below; in mpi4py they are called MPI.SUM, MPI.PROD, MPI.MAX and MPI.MIN:

- MPI_SUM - sums the elements
- MPI_PROD - multiplies all elements
- MPI_MAX - returns the maximum element
- MPI_MIN - returns the minimum element
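Reductions are applied element by element across ranks, which is why the send and receive arrays have the same size: element i of the result combines element i from every process. The serial numpy sketch below stacks one array per pretend rank and applies the four operations along the rank axis. The data are made up for illustration.

```python
import numpy as np

# Hypothetical send_data from three ranks, two elements each
per_rank = np.array([[1.0, 2.0],   # rank 0
                     [3.0, 4.0],   # rank 1
                     [5.0, 6.0]])  # rank 2

# Each operation collapses the rank axis (axis 0) element by element,
# giving an array the same size as one rank's send_data.
results = {
    'SUM': per_rank.sum(axis=0),
    'PROD': per_rank.prod(axis=0),
    'MAX': per_rank.max(axis=0),
    'MIN': per_rank.min(axis=0),
}

for name, arr in results.items():
    print(name, arr)
```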

http://mpitutorial.com has some more useful images that graphically illustrate the reduce step.

Here is a code example:

```python
from mpi4py import MPI
import numpy as np

comm = MPI.COMM_WORLD
rank = comm.Get_rank()

# Create some np arrays on each process:
# For this demo, the arrays have only one
# entry that is assigned to be the rank of the processor
value = np.array(rank, 'd')

print(' Rank: ', rank, ' value = ', value)

# initialize the np arrays that will store the results:
value_sum = np.array(0.0, 'd')
value_max = np.array(0.0, 'd')

# perform the reductions:
comm.Reduce(value, value_sum, op=MPI.SUM, root=0)
comm.Reduce(value, value_max, op=MPI.MAX, root=0)

if rank == 0:
    print(' Rank 0: value_sum = ', value_sum)
    print(' Rank 0: value_max = ', value_max)
```
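As a check on the output: each rank contributes its own rank number, so with p processes the two results on rank 0 should be

$$
\text{value\_sum} = \sum_{r=0}^{p-1} r = \frac{p(p-1)}{2}, \qquad \text{value\_max} = p - 1
$$

With mpirun -n 4, this gives value_sum = 6 and value_max = 3. If every rank needs the result, not just the root, comm.Allreduce(value, value_sum, op=MPI.SUM) performs the same reduction without a root and leaves the result on all ranks.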