Multisensor fusion positioning Chapter 2 3D laser odometer

Reference blog:

Chapter 1 operation of multi-sensor fusion positioning of dark blue College

Reference code:

Code download: https://github.com/kahowang/sensor-fusion-for-localization-and-mapping

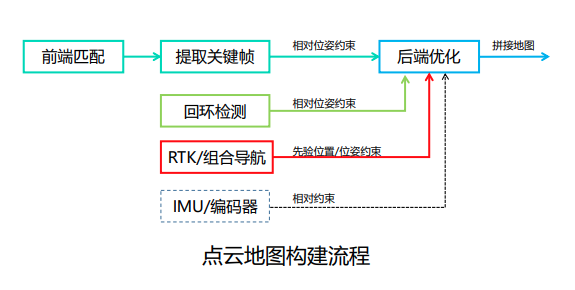

Point cloud map construction framework

FILE: front_end_flow.cpp

FrontEndFlow::Run()

bool FrontEndFlow::Run() {

if (!ReadData()) { // Get the original data from subscribe, store it in std::deque, and synchronize the data of each sensor

return false;

}

if (!InitCalibration()) { // Get lidar_to_imu_ Coordinate transformation matrix

return false;

}

if (!InitGNSS()) { // Initialize GNSS data

return false;

}

while(HasData()) {

if (!ValidData()) {

continue;

}

UpdateGNSSOdometry(); // Update GNSS location and use lidar_to_imu_ Transform to radar coordinate system

if (UpdateLaserOdometry()) { // Update laser odometer

PublishData();

SaveTrajectory();

} else {

LOG(INFO) << "UpdateLaserOdometry failed!" << std::endl;

}

}

return true;

}

FrontEndFlow::UpdateLaserOdometry()

bool FrontEndFlow::UpdateLaserOdometry() {

static bool front_end_pose_inited = false;

if (!front_end_pose_inited) {

front_end_pose_inited = true;

front_end_ptr_->SetInitPose(gnss_odometry_);

}

laser_odometry_ = Eigen::Matrix4f::Identity();

return front_end_ptr_->Update(current_cloud_data_, laser_odometry_);

}

FrontEnd::Update()

Renew odometer

bool FrontEnd::Update(const CloudData& cloud_data, Eigen::Matrix4f& cloud_pose) {

current_frame_.cloud_data.time = cloud_data.time;

std::vector<int> indices;

pcl::removeNaNFromPointCloud(*cloud_data.cloud_ptr, *current_frame_.cloud_data.cloud_ptr, indices);

CloudData::CLOUD_PTR filtered_cloud_ptr(new CloudData::CLOUD());

frame_filter_ptr_->Filter(current_frame_.cloud_data.cloud_ptr, filtered_cloud_ptr); // For filtering, select PCL:: voxelgrid < clouddata:: point >

static Eigen::Matrix4f step_pose = Eigen::Matrix4f::Identity();

static Eigen::Matrix4f last_pose = init_pose_;

static Eigen::Matrix4f predict_pose = init_pose_;

static Eigen::Matrix4f last_key_frame_pose = init_pose_;

// There is no key frame in the local map container, which represents the first frame data

// At this time, the current frame data is taken as the first key frame, and the local map container and global map container are updated

if (local_map_frames_.size() == 0) {

current_frame_.pose = init_pose_; // The first frame of the local map is used as the predict_pose a priori

UpdateWithNewFrame(current_frame_);

cloud_pose = current_frame_.pose;

return true;

}

// If it's not the first frame, it matches normally

registration_ptr_->ScanMatch(filtered_cloud_ptr, predict_pose, result_cloud_ptr_, current_frame_.pose);

cloud_pose = current_frame_.pose;

// Update the relative motion of two adjacent frames

step_pose = last_pose.inverse() * current_frame_.pose;

predict_pose = current_frame_.pose * step_pose; // Update predicted pose

last_pose = current_frame_.pose;

// After matching, judge whether a new key frame needs to be generated according to the distance, and update it accordingly if necessary

if (fabs(last_key_frame_pose(0,3) - current_frame_.pose(0,3)) +

fabs(last_key_frame_pose(1,3) - current_frame_.pose(1,3)) +

fabs(last_key_frame_pose(2,3) - current_frame_.pose(2,3)) > key_frame_distance_) {

UpdateWithNewFrame(current_frame_);

last_key_frame_pose = current_frame_.pose;

}

return true;

}

ICP_SVD

Funtion

FILE: icp_svd_registration.cpp

scanMatch()

bool ICPSVDRegistration::ScanMatch(

const CloudData::CLOUD_PTR& input_source,

const Eigen::Matrix4f& predict_pose,

CloudData::CLOUD_PTR& result_cloud_ptr,

Eigen::Matrix4f& result_pose

) {

input_source_ = input_source;

// pre-process input source:

CloudData::CLOUD_PTR transformed_input_source(new CloudData::CLOUD());

pcl::transformPointCloud(*input_source_, *transformed_input_source, predict_pose);

// init estimation:

transformation_.setIdentity();

//

// TODO: first option -- implement all computing logic on your own

//

// do estimation:

int curr_iter = 0;

while (curr_iter < max_iter_) {

// TODO: apply current estimation:

// apply current estimation:

CloudData::CLOUD_PTR curr_input_source(new CloudData::CLOUD());

pcl::transformPointCloud(*transformed_input_source, *curr_input_source, transformation_);

// TODO: get correspondence:

std::vector<Eigen::Vector3f> xs;

std::vector<Eigen::Vector3f> ys;

// TODO: do not have enough correspondence -- break:

if (GetCorrespondence(curr_input_source,xs,ys) < 3) // Find the point pair of the nearest point, and exit when there are less than 3 matching points

break;

// TODO: update current transform:

Eigen::Matrix4f delta_transformation;

GetTransform(xs, ys, delta_transformation);

// TODO: whether the transformation update is significant:

if(!IsSignificant(delta_transformation, trans_eps_)) // Maximum rotation matrix

break;

// TODO: update transformation:

transformation_ = delta_transformation * transformation_;

++curr_iter;

}

// set output:

result_pose = transformation_ * predict_pose;

// normalization

Eigen::Quaternionf qr(result_pose.block<3,3>(0,0));

qr.normalize();

Eigen::Vector3f t = result_pose.block<3,1>(0,3);

result_pose.setIdentity();

result_pose.block<3,3>(0,0) = qr.toRotationMatrix();

result_pose.block<3,1>(0,3) = t;

pcl::transformPointCloud(*input_source_, *result_cloud_ptr, result_pose);

return true;

}

GetCorrespondence()

Find the matching points of two point clouds through kdtree

size_t ICPSVDRegistration::GetCorrespondence(

const CloudData::CLOUD_PTR &input_source,

std::vector<Eigen::Vector3f> &xs,

std::vector<Eigen::Vector3f> &ys

) {

const float MAX_CORR_DIST_SQR = max_corr_dist_ * max_corr_dist_;

size_t num_corr = 0;

// TODO: set up point correspondence

for(size_t i =0; i < input_source->points.size(); ++i){

std::vector<int> corr_ind; // index

std::vector<float> corr_sq_dis; // correspondence_square_dis

input_target_kdtree_->nearestKSearch(

input_source->at(i),

1,

corr_ind, corr_sq_dis

); // kdtree search

if(corr_sq_dis.at(0) > MAX_CORR_DIST_SQR)

continue;

// add correspondence:

Eigen::Vector3f x(

input_target_->at(corr_ind.at(0)).x,

input_target_->at(corr_ind.at(0)).y,

input_target_->at(corr_ind.at(0)).z

);

Eigen::Vector3f y(

input_source->at(i).x,

input_source->at(i).y,

input_source->at(i).z

);

xs.push_back(x);

ys.push_back(y);

++num_corr;

}

return num_corr;

}

GetTransform()

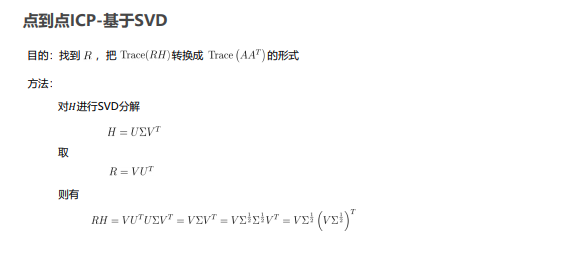

Formula:

R t is solved by Eigen svd,

Note: the obtained rotation matrix must be orthogonal and the determinant is 1. Therefore, it is necessary to orthogonalize the obtained rotation matrix

1. Rotate the matrix to quaternion and normalize the quaternion.

2.SO3 manifold projection.

3.SVD decomposition singular value is set to 1.

void ICPSVDRegistration::GetTransform(

const std::vector<Eigen::Vector3f> &xs,

const std::vector<Eigen::Vector3f> &ys,

Eigen::Matrix4f &transformation_

) {

const size_t N = xs.size();

// find centroids of mu_x and mu_y:

Eigen::Vector3f mu_x = Eigen::Vector3f::Zero();

Eigen::Vector3f mu_y = Eigen::Vector3f::Zero();

for (size_t i = 0; i < N; ++i) {

mu_x += xs.at(i);

mu_y += ys.at(i);

}

mu_x /= N;

mu_y /= N;

// build H:

Eigen::Matrix3f H = Eigen::Matrix3f::Zero();

for (size_t i = 0; i < N; ++i) {

H += (ys.at(i) - mu_y) * (xs.at(i) - mu_x).transpose();

}

// solve R:

Eigen::JacobiSVD<Eigen::MatrixXf> svd(H, Eigen::ComputeThinU | Eigen::ComputeThinV);

Eigen::Matrix3f R = svd.matrixV() * svd.matrixU().transpose();

// solve t:

Eigen::Vector3f t = mu_x - R * mu_y;

// set output:

transformation_.setIdentity();

transformation_.block<3, 3>(0, 0) = R;

transformation_.block<3, 1>(0, 3) = t;

}

SVD_ICP parameter configuration

FILE: front_end/config.yaml

trick: parameter setting, icp_svd major modifications

max_ corr_ dist: SVD_ The accuracy of ICP depends on the accuracy of adjacent point pairs. For example, set the distance threshold of adjacent point pairs as small as possible, such as 0.5

max_iter: Generally speaking, the more iterations, the better. Of course, it will increase the unnecessary burden of computing power, because ICP_SVD is solved in one step, so the number of iterations can not be too large.

ICP_SVD:

max_corr_dist : 0.5

trans_eps : 0.01

euc_fitness_eps : 0.36

max_iter : 10

NDT_CPU

Reference source code: autoware ndt_cpu

NDT formula derivation and source code analysis (1)

NDT formula derivation and source code analysis (2)

FILE: include/models/ndt_cpu/ndt_cpu_registration.hpp

#ifndef LIDAR_LOCALIZATION_MODELS_REGISTRATION_NDT_CPU_REGISTRATION_HPP_

#define LIDAR_LOCALIZATION_MODELS_REGISTRATION_NDT_CPU_REGISTRATION_HPP_

#include "lidar_localization/models/registration/registration_interface.hpp"

#include "lidar_localization/models/registration/ndt_cpu/NormalDistributionsTransform.h"

namespace lidar_localization {

class NDTCPURegistration: public RegistrationInterface { // Inherit the base class of point cloud registration

public:

NDTCPURegistration(const YAML::Node& node);

bool SetInputTarget(const CloudData::CLOUD_PTR& input_target) override;

bool ScanMatch(

const CloudData::CLOUD_PTR& input_source,

const Eigen::Matrix4f& predict_pose,

CloudData::CLOUD_PTR& result_cloud_ptr,

Eigen::Matrix4f& result_pose

) override;

private:

bool SetRegistrationParam(float res, float step_size, float trans_eps, int max_iter);

private:

cpu::NormalDistributionsTransform<CloudData::POINT, CloudData::POINT> ndt_cpu_; // Instantiate cpu_ndt object

};

} // namespace lidar_localization

#endif

FILE: src/models/registration/ndt_cpu/ndt_cpu_registration.cpp

/*

* @Description: NDT CPU lidar odometry

* @Author: KaHo

* @Date: 2021-8-22

*/

#include <pcl/common/transforms.h>

#include <Eigen/Dense>

#include "glog/logging.h"

#include "lidar_localization/models/registration/ndt_cpu/ndt_cpu_registration.hpp"

namespace lidar_localization{

NDTCPURegistration::NDTCPURegistration(const YAML::Node& node){

float res = node["res"].as<float>();

float step_size = node["step_size"].as<float>();

float trans_eps = node["trans_eps"].as<float>();

int max_iter = node["max_iter"].as<int>();

SetRegistrationParam(res, step_size, trans_eps, max_iter);

}

bool NDTCPURegistration::SetRegistrationParam(float res, float step_size, float trans_eps, int max_iter) {

ndt_cpu_.setResolution(res);

ndt_cpu_.setStepSize(step_size);

ndt_cpu_.setTransformationEpsilon(trans_eps);

ndt_cpu_.setMaximumIterations(max_iter);

LOG(INFO) << "NDT params:" << std::endl

<< "res: " << res << ", "

<< "step_size: " << step_size << ", "

<< "trans_eps: " << trans_eps << ", "

<< "max_iter: " << max_iter

<< std::endl << std::endl;

return true;

}

bool NDTCPURegistration::SetInputTarget(const CloudData::CLOUD_PTR& input_target) {

ndt_cpu_.setInputTarget(input_target);

return true;

}

bool NDTCPURegistration::ScanMatch(const CloudData::CLOUD_PTR& input_source,

const Eigen::Matrix4f& predict_pose,

CloudData::CLOUD_PTR& result_cloud_ptr,

Eigen::Matrix4f& result_pose) {

ndt_cpu_.setInputSource(input_source);

ndt_cpu_.align(*result_cloud_ptr, predict_pose);

result_pose = ndt_cpu_.getFinalTransformation(); // Matched point cloud

return true;

}

}

NDT_CPU parameter configuration

NDT_CPU:

res : 0.8 # volex resolution

step_size : 0.1 # The larger the step size of gradient descent, the faster the descent, but it is easy for over shot to fall into local optimization

trans_eps : 0.01 # Maximum tolerance, once the twice conversion matrix is less than trans_eps exit iteration

max_iter : 30 # Maximum number of iterations

ICP_PCL

FILE: icp_registration.cpp

private:

pcl::IterativeClosestPoint<CloudData::POINT, CloudData::POINT>::Ptr icp_ptr_;

bool ICPRegistration::SetInputTarget(const CloudData::CLOUD_PTR& input_target) {

icp_ptr_->setInputTarget(input_target);

return true;

}

bool ICPRegistration::ScanMatch(const CloudData::CLOUD_PTR& input_source,

const Eigen::Matrix4f& predict_pose,

CloudData::CLOUD_PTR& result_cloud_ptr,

Eigen::Matrix4f& result_pose) {

icp_ptr_->setInputSource(input_source); // Enter the point cloud to be registered

icp_ptr_->align(*result_cloud_ptr, predict_pose); // Registration

result_pose = icp_ptr_->getFinalTransformation(); // Get transformation matrix

return true;

}

NDT_PCL

FILE: ndt_registration.cpp

private:

pcl::NormalDistributionsTransform<CloudData::POINT, CloudData::POINT>::Ptr ndt_ptr_;

bool NDTRegistration::SetInputTarget(const CloudData::CLOUD_PTR& input_target) {

ndt_ptr_->setInputTarget(input_target);

return true;

}

bool NDTRegistration::ScanMatch(const CloudData::CLOUD_PTR& input_source,

const Eigen::Matrix4f& predict_pose,

CloudData::CLOUD_PTR& result_cloud_ptr,

Eigen::Matrix4f& result_pose) {

ndt_ptr_->setInputSource(input_source);

ndt_ptr_->align(*result_cloud_ptr, predict_pose);

result_pose = ndt_ptr_->getFinalTransformation();

return true;

}

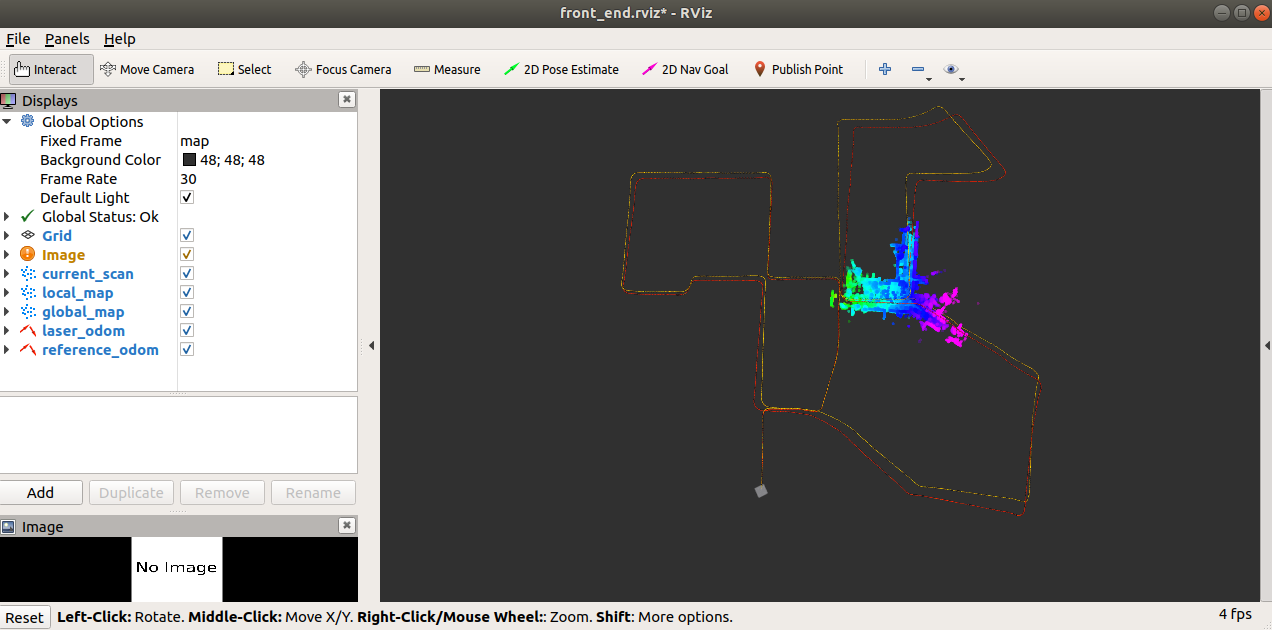

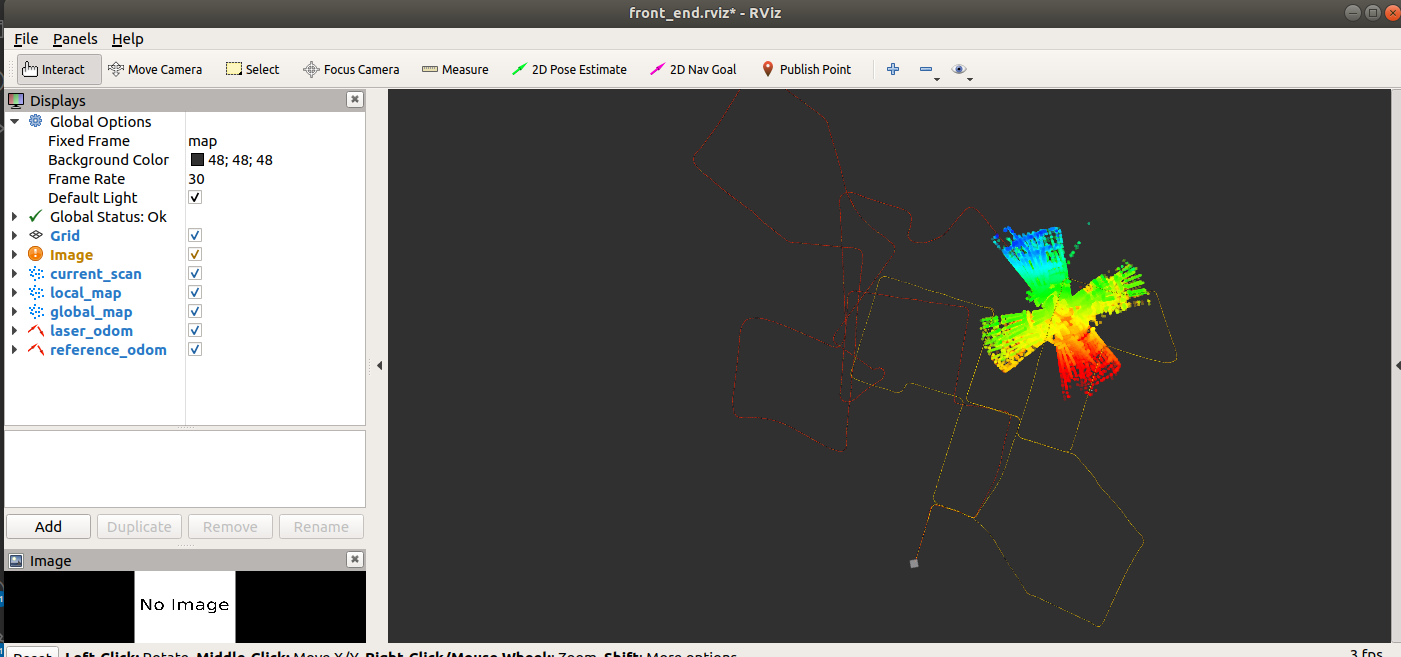

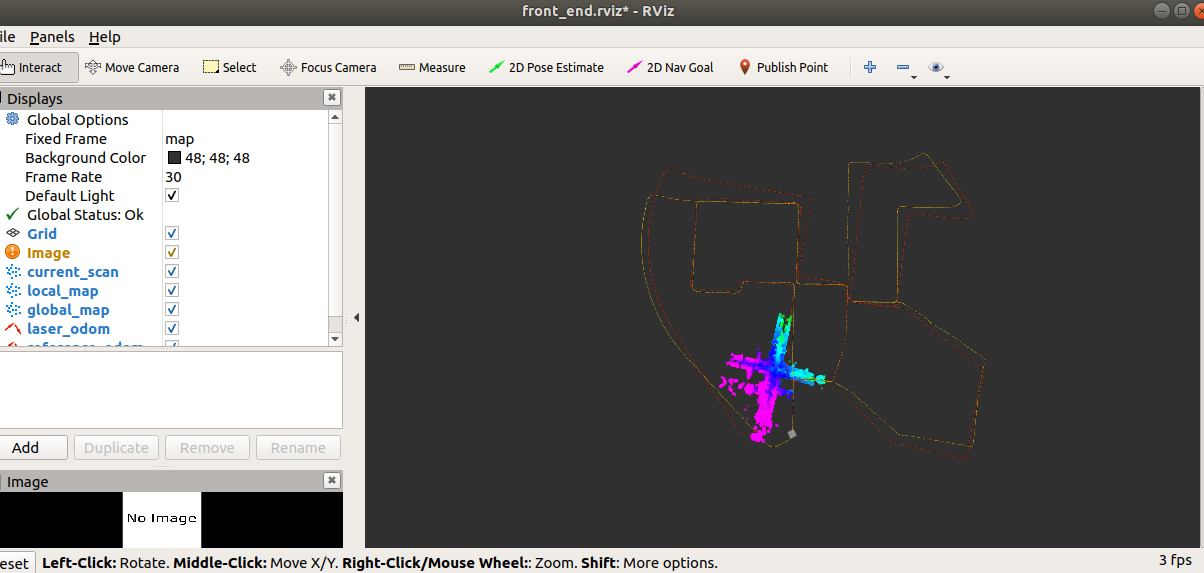

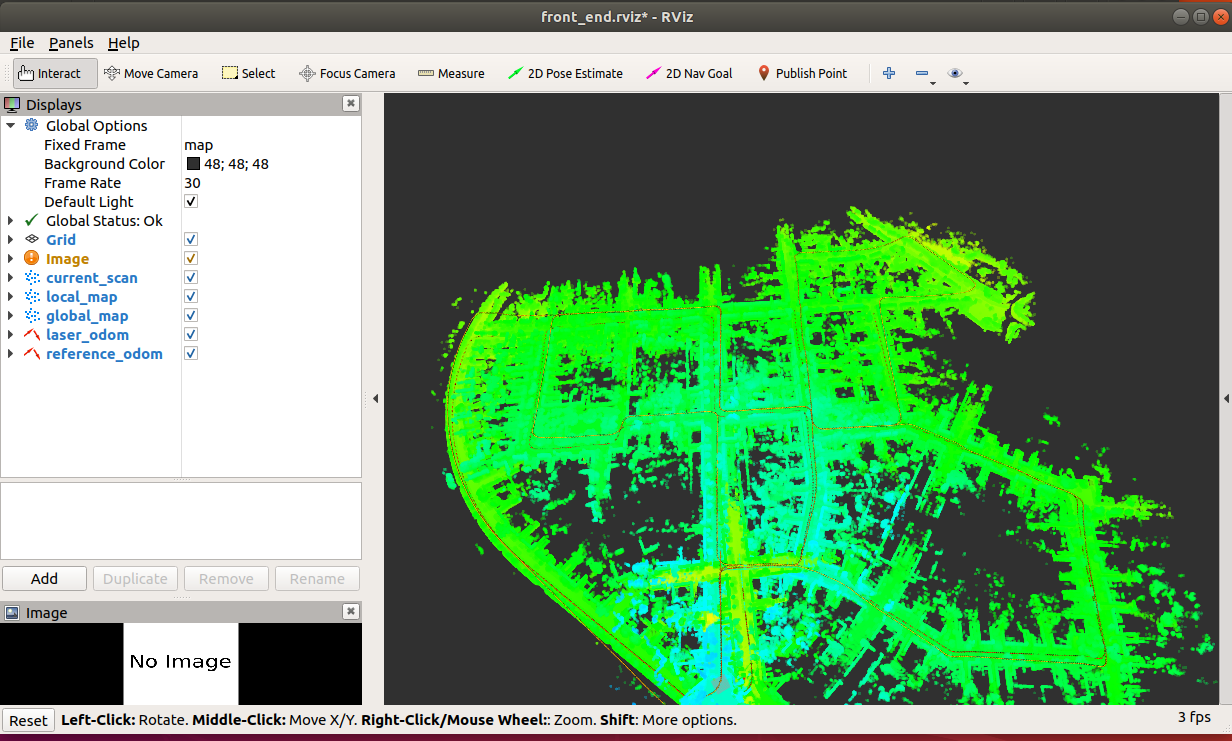

Running

Parameter configuration selection

lidar_localization/config/front_end/config.yaml selects the point cloud matching method and specifies the data storage path

data_path: /home/x/catkin_ws/src/lidar_localization # Data storage path

# matching

# TODO: implement your custom registration method and add it here

registration_method: ICP_SVD # Select the point cloud matching method. At present, it supports: ICP, ICP_SVD, NDT, SICP

# Local map

key_frame_distance: 2.0 # Key frame distance

local_frame_num: 20

local_map_filter: voxel_filter # Select the sliding window map point cloud filtering method. Currently, voxel is supported_ filter

# rviz display

display_filter: voxel_filter # rviz real-time display point cloud filtering method, currently support: voxel_filter

# Current frame

frame_filter: voxel_filter # Select the current frame point cloud filtering method. Currently, voxel is supported_ filter

## Filter related parameters

voxel_filter:

local_map:

leaf_size: [0.6, 0.6, 0.6]

frame:

leaf_size: [1.3, 1.3, 1.3]

display:

leaf_size: [0.5, 0.5, 0.5]

# Corresponding parameters of each configuration option

## Matching related parameters

ICP:

max_corr_dist : 1.2

trans_eps : 0.01

euc_fitness_eps : 0.36

max_iter : 30

ICP_SVD:

max_corr_dist : 0.5

trans_eps : 0.01

euc_fitness_eps : 0.36

max_iter : 10

NDT:

res : 1.0

step_size : 0.1

trans_eps : 0.01

max_iter : 30

SICP:

p : 1.0

mu : 10.0

alpha : 1.2

max_mu : 1e5

max_icp : 100

max_outer : 100

max_inner : 1

stop : 1e-5

Run map creation and save the map

roslaunch lidar_localization front_end.launch

rosbag play kitti_lidar_only_2011_10_03_drive_0027_synced.bag

Save map to slam_data folder

rosservice call /save_map

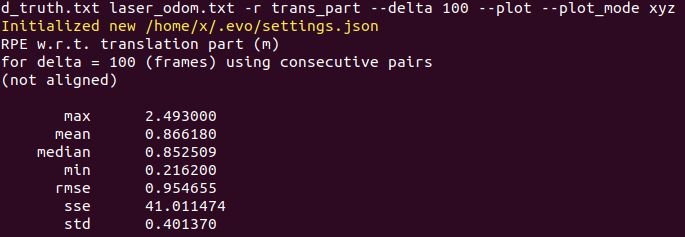

evo trajectory evaluation

lidar_ localization/slam_ There is ground in the data / trajectory path_ truth. txt laser_ odom. Txt two files

Download evo

pip install evo --upgrade --no-binary evo

There are two modes of EVO evaluation data, and the corresponding instructions are evo_rpe and evo_ape, the former evaluates the error in each distance, and the latter evaluates the accumulation of absolute error with distance.

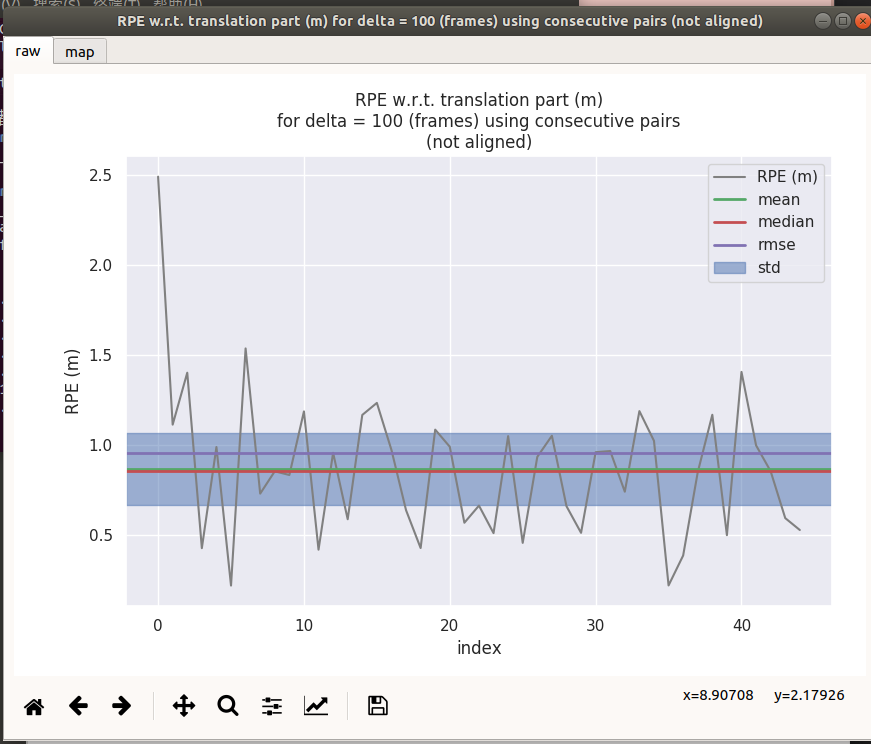

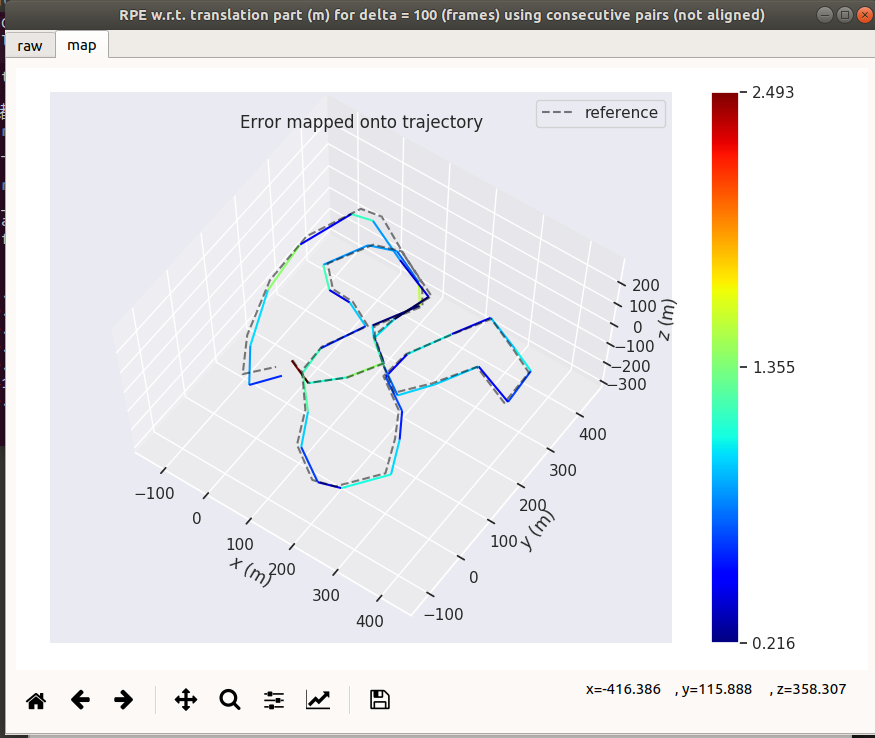

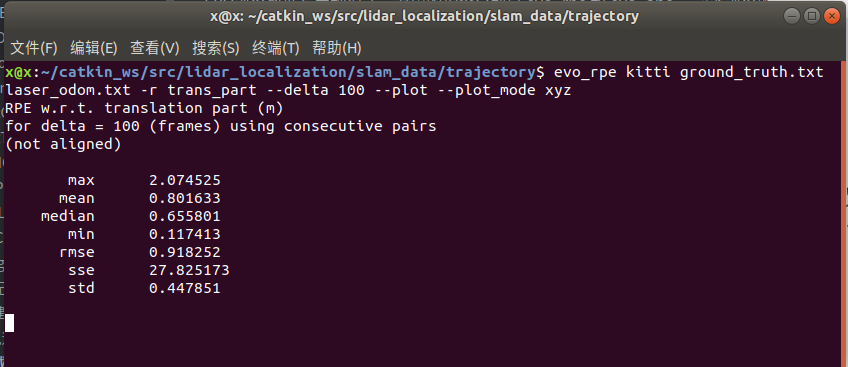

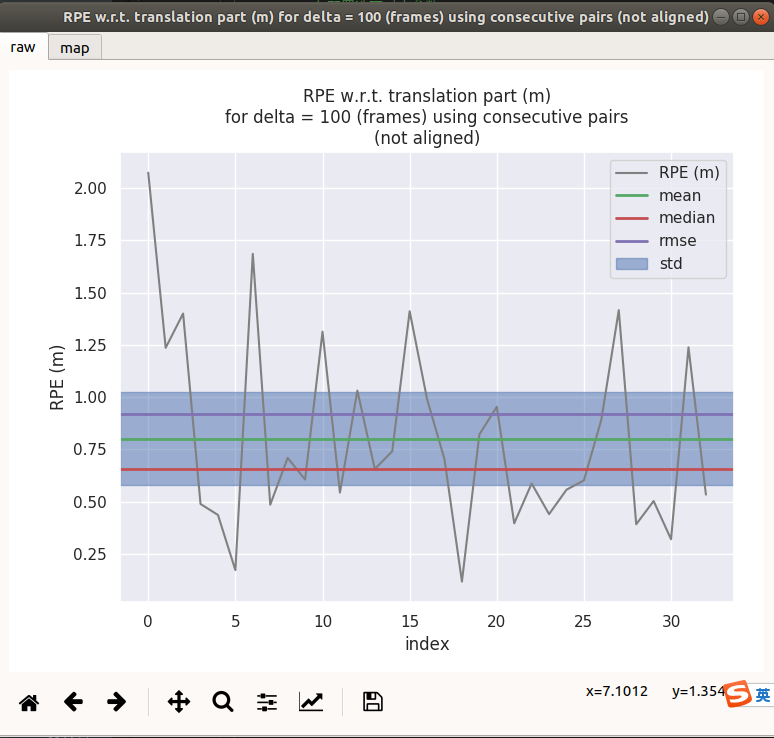

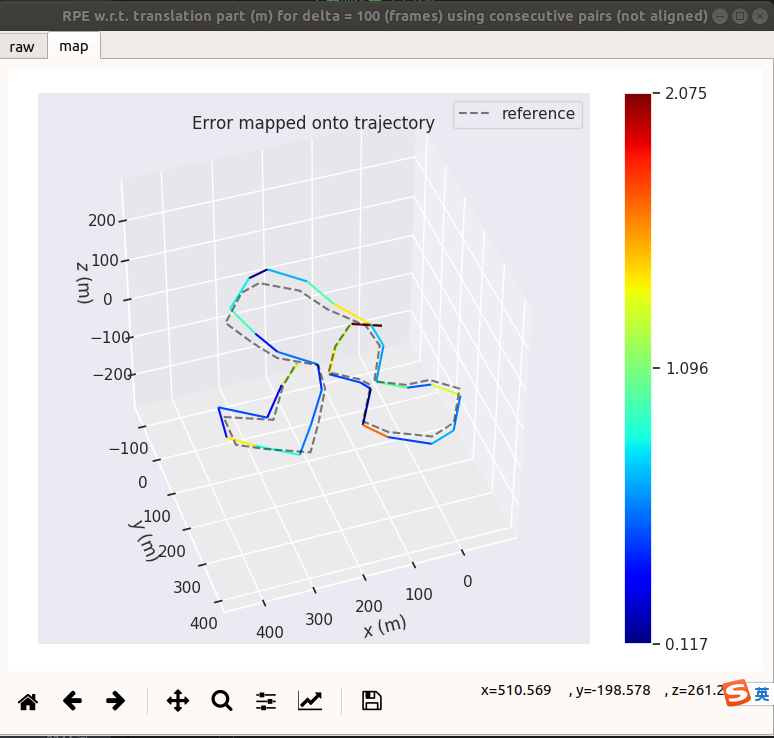

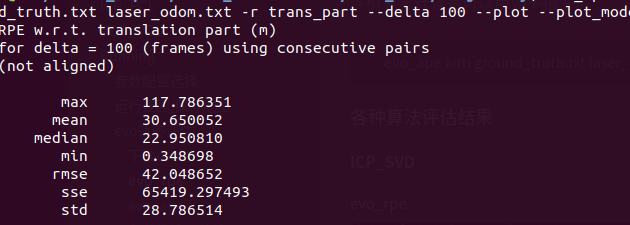

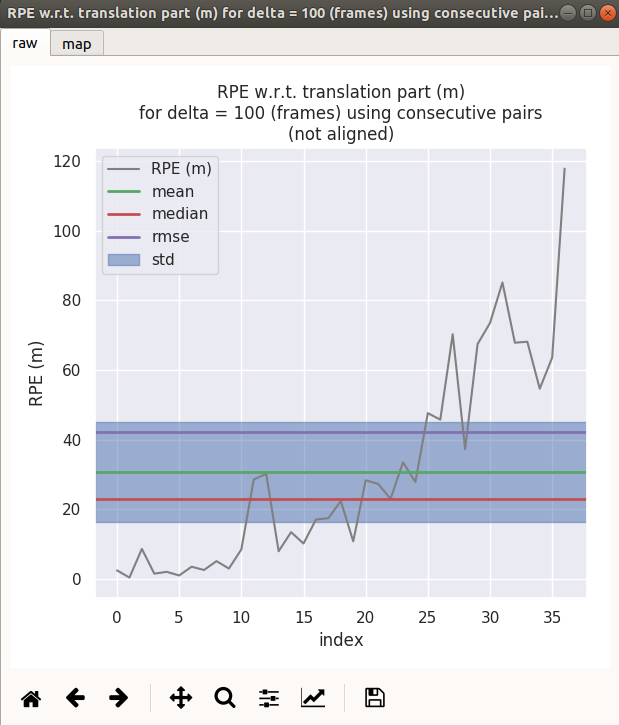

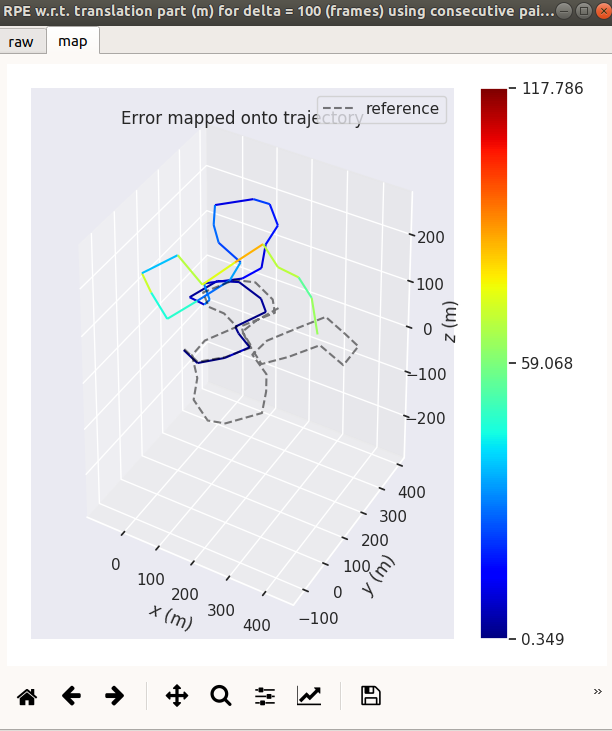

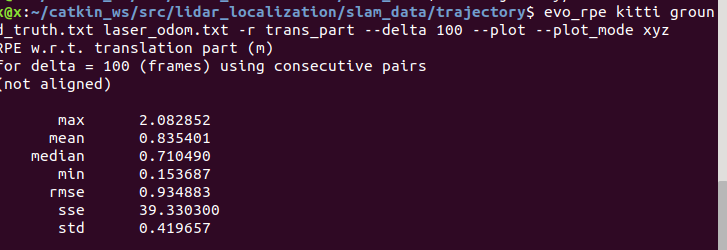

evo_rpe

The following instructions can be used to evaluate the error within each distance

evo_rpe kitti ground_truth.txt laser_odom.txt -r trans_part --delta 100 --plot --plot_mode xyz

Where – delta 100 refers to the error statistics every 100m, so the statistics is actually the percentage of error, which can directly correspond to the distance error index in kitti's odometry list.

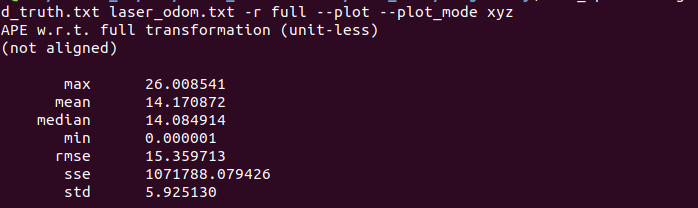

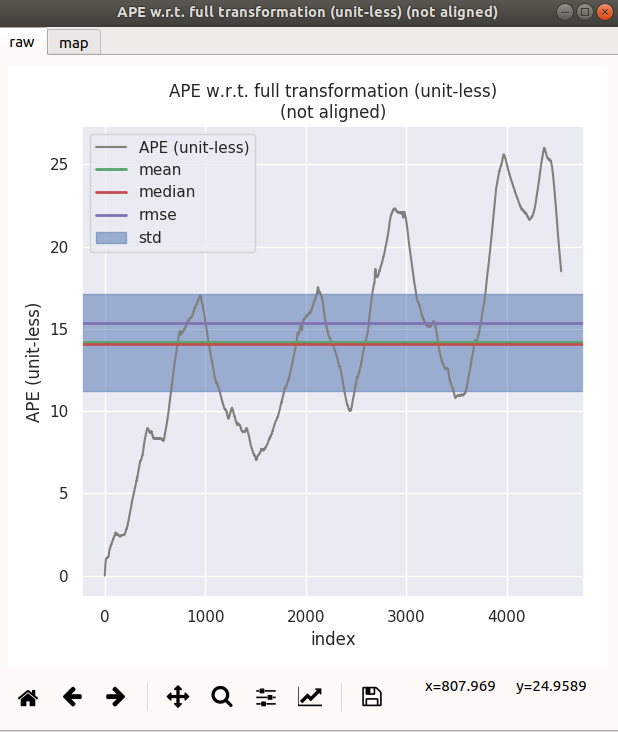

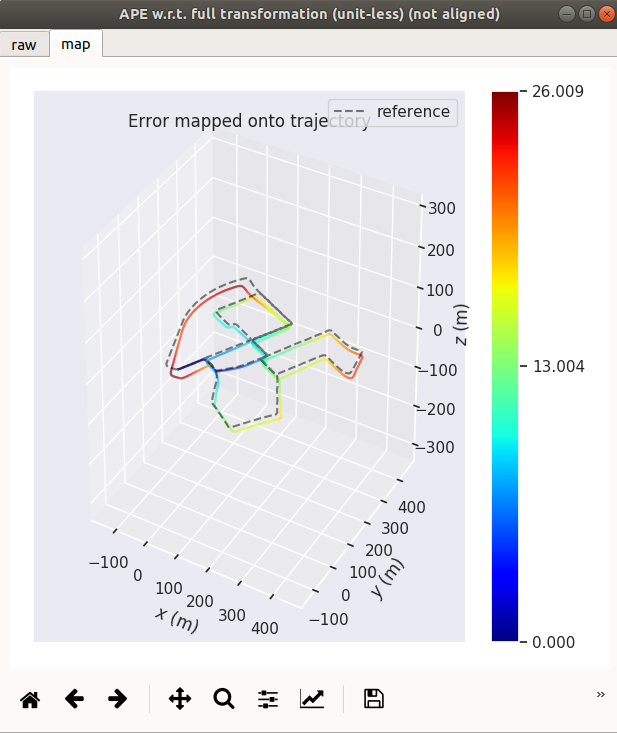

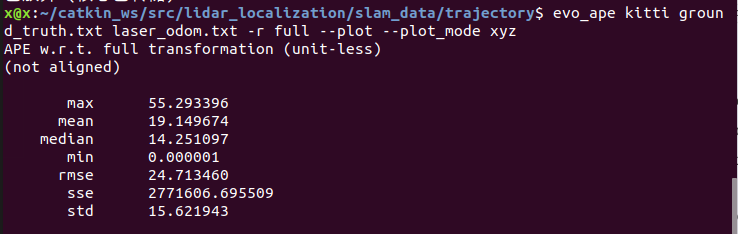

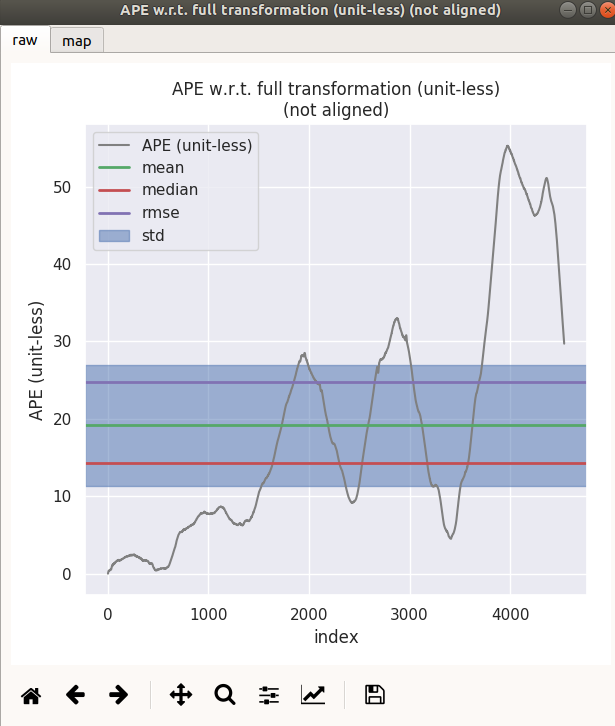

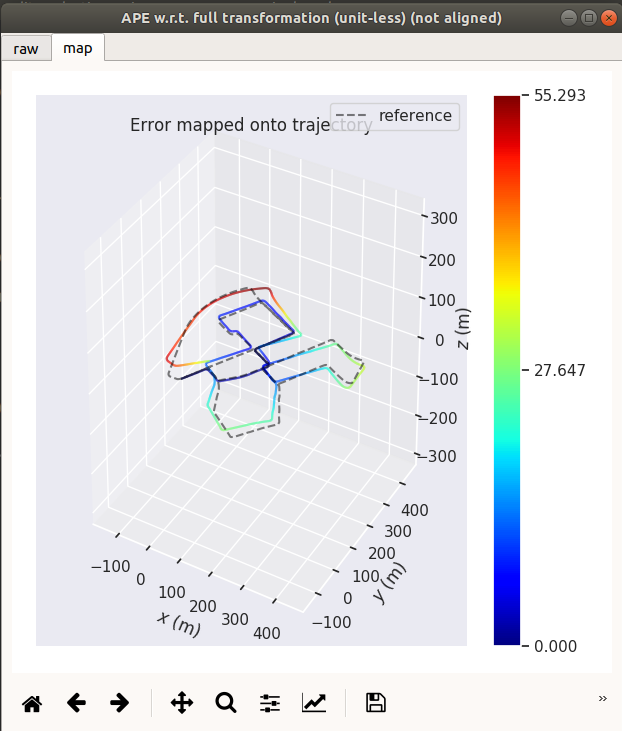

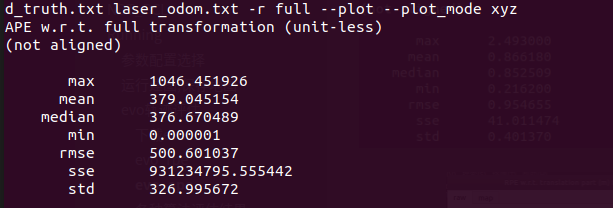

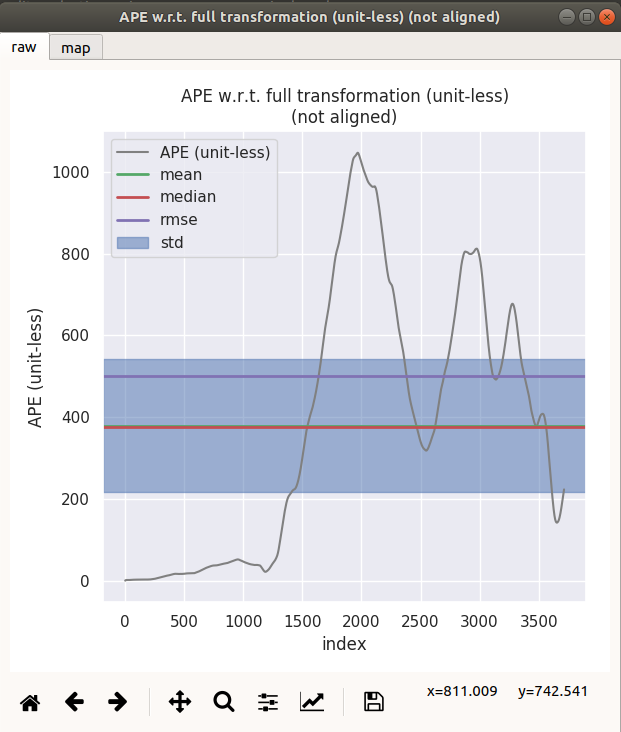

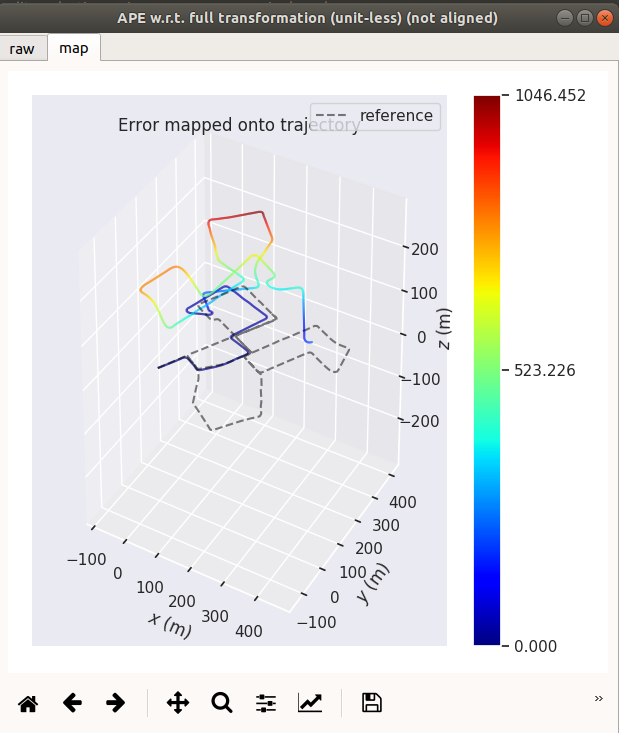

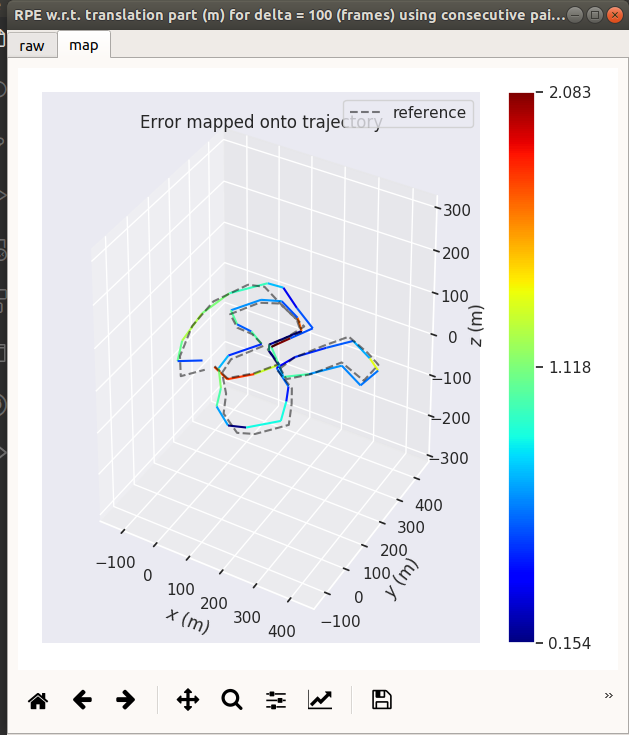

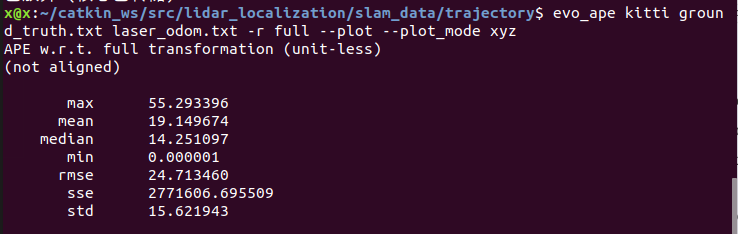

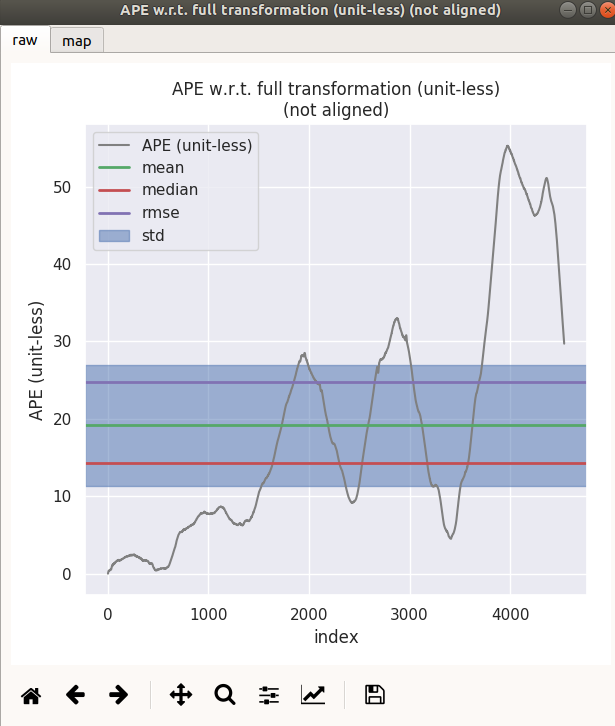

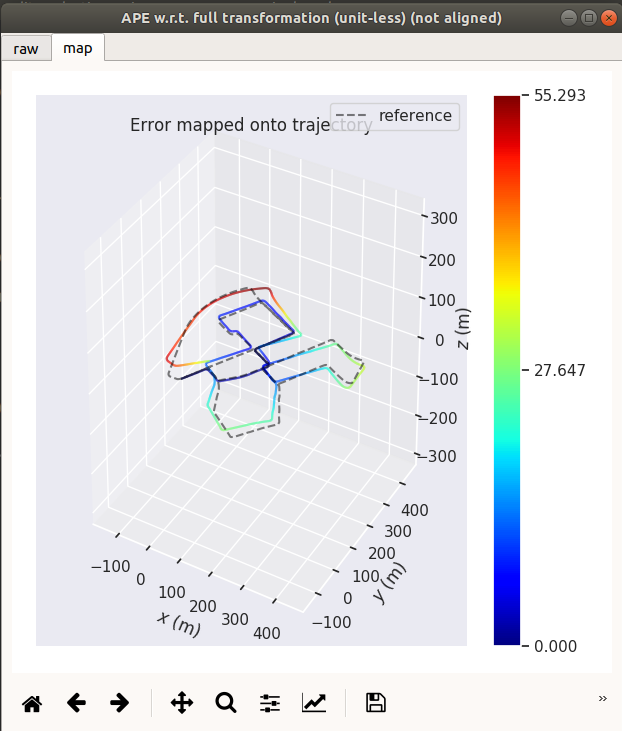

evo_ape

The following instructions can be used to evaluate the total cumulative error

evo_ape kitti ground_truth.txt laser_odom.txt -r full --plot --plot_mode xyz

Evaluation results of various algorithms

ICP_SVD

evo_rpe

evo_ape

NDT_CPU

evo_rpe

evo_ape

ICP_PCL

evo_rpe

evo_ape

ICP_NDT

evo_rpe

evo_ape

edited by kaho 2021.8.17