Introduction

A blocking queue works like this:

- When the queue is full, it blocks threads that try to insert elements until the queue is no longer full.
- When the queue is empty, it blocks threads that try to retrieve elements until the queue becomes non-empty.

Blocking queues are typically used between producers and consumers: producers put elements into the queue, and consumers take them out.

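An insert or removal may not be able to proceed immediately because the queue is full or empty. For that case, the queue offers four ways of handling it:

- throw an exception;
- return a special value (false for offer, null for poll and peek);
- block until the operation can proceed;
- block for at most a given timeout and then give up.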

| Method / behavior | Throws exception | Returns special value | Blocks | Times out |
|---|---|---|---|---|
| Insert | add(e) | offer(e) | put(e) | offer(e, timeout, unit) |
| Remove | remove() | poll() | take() | poll(timeout, unit) |
| Examine | element() | peek() (does not remove) | not applicable | not applicable |

tips: for an unbounded blocking queue, put never blocks and offer always returns true.
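The four forms in the table can be compared side by side on a queue with capacity 1. This is a small sketch. The class name QueueMethodFormsDemo is made up for illustration, and the 100 ms timeout is arbitrary.

```java
import java.util.NoSuchElementException;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: exercises each column of the method table on a full / empty queue.
public class QueueMethodFormsDemo {
    public static void main(String[] args) throws InterruptedException {
        // Capacity 1, so a second insert finds the queue full
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        queue.put("first");

        // Throws exception: add() on a full queue
        try {
            queue.add("second");
        } catch (IllegalStateException e) {
            System.out.println("add threw IllegalStateException");
        }

        // Special value: offer() returns false instead of blocking
        System.out.println("offer returned " + queue.offer("second"));

        // Times out: waits up to 100 ms for space, then returns false
        System.out.println("timed offer returned " + queue.offer("second", 100, TimeUnit.MILLISECONDS));

        // Examine without removing
        System.out.println("peek returned " + queue.peek());

        // Blocks if empty; here it removes "first" immediately
        System.out.println("take returned " + queue.take());

        // Queue is now empty: poll() returns null, remove() throws
        System.out.println("poll returned " + queue.poll());
        try {
            queue.remove();
        } catch (NoSuchElementException e) {
            System.out.println("remove threw NoSuchElementException");
        }
    }
}
```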

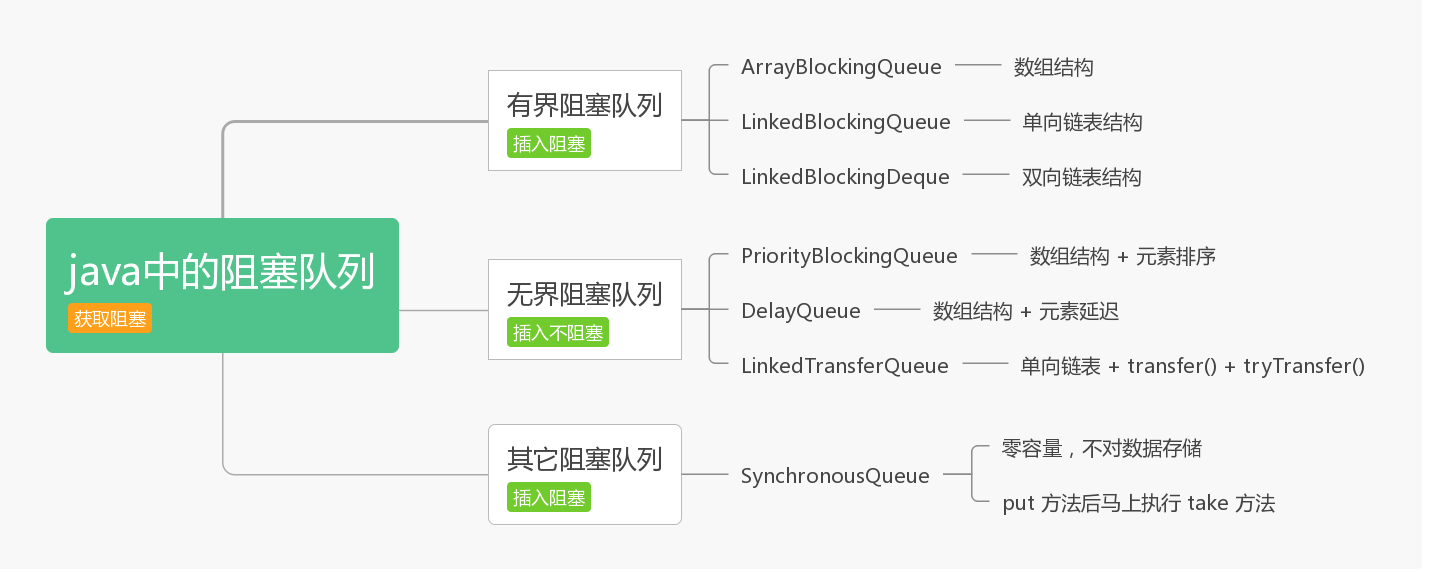

Blocking queues in Java:

ArrayBlockingQueue

ArrayBlockingQueue is a bounded blocking queue backed by an array. It orders elements first-in, first-out (FIFO). By default, it does not guarantee fair access for waiting threads.

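Concurrency is controlled by a single reentrant exclusive lock (ReentrantLock). Blocking is implemented with two Conditions on that lock: notEmpty, on which consumers wait, and notFull, on which producers wait.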
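Here is a simplified bounded buffer in the same spirit, to make the lock-and-Condition design concrete. It is a sketch, not the JDK source. The class SimpleBoundedBuffer is hypothetical, and it omits the iterators, bulk operations, and most other methods of the real ArrayBlockingQueue. Like the JDK class, it keeps one ReentrantLock and two Conditions named notEmpty and notFull over a circular array.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical class: a simplified array-backed bounded queue, not the JDK implementation.
public class SimpleBoundedBuffer<E> {
    private final Object[] items;
    private int takeIndex;  // next slot to take from
    private int putIndex;   // next slot to put into
    private int count;      // number of stored elements

    private final ReentrantLock lock;
    private final Condition notEmpty;  // consumers wait here while the buffer is empty
    private final Condition notFull;   // producers wait here while the buffer is full

    public SimpleBoundedBuffer(int capacity, boolean fair) {
        if (capacity <= 0) throw new IllegalArgumentException("capacity must be positive");
        items = new Object[capacity];
        lock = new ReentrantLock(fair);  // fair = true grants the lock in FIFO order
        notEmpty = lock.newCondition();
        notFull = lock.newCondition();
    }

    public void put(E e) throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (count == items.length) {
                notFull.await();  // releases the lock while waiting
            }
            items[putIndex] = e;
            putIndex = (putIndex + 1) % items.length;  // wrap around the circular array
            count++;
            notEmpty.signal();  // wake one waiting consumer
        } finally {
            lock.unlock();
        }
    }

    @SuppressWarnings("unchecked")
    public E take() throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (count == 0) {
                notEmpty.await();
            }
            E e = (E) items[takeIndex];
            items[takeIndex] = null;  // drop the reference so it can be collected
            takeIndex = (takeIndex + 1) % items.length;
            count--;
            notFull.signal();  // wake one waiting producer
            return e;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        SimpleBoundedBuffer<String> buffer = new SimpleBoundedBuffer<>(2, true);
        Thread producer = new Thread(() -> {
            try {
                for (String s : new String[] {"java", "javaScript", "c++"}) {
                    buffer.put(s);  // the third put blocks until a take frees a slot
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        for (int i = 0; i < 3; i++) {
            System.out.println(buffer.take());
        }
        producer.join();
    }
}
```

The `while` loops around `await()` matter. A woken thread must re-check the condition, because another thread may have claimed the slot or element first, and spurious wakeups are permitted.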

```java
import java.util.concurrent.ArrayBlockingQueue;

public class ArrayBlockingQueueTest {

    /**
     * 1. ArrayBlockingQueue is bounded, so a capacity must be given at construction.
     * 2. Fair access for blocked threads is not guaranteed by default; passing true
     *    as the second argument makes blocked threads gain access in FIFO order.
     */
    private static final ArrayBlockingQueue<String> QUEUE = new ArrayBlockingQueue<>(2, true);

    public static void main(String[] args) throws InterruptedException {
        Thread put = new Thread(() -> {
            try {
                // 3. Insert elements
                QUEUE.put("java");
                QUEUE.put("javaScript");
                // 4. The queue is now full, so this put blocks until a take frees a slot
                QUEUE.put("c++");
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        });
        put.start();

        Thread take = new Thread(() -> {
            try {
                // 5. Take one element
                System.out.println(QUEUE.take());
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        });
        take.start();

        // 6. Take the remaining two elements. Elements leave in FIFO order
        //    (java, javaScript, c++), but which thread prints which one depends on scheduling.
        System.out.println(QUEUE.take());
        System.out.println(QUEUE.take());
    }
}
```

LinkedBlockingQueue

LinkedBlockingQueue is an optionally bounded blocking queue backed by a singly linked list. If no capacity is given, the capacity defaults to Integer.MAX_VALUE. It orders elements FIFO, and its throughput is usually higher than that of ArrayBlockingQueue. Executors.newFixedThreadPool() uses this queue.

Like ArrayBlockingQueue, it controls concurrency with ReentrantLock, but it uses two exclusive locks: putLock for producers and takeLock for consumers. Producers can keep inserting as long as the queue is not full, and consumers can keep taking as long as it is not empty. Because the two sides hold different locks, they do not block each other.

tips: no single lock guards both ends, so the element count is kept in an AtomicInteger. This lets producers and consumers update the count safely without holding each other's lock.
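A short sketch of both points, using only the standard java.util.concurrent API. The class name LinkedBlockingQueueDemo is made up. The no-argument constructor reports a remaining capacity of Integer.MAX_VALUE. With an explicit bound of 10, one producer and one consumer run concurrently, with put and take guarded by different locks inside the queue.

```java
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical demo class for the default capacity and a bounded producer/consumer pair.
public class LinkedBlockingQueueDemo {
    public static void main(String[] args) throws InterruptedException {
        // No capacity given: bounded only by Integer.MAX_VALUE
        LinkedBlockingQueue<Integer> defaultQueue = new LinkedBlockingQueue<>();
        System.out.println("default remaining capacity: " + defaultQueue.remainingCapacity());

        // Explicit bound: the producer blocks whenever 10 items are pending
        LinkedBlockingQueue<Integer> queue = new LinkedBlockingQueue<>(10);
        final int n = 1_000;
        long[] sum = new long[1];

        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) {
                    queue.put(i);  // acquires putLock internally
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < n; i++) {
                    sum[0] += queue.take();  // acquires takeLock internally
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        consumer.start();
        producer.join();
        consumer.join();  // join() makes the consumer's writes to sum visible here
        System.out.println("sum: " + sum[0]);
    }
}
```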

LinkedBlockingDeque

LinkedBlockingDeque is an optionally bounded blocking deque backed by a doubly linked list, so elements can be inserted and removed at both ends. It implements the BlockingDeque interface, which adds methods such as addFirst, addLast, offerFirst, offerLast, peekFirst and peekLast. Methods ending in First insert, examine, or remove the first element of the deque. Methods ending in Last operate on the last element.

Each Node in LinkedBlockingDeque also has a prev field pointing to the previous node, which is what makes the list two-way. For concurrency control it resembles ArrayBlockingQueue: a single ReentrantLock guards the whole deque. Because both ends can be used for producing and consuming, the two ends share this one lock instead of having separate locks as in LinkedBlockingQueue.

Double-ended blocking queues can be used to implement the "work stealing" pattern.
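A minimal sketch of the access pattern behind work stealing. It assumes one owner thread and one idle "thief" thread; both roles and the class name WorkStealingSketch are hypothetical. The owner pushes and pops at the head, while the thief steals the oldest task from the tail. LinkedBlockingDeque still guards both ends with one lock, so this shows the access pattern only. ForkJoinPool, the JDK's work-stealing executor, uses its own specialized deques instead.

```java
import java.util.concurrent.LinkedBlockingDeque;

// Hypothetical sketch: owner works LIFO at the head, a thief steals FIFO from the tail.
public class WorkStealingSketch {
    public static void main(String[] args) throws InterruptedException {
        LinkedBlockingDeque<String> ownerDeque = new LinkedBlockingDeque<>();
        for (int i = 1; i <= 4; i++) {
            ownerDeque.offerFirst("task-" + i);  // head -> tail: task-4, task-3, task-2, task-1
        }

        // The owner takes its most recently pushed task from the head
        System.out.println("owner runs " + ownerDeque.pollFirst());

        // An idle worker steals the oldest task from the opposite end
        Thread thief = new Thread(() -> System.out.println("thief steals " + ownerDeque.pollLast()));
        thief.start();
        thief.join();

        System.out.println("left: " + ownerDeque);
    }
}
```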

```java
import java.util.concurrent.LinkedBlockingDeque;

public class LinkedBlockingDequeTest {

    // Optional capacity bound of 2
    private static final LinkedBlockingDeque<String> DEQUE = new LinkedBlockingDeque<>(2);

    public static void main(String[] args) {
        DEQUE.addFirst("java");  // [java]
        DEQUE.addFirst("c++");   // [c++, java]
        // java
        System.out.println(DEQUE.peekLast());
        // java (now removed)
        System.out.println(DEQUE.pollLast());
        DEQUE.addLast("php");    // [c++, php]
        // c++
        System.out.println(DEQUE.pollFirst());
    }
}
```

tips: take() delegates to takeFirst(), so on a deque it removes from the head. Keep this in mind when mixing queue methods with deque methods.
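The tip can be checked directly. In this sketch (the class name DequeTakeDemo is hypothetical), take() removes from the head just like takeFirst(), while put() appends at the tail like putLast().

```java
import java.util.concurrent.LinkedBlockingDeque;

// Hypothetical demo: which end the plain BlockingQueue methods use on a deque.
public class DequeTakeDemo {
    public static void main(String[] args) throws InterruptedException {
        LinkedBlockingDeque<String> deque = new LinkedBlockingDeque<>();
        deque.putFirst("a");                   // [a]
        deque.putFirst("b");                   // [b, a]
        System.out.println(deque.take());      // take() behaves like takeFirst(): removes "b"
        deque.put("c");                        // put() behaves like putLast(): [a, c]
        System.out.println(deque.takeLast());  // removes "c" from the tail
        System.out.println(deque);             // [a]
    }
}
```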

PriorityBlockingQueue

PriorityBlockingQueue is an unbounded blocking queue backed by an array-based binary heap that keeps elements in priority order. Because it is unbounded, inserts never block. By default, elements are ordered in natural ascending order. Alternatively, the element class can implement Comparable and define compareTo(), or a Comparator can be passed to the constructor.

Internally it also uses a ReentrantLock to control concurrency. Since only retrieval can block, a single Condition (notEmpty, which wakes waiting consumers) is enough.
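The iterator of a PriorityBlockingQueue is not guaranteed to return elements in priority order, so draining with poll() is the reliable way to observe the ordering. This sketch (the class name PriorityOrderDemo is hypothetical) passes a Comparator to the constructor instead of relying on natural order. It orders strings by length and then alphabetically.

```java
import java.util.Comparator;
import java.util.concurrent.PriorityBlockingQueue;

// Hypothetical demo: custom Comparator plus poll()-based draining.
public class PriorityOrderDemo {
    public static void main(String[] args) {
        // Shorter strings first; equal lengths fall back to alphabetical order
        Comparator<String> byLengthThenAlpha =
                Comparator.comparingInt(String::length).thenComparing(Comparator.<String>naturalOrder());
        PriorityBlockingQueue<String> queue = new PriorityBlockingQueue<>(11, byLengthThenAlpha);

        for (String s : new String[] {"java", "javaScript", "c++", "python", "php"}) {
            queue.add(s);  // never blocks: the queue is unbounded
        }

        // poll() always removes the current head, so draining yields sorted order
        StringBuilder sb = new StringBuilder();
        String s;
        while ((s = queue.poll()) != null) {
            sb.append(s).append(' ');
        }
        System.out.println(sb.toString().trim());
    }
}
```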

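```java
import java.util.Iterator;
import java.util.concurrent.PriorityBlockingQueue;

public class PriorityBlockingQueueTest {

    private static final PriorityBlockingQueue<String> QUEUE = new PriorityBlockingQueue<>();

    public static void main(String[] args) {
        QUEUE.add("java");
        QUEUE.add("javaScript");
        QUEUE.add("c++");
        QUEUE.add("python");
        QUEUE.add("php");
        Iterator<String> it = QUEUE.iterator();
        while (it.hasNext()) {
            // c++ javaScript java python php
            // The iterator walks the internal heap array, which is not fully sorted;
            // only the head (c++) is guaranteed to be the smallest. Use poll()/take()
            // to retrieve elements in priority order. Elements with equal priority
            // have no guaranteed relative order.
            System.out.print(it.next() + " ");
        }
    }
}
```

DelayQueue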

DelayQueue is an unbounded blocking queue from which an element can be taken only after its delay has expired. Internally it uses a PriorityQueue. Elements must implement the Delayed interface; the ScheduledFutureTask class is a good reference for how to design one. Elements are ordered by delay, so the element that expires soonest is at the head.

The PriorityQueue inside DelayQueue orders elements using their compareTo(). ScheduledFutureTask, and the DelayElement example below, order tasks this way:

- The task with the earlier trigger time comes first, so earlier tasks run first.
- If two tasks have the same time, the one with the smaller sequenceNumber comes first. Among tasks scheduled for the same moment, the one submitted first therefore runs first.

Like PriorityBlockingQueue, DelayQueue is backed by an array-based heap (the PriorityQueue), and it uses a single ReentrantLock to control concurrency.

Application scenarios:

- Cache design: store cache entries in a DelayQueue ordered by their expiry time, and have a thread poll the DelayQueue in a loop. As soon as an element can be taken, that entry's validity period has expired.

- Scheduled tasks: store the tasks to be run that day, with their execution times, in a DelayQueue. As soon as a task can be taken from the queue, run it. For example, TimerQueue is implemented using DelayQueue.
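The cache scenario can be sketched as follows. Everything here is an assumption for illustration: the class names DelayQueueCacheSketch and ExpiringKey, the ConcurrentHashMap used as the cache, and the 100 ms / 200 ms TTLs. A real cache would run the cleanup loop indefinitely on a daemon thread. Here the loop stops after two expirations so the example terminates.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch: a DelayQueue of expiry markers drives eviction from a map.
public class DelayQueueCacheSketch {

    // Hypothetical entry marker: becomes available from the queue once its TTL has elapsed
    static final class ExpiringKey implements Delayed {
        final String key;
        final long expireAtNanos;  // absolute deadline in System.nanoTime() units

        ExpiringKey(String key, long ttl, TimeUnit unit) {
            this.key = key;
            this.expireAtNanos = System.nanoTime() + unit.toNanos(ttl);
        }

        @Override
        public long getDelay(TimeUnit unit) {
            return unit.convert(expireAtNanos - System.nanoTime(), TimeUnit.NANOSECONDS);
        }

        @Override
        public int compareTo(Delayed other) {
            // Soonest deadline first
            return Long.compare(getDelay(TimeUnit.NANOSECONDS), other.getDelay(TimeUnit.NANOSECONDS));
        }
    }

    public static void main(String[] args) throws InterruptedException {
        Map<String, String> cache = new ConcurrentHashMap<>();
        DelayQueue<ExpiringKey> expiry = new DelayQueue<>();

        cache.put("b", "B");
        expiry.put(new ExpiringKey("b", 200, TimeUnit.MILLISECONDS));
        cache.put("a", "A");
        expiry.put(new ExpiringKey("a", 100, TimeUnit.MILLISECONDS));

        // Cleanup loop: take() blocks until the next entry's TTL has elapsed
        for (int i = 0; i < 2; i++) {
            ExpiringKey expired = expiry.take();
            cache.remove(expired.key);
            System.out.println("expired " + expired.key + ", remaining " + cache.keySet());
        }
    }
}
```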

```java
import static java.util.concurrent.TimeUnit.NANOSECONDS;

import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

public class DelayElement implements Delayed, Runnable {

    private static final AtomicLong SEQUENCER = new AtomicLong();

    /** Identifies insertion order; used as a tie-breaker */
    private final long sequenceNumber;

    /** Absolute trigger time in System.nanoTime() units (creation time + delay) */
    private final long time;

    /** @param delay delay in nanoseconds */
    public DelayElement(long delay) {
        this.time = System.nanoTime() + delay;
        this.sequenceNumber = SEQUENCER.getAndIncrement();
    }

    @Override
    public long getDelay(TimeUnit unit) {
        return unit.convert(time - System.nanoTime(), NANOSECONDS);
    }

    @Override
    public int compareTo(Delayed other) {
        // compare zero if same object
        if (other == this) {
            return 0;
        }
        if (other instanceof DelayElement) {
            DelayElement x = (DelayElement) other;
            long diff = time - x.time;
            if (diff < 0) {
                return -1;
            } else if (diff > 0) {
                return 1;
            } else if (sequenceNumber < x.sequenceNumber) {
                return -1;
            } else {
                return 1;
            }
        }
        long diff = getDelay(NANOSECONDS) - other.getDelay(NANOSECONDS);
        return (diff < 0) ? -1 : (diff > 0) ? 1 : 0;
    }

    @Override
    public void run() {
        System.out.println("sequenceNumber " + sequenceNumber);
    }

    @Override
    public String toString() {
        return "DelayElement{" + "sequenceNumber=" + sequenceNumber + ", time=" + time + '}';
    }
}
```

```java
import java.util.Iterator;
import java.util.Random;
import java.util.concurrent.DelayQueue;
import java.util.concurrent.TimeUnit;

public class DelayQueueTest {

    private static final DelayQueue<DelayElement> QUEUE = new DelayQueue<>();

    public static void main(String[] args) throws InterruptedException {
        Random random = new Random();
        // 1. Add 10 elements
        for (int i = 0; i < 10; i++) {
            // 2. Random delay of 0 to 4 seconds
            int nextInt = random.nextInt(5);
            long convert = TimeUnit.NANOSECONDS.convert(nextInt, TimeUnit.SECONDS);
            QUEUE.offer(new DelayElement(convert));
        }

        // 3. Inspect the queue: the head has the shortest delay, but the iterator
        //    follows the internal heap array, so the rest is not fully sorted
        Iterator<DelayElement> iterator = QUEUE.iterator();
        while (iterator.hasNext()) {
            System.out.println(iterator.next());
        }

        // 4. take() blocks until the head's delay expires, so output appears gradually
        while (!QUEUE.isEmpty()) {
            Thread thread = new Thread(QUEUE.take());
            thread.start();
        }
    }
}
```

LinkedTransferQueue

LinkedTransferQueue is an unbounded TransferQueue based on a linked list.

It relies heavily on CAS operations for concurrency control instead of locks.

In addition to the usual blocking-queue methods, LinkedTransferQueue provides transfer and tryTransfer:

- transfer: Transfers the element to a consumer, waiting if necessary to do so. The call returns only after a consumer has received the element.

- tryTransfer: Transfers the element to a waiting consumer immediately, if possible. If a consumer is already waiting, the element is handed to it. Otherwise, tryTransfer returns false immediately and does not enqueue the element.
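The difference between the two methods shows up when no consumer is waiting. In this sketch, the class name TransferDemo is hypothetical, and the 100 ms sleep only makes the consumer arrive late. tryTransfer gives up and leaves the queue empty, while transfer waits for the consumer.

```java
import java.util.concurrent.LinkedTransferQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical demo: tryTransfer vs transfer with a late-arriving consumer.
public class TransferDemo {
    public static void main(String[] args) throws InterruptedException {
        LinkedTransferQueue<String> queue = new LinkedTransferQueue<>();

        // No consumer is waiting: tryTransfer returns false and does not enqueue
        System.out.println("tryTransfer: " + queue.tryTransfer("a"));
        System.out.println("size after tryTransfer: " + queue.size());

        Thread consumer = new Thread(() -> {
            try {
                TimeUnit.MILLISECONDS.sleep(100);  // arrive late on purpose
                System.out.println("consumer took " + queue.take());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        // transfer blocks until the consumer has received the element
        queue.transfer("b");
        System.out.println("transfer returned");
        consumer.join();
    }
}
```

The last two lines can print in either order. transfer returns once the hand-off happens, which may be before the consumer prints.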

SynchronousQueue

SynchronousQueue is a blocking queue that does not store elements. Each put must wait for a matching take by another thread, and the putting thread stays blocked until that happens.

By default, SynchronousQueue uses a non-fair policy for waiting threads; passing true to the constructor selects FIFO fairness. It does not use locks; concurrency is implemented with CAS operations. Its throughput is typically higher than that of LinkedBlockingQueue and ArrayBlockingQueue, which makes it well suited to direct hand-off scenarios. Executors.newCachedThreadPool() uses SynchronousQueue to hand tasks to worker threads.
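A SynchronousQueue has no capacity. A non-blocking offer() therefore succeeds only if a consumer is already waiting, and size() is always 0. This sketch shows both, then pairs a timed offer with a timed poll on another thread. The class name SynchronousQueueHandoffDemo is hypothetical, and the one-second timeouts are chosen arbitrarily.

```java
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical demo: SynchronousQueue only succeeds when a producer and consumer meet.
public class SynchronousQueueHandoffDemo {
    public static void main(String[] args) throws InterruptedException {
        SynchronousQueue<String> queue = new SynchronousQueue<>();  // non-fair by default

        // No consumer waiting, so there is nowhere to put the element
        System.out.println("offer: " + queue.offer("x"));
        System.out.println("size: " + queue.size() + ", peek: " + queue.peek());

        Thread consumer = new Thread(() -> {
            try {
                System.out.println("received " + queue.poll(1, TimeUnit.SECONDS));
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        // Waits (up to 1 s) until the consumer's poll pairs with it
        System.out.println("timed offer: " + queue.offer("y", 1, TimeUnit.SECONDS));
        consumer.join();
    }
}
```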

```java
import java.util.UUID;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.SynchronousQueue;

// Runs until the JVM is stopped: one producer hands data to two consumers.
public class SynchronousQueueTest {

    private static class SynchronousQueueProducer implements Runnable {

        private final BlockingQueue<String> blockingQueue;

        private SynchronousQueueProducer(BlockingQueue<String> queue) {
            this.blockingQueue = queue;
        }

        @Override
        public void run() {
            while (true) {
                try {
                    String data = UUID.randomUUID().toString();
                    System.out.println(Thread.currentThread().getName() + " put: " + data);
                    // Blocks until one of the consumers takes the element
                    blockingQueue.put(data);
                    Thread.sleep(1000);
                } catch (InterruptedException e) {
                    // Restore the interrupt flag and stop instead of looping forever
                    Thread.currentThread().interrupt();
                    return;
                }
            }
        }
    }

    private static class SynchronousQueueConsumer implements Runnable {

        private final BlockingQueue<String> blockingQueue;

        private SynchronousQueueConsumer(BlockingQueue<String> queue) {
            this.blockingQueue = queue;
        }

        @Override
        public void run() {
            while (true) {
                try {
                    // Blocks until the producer hands over an element
                    System.out.println(Thread.currentThread().getName() + " take(): " + blockingQueue.take());
                    Thread.sleep(2000);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
            }
        }
    }

    public static void main(String[] args) {
        final BlockingQueue<String> synchronousQueue = new SynchronousQueue<>();

        SynchronousQueueProducer queueProducer = new SynchronousQueueProducer(synchronousQueue);
        new Thread(queueProducer, "producer-1").start();

        SynchronousQueueConsumer queueConsumer1 = new SynchronousQueueConsumer(synchronousQueue);
        new Thread(queueConsumer1, "consumer-1").start();

        SynchronousQueueConsumer queueConsumer2 = new SynchronousQueueConsumer(synchronousQueue);
        new Thread(queueConsumer2, "consumer-2").start();
    }
}
```