My first experience of Go+ language - (4) Learning Go+ crawlers from scratch

"My first experience of Go + language" | the essay solicitation activity is in progress

Go+ is well suited to writing crawler programs: it has a mature concurrency mechanism, handles a large number of concurrent tasks, uses few resources, runs fast and is easy to deploy.

Drawing on the official documentation and Go language materials, this article introduces Go+ crawler programming step by step and walks you through writing crawlers with several complete routines.

All the routines in this article have been tested in a Go+ environment.

1. Why write crawlers in Go+

Web crawlers work by examining the HTML content of web pages and acting on that content. Typically, a crawler extracts the data it needs from the current page, collects the links the page exposes, puts them in a queue, and then crawls the queued pages in turn.
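The queue-driven process described above can be sketched without any network access. In the sketch below, the pages map is a hypothetical in-memory "site" standing in for real HTTP responses, and extractLinks and crawl are illustrative names, not part of any library.

```go
package main

import (
	"fmt"
	"regexp"
)

// pages is a hypothetical in-memory site that stands in for real HTTP responses.
var pages = map[string]string{
	"/":  `<a href="/a">A</a> <a href="/b">B</a>`,
	"/a": `<a href="/b">B</a> <a href="/c">C</a>`,
	"/b": `<a href="/">home</a>`,
	"/c": `no links here`,
}

var hrefRe = regexp.MustCompile(`<a href="([^"]+)"`)

// extractLinks returns the href targets found in an HTML fragment.
func extractLinks(html string) []string {
	var links []string
	for _, m := range hrefRe.FindAllStringSubmatch(html, -1) {
		links = append(links, m[1])
	}
	return links
}

// crawl visits pages breadth-first from start and returns them in visit order.
func crawl(start string) []string {
	queue := []string{start}
	seen := map[string]bool{start: true}
	var order []string
	for len(queue) > 0 {
		url := queue[0]
		queue = queue[1:]
		order = append(order, url)
		// Queue every link that has not been seen yet.
		for _, link := range extractLinks(pages[url]) {
			if !seen[link] {
				seen[link] = true
				queue = append(queue, link)
			}
		}
	}
	return order
}

func main() {
	fmt.Println(crawl("/")) // prints [/ /a /b /c]
}
```

The seen map is what keeps the crawler from looping forever when pages link back to each other, as "/b" does here.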

Go+ is well suited to writing crawler programs and offers several advantages:

- Mature concurrency mechanism

- Support for a large number of concurrent tasks

- Low resource usage

- Fast execution

- Easy deployment
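As a rough illustration of the concurrency advantage listed above, the following sketch fetches several pages at once with goroutines and a sync.WaitGroup. It is written in plain Go syntax and assumes a local test server from net/http/httptest instead of a real website; newDemoServer and fetchAll are hypothetical helper names.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"sync"
)

// newDemoServer starts a local server whose response body is "page " plus the path.
func newDemoServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, "page "+r.URL.Path)
	}))
}

// fetchAll downloads every URL concurrently and returns the body size per URL.
// A failed request is recorded as -1.
func fetchAll(urls []string) map[string]int {
	var (
		mu    sync.Mutex
		wg    sync.WaitGroup
		sizes = make(map[string]int)
	)
	for _, u := range urls {
		wg.Add(1)
		go func(u string) {
			defer wg.Done()
			n := -1
			if resp, err := http.Get(u); err == nil {
				body, rerr := io.ReadAll(resp.Body)
				resp.Body.Close()
				if rerr == nil {
					n = len(body)
				}
			}
			// The map is shared between goroutines, so guard it with a mutex.
			mu.Lock()
			sizes[u] = n
			mu.Unlock()
		}(u)
	}
	wg.Wait() // block until every goroutine has finished
	return sizes
}

func main() {
	srv := newDemoServer()
	defer srv.Close()

	urls := []string{srv.URL + "/1", srv.URL + "/2", srv.URL + "/3"}
	for u, n := range fetchAll(urls) {
		fmt.Println(u, n)
	}
}
```

Each request runs in its own goroutine, so the total time is close to that of the slowest request rather than the sum of all of them.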

2. Making a simple request with http.Get

2.1 http.Get method description

The net package and its subpackages encapsulate network-related functionality; the most commonly used are net/http and net/url. In Go+, you can use the net/http package to request web pages.

The basic syntax of http.Get method is as follows:

    resp, err := http.Get("http://example.com/")

Parameter: the URL to request.

http.Get sends an HTTP GET request to the server and returns the response (resp) together with an error value (err).
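To make the return values of http.Get more concrete, here is a minimal sketch that reads the status code, one header and the body of a response. It assumes a local httptest server so that it runs offline; newDemoServer and get are hypothetical helper names.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// newDemoServer starts a local server that returns a tiny HTML page.
func newDemoServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "text/html; charset=utf-8")
		fmt.Fprint(w, "<html><body>hello</body></html>")
	}))
}

// get returns the status code, the Content-Type header and the body of url.
func get(url string) (status int, contentType, body string, err error) {
	resp, err := http.Get(url)
	if err != nil {
		return 0, "", "", err
	}
	defer resp.Body.Close() // always close the body once the response is valid

	data, err := io.ReadAll(resp.Body)
	if err != nil {
		return 0, "", "", err
	}
	return resp.StatusCode, resp.Header.Get("Content-Type"), string(data), nil
}

func main() {
	srv := newDemoServer()
	defer srv.Close()

	status, contentType, body, err := get(srv.URL)
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	fmt.Println(status, contentType)
	fmt.Println(body)
}
```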

2.2 Fetching the HTML source of a web page with http.Get

We first write a simple crawler with http.Get.

[routine 1] http.Get obtains the html source file of the web page

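    // 1. Fetch the HTML source of a web page with http.Get
    package main

    import (
        "fmt"
        "io/ioutil"
        "net/http"
    )

    func main() {
        // Errors are ignored here for brevity; section 2.3 adds error handling.
        resp, _ := http.Get("http://www.baidu.com")
        defer resp.Body.Close() // close the response body when main returns

        contents, _ := ioutil.ReadAll(resp.Body) // read the whole body
        fmt.Println(string(contents))
    }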

Run [routine 1] to grab the HTML source of the Baidu home page.

Too much data to read on the console? No problem: we can save the captured data to a file.

2.3 Exception handling and saving the web page

Network activity is complex, and a request to a website may fail. The crawler therefore has to handle the errors that occur while crawling; otherwise it will crash when it meets one.

The main causes of failure are:

- The server cannot be reached

- The remote URL does not exist

- There is no network connection

- The server returns an HTTP error status (for example 404 or 500)
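The failure causes listed above reach a Go program in two different ways: connection problems come back as a non-nil err, while an HTTP error status arrives in a normal response with err == nil and has to be checked through resp.StatusCode. The sketch below shows both cases against a local httptest server; check and newDemoServer are hypothetical helper names.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// newDemoServer starts a local server that serves "/" and answers 404 elsewhere.
func newDemoServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.URL.Path != "/" {
			http.NotFound(w, r)
			return
		}
		fmt.Fprint(w, "ok")
	}))
}

// check classifies the outcome of a GET request to url.
func check(url string) string {
	resp, err := http.Get(url)
	if err != nil {
		// Transport-level failure: unreachable server, refused connection, no network.
		return "request failed"
	}
	defer resp.Body.Close()

	if resp.StatusCode != http.StatusOK {
		// The server answered, but with an error status such as 404 or 500.
		return "bad status: " + resp.Status
	}
	return "ok"
}

func main() {
	srv := newDemoServer()
	fmt.Println(check(srv.URL + "/"))        // page exists
	fmt.Println(check(srv.URL + "/missing")) // server answers 404
	srv.Close()
	fmt.Println(check(srv.URL + "/")) // server is gone, so the request itself fails
}
```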

We take the CSDN hot list page as an example to illustrate exception handling and data saving, and save the crawled page to the file csdnPage01.html in the .vscode directory.

[routine 2] exception handling and web page saving

    // 2. Fetch a web page with error handling and save it to a file
    package main

    import (
        "fmt"
        "io"
        "net/http"
        "os"
    )

    func main() {
        resp, err := http.Get("https://blog.csdn.net/rank/list")
        if err != nil {
            fmt.Println(err)
            os.Exit(1)
        }
        defer resp.Body.Close()

        // Print the status code on success (http.StatusOK = 200)
        if resp.StatusCode == http.StatusOK {
            fmt.Println(resp.StatusCode)
        }

        // O_TRUNC overwrites an existing file, so re-running does not append a second copy
        f, err := os.OpenFile("csdnPage01.html", os.O_WRONLY|os.O_CREATE|os.O_TRUNC, os.ModePerm)
        if err != nil {
            panic(err)
        }
        defer f.Close()

        // Copy the response body to the file; io.Copy stops at EOF and reports read or write errors
        if _, err := io.Copy(f, resp.Body); err != nil {
            panic(err)
        }
    }

With exception handling in place, the program can deal with failures during web page access instead of crashing on them.

But have we really captured the page we want? Open the csdnPage01.html file, as shown in the following figure.

[routine 2] did capture some of the content of the CSDN hot list page, but not the hot list articles themselves. No matter; let's proceed step by step.

3. Making requests with Client.Get

3.1 Client object description

Client is the type in the net/http package that sends requests. It lets you control the timeout, redirect handling, cookies and other settings of a request.

Client is defined as follows:

    type Client struct {
        // How individual HTTP requests are made (http.DefaultTransport is used if nil)
        Transport RoundTripper
        // Policy for handling redirects
        CheckRedirect func(req *Request, via []*Request) error
        // Cookie jar that manages cookies
        Jar CookieJar
        // Time limit for the whole request, including connecting and reading the body (zero means no timeout)
        Timeout time.Duration
    }

A Client is called in much the same way as http.Get, for example:

    resp, err := client.Get("http://example.com")

    // Parameter: the URL to request
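As an illustration of the Timeout field, the sketch below sends the same request with a short and a generous timeout. It assumes a deliberately slow local httptest server; slowDelay, newSlowServer and getWithTimeout are hypothetical names chosen for this example.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// slowDelay is how long the demo server waits before answering.
const slowDelay = 200 * time.Millisecond

// newSlowServer starts a local server that sleeps for delay before responding.
func newSlowServer(delay time.Duration) *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		time.Sleep(delay)
		fmt.Fprint(w, "done")
	}))
}

// getWithTimeout issues a GET with a client whose total time budget is timeout.
func getWithTimeout(url string, timeout time.Duration) (int, error) {
	client := &http.Client{Timeout: timeout}
	resp, err := client.Get(url)
	if err != nil {
		return 0, err // includes the case where the timeout expired
	}
	defer resp.Body.Close()
	return resp.StatusCode, nil
}

func main() {
	srv := newSlowServer(slowDelay)
	defer srv.Close()

	// Timeout shorter than the server delay: the request is abandoned.
	_, err := getWithTimeout(srv.URL, slowDelay/10)
	fmt.Println("short timeout failed:", err != nil)

	// Timeout longer than the server delay: the request completes.
	status, err := getWithTimeout(srv.URL, 20*slowDelay)
	fmt.Println("long timeout:", status, err)
}
```

http.Get uses http.DefaultClient, which has no timeout, so a crawler that talks to slow or unresponsive sites usually benefits from its own Client with Timeout set.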

3.2 Controlling the request headers of the HTTP client

More often, you need to set header fields, cookies and other parameters on a request. To do so, first construct a Request with http.NewRequest and then send it with the Client.Do() method. (Certificate validation, by contrast, is configured on the Client's Transport.)

[routine 3] control the header structure of HTTP client

    // 3. Control the request headers of the HTTP client
    package main

    import (
        "fmt"
        "io"
        "net/http"
        "os"
    )

    // checkError prints the error and exits if err is not nil.
    func checkError(err error) {
        if err != nil {
            fmt.Println(err)
            os.Exit(1)
        }
    }

    func main() {
        url := "https://blog.csdn.net/youcans" // target URL
        client := &http.Client{} // create the client
        req, err := http.NewRequest("GET", url, nil) // build the request
        checkError(err)

        // Custom headers
        cookieStr := "your cookie" // cookie string copied from the browser
        req.Header.Set("Cookie", cookieStr)
        userAgentStr := "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1)"
        req.Header.Set("User-Agent", userAgentStr) // set the User-Agent header

        resp, err := client.Do(req) // send the request and get the response
        checkError(err)
        defer resp.Body.Close() // close the response body when done

        // Status code check (http.StatusOK = 200)
        if resp.StatusCode == http.StatusOK {
            fmt.Println(resp.StatusCode)
        }

        // To print the page instead of saving it, read the whole body with
        // ioutil.ReadAll(resp.Body) (import "io/ioutil") and print string(contents).

        // O_TRUNC overwrites an existing file, so re-running does not append a second copy
        f, err := os.OpenFile("csdnPage02.html", os.O_WRONLY|os.O_CREATE|os.O_TRUNC, os.ModePerm)
        checkError(err)
        defer f.Close()

        // Copy the response body to the file
        _, err = io.Copy(f, resp.Body)
        checkError(err)
    }

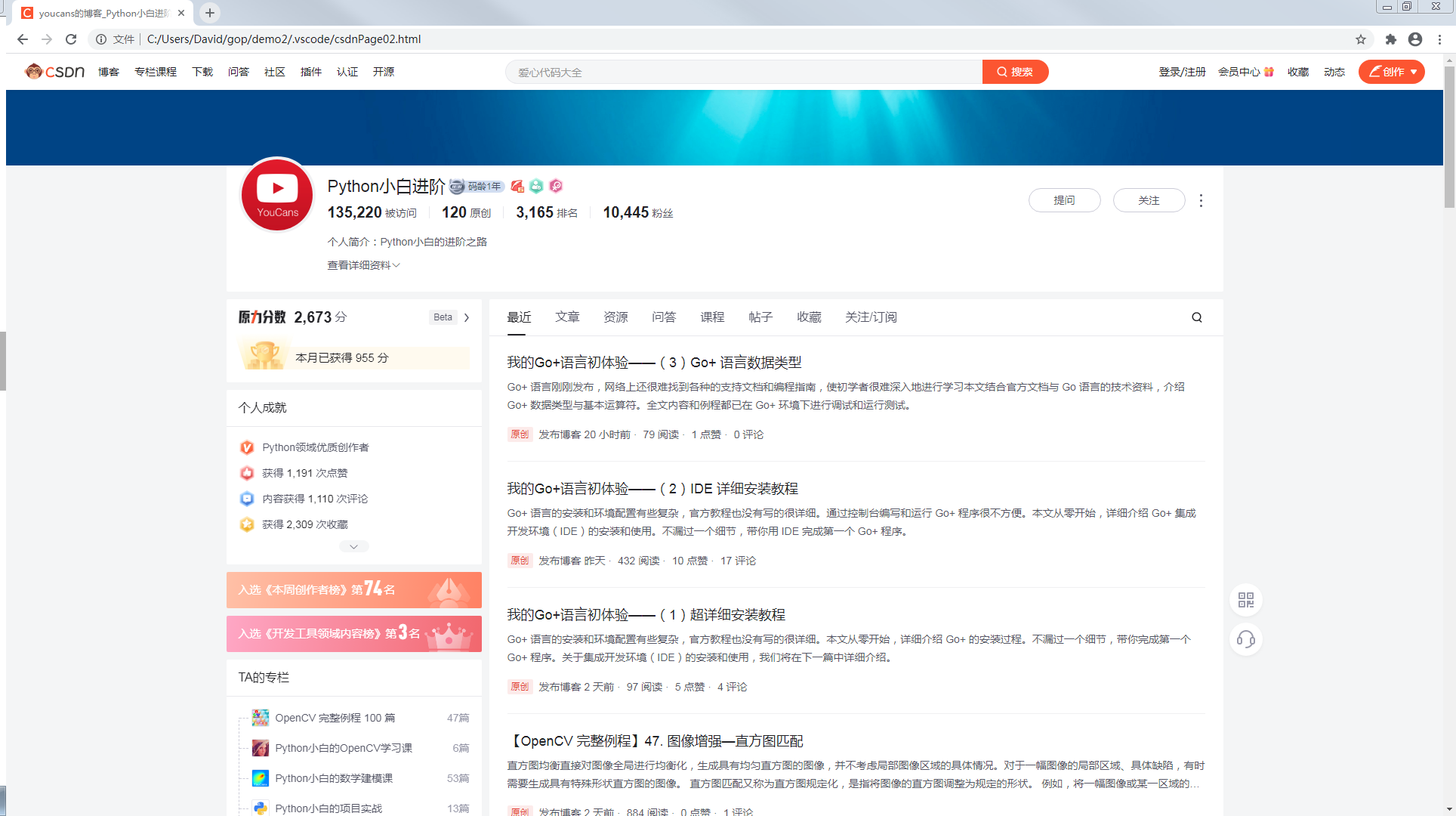

[routine 3] successfully grab the youcans page of CSDN website. Open csdnPage01.html file, as shown below:

3.3 Parsing web page data

The obtained web page source code needs to be parsed to get the data we need.

Different methods can be selected according to different types of responses:

- For data in HTML format, you can use regular expressions or CSS selectors to obtain the required content;

- For data in JSON format, you can use the encoding/json library to deserialize the data and obtain the required content.
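For the JSON case mentioned above, a minimal sketch with encoding/json might look like the following. The movie struct and the sample data are hypothetical; a real response would need a struct that mirrors its own fields.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// movie mirrors the fields of a hypothetical JSON response.
type movie struct {
	ID    string `json:"id"`
	Title string `json:"title"`
}

// parseMovies decodes a JSON array of movies.
func parseMovies(data []byte) ([]movie, error) {
	var movies []movie
	if err := json.Unmarshal(data, &movies); err != nil {
		return nil, err
	}
	return movies, nil
}

func main() {
	data := []byte(`[{"id":"1","title":"First"},{"id":"2","title":"Second"}]`)
	movies, err := parseMovies(data)
	if err != nil {
		fmt.Println("invalid JSON:", err)
		return
	}
	for _, m := range movies {
		fmt.Println(m.ID, m.Title)
	}
}
```

The struct tags map the lowercase JSON keys to the exported Go fields; keys that are not declared in the struct are ignored by json.Unmarshal.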

Regular expressions are a tool for pattern matching and text manipulation. Go+ supports regular expressions through the regexp package, which uses RE2 syntax. This is the same syntax as Go's regexp package and is largely similar to Python's, although RE2 does not support some features such as backreferences and lookaround.

[function description] func MustCompile(str string) *Regexp

MustCompile parses the regular expression str and creates a Regexp object that can be used for matching

If str is a valid regular expression, the Regexp object is returned

If str is not valid, MustCompile panics

For example:

    // Matches text that begins with <div class="hd"> and ends with </div>
    rp := regexp.MustCompile(`<div class="hd">(.*?)</div>`)
    // Matches text that begins with <span class="title"> and ends with </span>
    titleRe := regexp.MustCompile(`<span class="title">(.*?)</span>`)

[function description] func (re *Regexp) FindAllStringSubmatch(s string, n int) [][]string

Searches s for the regular expression compiled in re and returns all matches (at most n; n = -1 means no limit)

Each result also contains the text matched by the subexpressions

[function description] func (re *Regexp) FindStringSubmatchIndex(s string) []int

Searches s for the regular expression compiled in re and returns the position of the first match

The positions matched by the subexpressions are returned as well

[function description] func (re *Regexp) FindStringSubmatch(s string) []string

Searches s for the regular expression compiled in re and returns the text of the first match, or nil if there is no match

The text matched by the subexpressions is returned as well
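The functions described above can be tried on a small string before pointing them at a real page. The sketch below reuses the title pattern from the example; the sample string and the helpers firstTitle and allTitles are hypothetical.

```go
package main

import (
	"fmt"
	"regexp"
)

// titleRe captures the text between <span class="title"> and </span>.
var titleRe = regexp.MustCompile(`<span class="title">(.*?)</span>`)

// sample is a made-up HTML fragment with two titles.
const sample = `<span class="title">First</span><span class="title">Second</span>`

// firstTitle returns the first captured title, or false if there is no match.
func firstTitle(s string) (string, bool) {
	m := titleRe.FindStringSubmatch(s) // m[0] is the whole match, m[1] the group
	if m == nil {
		return "", false
	}
	return m[1], true
}

// allTitles returns every captured title in s.
func allTitles(s string) []string {
	var titles []string
	for _, m := range titleRe.FindAllStringSubmatch(s, -1) { // -1: no limit
		titles = append(titles, m[1])
	}
	return titles
}

func main() {
	fmt.Println(firstTitle(sample))
	fmt.Println(allTitles(sample))

	// loc[0]:loc[1] spans the whole match, loc[2]:loc[3] the first group.
	loc := titleRe.FindStringSubmatchIndex(sample)
	fmt.Println(sample[loc[2]:loc[3]])

	// Without a match the result is nil, so check before indexing.
	fmt.Println(firstTitle("<p>no title</p>"))
}
```

Checking for nil before indexing matters in a crawler: indexing the result of FindStringSubmatch directly panics as soon as a page does not contain the expected markup.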

[routine 4] parsing web page data

    // 4. Parse web page data
    package main

    import (
        "fmt"
        "io/ioutil"
        "net/http"
        "os"
        "regexp"
        "strconv"
        "strings"
        "time"
    )

    // checkError prints the error and panics if err is not nil.
    func checkError(err error) {
        if err != nil {
            fmt.Println(err)
            panic(err)
        }
    }

    // fetch requests url and returns the response body, or "" if the status is not 200.
    func fetch(url string) string {
        fmt.Println("Fetch Url", url)
        client := &http.Client{} // create the client
        req, err := http.NewRequest("GET", url, nil) // build the request
        checkError(err)

        // Custom header
        userAgentStr := "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; http://www.baidu.com)"
        req.Header.Set("User-Agent", userAgentStr) // set the User-Agent header

        resp, err := client.Do(req) // send the request and get the response
        checkError(err)
        defer resp.Body.Close()

        // Status code check (http.StatusOK = 200)
        if resp.StatusCode != http.StatusOK {
            fmt.Println("unexpected status:", resp.Status)
            return ""
        }
        body, err := ioutil.ReadAll(resp.Body) // read the whole response body
        checkError(err)
        return string(body)
    }

    // parseUrls fetches one list page and writes the ID and title of each movie to f.
    func parseUrls(url string, f *os.File) {
        body := fetch(url)
        body = strings.Replace(body, "\n", "", -1) // remove newlines, because . does not match "\n"

        rp := regexp.MustCompile(`<div class="hd">(.*?)</div>`)
        titleRe := regexp.MustCompile(`<span class="title">(.*?)</span>`)
        idRe := regexp.MustCompile(`<a href="https://movie.douban.com/subject/(\d+)/"`)

        items := rp.FindAllStringSubmatch(body, -1) // all matches of rp
        for _, item := range items {
            idMatch := idRe.FindStringSubmatch(item[1]) // first ID match
            titleMatch := titleRe.FindStringSubmatch(item[1]) // first title match
            if idMatch == nil || titleMatch == nil {
                continue // skip entries that lack the expected markup
            }
            _, err := f.WriteString(idMatch[1] + "\t" + titleMatch[1] + "\n")
            checkError(err)
        }
    }

    func main() {
        f, err := os.Create("topMovie.txt") // create the output file
        checkError(err)
        defer f.Close()
        _, err = f.WriteString("ID\tTitle\n") // header row
        checkError(err)

        start := time.Now()
        // Fetch all of Top250: 10 pages with 25 entries each
        for i := 0; i < 10; i++ {
            // strconv.Itoa converts the number to a string
            parseUrls("https://movie.douban.com/top250?start="+strconv.Itoa(25*i), f)
        }
        elapsed := time.Since(start)
        fmt.Printf("Took %s", elapsed)
    }

The code in [routine 4] that requests the URL and obtains the web page data is the same as in [routine 3], except that it is wrapped in a function fetch() for ease of use.

[routine 4] grabs the information of the Top250 movies from Douban. After running, the console displays:

Fetch Url https://movie.douban.com/top250?start=0

Fetch Url https://movie.douban.com/top250?start=25

Fetch Url https://movie.douban.com/top250?start=50

Fetch Url https://movie.douban.com/top250?start=75

Fetch Url https://movie.douban.com/top250?start=100

Fetch Url https://movie.douban.com/top250?start=125

Fetch Url https://movie.douban.com/top250?start=150

Fetch Url https://movie.douban.com/top250?start=175

Fetch Url https://movie.douban.com/top250?start=200

Fetch Url https://movie.douban.com/top250?start=225

The contents saved in the file topMovie.txt are:

ID Title

1292052 The Shawshank Redemption

1291546 Farewell My Concubine

1292720 Forrest Gump

...

1292528 Trainspotting

1307394 Millennium Actress

Note: [routine 4] is based on the article "Writing a crawler in Golang (I)" by the senior engineer at [Golang programming]; thank you! The author adapted, annotated and tested it in a Go+ environment.

4. Summary

Go+ is well suited to writing crawler programs, and this is one of its strengths.

Drawing on the official documentation and Go language materials, this article introduced Go+ crawler programming step by step and walked through several complete routines.

All the routines in this article have been debugged and tested in a Go+ environment.

[end of this section]

Copyright notice:

Original work; reprints must cite the original link: (https://blog.csdn.net/youcans/article/details/121644252)


Copyright 2021 youcans, XUPT

Created: 2021-11-30

Welcome to the "My first experience of Go+ language" series, which is updated regularly:

My first experience of Go+ language - (1) Super detailed installation tutorial

My first experience of Go+ language - (2) Detailed IDE installation tutorial

My first experience of Go+ language - (3) Go+ data types

My first experience of Go+ language - (4) Learning Go+ crawlers from scratch