[network communication] select, poll, epoll


preface

- Address: https://blog.csdn.net/hancoder/article/details/108899013

- Recommended Videos:

- NIO multiplexing + system call integration explanation: https://www.bilibili.com/video/BV1Ka4y177gs

- Multiplexing source level explanation Video: https://www.bilibili.com/video/BV1pp4y1e7xN

- Recommended Videos: https://www.bilibili.com/video/BV1VJ411D7Pm

- Knowledge of interruption and zero copy: https://blog.csdn.net/hancoder/article/details/112149121

0. Basic principles of network programming

1 network programming (Socket) concept

Note first that the socket is not a Java-specific concept but a language-independent standard: any programming language that supports network programming provides sockets.

1.1 what is a Socket

Two programs on a network exchange data over a two-way communication connection. Each end of that connection is called a socket.

A network connection needs at least a pair of endpoints, each identified by an IP address and a port number. A socket is essentially a programming interface (API) that encapsulates TCP/IP: TCP/IP has to expose an interface for programmers doing network development, and that interface is the socket API. By analogy, HTTP is the car, which provides a specific way of packaging and presenting data, while the socket is the engine, which provides the ability to communicate over the network.

The English word "socket" originally means a hole or an electrical socket. As the inter-process communication mechanism of BSD UNIX it takes the latter meaning. A socket describes an IP address and a port and is a handle to one end of a communication link. It can be used for communication between different virtual machines or different computers. Hosts on the Internet generally run several server programs and provide several services at once. Each service opens a socket and binds it to a port, and different ports correspond to different services.

True to its English meaning, a socket is like a wall outlet. A host is like a room full of outlets, each with a number. Some provide 220 V AC, some 110 V AC, and some cable TV. Client software gets a different service by plugging into a differently numbered outlet.

1.2 Socket connection steps

Depending on how the connection is initiated and which socket the local socket connects to, establishing a connection between sockets takes three steps: server listening, client request and connection confirmation. (Counting data exchange and disconnection as well, there are five steps in total.)

(1) Server listening: the server-side socket does not target any particular client socket. It stays in a waiting state and monitors the network for incoming connections.

(2) Client request: the client socket issues the connection request, and its target is the server socket. The client socket must therefore first describe the server socket it wants to reach, giving its address and port number, and then send the connection request to it.

(3) Connection confirmation: when the server-side socket receives the client's connection request, it responds, starts a new thread and sends the description of the server-side socket to the client. Once the client confirms that description, the connection is established. The server-side socket stays in the listening state and keeps accepting connection requests from other client sockets.
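The three steps above can be sketched in a few lines of Java. This is only a minimal illustration under some assumptions: the class name `ConnectionStepsSketch` and the method `roundTrip` are made up for this example, the server listens on an OS-assigned port on the loopback interface, and the "client" is just a thread in the same process.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch: one listen -> request -> confirm cycle on the loopback interface.
class ConnectionStepsSketch {
    // Returns the line the server side received from the client.
    static String roundTrip(String message) throws Exception {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        // (1) Server listening: port 0 asks the OS for any free port.
        try (ServerSocket listener = new ServerSocket(0, 50, loopback)) {
            int port = listener.getLocalPort();

            // (2) Client request: the client names the server's address and port.
            Thread client = new Thread(() -> {
                try (Socket socket = new Socket(loopback, port);
                     PrintWriter out = new PrintWriter(new OutputStreamWriter(
                             socket.getOutputStream(), StandardCharsets.UTF_8), true)) {
                    out.println(message); // auto-flush sends the line immediately
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
            client.start();

            // (3) Connection confirmation: accept() returns a new socket for this client,
            // while the listening socket stays available for further clients.
            try (Socket connection = listener.accept();
                 BufferedReader in = new BufferedReader(new InputStreamReader(
                         connection.getInputStream(), StandardCharsets.UTF_8))) {
                String line = in.readLine();
                client.join();
                return line;
            }
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println("server received: " + roundTrip("hello"));
    }
}
```

Running `main` prints `server received: hello`. A real server would of course keep calling `accept()` in a loop instead of handling a single client.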

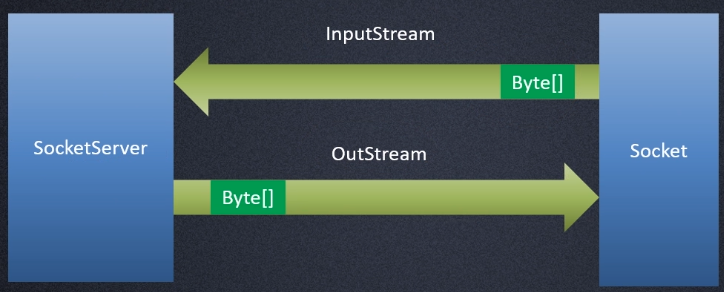

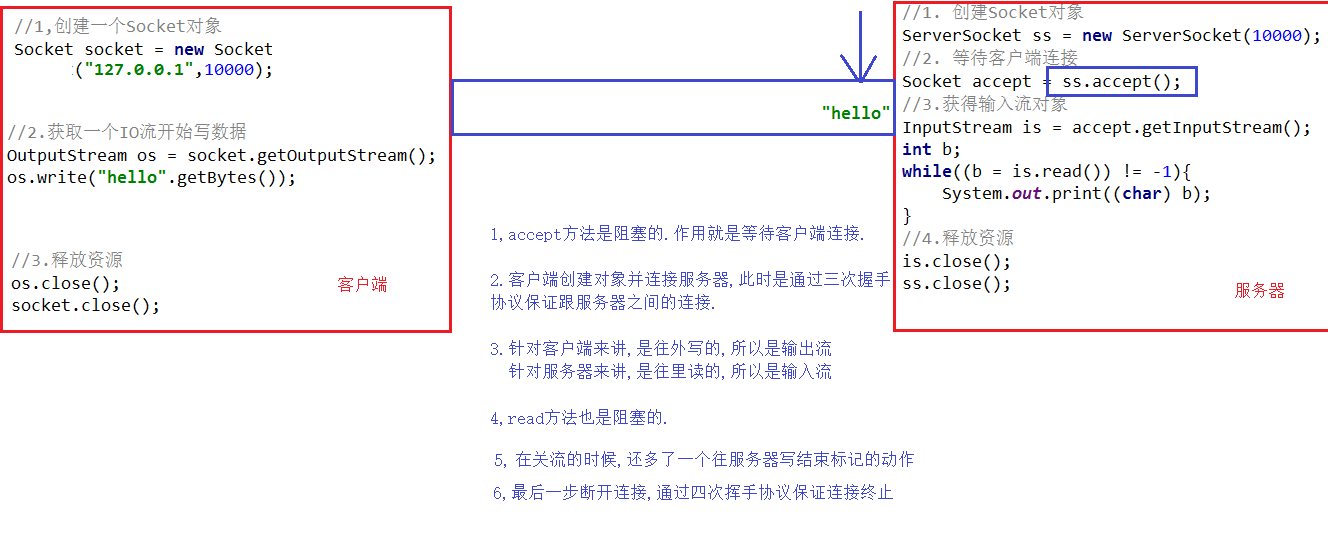

1.3 Socket in Java

In Java, the java.net package is the basic class library for network programming, and ServerSocket and Socket are its basic types. ServerSocket is the type a server application uses to listen; Socket is the type that represents a connection. Once a connection is established, both the server and the client hold a Socket instance through which every operation of the session is carried out.

Within an established connection the two sockets are peers; there is no longer any distinction between server and client.

After the ServerSocket constructor succeeds, all further method calls must be placed in a try-finally block so that the ServerSocket is always closed properly.

Similarly, if accept() fails, it must be guaranteed that no Socket exists and no resources are held, so that nothing needs cleaning up. If it succeeds, the statements that follow must go in a try-finally block so that the Socket is properly cleaned up when an exception occurs.

A complete communication involves three sockets: the ServerSocket on the server side, the Socket it produces to talk to the client after accept() succeeds, and the client's own Socket.
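The try-finally discipline described above might look like the following. It is a sketch, not the only way to do it: the class `SocketCleanupSketch` and its method are hypothetical names, and a throwaway loopback client is created only so that `accept()` has something to return.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

// Hypothetical sketch of the cleanup rules for ServerSocket and the accepted Socket.
class SocketCleanupSketch {
    // Returns {listening socket closed?, accepted socket closed?} after one session.
    static boolean[] acceptOnceAndClose() throws IOException {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        // If the constructor throws, nothing was created and nothing needs closing.
        ServerSocket serverSocket = new ServerSocket(0, 50, loopback);
        Socket accepted = null;
        try {
            // Throwaway client (an assumption of this sketch) so accept() can return.
            try (Socket client = new Socket(loopback, serverSocket.getLocalPort())) {
                // If accept() throws, no Socket exists, so there is nothing to clean up.
                accepted = serverSocket.accept();
                try {
                    accepted.getOutputStream().write('x'); // session work goes here
                } finally {
                    accepted.close(); // always release the per-connection socket
                }
            }
        } finally {
            serverSocket.close(); // always release the listening socket
        }
        return new boolean[] {serverSocket.isClosed(), accepted.isClosed()};
    }

    public static void main(String[] args) throws IOException {
        boolean[] closed = acceptOnceAndClose();
        System.out.println("server closed=" + closed[0] + ", connection closed=" + closed[1]);
    }
}
```

Since Java 7 the same guarantee is usually written with try-with-resources, as the throwaway client in the sketch does; the explicit finally blocks are kept here to mirror the text.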

1, BIO (Blocking I/O)

In synchronous blocking I/O mode, a read or write blocks the calling thread until the operation completes.

https://zhuanlan.zhihu.com/p/23488863

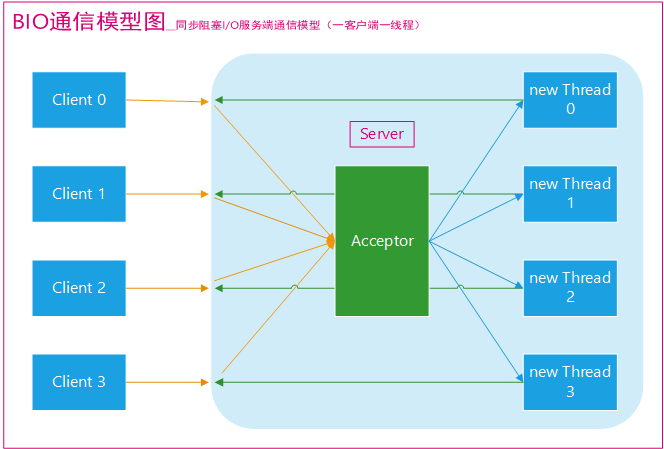

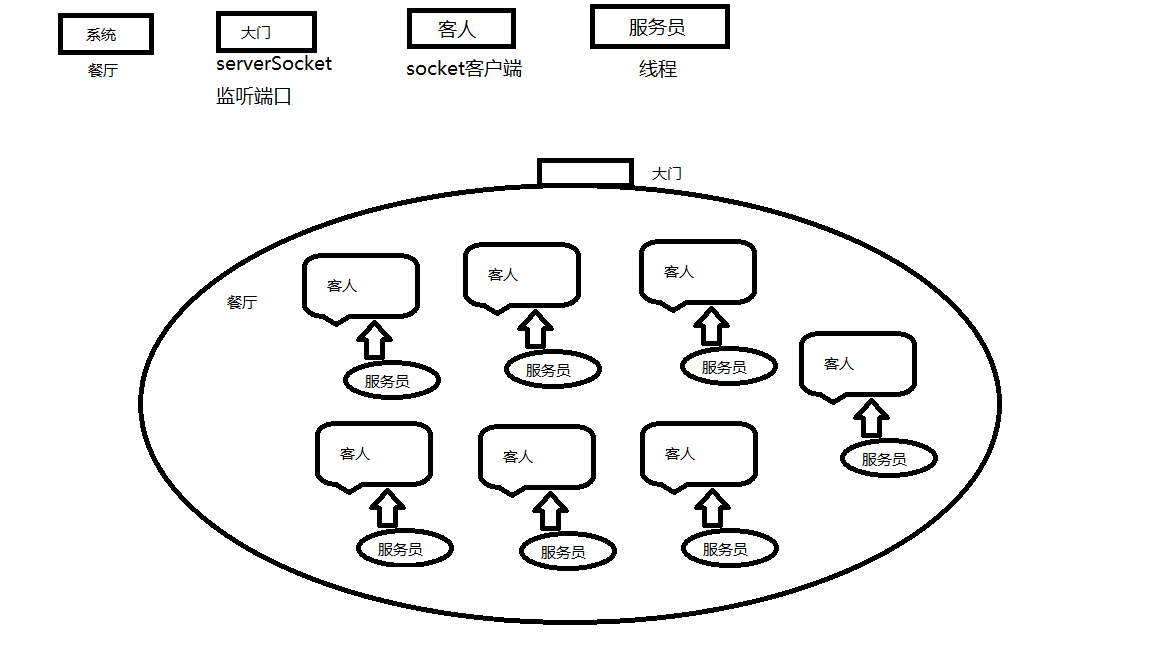

1.1 traditional BIO

The model diagram of BIO communication (one request and one response) is as follows:

A server that uses the BIO communication model usually has a dedicated Acceptor thread that listens for client connections. Inside a while(true) loop the server calls accept() and waits for a client to connect. Once a connection request arrives, a communication socket is created and the server reads from and writes to it. While that is going on, a single-threaded server cannot accept any other client connection; it has to wait for the current client to finish. Multiple clients can be supported by using multiple threads, as shown in the figure above.

- accept() // blocks until the three-way handshake has completed

- read() // blocks until data can be read

To let the BIO model handle several client requests at the same time, multithreading is required, mainly because the three main calls involved, socket.accept(), socket.read() and socket.write(), all block synchronously. In other words, after accepting a connection the server creates a new thread for that client. When processing is done, the thread returns the response to the client through the output stream and is then destroyed. This is the typical request-response communication model.

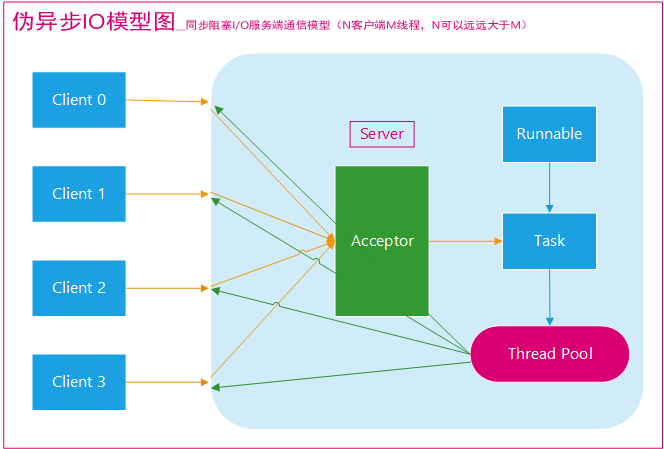

To avoid creating threads unnecessarily, this can be improved with a thread pool. A FixedThreadPool caps the maximum number of threads and so keeps the system's limited resources under control. The result is a pseudo-asynchronous I/O model with N client requests served by M worker threads, where N can be much greater than M. It is described in detail in the section "pseudo asynchronous IO" below.

What happens to the thread-per-connection model when the number of concurrent client requests grows?

In the Java virtual machine a thread is a valuable resource: creating and destroying threads is expensive, and so is switching between them. On operating systems such as Linux a thread is essentially a lightweight process, and creating or destroying one involves heavyweight system calls. As concurrent traffic increases, the number of threads grows sharply, which can lead to stack overflows or failures to create new threads, and eventually to the process crashing or hanging and being unable to serve requests.

Working mechanism of Java BIO:

- The server starts a ServerSocket.

- The client starts a Socket to communicate with the server. By default, the server needs one thread per client.

- After sending a request, the client first checks whether a server thread responds. If not, it waits or is rejected.

- If there is a response, the client thread waits for the request to finish and then continues.

    import java.io.IOException;
    import java.io.InputStream;
    import java.io.OutputStream;
    import java.net.InetSocketAddress;
    import java.net.ServerSocket;
    import java.net.Socket;

    public class BIOServer {
        public static void main(String[] args) throws IOException {
            // try/catch/finally is omitted here for brevity
            ServerSocket serverSocket = new ServerSocket();
            // Bind to port 9876; the IP defaults to the local wildcard address
            serverSocket.bind(new InetSocketAddress(9876));
            // Alternative: serverSocket = new ServerSocket(port);
            while (true) {
                System.out.println("--Waiting for connection--");
                // Blocks (the thread gives up the CPU) until a client has connected
                // and the three-way handshake is complete
                Socket socket = serverSocket.accept();
                System.out.println("--Connection successful--");
                InputStream in = socket.getInputStream();
                OutputStream out = socket.getOutputStream();
                byte[] buffer = new byte[1024];
                int length;
                int total = 0;
                System.out.println("--Start reading data--");
                // read() blocks too; it returns -1 once the client closes the connection
                while ((length = in.read(buffer)) > 0) {
                    total += length;
                    System.out.println("input is:" + new String(buffer, 0, length));
                    out.write("success".getBytes());
                }
                System.out.println("--Data reading completed:" + total + " bytes--");
                // Should be in a finally block in real code
                socket.close();
            }
        }
    }


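    import java.io.IOException;
    import java.net.InetSocketAddress;
    import java.net.Socket;
    import java.util.Scanner;

    public class Client {
        public static void main(String[] args) throws IOException {
            Socket socket = new Socket();
            // Connect to the BIOServer listening on local port 9876
            socket.connect(new InetSocketAddress("127.0.0.1", 9876));
            System.out.println("--Please enter the content--");
            Scanner scanner = new Scanner(System.in);
            while (true) {
                // next() blocks until a whitespace-delimited token has been typed
                String next = scanner.next();
                socket.getOutputStream().write(next.getBytes());
            }
        }
    }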


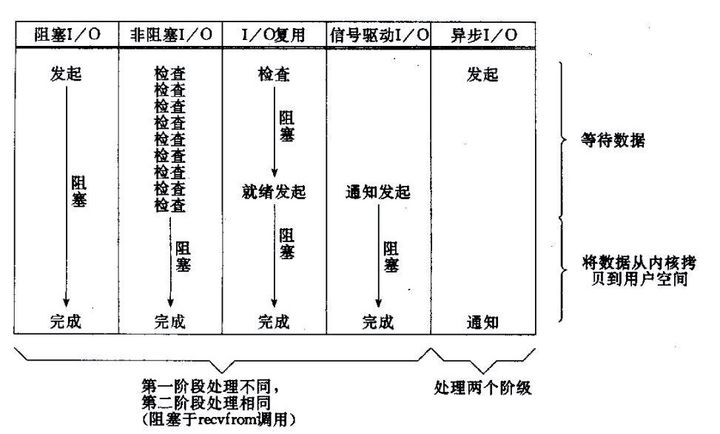

Blocking and non-blocking describe how a process behaves, depending on whether the I/O operation is ready, when it accesses data. Put simply, they are two ways of implementing the read and write methods: in blocking mode the read or write call waits until it can complete, while in non-blocking mode it returns a status value immediately.

Take a bank withdrawal as an example:

Blocking: you queue at the ATM and can do nothing but wait (with blocking IO, the Java call blocks until the read or write has completed);

Non-blocking: you withdraw money at the counter. You take a number and sit down to do other things until the announcement calls your number; you cannot go to the counter before then. You can also keep asking the lobby manager whether it is your turn, and if it is not, the manager tells you straight away to keep waiting (with non-blocking IO, a Java call returns immediately if it cannot read or write yet. When the I/O event dispatcher signals that the channel is readable or writable, you continue, and you repeat this until the read or write is complete).
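The "keep asking the lobby manager" behaviour can be seen with a non-blocking `SocketChannel` from java.nio. The sketch below is illustrative only: `NonBlockingReadSketch` and `pollUntilReceived` are names invented here, both ends of the connection live in one process on the loopback interface, and the 1 ms sleep merely stands in for "doing other things".

```java
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch: a non-blocking read returns immediately, with or without data.
class NonBlockingReadSketch {
    // Returns {result of the first read, bytes eventually received, empty polls in between}.
    static int[] pollUntilReceived(String message) throws Exception {
        byte[] payload = message.getBytes(StandardCharsets.US_ASCII);
        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            try (SocketChannel client = SocketChannel.open(server.getLocalAddress());
                 SocketChannel accepted = server.accept()) {
                accepted.configureBlocking(false);
                ByteBuffer buffer = ByteBuffer.allocate(payload.length);

                // Nothing has been sent yet: the read returns 0 instead of waiting.
                int first = accepted.read(buffer);

                client.write(ByteBuffer.wrap(payload));
                int emptyPolls = 0;
                long deadline = System.nanoTime() + 5_000_000_000L; // give up after 5 s
                while (buffer.hasRemaining() && System.nanoTime() < deadline) {
                    if (accepted.read(buffer) == 0) {
                        emptyPolls++;    // "not your turn yet": free to do other work
                        Thread.sleep(1);
                    }
                }
                return new int[] {first, buffer.position(), emptyPolls};
            }
        }
    }

    public static void main(String[] args) throws Exception {
        int[] r = pollUntilReceived("hello");
        System.out.println("first read=" + r[0] + ", received=" + r[1] + " bytes");
    }
}
```

The first read always returns 0 because no data has been written at that point; how many empty polls happen afterwards depends on timing, which is exactly why busy polling is usually replaced by a selector.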

1.2 pseudo asynchronous IO (multithreaded BIO)

To solve the problem that every connection in synchronous blocking I/O needs its own thread, the threading model was later optimized: the back end serves the requests of many clients through a thread pool, giving a ratio of M clients to at most N pool threads, where M can be much greater than N. The pool allocates thread resources flexibly, and capping the number of threads prevents massive concurrent access from exhausting them.

A pseudo-asynchronous I/O communication framework can be built from a thread pool and a task queue; its model is shown in the figure above. When a new client connects, its Socket is wrapped in a Task (which implements the java.lang.Runnable interface) and submitted to the back-end thread pool. The JDK thread pool maintains a queue and N active threads that process the queued tasks. Because both the queue size and the maximum number of threads can be configured, resource usage stays bounded, so even a large number of concurrent clients does not exhaust resources and bring the server down.

Because the pseudo-asynchronous framework uses a thread pool, it avoids the thread exhaustion caused by creating a separate thread for every request. Underneath, however, it is still the synchronous blocking BIO model, so it does not solve the problem at its root. When the number of active connections is modest (fewer than 1,000 on a single machine), the model works well: each connection can concentrate on its own I/O, and the programming model is simple, with little need to worry about overload or rate limiting. The thread pool also acts as a natural funnel that buffers connections or requests the system cannot handle right away. Faced with 100,000 or even millions of connections, however, the traditional BIO model is powerless, and a more efficient I/O model is needed to handle that level of concurrency.
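To make the "bounded queue plus at most N threads" idea concrete, here is a small sketch with `ThreadPoolExecutor`. The class name `BoundedPoolSketch`, the pool and queue sizes, and the use of a latch in place of a real blocking socket read are all assumptions chosen for illustration.

```java
import java.util.Set;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch: M simulated clients served by at most N pool threads.
class BoundedPoolSketch {
    // Returns {distinct worker threads used, tasks rejected because pool and queue were full}.
    static int[] run(int clients, int threads, int queueSize) throws InterruptedException {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                threads, threads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(queueSize),
                new ThreadPoolExecutor.AbortPolicy());
        Set<String> workers = ConcurrentHashMap.newKeySet();
        CountDownLatch release = new CountDownLatch(1);
        int rejected = 0;
        for (int i = 0; i < clients; i++) {
            try {
                pool.execute(() -> {
                    workers.add(Thread.currentThread().getName());
                    try {
                        release.await(); // stands in for a blocking read on a socket
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            } catch (RejectedExecutionException e) {
                rejected++; // pool and queue are full: shed load instead of adding threads
            }
        }
        release.countDown();
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return new int[] {workers.size(), rejected};
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = run(10, 2, 4);
        System.out.println("worker threads=" + r[0] + ", rejected=" + r[1]);
    }
}
```

With 10 clients, 2 threads and a queue of 4, two tasks run, four wait in the queue and four are rejected, so the program prints `worker threads=2, rejected=4`. The thread count never exceeds 2, but every worker is still stuck in a blocking call, which is the underlying BIO limitation the text describes.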

Each thread manages one connection

    import java.io.IOException;
    import java.io.InputStream;
    import java.net.ServerSocket;
    import java.net.Socket;

    // Multithreaded BIO server
    public class IOServer {
        public static void main(String[] args) throws IOException {
            // The server socket that receives client connection requests
            ServerSocket serverSocket = new ServerSocket(3333);
            /*
            ServerSocket serverSocket = new ServerSocket();
            serverSocket.bind(new InetSocketAddress(9876));
            */

            // Acceptor thread: waits for connections and gives each one its own thread
            new Thread(() -> {
                while (true) {
                    try {
                        // accept() blocks until a new connection arrives
                        Socket socket = serverSocket.accept();

                        // Each new connection gets a thread that reads its data
                        new Thread(() -> {
                            try {
                                int len;
                                byte[] data = new byte[1024];
                                InputStream inputStream = socket.getInputStream();
                                // Read the byte stream until the client closes the connection (-1)
                                while ((len = inputStream.read(data)) != -1) {
                                    System.out.println(new String(data, 0, len));
                                }
                            } catch (IOException e) {
                                e.printStackTrace();
                            }
                        }).start();

                        /* Alternatively, submit the work to a thread pool:
                        newCachedThreadPool.execute(new Runnable() {
                            @Override
                            public void run() {
                                // Business processing goes in the handler
                                myHandler(socket);
                            }
                        });
                        */
                    } catch (IOException e) {
                        e.printStackTrace();
                    }
                }
            }).start();
        }
    }


Alternatively, use a thread pool on the server. The requirements:

1) Write a server with the BIO model that listens on port 6666 and, when a client connects, starts a thread to communicate with it.

2) Improve it with a thread pool so that multiple clients can connect.

3) The server must be able to receive data sent by a client (telnet is enough for testing).

    import java.io.InputStream;
    import java.net.ServerSocket;
    import java.net.Socket;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    // Use a thread pool to ease the BIO thread-per-connection problem
    public class BIOServer {
        public static void main(String[] args) throws Exception {
            // Approach:
            // 1. Create a thread pool
            // 2. For each client connection, let a pool thread talk to it (see handler)
            ExecutorService newCachedThreadPool = Executors.newCachedThreadPool();

            // Create the ServerSocket
            ServerSocket serverSocket = new ServerSocket(6666);
            System.out.println("The server started");

            while (true) {
                System.out.println(" Thread information id =" + Thread.currentThread().getId()
                        + " name =" + Thread.currentThread().getName());
                // Listen and wait for a client to connect (blocks)
                System.out.println("Waiting for connection....");
                final Socket socket = serverSocket.accept();
                System.out.println("Connected to a client");

                // Let a pool thread communicate with this client
                newCachedThreadPool.execute(new Runnable() {
                    public void run() {
                        handler(socket);
                    }
                });
            }
        }

        // Communicates with one client until it disconnects
        public static void handler(Socket socket) {
            try {
                System.out.println(" Thread information id =" + Thread.currentThread().getId()
                        + " name =" + Thread.currentThread().getName());
                byte[] bytes = new byte[1024];
                // Get the input stream from the socket
                InputStream inputStream = socket.getInputStream();
                // Keep reading the data sent by the client
                while (true) {
                    System.out.println(" Thread information id =" + Thread.currentThread().getId()
                            + " name =" + Thread.currentThread().getName());
                    System.out.println("read....");
                    int read = inputStream.read(bytes); // blocks until data arrives
                    if (read != -1) {
                        // Print the data sent by the client
                        System.out.println(new String(bytes, 0, read));
                    } else {
                        break; // -1 means the client closed the connection
                    }
                }
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                System.out.println("Closing the connection to the client");
                try {
                    socket.close();
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        }
    }


    import java.io.IOException;
    import java.net.Socket;
    import java.util.Date;

    // Client
    public class IOClient {
        public static void main(String[] args) {
            // TODO: create multiple threads to simulate multiple clients connecting to the server
            new Thread(() -> {
                try {
                    Socket socket = new Socket("127.0.0.1", 3333);
                    while (true) {
                        try {
                            // Send a timestamped message every two seconds
                            socket.getOutputStream().write((new Date() + ": hello world").getBytes());
                            Thread.sleep(2000);
                        } catch (Exception e) {
                            e.printStackTrace();
                        }
                    }
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }).start();
        }
    }


BIO problems

Java BIO problem analysis

- Each request needs its own thread to read, process and write data for the corresponding client.

- With many concurrent connections, a large number of threads has to be created, which consumes a large amount of system resources.

- After a connection is established, if no data is currently readable, the thread blocks in the read operation, which wastes thread resources.

Communication process of BIO

- Suppose you are at home playing a game and have just ordered takeout. The "eating" task is in the sleeping (blocked) state and the "gaming" task is running.

- When the takeout arrives, that is the interrupt request (IRQ). You run the interrupt handler: save the game (the switch from user mode to kernel mode), collect the takeout, and move "eating" to the ready state.

- Once you have the food you can either keep playing or eat. Both tasks are ready.

Interrupt and system call

For zero copy, DMA, interrupt and other contents, please refer to:

https://blog.csdn.net/hancoder/article/details/112149121

Programmable interrupt controller:

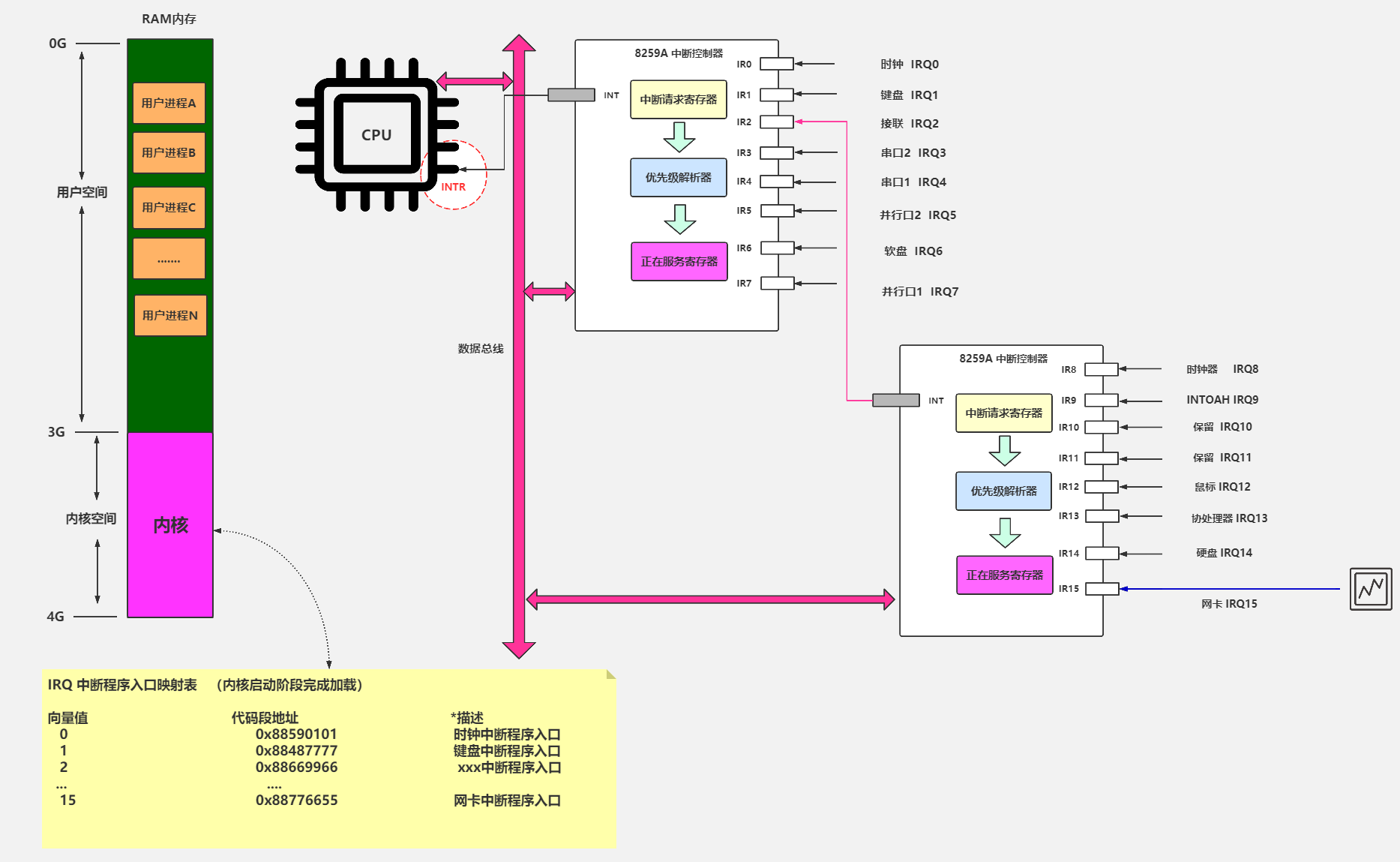

User space and kernel space are shown below.

INTR is the interrupt pin. The interrupt controller has 8 IR (interrupt request) inputs, and cascading controllers in two levels gives up to 64. The interrupt request register stores pending interrupt signals, such as a key press. The priority resolver decides which pending interrupt has the highest priority. The in-service register records the interrupt being handled and is cleared once handling finishes; only then can the next request be placed in it and handed to the CPU.

Programmable interrupt controller:

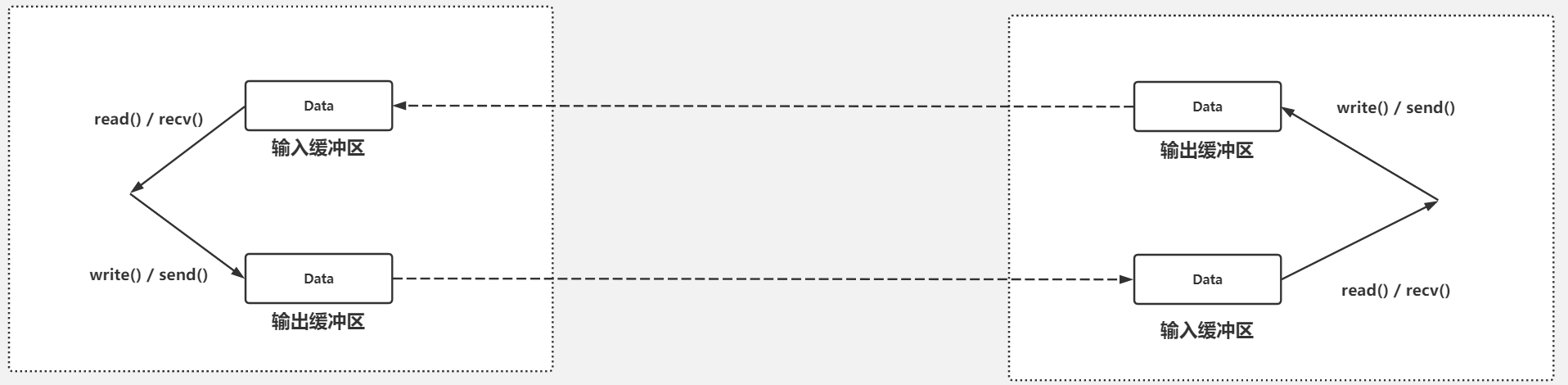

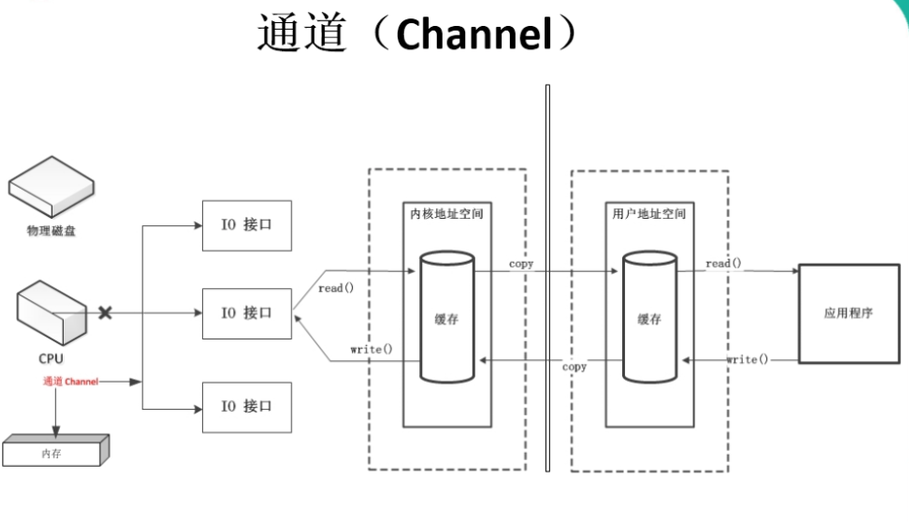

Socket buffers: each socket has two buffers, an input buffer and an output buffer.

- Write operations map to the kernel's write and send calls. The data is first copied from the user-space buffer to the kernel-space buffer, and TCP/IP then packages it into segments and sends it.

- Read operations map to the kernel's read and recv calls. Incoming data is first placed in the kernel-space buffer and then copied from there to the user-space buffer.

After the application has written its data and closed the socket, the kernel keeps sending what remains in the output buffer; TCP/IP ensures that this data is not lost. Data left unread in the input buffer, however, is discarded.
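The sizes of these two kernel buffers can be inspected from Java through the standard `SO_SNDBUF` and `SO_RCVBUF` socket options. The following is a small sketch; the class name `SocketBufferSketch` is invented for this example and the actual numbers depend on the operating system and its configuration.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

// Hypothetical sketch: query the kernel send/receive buffer sizes of a connected socket.
class SocketBufferSketch {
    // Returns {send buffer size, receive buffer size} in bytes.
    static int[] bufferSizes() throws IOException {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        try (ServerSocket listener = new ServerSocket(0, 50, loopback);
             Socket client = new Socket(loopback, listener.getLocalPort());
             Socket accepted = listener.accept()) {
            // getSendBufferSize() reads SO_SNDBUF, getReceiveBufferSize() reads SO_RCVBUF.
            return new int[] {client.getSendBufferSize(), client.getReceiveBufferSize()};
        }
    }

    public static void main(String[] args) throws IOException {
        int[] sizes = bufferSizes();
        System.out.println("output buffer=" + sizes[0] + " bytes, input buffer=" + sizes[1] + " bytes");
    }
}
```

`setSendBufferSize` and `setReceiveBufferSize` can request different sizes, although the operating system treats the values as hints.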

socket buffer:

Difference between BIO and NIO:

- Bio write: when bio outputs, the available space of the output buffer will be checked. If the available size is not enough, it will be blocked until the available space is enough. For example, 2mb will be written, but the kernel space is only 1mb. It will be blocked until TCP sends the data in the kernel space and 2mb will be put in after making space. If TCP is writing, it will lock the kernel buffer. For example, if you want to send 10mb, but the maximum kernel space is only 5mb, you will send 5mb first and then suspend the process. After TCP sends the last 5mb, wake up the process and send the remaining 5mb

- bio read: it will map to the kernel's read and recv system calls, check whether the kernel buffer has data, read data, or block the current calling process, until the data is input to the input buffer, the program will interrupt the process. If the read cache is not as large as the input buffer, it is read multiple times. If the buffer size is small, it can be read multiple times.

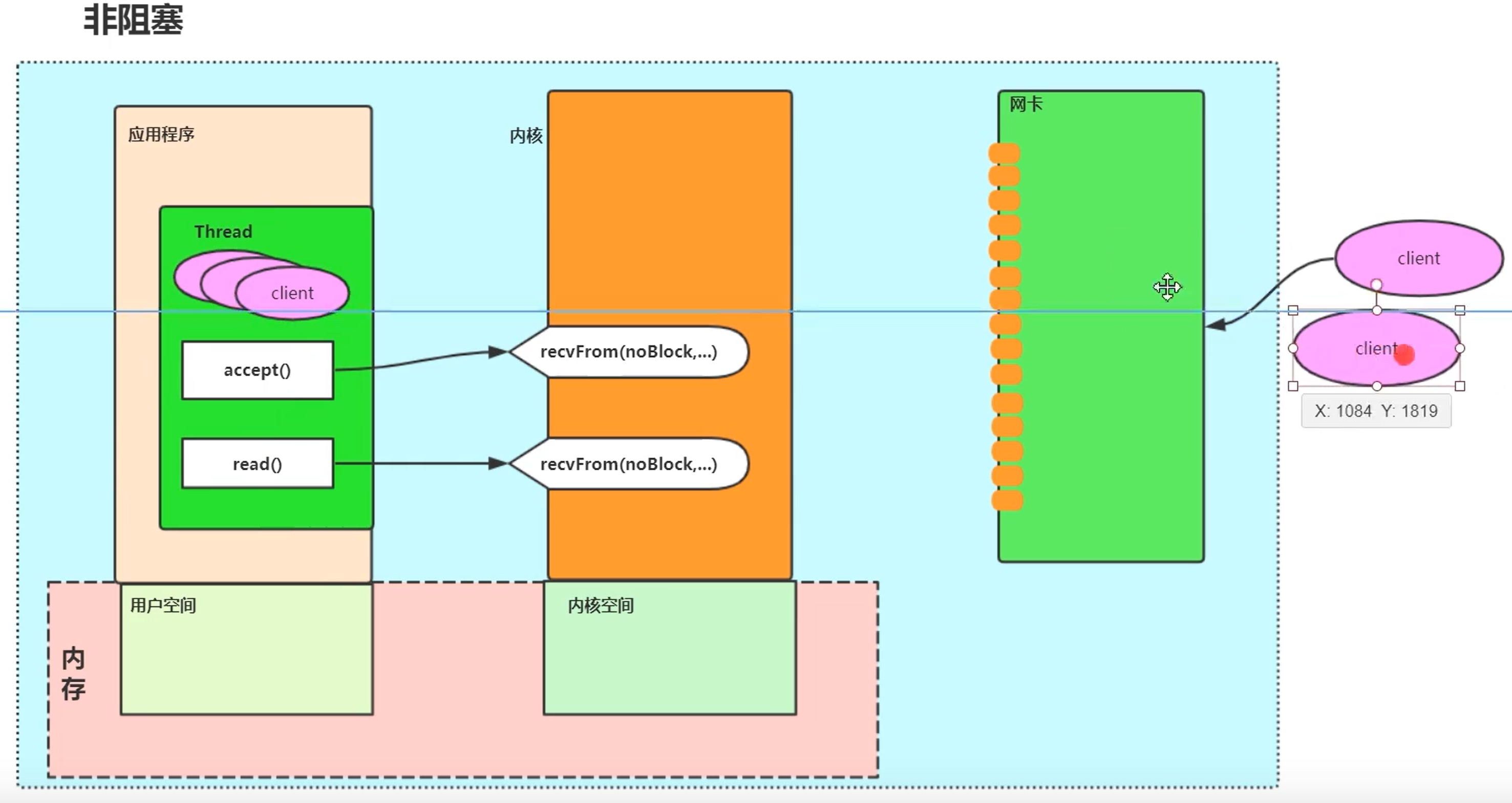

- NIO:

- nio write: it will also copy from user space to kernel space, but at this time, it will check whether the size of the kernel output buffer is enough. If not, copy as much as possible. For example, if 2mb is to be written and 1mb is available, write 1mb first, and then return to the user state to tell how much is written. If the kernel free space is 0, it will also return - 1 immediately (BIO will wait), you can choose to wait for a while or retry immediately.

- nio read: it will also be mapped to the read and recv system calls of the kernel to check whether there is data in the kernel buffer. If there is data, it will be returned. If there is no data, it will not be blocked.

- Multiplexing:

- Problem Description: to solve the 1-to-1 problem between the client and server processes above, we want one process on the server to monitor multiple processes on the client. For example, the client keeps sending the input buffer to the server. The server load is very high, and the cpu is not idle, so the server process can't grab the cpu resources all the time. If the traffic control congestion control is always full, the retransmission time will be extended. If the client is BIO, the client will also be blocked. If the client is NIO, it will return - 1

- Solution: one process listens to multiple sockets. select/epoll is to solve this problem. The selector tells the kernel the selection information, that is, which sockets to listen on. The state of being concerned. When returning, it will tell the number of sockets ready and which descriptors are ready. You can click the read-write function, and the patient will not be called,
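In Java the "one process listens to multiple sockets" solution is exposed through `java.nio.channels.Selector`. The loop below is a minimal sketch under several assumptions: `SelectorLoopSketch` and `serve` are hypothetical names, the clients are simulated inside the same process, each client sends one short ASCII message and then closes, and the number of clients stays below the default accept backlog.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Hypothetical sketch: a single thread serves several connections through one Selector.
class SelectorLoopSketch {
    // Returns the complete message received from each simulated client.
    static List<String> serve(int clientCount) throws IOException {
        List<String> received = new ArrayList<>();
        try (Selector selector = Selector.open();
             ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            server.configureBlocking(false);
            server.register(selector, SelectionKey.OP_ACCEPT); // state we care about: new connections

            // Simulated clients: connect, send one message, close.
            for (int i = 0; i < clientCount; i++) {
                try (SocketChannel client = SocketChannel.open(server.getLocalAddress())) {
                    client.write(ByteBuffer.wrap(("client-" + i).getBytes(StandardCharsets.US_ASCII)));
                }
            }

            ByteBuffer buffer = ByteBuffer.allocate(256);
            while (received.size() < clientCount) {
                selector.select(); // blocks until at least one registered channel is ready
                Iterator<SelectionKey> keys = selector.selectedKeys().iterator();
                while (keys.hasNext()) {
                    SelectionKey key = keys.next();
                    keys.remove();
                    if (key.isAcceptable()) {
                        SocketChannel channel = server.accept(); // will not block: key was ready
                        if (channel != null) {
                            channel.configureBlocking(false);
                            channel.register(selector, SelectionKey.OP_READ, new StringBuilder());
                        }
                    } else if (key.isReadable()) {
                        SocketChannel channel = (SocketChannel) key.channel();
                        StringBuilder text = (StringBuilder) key.attachment();
                        buffer.clear();
                        int n = channel.read(buffer); // will not block: key was ready
                        if (n > 0) {
                            text.append(new String(buffer.array(), 0, n, StandardCharsets.US_ASCII));
                        } else if (n < 0) { // peer closed: the message is complete
                            received.add(text.toString());
                            channel.close(); // closing the channel also cancels its key
                        }
                    }
                }
            }
        }
        return received;
    }

    public static void main(String[] args) throws IOException {
        System.out.println(serve(3));
    }
}
```

The order in which the messages are collected may vary between runs, since it depends on which channels the selector reports as ready first.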

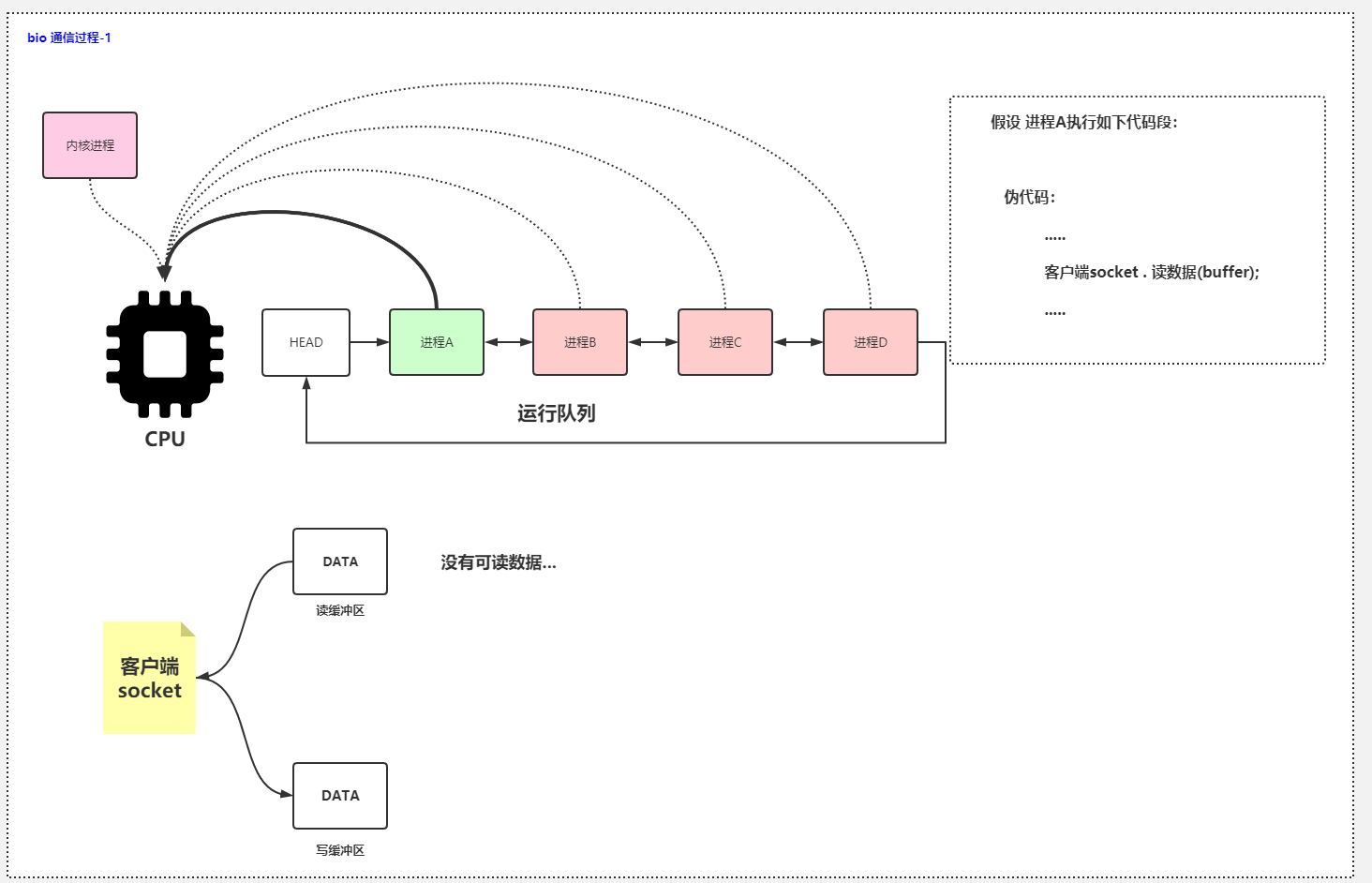

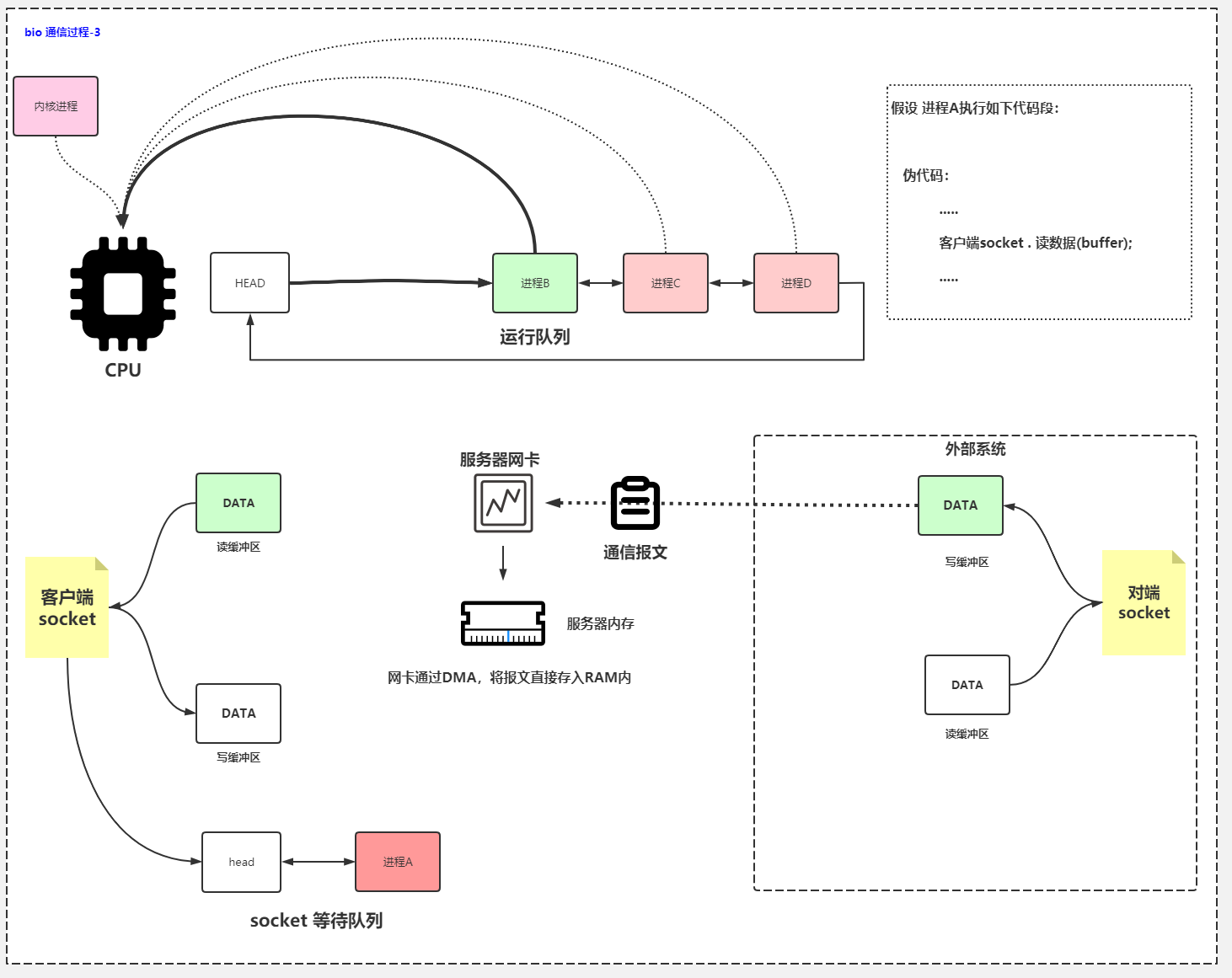

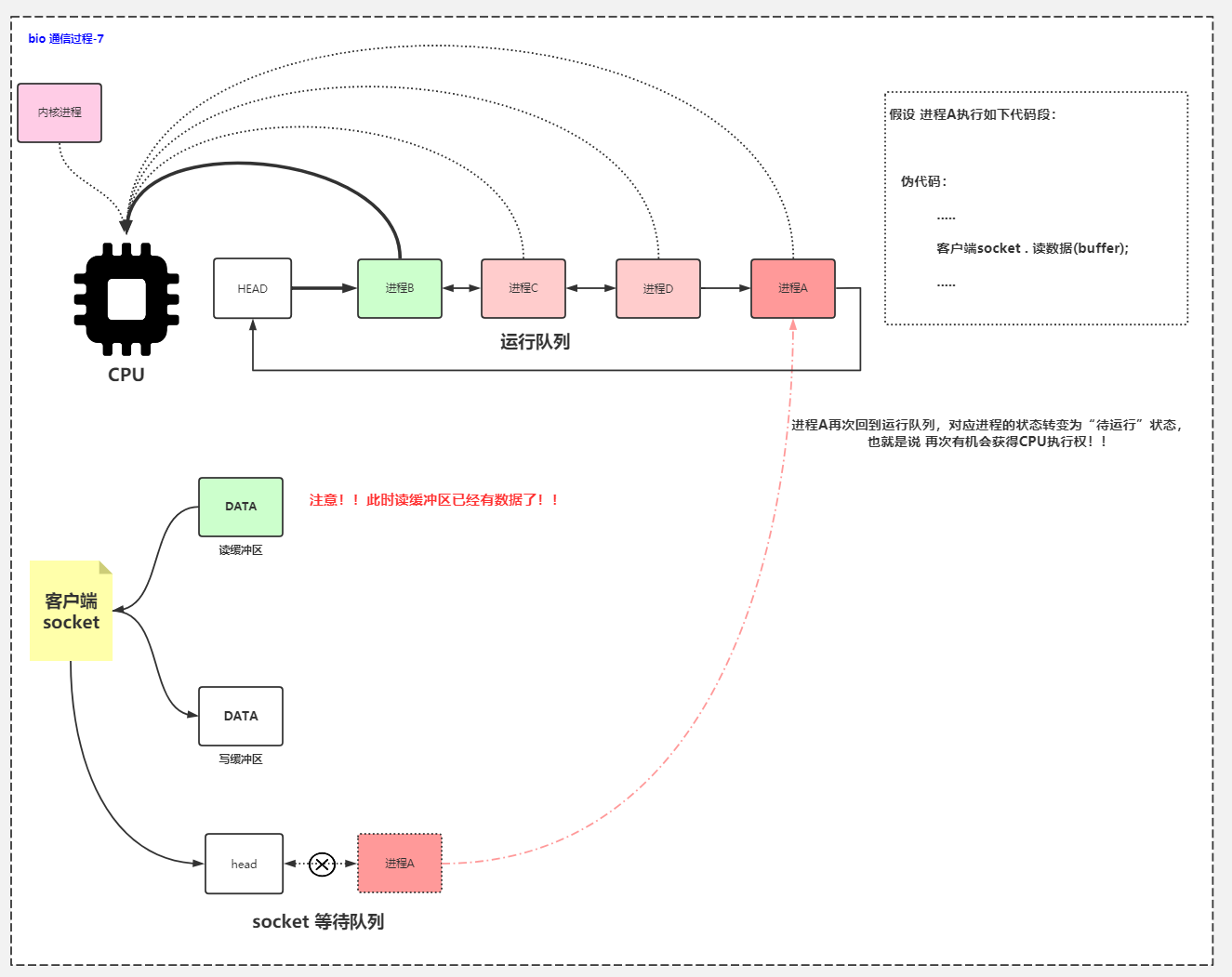

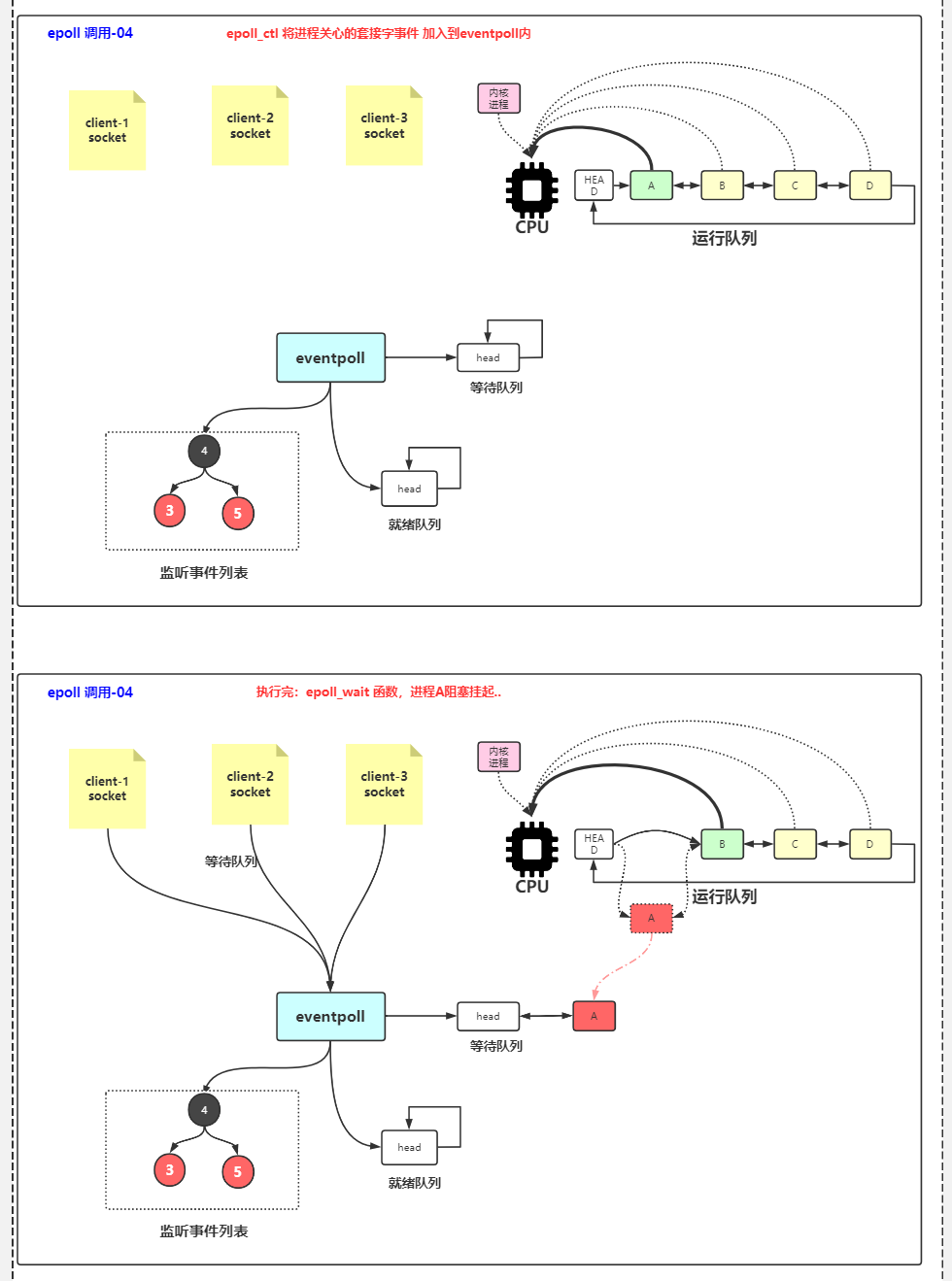

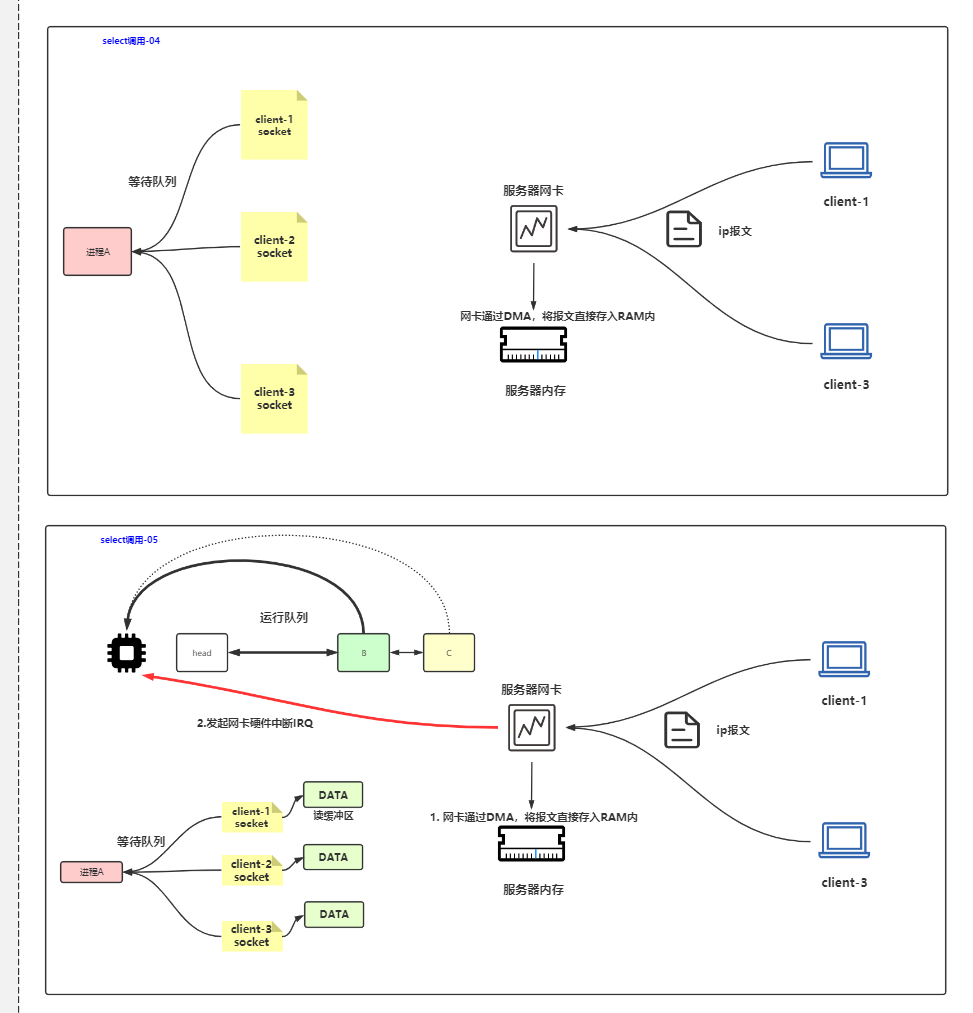

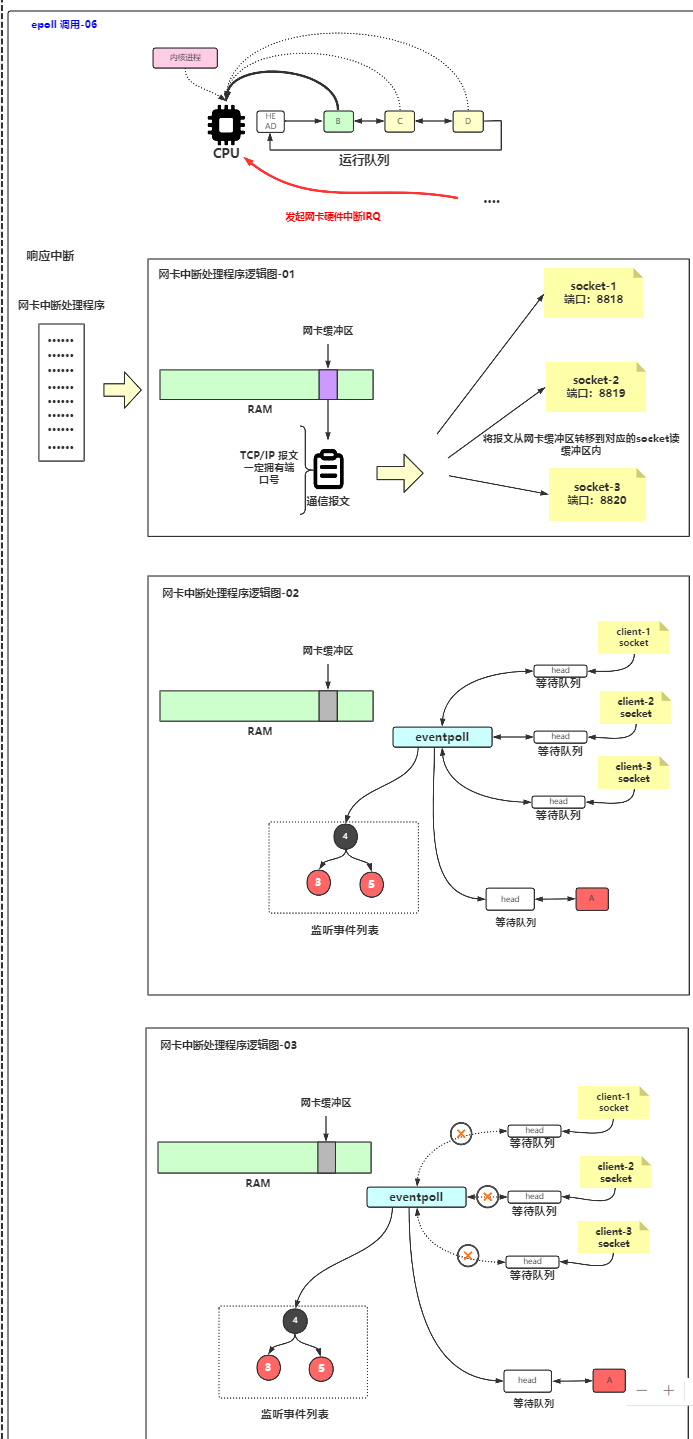

1 BIO read data

The CPU has a run queue (ready queue) from which it picks a process to run. Suppose process A runs and calls socket.read() to read data. If there is no readable data, A blocks.

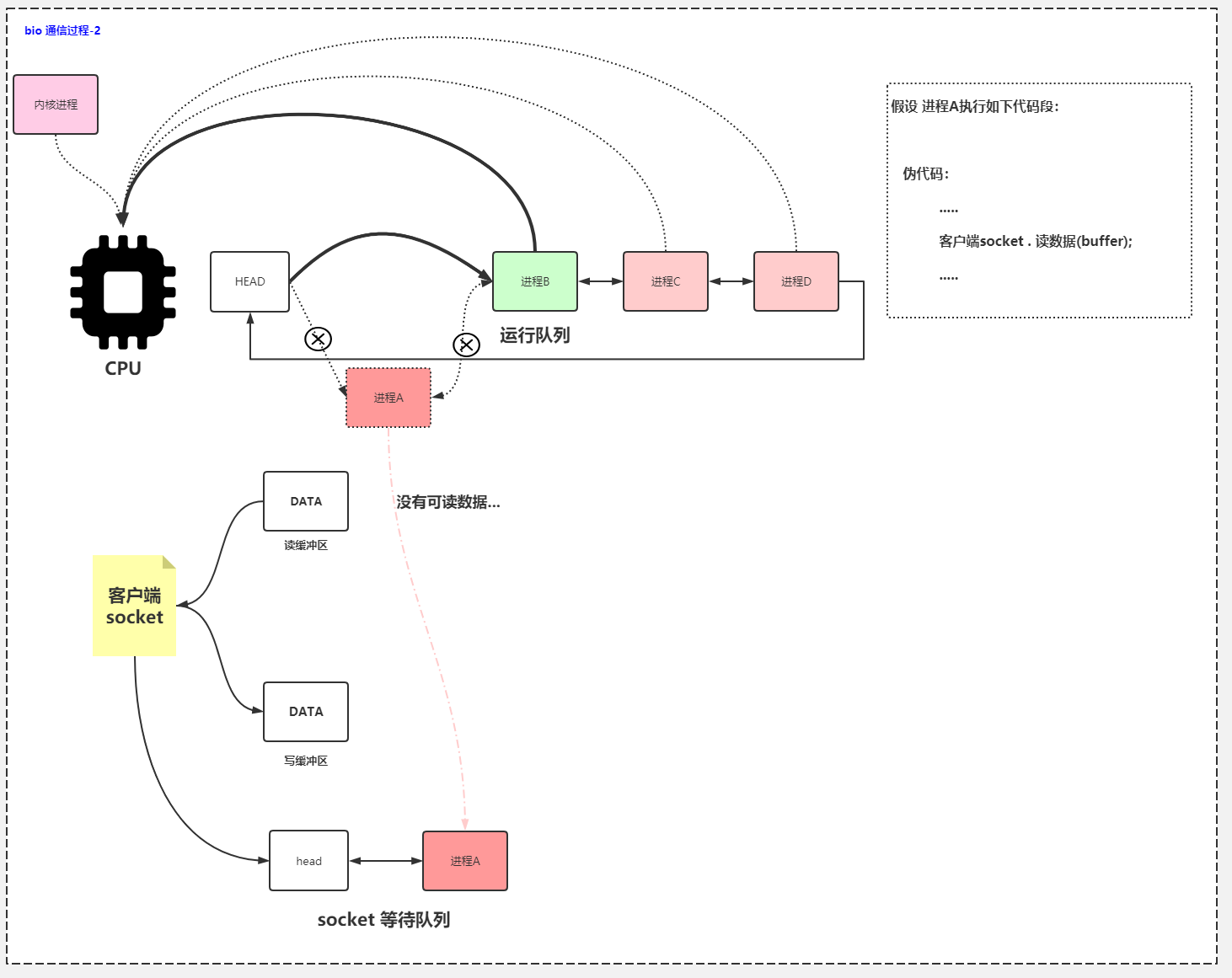

2 Blocking when there is no data

Once blocked, process A is removed from the run queue and moved to the wait queue (blocked queue), and processes B, C and D get to run.

3 Data arrives

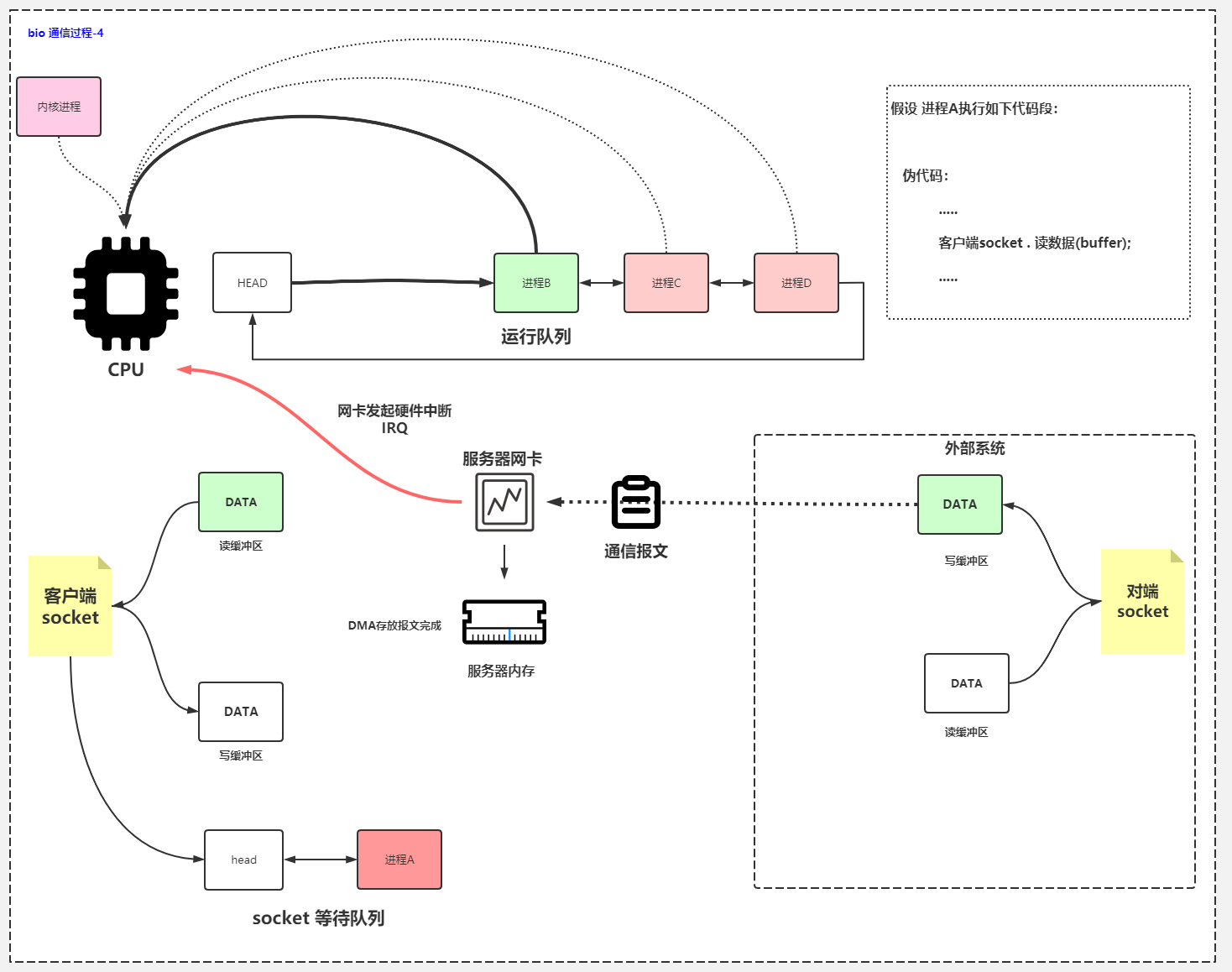

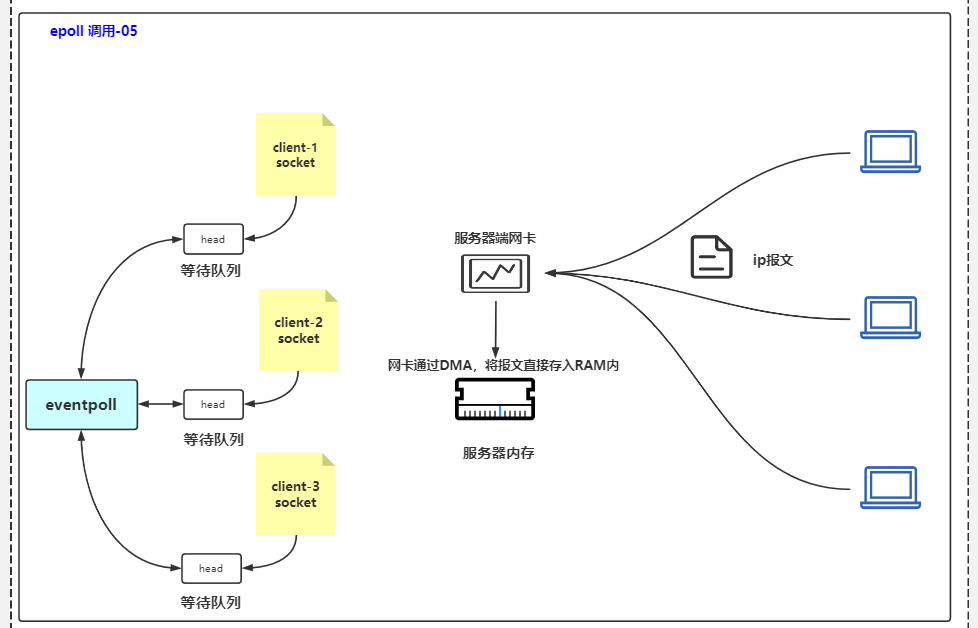

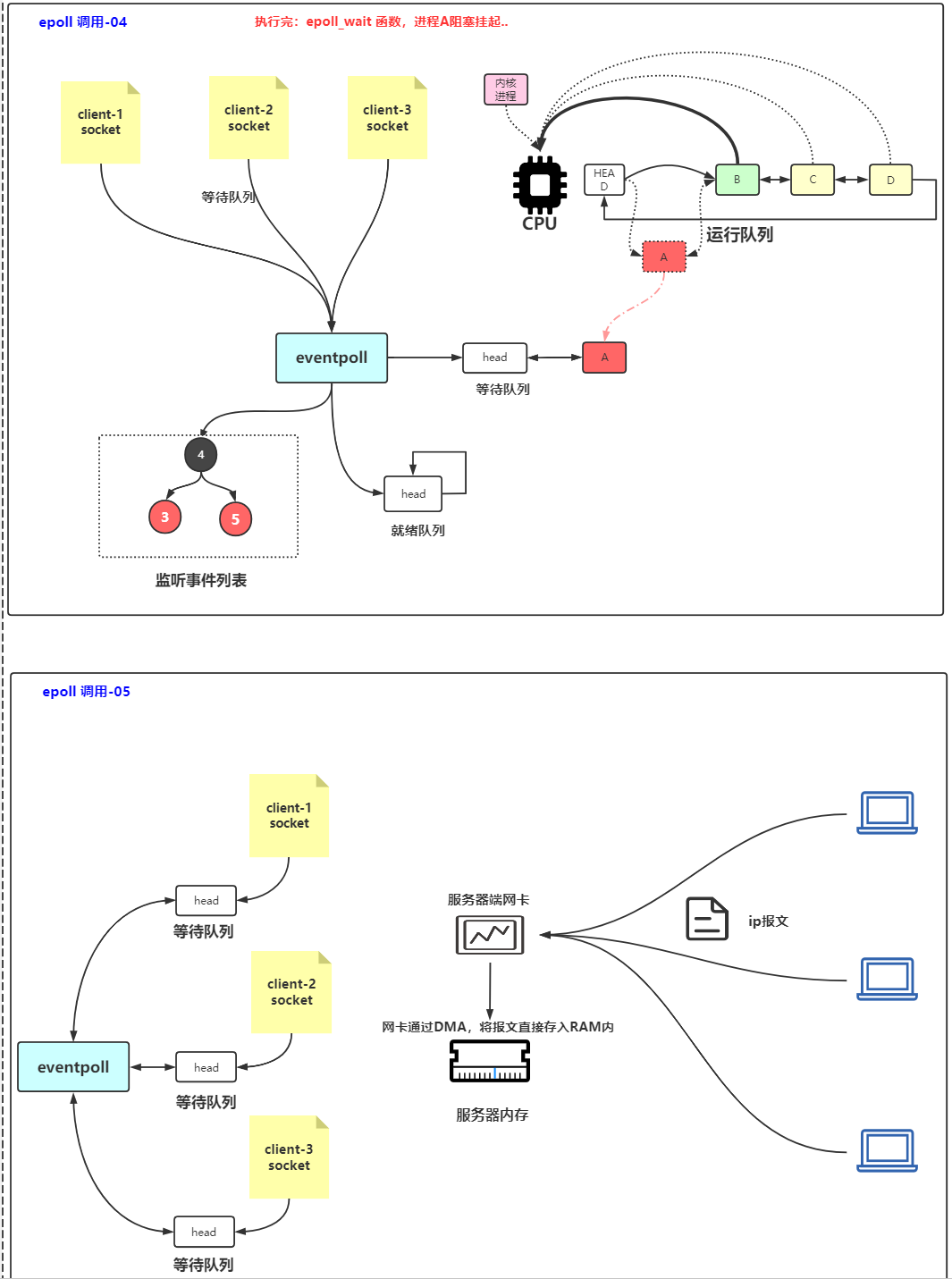

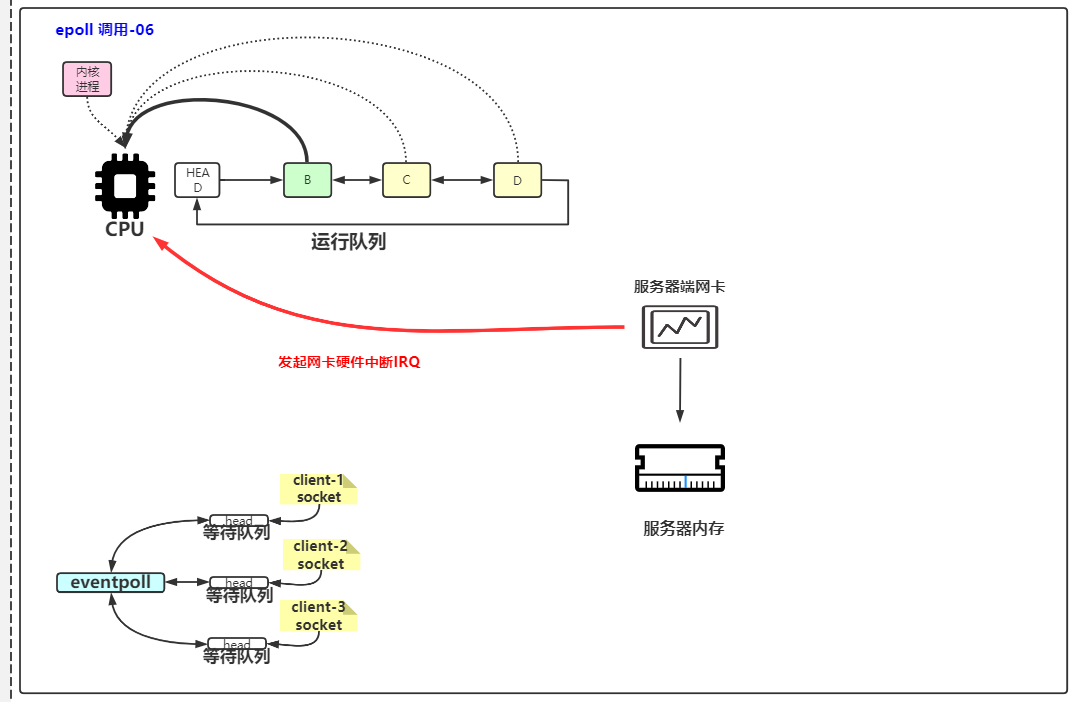

The client sends data. When the packet reaches the server's network card, the card writes it directly into a kernel buffer via DMA and then raises a hardware interrupt.

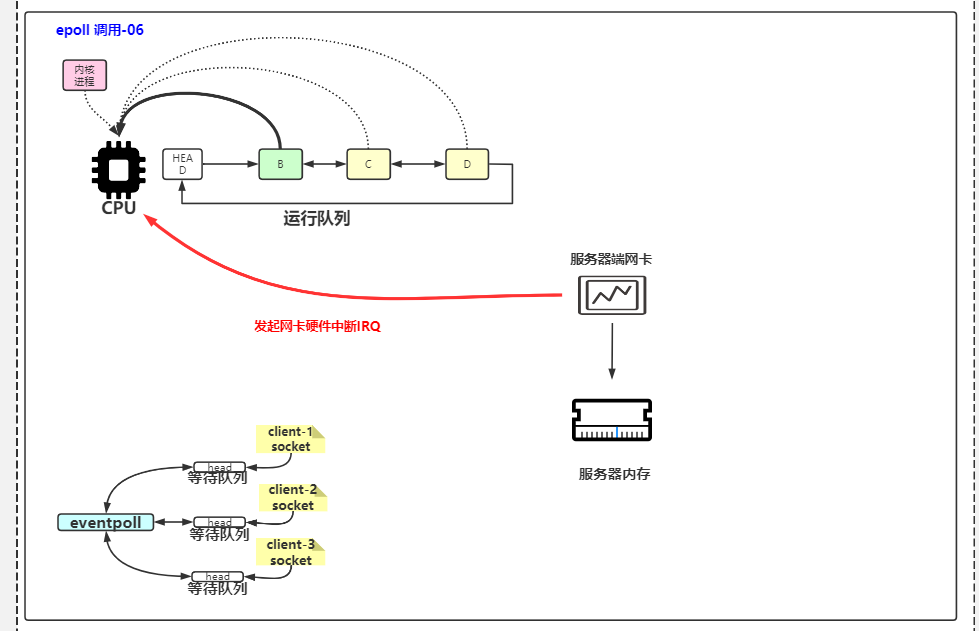

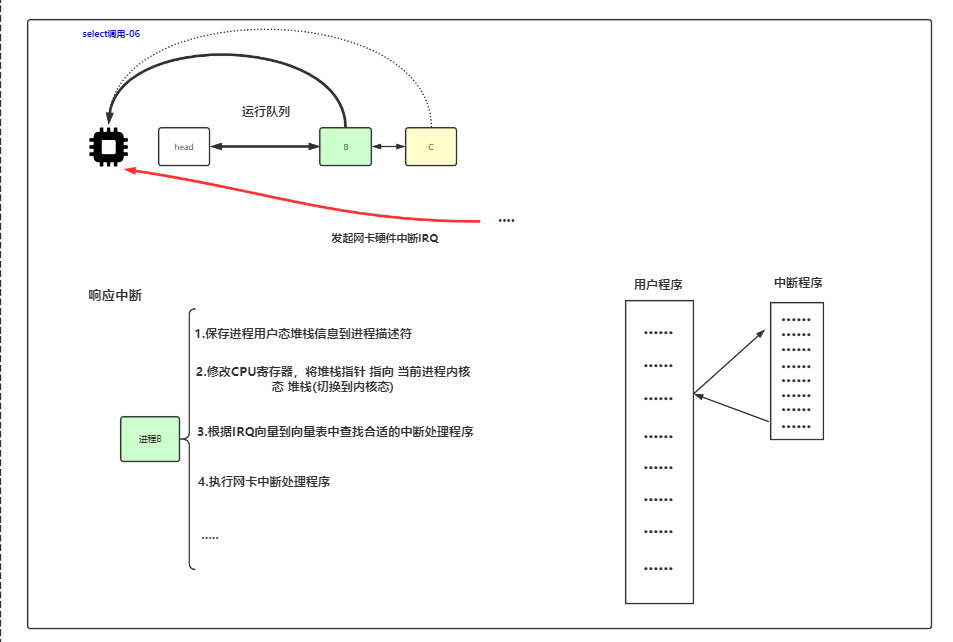

4. The network card initiates a hardware interrupt

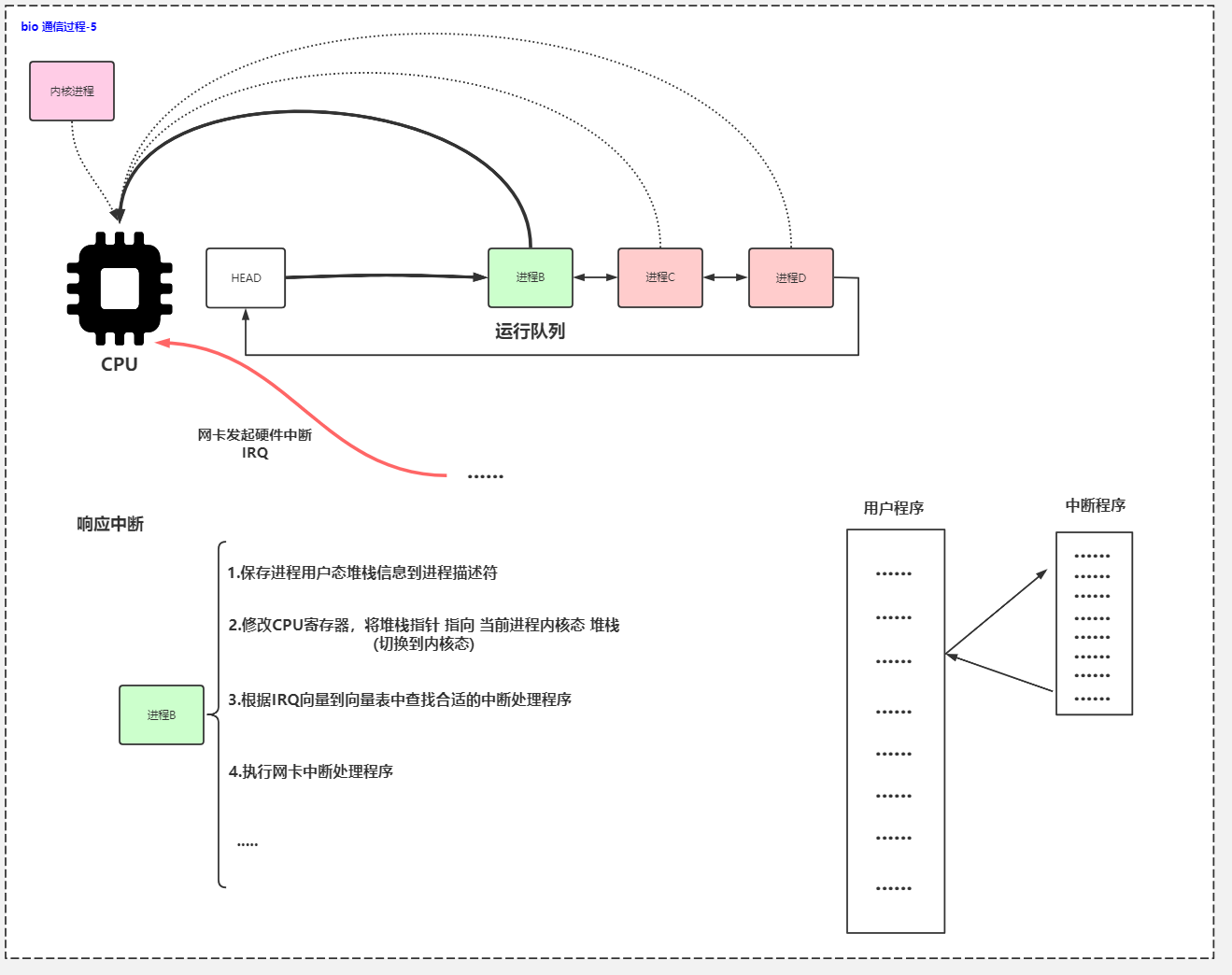

The network card initiates a hardware interrupt to let the cpu enter the kernel state

5 Respond to the interrupt, save state and switch mode

The CPU has to enter kernel mode, so the currently executing process B is suspended. This involves the following steps:

- Save the user-mode stack information to the process descriptor

- Modify the CPU registers so that the stack pointer points to the kernel-mode stack of the current process

- Switch to kernel mode

- Look up the matching interrupt handler in the interrupt vector table according to the IRQ

- Jump to the network card's interrupt handler

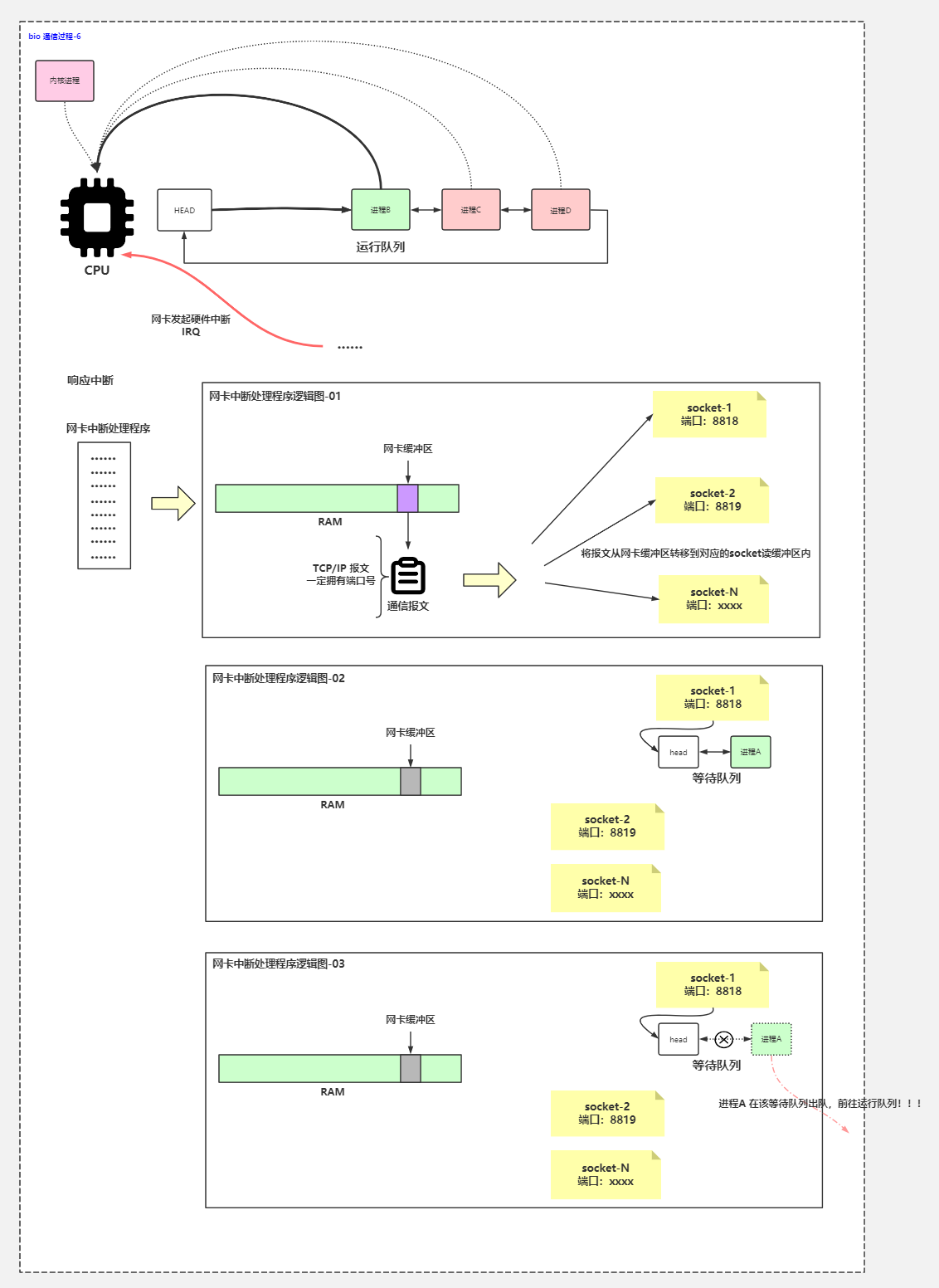

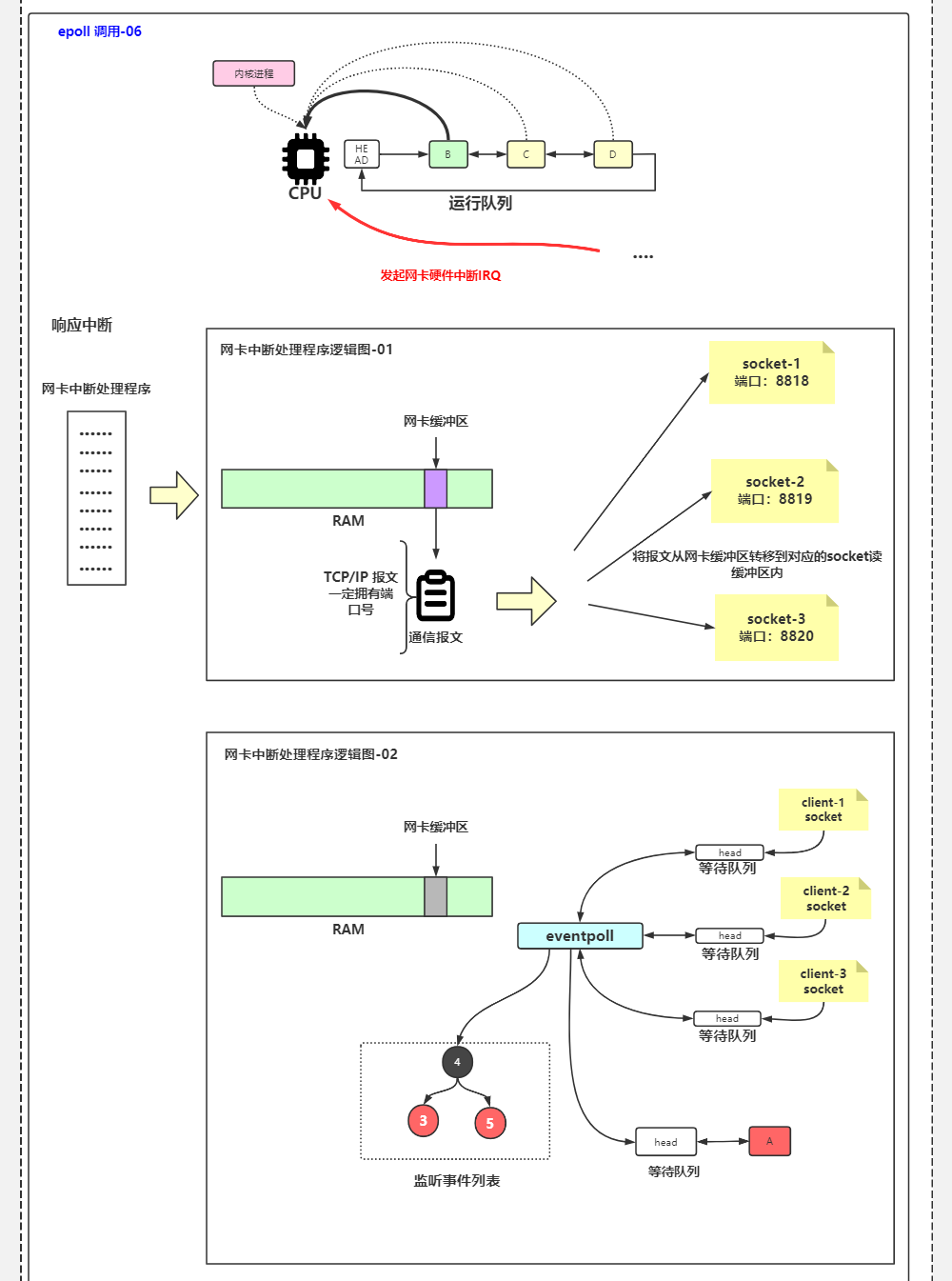

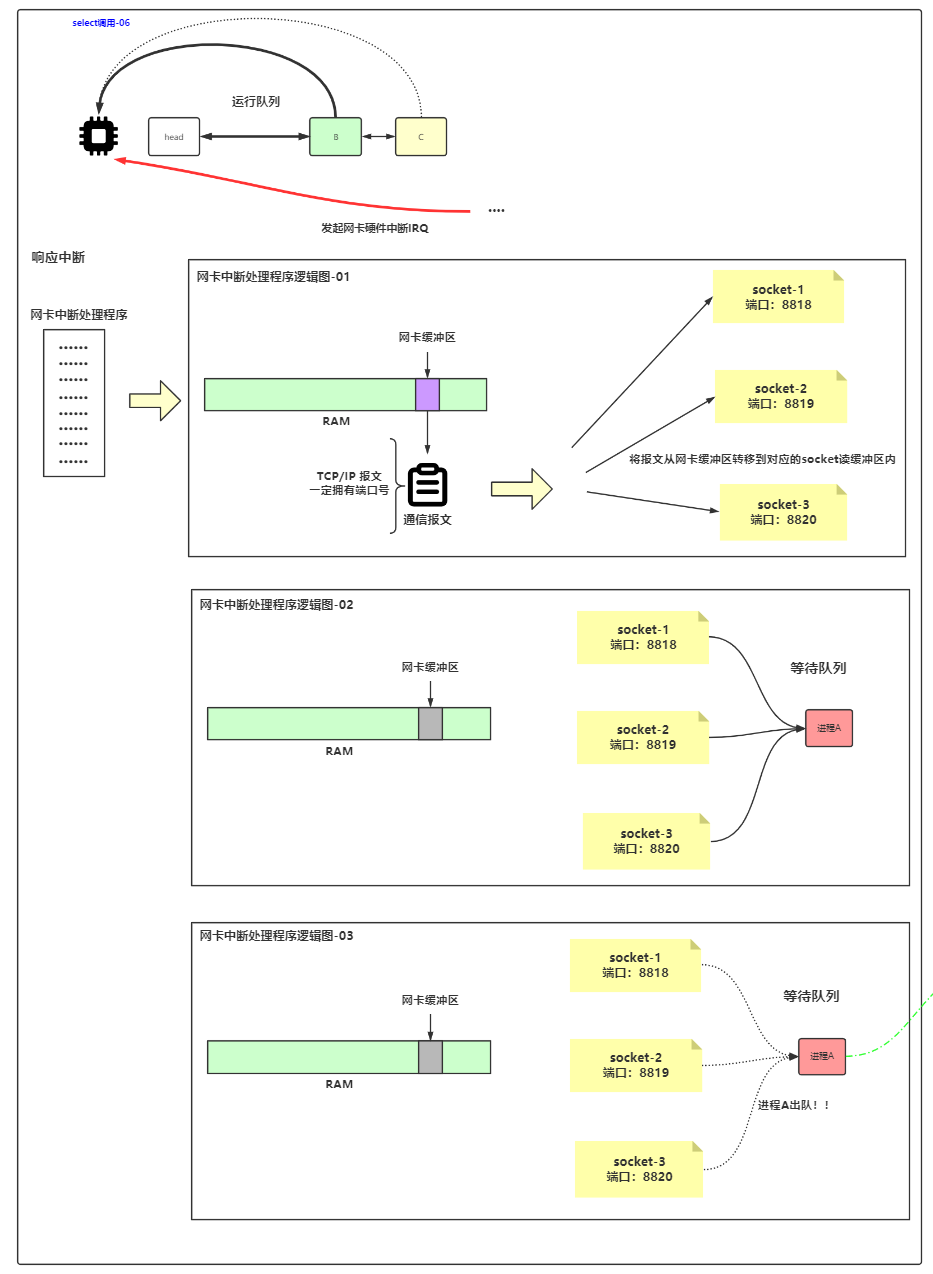

6 Interrupt handler

The handler processes the packets one by one. Each packet carries port information, which identifies the corresponding socket, and the data is placed into that socket's read buffer.

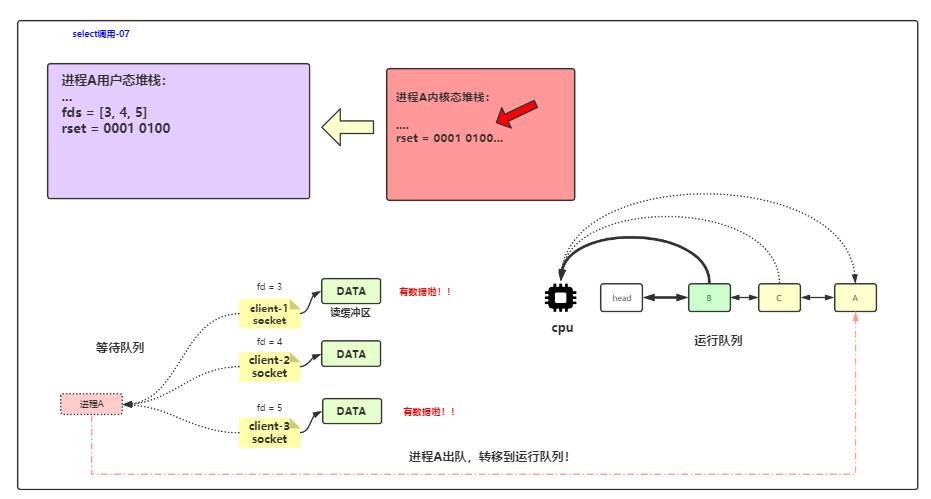

Process A is then removed from the wait queue and moved back to the run queue.

7 Run with the data

Process A is in the run queue again, and once it is scheduled its read returns with the data.

Disadvantage: server processes and client connections are paired one to one.

RandomAccessFile

It supports random reads and writes through seek. Writing after a seek overwrites the bytes at that position rather than inserting, so it can be used to overwrite the middle of a file.

It supports both reading and writing, depending on the mode specified when it is opened.

Its writes go to the file.
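The overwrite-after-seek behaviour is easy to check with a temporary file. This is a minimal sketch; the class name `RandomAccessSketch`, the sample text and the offset are chosen only for illustration.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical sketch: writing after seek() overwrites bytes in place.
class RandomAccessSketch {
    static String overwriteMiddle() throws IOException {
        Path file = Files.createTempFile("raf-sketch", ".txt");
        // "rw" opens the file for both reading and writing.
        try (RandomAccessFile raf = new RandomAccessFile(file.toFile(), "rw")) {
            raf.write("hello world".getBytes(StandardCharsets.US_ASCII));
            raf.seek(2);                                          // jump to byte offset 2
            raf.write("LL".getBytes(StandardCharsets.US_ASCII));  // replaces "ll", inserts nothing
            raf.seek(0);                                          // back to the start to read
            byte[] all = new byte[(int) raf.length()];
            raf.readFully(all);
            return new String(all, StandardCharsets.US_ASCII);
        } finally {
            Files.deleteIfExists(file);
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println(overwriteMiddle()); // prints "heLLo world"
    }
}
```

The file length stays at 11 bytes, which shows that the write replaced existing bytes instead of shifting the rest of the file.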

Network IO process

Data from a client socket arrives through the network card.

The application goes through the kernel, and the kernel fetches the data from the network card. The data reaches the kernel first and is then passed to the application.

A single thread performs accept and read in turn. When a second client arrives, its connection gets no further than the network card; the application does not accept it.

read fetches the byte stream.

When another client arrives in the meantime, the application thread is still blocked.

Figure source: https://www.bilibili.com/video/BV1VJ411D7Pm

Non-blocking IO (NIO) maintains multiple clients

Explanation of the Linux select function

On Linux, the select function can be used to multiplex I/O. The parameters passed to select tell the kernel:

1. The file descriptors we care about.

2. For each descriptor, the conditions we care about.

3. How long we are willing to wait.

When select returns, the kernel tells us:

1. The number of descriptors that are ready.

2. For each of the three conditions (read, write, exception), which descriptors are ready.

With this information we can call the appropriate I/O function (usually read or write), and these calls will no longer block.

- Syllabus: http://note.youdao.com/s/GkwuyYC7

- Programmable interrupt controller: https://www.processon.com/view/link/5f5b1d071e08531762cf00ff

- Procedure of a system call: https://www.processon.com/view/link/5f5edf94637689556170d993

- Socket buffer: https://www.processon.com/view/link/5f5c4342e0b34d6f59ef7057

- Underlying principle of BIO communication: https://www.processon.com/view/link/5f61bd766376894e32727d66

- Linux select function API: https://www.processon.com/view/link/5f601ed86376894e326d9730

- Linux select schematic diagram: https://www.processon.com/view/link/5f62b9a6e401fd2ad7e5d6d1

- Linux epoll function: https://www.processon.com/view/link/5f6034210791295dccbc1426

- Linux epoll schematic diagram: https://www.processon.com/view/link/5f62f98f5653bb28eb434add
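Java has no direct binding to the select system call, but `java.nio.channels.Selector` follows the same contract (on Linux it is typically backed by epoll rather than select). The sketch below maps the three inputs and the returned count onto that API. The class name `SelectContractSketch`, the timeouts and the loopback client are assumptions made for this illustration.

```java
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

// Hypothetical sketch of the select contract: what to watch, which condition, how long to wait.
class SelectContractSketch {
    // Returns {ready count with nothing pending, ready count after a client connects,
    //          1 if the ready key reports "acceptable" else 0}.
    static int[] readyCounts() throws Exception {
        try (Selector selector = Selector.open();
             ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            server.configureBlocking(false);
            // Inputs 1 and 2: the descriptor we care about and the condition (incoming connection).
            server.register(selector, SelectionKey.OP_ACCEPT);

            // Input 3: how long to wait. Nothing is pending, so this times out and returns 0.
            int before = selector.select(50);

            try (SocketChannel client = SocketChannel.open(server.getLocalAddress())) {
                // Output 1: the number of ready descriptors.
                int after = selector.select(1000);
                // Output 2: which descriptors are ready, and for which condition.
                SelectionKey key = selector.selectedKeys().iterator().next();
                return new int[] {before, after, key.isAcceptable() ? 1 : 0};
            }
        }
    }

    public static void main(String[] args) throws Exception {
        int[] r = readyCounts();
        System.out.println("ready before=" + r[0] + ", ready after=" + r[1]);
    }
}
```

After the second `select` returns, calling `server.accept()` would complete without blocking, which is the point of asking the kernel first.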


On multiple threads, and then continue to run. For example, the client needs to send data to the server. The selector will assign the registered channel to one or more threads on the server only after all the data of the client is ready. During the period when the client prepares data, the server thread can perform other tasks.

3, AIO (Asynchronous I/O)

AIO, also called NIO 2, was introduced in Java 7. It is an asynchronous, non-blocking IO model.

Asynchronous IO is based on events and callbacks: an operation returns immediately after it is issued instead of blocking, and when the background processing completes, the operating system notifies the corresponding thread to carry out the follow-up work.

The problem AIO solves: although NIO removes the blocking, it is still synchronous, which means you have to ask the selector whether data is ready. AIO is asynchronous: you register what amounts to a callback function, and you are notified when data is available.

All IO types other than AIO are synchronous. This can be explained from the underlying IO model; a recommended article is "What are the five IO models of Linux?"

At present AIO is not widely used. Netty tried AIO earlier but abandoned it.
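The callback style can be sketched with `AsynchronousServerSocketChannel` and `CompletionHandler` from java.nio.channels. Everything specific here is an assumption for illustration: the class name `AioCallbackSketch`, the loopback client, the single short message and the `CompletableFuture` used to hand the result back to the calling thread.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.ByteBuffer;
import java.nio.channels.AsynchronousServerSocketChannel;
import java.nio.channels.AsynchronousSocketChannel;
import java.nio.channels.CompletionHandler;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch: accept and read complete through callbacks instead of blocking calls.
class AioCallbackSketch {
    static String receiveOnce(String message) throws Exception {
        CompletableFuture<String> received = new CompletableFuture<>();
        InetAddress loopback = InetAddress.getLoopbackAddress();
        try (AsynchronousServerSocketChannel server = AsynchronousServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(loopback, 0));
            // accept() returns at once; the handler runs later on a pool thread.
            server.accept(null, new CompletionHandler<AsynchronousSocketChannel, Void>() {
                @Override
                public void completed(AsynchronousSocketChannel channel, Void attachment) {
                    ByteBuffer buffer = ByteBuffer.allocate(256);
                    // read() also returns at once; this handler fires when data has arrived.
                    channel.read(buffer, null, new CompletionHandler<Integer, Void>() {
                        @Override
                        public void completed(Integer count, Void unused) {
                            received.complete(count < 0 ? ""
                                    : new String(buffer.array(), 0, count, StandardCharsets.US_ASCII));
                            closeQuietly(channel);
                        }

                        @Override
                        public void failed(Throwable error, Void unused) {
                            received.completeExceptionally(error);
                            closeQuietly(channel);
                        }
                    });
                }

                @Override
                public void failed(Throwable error, Void attachment) {
                    received.completeExceptionally(error);
                }
            });

            // The calling thread was never blocked by accept or read; here it plays the client.
            int port = ((InetSocketAddress) server.getLocalAddress()).getPort();
            try (Socket client = new Socket(loopback, port)) {
                client.getOutputStream().write(message.getBytes(StandardCharsets.US_ASCII));
                return received.get(5, TimeUnit.SECONDS);
            }
        }
    }

    private static void closeQuietly(AsynchronousSocketChannel channel) {
        try {
            channel.close();
        } catch (IOException ignored) {
            // nothing useful to do in a sketch
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println("callback received: " + receiveOnce("hello"));
    }
}
```

Unlike the selector examples, no code here asks whether data is ready; the handlers are simply invoked when the operation has completed.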

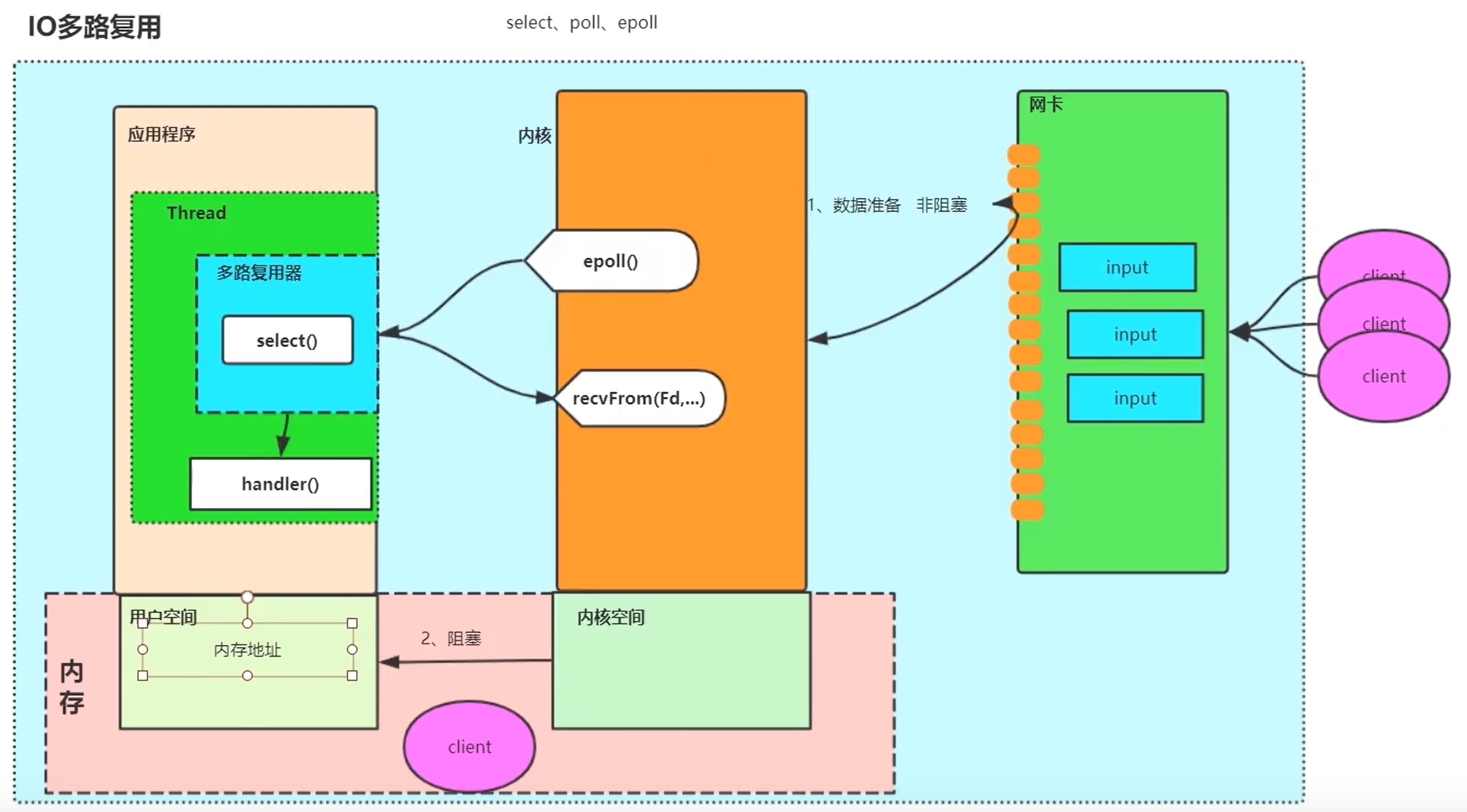

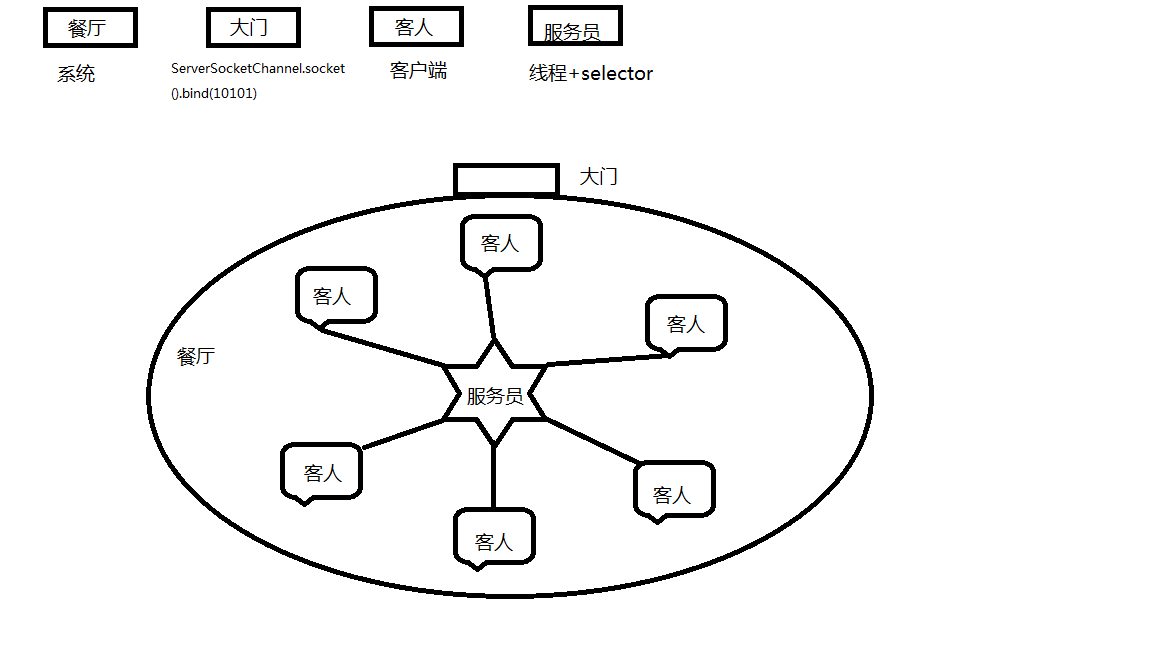

4, Multiplexing explained

Multithreading requires context switching, which is time-consuming,

so we use a single thread.

Network connections:

    sockfd = socket(AF_INET, SOCK_STREAM, 0);
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_port = htons(2000);


NIO and IO applicable scenarios:

NIO was born to make up for the shortcomings of traditional IO, but there are some shortcomings, and * * NIO also has some disadvantages, because NIO is a buffer oriented operation. Every data processing is carried out on the buffer, so there will be a problem. Before data processing, you must judge whether the data in the buffer is complete or has been read. If not, Assuming that only part of the data is read, there is no significance for incomplete data processing** Therefore, buffer data should be detected before each data processing.

what are the applicable scenarios for NIO and IO?

if you need to manage thousands of connections opened at the same time, and these connections only send a small amount of data at a time, such as chat server, NIO may be a good choice to process data at this time.

if there are only a few connections and these connections need to send a large amount of data each time, the traditional IO is more suitable. What kind of data processing to use needs to be compared between the response waiting time of the data and the time to check the buffer data.

Java IO is blocking. If the data is not ready (or the target is not writable) when a read or write is called, the call blocks until the data is ready or the target becomes writable. Java NIO is non-blocking. Every read or write call returns immediately, after moving whatever is currently readable (or writable) into or out of the buffer. Even if no data is available, the call still returns immediately and leaves the buffer untouched. This is like going to the supermarket. If the supermarket does not have the goods you need, or not enough of them, Java IO waits in the store until there is enough stock to bring everything home. Java NIO buys whatever is available right now and leaves at once, and if nothing it needs is in stock it still leaves immediately, empty-handed.

Blocking IO makes threads spend a lot of time waiting for IO, which is wasteful. However, a blocked call can pick up and process data the moment it becomes available, whereas non-blocking IO has to collect all the data through repeated calls.
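The "returns immediately" behavior can be observed with a small sketch. It assumes a `java.nio.channels.Pipe` as a stand-in for a network connection; the class and method names are made up for this illustration.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.charset.StandardCharsets;

public class NonBlockingReadSketch {
    /** Returns {bytes read before anything was written, bytes read after the write}. */
    static int[] readBeforeAndAfterWrite(String payload) throws IOException {
        Pipe pipe = Pipe.open();
        try (Pipe.SinkChannel sink = pipe.sink();
             Pipe.SourceChannel source = pipe.source()) {
            source.configureBlocking(false);
            ByteBuffer buf = ByteBuffer.allocate(64);

            // Nothing has been written yet: a non-blocking read returns 0 at once
            // and leaves the buffer untouched.
            int before = source.read(buf);

            sink.write(ByteBuffer.wrap(payload.getBytes(StandardCharsets.UTF_8)));

            // Non-blocking reads may have to be repeated until the data shows up.
            int after = 0;
            for (int attempt = 0; attempt < 1000 && after == 0; attempt++) {
                after = source.read(buf);
            }
            return new int[] {before, after};
        }
    }
}
```

A blocking channel would have parked the thread on the first `read`; here the caller decides what to do when the call comes back with nothing.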

Java NIO uses a Selector to manage multiple channels in a single thread. A select call returns the channels that are ready, which can then be processed accordingly.
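A minimal sketch of that idea follows, assuming a loopback connection created inside the same method purely to generate traffic. `SelectorSketch` and `roundTrip` are hypothetical names, and port 0 (let the OS choose) is an assumption of this demo.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

public class SelectorSketch {
    /** Sends one message over loopback and returns what the selector loop read. */
    static String roundTrip(String message) throws IOException {
        byte[] payload = message.getBytes(StandardCharsets.UTF_8);
        ByteBuffer received = ByteBuffer.allocate(payload.length);
        SocketChannel accepted = null;
        try (Selector selector = Selector.open();
             ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            server.configureBlocking(false);
            server.register(selector, SelectionKey.OP_ACCEPT);

            // A blocking client in the same thread, only there to generate traffic.
            try (SocketChannel client = SocketChannel.open(server.getLocalAddress())) {
                client.write(ByteBuffer.wrap(payload));

                // One thread, one selector, two channels (listener and connection).
                while (received.hasRemaining() && selector.select(2000) > 0) {
                    Iterator<SelectionKey> keys = selector.selectedKeys().iterator();
                    while (keys.hasNext()) {
                        SelectionKey key = keys.next();
                        keys.remove(); // selected keys are not cleared automatically
                        if (key.isAcceptable()) {
                            SocketChannel conn = server.accept();
                            if (conn != null) {
                                conn.configureBlocking(false);
                                conn.register(selector, SelectionKey.OP_READ);
                                accepted = conn;
                            }
                        } else if (key.isReadable()) {
                            if (((SocketChannel) key.channel()).read(received) < 0) {
                                key.cancel(); // peer closed before sending everything
                            }
                        }
                    }
                }
            }
        } finally {
            if (accepted != null) {
                accepted.close();
            }
        }
        return new String(received.array(), 0, received.position(), StandardCharsets.UTF_8);
    }
}
```

The same loop serves the listening channel and the accepted connection; adding more clients only adds more keys to the selector, not more threads.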

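Multiple clients are connected through the server's network card, and epoll monitors all of these connections. Waiting for data to become ready is non-blocking. Copying the data is still blocking and happens one connection at a time, but every client is eventually served. epoll reduces the copying of descriptor sets between user space and kernel space; it does not remove the copy of the payload itself. Zero copy is a separate technique that avoids moving data between kernel space and user space (for example on the way from the network card to the disk) by sharing memory addresses.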

The difference between select and epoll

File descriptor (FD): an abstract concept on Unix and Linux. Formally it is an integer, but it is really an index into the table of open files that the kernel maintains for each process. When a program opens or creates a file, the kernel returns an FD to the process.

- select mechanism: it maintains a set of descriptors, fd_set, which is copied from user space to kernel space on every call so that the kernel can mark the active sockets. fd_set is an array (bitmap) structure

- poll mechanism: similar to select, but the fd_set structure is replaced by pollfd structures, so the number of descriptors is no longer capped by the size of fd_set. The caller passes an array of pollfd, which the kernel keeps internally as a linked list

- epoll (event poll): instead of scanning all FDs, only the FD events the user cares about are stored in an event table inside the kernel. This reduces the copying between user space and kernel space

| | Operation mode | Underlying implementation | Maximum connections | IO efficiency |
|---|---|---|---|---|
| select | Traversal | Array | Limited by the kernel (FD_SETSIZE) | Average |
| poll | Traversal | Linked list | No upper limit | Average |
| epoll | Event callbacks | Red-black tree | No upper limit | High |

DefaultSelectorProvider

while(1) traverses the file descriptor set fdA-E.

nginx and redis adopt epoll

The application thread contains a multiplexer. The multiplexer has a select function, which calls epoll in the kernel.

The kernel provides epoll (or select()/poll(), depending on the version and platform).

Suppose several clients are connected through the network card. epoll waits until the data of, say, three clients is ready in kernel space and then returns the index values of those three connections to the application's multiplexer. The multiplexer's select then calls recvfrom to tell the kernel which data to copy, one connection at a time, and the data is copied from kernel space to user space.

Data preparation is non-blocking and data copying is blocking. The thread eventually processes every client's data, and from the application's point of view this is also non-blocking, because the multiplexer hands over a whole batch of ready data. All that remains is to do the reads and writes the business logic requires, in handler(). The multiplexer manages multiple clients.

Copying from kernel space to user space is expensive. epoll itself does not make that copy disappear; avoiding it is the job of zero-copy techniques, that is, memory shared between the two sides, such as a direct buffer.

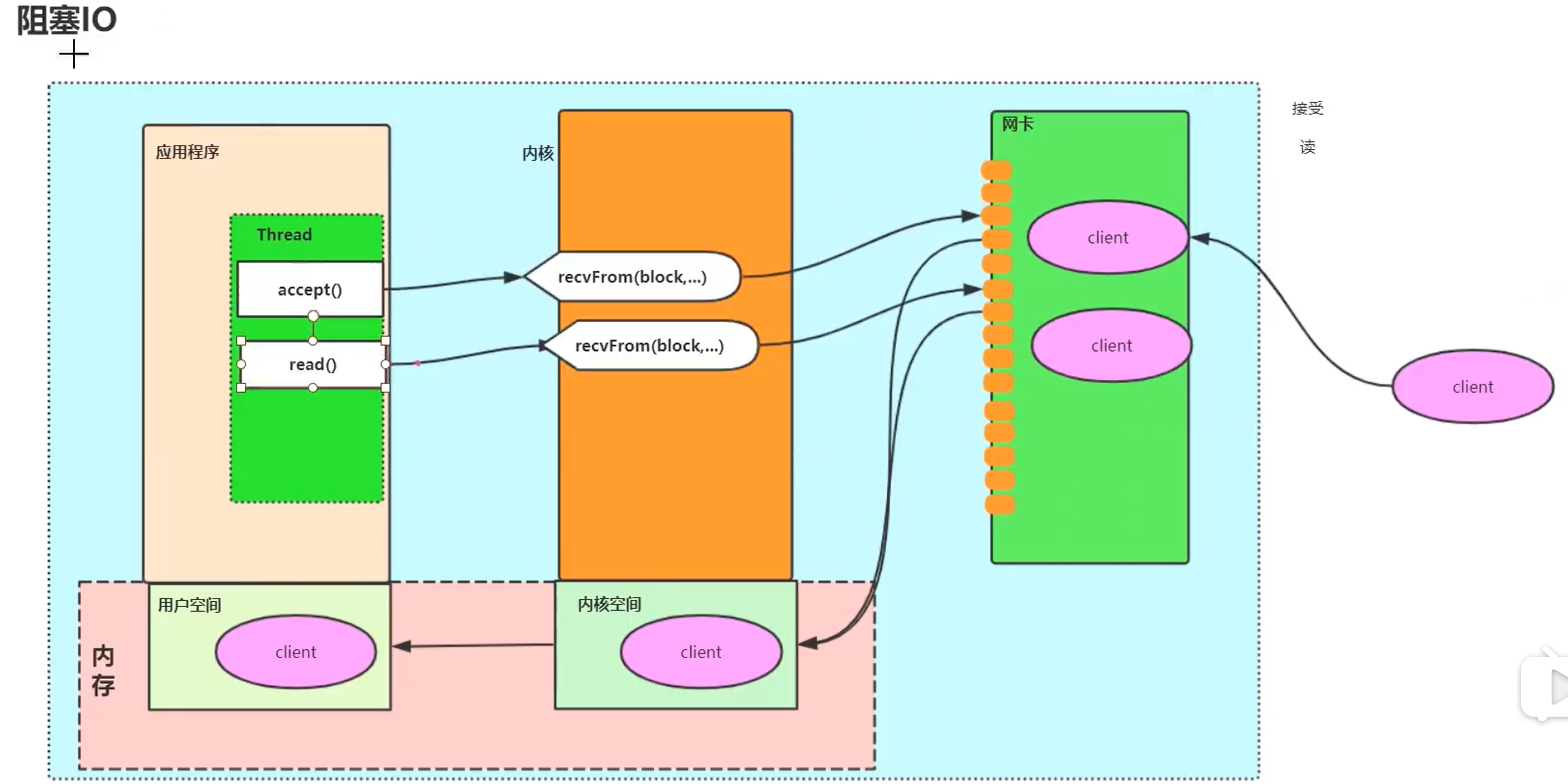

Blocking IO

The application's accept() blocks until a socket is obtained

The application's read() blocks until the byte stream is obtained

Kernel: recvfrom(block, ...)

NIO Reactor (NIO improvement)

Why use Reactor: in a typical network service, each client keeps a connection to the login server, so the server has to maintain many connections in order to connect, read and write with its clients. For long-lived-connection services in particular, the server must keep one IO connection open for every client. This is a big overhead for the server.

Long-lived connection: one socket connection is reused for multiple requests

BIO:

We use a thread pool to process reads and writes. However, there are still obvious disadvantages:

- Synchronous blocking IO: reads and writes block, so threads spend too long waiting

- When setting the thread policy, the number of available threads can only be bounded by the number of CPUs, not by the number of concurrent connections, so the number of connections is limited. Otherwise it is hard to ensure efficiency and fairness among client requests.

- Context switching among many threads lowers efficiency and makes the design hard to scale

- State and other data that must stay consistent require concurrent synchronization control

NIO:

In fact, NIO solves problems 1 and 2 of BIO listed above. The server can handle more concurrent clients: it is no longer one thread per client, because one thread can serve multiple clients (the selector can listen on multiple socket channels).

However, this is still not a complete Reactor pattern. Reactor is a design pattern, and a good pattern should support better scalability, which the approach above does not. In addition, a good Reactor pattern should have the following characteristics:

- Lower resource usage; one thread per client is usually not required

- Less overhead: less context switching and locking

- Ability to track server status

- Ability to manage the binding of handlers to events

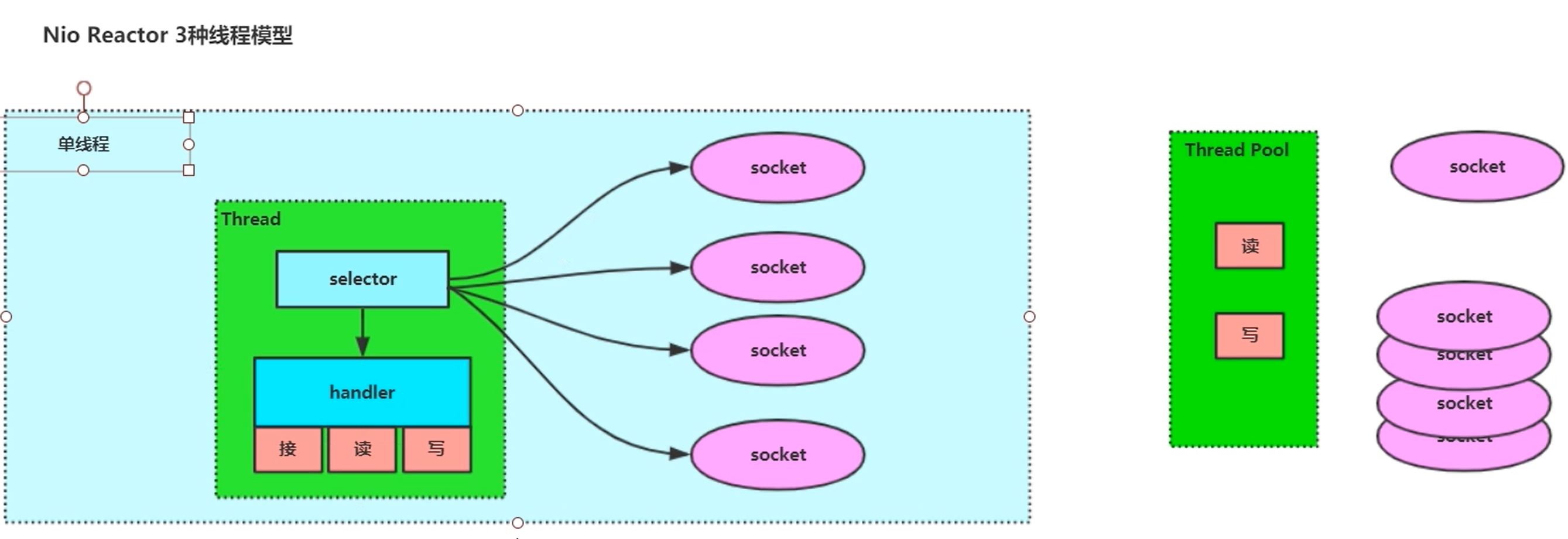

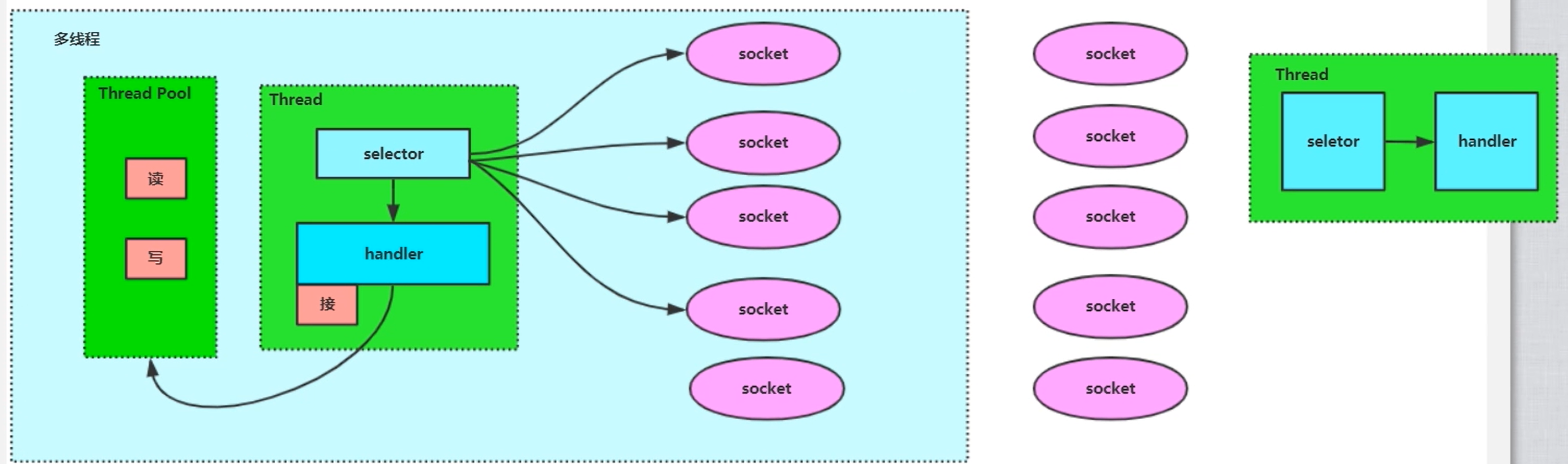

NIO Reactor single thread

After an event occurs, it is handed over to a handler for processing

import java.io.IOException;

import java.net.InetSocketAddress;

import java.nio.channels.SelectionKey;

import java.nio.channels.Selector;

import java.nio.channels.ServerSocketChannel;

public class TCPReactor {

private final ServerSocketChannel ssc;

private final Selector selector;

TCPReactor(int port) throws IOException {

selector = Selector.open(); // Create the selector object

ssc = ServerSocketChannel.open(); // Open the server socket channel

InetSocketAddress addr = new InetSocketAddress(port);

ssc.socket().bind(addr); // Bind the port to the ServerSocketChannel

ssc.configureBlocking(false); // Set the ServerSocketChannel to non-blocking

// Register the ServerSocketChannel with the selector and listen for ACCEPT events, so the server is notified when a client connects

SelectionKey sk = ssc.register(selector, SelectionKey.OP_ACCEPT);

// This Acceptor (not shown here) is associated with the server channel ssc above

sk.attach(new Acceptor(selector, ssc)); // Attach an Acceptor object to the key; it could also be passed as the third argument of register() above

}

}
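The constructor above only registers the channel and attaches a handler. The dispatch step of a single-threaded reactor can be sketched as follows. This is an illustration under assumptions, not the article's own class: it uses a `Pipe` instead of a server socket so that it runs without a network, and `ReadHandler` and `dispatchOnce` are hypothetical names.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

public class ReactorDispatchSketch {
    /** Hypothetical handler: reads whatever is available and records it. */
    static final class ReadHandler implements Runnable {
        private final Pipe.SourceChannel source;
        private final List<String> log;

        ReadHandler(Pipe.SourceChannel source, List<String> log) {
            this.source = source;
            this.log = log;
        }

        @Override
        public void run() {
            try {
                ByteBuffer buf = ByteBuffer.allocate(256);
                if (source.read(buf) > 0) {
                    buf.flip();
                    log.add(StandardCharsets.UTF_8.decode(buf).toString());
                }
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        }
    }

    /** Writes one payload, runs one select/dispatch round, returns what handlers saw. */
    static List<String> dispatchOnce(String payload) throws IOException {
        List<String> log = new ArrayList<>();
        Pipe pipe = Pipe.open();
        try (Selector selector = Selector.open();
             Pipe.SinkChannel sink = pipe.sink();
             Pipe.SourceChannel source = pipe.source()) {
            source.configureBlocking(false);
            // The handler travels with the key as its attachment.
            source.register(selector, SelectionKey.OP_READ, new ReadHandler(source, log));

            sink.write(ByteBuffer.wrap(payload.getBytes(StandardCharsets.UTF_8)));

            if (selector.select(2000) > 0) {
                for (SelectionKey ready : selector.selectedKeys()) {
                    // The reactor does not know what the event means; it just runs the handler.
                    ((Runnable) ready.attachment()).run();
                }
                selector.selectedKeys().clear();
            }
        }
        return log;
    }
}
```

Keeping the handler in the key's attachment is what lets one loop serve accept, read and write events without a chain of type checks.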

NIO Reactor multithreading

After an event occurs, it is handed over to multiple handlers for processing

If reading and writing takes time, we can open another thread to process reading and writing

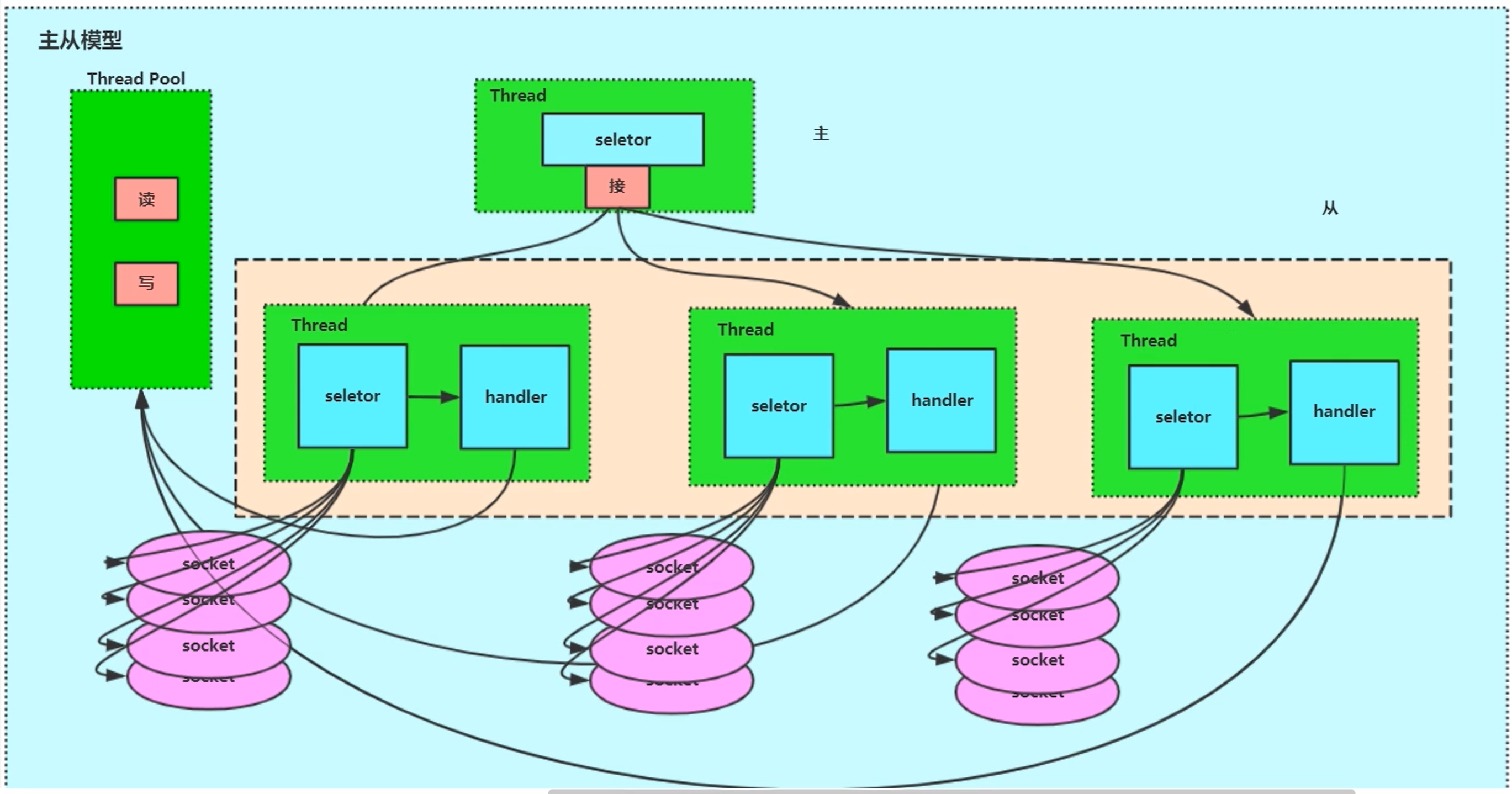

NIO Reactor master-slave model

Multiple selectors

Improvement: use more selectors

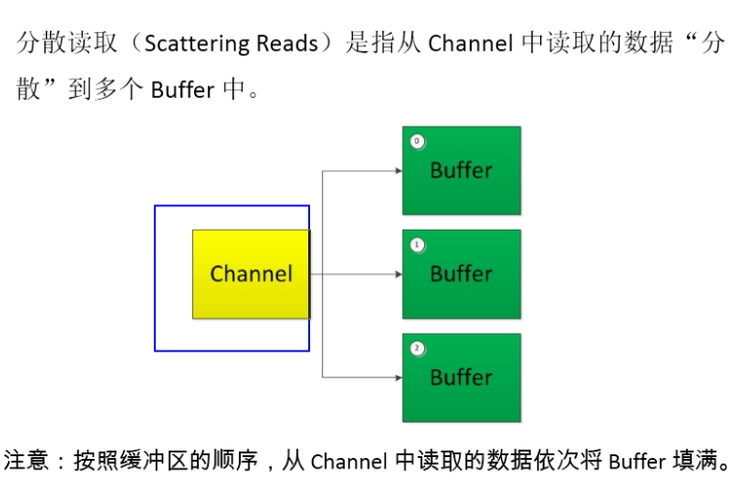

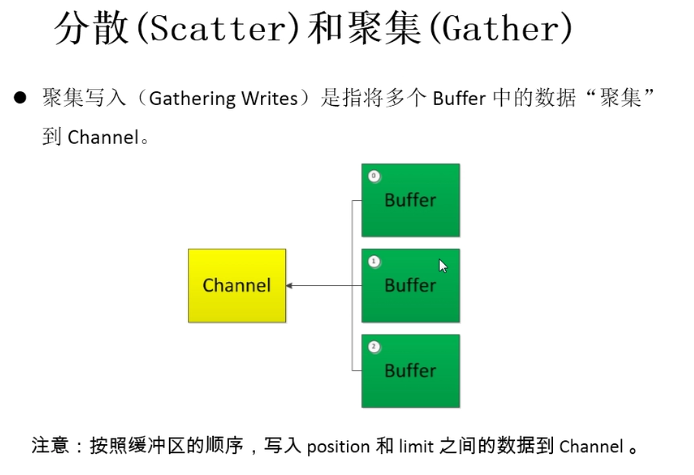

Scatter and gather of multiple buffers

- Scattering reads: scatter the data in the channel into multiple buffers

- Gathering writes: aggregates data from multiple buffers into channels

Read and write case code

@Test

public void test1() throws IOException {

// rw stands for read-write mode

RandomAccessFile file = new RandomAccessFile("D:\\Learn to use NIO.md","rw");

FileChannel channel = file.getChannel();

// Allocate multiple buffers

ByteBuffer byteBuffer1 = ByteBuffer.allocate(1024*2);

ByteBuffer byteBuffer2 = ByteBuffer.allocate(1024*6);

ByteBuffer byteBuffer3 = ByteBuffer.allocate(1024*5);

// Scattering read: read from the channel into multiple buffers

ByteBuffer[] buffers= {byteBuffer1,byteBuffer2,byteBuffer3};

channel.read(buffers);

//Flip multiple buffers

for (ByteBuffer buffer : buffers) {

buffer.flip();

}

// Gathering write: write the buffers to the channel in order

RandomAccessFile file2 = new RandomAccessFile("D:\\nio2.txt","rw");

// Get channel

FileChannel channel2 = file2.getChannel();

channel2.write(buffers);

channel.close();

channel2.close();

}

Charset

Setting the character set solves the problem of garbled text

Encoding: String -> byte array

Decoding: byte array -> String

Approach

Use Charset.forName(String) to obtain a character set, then create an encoder or decoder from it. The encoder encodes a CharBuffer and the decoder decodes a ByteBuffer.

Note that before encoding the CharBuffer or decoding the ByteBuffer, you must flip() it into read mode.

If the encoding and decoding character sets differ, the text comes out garbled

@Test

public void CharacterEncodingTest() throws CharacterCodingException {

//Load character set

Charset charset = Charset.forName("utf-8");

Charset charset1 = Charset.forName("gbk");

// Get encoder utf-8

CharsetEncoder encoder = charset.newEncoder();

// Get decoder gbk

CharsetDecoder decoder = charset1.newDecoder();

CharBuffer buffer = CharBuffer.allocate(1024);//char

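buffer.put("Ha ha ha ha!"); // The original example used 5 Chinese characters; ASCII text like this encodes to the same bytes in UTF-8 and GBK, so it will not come out garbled

// Encode

buffer.flip(); // Switch to read mode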

ByteBuffer byteBuffer = encoder.encode(buffer);//Encoding buffer

for (int i = 0; i < 10; i++) {

System.out.println(byteBuffer.get());//Display number

}

// decode

byteBuffer.flip();//switch

CharBuffer charBuffer = decoder.decode(byteBuffer);//Decoding buffer

System.out.println(charBuffer.toString());//There is a tostring method

}

ByteBuffer's get() method is used in the for loop above. At first we habitually passed the loop variable i to it, that is, get(i). Then there was a problem: no data could be decoded. After commenting out byteBuffer.flip(), it worked. Conversely, when get() is used without byteBuffer.flip(), an error is reported. So let's distinguish between get() and get(int index) in ByteBuffer.

View the source of get():

/**

* Relative <i>get</i> method. Reads the byte at this buffer's

* current position, and then increments the position.

* @return The byte at the buffer's current position

*

* @throws BufferUnderflowException

* If the buffer's current position is not smaller than its limit

*/

public abstract byte get();

The return value is "The byte at the buffer's current position", that is, the byte at the current position of the buffer. "then increments the position" shows that after the byte is returned, position is automatically increased by 1 and points to the next byte.

If the above situation is get(index), the following method is used:

/**

* Absolute <i>get</i> method. Reads the byte at the given index.

* @param index

* The index from which the byte will be read

* @return The byte at the given index

* @throws IndexOutOfBoundsException

* If <tt>index</tt> is negative or not smaller than the buffer's limit

*/

public abstract byte get(int index);

From "The byte at the given index" you can see that get(int index) returns the byte at the given index and that position does not move. If flip() is executed afterwards, limit is set to the current position, which is still 0, so no data can be read.
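The difference is easy to check with a three-byte buffer. The sketch below is only an illustration; the class and method names are made up, and the byte values are arbitrary.

```java
import java.nio.ByteBuffer;

public class GetVsGetIndex {
    /** Reads two bytes with absolute get(int), then flips; returns what is left to read. */
    static int remainingAfterAbsoluteGets() {
        ByteBuffer buf = ByteBuffer.wrap(new byte[] {10, 20, 30});
        buf.get(0);           // absolute: position stays at 0
        buf.get(1);
        buf.flip();           // limit = position = 0
        return buf.remaining();
    }

    /** Reads two bytes with relative get(), then flips; returns what is left to read. */
    static int remainingAfterRelativeGets() {
        ByteBuffer buf = ByteBuffer.wrap(new byte[] {10, 20, 30});
        buf.get();            // relative: position moves to 1
        buf.get();            // position moves to 2
        buf.flip();           // limit = 2, position = 0
        return buf.remaining();
    }
}
```

After the absolute reads nothing remains once the buffer is flipped, which is why the decoder in the test above received an empty buffer. After the relative reads, exactly the bytes that were consumed become readable again.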

Multiplexing

Link: https://www.zhihu.com/question/32163005/answer/55772739

Think of each flight route as a sock (I/O stream) and of air traffic control (ATC) as your server's sock management code.

The first method is the most traditional multi-process concurrency model (each new I/O stream is assigned a new process to manage it).

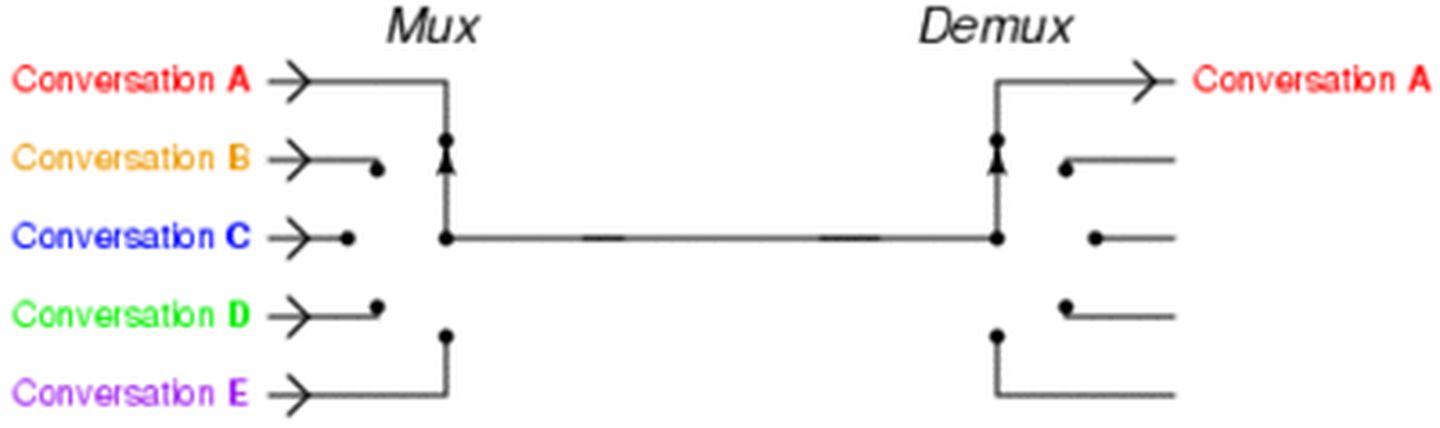

The second method is I/O multiplexing (a single thread manages multiple I/O streams at the same time by recording and tracking the state of each I/O stream (sock)).

In fact, the Chinese translation of "I/O multiplexing" may be the reason this concept is so hard to understand in Chinese. The English term is simply I/O multiplexing. If you search for the meaning of multiplexing, you will basically get this picture:

Therefore, most people directly associate it with the idea of "one network cable, multiple socks multiplexed", including the answers above. In fact, whether you use multiple processes or I/O multiplexing, there is only one network cable. Letting multiple socks share one network cable is implemented in the kernel and driver layers.

To repeat the important point: I/O multiplexing in this context means that a single thread manages multiple I/O streams at the same time by recording and tracking the status of each sock (corresponding to the flight progress strip slots in the air traffic control tower). It was invented to raise server throughput as much as possible.

Does that sound awkward? Just look at the picture.

Within one thread, multiple I/O streams are served by flipping the switch (those who studied EE may now stand up and solemnly call this "time division multiplexing").

What? You still haven't understood how nginx uses epoll to receive a request when one arrives? Just look at this figure. As a reminder, nginx holds many connections and epoll monitors them all. Then, like the dial switch, it turns to whichever connection has data and calls the corresponding code to process it.

------------------------------------------

After understanding this basic concept, others will be well explained.

select, poll and epoll are all concrete implementations of I/O multiplexing. All three exist because they appeared one after another.

After the concept of I/O multiplexing was proposed, select was the first implementation (implemented in BSD around 1983).

Once select was in use, many problems were soon exposed.

- select modifies the arrays passed in as arguments, which is very unfriendly for a function that has to be called many times.

- When any sock (I/O stream) has data, select merely returns. It does not tell you which sock has the data, so you have to check them one by one. With a dozen socks that is fine, but checking tens of thousands of socks on every call is an enormously wasteful overhead.

- select can only monitor 1024 connections. The limit is defined in a header file; see FD_SETSIZE.

- select is not thread safe. If you add a sock to select and another thread then decides that the sock is no longer needed and should be reclaimed, select does not support that. If you go ahead and close the sock anyway, the standard behavior of select is, well, unspecified. This is written in the documentation:

"If a file descriptor being monitored by select() is closed in another thread, the result is unspecified"

So 14 years later (1997), a group of people implemented poll, which fixed many of select's problems, for example:

- poll removes the 1024-connection limit

- poll is designed not to modify the array passed in, although this depends on your platform, so it is still better to be careful.

- poll is still not thread safe, which means that no matter how powerful the server is, you can only process one set of I/O streams in one thread. You can of course combine it with multiple processes, but then you have all the problems of multiple processes.

The 14-year gap was not a matter of slow work; the hardware of that era was simply too weak. A server handling more than 1,000 connections was almost godlike, so select met the demand for a long time.

Five years later, in 2002, the great Davide Libenzi implemented epoll.

epoll is the latest implementation of I/O multiplexing. epoll fixes most of the problems of poll and select, for example:

- epoll is now thread safe.

- epoll not only tells you that some sock in the group has data, it also tells you which sock has data, so you do not have to search for it yourself.

- It is only supported by Linux

The epoll patch is still available; see the following link: /dev/epoll Home Page

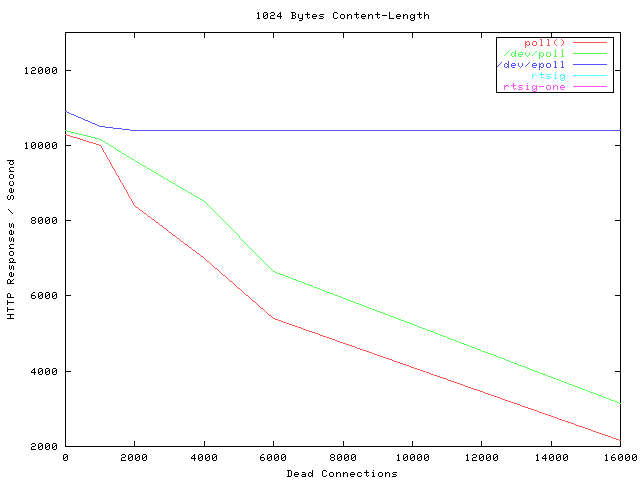

This impressive chart shows its godlike performance back then (the link to the test code is dead; if anyone manages to dig it up, you can study the details of how it was tested).

The horizontal axis, "Dead connections", is the number of connections; it has that name only because the testing tool is called deadcon. The vertical axis is the number of requests processed per second. You can see that the number of requests epoll processes per second does not drop as the number of connections grows. poll and /dev/poll fare miserably.

But epoll has a fatal flaw: only Linux supports it. On BSD, for example, the corresponding implementation is kqueue.

In fact, some well-known domestic vendors have cut epoll out of their Android builds; nobody is going to volunteer that kind of brain-dead decision to you. What, you say nobody uses Android as a server? Then you are overlooking p2p software.

By design, nginx uses the most efficient I/O multiplexing model available on the target platform, which is why it has this setting. In general, use epoll/kqueue whenever possible.

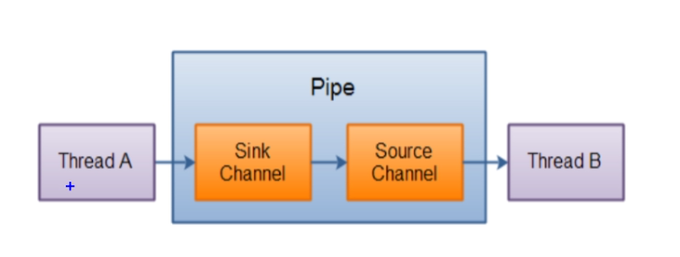

Pipe

The Java NIO pipeline is a one-way data connection between two threads. Pipe has a source channel and a sink channel. The data will be written to the sink channel and read from the source channel.

Code example

@Test

public void test() throws IOException {

// Get pipeline

Pipe pipe = Pipe.open();

ByteBuffer buffer = ByteBuffer.allocate(1024);

// Write data from buffer to pipeline

Pipe.SinkChannel sinkChannel = pipe.sink();

buffer.put("Dying, dying, dying, save the child".getBytes());

buffer.flip();

sinkChannel.write(buffer);

// For simplicity, we do not use two threads here

// Read data from the pipe back into the buffer

Pipe.SourceChannel sourceChannel = pipe.source();

buffer.clear(); // Reset position and limit so the buffer can be refilled

System.out.println(new String(buffer.array(),0,sourceChannel.read(buffer)));

sinkChannel.close();

sourceChannel.close();

}

epoll process

Chat room case

BIO chat (single thread)

Disadvantages: the server can only serve one user

import java.io.*;

import java.net.*;

public class Server_Socket {

public static final int PORT = 8080; // Port shared with Client_Socket

public static void main(String[] args) throws IOException {

ServerSocket serverSocket = new ServerSocket(PORT);

System.out.println("Start server"+serverSocket);

try {

Socket socket = serverSocket.accept(); // Blocks until a client connects

try {

System.out.println("Client connection"+ socket);

BufferedReader in = new BufferedReader(new InputStreamReader(socket.getInputStream()));

PrintWriter out =

new PrintWriter(new BufferedWriter(new OutputStreamWriter(socket.getOutputStream())), true); // true: flush automatically at the end of each println() (not print()), so that every line is actually sent over the network

while (true){

String str = in.readLine();

if (str == null || str.equals("END")) // null means the client closed the connection

break;

System.out.println(str);

out.println("Server reply"+str); // Reply to the client

}

}finally {

System.out.println("close");

socket.close();

}

}finally {

serverSocket.close();

}

}

}

/*

Start the server ServerSocket[addr=0.0.0.0/0.0.0.0,localport=8080]

Client connection Socket[addr=/127.0.0.1,port=54036,localport=8080]

Client letter: 0

Client letter: 1

Client letter: 2

Client letter: 3

Client letter: 4

Client letter: 5

Client letter: 6

Client letter: 7

Client letter: 8

Client letter: 9

close

* */

import java.io.*;

import java.net.InetAddress;

import java.net.Socket;

public class Client_Socket {

public static void main(String[] args) throws IOException {

InetAddress addr = InetAddress.getByName(null);//null will find localhost by default

System.out.println("Address:"+addr);

Socket socket = new Socket(addr, Server_Socket.PORT);

try {

System.out.println("Socket="+socket);

BufferedReader in = new BufferedReader(new InputStreamReader(socket.getInputStream()));

PrintWriter out =

new PrintWriter(new BufferedWriter(new OutputStreamWriter(socket.getOutputStream())),true);

for (int i = 0; i < 10; i++) {

out.println("Client letter:"+i);// To the server

String str = in.readLine();

System.out.println(str);

}

out.println("END");

}finally {

System.out.println("close");

socket.close();

}

}

}

/*

Address: localhost/127.0.0.1

Socket=Socket[addr=localhost/127.0.0.1,port=8080,localport=54036]

The server replied to a letter from the client: 0

The server replies to the client's letter: 1

The server replies to the letter from the client: 2

The server replies to the letter from the client: 3

The server replies to the letter from the client: 4

The server replies to the letter from the client: 5

The server replies to the letter from the client: 6

The server replies to the letter from the client: 7

The server replies to the letter from the client: 8

The server replies to the letter from the client: 9

close

*/

The next part of the program creates the streams for reading and writing, except that the InputStream and OutputStream are created from the Socket object.

Using the two "converter" classes InputStreamReader and OutputStreamWriter, the InputStream and OutputStream objects are converted into Reader and Writer objects respectively.

You can also use the InputStream and OutputStream classes directly, but for output, using a Writer has an obvious advantage.

This advantage shows in PrintWriter, which has an overloaded constructor taking a second parameter: a boolean flag indicating whether to flush the output buffer automatically at the end of each println() call (but not print()). Every time output is written to out, its buffer must be flushed so that the information is actually sent over the network.

For the current example, flushing is particularly important, because the client and the server each wait for a line of text from the other before taking the next step. If no flush occurs, the information does not enter the network until the buffer is full, which would cause many problems for this program.

When writing network applications, pay special attention to the automatic flush mechanism. Each time the buffer is flushed, a packet must be created and sent.

In the current situation this is exactly what we want, because if the packet containing the line is not sent, the interaction between the server and the client stops.

In other words, the end of a line is the end of a message. In many other cases, however, messages are not delimited by lines, so it is better to skip automatic flushing and let the built-in buffering decide when to send a packet. That way larger packets are sent and processing is faster.

Note that, like almost all the streams we open, these are buffered. The infinite while loop reads lines of text from the BufferedReader in, writes them to System.out, and then writes to the PrintWriter out. Note that in and out could be any streams; they merely happen to be connected to the network. After the client sends a line containing "END", the program leaves the loop and closes the Socket.

If the client does not flush, the whole session hangs, because the initial "Client letter" line is never sent (the buffer is not full enough to trigger an automatic send).

BIO chat (thread pool)

The Server_Socket in the previous BIO example can only serve one client at a time. On the server we want to process the requests of multiple users at the same time. The solution is a thread pool.
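A minimal sketch of the thread-pool variant is given here, under stated assumptions: the class name `PooledBioServer`, the pool size of 2, the `maxClients` parameter and the loopback `demo()` helper are all invented for this illustration and are not the article's code.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class PooledBioServer {
    /** Runs on a pool thread: echoes lines until "END" or until the client disconnects. */
    static void handle(Socket socket) {
        try (Socket s = socket;
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(s.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(s.getOutputStream(), StandardCharsets.UTF_8), true)) {
            String line;
            while ((line = in.readLine()) != null && !line.equals("END")) {
                out.println("Server reply: " + line);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** accept() still blocks, but each accepted connection is handed to a pool thread. */
    static void serve(ServerSocket server, ExecutorService pool, int maxClients) throws IOException {
        for (int i = 0; i < maxClients; i++) {
            Socket client = server.accept();
            pool.submit(() -> handle(client));
        }
    }

    /** Loopback demo: starts the server, sends one line, returns the reply. */
    static String demo() throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try (ServerSocket server = new ServerSocket(0, 50, InetAddress.getLoopbackAddress())) {
            Thread acceptor = new Thread(() -> {
                try {
                    serve(server, pool, 1);
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
            acceptor.start();
            try (Socket socket = new Socket(InetAddress.getLoopbackAddress(), server.getLocalPort());
                 BufferedReader in = new BufferedReader(
                         new InputStreamReader(socket.getInputStream(), StandardCharsets.UTF_8));
                 PrintWriter out = new PrintWriter(
                         new OutputStreamWriter(socket.getOutputStream(), StandardCharsets.UTF_8), true)) {
                out.println("hello");
                String reply = in.readLine();
                out.println("END");
                acceptor.join();
                return reply;
            }
        } finally {
            pool.shutdown();
        }
    }
}
```

The accept loop never waits for a client to finish, so a slow client only ties up one pool thread. The number of clients served at once is still capped by the pool size, which is the limitation the NIO approach removes.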

1, Source code

Preliminary knowledge:

0 bitmap

fd_set is a 1024-bit bitmap indexed by the sock (descriptor) value. It is defined in include/linux/posix_types.h

// cat /usr/include/linux/posix_types.h

/*

0 Standard input

1 standard output

2 Standard error

3 listenfd

4 connfd1

5 connfd2

6 connfd3

. . .

*/

#undef __FD_SETSIZE

#define __FD_SETSIZE 1024

typedef struct {

unsigned long fds_bits[__FD_SETSIZE / (8 * sizeof(long))];

} __kernel_fd_set; // This is our fd_set: an array of longs holding 1024 bits in total; each sock corresponds to one of the bits

/* Type of a signal handler. */

typedef void (*__kernel_sighandler_t)(int);

/* Type of a SYSV IPC key. */

typedef int __kernel_key_t;

typedef int __kernel_mqd_t;

#include <asm/posix_types.h>

/* I had not looked at C for a long time and kept worrying about a shallow-copy problem like the one in Java. After reviewing C, however,

I found that structures can be assigned to each other, and what is assigned is the member data, not an address. Every member is copied by value, including array members (a pointer member still copies only the pointer).

In addition, although arrays cannot be assigned to each other directly in C (memcpy is generally used), arrays wrapped in a structure can be assigned directly. (Tips for using a structure to deep copy an array) https://blog.csdn.net/junkeal/article/details/86764461

C language structure initialization: https://blog.csdn.net/weixin_42445727/article/details/81191327

*/

#include <stdio.h>

struct date

{

int i;

float x;

} d1={10,12.5};

int main(void)

{

struct date d2;

d2=d1; // Structure assignment copies every member by value

printf("%d, %f\n",d2.i,d2.x);

return 0;

}

// The following code fails to compile: arrays cannot be assigned directly

int a[5] = {1,2,3,4,5};

int b[5];

b = a;

// The following code is completely correct, and the array is deep copied

typedef struct{

int a[10];

}S;

S s1 = { {1,2,3,4,5,6,7,8,9,0} }, s2;

s2 = s1;

//Print the following S1 and S2 respectively

Look at several macros that are used to manipulate an fd_set

//man FD_SET

NAME

select, pselect, FD_CLR, FD_ISSET, FD_SET, FD_ZERO - synchronous I/O multiplexing

SYNOPSIS

int select(int nfds,

fd_set *readfds, // File descriptors watched for reading

fd_set *writefds, // File descriptors watched for writing

fd_set *exceptfds, // File descriptors watched for exceptional conditions

struct timeval *timeout);

void FD_CLR(int fd, fd_set *set); // Clear the bit for fd (set it to 0)

int FD_ISSET(int fd, fd_set *set); // Test whether the bit for fd is set

void FD_SET(int fd, fd_set *set); // Set the bit for fd to 1

void FD_ZERO(fd_set *set); // Set all bits to 0

#include <sys/select.h>

int pselect(int nfds, fd_set *readfds, fd_set *writefds,

fd_set *exceptfds, const struct timespec *timeout,

const sigset_t *sigmask);

1 select function

man 2 select

Monitor multiple file descriptors and wait until one or more of them are ready for an IO operation

select() and pselect() allow a program to monitor multiple file descriptors, waiting until one or more of the file descriptors become "ready" for some class of I/O operation (e.g., input possible).

A file descriptor is considered ready if it is possible to perform a corresponding I/O operation (e.g., read(2) without blocking, or a sufficiently small write(2)).

Schematic diagram of linux select: https://www.processon.com/view/link/5f62b9a6e401fd2ad7e5d6d1

Detailed explanation of linux select() function

In Linux, we can use the select function to multiplex I/O. The parameters passed to select tell the kernel:

- 1. The file descriptors we care about

- 2. For each descriptor, the state we care about

- 3. How long we are willing to wait

After the kernel has traversed the file descriptors and select returns, the kernel tells us the following:

- 1. The number of descriptors that are ready (the return value of select())

  - Positive number: the number of ready file descriptors

  - 0: timeout

  - -1: error

- 2. Which descriptors are ready for each of the three conditions (read, write, exception). With this information we can call the appropriate I/O function (usually read or write), and these calls will not block.

Process:

- Create a set of sockets, fd_set. It is a bitmap structure 1024 bits long

- Add the listening socket and the client sockets to the fd_set

- select(maxfd+1, &fd_set, NULL, NULL, NULL)

- Use FD_ISSET to find the sockets in the fd_set that have events

- An event on the listening socket indicates a connection request from a new client; accept it and add the new client to the fd_set

- An event on a client socket:

  - Data readable: read the data

  - Socket disconnected: remove the socket from the fd_set. The fd_set is updated only when a client connects or disconnects

Linux select function interface

select disadvantages

- ① The bitmap has only 1024 bits

- ② select overwrites rset with the result, so rset has to be assigned again at the top of every while iteration; the fd_set is not reusable

- ③ Copying rset from user mode to kernel mode on every call takes time

- ④ On return you do not know which descriptor is ready and have to traverse them all, which is O(n)
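Disadvantages ② and ④ can be made concrete with a toy model. The sketch below does not call the real select(); it assumes a `java.util.BitSet` as a stand-in for fd_set and a hypothetical `fakeSelect` that, like select, overwrites the set in place with the ready descriptors. The descriptor numbers are arbitrary.

```java
import java.util.ArrayList;
import java.util.BitSet;
import java.util.List;

public class SelectLoopModel {
    /** Toy stand-in for select(): keeps only the ready bits in 'set' and returns their count. */
    static int fakeSelect(int nfds, BitSet set, BitSet readyNow) {
        set.and(readyNow);                      // the caller's set is modified in place
        return set.get(0, nfds).cardinality();
    }

    static BitSet bits(int... fds) {
        BitSet b = new BitSet(1024);
        for (int fd : fds) {
            b.set(fd);
        }
        return b;
    }

    /** Two rounds: fd 5 becomes ready first, then fd 7. Returns the descriptors noticed. */
    static List<Integer> run(boolean resetEachRound) {
        BitSet master = bits(3, 5, 6, 7);       // descriptors we want to watch
        int maxFd = 7;
        BitSet[] rounds = {bits(5), bits(7)};
        List<Integer> seen = new ArrayList<>();
        BitSet working = (BitSet) master.clone();
        for (BitSet readyNow : rounds) {
            if (resetEachRound) {
                working = (BitSet) master.clone();   // disadvantage 2: rebuild the set every time
            }
            int n = fakeSelect(maxFd + 1, working, readyNow);
            // Disadvantage 4: only a count comes back, so scan every descriptor.
            for (int fd = 0; fd <= maxFd && n > 0; fd++) {
                if (working.get(fd)) {
                    seen.add(fd);
                    n--;
                }
            }
        }
        return seen;
    }
}
```

With the set rebuilt each round, both descriptors are noticed. Without the rebuild, the second round starts from a set that only contains fd 5, so the event on fd 7 is silently missed.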

#include <sys/select.h>

// select() is the library function that Linux exposes to user programs, not the in-kernel implementation itself. The call traps into the kernel (historically via interrupt 0x80 on x86), switching the current process to kernel mode; at that point rset is copied into kernel space

int select(int nfds, // Number of bits of the fd_set bitmap to scan, so that not all 1024 bits have to be checked. Descriptors count from 0, so pass the highest descriptor plus 1

// A set of file descriptors is stored in the fd_set type, which is actually a bitmap

fd_set *readfds, // Read set of interest (readset). A bit set to 1 means the corresponding descriptor is monitored. The set is copied from user space to kernel space, where checking is efficient. While no data is available the kernel keeps waiting, so select is a blocking function. When data arrives, the bits of the ready descriptors stay set in rset and select returns. The caller then traverses the descriptors to see which ones are set and reads their data

fd_set *writefds, // Write set of interest (writeset)

fd_set *exceptfds, // Exception set of interest (exceptset)

struct timeval *timeout); // Waiting time: NULL blocks indefinitely, zero returns immediately, a positive value waits that long

// For example, to watch read events on sockets 5, 6 and 7: readfds=00000111000, writefds=0000000000

struct timeval { // Waiting time

/*

timeout == NULL: wait indefinitely

timeout->tv_sec == 0 && timeout->tv_usec == 0: do not wait, return immediately (non-blocking)

timeout->tv_sec != 0 || timeout->tv_usec != 0: wait for the specified time

*/

long tv_sec; // seconds

long tv_usec; // microseconds

};
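Java's `Selector` exposes the same three waiting modes, which makes them easy to try out. The sketch below is an analogy rather than a call to the C function; the class name and the 50 ms timeout are assumptions of this example.

```java
import java.io.IOException;
import java.nio.channels.Selector;

public class SelectTimeoutModes {
    /** Polls a selector with no registered channels; returns {selectNow result, timed result}. */
    static int[] pollEmptySelector() throws IOException {
        try (Selector selector = Selector.open()) {
            // Like a zeroed timeval: check once and return immediately.
            int immediate = selector.selectNow();

            // Like a positive timeval: wait at most 50 ms.
            int timed = selector.select(50);

            // selector.select() with no argument corresponds to timeout == NULL
            // and would block until a channel becomes ready, so it is not called here.
            return new int[] {immediate, timed};
        }
    }
}
```

Both calls report zero ready channels here, which matches the "0: timeout" return value of select().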

Linux provides some macros to make it easier to operate on the bitmaps passed to the select function above.

// These macros are general-purpose helpers for fd_set. They were not designed specifically for sockets, but sockets can use them.

#include <sys/select.h>

/* FD_ZERO sets all bits of an fd_set variable to 0 */

void FD_ZERO(fd_set *fdset);

/* FD_CLR clears the bit for fd */

void FD_CLR(int fd, fd_set *fdset);

/* FD_SET sets the bit for fd to 1 */

void FD_SET(int fd, fd_set *fdset);

/* FD_ISSET tests whether the bit for fd is set to 1 */

int FD_ISSET(int fd, fd_set *fdset);

The first argument of select (nfds, sometimes written maxfdp) is an integer giving the range of file descriptors to check: the maximum value of all file descriptors in the sets plus 1. It must be correct.


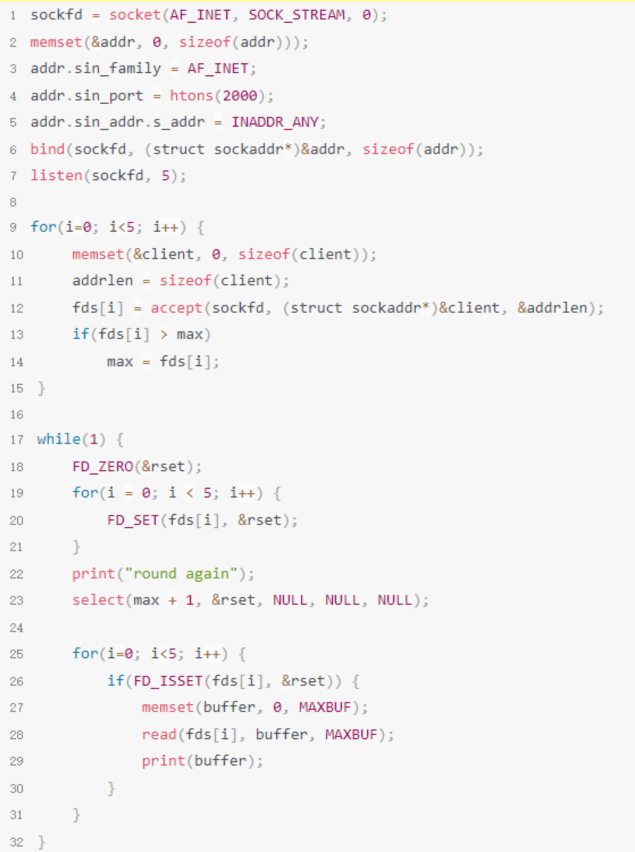
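A minimal sketch of how the macros fit together. The helper name `build_read_set` is an assumption made for this illustration and is not part of any API; it fills an fd_set from an array of descriptors and returns the value to pass as nfds.

```c
#include <assert.h>
#include <sys/select.h>

/* Hypothetical helper: clear the set, mark every descriptor in fds,
 * and return nfds (highest descriptor + 1) for a later select() call. */
int build_read_set(fd_set *set, const int *fds, int count)
{
    int maxfd = -1;

    FD_ZERO(set);                       /* start from an empty bitmap */
    for (int i = 0; i < count; i++) {
        /* fd_set cannot represent descriptors >= FD_SETSIZE (usually 1024) */
        assert(fds[i] >= 0 && fds[i] < FD_SETSIZE);
        FD_SET(fds[i], set);            /* set the bit for this descriptor */
        if (fds[i] > maxfd)
            maxfd = fds[i];
    }
    return maxfd + 1;
}
```

For descriptors 5, 6 and 7 the helper returns 8, and FD_ISSET reports bits 5 to 7 as set; FD_CLR(6, &set) then clears only bit 6.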

A C++ demo using select: as the code below shows, the set has to be cleared and refilled in a for loop before every call to select, and after select returns the descriptors are traversed again and read.

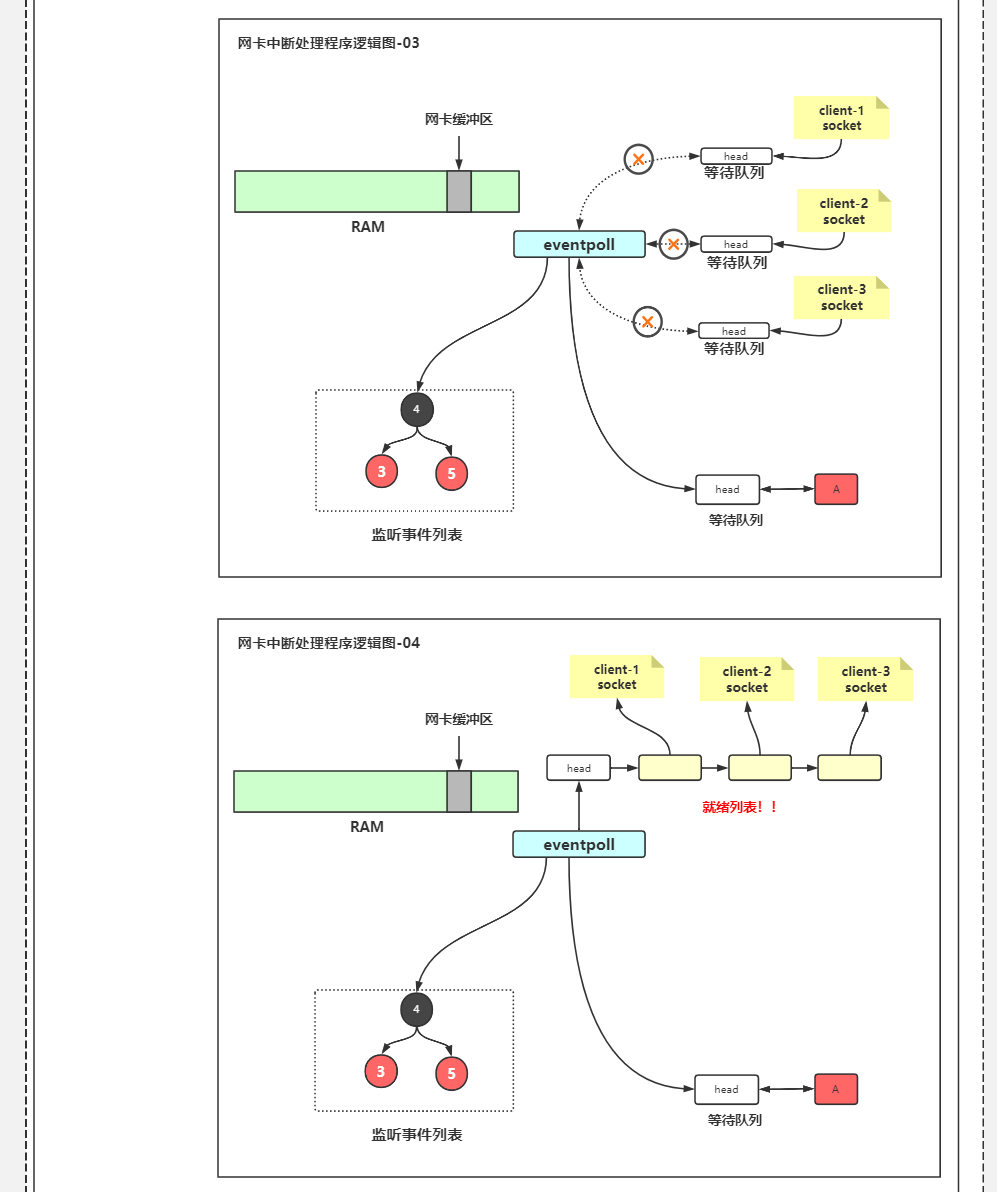

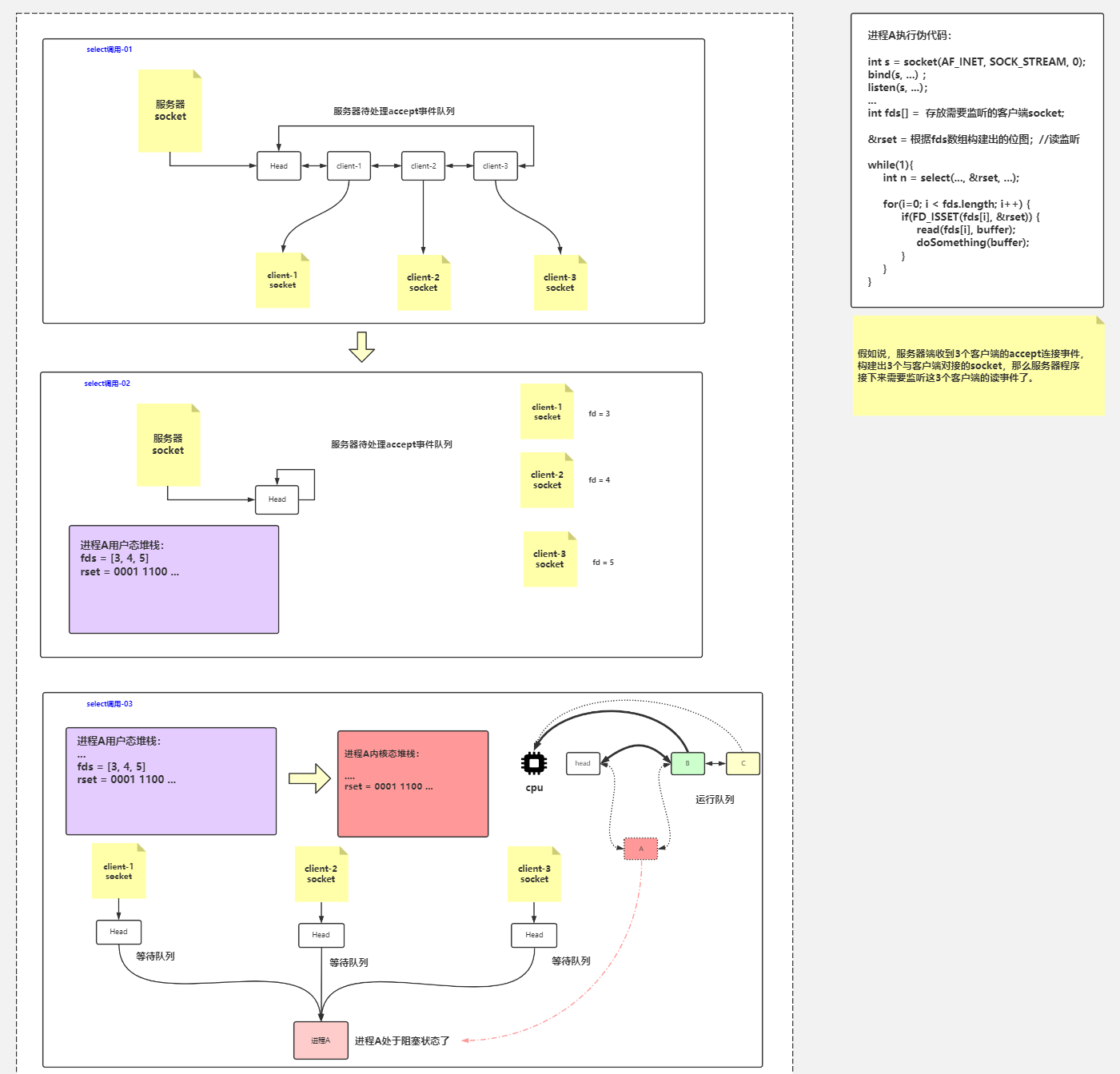
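For readers who cannot see the screenshots, here is a rough sketch of one round of that loop in plain C. The function name `select_read_round` and its parameters are assumptions for illustration; the sketch rebuilds the set, calls select, then scans every descriptor and reads from the ready ones.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/select.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: one select() round over `count` descriptors.
 * Returns the total bytes read into buf, 0 on timeout, -1 on select() error.
 * A negative timeout_ms blocks indefinitely. */
long select_read_round(const int *fds, int count, int timeout_ms,
                       char *buf, size_t buflen)
{
    fd_set rset;
    int maxfd = -1;

    /* select() overwrites the set, so it is rebuilt before every call. */
    FD_ZERO(&rset);
    for (int i = 0; i < count; i++) {
        assert(fds[i] >= 0 && fds[i] < FD_SETSIZE);
        FD_SET(fds[i], &rset);
        if (fds[i] > maxfd)
            maxfd = fds[i];
    }

    struct timeval tv;
    struct timeval *tvp = NULL;          /* NULL: block indefinitely */
    if (timeout_ms >= 0) {
        tv.tv_sec = timeout_ms / 1000;
        tv.tv_usec = (timeout_ms % 1000) * 1000;
        tvp = &tv;
    }

    int n = select(maxfd + 1, &rset, NULL, NULL, tvp);
    if (n <= 0)
        return n;                        /* 0: timeout, -1: error */

    /* select() only reports a count, so every descriptor is tested: O(n). */
    size_t total = 0;
    for (int i = 0; i < count && total < buflen; i++) {
        if (!FD_ISSET(fds[i], &rset))
            continue;
        ssize_t got = read(fds[i], buf + total, buflen - total);
        if (got > 0)
            total += (size_t)got;
    }
    return (long)total;
}
```

A server would call this inside `while (1)`; the same shape works for sockets, pipes, or any other descriptor below FD_SETSIZE.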

select process

- When three connection requests arrive, the server takes them from the accept queue, establishes the connections, and then reads from them.

- Process A switches from user mode to kernel mode and then blocks.

- When data arrives, DMA copies it from the network card into a kernel buffer and a hardware interrupt is raised, so the CPU enters kernel mode:

- The CPU saves the user-mode stack information to pd before entering kernel mode.

- It modifies the CPU registers so that the stack pointer points to the kernel stack of the current process.

- It looks up the matching interrupt handler in the vector table by IRQ vector.

- It executes the network card's interrupt handler.

- The kernel traverses the monitored descriptors to find out which ones the data belongs to.

- It wakes up process A, and process A moves to the ready queue.

2 poll

linux epoll function: https://www.processon.com/view/link/5f6034210791295dccbc1426

- poll is essentially the same as select: it manages multiple file descriptors by polling them and acts on each descriptor's status.

- However, poll has no fixed limit on the maximum number of file descriptors.

- select passes a bitmap between user mode and kernel mode, whereas poll passes an array.

- poll shares a disadvantage with select: the whole array of file descriptors is copied between user and kernel address space on every call, whether or not the descriptors have events, so the overhead grows linearly with the number of file descriptors.

- In addition, after poll returns, the caller still has to traverse the entire array to find the descriptors with events.

- Thread unsafe

- It removes the descriptor-count limit of select

int poll(struct pollfd *fds, // pointer to an array of structures; the count is not unlimited, it is bounded by ulimit -n, e.g. 100001

nfds_t nfds, // number of elements in the fds array

int timeout); // in milliseconds; a negative value blocks indefinitely and 0 returns immediately

struct pollfd {

int fd; // file descriptor; a negative value is ignored, so every element of the array can be initialized with fd = -1

short events; // requested events, i.e. the events of interest, such as read and write

short revents; // returned events, filled in by the kernel; 0 when nothing happened

};

//man poll

The events are:

POLLIN: There is data to read.

POLLPRI: There is some exceptional condition on the file descriptor. Possibilities include:

* There is out-of-band data on a TCP socket (see tcp(7)).

* A pseudoterminal master in packet mode has seen a state change on the slave (see ioctl_tty(2)).

* A cgroup.events file has been modified (see cgroups(7)).

POLLOUT: Writing is now possible, though a write larger than the available space in a socket or pipe will still block (unless O_NONBLOCK is set).

POLLRDHUP

POLLERR

POLLHUP

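while(1){

puts("round again");

poll(pollgds,5,5000); // 5 elements, timeout of 5000 ms

for(i=0;i<5;i++){

// check pollgds[i].revents here and handle the descriptors that have events

}

}

poll is also a blocking call.

It uses pollfd structures instead of a bitmap.

The kernel only writes to revents, so the array can be reused across calls.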


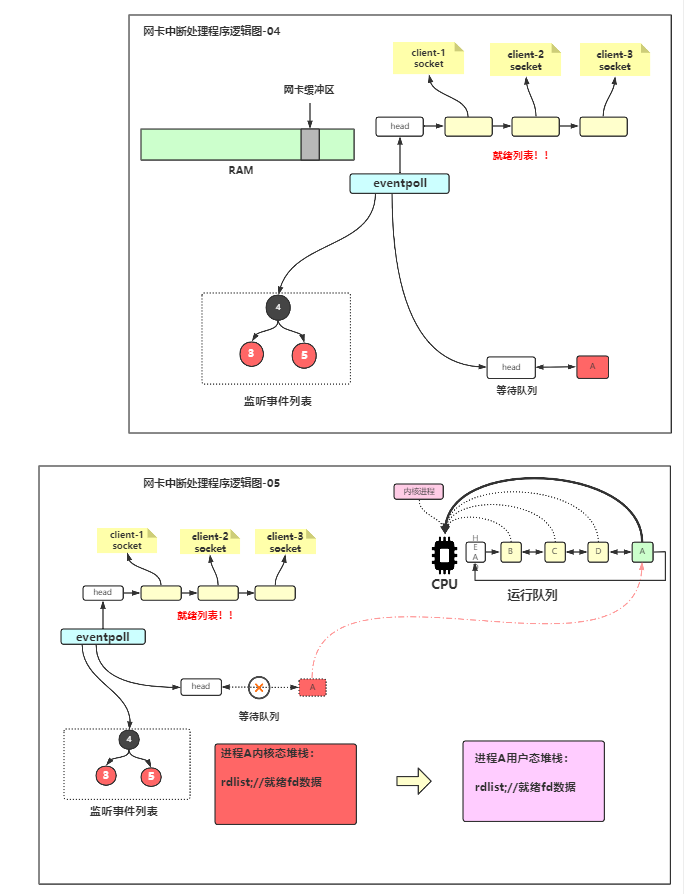
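The same idea with poll can be sketched as follows. The helper names `watch_for_read` and `poll_read_once` are assumptions for this illustration. Note that the array is filled once and reused, because poll only writes to revents.

```c
#include <assert.h>
#include <poll.h>
#include <stddef.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: prepare the array once; it can be reused for every
 * poll() call because the kernel only writes to revents. */
void watch_for_read(struct pollfd *pfds, const int *fds, nfds_t count)
{
    assert(pfds != NULL || count == 0);
    for (nfds_t i = 0; i < count; i++) {
        pfds[i].fd = fds[i];       /* a negative fd makes poll() skip the slot */
        pfds[i].events = POLLIN;   /* interested in readability */
        pfds[i].revents = 0;
    }
}

/* Hypothetical helper: wait up to timeout_ms (milliseconds, -1 blocks) and
 * read from the first readable descriptor.
 * Returns bytes read, 0 on timeout, -1 on error. */
ssize_t poll_read_once(struct pollfd *pfds, nfds_t count, int timeout_ms,
                       char *buf, size_t buflen)
{
    int n = poll(pfds, count, timeout_ms);
    if (n <= 0)
        return n;

    /* poll() returns only a count, so the array is still scanned: O(n). */
    for (nfds_t i = 0; i < count; i++) {
        if (pfds[i].revents & POLLIN)
            return read(pfds[i].fd, buf, buflen);
    }
    return 0;   /* only non-POLLIN events such as POLLHUP or POLLERR */
}
```

Unlike the select version, nothing has to be rebuilt between rounds; the loop simply calls `poll_read_once` again with the same array.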

3 epoll function

Schematic diagram of linux epoll: https://www.processon.com/view/link/5f62f98f5653bb28eb434add

epoll was introduced in the 2.6 kernel as an enhanced version of select and poll. Compared with them, epoll is more flexible and has no descriptor limit. epoll uses one file descriptor to manage many descriptors and stores the events the user cares about in an event table inside the kernel, so each registration is copied from user space to kernel space only once.

- Returns only the events that occurred, so there is no need to traverse the whole fd set

- The events of the file descriptors the user cares about are stored in the kernel and only need to be copied once

- Thread safe

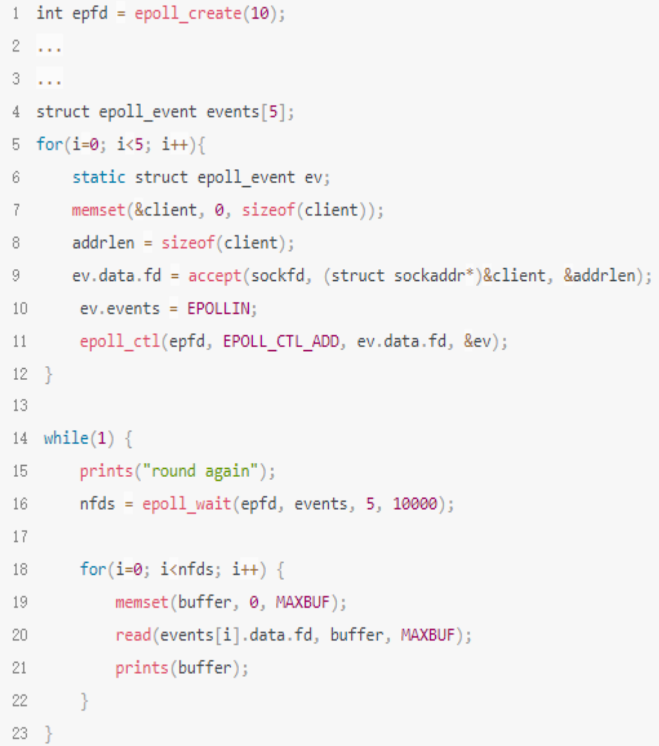

epoll interface

epoll requires three interfaces: epoll_create, epoll_ctl, and epoll_wait.

// For example, nginx first has fd 6 for its serverSocket, then calls epoll_create, which returns fd 8; epoll_ctl(8, 6) makes 8 track 6, and then epoll_wait(8) lets the kernel report which events have arrived

#include <sys/epoll.h>

int epoll_create(int size); // creates an epoll handle, which is itself a file descriptor. It sets up a space in the kernel, comparable to the earlier rset and called the epoll space here; it is reached through the returned fd and holds the list of events to manage. size was a hint for how many sockets would be managed (since Linux 2.6.8 it is ignored but must be greater than 0)

// Add, delete and modify the entries in that space, i.e. register the fd events to monitor

int epoll_ctl(int epfd, // the fd of the epoll instance to change; the function uses this number to find the corresponding epoll structure

int op, // operation type

int fd, // which socket to add, delete or modify

struct epoll_event *event);

// Waiting for an event to occur is a blocking operation

// The epoll space also holds a ready list; epoll_wait reads from that ready list

int epoll_wait( // the return value is the number of ready events

int epfd,

struct epoll_event *events, // the sockets with events are placed into this array

int maxevents, // length of the events array, because a C array does not carry its length

int timeout); // 0 means non-blocking, a positive number is the waiting time in milliseconds, and -1 blocks indefinitely


struct epoll_event {

uint32_t events; /* Epoll events */

epoll_data_t data; /* User data variable */

};

// man epoll

events can be a combination of the following macros:

EPOLLIN, // read

EPOLLOUT, // write

EPOLLPRI,

EPOLLERR,

EPOLLHUP (hang up)

EPOLLET (edge triggered)

EPOLLONESHOT (report only once: after one event has been delivered, the fd is disabled in the epoll list and must be re-armed with EPOLL_CTL_MOD)


Because the epoll space lives in the kernel, the descriptor set does not have to be copied back and forth between user space and the kernel on every call.

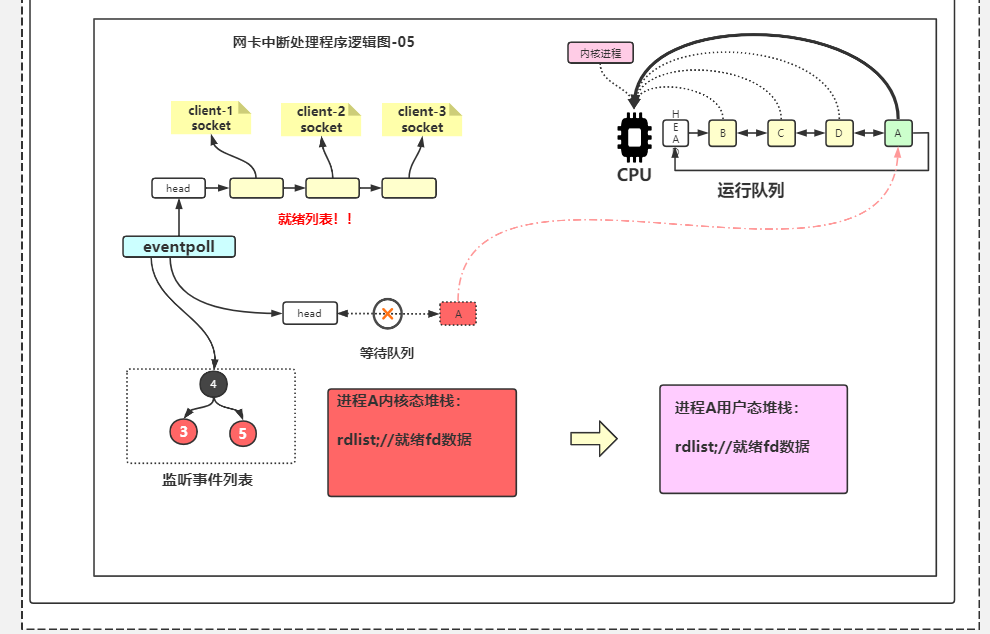

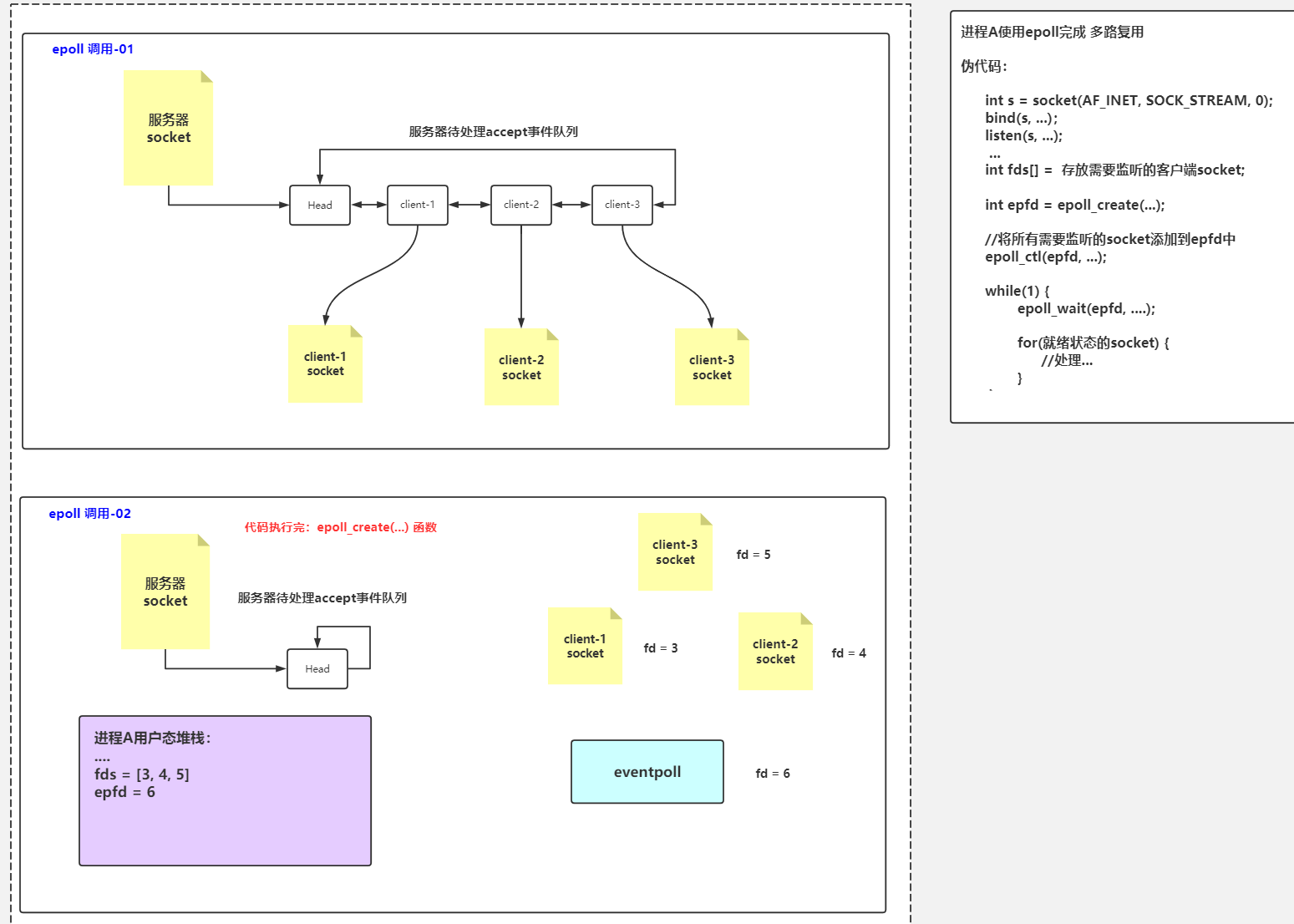

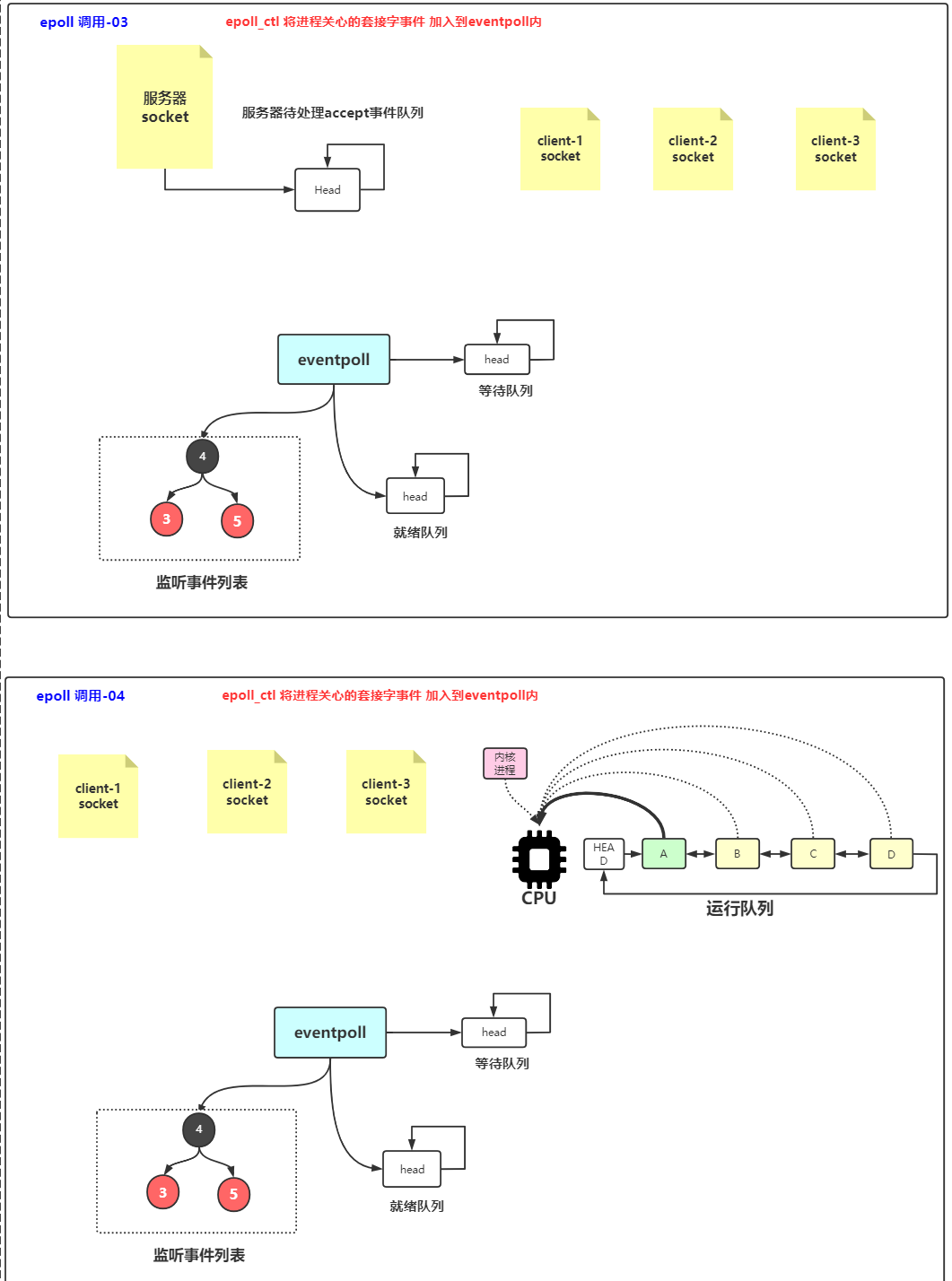
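A rough sketch of how the three calls combine, assuming a Linux system. The helper names `epoll_open_for_read` and `epoll_ready_fds` and the batch size of 16 are assumptions for this illustration. Registration happens once; each wait returns only the descriptors that are ready.

```c
#include <assert.h>
#include <sys/epoll.h>
#include <unistd.h>

#define MAX_BATCH 16   /* illustrative cap on events fetched per call */

/* Hypothetical helper: create an epoll instance and register every fd for
 * EPOLLIN. Returns the epoll fd, or -1 on failure. */
int epoll_open_for_read(const int *fds, int count)
{
    int epfd = epoll_create(1);          /* size is ignored but must be > 0 */
    if (epfd < 0)
        return -1;

    for (int i = 0; i < count; i++) {
        struct epoll_event ev = {0};
        ev.events = EPOLLIN;
        ev.data.fd = fds[i];             /* handed back by epoll_wait() */
        if (epoll_ctl(epfd, EPOLL_CTL_ADD, fds[i], &ev) < 0) {
            close(epfd);
            return -1;
        }
    }
    return epfd;
}

/* Hypothetical helper: copy the ready descriptors into `ready`.
 * Returns how many were ready, 0 on timeout, -1 on error. */
int epoll_ready_fds(int epfd, int *ready, int max_ready, int timeout_ms)
{
    struct epoll_event events[MAX_BATCH];

    assert(max_ready > 0);
    if (max_ready > MAX_BATCH)
        max_ready = MAX_BATCH;

    int n = epoll_wait(epfd, events, max_ready, timeout_ms);
    for (int i = 0; i < n; i++)          /* only ready entries, no full scan */
        ready[i] = events[i].data.fd;
    return n;
}
```

Compared with the select and poll loops, the interest list is handed to the kernel once, and the loop after `epoll_wait` touches only entries that actually have events.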

epoll process

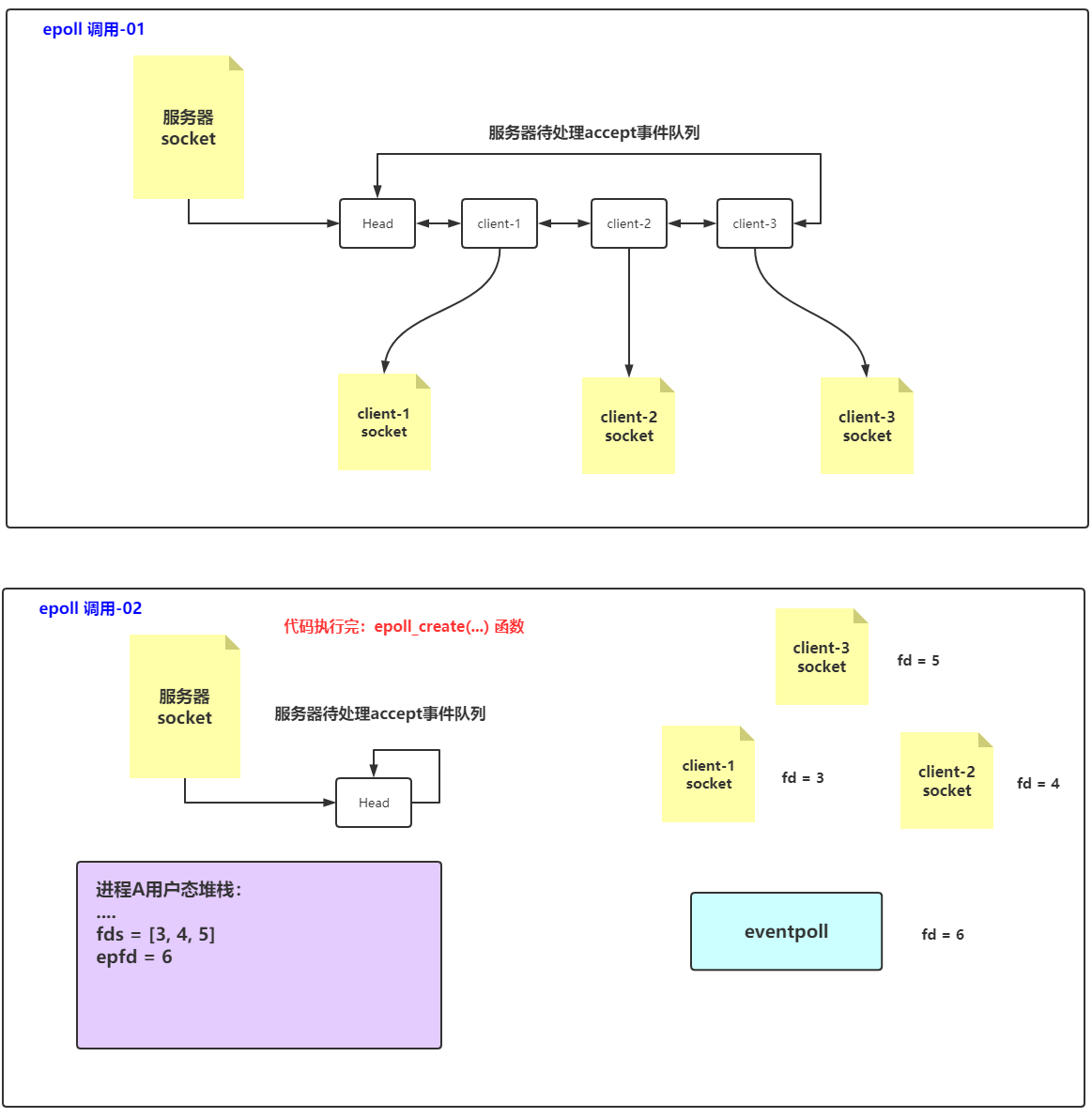

(1) epoll_create is a system call that sets up a new space in kernel space, which can be thought of as the epoll structure space. Its return value is the file descriptor of the epoll instance, which the subsequent operations use.

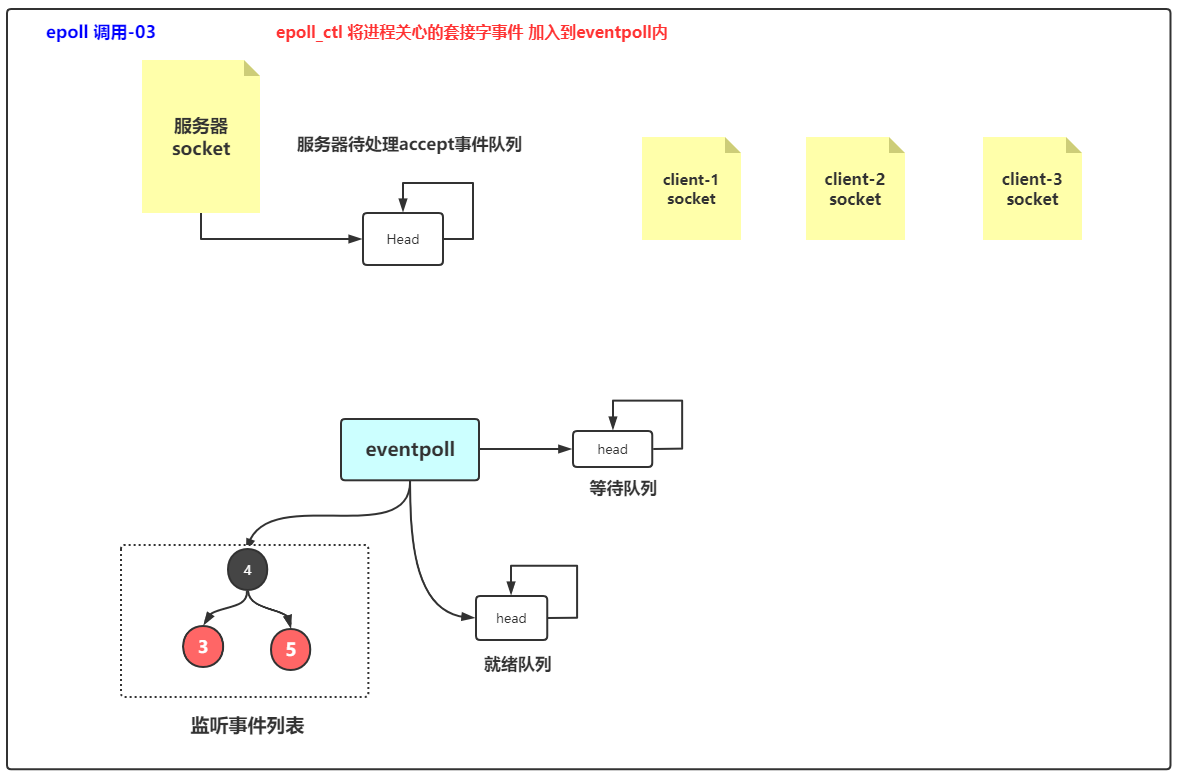

(2) epoll_ctl is epoll's event registration function. epoll differs from select here: select specifies the descriptors and events to monitor on every call, whereas epoll first registers the descriptor events the user cares about in the epoll space. epoll_ctl is a non-blocking function that is only used to add, delete, and modify descriptor entries in the epoll space.

- Parameter 1: epfd, the process's fd number for the epoll structure. The function uses this number to find the corresponding epoll structure.

- Parameter 2: op, the request type, defined by three macros: EPOLL_CTL_ADD (register a new fd into epfd), EPOLL_CTL_MOD (modify the monitored events of a registered fd), EPOLL_CTL_DEL (delete an fd from epfd).

- Parameter 3: fd, the file descriptor to be monitored, generally a socket_fd.

- Parameter 4: event, which tells the kernel which events on this fd are of interest.
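The three op values can be tried out with a small sketch that uses EPOLLONESHOT, since that flag needs EPOLL_CTL_MOD to re-arm a descriptor. The helper names `epoll_update` and `oneshot_read` are assumptions for this illustration, not library functions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/epoll.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: apply one epoll_ctl() operation (EPOLL_CTL_ADD,
 * EPOLL_CTL_MOD or EPOLL_CTL_DEL) for fd with the given event mask. */
int epoll_update(int epfd, int op, int fd, uint32_t events)
{
    struct epoll_event ev = {0};

    assert(op == EPOLL_CTL_ADD || op == EPOLL_CTL_MOD || op == EPOLL_CTL_DEL);
    ev.events = events;
    ev.data.fd = fd;
    /* The event argument is ignored for EPOLL_CTL_DEL, but passing a valid
     * pointer also works on very old kernels. */
    return epoll_ctl(epfd, op, fd, &ev);
}

/* Hypothetical helper: handle at most one ready descriptor registered with
 * EPOLLONESHOT: read from it, then re-arm it with EPOLL_CTL_MOD.
 * Returns bytes read, 0 if nothing was ready, -1 on error. */
ssize_t oneshot_read(int epfd, char *buf, size_t buflen, int timeout_ms)
{
    struct epoll_event ev;
    int n = epoll_wait(epfd, &ev, 1, timeout_ms);
    if (n <= 0)
        return n;

    int fd = ev.data.fd;
    ssize_t got = read(fd, buf, buflen);

    /* After reporting one event the kernel disabled this fd; re-arm it. */
    if (epoll_update(epfd, EPOLL_CTL_MOD, fd, EPOLLIN | EPOLLONESHOT) < 0)
        return -1;
    return got;
}
```

A descriptor is first registered with `epoll_update(epfd, EPOLL_CTL_ADD, fd, EPOLLIN | EPOLLONESHOT)` and removed again with EPOLL_CTL_DEL once it is no longer of interest.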

(3) epoll_wait waits for events to occur, similar to a select() call. Whether it blocks depends on the timeout parameter.

- Parameter 1: epfd, the epoll instance that holds the list of events of interest.

- Parameter 2: events, a pointer that must point to an array of epoll_event structures. When the function returns, the kernel has copied the ready events into this array.

- Parameter 3: maxevents, the maximum number of entries that the epoll_event array of parameter 2 can hold, that is, the maximum number of ready events this call can return.

- Parameter 4: timeout, in milliseconds.

- 0: return immediately; this is a non-blocking call.

- -1: block until an event of interest to the user is ready.

- >0: block; if an event becomes ready within the specified time the call returns early, otherwise it returns after the specified time.

- Return value: the number of fd ready this time.
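The effect of the timeout values can be observed with a small timing sketch. The helper name `timed_epoll_wait` is an assumption for this illustration; it calls epoll_wait once and reports how long the call took, measured with a monotonic clock.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 200809L   /* for clock_gettime() in strict -std modes */
#endif
#include <assert.h>
#include <stddef.h>
#include <sys/epoll.h>
#include <time.h>

/* Hypothetical helper: call epoll_wait() once on epfd and return the elapsed
 * time in milliseconds. The return value of epoll_wait() goes to *result. */
long timed_epoll_wait(int epfd, int timeout_ms, int *result)
{
    struct epoll_event ev;
    struct timespec start, end;

    assert(result != NULL);
    clock_gettime(CLOCK_MONOTONIC, &start);
    *result = epoll_wait(epfd, &ev, 1, timeout_ms);
    clock_gettime(CLOCK_MONOTONIC, &end);

    return (end.tv_sec - start.tv_sec) * 1000L
         + (end.tv_nsec - start.tv_nsec) / 1000000L;
}
```

On an epoll instance with nothing ready, a timeout of 0 returns 0 almost immediately, while a timeout of 100 returns 0 only after roughly 100 ms; a timeout of -1 would not return until an event arrives.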

Working mode