Definition of Neural Network

In machine learning and cognitive science, an artificial neural network (ANN) is a mathematical or computational model that imitates the structure and function of biological neural networks (the central nervous system of animals, especially the brain) and is used to estimate or approximate functions.

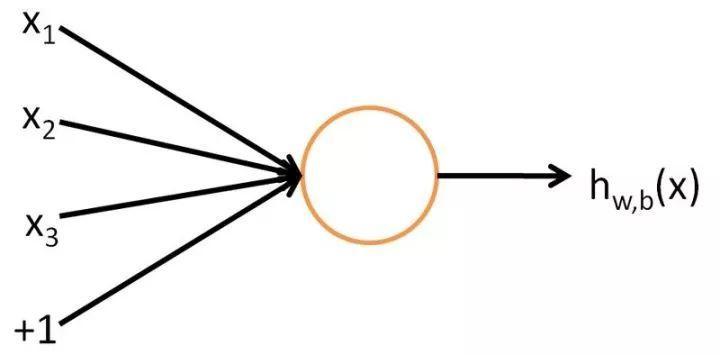

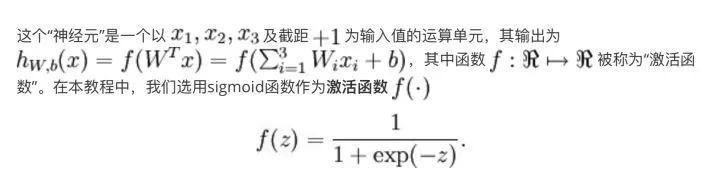

To describe neural networks, we start with the simplest one, which consists of a single "neuron". The following is a diagram of this "neuron":

When the activation function is the sigmoid function, the input-output mapping of a single "neuron" is exactly a logistic regression.
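
To make the link to logistic regression concrete, here is a minimal sketch of one neuron in plain Python, assuming a sigmoid activation. The weights, bias and input values are hypothetical numbers chosen only for illustration, and the function names are not taken from any library.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x, w, b):
    """One neuron: sigmoid of the weighted sum of the inputs plus a bias."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

# Hypothetical weights, bias and input (illustration only).
w = [0.5, -0.25, 0.1]
b = 0.0
x = [1.0, 2.0, 3.0]

# Weighted sum: 0.5*1 - 0.25*2 + 0.1*3 + 0 = 0.3, so the output is sigmoid(0.3).
output = neuron(x, w, b)
print(output)
```

This is the same formula logistic regression uses to turn a linear score into a probability, so training such a neuron on labelled data amounts to fitting a logistic regression.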

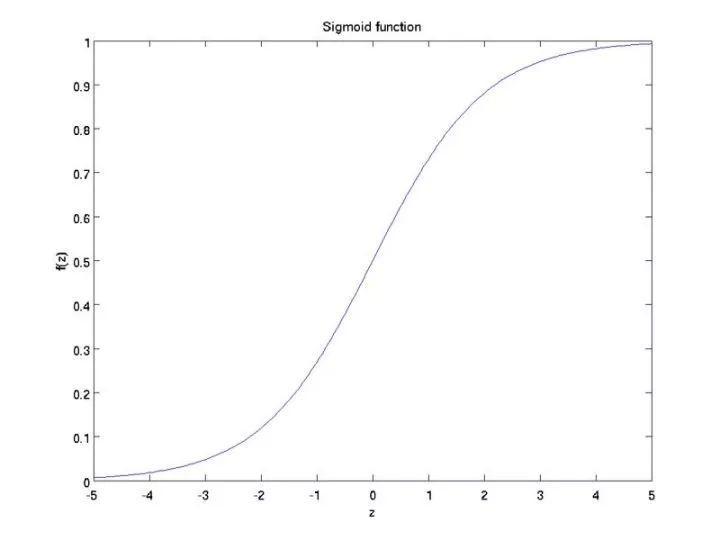

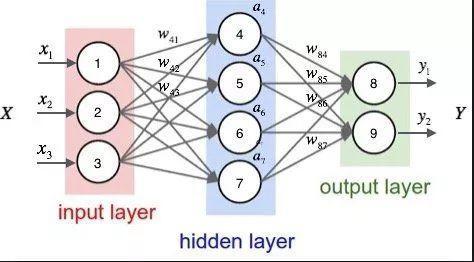

Neural Network Model

A neural network connects many single "neurons" together, so that the output of one "neuron" can serve as the input of another. For example, the following figure shows a simple neural network:

Keras in practice

Keras is used to implement the following network structure and train the model:

The input values (x1, x2, x3) represent height, weight and age, and the output values (y1, y2) are the predicted probabilities of the two gender classes.

    import numpy as np

    # 1000 students in total, half of them boys.
    n = 1000

    # All body measurements are standardized: mean 0, standard deviation 1.
    tizhong = np.random.normal(size=n)   # weight
    shengao = np.random.normal(size=n)   # height
    nianling = np.random.normal(size=n)  # age

    # Gender labels: the first 500 students are boys, encoded as 1 (girls are 0).
    gender = np.zeros(n)
    gender[:500] = 1

    # Boys are heavier, so shift the boys' weights up by 1.
    tizhong[:500] += 1

    # Boys are taller, so shift the boys' heights up by 1.
    shengao[:500] += 1

    # Boys are on the younger side, so shift the boys' ages down by 1.
    nianling[:500] -= 1

Create the model

    from keras import Sequential
    from keras.layers import Dense, Activation

    model = Sequential()

    # Hidden layer: four neurons, three input values
    model.add(Dense(4, input_dim=3, kernel_initializer='random_normal', name="Dense1"))

    # The hidden layer uses the ReLU activation function
    model.add(Activation('relu', name="hidden"))

    # Output layer: two neurons (the input size is inferred from the previous layer)
    model.add(Dense(2, kernel_initializer='random_normal', name="Dense2"))

    # The output layer uses the softmax activation function
    model.add(Activation('softmax', name="output"))
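
What this model computes for one sample can be sketched in plain Python: a fully connected layer with four neurons, ReLU, a fully connected layer with two neurons, then softmax. This is only an illustration of the arithmetic, not how Keras implements it. The parameter values are hypothetical, untrained small random numbers, and the row-per-neuron weight layout is an assumption made for readability.

```python
import math
import random

def dense(x, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output neuron."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """ReLU activation: negative values become 0."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Softmax activation: turns scores into probabilities that sum to 1."""
    m = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical, untrained parameters (illustration only).
random.seed(0)
w1 = [[random.gauss(0, 0.05) for _ in range(3)] for _ in range(4)]  # 4 neurons x 3 inputs
b1 = [0.0] * 4
w2 = [[random.gauss(0, 0.05) for _ in range(4)] for _ in range(2)]  # 2 neurons x 4 inputs
b2 = [0.0] * 2

def forward(x):
    """Forward pass: 3 inputs -> 4 hidden values (ReLU) -> 2 probabilities (softmax)."""
    hidden = relu(dense(x, w1, b1))
    return softmax(dense(hidden, w2, b2))

y = forward([0.0, 0.0, 0.0])
print(y)
```

With all inputs and biases at zero, both output scores are zero, so the two probabilities are equal. Training adjusts the weights and biases so that the probabilities move toward the correct class.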

Compile the model

The optimizer and the loss function must be specified when compiling:

    model.compile(optimizer='rmsprop', loss='categorical_crossentropy', metrics=['accuracy'])

Train the model

    from keras import utils

    # Convert the gender labels to one-hot format
    gender_one_hot = utils.to_categorical(gender, num_classes=2)

    # Stack the body measurements into one matrix, one row per student;
    # the columns follow the input order (x1, x2, x3) = height, weight, age
    data = np.array([shengao, tizhong, nianling]).T

    # Train the model
    model.fit(data, gender_one_hot, epochs=10, batch_size=8)

Output:

    Epoch 1/10
    1000/1000 [==============================] - 0s 235us/step - loss: 0.6743 - acc: 0.7180
    Epoch 2/10
    1000/1000 [==============================] - 0s 86us/step - loss: 0.6162 - acc: 0.7310
    Epoch 3/10
    1000/1000 [==============================] - 0s 88us/step - loss: 0.5592 - acc: 0.7570
    Epoch 4/10
    1000/1000 [==============================] - 0s 87us/step - loss: 0.5162 - acc: 0.7680
    Epoch 5/10
    1000/1000 [==============================] - 0s 89us/step - loss: 0.4867 - acc: 0.7770
    Epoch 6/10
    1000/1000 [==============================] - 0s 88us/step - loss: 0.4663 - acc: 0.7830
    Epoch 7/10
    1000/1000 [==============================] - 0s 87us/step - loss: 0.4539 - acc: 0.7890
    Epoch 8/10
    1000/1000 [==============================] - 0s 86us/step - loss: 0.4469 - acc: 0.7920
    Epoch 9/10
    1000/1000 [==============================] - 0s 88us/step - loss: 0.4431 - acc: 0.7940
    Epoch 10/10
    1000/1000 [==============================] - 0s 88us/step - loss: 0.4407 - acc: 0.7900
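
The one-hot conversion applied to the gender labels before training can be illustrated without Keras. The helper below, to_one_hot, is a hypothetical stand-in written for this sketch and is not the Keras implementation; it only shows the shape of the result for two classes.

```python
def to_one_hot(labels, num_classes):
    """Turn integer class labels into one-hot rows (one row per label)."""
    rows = []
    for label in labels:
        row = [0.0] * num_classes
        row[int(label)] = 1.0  # mark the position of the true class
        rows.append(row)
    return rows

# Label 1 = boy, label 0 = girl, as in the gender array.
labels = [1, 1, 0, 0]
encoded = to_one_hot(labels, num_classes=2)
print(encoded)  # [[0.0, 1.0], [0.0, 1.0], [1.0, 0.0], [1.0, 0.0]]
```

Each row has a single 1 in the column of its class, which is the format the categorical cross-entropy loss expects for the two softmax outputs.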

Prediction

    test_data = np.array([[0, 0, 0]])
    probability = model.predict(test_data)

    # Column 0 is the probability of class 0 (girl), column 1 of class 1 (boy)
    if probability[0, 0] > 0.5:
        print('Girl student')
    else:
        print('Boy student')

Output:

    Girl student

Keyword Interpretation

input_dim: the number of input dimensions (features).

kernel_initializer: the method used to initialize the layer's weights, usually a normal distribution (random_normal in the code above).

batch_size: during training, the sample data is divided into several small batches; the number of samples in each batch is called batch_size.

epochs: one pass over all of the training data is called one round (epoch). epochs sets how many rounds of training are run in total.

optimizer: the optimization algorithm, which can be understood as the gradient-based method used to update the weights.

loss: the loss function, which can be understood as a measure of the difference between estimated and observed values. The smaller the difference, the smaller the loss.

metrics: similar to loss, but metrics do not take part in the gradient calculation. They are only indicators for measuring how well the algorithm performs. Classification models commonly use accuracy.
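
Several of these keywords can be made concrete with a little arithmetic. The sketch below counts the weight updates implied by batch_size and epochs for the 1000-sample data set, then computes a categorical cross-entropy loss and an accuracy value by hand. The y_true and y_pred values are hypothetical and the functions are simplified illustrations, not the Keras implementations.

```python
import math

# batch_size and epochs: how many weight updates the training run performs,
# assuming one update per batch.
n_samples, batch_size, epochs = 1000, 8, 10
steps_per_epoch = math.ceil(n_samples / batch_size)  # batches in one round
total_updates = steps_per_epoch * epochs             # updates over all rounds

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over samples of -sum(true * log(predicted))."""
    losses = [-sum(t * math.log(max(p, eps)) for t, p in zip(t_row, p_row))
              for t_row, p_row in zip(y_true, y_pred)]
    return sum(losses) / len(losses)

def accuracy(y_true, y_pred):
    """Fraction of samples whose most probable class is the true class."""
    hits = sum(t.index(max(t)) == p.index(max(p))
               for t, p in zip(y_true, y_pred))
    return hits / len(y_true)

# Hypothetical one-hot labels and predicted probabilities (illustration only).
y_true = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
y_pred = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]]

loss_value = categorical_crossentropy(y_true, y_pred)
acc_value = accuracy(y_true, y_pred)
print(steps_per_epoch, total_updates)  # 125 1250
print(loss_value, acc_value)
```

The third sample is misclassified, so the accuracy is 2/3, while the loss also reflects how confident each prediction was. That is why the loss can keep changing between rounds even when the accuracy barely moves.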
