Neural network: implementing the residual network ResNet18 in PyTorch

Preface

This ResNet18 implementation follows the approach to building ResNet taught in the reference video 👉 https://www.bilibili.com/video/BV1154y1S7WC?share_source=copy_web

If there are errors or other problems, please point them out!!!

In addition, this post only introduces the implementation approach and does not explain ResNet itself in detail.

ResNet18 structure introduction

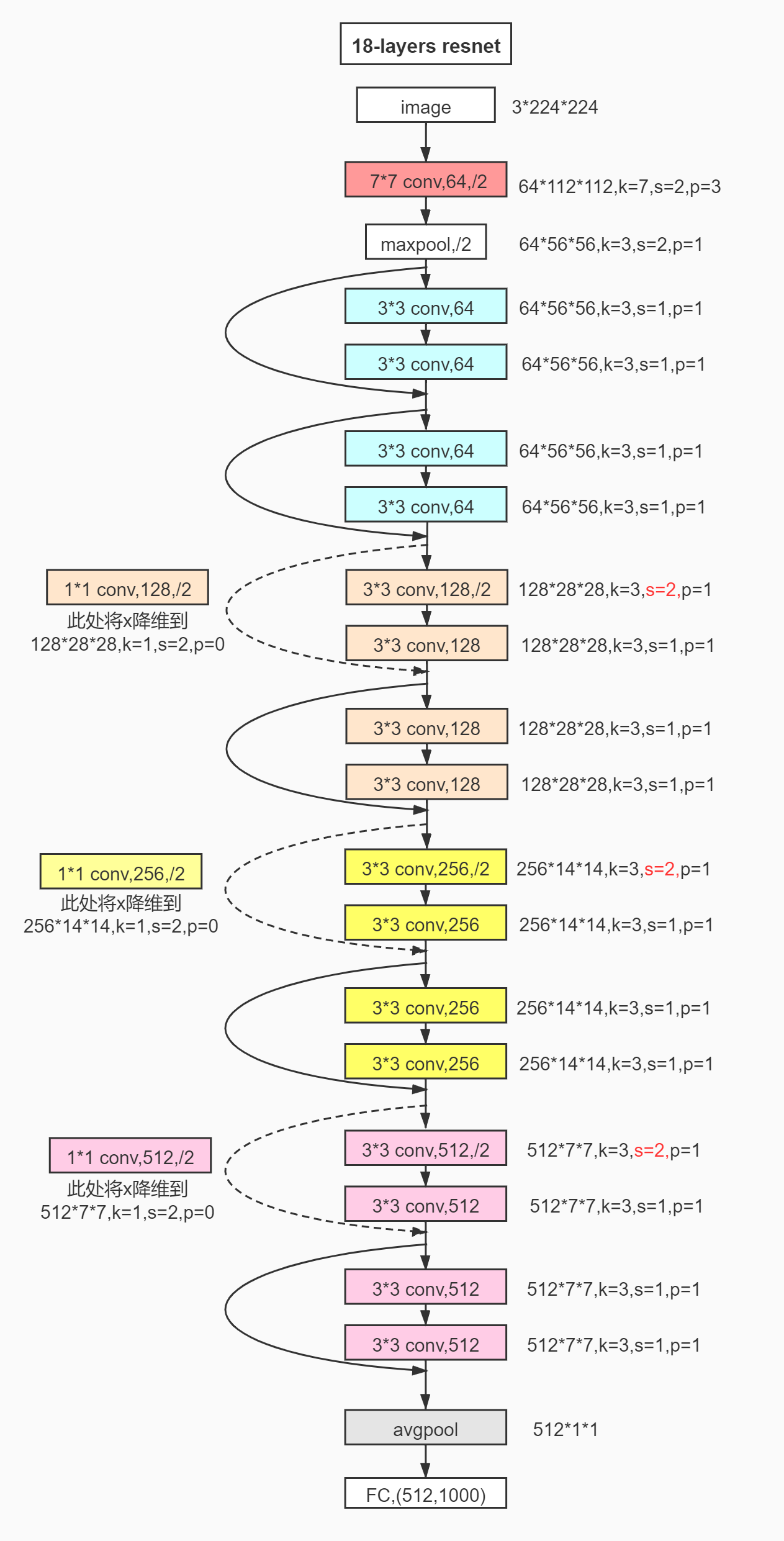

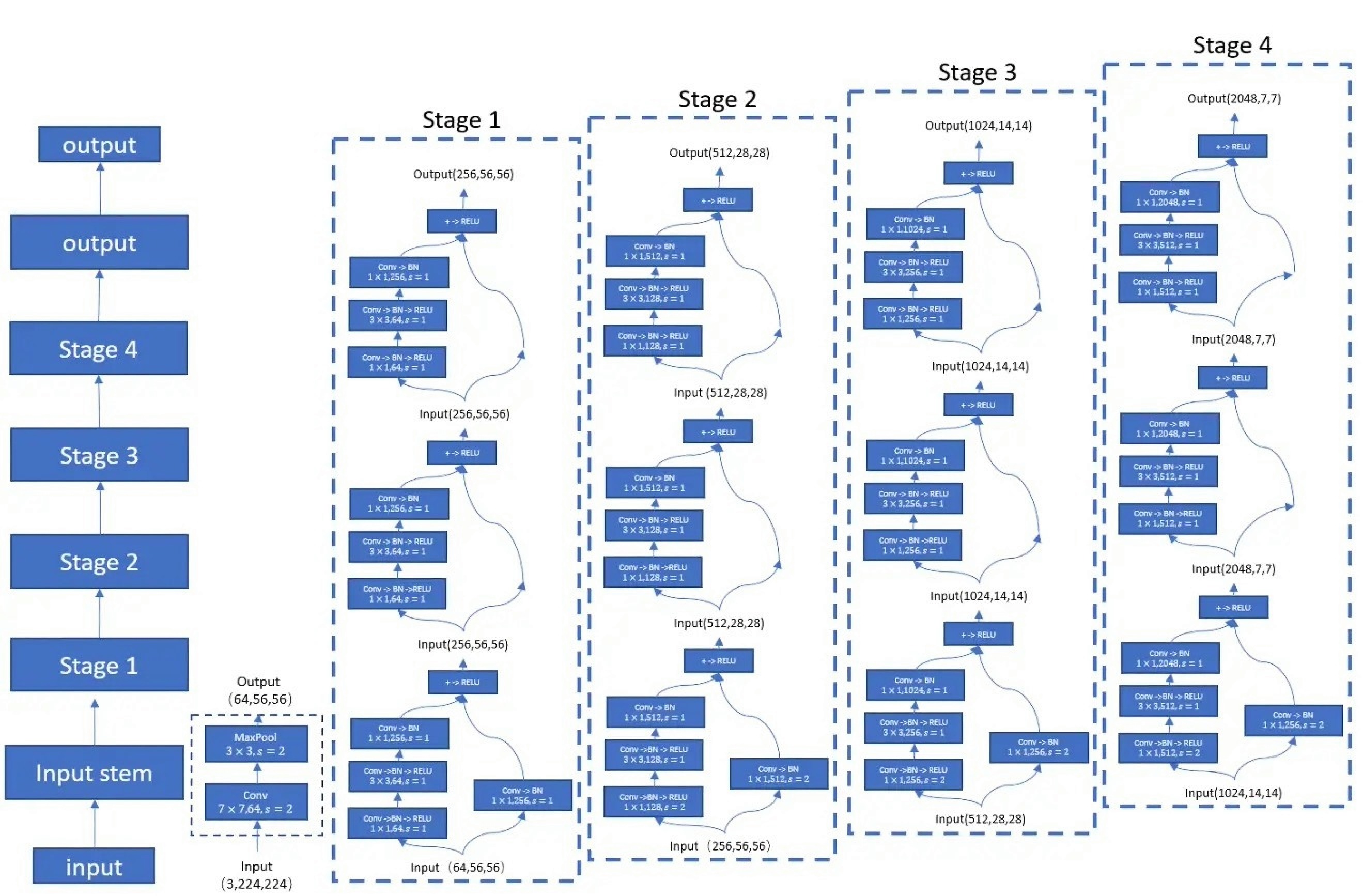

The overall structure of the network is shown in the figure below.

In the figure, the eight blocks inside the red boxes are what characterizes this network: the residual structure.

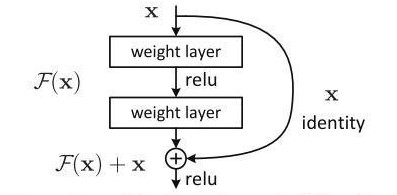

Residual structure

As shown in the figure, the final output is built from two parts, the processed result F(x) and the original input x, which are added element-wise: F(x) + x.
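A minimal PyTorch sketch of the residual structure, assuming a hypothetical `TinyResidual` module whose F(x) is two 3x3 convolutions that keep the shape unchanged (so F(x) and x can be added element-wise), with a ReLU applied after the addition as in the implementation code of this post:

```python
import torch
import torch.nn as nn


class TinyResidual(nn.Module):
    """Hypothetical minimal residual unit: y = ReLU(F(x) + x)."""

    def __init__(self, ch):
        super().__init__()
        # F(x): conv -> BN -> ReLU -> conv -> BN; padding=1 keeps H and W unchanged
        self.f = nn.Sequential(
            nn.Conv2d(ch, ch, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(ch),
            nn.ReLU(),
            nn.Conv2d(ch, ch, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(ch),
        )
        self.relu = nn.ReLU()

    def forward(self, x):
        # The residual edge carries x unchanged and is added to F(x)
        return self.relu(self.f(x) + x)


x = torch.rand(2, 8, 16, 16)
y = TinyResidual(8)(x)
print(y.shape)  # torch.Size([2, 8, 16, 16])
```

The addition only works because F(x) has exactly the same shape as x; the Conv Block described next exists for the cases where it does not.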

ResNet18

Looking back at the overall structure diagram, the blocks in the red boxes are not all identical. There are two types: Identity Block and Conv Block.

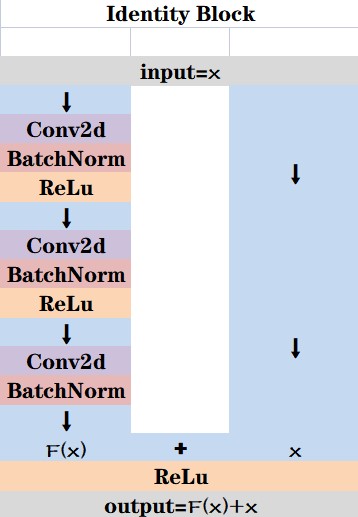

Identity Block

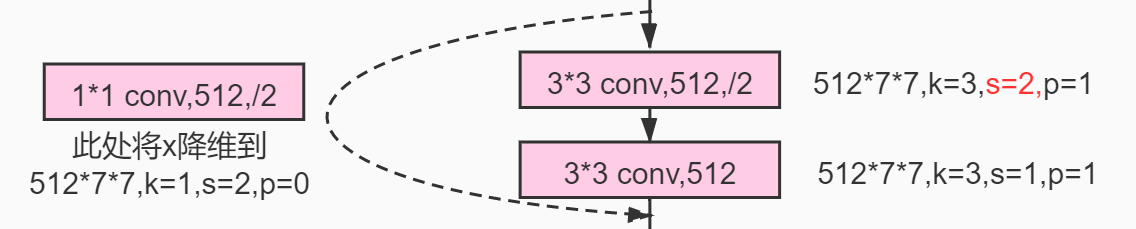

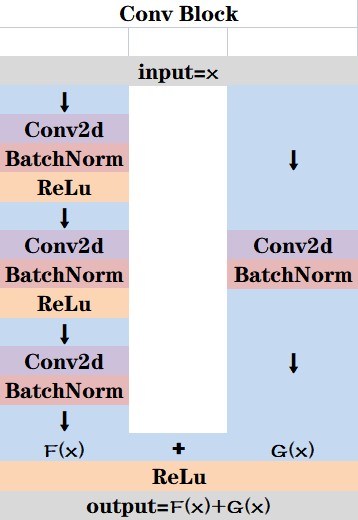

Conv Block

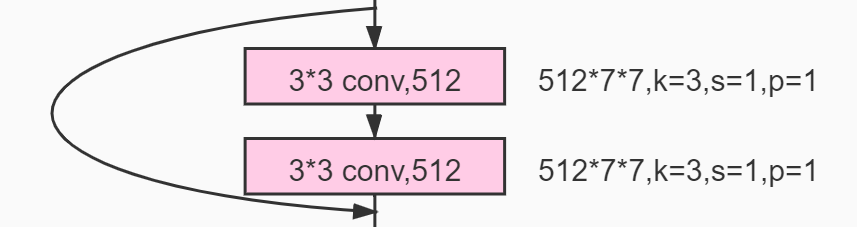

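The structures of the two blocks are shown in the figures. Their main path on the left is the same: [convolution, batch normalization, activation function] twice, followed by [convolution, batch normalization] once. (This three-convolution main path is the ResNet50 layout; in ResNet18, and in the code in this post, the main path is one [convolution, batch normalization, activation function] followed by one [convolution, batch normalization].)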

The difference lies in the residual edge on the right: the Identity Block adds the original input directly to the result of the main path, while the Conv Block first applies [convolution, batch normalization] to the original input and then adds it to the result of the main path.
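To see why the Conv Block needs that extra [convolution, batch normalization], here is a minimal sketch assuming the shapes at the entrance of Stage2 of ResNet18 (64 channels, 56x56) and the two-convolution main path used in the implementation code of this post. The main path halves the spatial size and doubles the channels, so the raw x can no longer be added to F(x):

```python
import torch
import torch.nn as nn

x = torch.rand(1, 64, 56, 56)  # assumed input of the first block in Stage2

# Main path: the first 3x3 conv uses stride=2 and doubles the channels
main = nn.Sequential(
    nn.Conv2d(64, 128, kernel_size=3, stride=2, padding=1, bias=False),
    nn.BatchNorm2d(128),
    nn.ReLU(),
    nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1, bias=False),
    nn.BatchNorm2d(128),
)
f_x = main(x)
print(f_x.shape)  # torch.Size([1, 128, 28, 28])

# Identity-style residual edge: adding the raw x fails because the shapes differ
try:
    f_x + x
    identity_failed = False
except RuntimeError:
    identity_failed = True
print("identity edge fails:", identity_failed)  # identity edge fails: True

# Conv-Block-style residual edge: a 1x1 conv + BN maps x to the shape of F(x)
side = nn.Sequential(
    nn.Conv2d(64, 128, kernel_size=1, stride=2, bias=False),
    nn.BatchNorm2d(128),
)
out = torch.relu(f_x + side(x))
print(out.shape)  # torch.Size([1, 128, 28, 28])
```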


Comparison between ResNet18 and ResNet50 networks

Before starting the implementation, here are the structural terms (Stage, Bottleneck, residual edge) that will be used later.

Similarities

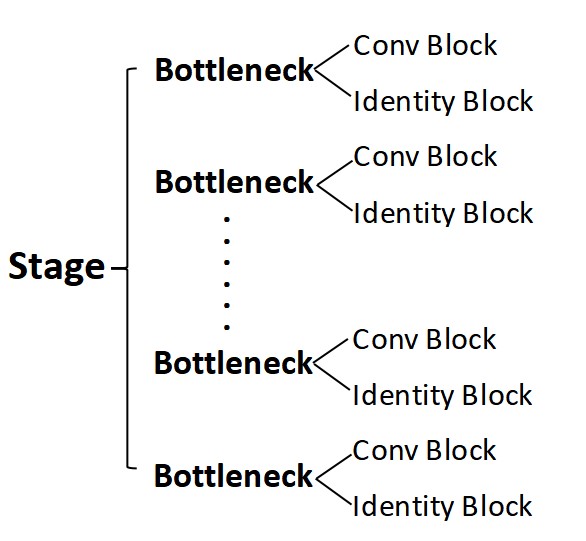

C1: dividing the network into Stages

This picture comes from the reference video.

The video's author groups the Bottlenecks (both Identity Blocks and Conv Blocks) that have the same number of output channels into one Stage, which gives four Stages, each with a different number of output channels.

Comparing ResNet18 with ResNet50 in the same way, ResNet18 can also be divided into four Stages.
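The four-Stage split can be written down as plain data. This pure-Python sketch assumes the standard configurations (ResNet18: two blocks per Stage with two 3x3 convolutions each; ResNet50: [3, 4, 6, 3] Bottlenecks with three convolutions each). The helper `weighted_layers` is hypothetical; it counts the stem convolution, every main-path convolution and the final fully connected layer, which is where the names 18 and 50 come from (the 1x1 residual-edge convolutions are not counted):

```python
# name: (blocks per Stage, convolutions per block, output channels per Stage)
CONFIGS = {
    "ResNet18": ([2, 2, 2, 2], 2, [64, 128, 256, 512]),
    "ResNet50": ([3, 4, 6, 3], 3, [256, 512, 1024, 2048]),
}


def weighted_layers(name):
    """Stem conv + all main-path convs + final fc (residual-edge convs excluded)."""
    blocks, convs_per_block, _ = CONFIGS[name]
    return 1 + convs_per_block * sum(blocks) + 1


for name, (blocks, _, channels) in CONFIGS.items():
    for i, (n, ch) in enumerate(zip(blocks, channels), start=1):
        print(f"{name} Stage{i}: {n} blocks, {ch} output channels")
    print(f"{name}: {weighted_layers(name)} weighted layers")
```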

C2: handling of the residual edge

The residual edge is handled in two steps. First, when the Bottleneck is created: if it is a Conv Block, the layers that process x ([convolution, batch normalization]) are stored in side; if it is an Identity Block, side = None.

Then, in the forward pass, the residual edge is initialized as residual = x (the initial input), and side is checked. If side is not None, the residual edge needs processing, so residual = side(x); if side is None, the residual edge is left unprocessed and residual is still x.
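The side/residual logic of C2 can be isolated in a small, hypothetical `Residual` wrapper (not part of the reference code): `body` stands in for the main path, and `side` is either None (Identity Block) or a module that processes x (Conv Block). Single convolutions stand in for the real main path to keep the sketch short:

```python
from typing import Optional

import torch
import torch.nn as nn


class Residual(nn.Module):
    """Hypothetical wrapper: out = ReLU(body(x) + residual)."""

    def __init__(self, body: nn.Module, side: Optional[nn.Module] = None):
        super().__init__()
        self.body = body
        self.side = side

    def forward(self, x):
        residual = x                   # the residual edge starts as the raw input
        if self.side is not None:      # Conv Block: the edge is processed
            residual = self.side(x)
        return torch.relu(self.body(x) + residual)


x = torch.rand(1, 64, 56, 56)
identity_block = Residual(nn.Conv2d(64, 64, kernel_size=3, padding=1))
conv_block = Residual(
    nn.Conv2d(64, 128, kernel_size=3, stride=2, padding=1),
    side=nn.Conv2d(64, 128, kernel_size=1, stride=2),
)
print(identity_block(x).shape)  # torch.Size([1, 64, 56, 56])
print(conv_block(x).shape)      # torch.Size([1, 128, 28, 28])
```

Testing `is not None` rather than `!= None` is the idiomatic way to check for the missing side branch.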

Differences

D1: in ResNet18, every Stage has the same number of Bottlenecks

- In ResNet50, the number of Bottlenecks differs from Stage to Stage; in each Stage the first one is a Conv Block and the rest are Identity Blocks.

Therefore, the author builds each Stage in two parts: 1) the first Conv Block is created separately, and 2) the remaining Identity Blocks are created in a loop. The per-Stage block count block_num is read from the list [3, 4, 6, 3], and the loop runs block_num - 1 times to create the remaining Identity Blocks.

```python
# Conv Block
conv_block = block(self.inplane, midplane, stride=stride, downsample=downsample)

# Identity Block
for i in range(1, block_num):
    block_list.append(block(self.inplane, midplane, stride=1))
```

- In ResNet18, every Stage has the same number of Bottlenecks (two), so no list or loop is needed and the two blocks can be created directly:

```python
block1 = block(in_ch, self.out_ch, stride=stride, side=side)
block2 = block(self.out_ch, self.out_ch, stride=1, side=None)
```
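The two-part ResNet50 construction from D1 can be checked with a small pure-Python sketch that only records block types. The helper name `resnet50_stage_plan` is hypothetical; its inner loop uses the same bounds as `range(1, block_num)` in the ResNet50 excerpt:

```python
def resnet50_stage_plan(layers=(3, 4, 6, 3)):
    """Hypothetical helper: list the block types of each Stage, ResNet50-style."""
    plan = []
    for block_num in layers:
        stage = ["Conv Block"]             # first block, built separately
        for _ in range(1, block_num):      # the remaining block_num - 1 blocks
            stage.append("Identity Block")
        plan.append(stage)
    return plan


plan = resnet50_stage_plan()
for i, stage in enumerate(plan, start=1):
    print(f"Stage{i}: {len(stage)} blocks, {stage.count('Identity Block')} Identity Blocks")
```

For ResNet18 the per-Stage list would simply be [2, 2, 2, 2], which is why writing block1 and block2 out by hand is enough there.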

D2: the first Bottleneck of Stage1 in ResNet18 is an Identity Block rather than a Conv Block

- In ResNet50, an Identity Block has two characteristics:

1) stride=1

2) its number of input channels equals its number of final output channels, i.e. the number of output channels of its intermediate [convolution, batch normalization, activation function] layers * 4

A Conv Block violates at least one of these two characteristics, so the input x on its residual edge has to be processed:

```python
if stride != 1 or self.inplane != midplane*block.extention:
    downsample = nn.Sequential(
        nn.Conv2d(self.inplane, midplane*block.extention, kernel_size=1, stride=stride, bias=False),
        nn.BatchNorm2d(midplane*block.extention)
    )
```

- In ResNet18, the Identity Block and the Conv Block can be distinguished by stride alone, because the number of channels only changes in the blocks that also use stride=2:

```python
if stride != 1:
    side = nn.Sequential(
        nn.Conv2d(in_ch, self.out_ch, kernel_size=1, stride=stride, bias=False),
        nn.BatchNorm2d(self.out_ch)
    )
```
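A pure-Python sketch (the helper names are hypothetical) that applies both conditions to the first block of each Stage makes D2 concrete: in ResNet50 even Stage1's first block needs downsample, because its 64 input channels differ from 64 * 4 = 256, whereas in ResNet18 Stage1 has stride 1 and an unchanged channel count:

```python
EXTENTION = 4  # ResNet50 Bottleneck expansion (spelled as in the source code)


def resnet50_needs_downsample(inplane, midplane, stride):
    # Same condition as the ResNet50 excerpt: stride or channel mismatch
    return stride != 1 or inplane != midplane * EXTENTION


def resnet18_needs_side(stride):
    # ResNet18: the channel count only changes together with stride=2
    return stride != 1


strides = [1, 2, 2, 2]

# ResNet50: check the first block of each Stage, updating inplane like make_layer
inplane, r50 = 64, []
for midplane, stride in zip([64, 128, 256, 512], strides):
    r50.append(resnet50_needs_downsample(inplane, midplane, stride))
    inplane = midplane * EXTENTION

r18 = [resnet18_needs_side(s) for s in strides]
print("ResNet50 first blocks need downsample:", r50)  # [True, True, True, True]
print("ResNet18 first blocks need side:", r18)        # [False, True, True, True]
```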

D3: in the last three Stages of ResNet18, the number of output channels of Conv1 = number of input channels * 2, and the number of input channels of Conv2 = the number of output channels of Conv1

- In ResNet50, the author defines the initial number of input channels `self.inplane = 64` for Stage1, and passes each Stage's `midplane` (the number of output channels of the first two Convs of every Bottleneck in that Stage) directly to make_layer:

```python
class Resnet(nn.Module):
    # Initialize the structure and parameters of the network
    def __init__(self, block, layers, num_classes=1000):
        # self.inplane is the current number of feature-map channels
        self.inplane = 64
        # The second parameter is midplane, the intermediate dimension of each Bottleneck
        self.stage1 = self.make_layer(self.block, 64, self.layers[0], stride=1)
        self.stage2 = self.make_layer(self.block, 128, self.layers[1], stride=2)
        self.stage3 = self.make_layer(self.block, 256, self.layers[2], stride=2)
        self.stage4 = self.make_layer(self.block, 512, self.layers[3], stride=2)
```

For each Bottleneck in a Stage, `midplane` * 4 = number of output channels of Conv3 = final number of output channels of the Stage.

The number of input channels of a Bottleneck differs between the Conv Block and the Identity Blocks:

Number of input channels of the Conv Block = final number of output channels of the previous Stage = midplane * 4 of the previous Stage (for Stage1, the 64 channels coming out of the stem)

Number of input channels of an Identity Block = number of output channels of the Conv Block = midplane * 4 of this Stage

So, right after the Conv Block is created, the number of input channels `self.inplane` is updated:

```python
class Bottleneck(nn.Module):
    # Multiple of dimension expansion in each stage
    extention = 4

    # Define initialized network and parameters
    def __init__(self, inplane, midplane, stride, downsample=None):
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(inplane, midplane, kernel_size=1, stride=stride, bias=False)
        self.bn1 = nn.BatchNorm2d(midplane)
        self.conv2 = nn.Conv2d(midplane, midplane, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(midplane)
        self.conv3 = nn.Conv2d(midplane, midplane*self.extention, kernel_size=1, stride=1, bias=False)
        self.bn3 = nn.BatchNorm2d(midplane*self.extention)
        self.relu = nn.ReLU(inplace=False)


class Resnet(nn.Module):
    def make_layer(self, block, midplane, block_num, stride):
        # Conv Block
        conv_block = block(self.inplane, midplane, stride=stride, downsample=downsample)
        self.inplane = midplane*block.extention
        # Identity Block
        for i in range(1, block_num):
            block_list.append(block(self.inplane, midplane, stride=1))
```

- In ResNet18, I only define the number of input channels `self.in_ch = 64` and the number of output channels `self.out_ch = 64` for Stage1; the numbers of channels for the later Stages are calculated from them:

```python
class Resnet(nn.Module):
    def __init__(self, block, num_classes=1000):
        self.in_ch = 64
        self.out_ch = 64
        # block network
        self.stage1 = self.make_layer(self.block, self.in_ch, stride=1)
        self.stage2 = self.make_layer(self.block, self.in_ch, stride=2)
        self.stage3 = self.make_layer(self.block, self.in_ch, stride=2)
        self.stage4 = self.make_layer(self.block, self.in_ch, stride=2)
```

The number of input and output channels of each Conv in the first Stage is the same, so no adjustment is needed.

Starting from the second Stage, in each Stage:

the number of input channels of Conv1 = the final number of output channels of the previous Stage;

the number of output channels of Conv1 = its number of input channels * 2;

the number of input channels of Conv2 = the number of output channels of Conv2 = the number of output channels of Conv1.

```python
class Bottleneck(nn.Module):
    extention = 2

    # Specific network structure in Stage (bias is redundant before BatchNorm)
    def __init__(self, in_ch, out_ch, stride, side):
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(in_ch, out_ch, kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_ch)
        self.conv2 = nn.Conv2d(out_ch, out_ch, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_ch)
        self.relu = nn.ReLU(inplace=False)


class Resnet(nn.Module):
    def make_layer(self, block, in_ch, stride):
        block1 = block(in_ch, self.out_ch, stride=stride, side=side)
        block2 = block(self.out_ch, self.out_ch, stride=1, side=None)
```

Therefore, the numbers of channels are adjusted at the end of each Stage:

```python
self.in_ch = self.out_ch
self.out_ch = self.in_ch * block.extention
```
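The channel bookkeeping of both networks can be traced with a short pure-Python sketch. The function names are hypothetical; each loop body copies the update rule described above (`self.in_ch` / `self.out_ch` for ResNet18, `self.inplane` for ResNet50), and the value left at the end is what the final fully connected layer receives:

```python
def resnet18_channels(extention=2):
    """Trace (in_ch, out_ch) of each Stage the way the ResNet18 make_layer does."""
    in_ch, out_ch, stages = 64, 64, []
    for _ in range(4):
        stages.append((in_ch, out_ch))
        in_ch = out_ch                  # self.in_ch = self.out_ch
        out_ch = in_ch * extention      # self.out_ch = self.in_ch * block.extention
    return stages, in_ch                # in_ch is what the final fc layer receives


def resnet50_channels(extention=4):
    """Trace (input channels, midplane, output channels) of each ResNet50 Stage."""
    inplane, stages = 64, []
    for midplane in [64, 128, 256, 512]:
        stages.append((inplane, midplane, midplane * extention))
        inplane = midplane * extention  # self.inplane update after the Conv Block
    return stages, inplane


r18, fc_in18 = resnet18_channels()
r50, fc_in50 = resnet50_channels()
print(r18, fc_in18)  # [(64, 64), (64, 128), (128, 256), (256, 512)] 512
print(r50, fc_in50)  # [(64, 64, 256), (256, 128, 512), (512, 256, 1024), (1024, 512, 2048)] 2048
```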

Implementation code

```python
import torch
import torch.nn as nn


class Bottleneck(nn.Module):
    # Channel multiplier between consecutive Stages
    extention = 2

    def __init__(self, in_ch, out_ch, stride, side):
        super(Bottleneck, self).__init__()
        # Specific network structure in Stage (bias is redundant before BatchNorm)
        self.conv1 = nn.Conv2d(in_ch, out_ch, kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_ch)
        self.conv2 = nn.Conv2d(out_ch, out_ch, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_ch)
        self.relu = nn.ReLU(inplace=False)
        # Processing for the residual edge; None for an Identity Block
        self.side = side

    def forward(self, x):
        # Initial input of residual edge
        residual = x
        # Main path: [conv, BN, ReLU] then [conv, BN]; no ReLU before the addition
        out = self.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        # Is the input x of the residual edge processed? (Conv Block)
        if self.side is not None:
            residual = self.side(x)
        out += residual
        out = self.relu(out)
        return out


class Resnet(nn.Module):
    def __init__(self, block, num_classes=1000):
        super(Resnet, self).__init__()
        self.block = block
        # Number of initial input/output channels of Stage1
        self.in_ch = 64
        self.out_ch = 64
        # Network of stage0
        self.conv1 = nn.Conv2d(3, self.out_ch, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(self.out_ch)
        self.relu = nn.ReLU()
        self.maxpooling = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        # 4 Stage networks in the middle (make_layer updates self.in_ch / self.out_ch)
        self.stage1 = self.make_layer(self.block, self.in_ch, stride=1)
        self.stage2 = self.make_layer(self.block, self.in_ch, stride=2)
        self.stage3 = self.make_layer(self.block, self.in_ch, stride=2)
        self.stage4 = self.make_layer(self.block, self.in_ch, stride=2)
        # Subsequent classification processing network
        # A 224x224 input is 7x7 after stage4 (224 / 32), so a 7x7 average pool gives 1x1
        self.avgpool = nn.AvgPool2d(7)
        # After the four make_layer calls, self.in_ch == 512
        self.fc = nn.Linear(self.in_ch, num_classes)

    def make_layer(self, block, in_ch, stride):
        block_list = []
        # Determine whether it is Identity Block or Conv Block, so as to determine the residual edge processing value
        if stride != 1:
            side = nn.Sequential(
                nn.Conv2d(in_ch, self.out_ch, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(self.out_ch))
        else:
            side = None
        block1 = block(in_ch, self.out_ch, stride=stride, side=side)
        block_list.append(block1)
        block2 = block(self.out_ch, self.out_ch, stride=1, side=None)
        block_list.append(block2)
        # Channels for the next Stage
        self.in_ch = self.out_ch
        self.out_ch = self.in_ch * block.extention
        return nn.Sequential(*block_list)

    def forward(self, x):
        # Implementation of stage0
        out = self.maxpooling(self.relu(self.bn1(self.conv1(x))))
        # block
        out = self.stage1(out)
        out = self.stage2(out)
        out = self.stage3(out)
        out = self.stage4(out)
        # classification
        out = self.avgpool(out)
        out = torch.flatten(out, 1)
        out = self.fc(out)
        return out


# call
resnet = Resnet(Bottleneck)
x = torch.rand(1, 3, 224, 224)
x = resnet(x)
print(x.shape)  # torch.Size([1, 1000])
```
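Where does the 7 in nn.AvgPool2d(7) come from? A pure-Python sketch of the standard Conv2d/MaxPool2d output-size formula, floor((size + 2 * padding - kernel) / stride) + 1 (dilation 1, ceil_mode off), traced through the layers of the implementation for a 224x224 input, shows that stage4 outputs a 7x7 feature map, so a 7x7 average pool reduces it to 1x1 before flatten and fc. The helper `conv_out` is hypothetical:

```python
def conv_out(size, kernel, stride, padding):
    """Output height/width of Conv2d or MaxPool2d (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 224
trace = [("input", size)]
size = conv_out(size, kernel=7, stride=2, padding=3)
trace.append(("conv1", size))
size = conv_out(size, kernel=3, stride=2, padding=1)
trace.append(("maxpooling", size))
for name, stride in [("stage1", 1), ("stage2", 2), ("stage3", 2), ("stage4", 2)]:
    # Only the first 3x3 conv of a Stage can change the size (padding=1 keeps it otherwise)
    size = conv_out(size, kernel=3, stride=stride, padding=1)
    trace.append((name, size))

for name, s in trace:
    print(f"{name:>10}: {s}x{s}")
```

With a different input size the final map is no longer 7x7. A common alternative is `nn.AdaptiveAvgPool2d((1, 1))`, which always pools to 1x1 (torchvision's ResNet uses it).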

Epilogue

Although ResNet18 is finally implemented, there are still some parts of the code I don't fully understand, because I didn't learn Python classes well before 😭. Once I fully understand them, I will write a detailed walkthrough of ResNet50 (digging a pit, i.e. promising a follow-up post).

Aaaaa, this is my first blog post!!! After a year of formal study, I have finally started producing something 👊👊👊 I hope you will support it and give it a like! 🌹🌹🌹