I started writing this post, my last blog of 2021 and my first of 2022, at 23:04 on December 31, 2021.

This year's motto: if you have a bit of warmth, give off a bit of light. Even a firefly can shine a little in the dark; you don't have to wait for a torch. And if no torch ever comes, I will be the only light.

Introduction

A few days ago I published an article on MNIST image classification, but there were still a few things I didn't explain well. MNIST images are grayscale, and many readers wanted to see how to handle RGB images. So this time, let's build a cell classifier on RGB images~

Note

This post revises, supplements and extends the previous one.

Please refer to the previous blog: [PyTorch] MNIST image classification code: a super-detailed walkthrough (CSDN, Qianyugan's blog)

If something there is unclear, don't worry: read it first, then read the supplementary material in this post.

Program

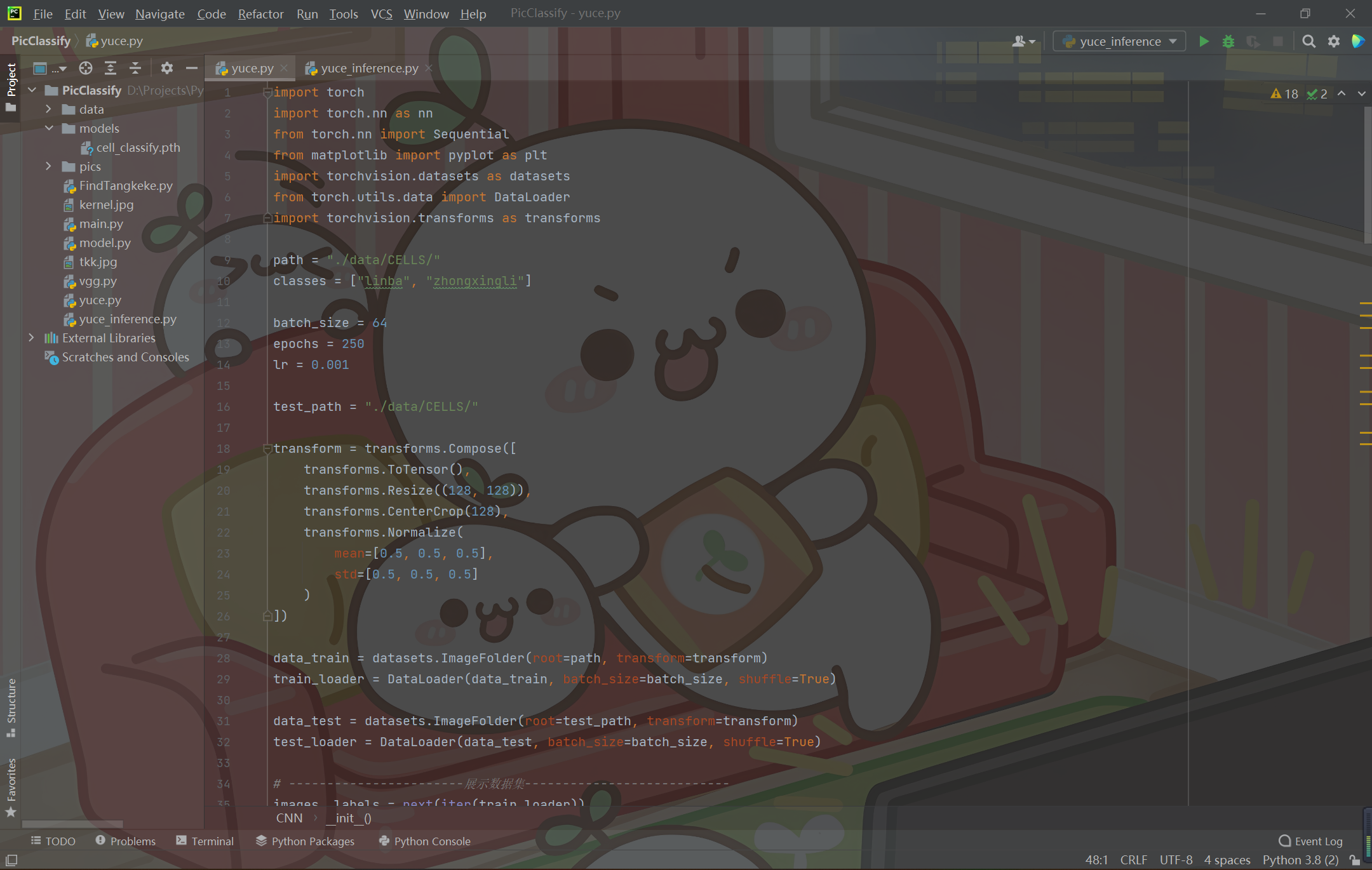

Libraries to import

```python
import torch
import torch.nn as nn
from torch.nn import Sequential
from matplotlib import pyplot as plt
import torchvision.datasets as datasets
from torch.utils.data import DataLoader
import torchvision.transforms as transforms
```

The torch library needs no explanation.

torch.nn contains the neural network building blocks: base classes to inherit from, layers, and functional utilities such as torch.nn.functional. When we define the network later, we inherit from nn.Module and use the convolution and activation layers in nn, so this library is essential.

Sequential, from torch.nn, chains several layers into a single module. It's like a biscuit box: you put the biscuits (layers) in one after another, in sequence.

matplotlib is a plotting library; we import pyplot from it to display images.

torchvision contains datasets, models and image-processing utilities. Here we use torchvision.datasets to load our own images as a dataset.

DataLoader, from torch.utils.data, loads the dataset in batches for training.

torchvision.transforms defines how each image is preprocessed; see below.
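The following minimal sketch makes the biscuit-box picture of Sequential concrete. It shows that nn.Sequential runs its layers strictly in the order they were added. The layer sizes are arbitrary and chosen only for illustration. nn.Sequential is the same class the code imports under the name Sequential.

```python
import torch
import torch.nn as nn

# A tiny "biscuit box": three layers that run strictly in the order they were added.
block = nn.Sequential(
    nn.Conv2d(in_channels=3, out_channels=8, kernel_size=3, stride=1, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(2, 2),
)

x = torch.randn(1, 3, 128, 128)  # one fake RGB image, N x C x H x W
out = block(x)                   # conv -> ReLU -> max-pool, one after another
print(out.shape)                 # torch.Size([1, 8, 64, 64])
```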

Setting up the dataset

Here, let's assume the dataset is in data/CELLS/ relative to the folder containing your Python file. Under it are two subfolders, "linba" and "zhongxingli".

By the way, it's now 2022-1-1 0:00, so this post has taken me a whole year to write (haha).

That is, our dataset contains pictures of lymphocytes and neutrophils.

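So we set two classes: classes = ["linba", "zhongxingli"]. ImageFolder numbers the subfolders in alphabetical order, so linba gets label 0 and zhongxingli gets label 1, which matches the order of this list.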
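ImageFolder does not read the classes list. It numbers the subfolders itself in sorted order, so the list has to follow that order. The following pure-Python sketch mimics that numbering with a temporary folder. folder_class_to_idx is a hypothetical helper, not a torchvision function. In real code, data_train.class_to_idx gives the actual mapping.

```python
import os
import tempfile


def folder_class_to_idx(root: str) -> dict:
    """Hypothetical helper mimicking ImageFolder's numbering:
    subfolder names, sorted alphabetically, mapped to 0, 1, 2, ..."""
    names = sorted(entry.name for entry in os.scandir(root) if entry.is_dir())
    return {name: idx for idx, name in enumerate(names)}


with tempfile.TemporaryDirectory() as root:
    # Same layout as data/CELLS/: one subfolder per class (created in reverse order on purpose).
    for name in ("zhongxingli", "linba"):
        os.makedirs(os.path.join(root, name))
    mapping = folder_class_to_idx(root)

print(mapping)  # {'linba': 0, 'zhongxingli': 1}

# The classes list must follow the same order, so classes[predicted_index] names the right class.
classes = ["linba", "zhongxingli"]
assert [name for name, _ in sorted(mapping.items(), key=lambda kv: kv[1])] == classes
```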

Let's set the batch size to 64, train for 250 epochs, and use a learning rate of 0.001.

A small learning rate helps gradient descent converge stably; see the previous blog for the reasons.

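We also write a get_variable() helper that moves a tensor to the GPU when CUDA is available. The original version also wrapped the tensor in torch.autograd.Variable so autograd could track it. Since PyTorch 0.4, however, Variable is deprecated and plain tensors support automatic differentiation directly.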
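For reference, here is a minimal sketch of the modern equivalent, assuming PyTorch 0.4 or later. You pick a torch.device once and move tensors with .to(). The helper name to_device is hypothetical and not part of the original code. The last lines show that a plain tensor with requires_grad=True is tracked by autograd without any Variable wrapper.

```python
import torch

# Pick the GPU when CUDA is available, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


def to_device(x: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: move a tensor to the chosen device (no Variable wrapper needed)."""
    return x.to(device)


batch = to_device(torch.zeros(2, 3, 128, 128))
print(batch.device.type)  # "cuda" on a GPU machine, "cpu" otherwise

# Plain tensors take part in autograd directly.
w = torch.ones(3, requires_grad=True)
(w * 2).sum().backward()
print(w.grad)  # tensor([2., 2., 2.])
```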

Let's explain epochs and batch_size in detail again:

->batch_size is the number of samples used in each training iteration;

->epochs is the number of complete passes over the training set.

Each iteration is one weight update. An update runs a forward pass on batch_size samples to compute the loss. It then runs a backward pass to compute the gradients and update the parameters. Note that the gradients must be reset to zero at every step, as described later. So one iteration trains once on batch_size samples. For example, with 256 samples, training on all of them once takes:

->batch_size=64;

->4 iterations;

->epochs=1.
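The arithmetic above generalizes to any dataset size. The following small pure-Python sketch uses a hypothetical helper. It assumes DataLoader's default drop_last=False, so a final partial batch still counts as one iteration.

```python
import math


def iterations_per_epoch(num_samples: int, batch_size: int) -> int:
    """Weight updates per epoch; the last batch may be smaller (DataLoader default drop_last=False)."""
    return math.ceil(num_samples / batch_size)


print(iterations_per_epoch(256, 64))        # 4, the example from the text
print(iterations_per_epoch(256, 64) * 250)  # 1000 updates over 250 epochs
print(iterations_per_epoch(300, 64))        # 5: four full batches plus one batch of 44
```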

Usually epochs is set to more than 1. It's like grinding flour: one pass isn't enough, and more passes give finer flour.

We are working with pictures, but PyTorch works with tensors (generalizations of vectors and matrices). So how do we turn the dataset images into tensors?

This is what the transform is for.

Also, our pictures shouldn't be too large, otherwise training becomes very slow.

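So we first convert the picture into a tensor and then resize it to $w \times h = 128 \times 128$. Here w is the width and h is the height. The symbol c, used below, is the number of color channels: 3 for RGB (red, green and blue). Because the resize already produces 128 × 128, the CenterCrop(128) that follows changes nothing.

We also normalize every channel with mean 0.5 and standard deviation 0.5, i.e. $x' = (x - 0.5)/0.5$. This maps pixel values from $[0, 1]$ to $[-1, 1]$. It only rescales the values; it does not make them normally distributed.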
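transforms.Normalize applies (x − mean) / std to each channel. The following pure-Python sketch shows what that does to a few 8-bit pixel values after ToTensor() has scaled them to [0, 1]. The helper names are hypothetical. The inverse, denormalize, is what you need before plotting a normalized image.

```python
def normalize(value: float, mean: float = 0.5, std: float = 0.5) -> float:
    """Per-channel formula used by transforms.Normalize: (x - mean) / std."""
    return (value - mean) / std


def denormalize(value: float, mean: float = 0.5, std: float = 0.5) -> float:
    """Inverse mapping, useful before displaying a normalized image."""
    return value * std + mean


# ToTensor() scales 8-bit pixels to [0, 1]; Normalize(0.5, 0.5) then maps them to [-1, 1].
for pixel in (0, 128, 255):
    x = pixel / 255
    print(pixel, round(normalize(x), 4))  # 0 -1.0, then 128 0.0039, then 255 1.0
```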

Because we need both a training set and a test set, we create two ImageFolder datasets and wrap each in a DataLoader.

Finally, we show what a dataset image looks like after it has been converted into a tensor and processed (optional).

The full code is as follows:

path = "./data/CELLS/"

classes = ["linba", "zhongxingli"]

def get_variable(x):

x = torch.autograd.Variable(x)

return x.cuda() if torch.cuda.is_available() else x

batch_size = 64

epochs = 250

lr = 0.001

test_path = "./data/CELLS/"

transform = transforms.Compose([

transforms.ToTensor(),

transforms.Resize((128, 128)),

transforms.CenterCrop(128),

transforms.Normalize(

mean=[0.5, 0.5, 0.5],

std=[0.5, 0.5, 0.5]

)

])

data_train = datasets.ImageFolder(root=path, transform=transform)

train_loader = DataLoader(data_train, batch_size=batch_size, shuffle=True)

data_test = datasets.ImageFolder(root=test_path, transform=transform)

test_loader = DataLoader(data_test, batch_size=batch_size, shuffle=True)

# -----------------------Presentation dataset---------------------------

images, labels = next(iter(train_loader))

img = images[0].numpy().transpose(1, 2, 0)

plt.imshow(img)

plt.title(labels[0])

plt.show()

# -----------------------Presentation dataset---------------------------

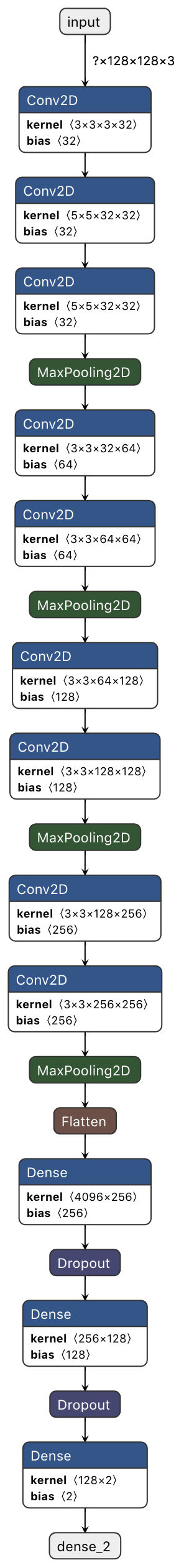

Define network

The network structure is shown in the figure below:

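Note that in the input "?x128x128x3", "?" is the number of pictures (the batch size), and "128x128x3" is $w \times h \times c = 128 \times 128 \times 3$. PyTorch itself stores such a batch in N × C × H × W order, i.e. ? × 3 × 128 × 128.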
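The following short sketch shows the layout difference, using a random batch in place of real cell images. PyTorch tensors are N × C × H × W, while matplotlib expects a single image as H × W × C. That is why the display code transposes with (1, 2, 0).

```python
import torch

# A batch of 4 random "RGB images" in PyTorch's N x C x H x W layout.
batch = torch.rand(4, 3, 128, 128)
print(batch.shape)  # torch.Size([4, 3, 128, 128])

# matplotlib wants one image as H x W x C: move the channel axis to the end.
img_hwc = batch[0].permute(1, 2, 0)
print(img_hwc.shape)  # torch.Size([128, 128, 3])

# Number of values in one input image: w * h * c.
print(batch[0].numel())  # 49152 = 128 * 128 * 3
```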

How do we define the network? First we inherit from nn.Module. Then we "fill in" our architecture in __init__. Finally, we perform the forward computation in the forward function.

Note that you don't call forward explicitly. Calling the model object, e.g. cnn(x), goes through nn.Module's __call__ method, which calls forward for you.
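The following minimal sketch shows that pattern. It uses a hypothetical two-class TinyNet on tiny 8 × 8 inputs rather than the cell network itself. Layers are registered in __init__, forward describes the computation, and calling the model object runs forward.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical two-class model, used only to illustrate the nn.Module pattern."""

    def __init__(self):
        super().__init__()
        # "Fill in" the architecture: layers assigned as attributes are registered with the module.
        self.features = nn.Sequential(nn.Conv2d(3, 4, kernel_size=3, padding=1), nn.ReLU())
        self.classifier = nn.Linear(4 * 8 * 8, 2)

    def forward(self, x):
        x = self.features(x)        # N x 4 x 8 x 8
        x = x.flatten(start_dim=1)  # N x 256
        return self.classifier(x)   # N x 2 class scores


net = TinyNet()
x = torch.randn(5, 3, 8, 8)
out = net(x)      # nn.Module.__call__ runs forward(x) for us
print(out.shape)  # torch.Size([5, 2])
```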

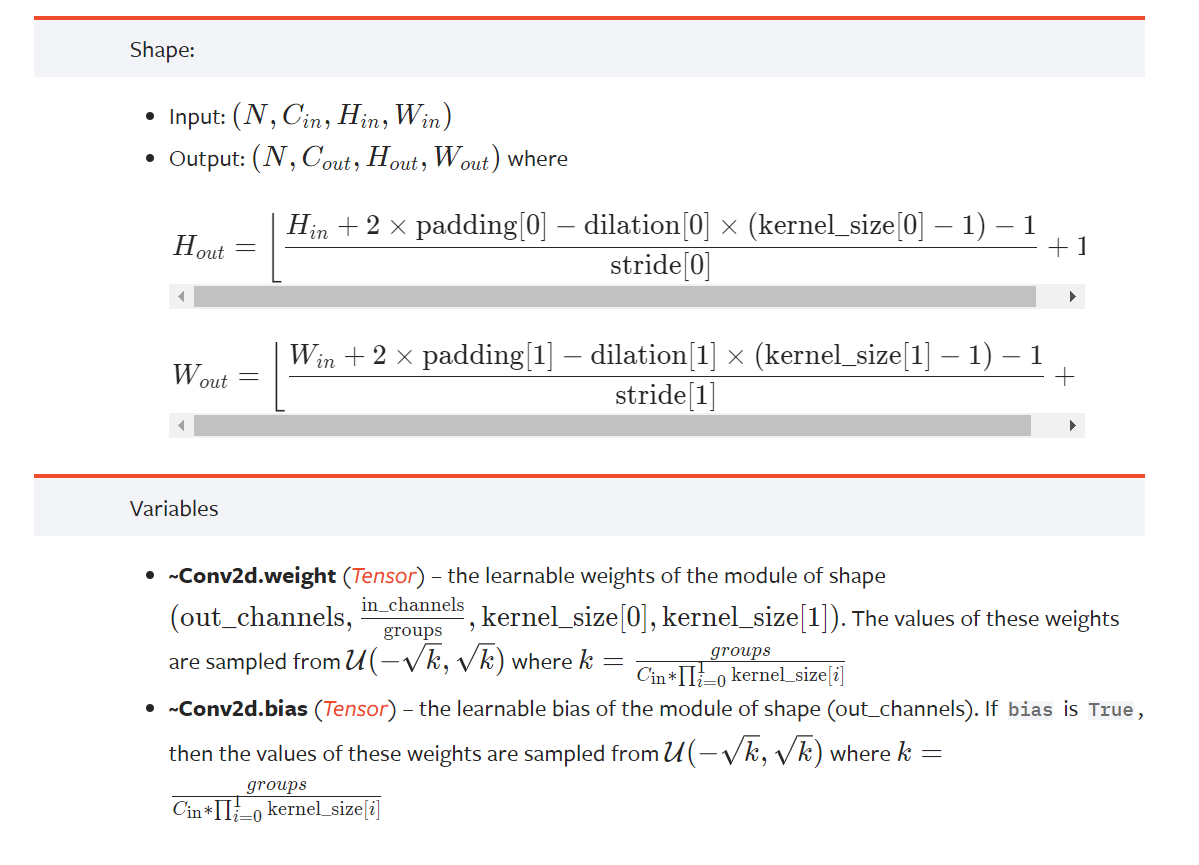

The last blog did not explain how the output size of each layer is calculated. This matters a lot, because the flattened output of the last convolutional block must match the input size of the first fully connected layer. I didn't cover it in detail last time.

Here is the figure for nn.Conv2d() from the official PyTorch documentation:


Note that $H_{out}$ here is rounded down.
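The PyTorch documentation gives the output height of nn.Conv2d as $H_{out} = \left\lfloor \frac{H_{in} + 2 \times \text{padding} - \text{dilation} \times (\text{kernel\_size} - 1) - 1}{\text{stride}} + 1 \right\rfloor$. The width follows the same formula, and MaxPool2d (with its default ceil_mode=False) uses the same form. The following pure-Python sketch (conv2d_out is a hypothetical helper) applies the formula to every layer of the network defined below. It checks that a 128 × 128 input really ends up as 8 × 8 × 256 before the first nn.Linear.

```python
def conv2d_out(size: int, kernel_size: int, stride: int = 1, padding: int = 0, dilation: int = 1) -> int:
    """Output height/width of nn.Conv2d (or MaxPool2d with ceil_mode=False), rounded down."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# (kernel_size, padding) of every Conv2d in conv1..conv4, all with stride=1.
blocks = [
    [(3, 1), (5, 2), (5, 2)],  # conv1
    [(3, 1), (3, 1)],          # conv2
    [(3, 1), (3, 1)],          # conv3
    [(3, 1), (3, 1)],          # conv4
]

size = 128
for block in blocks:
    for kernel_size, padding in block:
        size = conv2d_out(size, kernel_size, stride=1, padding=padding)  # "same" padding keeps the size
    size = conv2d_out(size, kernel_size=2, stride=2)                    # MaxPool2d(2, 2) halves it
    print(size)  # 64, 32, 16, 8

print(size * size * 256)  # 16384 = 8 * 8 * 256, the in_features of the first nn.Linear
```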

The class is defined in the same way as in the previous article.

Code:

```python
class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        # Input: N x 3 x 128 x 128. Every Conv2d keeps H and W (padding = kernel_size // 2),
        # and every MaxPool2d(2, 2) halves them: 128 -> 64 -> 32 -> 16 -> 8.
        self.conv1 = Sequential(
            nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.MaxPool2d(2, 2)  # -> N x 32 x 64 x 64
        )
        self.conv2 = Sequential(
            nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.MaxPool2d(2, 2)  # -> N x 64 x 32 x 32
        )
        self.conv3 = Sequential(
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(),
            nn.Conv2d(in_channels=128, out_channels=128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(),
            nn.MaxPool2d(2, 2)  # -> N x 128 x 16 x 16
        )
        self.conv4 = Sequential(
            nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.MaxPool2d(2, 2)  # -> N x 256 x 8 x 8
        )
        self.dense = Sequential(
            nn.Linear(8 * 8 * 256, 256),  # must equal the flattened conv4 output size
            nn.ReLU(),
            nn.Dropout(p=0.5),
            nn.Linear(256, 128),
            nn.ReLU(),
            nn.Dropout(p=0.5),
            nn.Linear(128, 2)  # one score (logit) per class
        )

    def forward(self, x):
        x1 = self.conv1(x)
        x2 = self.conv2(x1)
        x3 = self.conv3(x2)
        x4 = self.conv4(x3)
        x5 = x4.view(-1, 8 * 8 * 256)  # flatten N x 256 x 8 x 8 -> N x 16384
        out = self.dense(x5)
        return out
```

Training

I covered this part in detail in my last article, so I'll only post the code here:

```python
import os

cnn = CNN()
if torch.cuda.is_available():
    cnn = cnn.cuda()

lossF = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(cnn.parameters(), lr=lr)

os.makedirs("./models", exist_ok=True)  # torch.save does not create missing folders
loss_pth = float("inf")  # lowest epoch loss seen so far
i_pth = 0

for epoch in range(epochs):
    cnn.train()  # Dropout active, BatchNorm uses batch statistics
    running_loss = 0.0
    running_correct = 0
    print("Epochs [{}/{}]".format(epoch + 1, epochs))

    for data in train_loader:
        X_train, y_train = data
        X_train, y_train = get_variable(X_train), get_variable(y_train)
        outputs = cnn(X_train)
        _, predict = torch.max(outputs.data, 1)
        # ----------------------------------
        optimizer.zero_grad()  # clear the gradients from the previous step
        loss = lossF(outputs, y_train)
        loss.backward()        # backward pass: compute gradients
        optimizer.step()       # update the weights
        # ----------------------------------
        running_loss += loss.item()
        running_correct += torch.sum(predict == y_train).item()

    cnn.eval()  # disable Dropout and use BatchNorm running statistics for evaluation
    testing_correct = 0
    with torch.no_grad():  # no gradients are needed for evaluation
        for data in test_loader:
            X_test, y_test = data
            X_test, y_test = get_variable(X_test), get_variable(y_test)
            outputs = cnn(X_test)
            _, predict = torch.max(outputs, 1)
            testing_correct += torch.sum(predict == y_test).item()

    print("Loss: {} Training Accuracy: {}% Testing Accuracy:{}%".format(
        running_loss,
        100 * running_correct / len(data_train),
        100 * testing_correct / len(data_test)
    ))

    # Save a checkpoint whenever the epoch loss reaches a new minimum.
    if running_loss < loss_pth:
        loss_pth = running_loss
        torch.save(cnn, "./models/cell_classify_%d.pth" % i_pth)
        i_pth = i_pth + 1

# Save the final model where the prediction script looks for it.
torch.save(cnn, "./models/cell_classify.pth")
print("Training complete! The minimum loss is:%f" % loss_pth)
```

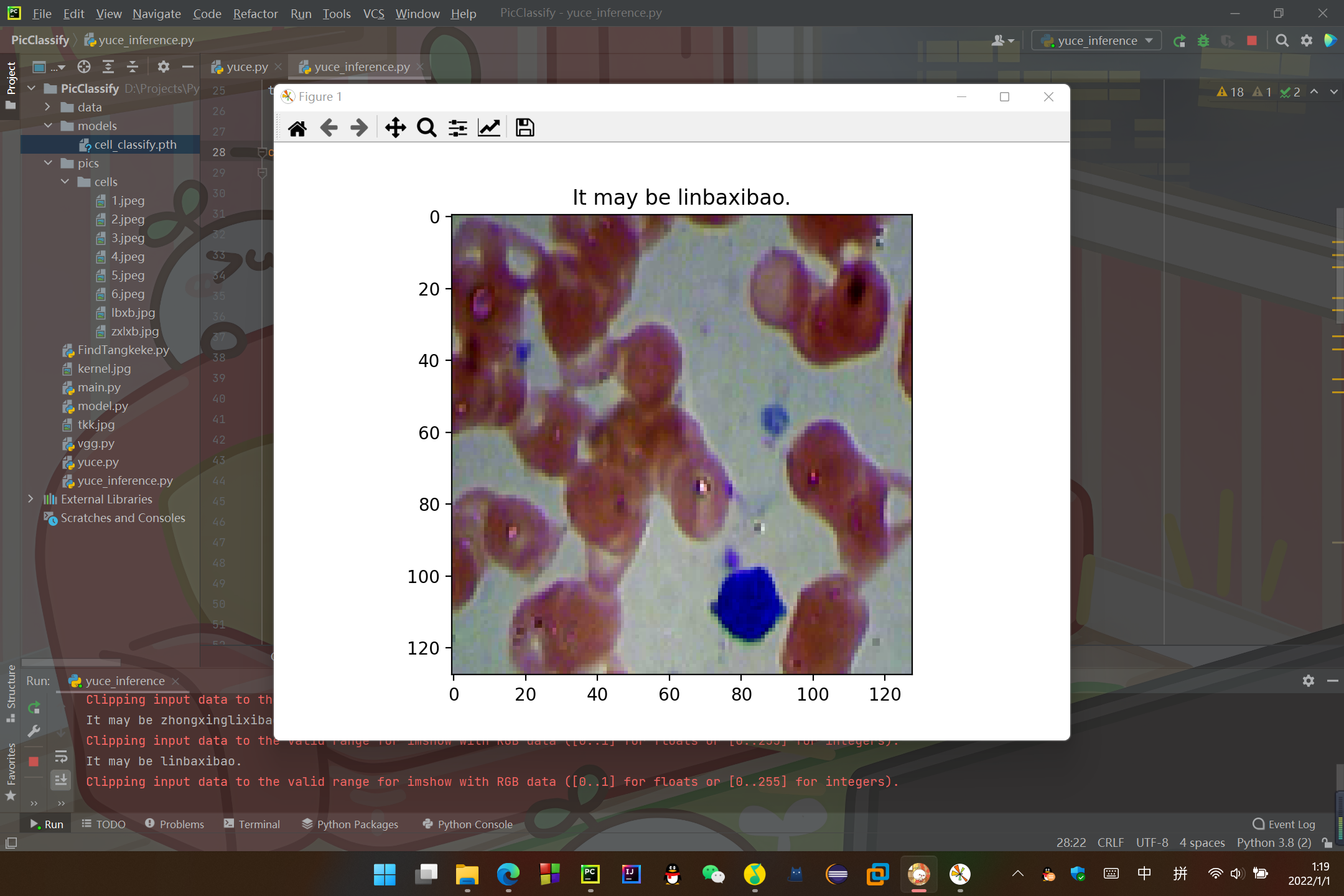

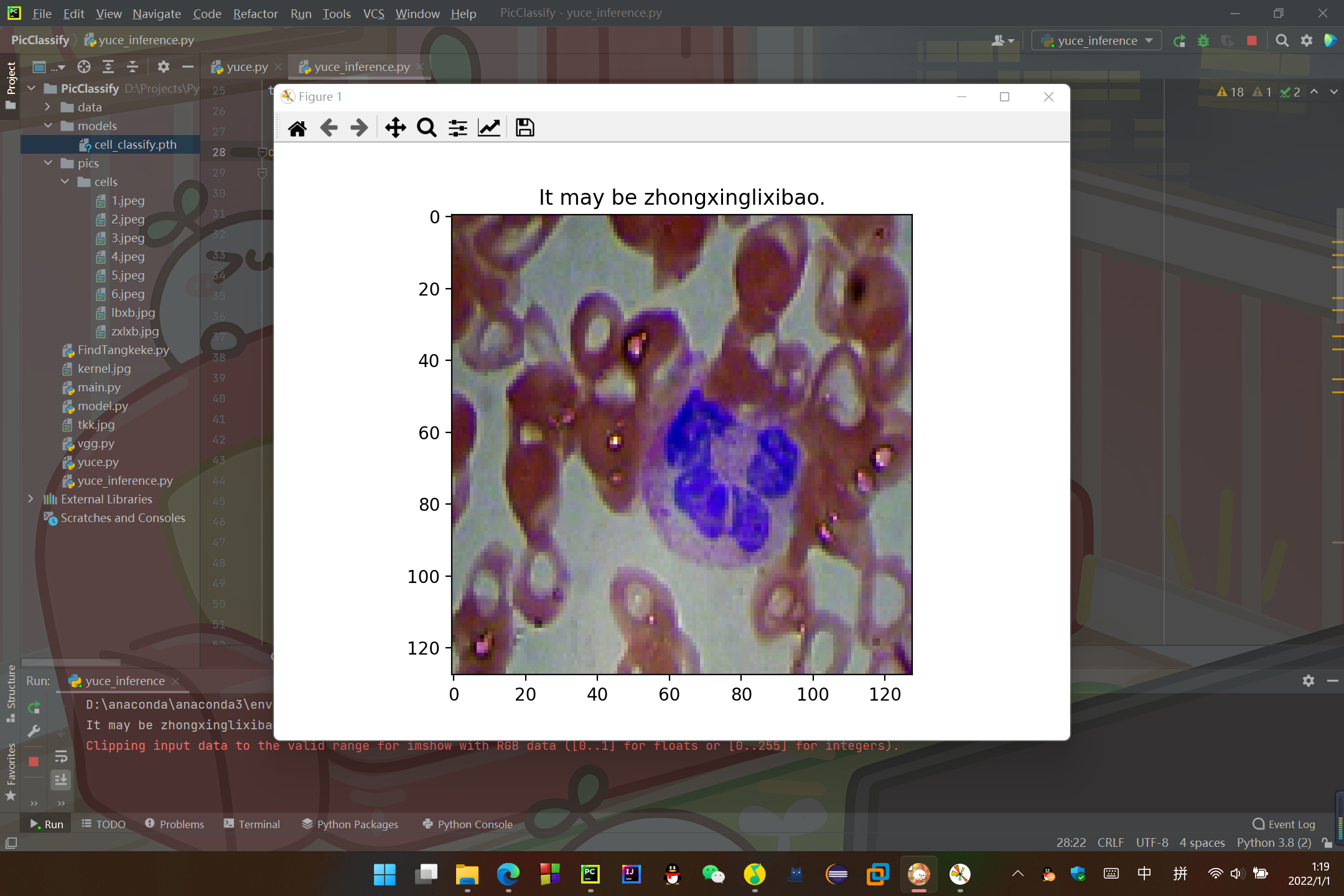

Prediction

This part was also covered in detail in my last blog, so again I'll only post the code.

Inside the pics folder there is one more subfolder that holds the pictures to predict. ImageFolder expects images to sit in subfolders, even when there are no real labels:

```python
import torch
import torch.nn as nn
from torch.nn import Sequential
from matplotlib import pyplot as plt
import torchvision.datasets as datasets
from torch.utils.data import DataLoader
import torchvision.transforms as transforms

model_path = "./models/cell_classify.pth"
test_path = "./pics/"  # the images must sit in a subfolder of ./pics/
classes = ["linbaxibao", "zhongxinglixibao"]  # same order as the training classes

transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Resize((128, 128)),
    transforms.CenterCrop(128),
    transforms.Normalize(
        mean=[0.5, 0.5, 0.5],
        std=[0.5, 0.5, 0.5]
    )
])

data_test = datasets.ImageFolder(root=test_path, transform=transform)
test_loader = DataLoader(data_test, batch_size=64, shuffle=False)


# The class must be defined again (same name) so torch.load can unpickle the whole model.
class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        self.conv1 = Sequential(
            nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.MaxPool2d(2, 2)
        )
        self.conv2 = Sequential(
            nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.MaxPool2d(2, 2)
        )
        self.conv3 = Sequential(
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(),
            nn.Conv2d(in_channels=128, out_channels=128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(),
            nn.MaxPool2d(2, 2)
        )
        self.conv4 = Sequential(
            nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.MaxPool2d(2, 2)
        )
        self.dense = Sequential(
            nn.Linear(8 * 8 * 256, 256),
            nn.ReLU(),
            nn.Dropout(p=0.5),
            nn.Linear(256, 128),
            nn.ReLU(),
            nn.Dropout(p=0.5),
            nn.Linear(128, 2)
        )

    def forward(self, x):
        x1 = self.conv1(x)
        x2 = self.conv2(x1)
        x3 = self.conv3(x2)
        x4 = self.conv4(x3)
        x5 = x4.view(-1, 8 * 8 * 256)
        out = self.dense(x5)
        return out


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
# map_location lets a GPU-trained model load on a CPU-only machine. weights_only=False is required
# to load a whole pickled model on PyTorch >= 2.6; the argument exists since 1.13 (drop it on older
# versions). Only load files you trust this way.
net = torch.load(model_path, map_location=device, weights_only=False)
net.eval()


def get_variable(x):
    return x.cuda() if torch.cuda.is_available() else x


def inference_model(test_img):
    # test_img is unused: the images come from test_loader, which is built from test_path.
    with torch.no_grad():
        for data in test_loader:
            img, _ = data  # ImageFolder labels carry no meaning here
            outputs = net(get_variable(img))
            probs = torch.softmax(outputs, dim=1)  # logits -> probabilities
            rate, predict = torch.max(probs, 1)
            for i in range(len(predict)):  # only the images in this batch
                label = classes[predict[i].item()]
                print("It may be %s." % label)
                img0 = img[i].numpy().transpose(1, 2, 0) * 0.5 + 0.5  # H x W x C, undo Normalize
                plt.imshow(img0)
                plt.title("It may be %s." % label)
                # plt.title("It may be %s, probability is %f." % (label, rate[i].item()))
                plt.show()


inference_model(test_path)
```

Results (the prediction results are at the top of the picture and in the console):

You can see that the predictions are quite accurate~
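If you want to print a probability next to each prediction, note what the network returns. Its raw outputs are logits, so torch.max on them gives the largest score, not a probability. The following minimal sketch applies softmax first. The logits below are made up for illustration.

```python
import torch

# Made-up raw network outputs (logits) for 3 images and 2 classes.
logits = torch.tensor([[2.0, 0.0], [0.5, 0.0], [-1.0, 3.0]])

probs = torch.softmax(logits, dim=1)     # each row now sums to 1
rate, predict = torch.max(probs, dim=1)  # highest probability and its class index

classes = ["linbaxibao", "zhongxinglixibao"]
for p, idx in zip(rate, predict):
    print("It may be %s, probability is %f." % (classes[idx.item()], p.item()))
```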

Some small talk

It's now 1:24 on January 1, 2022. Another year older (alas, I'm getting old).

Looking back on 2021: on April 19, 2021, on a sudden impulse, I wrote down this sentence:

Unfortunately, this wish has only been partly realized (who says artificial intelligence has to be a chatbot? (~ o ~ 3 ~ ~)). My original goal was to build an AI like Xiao Ai, Xiaomi's voice assistant.

At the moment I'm working on image super-resolution reconstruction.

At the beginning of 2021 I signed up for a deep learning class on CSDN. At first I only planned to listen casually, but the more I listened, the more interested I became, and I ended up teaching myself. Without guidance I took many detours, but I stuck with it.

As a child (before sixth grade of primary school), I hated computers more than anything. But in a sixth-grade computer class I came across a painting program. I thought it was great fun and went to find out how to download software like it. That is how I came across Photoshop. Anyone who knows what it costs can guess that I taught myself how to crack it. In my first year of junior high, I wondered why I couldn't write such a program myself, and that's how I got into programming. (Later I nearly caused an incident by getting into the back end of a website. Fortunately, I stopped at the edge of the cliff.)

I like programming very much. Before the college entrance examination, my parents often scolded me for programming: "Don't waste time on useless things." I couldn't understand why people disliked programming (more on this in the next paragraph).

I didn't use to like mathematics. It was always one of my most hated subjects, because I felt my math knowledge had nowhere to be used, and it was boring. I couldn't understand why some people liked math so much; I used to think they were fooling themselves. (For the college entrance examination, though, I had to convince myself that I loved math.) But I also couldn't understand why people hated programming: why would anyone hate something so good? Gradually I realized that my liking for programming and other people's liking for math are the same kind of thing! So people who say they like math are telling the truth; they really do. Even so, I still didn't like math before the college entrance examination.

I studied computer science and technology at an ordinary undergraduate university. In my freshman year I did ACM competitive programming for half a year, and during that year's winter vacation I was introduced to machine learning. Interestingly, once I got into machine learning, I found that mathematics was actually very practical. Combined with programming, it seems to produce a kind of magic.

Ah, it's already 1:47. More another time; I'm off to sleep.

Finally, I wish you all:

Happy New Year 2022!

Here's a picture I like (I'll take it down if it infringes anyone's rights):