Figure 2.1 Distribution of news categories

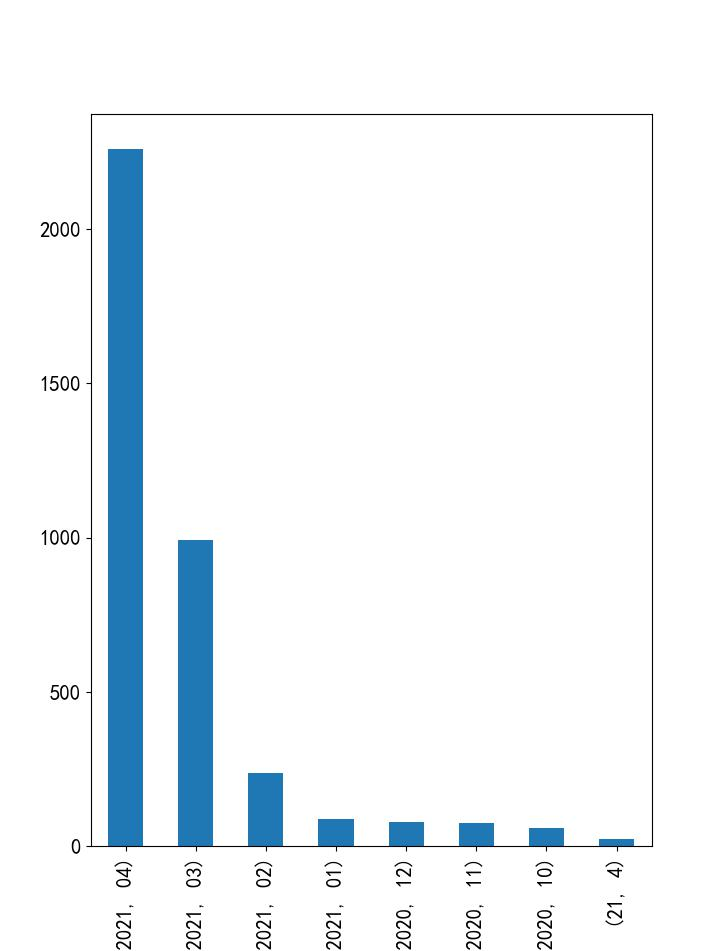

Figure 2.2 Distribution of news release times

| Kappa coefficient | 0-0.2 | 0.2-0.4 | 0.4-0.6 | 0.6-0.8 | 0.8-1 |
|---|---|---|---|---|---|
| Level of agreement | Very low | Fair | Moderate | High | Almost perfect |

Table 2.5 Advantages and disadvantages of evaluation indicators

Classification accuracy: 0.6059661620658949

| Class | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.57 | 0.95 | 0.71 | 298 |
| 1 | 0.47 | 0.76 | 0.58 | 241 |
| 2 | 0.66 | 0.91 | 0.77 | 236 |
| 3 | 0.59 | 0.61 | 0.60 | 259 |
| 4 | 0.81 | 0.86 | 0.83 | 264 |
| 5 | 0.50 | 0.62 | 0.55 | 259 |
| 6 | 0.83 | 0.42 | 0.56 | 146 |
| 7 | 0.69 | 0.15 | 0.25 | 133 |
| 8 | 1.00 | 0.02 | 0.04 | 92 |
| 9 | 0.75 | 0.04 | 0.08 | 68 |
| 10 | 0.00 | 0.00 | 0.00 | 66 |
| 11 | 0.00 | 0.00 | 0.00 | 48 |
| 12 | 1.00 | 1.00 | 1.00 | 47 |
| 13 | 0.00 | 0.00 | 0.00 | 50 |
| 14 | 0.00 | 0.00 | 0.00 | 39 |
| accuracy | | | 0.61 | 2246 |
| macro avg | 0.52 | 0.42 | 0.40 | 2246 |
| weighted avg | 0.59 | 0.61 | 0.54 | 2246 |

Kappa: 0.5562815523035762
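The F1 macro average (0.40) is well below the weighted average (0.54) because the classes are imbalanced. The macro average gives each of the 15 classes equal weight, so the four classes with an F1 of 0.00 pull it down. The weighted average weights each class by its support. The short check below recomputes both averages from the rounded per-class values in the report, so it reproduces the report only to two decimals.

```python
# Per-class (f1-score, support) pairs copied from the classification report above
rows = [
    (0.71, 298), (0.58, 241), (0.77, 236), (0.60, 259), (0.83, 264),
    (0.55, 259), (0.56, 146), (0.25, 133), (0.04, 92), (0.08, 68),
    (0.00, 66), (0.00, 48), (1.00, 47), (0.00, 50), (0.00, 39),
]

total_support = sum(support for _, support in rows)

# Macro average: plain mean over the classes
macro_f1 = sum(f1 for f1, _ in rows) / len(rows)

# Weighted average: each class weighted by its number of test samples
weighted_f1 = sum(f1 * support for f1, support in rows) / total_support

print(total_support, round(macro_f1, 2), round(weighted_f1, 2))  # 2246 0.4 0.54
```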

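```python
import warnings

import matplotlib.pyplot as plt
import pandas as pd

# Use a font that can render Chinese characters in plot labels (SimHei must be installed)
plt.rcParams["font.family"] = "SimHei"
# Render the minus sign correctly while a CJK font is active
plt.rcParams["axes.unicode_minus"] = False
plt.rcParams["font.size"] = 15

# Suppress library warnings (this also hides sklearn's UndefinedMetricWarning)
warnings.filterwarnings("ignore")
```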

```python
# Read data (the CSV file is GBK-encoded)
news = pd.read_csv("news (1).csv", encoding="gbk")
print(news.head())
```

```python
# Missing value processing
news.info()

# Fill a missing news title with the news brief
# (.loc avoids the chained assignment news["title"][index] = ..., which may not write back)
index = news[news["title"].isnull()].index
news.loc[index, "title"] = news.loc[index, "brief"]

# Fill a missing news brief with the news title
index = news[news["brief"].isnull()].index
news.loc[index, "brief"] = news.loc[index, "title"]

print(news.isnull().sum())
```
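The two index-based fills can also be written with `Series.fillna`, which accepts another Series and fills values by matching index. The sketch below uses a hypothetical three-row frame whose values are made up for illustration. A row missing both fields stays missing, because the title is filled first and the brief then copies the still-missing title.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data, not the news data set
df = pd.DataFrame({
    "title": ["Title A", np.nan, np.nan],
    "brief": [np.nan, "Brief B", np.nan],
})

# Same order as the index-based code: titles first, then briefs
df["title"] = df["title"].fillna(df["brief"])
df["brief"] = df["brief"].fillna(df["title"])

print(df)
print(df.isnull().sum())
```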

```python
# Duplicate value processing: show fully duplicated rows, then drop them
print(news[news.duplicated()])
news.drop_duplicates(inplace=True)
```

```python
# Data exploration (descriptive analysis): news category distribution
t = news["News category"].value_counts()
print(t)
t.plot(kind="bar")
plt.show()
```
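The bar chart shows raw counts only. Looking at each category's share of the data can also help, and `value_counts(normalize=True)` returns proportions instead of counts. A toy Series with hypothetical labels:

```python
import pandas as pd

# Hypothetical category labels standing in for news["News category"]
labels = pd.Series(["domestic", "domestic", "domestic", "military"])

counts = labels.value_counts()                # absolute counts, largest first
shares = labels.value_counts(normalize=True)  # fractions that sum to 1

print(counts)
print(shares)
```

The supports in the classification report range from 298 down to 39 test samples per class, so the shares in this data set are far from uniform.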

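```python
# Time of occurrence: split "Release time" on "-"
# (assuming a YYYY-MM-DD format, column 0 is the year and column 1 the month)
t = news["Release time"].str.split("-", expand=True)
# Count news per (year, month) pair
t2 = t[[0, 1]].value_counts()
t2.plot(kind="bar")
plt.show()
```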
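`value_counts` orders the (year, month) bars by frequency rather than by date. If you prefer a chronological axis, one alternative (not the method used above) is to parse the column with `pd.to_datetime` and count per calendar month. This sketch assumes that the "Release time" strings start with a YYYY-MM-DD date, and its sample values are hypothetical.

```python
import pandas as pd

# Hypothetical release times; the real column's exact format is assumed, not known
release = pd.Series(["2021-03-05", "2021-01-20", "2021-03-18", "2021-02-11"])

# Parse to datetimes and reduce each one to its calendar month
months = pd.to_datetime(release).dt.to_period("M")

# Count per month, then sort by date instead of by frequency
monthly = months.value_counts().sort_index()
print(monthly)
# monthly.plot(kind="bar") would draw the bars in chronological order
```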

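```python
# Text content cleaning
import re

# Compile the pattern once and reuse it. It matches runs of ASCII punctuation,
# whitespace and common Chinese punctuation (\u2026 is "…", \u2014 is the long dash)
re_obj = re.compile(
    r"[~`!@#$%^&*()_+\-=|\\'\";:/.,?<>{}\[\]\s"
    r"·！￥\u2026（）\u2014“”‘’：；、。，？《》【】]+"
)


def clear(text):
    """Delete every run of punctuation and whitespace from the text."""
    return re_obj.sub("", text)


news["brief"] = news["brief"].apply(clear)
print(news.sample(10))
```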

```python
# Word segmentation
import jieba


def cut_word(text):
    """Segment Chinese text into a list of words.

    jieba.lcut() returns a list directly; jieba.cut() returns a generator,
    which uses very little memory and can be converted with list().
    """
    return jieba.lcut(text)


news["brief"] = news["brief"].apply(cut_word)
print(news.sample(5))
print(news["brief"].head())
```

```python
# Stop word processing: stop words occur very often but carry little information
# for classification; removing them also reduces storage and computing time
def get_stopword():
    s = set()  # a set gives average O(1) membership tests in remove_stopword
    with open("Chinese stop list.txt", encoding="gbk") as f:
        for line in f:
            s.add(line.strip())
    return s


def remove_stopword(words):
    return [word for word in words if word not in stopword]


stopword = get_stopword()
news["brief"] = news["brief"].apply(remove_stopword)
print(news.sample(5))
print(news["brief"].head())
```
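`remove_stopword` reads the global `stopword` set, so it works only after `get_stopword()` has run. The sketch below passes the set explicitly instead and uses `functools.partial` to bind it for `apply`. The in-memory file and the words are hypothetical stand-ins for "Chinese stop list.txt" and the segmented briefs, and the function names are illustrative.

```python
import io
from functools import partial


def load_stopwords(lines):
    """Build a stop word set from an iterable of lines, skipping blank lines."""
    return {line.strip() for line in lines if line.strip()}


def remove_stopwords(words, stopwords):
    """Keep only the tokens that are not stop words (set lookup is O(1) on average)."""
    return [word for word in words if word not in stopwords]


# Hypothetical stop list standing in for the real file
stop_file = io.StringIO("的\n了\n\n是\n")
stopwords = load_stopwords(stop_file)

# partial() fixes the stopwords argument so the function takes one token list
filter_tokens = partial(remove_stopwords, stopwords=stopwords)
print(filter_tokens(["今天", "的", "新闻", "是", "好"]))  # ['今天', '新闻', '好']
```

With the real data this would be used as `news["brief"].apply(filter_tokens)`.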

```python
# Label conversion: map each category name to an integer class id
label_mapping = {
    "Painting and Calligraphy": 0, "character": 1, "international": 2,
    "domestic": 3, "healthy": 4, "Sociology": 5, "rule by law": 6,
    "life": 7, "science and technology": 8, "education": 9,
    "Entertainment": 10, "rural economy": 11,
    "Agriculture, rural areas and farmers": 12, "military": 13,
    "Economics": 14,
}
news["News category"] = news["News category"].map(label_mapping)
print(news.head())
```
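`Series.map` with a dict returns NaN for any value that is not a key. A category name in the CSV that does not exactly match a dictionary key therefore silently becomes a missing label, which the later steps do not expect. The column also turns from integers into float64. A quick check on hypothetical values:

```python
import pandas as pd

mapping = {"military": 13, "Economics": 14}                  # excerpt of the label mapping
categories = pd.Series(["military", "Economics", "sports"])  # "sports" is hypothetical

labels = categories.map(mapping)
unmapped = categories[labels.isna()].unique()

print(labels.tolist())
print("Unmapped categories:", list(unmapped))
```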

```python
print("--------------------------------------3------------------------------------------")

# Split the data set: half for training, half for testing
from sklearn.model_selection import train_test_split

x = news["brief"]
y = news["News category"]
x_train, x_test, y_train, y_test = train_test_split(x.values, y.values, test_size=0.5)


def format_transform(X):
    """Join each token list in X (training or test set) into one space-separated string."""
    words = []
    for tokens in X:
        try:
            words.append(" ".join(tokens))
        except TypeError:
            print("There is a problem with the data format")
            words.append("")  # keep one entry per sample so texts stay aligned with labels
    return words
```
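`TfidfVectorizer` tokenizes the space-joined strings from `format_transform` again. With `analyzer='word'` and no `token_pattern` argument, scikit-learn uses the default pattern `(?u)\b\w\w+\b`. That pattern keeps only tokens of two or more word characters, so it drops single-character Chinese words produced by jieba. The plain `re` check below applies the default pattern and a variant that keeps one-character tokens to a hypothetical segmented sentence:

```python
import re

# scikit-learn's default token_pattern for CountVectorizer/TfidfVectorizer
DEFAULT_PATTERN = r"(?u)\b\w\w+\b"
# A variant that also keeps one-character tokens
SINGLE_CHAR_PATTERN = r"(?u)\b\w+\b"

doc = "我 爱 北京 天安门"  # hypothetical output of " ".join(jieba tokens)

print(re.findall(DEFAULT_PATTERN, doc))      # ['北京', '天安门']
print(re.findall(SINGLE_CHAR_PATTERN, doc))  # ['我', '爱', '北京', '天安门']
```

Passing `token_pattern=r"(?u)\b\w+\b"` to `TfidfVectorizer` would keep such words. Whether that changes accuracy on this data set has not been tested.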

```python
# Train
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB

words_train = format_transform(x_train)
# Keep the 4000 most frequent 1- to 3-gram features; do not lowercase
vectorizer = TfidfVectorizer(analyzer="word", max_features=4000, ngram_range=(1, 3), lowercase=False)
# fit() learns the vocabulary and IDF weights from the training texts only
vectorizer.fit(words_train)

classifier = MultinomialNB()
classifier.fit(vectorizer.transform(words_train), y_train)
```
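The weights `TfidfVectorizer` computes with its default settings (`smooth_idf=True`, `sublinear_tf=False`, `norm='l2'`) can be reproduced by hand. A term's raw count in a document is multiplied by idf(t) = ln((1 + n) / (1 + df(t))) + 1, where n is the number of documents and df(t) is the number of documents containing t. Each document vector is then scaled to unit Euclidean length. The plain-Python sketch below uses two hypothetical, already tokenized documents and considers unigrams only, ignoring `max_features` and the n-gram range.

```python
import math
from collections import Counter


def tfidf(docs):
    """Return one {term: weight} dict per document, using sklearn's default formulas."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    idf = {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}

    vectors = []
    for doc in docs:
        weights = {term: tf * idf[term] for term, tf in Counter(doc).items()}
        norm = math.sqrt(sum(w * w for w in weights.values()))  # L2 normalisation
        vectors.append({term: w / norm for term, w in weights.items()})
    return vectors


vectors = tfidf([["经济", "增长"], ["经济", "军事"]])
print(vectors[0])  # "经济" occurs in both documents, so it gets the lower weight
```

`MultinomialNB` is formulated for counts, but scikit-learn's documentation notes that fractional counts such as tf-idf may also work in practice.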

```python
# Test and inspect the results
from sklearn.metrics import classification_report

words_test = format_transform(x_test)
# Reuse the vocabulary and IDF weights learned on the training set
X_test = vectorizer.transform(words_test)

score = classifier.score(X_test, y_test)
print("----------------------------------Classification result report-----------------------------------------")
print("Classification accuracy:" + str(score))

y_predict = classifier.predict(X_test)
print(classification_report(y_test, y_predict))
```
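Classes 10, 11, 13 and 14 score 0.00 on all three metrics. A precision of 0.00 means either that every prediction of the class was wrong or that the class was never predicted. In the second case precision is 0/0, and `classification_report` reports 0.0: its default `zero_division="warn"` acts as 0 and raises a warning, which `warnings.filterwarnings("ignore")` hides. The from-scratch sketch below shows the per-class computation on hypothetical labels.

```python
def per_class_metrics(y_true, y_pred, label):
    """Precision, recall and F1 for one class, returning 0.0 on a zero denominator."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    predicted = sum(p == label for p in y_pred)  # tp + fp
    actual = sum(t == label for t in y_true)     # tp + fn (the "support")

    precision = tp / predicted if predicted else 0.0
    recall = tp / actual if actual else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [0, 0, 0, 1, 1, 2]  # hypothetical test labels
y_pred = [0, 0, 1, 1, 0, 0]  # class 2 is never predicted

for label in (0, 1, 2):
    print(label, per_class_metrics(y_true, y_pred, label))
```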

```python
# Evaluation indicator: Cohen's kappa
from sklearn.metrics import cohen_kappa_score

kappa = cohen_kappa_score(y_test, y_predict)
print("Kappa:" + str(kappa))
```
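Cohen's kappa corrects the observed agreement p_o (here, the accuracy) for the agreement p_e expected by chance from the label frequencies: kappa = (p_o - p_e) / (1 - p_e). Below is a plain-Python sketch of that formula. The `agreement_level` helper (a hypothetical name) maps a value onto the agreement bands of the kappa interpretation table. Because the table's ranges share endpoints, the helper assumes each upper bound is inclusive. It also assigns negative values to the lowest band, although the table starts at 0.

```python
from collections import Counter


def cohen_kappa(y_true, y_pred):
    """Cohen's kappa from two equal-length label sequences (undefined when p_e == 1)."""
    n = len(y_true)
    p_o = sum(t == p for t, p in zip(y_true, y_pred)) / n  # observed agreement
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    # Chance agreement: sum over classes of P(true = c) * P(pred = c)
    p_e = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    return (p_o - p_e) / (1 - p_e)


def agreement_level(kappa):
    """Map kappa onto the interpretation table's bands (upper bounds assumed inclusive)."""
    bands = [(0.2, "Very low"), (0.4, "Fair"), (0.6, "Moderate"), (0.8, "High")]
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "Almost perfect"


print(cohen_kappa([0, 0, 1, 1], [0, 1, 1, 1]))  # 0.5
print(agreement_level(0.5562815523035762))      # Moderate
```

By this reading, the reported kappa of about 0.556 falls in the 0.4-0.6 ("Moderate") band.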