Basic concepts of Nginx

Definition

Nginx ("engine x") is a high-performance HTTP and reverse proxy web server that also provides IMAP/POP3/SMTP proxy services. It was developed by Igor Sysoev for Rambler.ru, then the second most visited site in Russia. Nginx is known for its stability, rich feature set, simple configuration files, and low consumption of system resources.

Nginx is a lightweight web server, reverse proxy server, and e-mail (IMAP/POP3) proxy server distributed under a BSD-like license. It is characterized by a small memory footprint and strong concurrency; in fact, Nginx handles concurrency better than most web servers of its type.

Nginx was designed with performance as its most important consideration. Its implementation places great emphasis on efficiency, it holds up under high load, and it is reported to support up to 50,000 concurrent connections.

Reverse proxy

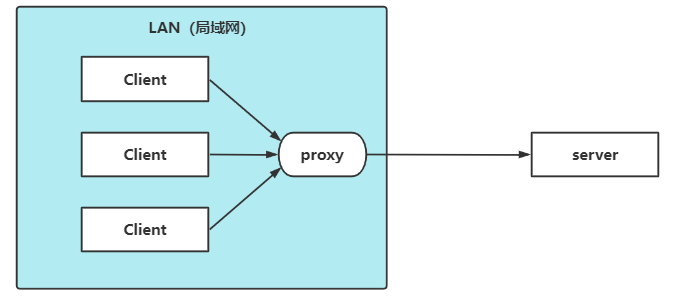

Forward proxy

Definition

In the normal access flow, the client sends its request directly to the target server and gets the content. With a forward proxy, the client instead sends the request to the proxy server and specifies the target (origin) server. The proxy server then communicates with the origin server, forwards the request, obtains the content, and returns it to the client. A forward proxy hides the real client: it sends and receives requests on the client's behalf, so the real client is invisible to the server.

For example, if your browser cannot reach Google directly, a proxy server can access Google for you. Such a server is called a forward proxy.

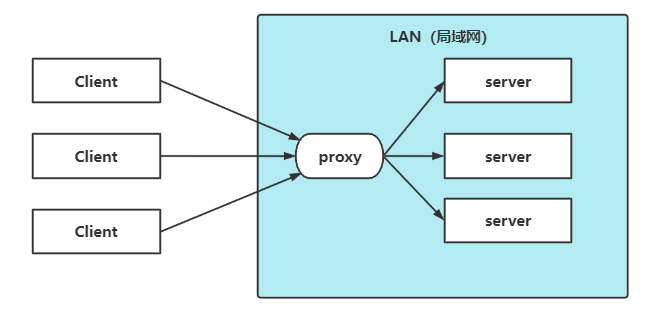

Reverse proxy

Definition

Compared with the normal flow, when a reverse proxy is used, the server that directly receives the request is the proxy server. It forwards the request to the server on the internal network that actually processes it and returns the result to the client. A reverse proxy hides the real server: it sends and receives requests on the server's behalf, so the real server is invisible to the client. Reverse proxies are also commonly used to handle cross-domain requests.

For example, in a restaurant you can order Sichuan, Cantonese, or Jiangsu-Zhejiang dishes, and the restaurant has chefs 👨‍🍳 for all three cuisines. As a customer, you don't care which chef cooks for you; you just order. The waiter assigns the dishes on your order to the appropriate chefs. The waiter is the reverse proxy server.

In short, a forward proxy acts on behalf of the client, while a reverse proxy acts on behalf of the server.
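As an illustration of the reverse proxy flow, here is a minimal Python sketch built only on the standard library. It is not how Nginx works internally: a hypothetical back-end server (standing in for the Tomcat instance used later) and a tiny proxy both run on free localhost ports, and the client only ever talks to the proxy, so the back-end address stays hidden. The handler names and the /edu/a.html path are made up for the example.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Ignore any http_proxy environment variables so localhost traffic stays local.
DIRECT = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class Backend(BaseHTTPRequestHandler):
    """Hypothetical real server that clients should never see directly."""

    def do_GET(self):
        self.reply(f"backend handled {self.path}".encode())

    def reply(self, body):
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):  # silence per-request logging
        pass


def make_proxy(upstream):
    """Return a handler class that forwards every GET to `upstream`."""

    class ReverseProxy(Backend):
        def do_GET(self):
            # The client only knows the proxy's address; `upstream` stays hidden.
            with DIRECT.open(upstream + self.path) as resp:
                self.reply(resp.read())

    return ReverseProxy


def serve(handler):
    """Start a threaded HTTP server on a free localhost port."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


backend = serve(Backend)
proxy = serve(make_proxy(f"http://127.0.0.1:{backend.server_address[1]}"))

with DIRECT.open(f"http://127.0.0.1:{proxy.server_address[1]}/edu/a.html") as r:
    reply = r.read().decode()
print(reply)  # backend handled /edu/a.html

for server in (proxy, backend):
    server.shutdown()
    server.server_close()
```

The proxy here always answers 200 and forwards only GET requests; a real reverse proxy also passes through status codes, headers, and request bodies.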

Advantages of using reverse proxy

A reverse proxy server can hide the existence and characteristics of the origin server. It acts as an intermediate layer between the Internet and the web server, which is good for security, especially when you use web hosting services.

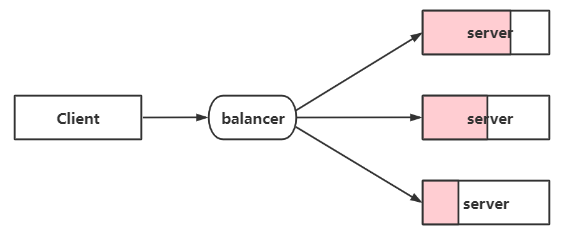

Load balancing

Typically, a client sends many requests to the server, which processes them (possibly operating on resources such as a database or static files) and returns the results to the client.

For an early-stage system with simple functional requirements and relatively few concurrent requests, this model is adequate and cheap. But as the amount of information, traffic, and data grows rapidly and the business becomes more complex, this approach can no longer meet the requirements; under very high concurrency, the server easily crashes.

When requests grow explosively, no single machine can keep up, however powerful it is. This is where clusters come in: instead of one server, several servers are used and requests are distributed among them, spreading the load across different servers. This is load balancing, and its core idea is "sharing the pressure". When we say Nginx implements load balancing, we generally mean that it forwards requests to a server cluster.

How load balancing works

To keep servers from crashing, load balancing shares the pressure among them: multiple servers are formed into a cluster. Users first reach a forwarding server, which then distributes each request to a server under less pressure.

Load balancing strategy

Polling (round robin)

Requests are assigned to the back-end servers one at a time, in turn; this is the default strategy. If a back-end server goes down, it is automatically removed from the rotation.

upstream myserver {

server 127.0.0.1:8080;

server 127.0.0.1:8081;

}
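A minimal Python sketch of round-robin selection, assuming the two back-end addresses from the configuration above. Servers marked as down are skipped, which mirrors the "automatically removed" behavior described; Nginx itself infers that a server is down from failed requests, whereas this toy class leaves marking to the caller.

```python
from itertools import count


class RoundRobin:
    """Hand out back-end servers in turn, skipping any marked as down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.down = set()          # servers currently considered unavailable
        self._ticket = count()     # ever-increasing position in the rotation

    def pick(self):
        # Look at most len(servers) positions ahead so we never loop forever.
        for _ in range(len(self.servers)):
            server = self.servers[next(self._ticket) % len(self.servers)]
            if server not in self.down:
                return server
        raise RuntimeError("no healthy back-end servers")


lb = RoundRobin(["127.0.0.1:8080", "127.0.0.1:8081"])
first_round = [lb.pick() for _ in range(4)]
print(first_round)
# ['127.0.0.1:8080', '127.0.0.1:8081', '127.0.0.1:8080', '127.0.0.1:8081']

lb.down.add("127.0.0.1:8081")  # simulate the 8081 server going down
after_failure = [lb.pick() for _ in range(3)]
print(after_failure)
# ['127.0.0.1:8080', '127.0.0.1:8080', '127.0.0.1:8080']
```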

Weight

- The greater a server's weight, the larger its share of requests and the higher its access probability

- Weights are mainly used when back-end servers differ in performance. They can also be set differently in a master-slave setup so that host resources are used rationally and effectively.

upstream myserver {

server 127.0.0.1:8080 weight=8;

server 127.0.0.1:8081 weight=2;

}

- The higher the weight, the more likely a server is to be chosen. In the example above, 8080 and 8081 receive about 80% and 20% of requests respectively.
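Nginx's round-robin module is generally described as using a "smooth" weighted round robin, which spreads the heavier server's turns out instead of sending eight requests in a row to one server. The Python sketch below implements that scheme as commonly described, for the 8:2 weights above; treat it as an illustration rather than Nginx's source (it omits the effective-weight reduction Nginx applies after failures).

```python
from collections import Counter


def smooth_weighted_rr(weights, n):
    """Return n picks using smooth weighted round robin.

    weights: dict mapping server -> weight.
    """
    current = {server: 0 for server in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        # Every server earns its weight; the leader is chosen and pays back the total.
        for server, weight in weights.items():
            current[server] += weight
        best = max(current, key=current.get)
        current[best] -= total
        picks.append(best)
    return picks


picks = smooth_weighted_rr({"127.0.0.1:8080": 8, "127.0.0.1:8081": 2}, 10)
print(picks[:5])       # ['127.0.0.1:8080', '127.0.0.1:8080', '127.0.0.1:8081', '127.0.0.1:8080', '127.0.0.1:8080']
print(Counter(picks))  # Counter({'127.0.0.1:8080': 8, '127.0.0.1:8081': 2})
```

Over every 10 requests the split is exactly 8:2, but 8081 is interleaved into the sequence rather than served at the end.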

ip_hash (IP binding)

Each request is assigned according to a hash of the client's IP address, so visitors from the same IP always reach the same back-end server. This effectively solves the session-sharing problem for dynamic web pages.

upstream myserver {

ip_hash;

server 127.0.0.1:8080;

server 127.0.0.1:8081;

}
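Nginx's documentation states that ip_hash uses the first three octets of a client's IPv4 address (or the whole IPv6 address) as the hash key. The Python sketch below mimics that for IPv4 only, using CRC32 as a stand-in hash function; it does not reproduce Nginx's actual hash values.

```python
import zlib


def ip_hash_pick(client_ip, servers):
    """Map a client IPv4 address to one back-end server.

    Only the first three octets form the key, so clients in the same /24
    network land on the same server. CRC32 is a stand-in hash function.
    """
    key = ".".join(client_ip.split(".")[:3])
    return servers[zlib.crc32(key.encode()) % len(servers)]


servers = ["127.0.0.1:8080", "127.0.0.1:8081"]
a = ip_hash_pick("203.0.113.7", servers)
print(a == ip_hash_pick("203.0.113.7", servers))   # True: same IP, same server
print(a == ip_hash_pick("203.0.113.99", servers))  # True: same first three octets
```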

fair (third-party module)

- The third-party upstream_fair module must be installed

- Compared with weight and ip_hash, fair is a smarter load-balancing algorithm: it balances load according to page size and load time, giving priority to servers with short response times

- In other words, requests go to whichever server responds fastest

upstream myserver {

server 127.0.0.1:8080;

server 127.0.0.1:8081;

fair;

}
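The third-party module's exact algorithm is not described here, so the following Python sketch only approximates the idea: it keeps a smoothed (exponentially weighted) average response time per server and sends the next request to the fastest one. The smoothing factor alpha=0.5 is an arbitrary assumption, and untried servers (average 0.0) are picked first so that every server gets measured.

```python
class FastestFirst:
    """Pick the server with the lowest smoothed response time (approximates 'fair')."""

    def __init__(self, servers, alpha=0.5):
        self.alpha = alpha                      # weight given to the newest sample
        self.avg = {s: 0.0 for s in servers}    # 0.0 = untried, so it is picked first

    def pick(self):
        return min(self.avg, key=self.avg.get)

    def record(self, server, seconds):
        # Exponentially weighted moving average of observed response times.
        old = self.avg[server]
        self.avg[server] = seconds if old == 0.0 else self.alpha * seconds + (1 - self.alpha) * old


lb = FastestFirst(["127.0.0.1:8080", "127.0.0.1:8081"])
lb.record("127.0.0.1:8080", 0.30)   # 8080 answered slowly
lb.record("127.0.0.1:8081", 0.05)   # 8081 answered quickly
print(lb.pick())                     # 127.0.0.1:8081
```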

url_hash (third-party module)

- The Nginx hash module must be installed (since nginx 1.7.2, the built-in hash directive, for example hash $request_uri;, provides the same behavior)

- Requests are assigned according to a hash of the requested URL, so each URL is always directed to the same back-end server. This further improves the efficiency of back-end cache servers
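A plain modulo of the URL's hash would reassign most URLs whenever a back-end server is added or removed, which throws away the caches this strategy is meant to exploit. Consistent hashing limits that churn, and Nginx's built-in hash directive can use it through its consistent parameter (ketama). The Python sketch below is a simplified hash ring, not Nginx's ketama code; MD5 point placement, 100 virtual nodes per server, and the third server 127.0.0.1:8082 are assumptions.

```python
import bisect
import hashlib


class HashRing:
    """Simplified consistent-hash ring mapping URLs to back-end servers."""

    def __init__(self, servers, vnodes=100):
        self.ring = []  # sorted list of (point, server) virtual nodes
        for server in servers:
            for i in range(vnodes):
                self.ring.append((self._point(f"{server}#{i}"), server))
        self.ring.sort()
        self.points = [p for p, _ in self.ring]

    @staticmethod
    def _point(key):
        # First 8 bytes of MD5 as an integer position on the ring.
        return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")

    def pick(self, url):
        # Walk clockwise to the first virtual node at or after the URL's point.
        i = bisect.bisect_left(self.points, self._point(url)) % len(self.points)
        return self.ring[i][1]


urls = [f"/edu/page{i}.html" for i in range(1000)]
before = HashRing(["127.0.0.1:8080", "127.0.0.1:8081", "127.0.0.1:8082"])
after = HashRing(["127.0.0.1:8080", "127.0.0.1:8081"])  # 8082 removed

moved = sum(before.pick(u) != after.pick(u) for u in urls)
on_8082 = sum(before.pick(u) == "127.0.0.1:8082" for u in urls)
print(moved == on_8082)  # True: only the URLs that lived on 8082 move
```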

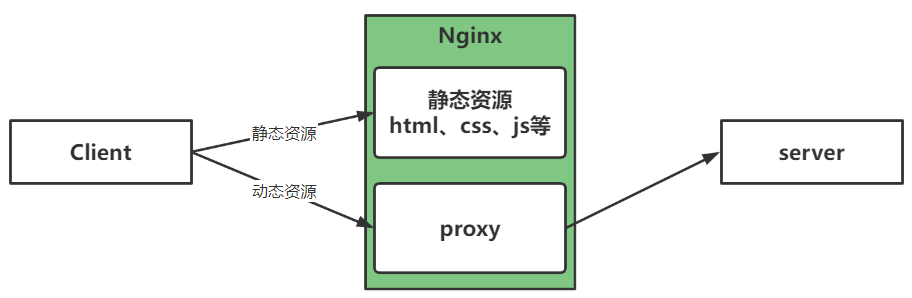

Dynamic and static separation

To speed up a website, dynamic and static pages can be served by different servers, which speeds up processing and reduces the load on what was originally a single server.

In general, dynamic resources should be separated from static ones. Because Nginx handles high concurrency well and can cache static resources, static resources are often deployed on Nginx. If a request is for a static resource, it is served directly from the static resource directory; if it is for a dynamic resource, Nginx acts as a reverse proxy and forwards it to the corresponding back-end application. This achieves dynamic/static separation.

Once dynamic and static content are separated, static resources are served much faster, and even if the dynamic service is unavailable, access to static resources is unaffected.
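The routing decision behind dynamic/static separation can be sketched in Python as below. It plays the role of an Nginx regex location on file suffixes plus a proxy_pass fallback; the suffix list, the static root, and the back-end address are assumptions chosen to match this article's examples.

```python
from pathlib import PurePosixPath

# Hypothetical routing table: suffixes served from disk vs. forwarded to the app.
STATIC_SUFFIXES = {".html", ".css", ".js", ".png", ".jpg", ".gif"}
STATIC_ROOT = "/usr/share/nginx/html"
BACKEND = "http://127.0.0.1:8080"


def route(uri):
    """Return ('static', file path) or ('proxy', upstream URL) for a request URI."""
    path = uri.split("?", 1)[0]  # the query string does not affect the suffix
    if PurePosixPath(path).suffix.lower() in STATIC_SUFFIXES:
        return ("static", STATIC_ROOT + path)
    return ("proxy", BACKEND + uri)


print(route("/images/logo.png"))   # ('static', '/usr/share/nginx/html/images/logo.png')
print(route("/api/orders?id=7"))   # ('proxy', 'http://127.0.0.1:8080/api/orders?id=7')
```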

Advantages and disadvantages of Nginx

Advantages

- Low memory consumption, high concurrency, and fast response

- Can act as an HTTP server, virtual host, reverse proxy, and load balancer

- Simple configuration

- Does not expose the real server's IP address

Disadvantages

Weak dynamic processing: Nginx handles static files well with little memory, but it is slow at processing dynamic pages. Nowadays Nginx is generally placed at the front end as a reverse proxy to absorb the load.

Application scenario

- HTTP server: Nginx can provide HTTP services on its own and can act as a static web server

- Virtual hosting: multiple websites can be hosted on one server, as is common for personal websites

- Reverse proxy and load balancing: when traffic grows to the point that a single server cannot handle all user requests, a cluster of servers is needed, with Nginx acting as the reverse proxy. Nginx also spreads the load evenly across the servers, so that no server goes down from overload while another sits idle

- Security management: Nginx can also be configured for security, for example as an API gateway that intercepts calls to each interface service

Nginx installation, common commands and configuration files

Nginx installation

yum cannot install Nginx at first because the repository rpm package is missing, so add it:

rpm -Uvh http://nginx.org/packages/centos/7/noarch/RPMS/nginx-release-centos-7-0.el7.ngx.noarch.rpm

Then install Nginx with yum or up2date

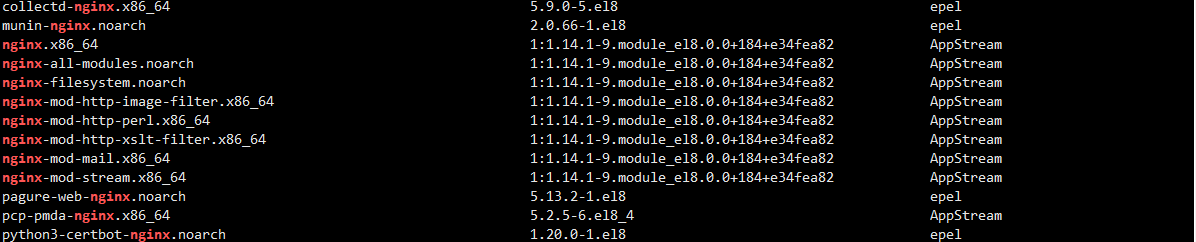

yum list | grep nginx

# Install
yum install nginx
# View the installed version
nginx -v

View installation folder

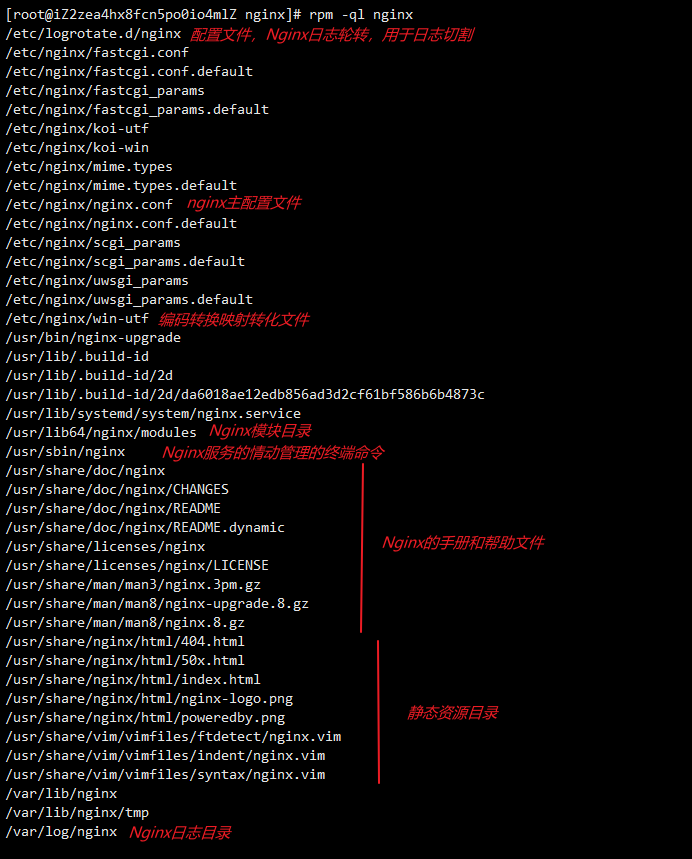

rpm -ql nginx

The /etc/nginx/conf.d/ folder stores the sub-configuration files. By default, the main configuration file /etc/nginx/nginx.conf includes all sub-configuration files in this folder

The /usr/share/nginx/html/ folder is where static files are usually placed, though you can place them elsewhere according to your own habits

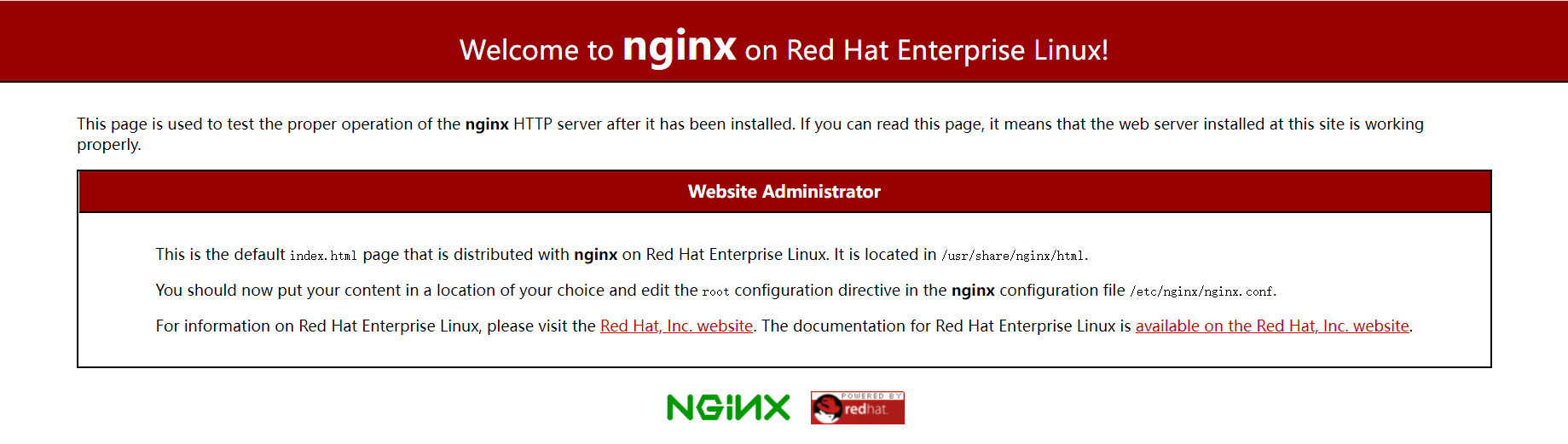

Access the Nginx server via its IP address and port number

Configure the firewall and open port 80

systemctl start firewalld    # Turn on the firewall
systemctl stop firewalld     # Turn off the firewall
systemctl status firewalld   # Check the firewall status; "running" means it is on
# Open a port; --permanent keeps it open across restarts (without it, the rule is lost after a restart)
firewall-cmd --permanent --zone=public --add-port=80/tcp
firewall-cmd --reload        # Reload the firewall so that permanent rules take effect
firewall-cmd --list-all      # View the firewall settings, including opened ports

Start Nginx

systemctl start nginx

Nginx common commands

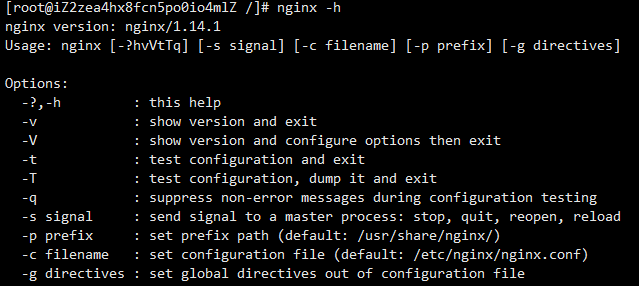

Run nginx -h in the console to see the complete list of Nginx command-line options

nginx -s reload   # Signal the master process to reload the configuration file (hot reload)
nginx -s reopen   # Reopen the log files
nginx -s stop     # Fast shutdown
nginx -s quit     # Graceful shutdown: wait for worker processes to finish current requests
nginx -T          # Test the configuration and print the complete effective configuration
nginx -t -c <configuration path>   # Check the configuration for errors; -c is not needed for the default configuration file

systemctl is the main command of systemd, the Linux system and service manager. It is mainly used to manage system services, and we can also use it to manage Nginx

systemctl start nginx     # Start Nginx
systemctl stop nginx      # Stop Nginx
systemctl restart nginx   # Restart Nginx
systemctl reload nginx    # Reload Nginx after modifying the configuration
systemctl enable nginx    # Start Nginx at boot
systemctl disable nginx   # Do not start Nginx at boot
systemctl status nginx    # View Nginx running status

Nginx configuration file

The Nginx configuration file is located at /etc/nginx/nginx.conf

Structure diagram

main                  # Global configuration, effective globally
├── events            # Settings that affect network connections between the Nginx server and users
├── http              # Most features: proxying, caching, log definitions, third-party modules
│   ├── upstream      # Addresses of back-end servers; an integral part of load-balancing configuration
│   ├── server        # Virtual host parameters; an http block can contain multiple server blocks
│   ├── server
│   │   ├── location  # A server block can contain multiple location blocks; location matches the URI
│   │   ├── location
│   │   └── ...
│   └── ...
└── ...

Typical configuration

user nginx; # The running user is nginx by default, which can be left unset

worker_processes 1; # Number of Nginx worker processes; usually set to the number of CPU cores

error_log /var/log/nginx/error.log warn; # Location of the Nginx error log

pid /var/run/nginx.pid; # File that stores the PID of the Nginx master process

events {

use epoll; # Use the epoll I/O model (if this is omitted, Nginx automatically selects the most suitable method for the operating system)

worker_connections 1024; # Maximum number of simultaneous connections per worker process

}

http { # Most functions such as proxy, cache, log definition and the configuration of third-party modules are set here

# Set log mode

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main; # Nginx access log storage location

sendfile on; # Enable efficient file transfer (sendfile)

tcp_nopush on; # Reduce the number of network packets (takes effect together with sendfile)

tcp_nodelay on;

keepalive_timeout 65; # Keep-alive connection timeout, in seconds

types_hash_max_size 2048;

include /etc/nginx/mime.types; # File extension and type mapping table

default_type application/octet-stream; # Default file type

include /etc/nginx/conf.d/*.conf; # Load child configuration item

server {

listen 80; # Configure listening ports

server_name localhost; # Configured domain name

location / {

root /usr/share/nginx/html; # Site root

index index.html index.htm; # Default home page file

deny 172.168.22.11; # IP address denied access (can also be all)

allow 172.168.33.44; # IP address allowed access (can also be all)

}

error_page 500 502 503 504 /50x.html; # Page returned for server errors

error_page 400 404 /error.html; # Page returned for client errors

}

}

The structure of an Nginx configuration file is shown in the nginx.conf example above. Its syntax rules are as follows:

- The configuration file consists of directives and directive blocks;

- Each directive ends with a semicolon (;), and a directive and its parameters are separated by spaces;

- A directive block groups multiple directives with {} braces;

- The include statement combines multiple configuration files to improve maintainability;

- Use the # symbol to add comments and improve readability;

- Use the $ symbol to reference variables;

- Some directives accept regular expressions as parameters;

Nginx configuration instance

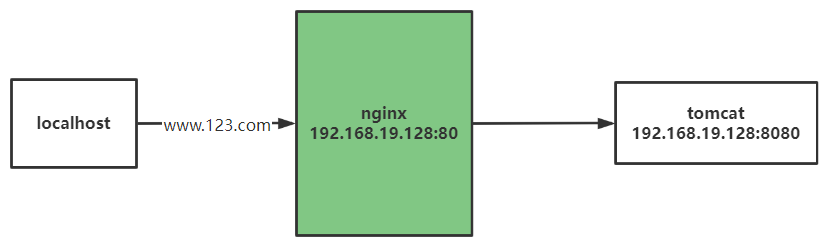

Reverse proxy configuration instance

Example 1

Install a Tomcat server on Linux and open firewall port 8080 to test access

Configure the domain name locally in the hosts file:

C:\Windows\System32\drivers\etc\hosts

Configure the proxy target in nginx

server {

listen 80 default_server;

listen [::]:80 default_server;

server_name _;

root /usr/share/nginx/html;

# Load configuration files for the default server block.

include /etc/nginx/default.d/*.conf;

location / {

proxy_pass http://127.0.0.1:8080;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

Example 2

nginx configuration file

server {

listen 18081 default_server;

# listen [::]:80 default_server;

server_name _;

root /usr/share/nginx/html;

# Load configuration files for the default server block.

include /etc/nginx/default.d/*.conf;

# regular expression

location ~ /edu/ {

proxy_pass http://127.0.0.1:8080;

}

location ~ /vod/ {

proxy_pass http://127.0.0.1:8081;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

Load balancing configuration instance

Both Tomcat instances (8080 and 8081) contain /edu/a.html in their webapps. Nginx listens on port 80 and load-balances requests to ports 8080 and 8081

upstream myserver {

server 127.0.0.1:8080;

server 127.0.0.1:8081;

}

server {

listen 80 default_server;

listen [::]:80 default_server;

server_name _;

root /usr/share/nginx/html;

# Load configuration files for the default server block.

include /etc/nginx/default.d/*.conf;

location / {

proxy_pass http://myserver;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

Example of dynamic and static separation

Two ways

- Put static files on a separate domain name and an independent server; this is also a popular scheme

- Publish dynamic and static files together and separate them through the Nginx configuration

Different requests are forwarded by matching different suffixes or paths in location blocks. Setting the expires directive tells the browser how long it may cache a resource, which reduces requests and traffic to the server. Specifically, expires gives a resource an expiration time: until then, the browser uses its cached copy directly, without asking the server to validate it, so no extra traffic is generated. This suits resources that rarely change (for frequently updated files, caching with expires is not recommended). For example, with expires 3d; the browser reuses its cached copy for three days. After that, it sends a request to the server; if the file's last-modified time has not changed, the file is not downloaded again and the server returns status 304. If the file has been modified, it is downloaded from the server again with status 200

location /www/ {

root /data/;

}

location /images/ {

root /data/;

autoindex on; # Lists the contents of the folder

}
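The caching behavior described above can be modeled with a small Python function. This is a simplified, assumption-based model: it covers only an expires-style freshness window (Nginx's expires directive sets the Expires and Cache-Control: max-age response headers) plus Last-Modified revalidation, and ignores ETag, other Cache-Control options, and browser-specific reload behavior.

```python
from datetime import datetime, timedelta, timezone

EXPIRES = timedelta(days=3)  # corresponds to "expires 3d;"


def browser_fetch(cache_entry, now, server_last_modified):
    """Decide how a browser obtains a resource, given an earlier cached copy.

    cache_entry: dict with 'fetched_at' and 'last_modified', or None.
    Returns (where the content came from, HTTP status seen or None if no request).
    """
    if cache_entry and now < cache_entry["fetched_at"] + EXPIRES:
        return ("local cache", None)   # still fresh: no request is sent at all
    if cache_entry and server_last_modified <= cache_entry["last_modified"]:
        return ("local cache", 304)    # conditional request: not modified
    return ("server", 200)             # no copy, or the file changed: full download


t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
entry = {"fetched_at": t0, "last_modified": t0 - timedelta(days=10)}
print(browser_fetch(entry, t0 + timedelta(days=1), entry["last_modified"]))  # ('local cache', None)
print(browser_fetch(entry, t0 + timedelta(days=4), entry["last_modified"]))  # ('local cache', 304)
print(browser_fetch(entry, t0 + timedelta(days=4), t0 + timedelta(days=2)))  # ('server', 200)
```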

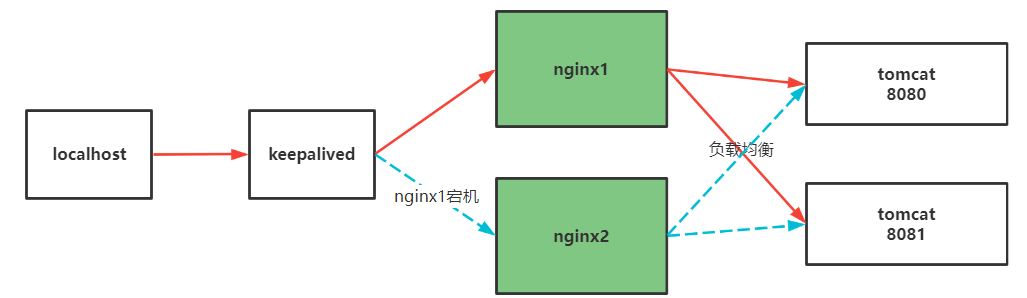

High availability configuration instance

Steps:

- Deploy the Nginx1 server and two Tomcat servers (tomcat8080 and tomcat8081) on a remote Alibaba Cloud server

- Configure the Nginx2 server on a local Linux virtual machine

- Following the load balancing example above, configure port 80 on both servers to load-balance to the two Tomcat servers

- Install keepalived on both servers:

yum install keepalived -y

- Edit /etc/keepalived/keepalived.conf: define an external health-check mechanism in vrrp_script, and reference it with track_script inside vrrp_instance VI_1 so that keepalived tracks the script's result and fails over between nodes:

global_defs {
    notification_email {
        acassen@firewall.loc
    }
    notification_email_from Alexandre@firewall.loc
    smtp_server 127.0.0.1
    smtp_connect_timeout 30      # The settings above configure e-mail notifications
    router_id LVS_DEVEL          # Name of the current server; view it with the hostname command
}

vrrp_script chk_maintainace {    # Name of the health-check script
    # script "[[ -e /etc/keepalived/down ]] && exit 1 || exit 0"   # Can be a script path or an inline command
    script "/usr/local/src/nginx_check.sh"   # Path of the check script
    interval 2                   # Run the check every 2 seconds
    weight 2                     # Positive weight: add 2 to the priority while the script succeeds
}

vrrp_instance VI_1 {             # Each vrrp_instance defines a virtual router
    state MASTER                 # MASTER on the primary node, BACKUP on the standby
    interface ens33              # Network interface name (see ifconfig)
    virtual_router_id 51         # Virtual router ID (below 255); must be identical on primary and standby
    priority 100                 # Priority; the primary must be higher than the standby
    advert_int 1                 # Heartbeat (advertisement) interval in seconds
    authentication {             # Authentication settings
        auth_type PASS
        auth_pass 1111           # Password
    }
    track_script {
        chk_maintainace          # Track the health-check script defined above
    }
    virtual_ipaddress {
        192.168.19.135           # Virtual IP (VIP)
    }
}

Detection script /usr/local/src/nginx_check.sh:

#!/bin/bash
A=`ps -C nginx --no-header | wc -l`
if [ $A -eq 0 ]; then
    /usr/sbin/nginx                  # Try to restart nginx
    sleep 2                          # Wait 2 seconds
    if [ `ps -C nginx --no-header | wc -l` -eq 0 ]; then
        killall keepalived           # Restart failed: stop keepalived so the VIP drifts to a backup node
    fi
fi

- Start nginx and keepalived on both Linux machines

- Test by shutting down the primary Nginx server
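The failover logic can be sketched as a simplified priority election in Python. It assumes keepalived's rule for a tracked script's weight (a positive weight is added while the script succeeds, a negative weight is added while it fails) and ignores VRRP timing, preemption settings, and tie-breaking. The standby's priority of 90 is a hypothetical value; the article only shows the MASTER configuration.

```python
def effective_priority(node):
    """Priority after the tracked script's weight is applied (simplified keepalived rule)."""
    priority, weight = node["priority"], node["weight"]
    if weight > 0 and node["script_ok"]:
        priority += weight      # positive weight: bonus while the check succeeds
    elif weight < 0 and not node["script_ok"]:
        priority += weight      # negative weight: penalty while the check fails
    return priority


def elect_master(nodes):
    """Return the node holding the VIP: the live node with the highest priority."""
    alive = [name for name, node in nodes.items() if node["keepalived_running"]]
    if not alive:
        return None
    return max(alive, key=lambda name: effective_priority(nodes[name]))


# nginx2's priority of 90 is an assumption; the article shows only the MASTER config.
nodes = {
    "nginx1": {"priority": 100, "weight": 2, "script_ok": True, "keepalived_running": True},
    "nginx2": {"priority": 90, "weight": 2, "script_ok": True, "keepalived_running": True},
}
normal = elect_master(nodes)
print(normal)          # nginx1 (102 vs 92)

nodes["nginx1"]["script_ok"] = False
check_failed = elect_master(nodes)
print(check_failed)    # nginx1 (100 vs 92): the +2 weight alone does not move the VIP

nodes["nginx1"]["keepalived_running"] = False   # nginx_check.sh ran "killall keepalived"
after_kill = elect_master(nodes)
print(after_kill)      # nginx2 takes over the VIP 192.168.19.135
```

In this model the small positive weight cannot outweigh the 10-point priority gap, which is why the check script stops keepalived entirely when Nginx cannot be restarted.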

Nginx execution principle

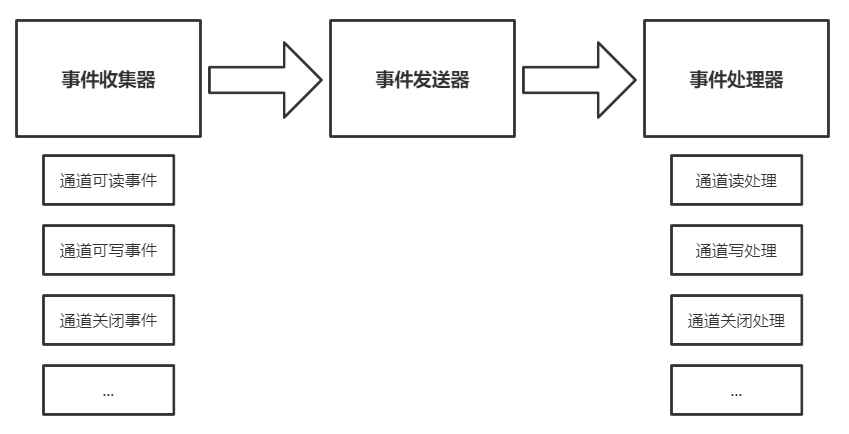

Reactor model

In principle it has much in common with Netty, the underlying communication framework in Java

Nginx uses the Reactor event-driven model to handle highly concurrent I/O. The Reactor model consists of three basic units: the event collector, the event dispatcher, and the event handler. Its core idea is to register all I/O events to be processed with an I/O multiplexer while the main thread/process blocks on the multiplexer. Once an I/O event arrives or becomes ready (a file descriptor or socket becomes readable or writable), the multiplexer returns and dispatches the registered I/O events to the corresponding handlers

- Event collector: collects the various I/O requests of the Worker process

- Event dispatcher: sends I/O events to the event handlers

- Event handler: responds to the various events

The event collector places the I/O events of each connection channel into a waiting event queue, and the event dispatcher delivers them to the corresponding event handlers. The event collector can manage millions of connections at the same time because it relies on the I/O multiplexing technology provided by the operating system; common models include select and epoll
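The three roles above can be illustrated with Python's selectors module, whose DefaultSelector uses epoll on Linux. This single-threaded sketch is an analogy, not Nginx's code: select() plays the event collector, the loop that calls each registered callback plays the dispatcher, and handle_read is an event handler. socketpair() connections stand in for accepted client connections, and the upper-casing "response" is made up.

```python
import selectors
import socket

sel = selectors.DefaultSelector()  # uses epoll on Linux, kqueue or select elsewhere


def handle_read(conn):
    """Event handler: read a request and answer it (here: echo it upper-cased)."""
    data = conn.recv(1024)
    if data:
        conn.sendall(data.upper())
    else:  # an empty read means the peer closed the connection
        sel.unregister(conn)
        conn.close()


def run_once(timeout=1.0):
    """One loop turn: collect ready events and dispatch each to its handler."""
    events = sel.select(timeout)        # event collector: wait until I/O is ready
    for key, _mask in events:
        key.data(key.fileobj)           # event dispatcher -> registered handler
    return len(events)


# socketpair() connections stand in for accepted client connections.
clients = []
for _ in range(2):
    server_side, client_side = socket.socketpair()
    server_side.setblocking(False)
    sel.register(server_side, selectors.EVENT_READ, data=handle_read)
    clients.append(client_side)

clients[0].sendall(b"hello")
clients[1].sendall(b"nginx")

handled = 0
while handled < len(clients):           # loop until both requests have been served
    handled += run_once()

replies = [c.recv(1024) for c in clients]
print(replies)  # [b'HELLO', b'NGINX']

for c in clients:
    c.close()
while sel.get_map():                    # each EOF makes the handler close its socket
    run_once()
sel.close()
```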

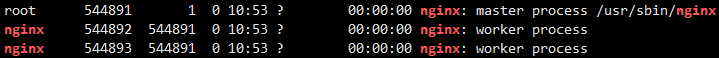

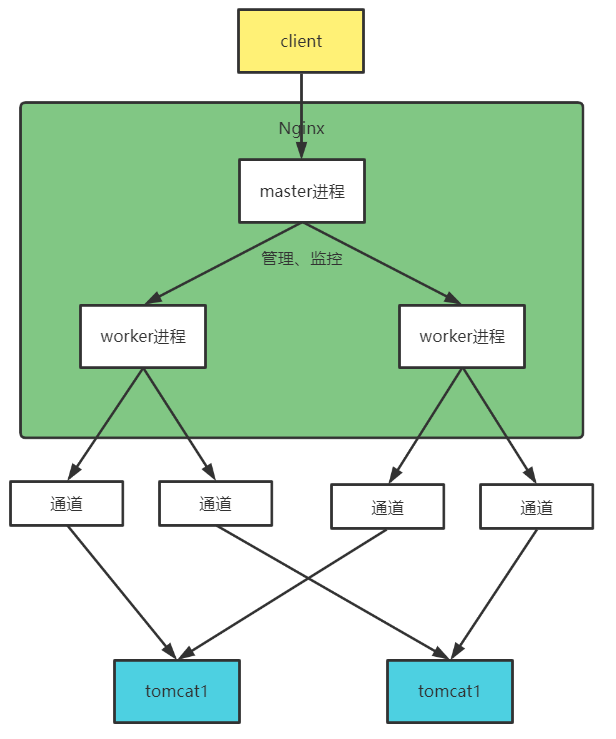

Two types of processes in Nginx

Generally, after startup Nginx runs in the background as a daemon. There are two kinds of background processes: the master process (the management process) and the worker processes

- The master process is mainly responsible for managing the worker processes: loading the configuration, starting worker processes, receiving external signals, sending signals to each worker, and monitoring the workers' running status

- The master creates the listening sockets and hands them over to the worker processes, which accept connections on them

- Worker processes handle network events. When a worker accepts a connection, it reads, parses, and processes the request, generates the response data, returns it to the client, and finally closes the connection

- Worker processes are peers and independent of one another. They compete equally for client requests, and a request is processed in only one worker process (in Nginx's Reactor model, requests are handled only by worker processes)

- When multiple worker processes are started, each worker independently tries to accept connections on the shared listening socket. When the accept lock (accept_mutex) is enabled, which was the default before nginx 1.11.3, the accept operation is serialized by a lock: an atomic lock in shared memory if the operating system supports it, otherwise a file lock

Nginx can be started in two modes:

- Single-process mode (debugging): only one process runs, acting as both the master and the worker process

- Multi-process mode (production): exactly one master process and at least one worker process run (the number of worker processes is usually configured according to the machine's CPU cores)

Rate limiting: principle and practice

In networking, rate limiting controls the rate at which a network interface sends and receives data, in order to optimize performance, reduce latency, and improve bandwidth utilization

For example, suppose an interface can handle 10,000 QPS and 20,000 requests arrive. The rate-limiting module lets 10,000 requests through first and holds the others back for a while, instead of bluntly returning an error such as 404 and making the client retry. This also shaves traffic peaks
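The scenario above can be approximated with the simplest algorithm, a fixed-window counter, sketched in Python below. Note one difference from the description: this sketch rejects the extra 10,000 requests outright rather than holding them back (queuing is what a leaky bucket provides), and a fixed window can let up to twice the limit through around a window boundary. The clock is passed in explicitly so the example is deterministic.

```python
class FixedWindowLimiter:
    """Counter (fixed-window) rate limiting: at most `limit` requests per window."""

    def __init__(self, limit, window_seconds=1.0):
        self.limit = limit
        self.window = window_seconds
        self.window_start = None
        self.count = 0

    def allow(self, now):
        # Start a new window once the current one has elapsed.
        if self.window_start is None or now - self.window_start >= self.window:
            self.window_start = now
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False


limiter = FixedWindowLimiter(limit=10_000)
passed = sum(limiter.allow(now=0.5) for _ in range(20_000))
print(passed)                      # 10000
next_window = limiter.allow(now=1.6)
print(next_window)                 # True: a new one-second window has started
```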

Common interface rate-limiting algorithms: counter (fixed window), leaky bucket, and token bucket
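Nginx's limit_req module documents its limiting as the leaky bucket method. The Python sketches below contrast a leaky bucket (requests queue up and leave at a constant rate, which smooths bursts) with a token bucket (tokens refill at a constant rate, which lets a burst through immediately). Both are simplified models rather than Nginx's code; the rate and capacity values are hypothetical, and the clock is passed in explicitly.

```python
from collections import deque


class LeakyBucket:
    """Queue requests and let them out at a constant rate (smooths bursts)."""

    def __init__(self, rate, capacity):
        self.interval = 1.0 / rate      # seconds between two releases
        self.capacity = capacity        # how many requests may wait in the bucket
        self.next_free = 0.0            # earliest time the next request may leave
        self.waiting = deque()          # release times of requests still in the bucket

    def submit(self, now):
        """Return the time at which the request is processed, or None if rejected."""
        while self.waiting and self.waiting[0] <= now:
            self.waiting.popleft()      # these requests have already leaked out
        if len(self.waiting) >= self.capacity:
            return None                 # bucket full: overflow is rejected
        start = max(now, self.next_free)
        self.next_free = start + self.interval
        self.waiting.append(start)
        return start


class TokenBucket:
    """Refill tokens at a constant rate; each request spends one (allows bursts)."""

    def __init__(self, rate, capacity):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)   # start full, so an initial burst is allowed
        self.last = 0.0

    def allow(self, now):
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


leaky = LeakyBucket(rate=2, capacity=3)
print([leaky.submit(now=0.0) for _ in range(5)])  # [0.0, 0.5, 1.0, 1.5, None]

tokens = TokenBucket(rate=2, capacity=3)
print([tokens.allow(now=0.0) for _ in range(5)])  # [True, True, True, False, False]
print(tokens.allow(now=1.0))                      # True: two tokens were refilled
```

With the same burst of five requests, the leaky bucket spreads the accepted ones out at 0.5-second intervals, while the token bucket serves three at once.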

Nginx interview questions

What is Nginx

Nginx is a lightweight, high-performance reverse proxy web server. It implements reverse proxying and load balancing very efficiently and can handle 20,000 to 30,000 concurrent connections; official tests report support for 50,000 concurrent connections

Why use Nginx

- Cross-platform, simple configuration, reverse proxy, high concurrency, and low memory consumption: 10 Nginx processes take up only about 150 MB of memory. Nginx handles static files well with little memory

- Built-in health checks: if a back-end server goes down, requests are no longer sent to it, and a failed request is resubmitted to another node

- Other features:

- Saves bandwidth: supports GZIP compression and browser-side caching headers

- High stability: downtime is very rare

- Accepts user requests asynchronously
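Nginx's open-source health checking is passive: a server is marked unavailable for fail_timeout seconds after max_fails failed attempts within fail_timeout (defaults: 1 and 10 seconds), and proxy_next_upstream controls retrying a failed request on the next server. The Python sketch below is a simplified model of that behavior; the fake_call back end and the addresses are hypothetical.

```python
class PassiveHealth:
    """Simplified model of Nginx's max_fails / fail_timeout behaviour."""

    def __init__(self, servers, max_fails=1, fail_timeout=10.0):
        self.servers = list(servers)
        self.max_fails, self.fail_timeout = max_fails, fail_timeout
        self.fails = {s: [] for s in self.servers}       # timestamps of recent failures
        self.down_until = {s: 0.0 for s in self.servers}

    def report_failure(self, server, now):
        # Keep only failures inside the fail_timeout window, then add this one.
        recent = [t for t in self.fails[server] if now - t < self.fail_timeout]
        recent.append(now)
        self.fails[server] = recent
        if len(recent) >= self.max_fails:
            self.down_until[server] = now + self.fail_timeout
            self.fails[server] = []

    def available(self, now):
        return [s for s in self.servers if now >= self.down_until[s]]


def send(request, health, now, call):
    """Try each available server in turn; on failure, mark it and retry the next."""
    for server in health.available(now):
        try:
            return call(server, request)
        except ConnectionError:
            health.report_failure(server, now)
    raise ConnectionError("all back-end servers failed")


def fake_call(server, request):
    # Hypothetical back end: 8080 is down, 8081 works.
    if server.endswith(":8080"):
        raise ConnectionError(server)
    return f"{request} served by {server}"


health = PassiveHealth(["127.0.0.1:8080", "127.0.0.1:8081"])
print(send("GET /", health, now=0.0, call=fake_call))  # GET / served by 127.0.0.1:8081
print(health.available(now=5.0))    # ['127.0.0.1:8081']
print(health.available(now=10.0))   # ['127.0.0.1:8080', '127.0.0.1:8081']
```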

Why is Nginx high performance

Because of its asynchronous, non-blocking event-processing mechanism: it uses the epoll model, which provides an event queue so that ready events are handled in turn without blocking

How does Nginx handle requests

After Nginx receives a request, it first uses the listen and server_name directives to find the matching server block, and then matches the request URI against the location blocks in that server block. The matched location determines where the request is actually handled
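The second step, choosing a location, follows a documented order: an exact (=) match wins; otherwise the longest matching prefix is remembered and used immediately if it carries ^~; otherwise regular expressions (~ case-sensitive, ~* case-insensitive) are tried in configuration order and the first match wins; if none matches, the remembered prefix is used. The Python sketch below encodes that order in simplified form (no nested or named locations, and Python's re stands in for PCRE); server selection by listen and server_name is not modeled.

```python
import re


def match_location(uri, locations):
    """Pick a location in the order Nginx's documentation describes (simplified).

    locations: list of (modifier, pattern) in config order, where modifier is
    "=", "^~", "~", "~*" or "" (plain prefix).
    """
    # 1. An exact match wins immediately.
    for mod, pattern in locations:
        if mod == "=" and uri == pattern:
            return (mod, pattern)
    # 2. Remember the longest matching prefix location.
    prefixes = [(m, p) for m, p in locations if m in ("", "^~") and uri.startswith(p)]
    longest = max(prefixes, key=lambda mp: len(mp[1]), default=None)
    if longest and longest[0] == "^~":
        return longest  # ^~ skips the regex search
    # 3. Regular expressions are checked in configuration order; first match wins.
    for mod, pattern in locations:
        if mod == "~" and re.search(pattern, uri):
            return (mod, pattern)
        if mod == "~*" and re.search(pattern, uri, re.IGNORECASE):
            return (mod, pattern)
    # 4. Otherwise fall back to the longest prefix (None if nothing matched).
    return longest


locations = [
    ("=", "/"),
    ("", "/"),
    ("^~", "/images/"),
    ("~*", r"\.(gif|jpg|png)$"),
    ("", "/edu/"),
]
print(match_location("/", locations))              # ('=', '/')
print(match_location("/images/a.png", locations))  # ('^~', '/images/')
print(match_location("/edu/a.PNG", locations))     # ('~*', '\\.(gif|jpg|png)$')
print(match_location("/edu/a.html", locations))    # ('', '/edu/')
```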

Forward proxy and reverse proxy

- Forward proxy: the client sends its request to a proxy it has chosen, and the proxy forwards it to the target server on the client's behalf

- Reverse proxy: requests are received uniformly by Nginx; the Nginx reverse proxy server then distributes them to back-end business servers for processing according to certain rules

How to solve front-end cross domain problems with Nginx

What is cross domain?

A cross-domain request is one in which the page currently open in the browser requests data from a different website

When a page from one origin requests resources from another, the request is cross-domain if the domain name, port, or protocol differs
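The origin is the (protocol, host, port) triple, so the rule above can be checked mechanically. A minimal Python sketch using urllib.parse follows; it assumes the default ports 80 and 443 when a URL gives none, and the example URLs are illustrative only.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url):
    """Return the (scheme, host, port) triple that defines a URL's origin."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    return (scheme, parts.hostname, parts.port or DEFAULT_PORTS.get(scheme))


def is_cross_origin(page_url, request_url):
    return origin(page_url) != origin(request_url)


page = "http://www.baidu.com/index.html"
print(is_cross_origin(page, "http://www.baidu.com/server.php"))       # False
print(is_cross_origin(page, "http://www.sina.com/server.php"))        # True: different domain
print(is_cross_origin(page, "https://www.baidu.com/server.php"))      # True: different protocol
print(is_cross_origin(page, "http://www.baidu.com:8080/server.php"))  # True: different port
```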

How to solve cross domain problems?

JSONP

JSON with Padding (JSONP) is a technique for using JSON data across domains

Differences from JSON:

- JSON returns a string of data, while JSONP returns script code (a function call that wraps the data)

- JSONP only supports GET requests, not POST requests

This is because JSONP works by adding a script tag to the page whose src attribute triggers a request to the specified address, and such a request can only be a GET request
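On the server side, a JSONP endpoint simply wraps its JSON in a call to the function named by the page. A minimal Python sketch follows; the callback name handleData, and the idea that it arrives as a callback query parameter (for example a script tag pointing at ...?callback=handleData), are assumptions. Validating the callback name matters, because whatever is returned runs as script in the calling page.

```python
import json
import re

# Allow only plain (optionally dotted) JavaScript identifiers as callback names,
# so the callback parameter cannot be used to inject arbitrary script.
CALLBACK_RE = re.compile(r"[A-Za-z_$][\w$]*(?:\.[A-Za-z_$][\w$]*)*")


def jsonp_response(callback, data):
    """Wrap JSON data in a function call, producing a JSONP script body."""
    if not CALLBACK_RE.fullmatch(callback):
        raise ValueError(f"invalid callback name: {callback!r}")
    return f"{callback}({json.dumps(data)});"


print(jsonp_response("handleData", {"name": "nginx", "ok": True}))
# handleData({"name": "nginx", "ok": true});
```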

Nginx reverse proxy

Use Nginx to forward requests: expose the cross-domain interfaces as same-domain interfaces, and have Nginx forward them to the real target address

For example, www.baidu.com/index.html needs to call www.sina.com/server.php. Expose it as the interface www.baidu.com/server.php; this interface calls www.sina.com/server.php on the back end, gets the response, and returns it to index.html

Set response headers on the PHP side

- header('Access-Control-Allow-Origin: *'); // Allow access from all origins

- header('Access-Control-Allow-Methods: POST, GET'); // Allowed request methods
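For comparison, the same two headers can be added in Python with a small WSGI middleware (in Nginx itself, the add_header directive serves the same purpose). This is a sketch, not part of the original setup: the app named hello is hypothetical, and a wildcard origin is convenient for testing but usually too permissive in production. The middleware also answers OPTIONS preflight requests, which browsers send before many cross-origin POSTs.

```python
from wsgiref.util import setup_testing_defaults


def cors(app, allow_origin="*", allow_methods="POST, GET"):
    """WSGI middleware that adds the same two CORS headers as the PHP example."""
    cors_headers = [
        ("Access-Control-Allow-Origin", allow_origin),
        ("Access-Control-Allow-Methods", allow_methods),
    ]

    def wrapped(environ, start_response):
        if environ.get("REQUEST_METHOD") == "OPTIONS":
            # Answer the browser's CORS preflight request directly.
            start_response("204 No Content", list(cors_headers))
            return [b""]

        def start_with_cors(status, headers, exc_info=None):
            return start_response(status, headers + cors_headers, exc_info)

        return app(environ, start_with_cors)

    return wrapped


def hello(environ, start_response):
    """Hypothetical application being exposed to other origins."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


captured = {}


def fake_start_response(status, headers, exc_info=None):
    captured["status"], captured["headers"] = status, dict(headers)


environ = {}
setup_testing_defaults(environ)          # fills in a minimal GET request environ
body = cors(hello)(environ, fake_start_response)
print(captured["status"], captured["headers"]["Access-Control-Allow-Origin"])  # 200 OK *
```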
