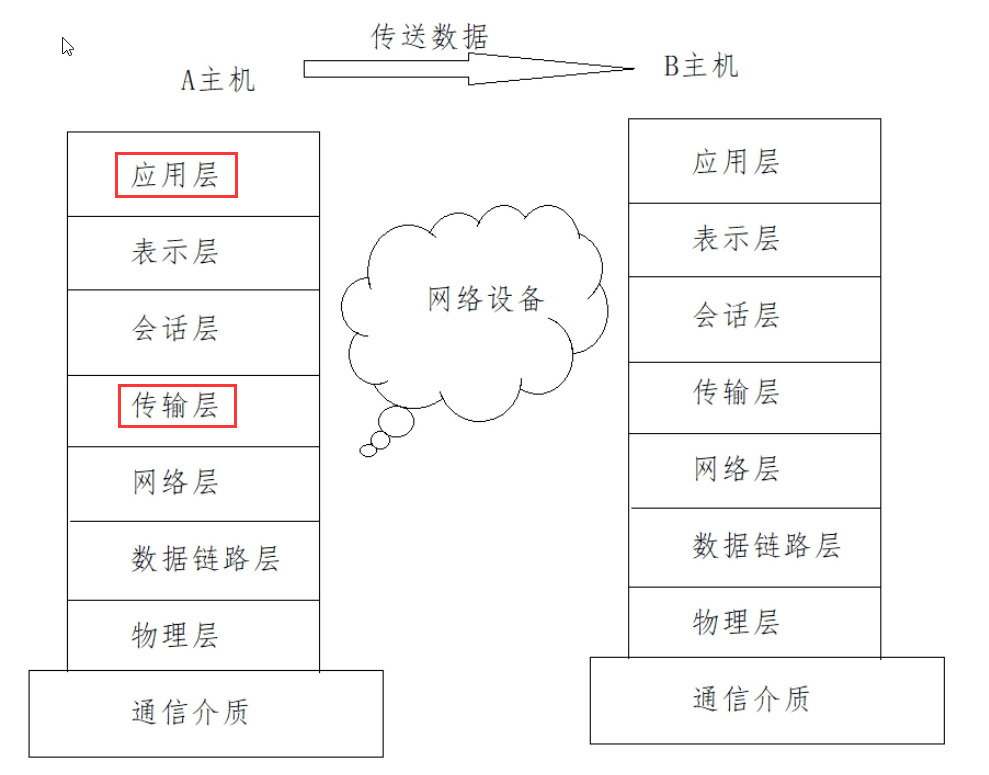

Load balancing is commonly divided into layer 4 (transport layer) load balancing and layer 7 (application layer) load balancing.

Layer 4 load balancing works at the transport layer of the OSI reference model and distributes traffic mainly based on IP address + port.

Ways to implement layer 4 load balancing:

Hardware: F5 BIG-IP, Radware

Software: LVS, Nginx, HAProxy

Layer 7 load balancing works at the application layer of the OSI reference model. It distributes traffic mainly based on information inside the request, such as the URL or the requested host (domain name).

Ways to implement layer 7 load balancing:

Software: Nginx, HAProxy, etc.

Differences between layer 4 and layer 7 load balancing:

- Layer 4 load balancing forwards packets at a lower layer. Layer 7 first has to parse the application protocol (for example HTTP), so layer 4 load balancing is generally more efficient than layer 7.

- Layer 4 load balancing cannot see domain names or URLs. Layer 7 load balancing can identify them and route on them.
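The difference in what each layer can see can be sketched in Python. This is an illustrative model only, not how LVS or Nginx are implemented, and the back-end addresses are hypothetical. The layer 4 function has only the connection's IP addresses and ports to hash. The layer 7 function must parse the HTTP request to read its Host header before it can choose.

```python
import hashlib
from typing import Optional

# Hypothetical back-end addresses used only for this illustration.
BACKENDS = ["10.0.0.1:80", "10.0.0.2:80"]


def pick_layer4(src_ip: str, src_port: int, dst_ip: str, dst_port: int) -> str:
    """Layer 4: only the connection tuple is known, so hash IPs and ports."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    digest = hashlib.md5(key).digest()
    return BACKENDS[int.from_bytes(digest[:4], "big") % len(BACKENDS)]


def parse_host(raw_request: bytes) -> str:
    """Layer 7: read the HTTP request head and return the Host header without its port."""
    head = raw_request.split(b"\r\n\r\n", 1)[0].decode("latin-1")
    for line in head.split("\r\n")[1:]:  # skip the request line
        name, _, value = line.partition(":")
        if name.strip().lower() == "host":
            return value.strip().rsplit(":", 1)[0].lower()  # IPv6 literals not handled
    return ""


# Hypothetical domain-to-backend mapping for the layer 7 decision.
HOST_ROUTES = {"www.videobackend.com": "10.0.0.1:80", "www.filebackend.com": "10.0.0.2:80"}


def pick_layer7(raw_request: bytes) -> Optional[str]:
    """Layer 7: route on the domain name carried inside the request."""
    return HOST_ROUTES.get(parse_host(raw_request))
```

The layer 4 function never looks at the payload, which is why it is cheaper. The layer 7 function has to receive and parse the request first, but in exchange it can route on the domain name.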

Besides layer 4 and layer 7, load balancing can also be done at layer 2 and layer 3. Layer 2 (the data link layer) balances load based on MAC addresses. Layer 3 (the network layer) generally uses a virtual IP address.

In practice, a common combination is layer 4 (LVS) + layer 7 (Nginx).

1: Layer 7 load balancing with upstream and proxy_pass

Goal: proxy multiple back-end servers that serve the same content.

Environment:

| Name | IP | Role |
|---|---|---|
| ecs-node-0001 | 114.115.239.76 | Nginx proxy server |
| ecs-node-0002 | 114.115.213.208 | Simulates three back-end (proxied) servers |

Back-end host: because resources are limited, three different ports are used to simulate three servers.

[root@ecs-node-0002 ~]# vi /etc/nginx/nginx.conf

```nginx
# ......
server {
    listen 9001;             # three ports simulate three hosts; three real hosts behave the same way
    server_name localhost;
    default_type text/html;

    location / {
        return 200 '<p>9001</p>';   # each port returns a different number so the result is easy to verify
    }
}

server {
    listen 9002;
    server_name localhost;
    default_type text/html;

    location / {
        return 200 '<p>9002</p>';
    }
}

server {
    listen 9003;
    server_name localhost;
    default_type text/html;

    location / {
        return 200 '<p>9003</p>';
    }
}
```

Proxy server (ecs-node-0001):

[root@ecs-node-0001 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
# upstream groups back-end servers that serve the same content
upstream backend {
    server 114.115.213.208:9001;
    server 114.115.213.208:9002;
    server 114.115.213.208:9003;
}

server {
    listen 8080;               # port exposed to clients
    server_name localhost;

    location / {
        # reverse proxy: requests are passed in turn to the servers of the "backend" upstream;
        # the name here must match the upstream name
        proxy_pass http://backend;
    }
}
```

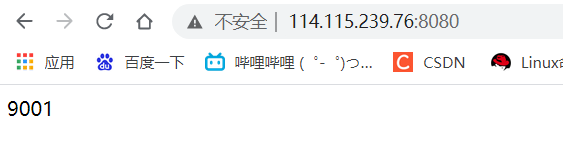

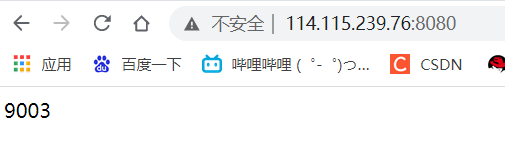

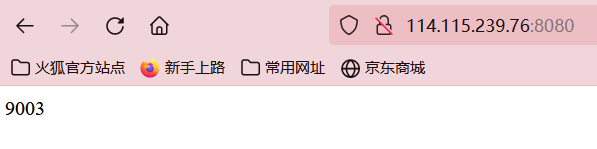

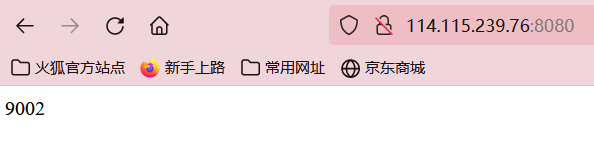

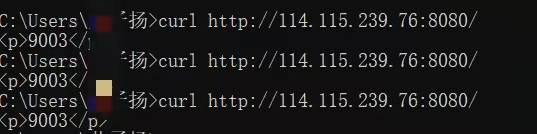

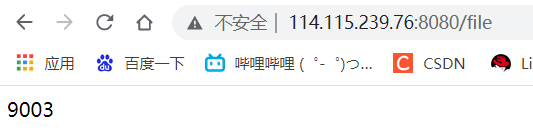

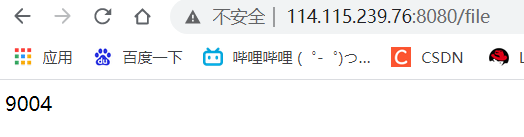

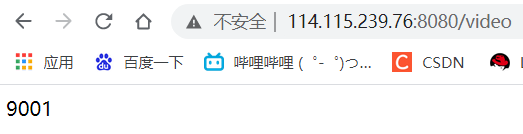

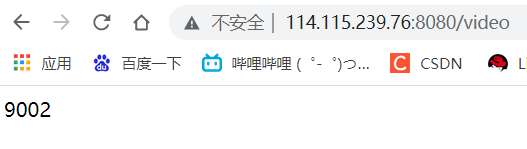

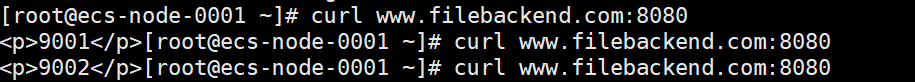

Test from a browser against node1. The proxy server listens on port 8080, so access it through port 8080.

Refreshing the browser shows the response switching between the three back-end ports, as in the screenshots above.

2: Back-end server states

During load scheduling, each back-end server in an upstream can be given the following parameters:

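| Parameter | Description |
|---|---|
| down | The server temporarily does not take part in load balancing |
| backup | Reserved backup server, used only when the primary servers are unavailable |
| max_fails | Number of failed requests allowed within fail_timeout (default 1) |
| fail_timeout | Window for counting max_fails, and how long the server is then paused (default 10s) |
| max_conns | Maximum number of simultaneous active connections (default 0, no limit) |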

1. down status

| Name | IP | Role |
|---|---|---|
| ecs-node-0001 | 114.115.239.76 | Nginx proxy server |
| ecs-node-0002 | 114.115.213.208 | Simulates three back-end (proxied) servers |

The down parameter marks a server as unavailable, so it does not take part in load balancing. It is typically set on a server that is about to undergo maintenance.

Using the environment above, mark one of the ports as down (simulating taking one host offline):

[root@ecs-node-0001 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
upstream backend {
    server 114.115.213.208:9001 down;   # mark one port as down (simulates taking a host offline)
    server 114.115.213.208:9002;
    server 114.115.213.208:9003;
}

server {
    listen 8080;
    server_name localhost;

    location / {
        proxy_pass http://backend;
    }
}
```

Access the proxy server's IP and listening port from a browser. The 9001 page no longer appears.

2. backup status

| Name | IP | Role |
|---|---|---|
| ecs-node-0001 | 114.115.239.76 | Nginx proxy server |
| ecs-node-0002 | 114.115.213.208 | Simulates three back-end (proxied) servers |

As the name suggests, a backup server receives no requests while the primary servers are healthy. When the primary servers fail, the backup server takes over.

Modify the proxy server's configuration file:

[root@ecs-node-0001 ~]# vim /etc/nginx/nginx.conf

```nginx
# ...... set port 9002 as backup (the simulated 9002 host becomes a backup)
upstream backend {
    server 114.115.213.208:9001 down;     # a host marked down does not take part in load balancing
    server 114.115.213.208:9002 backup;   # backup host, used when 9003 is unavailable
    server 114.115.213.208:9003;
}

server {
    listen 8080;
    server_name localhost;

    location / {
        proxy_pass http://backend;
    }
}
```

Next, simulate port 9003 becoming unreachable. Enable firewalld and open ports 9001-9003, then close port 9003 and check whether the backup on port 9002 takes over.

On the back-end host, open ports 9001-9003:

```shell
[root@ecs-node-0002 ~]# firewall-cmd --permanent --add-port=9001/tcp   # permanently open TCP port 9001
success
[root@ecs-node-0002 ~]# firewall-cmd --permanent --add-port=9002/tcp
success
[root@ecs-node-0002 ~]# firewall-cmd --permanent --add-port=9003/tcp
success
[root@ecs-node-0002 ~]# firewall-cmd --reload   # reload the rules
```

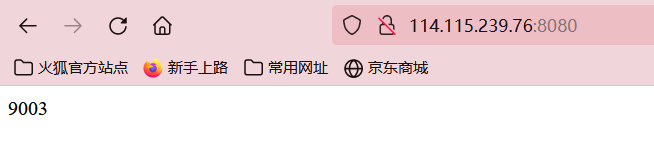

At this point, port 8080 on the proxy server returns only the 9003 page, because 9001 is down and 9002 is a backup.

Next, close port 9003 on the back-end host to simulate the 9003 server failing, and check whether the backup server takes over.

Close port 9003 on the back-end host and reload the rules:

```shell
[root@ecs-node-0002 ~]# firewall-cmd --permanent --remove-port=9003/tcp
success
[root@ecs-node-0002 ~]# firewall-cmd --reload
success
```

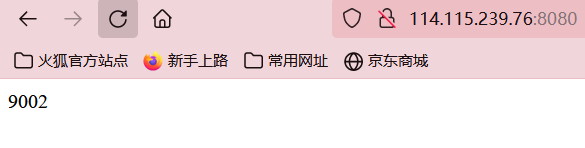

Access port 8080 of the proxy server from the browser again. The 9003 page no longer appears, and the 9002 page is served instead.
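The selection rule used in the down and backup tests can be modelled as a small Python sketch. It is a simplification, not Nginx's code. The healthy flag is a hypothetical stand-in for Nginx noticing failed connections, and the round-robin order among the remaining servers is left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Server:
    addr: str
    down: bool = False     # like the "down" parameter: never selected
    backup: bool = False   # like the "backup" parameter: used only as a fallback
    healthy: bool = True   # hypothetical: False once the server stops responding


def eligible(servers: List[Server]) -> List[str]:
    """Return the addresses that may receive the next request."""
    primaries = [s.addr for s in servers if not s.backup and not s.down and s.healthy]
    if primaries:
        return primaries
    # Backup servers take over only when every primary server is unavailable.
    return [s.addr for s in servers if s.backup and not s.down and s.healthy]


# The configuration from the test above: 9001 down, 9002 backup, 9003 primary.
pool = [
    Server("114.115.213.208:9001", down=True),
    Server("114.115.213.208:9002", backup=True),
    Server("114.115.213.208:9003"),
]
```

Closing port 9003 in the test corresponds to setting healthy to False on the third entry. After that, only the backup on 9002 remains eligible.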

3. max_conns, max_fails and fail_timeout

**max_conns=number**

Sets the maximum number of simultaneous active connections to the back-end server. The default value is 0, which means no limit. Set it according to the capacity of the back-end server to keep the server from being overwhelmed.

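max_fails and fail_timeout

max_fails=number: the number of failed attempts to communicate with the server, within the fail_timeout period, after which the server is considered unavailable. The default is 1.

fail_timeout=time: both the period in which max_fails failures are counted and how long the server is then considered unavailable. The default is 10s.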

[root@ecs-node-0001 ~]# vim /etc/nginx/nginx.conf

```nginx
# ...... 9001 down and 9002 backup, as before
upstream backend {
    server 114.115.213.208:9001 down;     # does not take part in load balancing
    server 114.115.213.208:9002 backup;   # backup for 9003
    # after 3 failures within 15s, 9003 is considered unavailable for 15s
    server 114.115.213.208:9003 max_fails=3 fail_timeout=15s;
}

server {
    listen 8080;
    server_name localhost;

    location / {
        proxy_pass http://backend;
    }
}
```
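How max_fails, fail_timeout and max_conns interact can be sketched as follows. This is a simplified Python model of the rules described above: failures are counted within fail_timeout, and then the server pauses for fail_timeout. It is not Nginx's actual bookkeeping. The Peer class, its method names and the explicit now timestamps are assumptions made for the illustration.

```python
from typing import Optional


class Peer:
    """Simplified state of one upstream server (hypothetical model)."""

    def __init__(self, max_fails: int = 1, fail_timeout: float = 10.0, max_conns: int = 0):
        self.max_fails = max_fails        # 0 disables failure accounting
        self.fail_timeout = fail_timeout  # seconds
        self.max_conns = max_conns        # 0 means no connection limit
        self.fails = 0
        self.last_fail: Optional[float] = None
        self.active = 0                   # current active connections

    def record_failure(self, now: float) -> None:
        # Failures older than fail_timeout no longer count toward max_fails.
        if self.last_fail is None or now - self.last_fail > self.fail_timeout:
            self.fails = 0
        self.fails += 1
        self.last_fail = now

    def record_success(self) -> None:
        self.fails = 0

    def available(self, now: float) -> bool:
        if self.max_conns and self.active >= self.max_conns:
            return False  # connection limit reached
        if self.max_fails and self.fails >= self.max_fails:
            # Paused until fail_timeout has passed since the last failure.
            return now - self.last_fail > self.fail_timeout
        return True
```

With max_fails=3 fail_timeout=15s as in the config above, three failures within 15 seconds take 9003 out of rotation for 15 seconds. The backup on 9002 serves requests in the meantime.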

3: Load balancing strategy

The configuration above already distributes requests across different hosts. Are there other ways to do it? Yes.

Nginx's upstream supports the following six allocation methods:

| Algorithm | Description |
|---|---|
| Round robin | Default method |
| weight | Weighted round robin |
| ip_hash | By client IP address |
| least_conn | By fewest active connections |
| url_hash | By request URL (hash $request_uri) |
| fair | By response time (third-party module) |

1. Round robin

By default, Nginx uses round robin and distributes incoming requests to the back-end servers in turn. The drawback is that when the back-end servers differ in performance, the load becomes unbalanced.

[root@ecs-node-0001 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
upstream backend {
    server 114.115.213.208:9001;
    server 114.115.213.208:9002;
    server 114.115.213.208:9003;
}

server {
    listen 8080;
    server_name localhost;

    location / {
        proxy_pass http://backend;
    }
}
```
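Round robin itself fits in a few lines. The Python sketch below is a plain illustration of the idea, using the three back-end addresses from this setup. Nginx's built-in implementation is actually a weighted variant in which every server has the default weight of 1.

```python
from typing import List


class RoundRobin:
    """Hand out servers in a fixed cyclic order."""

    def __init__(self, servers: List[str]):
        self.servers = list(servers)
        self.next_index = 0

    def pick(self) -> str:
        server = self.servers[self.next_index]
        self.next_index = (self.next_index + 1) % len(self.servers)  # wrap around
        return server


lb = RoundRobin(["114.115.213.208:9001", "114.115.213.208:9002", "114.115.213.208:9003"])
```

Each server gets the same share of requests regardless of how fast it is. That is the source of the imbalance described above.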

2. weight

weight=number sets the server's weight, which defaults to 1. The larger the weight, the larger the share of requests the server receives, so you can set weights according to each back-end server's capacity.

[root@ecs-node-0001 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
upstream backend {
    server 114.115.213.208:9001 weight=10;
    server 114.115.213.208:9002 weight=5;
    server 114.115.213.208:9003 weight=2;
}

server {
    listen 8080;
    server_name localhost;

    location / {
        proxy_pass http://backend;
    }
}
```
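Nginx spreads weighted requests with what is usually called smooth weighted round robin, so a heavy server is not hit many times in a row. The Python sketch below models that idea with the weights 10, 5 and 2 from the config above, with the port numbers standing in for the servers. It is a simplified illustration with no failure handling or effective-weight adjustments.

```python
from collections import Counter
from typing import Dict, List


def smooth_weighted_rr(weights: Dict[str, int], n: int) -> List[str]:
    """Return the first n picks of smooth weighted round robin."""
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        for name, weight in weights.items():
            current[name] += weight               # every server gains its weight
        best = max(current, key=current.get)      # first server with the highest score wins ties
        current[best] -= total                    # the chosen server pays back the total
        picks.append(best)
    return picks


picks = smooth_weighted_rr({"9001": 10, "9002": 5, "9003": 2}, 17)
counts = Counter(picks)
```

In every cycle of 17 requests the servers receive exactly 10, 5 and 2 of them. The picks are interleaved rather than sent in runs to the heaviest server.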

3. ip_hash (IP-based allocation)

When several dynamic application servers are load balanced, the ip_hash directive hashes the client IP address so that all requests from the same client go to the same back-end server. Once a user from a given IP has logged in on back-end web server A, later requests for other URLs on the site are still guaranteed to reach server A.

| Syntax | ip_hash; |
|---|---|
| Default | - |
| Context | upstream |

[root@ecs-node-0001 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
upstream backend {
    ip_hash;   # just add ip_hash inside the upstream block
    server 114.115.213.208:9001;
    server 114.115.213.208:9002;
    server 114.115.213.208:9003;
}

server {
    listen 8080;
    server_name localhost;

    location / {
        proxy_pass http://backend;
    }
}
```

Access the proxy server's address again. The page no longer changes on refresh, because every request from the same client IP goes to the same back-end server.

Note that ip_hash cannot guarantee an even load across the back-end servers. Some servers may receive many more requests than others, for example when many clients share one IP address behind NAT. The backup parameter cannot be combined with ip_hash. In Nginx versions before 1.3.1 and 1.2.2, server weights were also ignored with ip_hash.
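The stickiness of ip_hash comes from hashing only part of the client address. For IPv4, Nginx uses the first three octets. The Python sketch below shows that effect with a simple multiplicative hash. The hash function is an illustrative choice; Nginx's real one, which also accounts for weights and unavailable servers, differs.

```python
from typing import List


def ip_hash_pick(client_ip: str, servers: List[str]) -> str:
    """Pick a server from the first three octets of an IPv4 address (IPv6 not handled)."""
    octets = [int(part) for part in client_ip.split(".")[:3]]
    h = 89
    for octet in octets:
        h = (h * 113 + octet) % 6271  # simple deterministic hash, for illustration only
    return servers[h % len(servers)]


SERVERS = ["114.115.213.208:9001", "114.115.213.208:9002", "114.115.213.208:9003"]
```

All clients in the same /24 network land on the same server. A large group of users from one network can therefore overload a single back end.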

4. least_conn

Least connections: each request is forwarded to the back-end server with the fewest active connections. Round robin spreads requests evenly so that each back end gets roughly the same number. However, when some requests take a long time, the back ends handling them become heavily loaded. In that case least_conn achieves better balance.

[root@ecs-node-0001 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
upstream backend {
    least_conn;
    server 114.115.213.208:9001;
    server 114.115.213.208:9002;
    server 114.115.213.208:9003;
}

server {
    listen 8080;
    server_name localhost;

    location / {
        proxy_pass http://backend;
    }
}
```

This strategy suits cases where requests take very different amounts of time, which would otherwise overload some servers.
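The least_conn decision can be sketched as a counter of active connections per server. This Python model is a simplification. Nginx's least_conn also takes server weights into account and breaks ties with weighted round robin, whereas here ties simply go to the first server in the list.

```python
from typing import List


class LeastConn:
    """Track active connections and send each new request to the least busy server."""

    def __init__(self, servers: List[str]):
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        server = min(self.active, key=self.active.get)  # fewest active connections
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1  # the request on this server has finished


lb = LeastConn(["9001", "9002", "9003"])
first = [lb.acquire() for _ in range(3)]  # one long-running request per server
lb.release("9002")                        # the request on 9002 finishes early
next_server = lb.acquire()                # goes to 9002, the only idle server
```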

5. url_hash

Requests are allocated by the hash of the requested URL, so each URL always goes to the same back-end server. This is most useful together with caching on the back ends. Otherwise, multiple requests for the same resource may reach different servers, which causes repeated downloads, a low cache hit rate, and wasted resources and time. With url_hash, the same URL (that is, the same resource) always reaches the same server, so once the resource is cached there, later requests are served from the cache. In current Nginx this is done with the built-in hash directive and the $request_uri variable.

[root@ecs-node-0001 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
upstream backend {
    hash $request_uri;   # hash on the request URI (the variable is $request_uri, with an underscore)
    server 114.115.213.208:9001;
    server 114.115.213.208:9002;
    server 114.115.213.208:9003;
}

server {
    listen 8080;
    server_name localhost;

    location / {
        proxy_pass http://backend;
    }
}
```

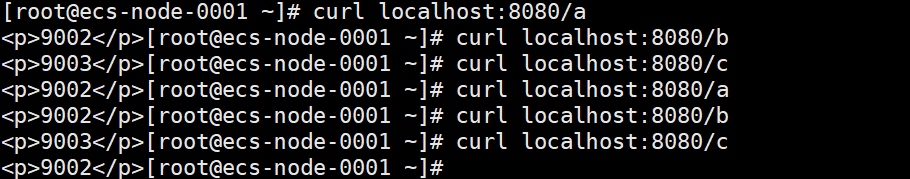

Test URLs (each URL is always served by the same back end):

- http://114.115.239.76:8080/c
- http://114.115.239.76:8080/b
- http://114.115.239.76:8080/a
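The idea behind hash $request_uri is that the same URI always maps to the same server, which keeps each server's cache focused on its own share of URLs. The Python sketch below uses zlib.crc32 as an illustrative hash of the URI. It ignores weights and failed servers and is not Nginx's exact code. Adding the consistent parameter to the hash directive switches Nginx to ketama consistent hashing, which remaps fewer URLs when servers are added or removed.

```python
import zlib
from typing import List

SERVERS = ["114.115.213.208:9001", "114.115.213.208:9002", "114.115.213.208:9003"]


def uri_hash_pick(request_uri: str, servers: List[str]) -> str:
    """Map a request URI to a server with a plain modulo hash (no weights)."""
    return servers[zlib.crc32(request_uri.encode()) % len(servers)]


for uri in ["/a", "/b", "/c"]:
    print(uri, "->", uri_hash_pick(uri, SERVERS))
```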

6. fair

fair does not use the round-robin algorithm of the built-in load balancing. Instead, it balances load according to how long the back ends take to respond (page size and load time). It is provided by a third-party module.

Because fair is a third-party module, it must be compiled into Nginx before the fair directive can be used.

[root@ecs-node-0001 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
upstream backend {
    fair;   # requires the third-party fair module
    server 114.115.213.208:9001;
    server 114.115.213.208:9002;
    server 114.115.213.208:9003;
}

server {
    listen 8080;
    server_name localhost;

    location / {
        proxy_pass http://backend;
    }
}
```

4: Examples

1. Load balancing specific resources (by URL path)

Back-end server configuration (ecs-node-0002):

Because resources are limited, four different ports are used to simulate four servers.

[root@ecs-node-0002 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
server {
    listen 9001;
    server_name localhost;
    default_type text/html;

    location / {
        return 200 '<p>9001</p>';
    }
}

server {
    listen 9002;
    server_name localhost;
    default_type text/html;

    location / {
        return 200 '<p>9002</p>';
    }
}

server {
    listen 9003;
    server_name localhost;
    default_type text/html;

    location / {
        return 200 '<p>9003</p>';
    }
}

server {
    listen 9004;
    server_name localhost;
    default_type text/html;

    location / {
        return 200 '<p>9004</p>';
    }
}
```

Proxy server configuration (ecs-node-0001):

[root@ecs-node-0001 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
# two upstream groups split the four hosts by resource type
upstream videobackend {
    server 114.115.213.208:9001;
    server 114.115.213.208:9002;
}

upstream filebackend {
    server 114.115.213.208:9003;
    server 114.115.213.208:9004;
}

server {
    listen 8080;
    server_name localhost;

    location /video {   # video requests go to the servers in the videobackend group
        proxy_pass http://videobackend;
    }

    location /file {    # file requests go to the servers in the filebackend group
        proxy_pass http://filebackend;
    }
}
```

Test from a browser:

http://114.115.239.76:8080/file

http://114.115.239.76:8080/video
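Which upstream group a request ends up in is decided by location matching. For plain prefix locations like /video and /file, Nginx picks the longest matching prefix. The Python sketch below models only that rule. Regular-expression locations and modifiers such as = and ^~ are ignored.

```python
from typing import Optional

# Prefix locations from the proxy configuration above, mapped to their upstream groups.
LOCATIONS = {"/video": "videobackend", "/file": "filebackend"}


def route(uri: str) -> Optional[str]:
    """Return the upstream group for a URI using longest-prefix matching."""
    matches = [prefix for prefix in LOCATIONS if uri.startswith(prefix)]
    if not matches:
        return None  # no location with proxy_pass matches this URI
    return LOCATIONS[max(matches, key=len)]
```

Prefix matching also sends /videos to videobackend, because it starts with /video. A location written as /video/ would not match /videos.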

2. Load balancing by domain name

The configuration is similar to the previous example.

Back-end server (ecs-node-0002):

[root@ecs-node-0002 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
server {
    listen 9001;
    server_name localhost;
    default_type text/html;

    location / {
        return 200 '<p>9001</p>';
    }
}

server {
    listen 9002;
    server_name localhost;
    default_type text/html;

    location / {
        return 200 '<p>9002</p>';
    }
}

server {
    listen 9003;
    server_name localhost;
    default_type text/html;

    location / {
        return 200 '<p>9003</p>';
    }
}

server {
    listen 9004;
    server_name localhost;
    default_type text/html;

    location / {
        return 200 '<p>9004</p>';
    }
}
```

Proxy server (ecs-node-0001):

[root@ecs-node-0001 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
upstream videobackend {
    server 114.115.213.208:9001;
    server 114.115.213.208:9002;
}

upstream filebackend {
    server 114.115.213.208:9003;
    server 114.115.213.208:9004;
}

server {
    listen 8080;
    server_name www.videobackend.com;   # requests for this domain go to the videobackend upstream

    location / {
        proxy_pass http://videobackend;
    }
}

server {
    listen 8081;
    server_name www.filebackend.com;    # requests for this domain go to the filebackend upstream

    location / {
        proxy_pass http://filebackend;
    }
}
```

Because these two domain names are not real, map them locally in the /etc/hosts file on the proxy server:

```text
127.0.0.1 www.filebackend.com
127.0.0.1 www.videobackend.com
```

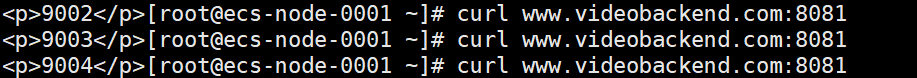

Test:

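Because these are fake domain names, test with curl on the proxy server itself to confirm that requests are balanced per domain name. The two server blocks listen on different ports (8080 and 8081), so each port has a single server block, and the port rather than the domain name effectively selects the upstream. For Nginx to choose purely by domain name, both server blocks would need to listen on the same port.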
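How Nginx picks a server block for these requests can be sketched in Python. It first narrows the choice to the server blocks listening on the port the request arrived on. It then compares the Host header with server_name, and falls back to the default server for that port, which is the first one defined unless default_server is set. The sketch covers only exact names; wildcard and regular-expression server names are left out.

```python
from typing import List, Optional, Tuple

# (listen port, server_name, upstream) taken from the proxy configuration above.
SERVER_BLOCKS: List[Tuple[int, str, str]] = [
    (8080, "www.videobackend.com", "videobackend"),
    (8081, "www.filebackend.com", "filebackend"),
]


def select_upstream(port: int, host: str) -> Optional[str]:
    """Choose the upstream of the server block that would handle this request."""
    on_port = [block for block in SERVER_BLOCKS if block[0] == port]
    if not on_port:
        return None  # nothing listens on this port
    for _, server_name, upstream in on_port:
        if server_name == host.lower():
            return upstream
    return on_port[0][2]  # default server: first block defined for this port


print(select_upstream(8080, "www.filebackend.com"))  # falls back to the default server on 8080
```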

3. Load balancing with URL rewriting

URL-rewrite load balancing covers the case where a file that clients used to access on the server no longer exists (or has been renamed), but requests for the old name should still be led to the current file automatically.

Proxy server configuration:

[root@ecs-node-0001 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
upstream backend {
    server 114.115.213.208:9001;
    server 114.115.213.208:9002;
}

server {
    listen 8080;
    server_name localhost;

    location /file {
        # rewrite http://ip/file/<name> to /server/<name>, then match locations again
        rewrite ^/file/(.*)$ /server/$1 last;
    }

    location /server {
        proxy_pass http://backend;
    }
}
```

Back-end server (ecs-node-0002):

[root@ecs-node-0002 ~]# vim /etc/nginx/nginx.conf

```nginx
# ......
server {
    listen 9001;
    server_name localhost;
    default_type text/html;

    location / {
        return 200 '<p>9001</p>';
    }
}

server {
    listen 9002;
    server_name localhost;
    default_type text/html;

    location / {
        return 200 '<p>9002</p>';
    }
}
```

Test: in the browser, access http://ip/file/ followed by any name (for example http://ip/file/abc). The request is rewritten into the /server location and still returns a back-end page.
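You can check the effect of a rewrite rule on the URI with Python's re module, which handles a simple pattern like this one the same way as Nginx's PCRE regular expressions. The sketch assumes a rule of the form ^/file/(.*)$ rewritten to /server/$1. It also shows why capturing the whole (/file/.*) part would keep /file inside the new URI.

```python
import re


def rewrite(uri: str, pattern: str, replacement: str) -> str:
    """Apply one rewrite: replace the URI if the pattern matches, else return it unchanged."""
    return re.sub(pattern, replacement, uri, count=1)


# Capture only the part after /file/ so the old prefix is dropped.
new_uri = rewrite("/file/report.txt", r"^/file/(.*)$", r"/server/\1")

# Capturing the whole path keeps /file and produces a double slash.
kept_prefix = rewrite("/file/report.txt", r"^(/file/.*)", r"/server/\1")
```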