What does Nginx do, and what problems does it solve?

Imagine this scenario: an application runs on a single server, and the number of users grows until that one server can no longer handle the load. A very effective remedy is to turn one server into many, so that the traffic once carried by a single machine is divided among several servers.

This works well, but it raises a new question: how are requests distributed among the servers? The answer is another layer in front of them, a proxy through which clients reach these servers. This layer is called a "reverse proxy".

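- What is a reverse proxy?

As I understand it, both kinds of proxy are servers that relay requests on someone's behalf; the difference is whom they act for. When the proxy acts on behalf of the client and forwards its requests to servers (a VPN is a common example), it is called a proxy or forward proxy, and it sits on the client side.

When the proxy acts on behalf of the servers, receiving client requests and passing them on to back-end servers, and the proxy service sits on the server side, it is a reverse proxy.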

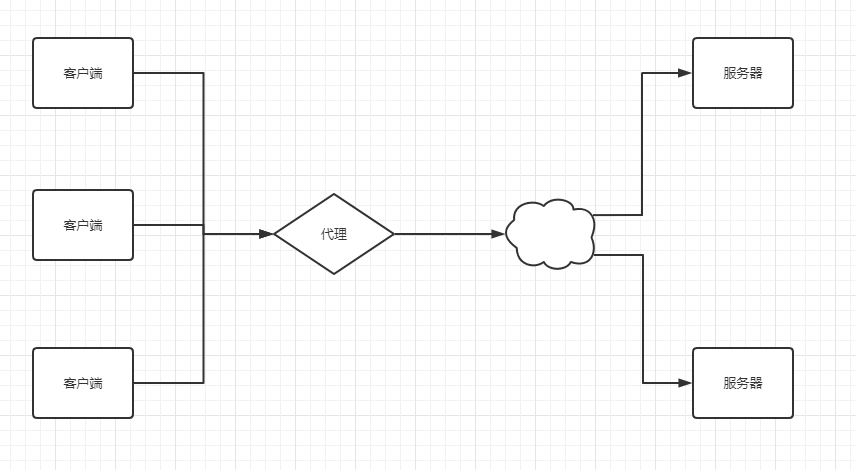

Forward proxy diagram

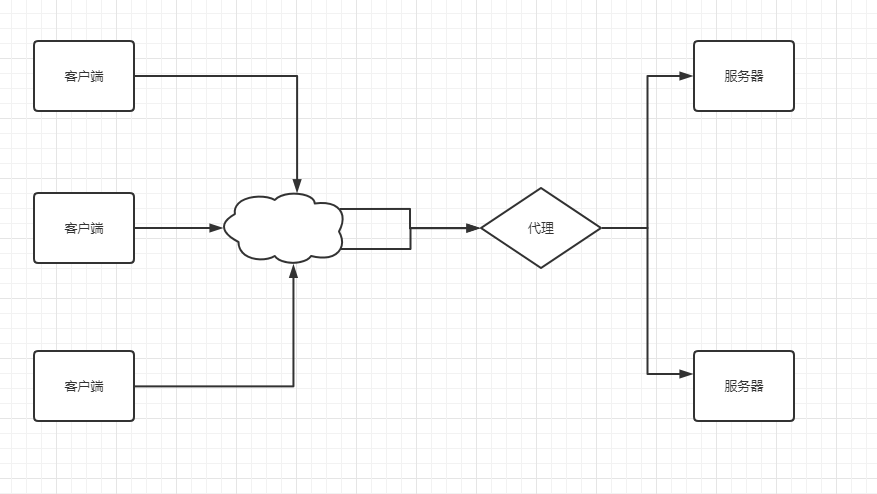

Reverse proxy diagram
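In Nginx, a reverse proxy comes down to a `proxy_pass` directive inside a `location`. The following is a minimal sketch, assuming a hypothetical back-end application listening on 127.0.0.1:8080; clients only ever talk to Nginx on port 80 and never see the back end's address.

```nginx
# Minimal reverse proxy (sketch). The back-end address 127.0.0.1:8080 is hypothetical.
server {
    listen 80;
    server_name _;

    location / {
        # Forward every request to the back-end application.
        proxy_pass http://127.0.0.1:8080;

        # Pass the original Host header and the client's address on to the back end.
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```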

There is a further problem: how can requests be distributed across multiple servers most efficiently? For example, the proxy could hand requests to each server in turn (round-robin), or send more requests to some servers and fewer to others. So we need a proxy that can perform "load balancing".

Nginx provides both of these functions.
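In Nginx, load balancing is configured by defining an `upstream` group and pointing `proxy_pass` at it instead of at a single address. A sketch with hypothetical back-end addresses and a hypothetical group name `app_servers`: by default requests are handed out in turn (round-robin), and the built-in `least_conn` method is one alternative that prefers the server with the fewest active connections.

```nginx
# Hypothetical pool of three back-end servers.
upstream app_servers {
    # The default method is round-robin. Uncomment the next line to prefer
    # the server with the fewest active connections instead.
    # least_conn;
    server 10.0.0.11:8080;
    server 10.0.0.12:8080;
    server 10.0.0.13:8080;
}

server {
    listen 80;

    location / {
        # Requests are spread across the servers in the upstream group.
        proxy_pass http://app_servers;
    }
}
```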

Nginx is a high-performance HTTP server and reverse proxy. It can also act as an IMAP/POP3/SMTP mail proxy. It uses little memory, handles high concurrency well, and is easy to install and configure.

Tests reportedly show Nginx handling up to 50,000 concurrent connections, whereas Tomcat can generally handle only four or five hundred.

Nginx also supports separating dynamic and static content. Static resources such as images and files would otherwise need a separate copy on every application server when there are several of them. Instead, you can dedicate one server to storing static resources and have Nginx serve them from there on every request.
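A sketch of dynamic/static separation, assuming static files live under a hypothetical /data/static directory on the Nginx machine and the dynamic application runs at a hypothetical 127.0.0.1:8080. If the static files sit on a dedicated server instead, the `/static/` location would `proxy_pass` to that server rather than use `root`.

```nginx
server {
    listen 80;

    # Static resources are read from disk by Nginx itself.
    # With root /data, a request for /static/img/logo.png maps to /data/static/img/logo.png.
    location /static/ {
        root /data;
        expires 7d;   # let browsers cache static files for 7 days
    }

    # Everything else is dynamic and goes to the application server.
    location / {
        proxy_pass http://127.0.0.1:8080;
    }
}
```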

Installing Nginx

- Installation commands

```shell
# Update the package index
sudo apt-get update
# Install nginx
sudo apt-get install nginx
```

- Uninstall commands

```shell
# Uninstall, deleting all files except the configuration files
sudo apt-get remove nginx nginx-common
# Uninstall everything, including the configuration files
sudo apt-get purge nginx nginx-common
# After the commands above, remove Nginx's dependency packages that are no longer used
sudo apt-get autoremove
# Uninstall and delete the two main packages
sudo apt-get remove nginx-full nginx-common
```

- Other common commands

```shell
sudo systemctl status nginx    # view status
sudo systemctl start nginx     # start nginx
sudo systemctl stop nginx      # stop nginx
sudo systemctl reload nginx    # reload the configuration files
sudo systemctl restart nginx   # restart nginx
sudo systemctl enable nginx    # start nginx at boot (enabled by default)
sudo systemctl disable nginx   # do not start nginx at boot
```

-

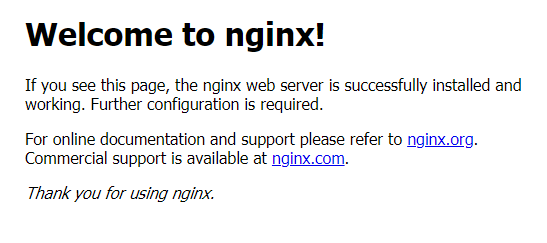

Verify that the installation was successful

Access server IP: 80 (of course, 80 can be omitted). The following screen appears

-

nginx listens to port 80 by default

-

Directory structure after installation

Taking nginx 1.14.0 as an example:

- All configuration files live under /etc/nginx/. The top-level (parent) configuration file is nginx.conf, but it contains no default server block.

The default server configuration is in /etc/nginx/sites-available/default:

```nginx
server {
    listen 80 default_server;
    listen [::]:80 default_server;

    # SSL configuration
    #
    # listen 443 ssl default_server;
    # listen [::]:443 ssl default_server;
    #
    # Note: You should disable gzip for SSL traffic.
    # See: https://bugs.debian.org/773332
    #
    # Read up on ssl_ciphers to ensure a secure configuration.
    # See: https://bugs.debian.org/765782
    #
    # Self signed certs generated by the ssl-cert package
    # Don't use them in a production server!
    #
    # include snippets/snakeoil.conf;

    root /var/www/html;

    # Add index.php to the list if you are using PHP
    index index.html index.htm index.nginx-debian.html;

    server_name _;

    location / {
        # First attempt to serve request as file, then
        # as directory, then fall back to displaying a 404.
        try_files $uri $uri/ =404;
    }

    # pass PHP scripts to FastCGI server
    #
    #location ~ \.php$ {
    #    include snippets/fastcgi-php.conf;
    #
    #    # With php-fpm (or other unix sockets):
    #    fastcgi_pass unix:/var/run/php/php7.0-fpm.sock;
    #    # With php-cgi (or other tcp sockets):
    #    fastcgi_pass 127.0.0.1:9000;
    #}

    # deny access to .htaccess files, if Apache's document root
    # concurs with nginx's one
    #
    #location ~ /\.ht {
    #    deny all;
    #}
}
```

Opening nginx.conf shows that the parent configuration includes every configuration file under /etc/nginx/sites-enabled (on Debian/Ubuntu, sites-enabled/default is a symlink to sites-available/default) and /etc/nginx/conf.d, so the directives in those files are loaded into nginx. For convenience, each service can therefore be given its own separate configuration file.
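On Debian/Ubuntu packages, the `http` block of /etc/nginx/nginx.conf contains include lines like the first two below, so a per-service file only needs to hold its own `server` block. The file name `api.conf`, the domain, and the back-end address are hypothetical. After adding such a file, `sudo nginx -t` checks the syntax and `sudo systemctl reload nginx` applies it.

```nginx
# Inside the http { } block of /etc/nginx/nginx.conf (Debian/Ubuntu packaging):
include /etc/nginx/conf.d/*.conf;
include /etc/nginx/sites-enabled/*;

# Hypothetical per-service file /etc/nginx/conf.d/api.conf.
# It contains only a server block; the include above pulls it into http { }.
server {
    listen 8080;
    server_name api.example.com;   # placeholder domain

    location / {
        proxy_pass http://127.0.0.1:9000;   # hypothetical back-end service
    }
}
```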

Locations of nginx files after installation:

- /usr/sbin/nginx: the main program
- /etc/nginx: configuration files
- /usr/share/nginx: static files
- /var/log/nginx: logs

Solving cross-origin problems with Nginx

Causes of cross-origin problems:

- 1. It is a browser restriction, not a server restriction: in the browser's Network panel you can see that the request gets a correct response and the returned data is also correct.

- 2. The requested address differs from the page currently being visited in domain name or port (strictly, an origin is the combination of scheme, host, and port).

- 3. The request is sent as an XHR (XMLHttpRequest). If you compare an XHR with the same request issued by an ordinary tag such as `<img>`, the console reports only one cross-origin error, for the XHR.

Solutions:

- 1. Remove the cross-origin restriction in the client's browser (possible in theory, but not realistic)

- 2. Send a JSONP request instead of an XHR request (JSONP only supports GET, so it does not work for every request method; not recommended)

- 3. Modify the server side, either the HTTP server or the application server (recommended)
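For scheme 3, one common way to change the HTTP-server side is to let Nginx add CORS response headers so that the browser accepts the cross-origin XHR. A minimal sketch; the allowed origin `http://localhost:8080`, the `/api/` path and the back end at 127.0.0.1:8081 are all hypothetical. Preflight `OPTIONS` requests are answered by Nginx directly.

```nginx
server {
    listen 80;
    server_name _;

    location /api/ {
        # Answer CORS preflight requests without bothering the back end.
        if ($request_method = OPTIONS) {
            add_header Access-Control-Allow-Origin "http://localhost:8080";
            add_header Access-Control-Allow-Methods "GET, POST, PUT, DELETE, OPTIONS";
            add_header Access-Control-Allow-Headers "Content-Type, Authorization";
            add_header Access-Control-Max-Age 3600;
            return 204;
        }

        # Header on real responses; "always" also adds it to error responses.
        add_header Access-Control-Allow-Origin "http://localhost:8080" always;

        proxy_pass http://127.0.0.1:8081;
    }
}
```

Naming one explicit origin is safer than `*`, and browsers reject `*` for requests sent with credentials.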

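Load balancing

My two test services each expose a single endpoint that returns a string. The following configuration implements weighted load balancing between them: with weights 2 and 1, the service on port 8081 receives about two of every three requests and the service on port 8082 about one.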

```nginx
upstream myserver {
    server 123.60.8.97:8081 weight=2;
    server 123.60.8.97:8082 weight=1;
}

server {
    listen 80 default_server;
    listen [::]:80 default_server;

    # SSL configuration
    #
    # listen 443 ssl default_server;
    # listen [::]:443 ssl default_server;
    #
    # Note: You should disable gzip for SSL traffic.
    # See: https://bugs.debian.org/773332
    #
    # Read up on ssl_ciphers to ensure a secure configuration.
    # See: https://bugs.debian.org/765782
    #
    # Self signed certs generated by the ssl-cert package
    # Don't use them in a production server!
    #
    # include snippets/snakeoil.conf;

    #root /var/www/html;

    # Add index.php to the list if you are using PHP
    index index.html index.htm index.nginx-debian.html;

    server_name _;

    location / {
        # First attempt to serve request as file, then
        # as directory, then fall back to displaying a 404.
        #try_files $uri $uri/ =404;
    }

    location /aa {
        # First attempt to serve request as file, then
        # as directory, then fall back to displaying a 404.
        #try_files $uri $uri/ =404;
        proxy_read_timeout 150;
        proxy_pass http://myserver;
    }

    # pass PHP scripts to FastCGI server
    #
    #location ~ \.php$ {
    #    include snippets/fastcgi-php.conf;
    #
    #    # With php-fpm (or other unix sockets):
    #    fastcgi_pass unix:/var/run/php/php7.0-fpm.sock;
    #    # With php-cgi (or other tcp sockets):
    #    fastcgi_pass 127.0.0.1:9000;
    #}

    # deny access to .htaccess files, if Apache's document root
    # concurs with nginx's one
    #
    #location ~ /\.ht {
    #    deny all;
    #}
}
```

Next, Nginx is used to implement the third cross-origin solution above. Consider this scenario: many projects today separate front-end and back-end development, and the front-end project easily runs into cross-origin problems with the back-end project because their IPs and ports differ. Nginx can put a proxy layer in front of the back-end project to solve this. For example, a back-end endpoint can be configured inside an nginx server block as follows:

```nginx
server {
    listen 80 default_server;
    listen [::]:80 default_server;

    root /var/www/html;

    # Add index.php to the list if you are using PHP
    index index.html index.htm index.nginx-debian.html;

    server_name _;

    location / {
        # First attempt to serve request as file, then
        # as directory, then fall back to displaying a 404.
        try_files $uri $uri/ =404;
    }

    # Test: solving the cross-origin problem
    location /test {
        proxy_read_timeout 150;
        proxy_pass http://123.60.8.97:8081/test;
    }
}
```

Now a request to http://<server IP>:80/test is proxied to http://123.60.8.97:8081/test. Because the front-end pages (served from /var/www/html) and the /test endpoint now share the same origin, the browser no longer treats the call as cross-origin. Also note that every Nginx directive must end with a semicolon.
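One detail of `proxy_pass` matters here: when it includes a URI part (like `/test` above), the portion of the request URI that matched the location prefix is replaced by that URI; without a URI part, the request URI is forwarded unchanged. A sketch of both variants inside a `server` block, with a hypothetical back end at 127.0.0.1:8081 and hypothetical paths `/api/` and `/v1/`:

```nginx
# Variant A: proxy_pass with a URI part.
# The matched prefix "/api/" is replaced by "/v1/":
#   GET /api/users   ->  http://127.0.0.1:8081/v1/users
location /api/ {
    proxy_pass http://127.0.0.1:8081/v1/;
}

# Variant B: proxy_pass without a URI part.
# The request URI is forwarded as-is:
#   GET /test/hello  ->  http://127.0.0.1:8081/test/hello
location /test/ {
    proxy_pass http://127.0.0.1:8081;
}
```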

Nginx configuration file explained

- Structure of nginx.conf

```nginx
...                               # global block

events {                          # events block
    ...
}

http {                            # http block
    ...                           # http global block

    server {                      # server block
        ...                       # server global block

        location [PATTERN] {      # location block
            ...
        }

        location [PATTERN] {
            ...
        }
    }

    server {
        ...
    }

    ...                           # http global block
}
```

- Detailed explanation of each directive

```nginx
###### Annotated nginx.conf ######

# User and group that Nginx runs as
user www www;

# Number of nginx worker processes; setting it equal to the total number of CPU cores is recommended.
worker_processes 8;

# Global error log and its level: [ debug | info | notice | warn | error | crit ]
error_log /usr/local/nginx/logs/error.log info;

# PID file of the process
pid /usr/local/nginx/logs/nginx.pid;

# Maximum number of file descriptors that one nginx process may open.
# In theory this should be the maximum number of open files (ulimit -n) divided by the number of
# nginx processes, but nginx does not distribute requests evenly, so it is best to keep it equal
# to ulimit -n.
# For example, on a Linux 2.6 kernel with an open-file limit of 65535, set worker_rlimit_nofile to 65535.
# Because requests are not balanced evenly across processes, with a value of 10240 a single process
# may exceed the limit once total concurrency reaches 30000-40000, and nginx then returns 502 errors.
worker_rlimit_nofile 65535;

# Working mode and upper limit of connections
events
{
    # Event model: [ kqueue | rtsig | epoll | /dev/poll | select | poll ].
    # epoll is a high-performance network I/O model in Linux 2.6+ kernels and is recommended on Linux;
    # on FreeBSD, use kqueue.
    # Supplementary notes:
    # Like Apache, nginx uses different event models on different operating systems.
    # A) Standard event models
    #    select and poll are the standard models; nginx falls back to them when the system offers
    #    no more efficient method.
    # B) Efficient event models
    #    kqueue: FreeBSD 4.1+, OpenBSD 2.9+, NetBSD 2.0 and macOS. Using kqueue on dual-processor
    #            macOS systems may cause kernel crashes.
    #    epoll: Linux kernel 2.6 and later.
    #    /dev/poll: Solaris 7 11/99+, HP/UX 11.22+ (eventport), IRIX 6.5.15+ and Tru64 UNIX 5.1A+.
    #    eventport: Solaris 10; install the security patches to prevent kernel crashes.
    use epoll;

    # Maximum number of connections per worker process
    # (maximum total connections = worker_connections * worker_processes).
    # Tune it to the hardware together with worker_processes: as large as possible,
    # but without driving the CPU to 100%.
    worker_connections 65535;
}

# HTTP server; its reverse-proxy features provide the load-balancing support
http
{
    # Mapping from file extensions to MIME types
    include mime.types;

    # Default MIME type
    default_type application/octet-stream;

    # Default charset
    #charset utf-8;

    # Hash table size for server names.
    # The hash tables that hold server names are controlled by server_names_hash_max_size and
    # server_names_hash_bucket_size. The bucket size is aligned to a multiple of the processor's
    # cache line size, which reduces memory accesses and speeds up key lookups. If the bucket size
    # equals one cache line, a lookup needs at most two memory accesses: one to compute the bucket
    # address and one to find the key inside the bucket. So if nginx asks you to increase either
    # the hash max size or the hash bucket size, increase the max size first.
    server_names_hash_bucket_size 128;

    # Buffer size for the client request header. A request header usually does not exceed 1k, but
    # the buffer is set according to the system page size, which can be checked with getconf PAGESIZE:
    #   [root@web001 ~]# getconf PAGESIZE
    #   4096
    # Headers larger than 4k do occur; keep the value an integer multiple of the page size.
    client_header_buffer_size 32k;

    # nginx reads request headers into the client_header_buffer_size buffer by default;
    # headers that are too large are read into large_client_header_buffers instead.
    large_client_header_buffers 4 64k;

    # Maximum size of a client request body, e.g. a file uploaded through nginx
    client_max_body_size 8m;

    # Efficient file transfer mode: sendfile makes nginx output files with the sendfile() function
    # (zero copy). Use on for ordinary applications. For downloads and other applications with heavy
    # disk I/O, it can be set to off to balance disk and network I/O and reduce system load.
    # Note: if images are not displayed correctly, set this to off.
    sendfile on;

    # Enables directory listing, suitable for download servers; off by default.
    autoindex on;

    # Enables the TCP_NOPUSH (FreeBSD) / TCP_CORK (Linux) socket option; only used together with sendfile.
    tcp_nopush on;

    tcp_nodelay on;

    # Keep-alive connection timeout, in seconds
    keepalive_timeout 120;

    # Cache of open file descriptors (open_file_cache* are valid in http, server and location, not in events).
    # Disabled by default. max is the number of cached entries (keeping it equal to the open-file limit
    # is recommended); inactive is how long an entry may go unrequested before it is removed.
    open_file_cache max=65535 inactive=60s;

    # How often to check that the cached entries are still valid.
    # Syntax: open_file_cache_valid time; default: 60s; contexts: http, server, location.
    open_file_cache_valid 80s;

    # Minimum number of uses within the inactive period of open_file_cache for a descriptor to stay open
    # in the cache. With 1, a file not used even once during inactive is removed.
    # Syntax: open_file_cache_min_uses number; default: 1; contexts: http, server, location.
    open_file_cache_min_uses 1;

    # Whether to cache file lookup errors.
    # Syntax: open_file_cache_errors on | off; default: off; contexts: http, server, location.
    open_file_cache_errors on;

    # FastCGI parameters that improve site performance by reducing resource usage and speeding up
    # access; their meaning follows from their names.
    fastcgi_connect_timeout 300;
    fastcgi_send_timeout 300;
    fastcgi_read_timeout 300;
    fastcgi_buffer_size 64k;
    fastcgi_buffers 4 64k;
    fastcgi_busy_buffers_size 128k;
    fastcgi_temp_file_write_size 128k;

    # gzip module settings
    gzip on;                  # enable gzip-compressed output
    gzip_min_length 1k;       # minimum response size to compress
    gzip_buffers 4 16k;       # compression buffers
    gzip_http_version 1.0;    # minimum HTTP version (default 1.1; use 1.0 if the front end is squid 2.5)
    gzip_comp_level 2;        # compression level
    # text/html is always compressed, so it need not be listed; listing it works but triggers a warning.
    gzip_types text/plain application/x-javascript text/css application/xml;
    gzip_vary on;

    # Needed when limiting the number of connections per IP.
    # The old limit_zone directive was removed in nginx 1.7.6; limit_conn_zone replaces it:
    #limit_conn_zone $binary_remote_addr zone=crawler:10m;

    # Log format (log_format is only allowed in the http context).
    # $remote_addr and $http_x_forwarded_for: the client's IP address;
    # $remote_user: the client user name;
    # $time_local: access time and time zone;
    # $request: the request URL and HTTP protocol;
    # $status: the response status (200 on success);
    # $body_bytes_sent: size of the response body sent to the client;
    # $http_referer: the page the request was linked from;
    # $http_user_agent: information about the client browser.
    # A web server usually sits behind a reverse proxy, so it cannot see the client's IP address:
    # $remote_addr then holds the reverse proxy's IP. The reverse proxy can add an X-Forwarded-For
    # header to the forwarded request to record the original client's IP address.
    log_format access '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" $http_x_forwarded_for';

    # Load-balancing configuration
    upstream jh.w3cschool.cn {
        # weight is the weight, which can be set according to each machine's capacity.
        # The higher the weight, the larger the share of requests the server receives.
        server 192.168.80.121:80 weight=3;
        server 192.168.80.122:80 weight=2;
        server 192.168.80.123:80 weight=3;

        # nginx upstream supports the following distribution methods:
        # 1. Round-robin (default)
        #    Requests are distributed to the back-end servers one by one in order; a server that goes
        #    down is automatically taken out of rotation.
        # 2. weight
        #    Sets the round-robin probability; the weight is proportional to the share of requests.
        #    Used when back-end servers differ in performance. For example:
        #    upstream bakend {
        #        server 192.168.0.14 weight=10;
        #        server 192.168.0.15 weight=10;
        #    }
        # 3. ip_hash
        #    Requests are distributed by a hash of the client IP, so each visitor consistently reaches
        #    the same back-end server, which solves the session problem. For example:
        #    upstream bakend {
        #        ip_hash;
        #        server 192.168.0.14:88;
        #        server 192.168.0.15:80;
        #    }
        # 4. fair (third-party module)
        #    Requests are distributed by back-end response time; servers that respond faster are preferred.
        #    upstream backend {
        #        server server1;
        #        server server2;
        #        fair;
        #    }
        # 5. url_hash (third-party module)
        #    Requests are distributed by a hash of the requested URL, so each URL always goes to the same
        #    back-end server; this is effective when the back-end servers are caches.
        #    Add a hash statement to the upstream; the server lines then cannot carry other parameters
        #    such as weight. hash_method selects the hash algorithm.
        #    upstream backend {
        #        server squid1:3128;
        #        server squid2:3128;
        #        hash $request_uri;
        #        hash_method crc32;
        #    }

        # Tips:
        # upstream bakend {    # defines the IPs and states of the load-balanced servers
        #     ip_hash;
        #     server 127.0.0.1:9090 down;
        #     server 127.0.0.1:8080 weight=2;
        #     server 127.0.0.1:6060;
        #     server 127.0.0.1:7070 backup;   # backup cannot be combined with ip_hash; use one or the other
        # }
        # In the server that needs load balancing, add: proxy_pass http://bakend/;
        # Each server can be given the following states:
        # 1. down: the server temporarily takes no part in load balancing.
        # 2. weight: the larger the weight, the larger the share of the load.
        # 3. max_fails: number of failed requests allowed, default 1. When it is exceeded, the error
        #    defined by proxy_next_upstream is returned.
        # 4. fail_timeout: how long the server is paused after max_fails failures.
        # 5. backup: receives requests only when all non-backup machines are down or busy, so this
        #    machine carries the lightest load.
        # nginx supports defining several load-balancing groups at the same time, for use by different servers.
        # client_body_in_file_only: when set to on, the body of client POST requests is written to a file,
        #    which is useful for debugging.
        # client_body_temp_path: sets the directory for those files; up to three levels of subdirectories.
        # location: matches URLs; it can redirect them or pass them to a new proxy / load-balancing group.
    }

    # Virtual host configuration
    server
    {
        # Listening port
        listen 80;

        # Several domain names can be given, separated by spaces
        server_name www.w3cschool.cn w3cschool.cn;
        index index.html index.htm index.php;
        root /data/www/w3cschool;

        # Load balancing for ********
        location ~ \.(php|php5)$
        {
            fastcgi_pass 127.0.0.1:9000;
            fastcgi_index index.php;
            include fastcgi.conf;
        }

        # Cache lifetime for images
        location ~ \.(gif|jpg|jpeg|png|bmp|swf)$
        {
            expires 10d;
        }

        # Cache lifetime for JS and CSS
        location ~ \.(js|css)$
        {
            expires 1h;
        }

        # Access log of this virtual host, using the "access" format defined in the http block
        access_log /usr/local/nginx/logs/host.access.log access;
        # The next line requires a log_format named log404, which this file does not define
        #access_log /usr/local/nginx/logs/host.access.404.log log404;

        # Reverse proxy for "/"
        location / {
            proxy_pass http://127.0.0.1:88;
            proxy_redirect off;
            proxy_set_header X-Real-IP $remote_addr;

            # Lets the back-end web server obtain the user's real IP via X-Forwarded-For
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

            # The following reverse-proxy settings are optional
            proxy_set_header Host $host;

            # Maximum size of a single client request body
            client_max_body_size 10m;

            # Maximum size of the buffer for client request bodies.
            # With a large value such as 256k, uploading any image smaller than 256k works normally in
            # both Firefox and IE. If this directive is commented out, the default client_body_buffer_size
            # is used (twice the OS page size, 8k or 16k) and problems appear: uploading a fairly large
            # image of about 200k returned 500 Internal Server Error in both Firefox 4.0 and IE 8.0.
            client_body_buffer_size 128k;

            # nginx intercepts back-end responses with status codes of 300 or higher
            # and handles them with error_page
            proxy_intercept_errors on;

            # Timeout for establishing the connection (handshake) with the back-end server
            proxy_connect_timeout 90;

            # Timeout for sending the request to the back-end server;
            # it applies between two successive write operations, not to the whole transfer
            proxy_send_timeout 90;

            # Timeout for reading the response after the connection succeeds, i.e. how long to wait
            # while the back end processes the request; it applies between two successive reads
            proxy_read_timeout 90;

            # Size of the buffer for the first part of the response read from the back-end server,
            # which usually holds a small response header. By default it is one memory page
            # (4k or 8k, depending on the platform).
            proxy_buffer_size 4k;

            # Number and size of the buffers used to read the response from the back-end server
            # (per connection); web pages average under 32k
            proxy_buffers 4 32k;

            # Maximum total size of buffers that may be busy sending the response to the client
            # while it is not yet fully read (commonly proxy_buffers size * 2)
            proxy_busy_buffers_size 64k;

            # Amount of data written at a time to a temporary file under proxy_temp_path,
            # so that a worker process does not block too long while transferring large responses
            proxy_temp_file_write_size 64k;
        }

        # Address for viewing Nginx status
        location /NginxStatus {
            stub_status on;
            access_log off;   # access_log takes a file path or "off"; "on" is not a keyword
            auth_basic "NginxStatus";
            auth_basic_user_file confpasswd;
            # The contents of the htpasswd file can be generated with Apache's htpasswd tool.
        }

        # Dynamic/static separation with a local reverse proxy:
        # all JSP pages are handled by Tomcat or Resin
        location ~ \.(jsp|jspx|do)$ {
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_pass http://127.0.0.1:8080;
        }

        # All static files are read directly by nginx, without going through Tomcat or Resin
        location ~ \.(htm|html|gif|jpg|jpeg|png|bmp|swf|ico|rar|zip|txt|flv|mid|doc|ppt|pdf|xls|mp3|wma)$
        {
            expires 15d;
        }

        location ~ \.(js|css)$
        {
            expires 1h;
        }
    }
}
```
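Stripped of the annotations, a configuration that follows the global / events / http / server / location skeleton can be quite short. A minimal sketch; the `backend` upstream name, its address 127.0.0.1:8080 and the `html` document root are assumptions. `sudo nginx -t` can check it before a reload.

```nginx
# Minimal complete nginx.conf (sketch).
worker_processes auto;                # global block: one worker per CPU core

events {                              # events block
    worker_connections 1024;
}

http {                                # http block
    include       mime.types;
    default_type  application/octet-stream;
    sendfile      on;
    keepalive_timeout 65;

    upstream backend {                # hypothetical back end, used below
        server 127.0.0.1:8080;
    }

    server {                          # server block
        listen 80;
        server_name localhost;

        location / {                  # location block: static files
            root  html;
            index index.html;
        }

        location /api/ {              # location block: reverse proxy
            proxy_pass http://backend;
        }
    }
}
```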