1, Introduction

The earlier article "NLP statistical language model" briefly introduced language models, described where they are applied, and covered some traditional ways of implementing them. This article demonstrates another way to implement an n-gram model: a neural network. Is this implementation what is meant by a neural language model?

As this article understands it, the answer is no. "Neural language model" refers to a whole class of models, and in essence it is an extension of the statistical language model. Such a model can condition only on the preceding n words, only on the following n words, or on the context on both sides. Which one to use depends on the task.
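The three kinds of context mentioned above can be made concrete with a small sketch. The function name `contexts` and the sample tokens are illustrative assumptions and do not come from the original project.

```python
def contexts(tokens, i, n):
    """Return the left, right and two-sided context of the token at position i."""
    left = tokens[max(0, i - n):i]      # up to n preceding tokens
    right = tokens[i + 1:i + 1 + n]     # up to n following tokens
    return left, right, left + right    # both sides combined


tokens = list("abcdefg")
left, right, both = contexts(tokens, i=3, n=2)
print(left, right, both)
```

The rest of this article uses only the left context, which is the n-gram setting.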

2, Text generation practice

1. Training corpus

For comparison with the article "NLP statistical language model", this article again uses text generation as the case study and reuses the same corpus (a small amount of data makes the process easier to follow).

In addition, because the focus here is on understanding, the data is not split into training, validation and test sets.

corpus = '''
This life was originally a person. You insisted on being with us, but you held hands in default.
Moved eyes said yes, into my life.
After entering the door and turning on the light, the family hopes to remain a family in the next life.
Confirmed my eyes. I met the right person.
I turned with my sword, and the blood was like red lips.
The memory of the past dynasty crossed the world of mortals. It's not the blade that hurts people, it's your reincarnated soul.
The moonlight on the bluestone shines into the mountain city. I follow your reincarnation all the way. I love you very much.
Who is playing a song with Pipa? The east wind breaks. The maple leaves stain the story. I can see through the ending.
I led you along the ancient road outside the fence. In the years of barren smoke and grass, even breaking up was very silent.
'''

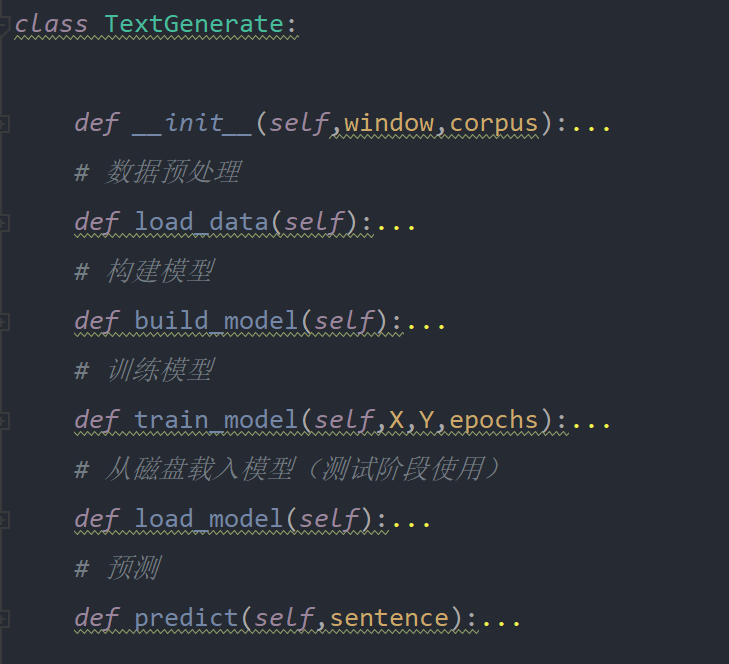

2. Project structure

3. Data processing

This article assumes that the occurrence of the nth word (here, a character) depends only on the previous n-1 words and on nothing else. The training data is therefore built by sliding a window over each line of the corpus. Take the line

This life was originally a person. You insisted on being with us, but you held hands in default.

With n = 4, each run of three characters is paired with the character that follows it:

"Thi" -> "s", "his" -> " ", "is " -> "l", ... and so on
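The slicing described above can be sketched in a few lines of plain Python. The helper name `make_windows` and the short sample string are illustrative assumptions; with n = 4 the context length is n - 1 = 3 characters.

```python
def make_windows(line, window):
    """Pair each run of `window` characters with the character that follows it."""
    pairs = []
    for i in range(len(line) - window):
        context = line[i:i + window]   # the previous n-1 characters
        target = line[i + window]      # the character to predict
        pairs.append((context, target))
    return pairs


pairs = make_windows("This life", window=3)
for context, target in pairs:
    print(repr(context), "->", repr(target))
```

A line shorter than or equal to the window produces no pairs, which is also how empty lines in the corpus are skipped.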

Following this scheme, the data is split into windows and converted into one-hot sequences:

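# Imports assumed by the snippets in this article; exact module paths can differ between Keras versions.
import numpy as np
from keras.models import Sequential
from keras.layers import LSTM, Dense, Bidirectional
from keras.utils import to_categorical
from keras.preprocessing.sequence import pad_sequences

class TextGenerate:

    def __init__(self, window, corpus):
        self.window = window
        self.corpus = corpus
        self.char2id = None
        self.id2char = None
        self.char_length = 0

    def load_data(self):
        X = []
        Y = []
        # Divide the corpus into sentences on '\n'
        corpus = self.corpus.strip().split('\n')
        # Collect every character as the vocabulary, plus an 'UNK' entry for unseen characters
        chrs = set(self.corpus.replace('\n', ''))
        chrs.add('UNK')
        self.char_length = len(chrs)
        # Sort so that the ids are the same on every run
        self.char2id = {c: i for i, c in enumerate(sorted(chrs))}
        self.id2char = {i: c for c, i in self.char2id.items()}
        for line in corpus:
            # Each window of characters is one input; the character after it is the label
            x = [[self.char2id[char] for char in line[i: i + self.window]] for i in range(len(line) - self.window)]
            y = [[self.char2id[line[i + self.window]]] for i in range(len(line) - self.window)]
            X.extend(x)
            Y.extend(y)
        # Convert to one-hot; fix the width to the vocabulary size so it matches the output layer
        X = to_categorical(X, num_classes=self.char_length)
        Y = to_categorical(Y, num_classes=self.char_length)
        return X, Y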
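To make the one-hot step concrete, here is a minimal pure-Python sketch of what the encoding produces for a single window. The function `one_hot`, the vocabulary size of 6 and the ids are illustrative assumptions. Passing the width explicitly matters because Keras `to_categorical` otherwise infers it as the largest id plus one, which can be smaller than the vocabulary.

```python
def one_hot(ids, num_classes):
    """Turn each id into a vector of length num_classes with a single 1."""
    rows = []
    for i in ids:
        row = [0] * num_classes
        row[i] = 1
        rows.append(row)
    return rows


vocab_size = 6            # assumed vocabulary size, for illustration only
window_ids = [2, 0, 3]    # one window of three character ids
encoded = one_hot(window_ids, vocab_size)
for row in encoded:
    print(row)
```

Stacking such windows gives X the shape (samples, window, vocabulary size), while Y has the shape (samples, vocabulary size).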

4. Model construction

This article builds the model from two stacked bidirectional LSTM layers followed by a softmax output layer.

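    def build_model(self):
        model = Sequential()
        # The first layer returns the full sequence so that the second LSTM can consume it
        model.add(Bidirectional(LSTM(100, return_sequences=True)))
        model.add(Bidirectional(LSTM(200)))
        # Softmax over the vocabulary: one probability per character
        model.add(Dense(self.char_length, activation='softmax'))
        model.compile('adam', 'categorical_crossentropy')
        self.model = model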
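As a rough check on the size of this network, the number of trainable weights can be worked out by hand. The sketch below uses the standard LSTM weight count (four gates, each with input weights, recurrent weights and a bias) and assumes that the bidirectional wrapper concatenates both directions, which is the Keras default. The vocabulary size of 50 is an assumption for illustration only; the real value is `self.char_length`.

```python
def lstm_params(input_dim, units):
    """Weights in one LSTM direction: 4 gates with input, recurrent and bias terms."""
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim, units):
    """Weights in a fully connected layer: one matrix plus one bias vector."""
    return input_dim * units + units


vocab_size = 50  # assumed vocabulary size, for illustration only

layer1 = 2 * lstm_params(vocab_size, 100)    # two directions over one-hot input
layer2 = 2 * lstm_params(2 * 100, 200)       # input is the concatenated 2 x 100 outputs
output = dense_params(2 * 200, vocab_size)   # input is the concatenated 2 x 200 outputs
total = layer1 + layer2 + output
print(layer1, layer2, output, total)
```

With a corpus of only nine lines, a model of this size has far more weights than training samples, which is consistent with the memorization seen in the results.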

5. Model training method

    def train_model(self, X, Y, epochs):
        self.model.fit(X, Y, epochs=epochs, verbose=1)
        # Note: recent Keras releases may require a '.keras' or '.h5' extension for the file name
        self.model.save('model.model')

6. Model test method

    def predict(self, sentence):
        # Map characters to ids; characters not in the vocabulary fall back to 'UNK'
        input_sentence = [self.char2id.get(char, self.char2id['UNK']) for char in sentence]
        # Pad on the left with id 0 (or truncate from the left) to exactly `window` ids
        input_sentence = pad_sequences([input_sentence], maxlen=self.window)
        input_sentence = to_categorical(input_sentence, num_classes=self.char_length)
        predict = self.model.predict(input_sentence)
        # For simplicity, take the character with the highest probability (greedy decoding).
        # This is not the only option; other sampling methods can be used instead.
        return self.id2char[np.argmax(predict)]
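One common alternative to taking the maximum is temperature sampling. The following is a minimal sketch using only the standard library, not the method used in this article. The function name `sample_with_temperature` and the example probabilities are assumptions. A temperature close to 0 behaves like the greedy choice, while larger values spread the choice across more characters.

```python
import math
import random


def sample_with_temperature(probs, temperature=1.0, rng=random):
    """Draw an index from `probs` after rescaling the distribution by `temperature`."""
    # Work in log space; a tiny floor avoids log(0)
    logits = [math.log(max(p, 1e-12)) / temperature for p in probs]
    top = max(logits)
    # Subtract the maximum before exponentiating for numerical stability
    weights = [math.exp(value - top) for value in logits]
    return rng.choices(range(len(probs)), weights=weights, k=1)[0]


probs = [0.1, 0.7, 0.2]   # stand-in for one row of the softmax output
rng = random.Random(0)
print([sample_with_temperature(probs, 1.0, rng) for _ in range(10)])
```

In `predict`, the returned index would take the place of `np.argmax(predict)`.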

7. Training and testing

# Use a window of 5 characters
window = 5
text_generate = TextGenerate(window, corpus)
X, Y = text_generate.load_data()
text_generate.build_model()
text_generate.train_model(X, Y, 500)
# text_generate.load_model()

input_sentence = 'Confirmed the eyes'
result = input_sentence
# The training data was built so that each call predicts only one character.
# To generate a complete sentence, predict in a loop until a period appears.
while not result.endswith('.'):
    predict = text_generate.predict(input_sentence)
    result += predict
    input_sentence += predict
    # Keep only the last `window` characters as the next input
    input_sentence = input_sentence[-window:]
    print(result)

With "Confirmed the eyes" as the prompt, the test results are as follows:

'''
Confirmed the eyes,
Confirmed the eyes, I
Confirmed the eyes, I met
Confirmed the eyes, I met
Confirmed the eyes, I met the right one
Confirmed the eyes. I met the right one
Confirmed my eyes. I met the right person
Confirmed my eyes. I met the right person.
'''

The results show that the model has completely memorized the character transitions in the training corpus. In addition, because the most probable character is always taken greedily as the prediction, the diversity of the generated text is limited when the prompt comes directly from the corpus.
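The generation loop from step 7 can also be written as a reusable helper with an upper bound on length, so that generation stops even if the model never emits a period. This is a sketch under stated assumptions: `generate`, `max_new_chars` and the stand-in predictor `lookup_next` are hypothetical names, and the predictor simply looks up the next character in a memorized string instead of calling a trained model.

```python
def generate(predict_next, prompt, window, stop=".", max_new_chars=100):
    """Append one predicted character at a time until `stop` appears or the cap is reached."""
    result = prompt
    context = prompt[-window:]
    for _ in range(max_new_chars):
        if result.endswith(stop):
            break
        next_char = predict_next(context)
        result += next_char
        # Keep only the last `window` characters as the next input
        context = (context + next_char)[-window:]
    return result


memorized = "I met the right person."


def lookup_next(context):
    """Stand-in for a trained model: return the character that follows `context`."""
    start = memorized.find(context)
    return memorized[start + len(context)]


print(generate(lookup_next, "I met", window=5))
```

With the class from this article, `text_generate.predict` would be passed in place of `lookup_next`.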