A pitfall of normalizing OpenCV template matching results

Straight to the code:

#include<opencv2/opencv.hpp>

using namespace cv;

using namespace std;

int main(){

///Single template matching

//Mat temp = imread("D://jy7188//cppProject//image//23//huge_eye.png"); // Template image

Mat temp = imread("D://jy7188//cppProject//image//23//temp.png"); // template image

Mat src = imread("D://jy7188//cppProject//image//23//huge.png"); // image to be searched

imshow("temp", temp);

imshow("src", src);

Mat dst = src.clone(); //Original image backup

int width = src.cols - temp.cols +1; //result width

int height = src.rows - temp.rows +1; //result height

Mat result(height, width, CV_32FC1); //Create result mapping image

matchTemplate(src, temp, result, TM_CCOEFF_NORMED); // Normalized correlation coefficient: scores lie in [-1, 1], and a perfect match scores 1

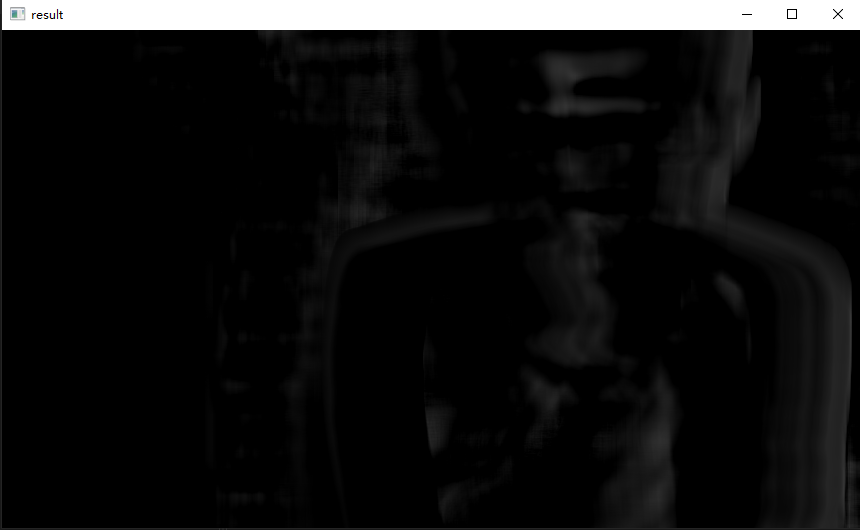

imshow("result", result);

for (int row = 0; row < result.rows; row++)

{

for (int col = 0; col < result.cols; col++)

{

result.at<float>(row, col) = 0.5*(result.at<float>(row, col) + 1); // Map the score from [-1, 1] to [0, 1]

}

}

//normalize(result, result, 1, 0, NORM_L1, -1, Mat());

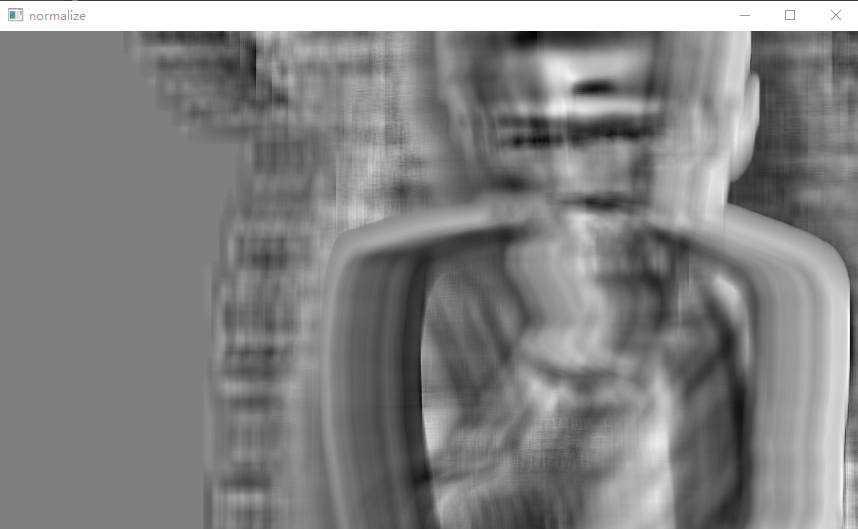

imshow("normalize", result);

double minValue, maxValue;

Point minLoc, maxLoc;

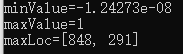

minMaxLoc(result, &minValue, &maxValue, &minLoc, &maxLoc);

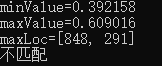

cout << "minValue=" << minValue << endl;

cout << "maxValue=" << maxValue << endl;

cout << "maxLoc=" << maxLoc << endl;

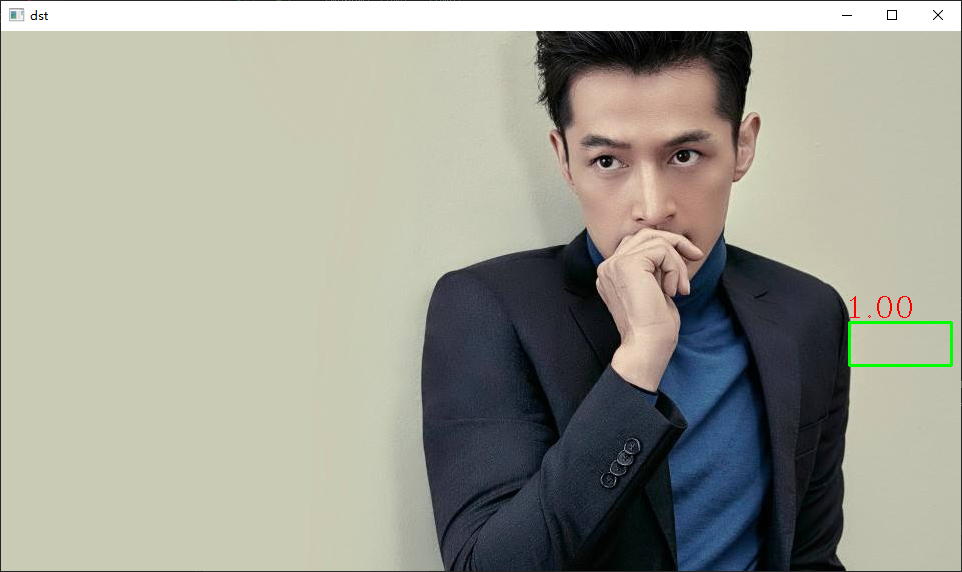

if (maxValue < 0.95) {

cout << "Mismatch" << endl;

}

char matchRate[10];

sprintf_s(matchRate, "%0.2f", maxValue);

putText(dst, matchRate, Point(maxLoc.x - 5, maxLoc.y - 5), FONT_HERSHEY_COMPLEX, 1, Scalar(0, 0, 255), 1);

rectangle(dst, maxLoc, Point(maxLoc.x + temp.cols, maxLoc.y + temp.rows), Scalar(0, 255, 0), 2, 8);

imshow("dst", dst);

waitKey(0);

}

If the template really is a crop of the source image, maxValue is 1 whether or not we normalize. The trouble starts when the template was not cropped from the source and matches nowhere in it: if we still normalize the result with NORM_MINMAX, the best score is stretched to 1, so a non-match looks like a perfect match. Comparing the result images before and after the following normalization makes this visible:

normalize(result, result, 0, 1, NORM_MINMAX, -1, Mat()); // Rescale to the range [0, 1]

Before normalization

After normalization

result
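The effect can be reproduced without OpenCV. The following is a minimal sketch in plain C++ that applies the same linear min-max rescaling to a handful of made-up scores. The helper names `minMaxNormalize` and `bestScore` are hypothetical, and the scores are invented for illustration, not measured from the images above.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical helper: rescales scores linearly so that min -> 0 and max -> 1,
// the same mapping NORM_MINMAX applies with alpha = 0 and beta = 1.
std::vector<float> minMaxNormalize(const std::vector<float>& scores) {
    assert(!scores.empty());
    const float minV = *std::min_element(scores.begin(), scores.end());
    const float maxV = *std::max_element(scores.begin(), scores.end());
    std::vector<float> out;
    out.reserve(scores.size());
    for (float s : scores) {
        // Guard against a constant score map, where max == min.
        out.push_back(maxV > minV ? (s - minV) / (maxV - minV) : 0.0f);
    }
    return out;
}

// Largest score in the map, i.e. the value minMaxLoc would report as maxValue.
float bestScore(const std::vector<float>& scores) {
    assert(!scores.empty());
    return *std::max_element(scores.begin(), scores.end());
}
```

With the invented scores {-0.20, 0.10, 0.35}, `bestScore` returns 0.35 on the raw values, well below a 0.95 threshold. After `minMaxNormalize`, the best score is exactly 1, so the threshold test passes even though nothing matched.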

So should we normalize? The following blog posts are worth reading first:

https://blog.csdn.net/kuweicai/article/details/78988886

https://blog.csdn.net/cosmispower/article/details/64457406?utm_source=copy

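- NORM_MINMAX: rescales the array linearly into the range [min, max]

- NORM_INF: scales the array so that its L∞ norm (Chebyshev distance, the maximum absolute value) equals the target value

- NORM_L1: scales the array so that its L1 norm (Manhattan distance, the sum of absolute values) equals the target value

- NORM_L2: scales the array so that its L2 norm (Euclidean distance) equals the target value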
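To make the difference between the three norm-based modes concrete, here is a small sketch in plain C++ that scales a vector so that a chosen norm equals a target value. `NormKind`, `normOf` and `scaleToNorm` are hypothetical names standing in for the OpenCV norm types; this is an illustration of the arithmetic, not OpenCV's implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical stand-in for NORM_INF, NORM_L1 and NORM_L2.
enum class NormKind { Inf, L1, L2 };

// Computes the chosen norm of v.
double normOf(const std::vector<double>& v, NormKind kind) {
    double acc = 0.0;
    for (double x : v) {
        const double a = std::fabs(x);
        if (kind == NormKind::Inf) acc = std::max(acc, a);  // maximum absolute value
        else if (kind == NormKind::L1) acc += a;            // sum of absolute values
        else acc += a * a;                                  // sum of squares
    }
    return kind == NormKind::L2 ? std::sqrt(acc) : acc;
}

// Scales v so that its chosen norm equals alpha.
std::vector<double> scaleToNorm(std::vector<double> v, NormKind kind, double alpha) {
    const double n = normOf(v, kind);
    assert(n > 0.0);  // an all-zero vector cannot be scaled to a nonzero norm
    for (double& x : v) x *= alpha / n;
    return v;
}
```

For the vector {3, -4} and a target of 1, the L∞ mode gives {0.75, -1}, the L1 mode gives {3/7, -4/7}, and the L2 mode gives {0.6, -0.8}. Note that with the L1 mode every value is divided by the sum over the whole array, so on a large result map the individual scores become very small.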

In my opinion, normalization should not be used when a wrong match is possible. The result of matchTemplate is always a single-channel 32-bit floating-point image, and with TM_CCOEFF_NORMED its maximum possible value is 1. We can therefore read the best similarity score directly with minMaxLoc and compare it against a threshold of our choosing. For example, if you consider 95% similarity good enough, test maxValue > 0.95 to decide that the match succeeded.
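The decision rule can be sketched in plain C++ as follows. The score map is assumed to be flattened into a vector, and `BestMatch`, `findBestMatch` and `isMatch` are hypothetical names; in the real program minMaxLoc plays the role of `findBestMatch`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical result type: where the best score sits and what it is.
struct BestMatch {
    std::size_t index;  // position in the flattened score map
    float score;        // raw score, not rescaled
};

// Finds the highest raw score, similar in spirit to reading maxValue and maxLoc.
BestMatch findBestMatch(const std::vector<float>& scores) {
    assert(!scores.empty());
    BestMatch best{0, scores[0]};
    for (std::size_t i = 1; i < scores.size(); ++i) {
        if (scores[i] > best.score) best = BestMatch{i, scores[i]};
    }
    return best;
}

// Accepts the match only if the raw score exceeds the chosen threshold.
bool isMatch(const BestMatch& best, float threshold) {
    return best.score > threshold;
}
```

Because the score is never rescaled, a template that matches nowhere keeps its low score and is rejected by the threshold.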

If you really do want to normalize the range, do it yourself by mapping the values from [-1, 1] to [0, 1]. For the formula, see https://blog.csdn.net/Touch_Dream/article/details/62076236

The method is as follows:

for (int row = 0; row < result.rows; row++)

{

for (int col = 0; col < result.cols; col++)

{

result.at<float>(row, col) = 0.5*(result.at<float>(row, col) + 1); // Map the score from [-1, 1] to [0, 1]

}

}

Now the maximum is no longer forced to 1. It is the position of the raw score within the interval [-1, 1], expressed as a fraction.

result
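One consequence of this mapping is easy to overlook: a threshold applied to the mapped score is not the same as a threshold on the raw score. The sketch below works through the arithmetic; `toUnitRange` and `toRawRange` are hypothetical helper names.

```cpp
#include <cassert>

// Maps a raw score from [-1, 1] to [0, 1]: mapped = 0.5 * (raw + 1).
double toUnitRange(double raw) {
    assert(raw >= -1.0 && raw <= 1.0);  // assumes the score is within the nominal range
    return 0.5 * (raw + 1.0);
}

// Inverse mapping: the raw score that corresponds to a mapped value.
double toRawRange(double mapped) {
    assert(mapped >= 0.0 && mapped <= 1.0);
    return 2.0 * mapped - 1.0;
}
```

A raw score of 0.90 maps to 0.95, so in the listing at the top, where the `maxValue < 0.95` test runs after the mapping loop, the check is equivalent to requiring a raw score of at least 0.90. To demand a raw score of 0.95, the threshold on the mapped score would have to be 0.975.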
