After flashing the system with JetPack, DeepStream is normally installed as well. The DeepStream sources are located in /opt/nvidia/deepstream/deepstream-5.0/.
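You may not know which DeepStream version the JetPack image installed. The Python sketch below can locate it. It assumes the .deb layout described in this article, with versioned deepstream-X.Y folders under /opt/nvidia/deepstream. The function name find_deepstream_dir is hypothetical.

```python
import re
from pathlib import Path
from typing import Optional

# Assumed .deb install root, taken from the path quoted above.
DEFAULT_ROOT = Path("/opt/nvidia/deepstream")


def find_deepstream_dir(root: Path = DEFAULT_ROOT) -> Optional[Path]:
    """Return the deepstream-X.Y folder with the highest version under root, or None."""
    if not root.is_dir():
        return None
    candidates = []
    for entry in root.iterdir():
        match = re.fullmatch(r"deepstream-(\d+)\.(\d+)", entry.name)
        if match and entry.is_dir():
            # Compare versions numerically so that 5.10 ranks above 5.9.
            version = (int(match.group(1)), int(match.group(2)))
            candidates.append((version, entry))
    if not candidates:
        return None
    return max(candidates, key=lambda item: item[0])[1]


if __name__ == "__main__":
    print(find_deepstream_dir() or "DeepStream not found")
```

Folders that do not match the deepstream-X.Y pattern are ignored, including a plain deepstream symlink if your install has one.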

First, let's look at the application architecture of DeepStream.

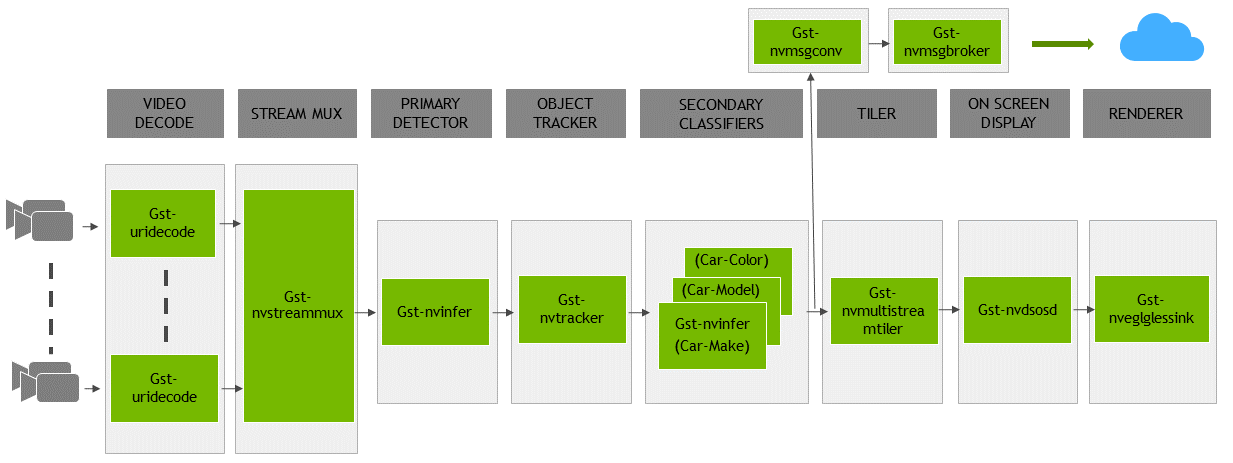

The DeepStream reference application is a GStreamer-based solution built from a set of GStreamer plug-ins. These plug-ins wrap low-level APIs and are linked into a complete pipeline graph. For an introduction to GStreamer, refer to the GStreamer series tutorials. The reference application accepts input from various sources (such as a camera, RTSP input, or an encoded file) and supports multiple streams and sources. The GStreamer plug-ins that NVIDIA implements and ships with the DeepStream SDK include:

- Stream muxer plug-in Gst-nvstreammux: forms a batch of buffers from multiple input sources.

- TensorRT-based inference plug-in Gst-nvinfer: used for primary detection and secondary classification (attribute classification of the primary objects).

- OpenCV-based tracker plug-in Gst-nvtracker: tracks objects and assigns each one a unique ID.

- Multi-stream tiler plug-in Gst-nvmultistreamtiler: composites the streams into a 2D array of frames.

- On-screen display plug-in Gst-nvdsosd: uses the generated metadata to draw shaded boxes, rectangles and text on the composited frame.

- Message converter plug-in Gst-nvmsgconv: works together with the message broker plug-in Gst-nvmsgbroker to send analytics data to servers in the cloud.
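The sketch below shows how these plug-ins chain together. It builds a gst-launch-1.0 pipeline description for N H.264 files, under several assumptions:

- The element and property names are the ones commonly used in the DeepStream samples.
- The 1920x1080 muxer and 1280x720 tiler resolutions are arbitrary choices.
- File paths must not contain spaces.
- The tracker, which needs a low-level library path, and the message plug-ins are left out.

build_multisource_pipeline is a hypothetical helper.

```python
from typing import List


def build_multisource_pipeline(h264_files: List[str], pgie_config: str,
                               rows: int, columns: int, jetson: bool = True) -> str:
    """Return a gst-launch-1.0 description chaining the plug-ins listed above."""
    if not h264_files:
        raise ValueError("need at least one input file")
    if rows * columns < len(h264_files):
        raise ValueError("tiler grid is too small for the number of sources")
    batch = len(h264_files)
    # On Jetson, nveglglessink is normally preceded by nvegltransform.
    sink = "nvegltransform ! nveglglessink" if jetson else "nveglglessink"
    # nvstreammux batches all sources, nvinfer processes each batch,
    # and nvmultistreamtiler composites the batch into a rows x columns grid.
    main = (
        f"nvstreammux name=m batch-size={batch} width=1920 height=1080 "
        f"! nvinfer config-file-path={pgie_config} batch-size={batch} "
        f"! nvmultistreamtiler rows={rows} columns={columns} width=1280 height=720 "
        f"! nvvideoconvert ! nvdsosd ! {sink}"
    )
    # Each H.264 elementary stream is parsed, decoded and linked to request pad m.sink_<i>.
    branches = [
        f"filesrc location={path} ! h264parse ! nvv4l2decoder ! m.sink_{i}"
        for i, path in enumerate(h264_files)
    ]
    return " ".join([main] + branches)
```

You can pass the returned string to gst-launch-1.0 or to Gst.parse_launch() from PyGObject. Adding a source only adds one branch and raises the batch size, which is why nvstreammux sits in front of inference.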

1. Overall structure

If you installed the SDK from the .deb package, the installation directory is /opt/nvidia/deepstream/deepstream-x.x. If you installed it from the tarball, it is wherever you extracted the archive. Running `tree -d` on that directory produces the following output:

    .
    ├── samples
    │   ├── configs
    │   │   └── deepstream-app
    │   ├── models
    │   │   ├── Primary_Detector
    │   │   ├── Primary_Detector_Nano
    │   │   ├── Secondary_CarColor
    │   │   ├── Secondary_CarMake
    │   │   ├── Secondary_VehicleTypes
    │   │   └── Segmentation
    │   │       ├── industrial
    │   │       └── semantic
    │   └── streams
    └── sources
        ├── apps
        │   ├── apps-common
        │   │   ├── includes
        │   │   └── src
        │   └── sample_apps
        │       ├── deepstream-app
        │       ├── deepstream-dewarper-test
        │       │   └── csv_files
        │       ├── deepstream-gst-metadata-test
        │       ├── deepstream-image-decode-test
        │       ├── deepstream-infer-tensor-meta-test
        │       ├── deepstream-nvof-test
        │       ├── deepstream-perf-demo
        │       ├── deepstream-segmentation-test
        │       ├── deepstream-test1
        │       ├── deepstream-test2
        │       ├── deepstream-test3
        │       ├── deepstream-test4
        │       ├── deepstream-test5
        │       │   └── configs
        │       └── deepstream-user-metadata-test
        ├── gst-plugins
        │   ├── gst-dsexample
        │   │   └── dsexample_lib
        │   ├── gst-nvinfer
        │   ├── gst-nvmsgbroker
        │   └── gst-nvmsgconv
        ├── includes
        ├── libs
        │   ├── amqp_protocol_adaptor
        │   ├── azure_protocol_adaptor
        │   │   ├── device_client
        │   │   └── module_client
        │   ├── kafka_protocol_adaptor
        │   ├── nvdsinfer
        │   ├── nvdsinfer_customparser
        │   └── nvmsgconv
        ├── objectDetector_FasterRCNN
        │   └── nvdsinfer_custom_impl_fasterRCNN
        ├── objectDetector_SSD
        │   └── nvdsinfer_custom_impl_ssd
        ├── objectDetector_Yolo
        │   └── nvdsinfer_custom_impl_Yolo
        └── tools
            └── nvds_logger
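If `tree` is not installed on the device, a short Python sketch can produce the same directory-only listing. tree_dirs is a hypothetical helper. It sorts entries by plain string comparison, so the order can differ slightly from tree's locale-aware sorting, and it omits tree's trailing summary line.

```python
import os
from typing import List


def tree_dirs(root: str) -> List[str]:
    """Return lines resembling `tree -d` output for the directories under root."""
    lines = ["."]

    def walk(path: str, prefix: str) -> None:
        # Only real directories (symlinks not followed), sorted by name.
        subdirs = sorted(
            e.name for e in os.scandir(path) if e.is_dir(follow_symlinks=False)
        )
        for index, name in enumerate(subdirs):
            last = index == len(subdirs) - 1
            lines.append(prefix + ("└── " if last else "├── ") + name)
            # Children of the last entry get blank indentation, others a vertical bar.
            walk(os.path.join(path, name), prefix + ("    " if last else "│   "))

    walk(root, "")
    return lines
```

For example, `print("\n".join(tree_dirs("/opt/nvidia/deepstream/deepstream-5.0")))` prints a listing shaped like the one above.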

Next, we introduce the contents of each folder.

2. samples folder

The samples folder contains three subfolders, configs, models and streams. They hold, respectively, the sample configuration files, the models used by the sample applications, and the sample media streams.

2.1 configs subfolder

The configs folder holds the configuration files used by deepstream-app:

    .
    └── configs
        └── deepstream-app
            ├── config_infer_primary_nano.txt (configures the nvinfer element as the primary detector on Jetson Nano)
            ├── config_infer_primary.txt (configures the nvinfer element as the primary detector)
            ├── config_infer_secondary_carcolor.txt (configures the nvinfer element as a secondary classifier)
            ├── config_infer_secondary_carmake.txt (configures the nvinfer element as a secondary classifier)
            ├── config_infer_secondary_vehicletypes.txt (configures the nvinfer element as a secondary classifier)
            ├── iou_config.txt (configures the low-level IOU (Intersection over Union) tracker)
            ├── source1_usb_dec_infer_resnet_int8.txt (demonstrates a USB camera as input)
            ├── source30_1080p_dec_infer-resnet_tiled_display_int8.txt
            │       (demonstrates 30 1080p video inputs: decode, infer, display)
            ├── source4_1080p_dec_infer-resnet_tracker_sgie_tiled_display_int8_gpu1.txt
            │       (demonstrates 4 1080p video inputs on GPU 1: decode, infer, track, display)
            ├── source4_1080p_dec_infer-resnet_tracker_sgie_tiled_display_int8.txt
            │       (demonstrates 4 1080p video inputs: decode, infer, track, display)
            └── tracker_config.yml
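The sketch below checks which model files one of these nvinfer configuration files points to. It reads the [property] group with Python's configparser. It assumes that nvinfer resolves relative paths against the directory of the config file. The PATH_KEYS tuple and the helper load_nvinfer_paths are illustrative and hypothetical, not a complete list of path-valued keys.

```python
import configparser
import os
from typing import Dict

# Illustrative subset of path-valued keys in the nvinfer [property] group.
PATH_KEYS = ("model-file", "proto-file", "labelfile-path",
             "int8-calib-file", "model-engine-file")


def load_nvinfer_paths(config_path: str) -> Dict[str, str]:
    """Resolve path-valued [property] keys relative to the config file's directory."""
    # interpolation=None keeps '%' literal; ';' inside values is not treated as a comment.
    parser = configparser.ConfigParser(interpolation=None)
    with open(config_path, encoding="utf-8") as f:
        parser.read_file(f)
    props = parser["property"]
    base = os.path.dirname(os.path.abspath(config_path))
    resolved = {}
    for key in PATH_KEYS:
        if key in props:
            # os.path.join keeps absolute values unchanged.
            resolved[key] = os.path.normpath(os.path.join(base, props[key]))
    return resolved
```

Running it on config_infer_primary.txt and checking each resolved path with os.path.exists() is a quick way to spot a missing model before launching a pipeline.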

2.2 models subfolder

    models
    ├── Primary_Detector (primary detector)
    │   ├── cal_trt.bin
    │   ├── labels.txt
    │   ├── resnet10.caffemodel
    │   └── resnet10.prototxt
    ├── Primary_Detector_Nano (primary detector for Jetson Nano)
    │   ├── labels.txt
    │   ├── resnet10.caffemodel
    │   └── resnet10.prototxt
    ├── Secondary_CarColor (secondary classifier: vehicle color)
    │   ├── cal_trt.bin
    │   ├── labels.txt
    │   ├── mean.ppm
    │   ├── resnet18.caffemodel
    │   └── resnet18.prototxt
    ├── Secondary_CarMake (secondary classifier: vehicle make)
    │   ├── cal_trt.bin
    │   ├── labels.txt
    │   ├── mean.ppm
    │   ├── resnet18.caffemodel
    │   └── resnet18.prototxt
    ├── Secondary_VehicleTypes (secondary classifier: vehicle type)
    │   ├── cal_trt.bin
    │   ├── labels.txt
    │   ├── mean.ppm
    │   ├── resnet18.caffemodel
    │   └── resnet18.prototxt
    └── Segmentation (segmentation models)
        ├── industrial
        │   └── unet_output_graph.uff
        └── semantic
            └── unetres18_v4_pruned0.65_800_data.uff
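Each model folder ships a plain-text labels.txt. The sketch below assumes a format you should confirm by opening the files. A detector lists one class per line. A classifier puts its class names on a single line, separated by semicolons. parse_labels is a hypothetical helper that handles both cases by returning one list per non-empty line.

```python
from typing import List


def parse_labels(text: str) -> List[List[str]]:
    """Return one list of class names per non-empty line, splitting each line on ';'."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if line:
            rows.append([name.strip() for name in line.split(";") if name.strip()])
    return rows
```

Under that assumption, `parse_labels(open(".../Primary_Detector/labels.txt").read())` yields one single-item list per class. A classifier file yields a single list holding all of its classes.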

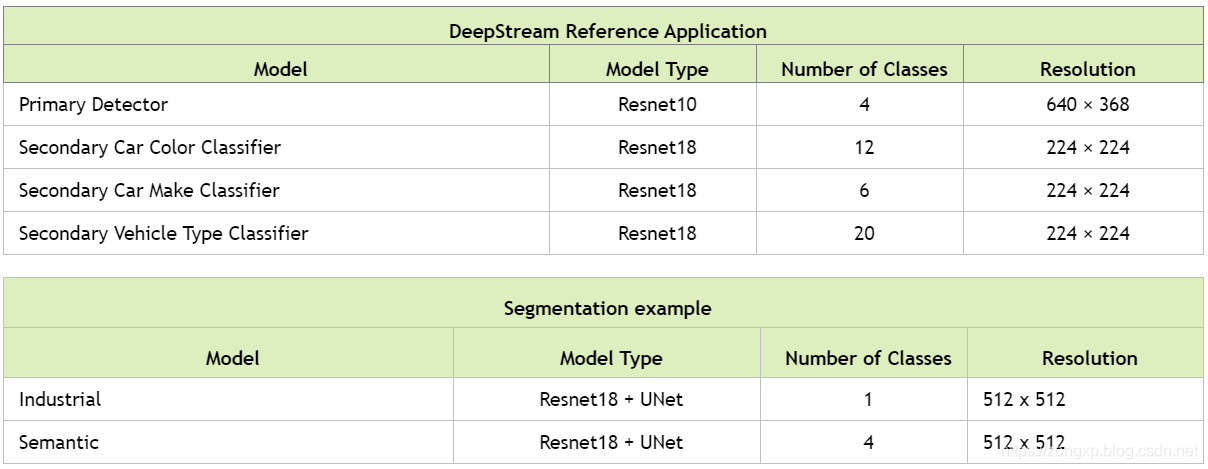

The figure above lists the specific parameters of each model.

2.3 streams subfolder

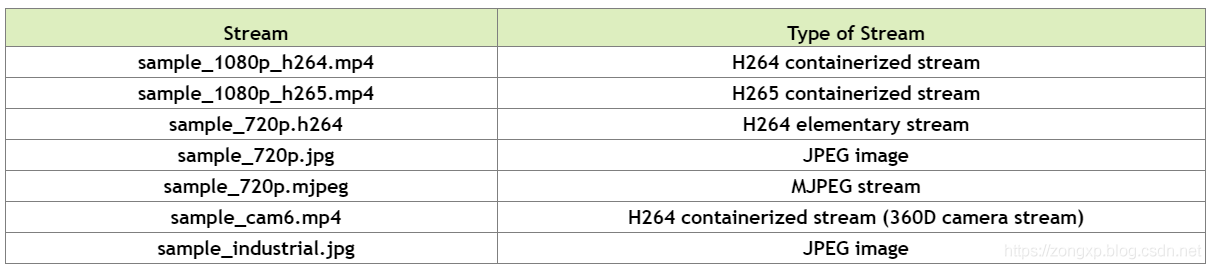

This folder contains sample media files for testing. The figure above shows the file types it includes.
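Some applications take their input as a URI rather than a plain path, namely those built on uridecodebin such as deepstream-test3. The sketch below turns a local path, for example a file from this streams folder, into an absolute file:// URI and percent-encodes characters such as spaces. to_file_uri is a hypothetical helper.

```python
from pathlib import Path


def to_file_uri(path: str) -> str:
    """Return an absolute, percent-encoded file:// URI for a local media file."""
    # resolve() makes the path absolute, which as_uri() requires.
    return Path(path).expanduser().resolve().as_uri()
```

The resulting string can then be given to uridecodebin's uri property.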

3. sources folder

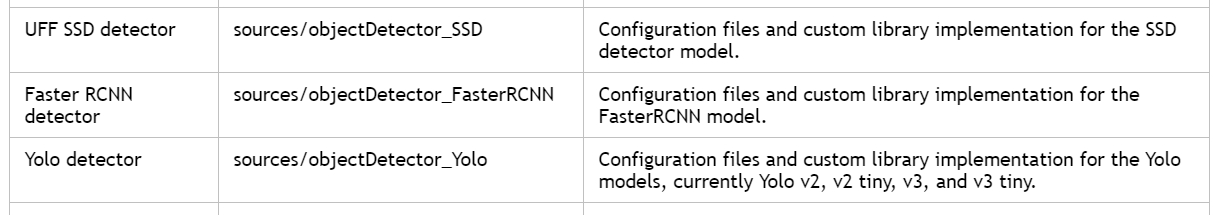

This folder contains the source code of the sample programs and plug-ins, organized into the following folders:

    .
    ├── apps (deepstream-app and other sample application code)
    ├── gst-plugins (GStreamer plug-ins)
    ├── includes (header files)
    ├── libs (libraries)
    ├── objectDetector_FasterRCNN (Faster R-CNN object detector)
    ├── objectDetector_SSD (SSD object detector)
    ├── objectDetector_Yolo (YOLO object detector)
    └── tools (logging tool)

The parts we will mainly work with are the sample code in apps/sample_apps, the gst-plugins, and the three object detector samples.
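Each objectDetector_* sample builds a shared library containing a custom output parser. nvinfer loads it through the custom-lib-path and parse-bbox-func-name keys of its configuration file. The sketch below sets those two keys with configparser. The library path and function name it receives are placeholders, so take the real ones from the sample's own config file. add_custom_parser is a hypothetical helper, and configparser drops comments when it rewrites a file.

```python
import configparser
import io


def add_custom_parser(config_text: str, lib_path: str, func_name: str) -> str:
    """Return the config text with custom-lib-path and parse-bbox-func-name set in [property]."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep key case exactly as written
    parser.read_string(config_text)
    if not parser.has_section("property"):
        parser.add_section("property")
    parser["property"]["custom-lib-path"] = lib_path
    parser["property"]["parse-bbox-func-name"] = func_name
    out = io.StringIO()
    # DeepStream configs are written as key=value without spaces around '='.
    parser.write(out, space_around_delimiters=False)
    return out.getvalue()
```

Editing the file by hand works just as well. The helper is only useful when generating several configurations that differ in their parser.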

3.1 apps subfolder

The sample_apps folder contains the following applications:

- deepstream-app: an end-to-end example that runs multiple camera streams through 4 cascaded neural networks (1 primary detector and 3 secondary classifiers) and displays the tiled output.

- deepstream-dewarper-test: demonstrates dewarping of single or multiple 360-degree camera streams. It reads camera calibration parameters from CSV files and renders the aisle and spot surfaces on the display.

- deepstream-gst-metadata-test: demonstrates how to set metadata before the Gst-nvstreammux plug-in in a DeepStream pipeline and how to access it after Gst-nvstreammux.

- deepstream-image-decode-test: builds on deepstream-test3 to demonstrate decoding images instead of video. It uses a custom decode bin so that the MJPEG codec can be used as input.

- deepstream-infer-tensor-meta-test: demonstrates how to pass the nvinfer output tensors as metadata and access them.

- deepstream-nvof-test: demonstrates optical flow on single or multiple streams using two GStreamer plug-ins, Gst-nvof and Gst-nvofvisual. Gst-nvof generates MV (motion vector) data and attaches it as user metadata. Gst-nvofvisual visualizes the MV data with a predefined color wheel matrix.

- deepstream-perf-demo: runs single-channel cascaded inference and object tracking on all streams in a directory, one after another.

- deepstream-segmentation-test: demonstrates segmenting multi-stream video or images with a semantic or industrial neural network and rendering the output to the display.

- deepstream-test1: a simple example of how to use DeepStream elements for a single H.264 stream: filesrc → decode → nvstreammux → nvinfer (primary detector) → nvosd → renderer.

- deepstream-test2: a simple application built on test1 that additionally shows tracking and secondary classification attributes.

- deepstream-test3: builds on deepstream-test1 (simple test application 1) to demonstrate how to:
  - use multiple sources in the pipeline
  - use uridecodebin to accept any type of input (such as RTSP or a file), any container format supported by GStreamer, and any codec
  - configure Gst-nvstreammux to form a batch of frames and run inference on the batch for better resource utilization
  - extract stream metadata, which carries useful information about the frames in the batched buffer

- deepstream-test4: builds on deepstream-test1 for a single H.264 stream (filesrc, decode, nvstreammux, nvinfer, nvosd, renderer) to demonstrate how to:
  - use the Gst-nvmsgconv and Gst-nvmsgbroker plug-ins in the pipeline
  - create metadata of type NVDS_META_EVENT_MSG and attach it to the buffer
  - use NVDS_META_EVENT_MSG for different types of objects, such as vehicles and persons
  - implement the "copy" and "free" functions for metadata extended through the extMsg field

- deepstream-test5: builds on deepstream-app and demonstrates:
  - using the Gst-nvmsgconv and Gst-nvmsgbroker plug-ins with multiple streams in the pipeline
  - configuring the Gst-nvmsgbroker plug-in as a sink plug-in from the configuration file (for KAFKA, Azure, etc.)
  - handling RTCP sender reports from RTSP servers or cameras, and converting Gst Buffer PTS values to UTC timestamps. For details, see the RTCP sender report callback test5_rtcp_sender_report_callback(), which is registered and used in deepstream_test5_app_main.c. The GStreamer callback registration through the rtpmanager element's "handle-sync" signal is documented in apps-common/src/deepstream_source_bin.c.

- deepstream-user-metadata-test: demonstrates how to add custom or user-specific metadata to any DeepStream component. The test code attaches a 16-byte array filled with user data to the chosen component, and the data is retrieved in another component.
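deepstream-app is driven by a single configuration file, run as `deepstream-app -c <config-file>`. The file is made of groups such as [source0], [primary-gie], [tracker] and [sink0], and each group is switched on or off with an enable key. Before running one of the configs from samples/configs/deepstream-app, it can help to list which groups are active. enabled_groups is a hypothetical helper that does this with configparser.

```python
import configparser
from typing import List


def enabled_groups(config_text: str) -> List[str]:
    """List deepstream-app config groups whose enable key is 1, in file order."""
    # strict=False tolerates repeated keys; interpolation=None keeps values literal.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(config_text)
    return [
        name for name in parser.sections()
        if parser[name].get("enable", "0").strip() == "1"
    ]
```

Groups without an enable key, such as [application], are treated as disabled here. This is a simplification of the sketch, not necessarily how deepstream-app itself interprets them.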

3.2 gst-plugins subfolder

    gst-plugins
    ├── gst-dsexample (template plug-in for integrating custom algorithms into the DeepStream SDK graph)
    │   └── dsexample_lib
    ├── gst-nvinfer (source code of the GStreamer Gst-nvinfer plug-in for inference)
    ├── gst-nvmsgbroker (source code of the GStreamer Gst-nvmsgbroker plug-in for sending data to the server)
    └── gst-nvmsgconv (source code of the GStreamer Gst-nvmsgconv plug-in for converting metadata to schema format)
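In the spirit of deepstream-test4, the two message plug-ins usually sit on one branch of a tee, and the other branch keeps rendering video. The sketch below builds that fragment as a gst-launch-1.0 string to append after `... ! nvdsosd ! `. The property names (config, proto-lib, conn-str, topic) are the ones the test4 sample sets. The "host;port" Kafka connection string is an assumption to check against the adaptor's documentation. build_msgbroker_branch is a hypothetical helper.

```python
def build_msgbroker_branch(proto_lib: str, conn_str: str, topic: str,
                           msgconv_config: str, sink: str = "nveglglessink") -> str:
    """Return a gst-launch-1.0 fragment to append after '... ! nvdsosd ! '."""
    return (
        "tee name=t "
        # Branch 1: convert event metadata to a schema payload and publish it.
        f"t. ! queue ! nvmsgconv config={msgconv_config} "
        f'! nvmsgbroker proto-lib={proto_lib} conn-str="{conn_str}" topic={topic} sync=false '
        # Branch 2: keep rendering the video locally.
        f"t. ! queue ! {sink}"
    )
```

The connection string is quoted because a bare semicolon would otherwise be ambiguous to the pipeline parser. On Jetson, pass `sink="nvegltransform ! nveglglessink"`.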

3.3 libs subfolder

    libs
    ├── amqp_protocol_adaptor (application for testing the AMQP protocol)
    ├── azure_protocol_adaptor (applications for testing Azure MQTT)
    │   ├── device_client
    │   └── module_client
    ├── kafka_protocol_adaptor (application for testing Kafka)
    ├── nvdsinfer (source code of the NvDsInfer library, used by the Gst-nvinfer GStreamer plug-in)
    ├── nvdsinfer_customparser (custom model output parsing examples for detectors and classifiers)
    └── nvmsgconv (source code of the NvMsgConv library required by the Gst-nvmsgconv GStreamer plug-in)
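The protocol adaptors in libs end up as shared libraries that Gst-nvmsgbroker loads through its proto-lib property. The folder-to-library mapping below is an assumption based on a typical DeepStream 5.0 install, so confirm it by listing the lib directory of your installation. proto_lib_path is a hypothetical helper.

```python
from pathlib import PurePosixPath

# Assumed mapping from libs/ adaptor folders to installed protocol libraries.
PROTO_LIBS = {
    "kafka_protocol_adaptor": "libnvds_kafka_proto.so",
    "amqp_protocol_adaptor": "libnvds_amqp_proto.so",
    "azure_protocol_adaptor/device_client": "libnvds_azure_proto.so",
    "azure_protocol_adaptor/module_client": "libnvds_azure_edge_proto.so",
}


def proto_lib_path(adaptor: str,
                   ds_root: str = "/opt/nvidia/deepstream/deepstream-5.0") -> str:
    """Return the expected proto-lib path for an adaptor folder name."""
    try:
        name = PROTO_LIBS[adaptor]
    except KeyError:
        raise ValueError(f"unknown adaptor: {adaptor}") from None
    return str(PurePosixPath(ds_root) / "lib" / name)
```

The returned path is what you would pass as nvmsgbroker's proto-lib value.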