Did you know that OpenCV has a built-in method to perform pedestrian detection?

OpenCV comes with a pre trained HOG + linear SVM model that can be used to perform pedestrian detection in images and video streams.

Today, we use Opencv's own model to detect pedestrians in the video stream. Just open a new file and name it detect Py, and then add the code:

# import the necessary packages from __future__ import print_function import numpy as np import argparse import cv2 import os

Import the required packages, and then define the methods required by the project.

def nms(boxes, probs=None, overlapThresh=0.3):

# if there are no boxes, return an empty list

if len(boxes) == 0:

return []

# if the bounding boxes are integers, convert them to floats -- this

# is important since we'll be doing a bunch of divisions

if boxes.dtype.kind == "i":

boxes = boxes.astype("float")

# initialize the list of picked indexes

pick = []

# grab the coordinates of the bounding boxes

x1 = boxes[:, 0]

y1 = boxes[:, 1]

x2 = boxes[:, 2]

y2 = boxes[:, 3]

# compute the area of the bounding boxes and grab the indexes to sort

# (in the case that no probabilities are provided, simply sort on the

# bottom-left y-coordinate)

area = (x2 - x1 + 1) * (y2 - y1 + 1)

idxs = y2

# if probabilities are provided, sort on them instead

if probs is not None:

idxs = probs

# sort the indexes

idxs = np.argsort(idxs)

# keep looping while some indexes still remain in the indexes list

while len(idxs) > 0:

# grab the last index in the indexes list and add the index value

# to the list of picked indexes

last = len(idxs) - 1

i = idxs[last]

pick.append(i)

# find the largest (x, y) coordinates for the start of the bounding

# box and the smallest (x, y) coordinates for the end of the bounding

# box

xx1 = np.maximum(x1[i], x1[idxs[:last]])

yy1 = np.maximum(y1[i], y1[idxs[:last]])

xx2 = np.minimum(x2[i], x2[idxs[:last]])

yy2 = np.minimum(y2[i], y2[idxs[:last]])

# compute the width and height of the bounding box

w = np.maximum(0, xx2 - xx1 + 1)

h = np.maximum(0, yy2 - yy1 + 1)

# compute the ratio of overlap

overlap = (w * h) / area[idxs[:last]]

# delete all indexes from the index list that have overlap greater

# than the provided overlap threshold

idxs = np.delete(idxs, np.concatenate(([last],

np.where(overlap > overlapThresh)[0])))

# return only the bounding boxes that were picked

return boxes[pick].astype("int")

image_types = (".jpg", ".jpeg", ".png", ".bmp", ".tif", ".tiff")

def list_images(basePath, contains=None):

# return the set of files that are valid

return list_files(basePath, validExts=image_types, contains=contains)

def list_files(basePath, validExts=None, contains=None):

# loop over the directory structure

for (rootDir, dirNames, filenames) in os.walk(basePath):

# loop over the filenames in the current directory

for filename in filenames:

# if the contains string is not none and the filename does not contain

# the supplied string, then ignore the file

if contains is not None and filename.find(contains) == -1:

continue

# determine the file extension of the current file

ext = filename[filename.rfind("."):].lower()

# check to see if the file is an image and should be processed

if validExts is None or ext.endswith(validExts):

# construct the path to the image and yield it

imagePath = os.path.join(rootDir, filename)

yield imagePath

def resize(image, width=None, height=None, inter=cv2.INTER_AREA):

dim = None

(h, w) = image.shape[:2]

# If the height and width are None, return directly

if width is None and height is None:

return image

# Check if the width is None

if width is None:

# Calculate the proportion of height and calculate the width according to the proportion

r = height / float(h)

dim = (int(w * r), height)

# High to None

else:

# Calculate the width scale and calculate the height

r = width / float(w)

dim = (width, int(h * r))

resized = cv2.resize(image, dim, interpolation=inter)

# return the resized image

return resized

nms function: non maximum suppression.

list_images: read images.

resize: changes the size proportionally.

# construct the argument parse and parse the arguments

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--images", default='test1', help="path to images directory")

args = vars(ap.parse_args())

# Initialize HOG descriptor / person detector

hog = cv2.HOGDescriptor()

hog.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())

Defines the folder path for the input pictures.

Initialize the HOG detector.

# loop over the image paths

for imagePath in list_images(args["images"]):

# Load and resize the image to

# (1) Reduce detection time

# (2) Improve detection accuracy

image = cv2.imread(imagePath)

image = resize(image, width=min(400, image.shape[1]))

orig = image.copy()

print(image)

# detect people in the image

(rects, weights) = hog.detectMultiScale(image, winStride=(4, 4),

padding=(8, 8), scale=1.05)

# draw the original bounding boxes

print(rects)

for (x, y, w, h) in rects:

cv2.rectangle(orig, (x, y), (x + w, y + h), (0, 0, 255), 2)

# Non maximum suppression is applied to the bounding box using a considerable overlap threshold in an attempt to maintain the overlapping box that is still human

rects = np.array([[x, y, x + w, y + h] for (x, y, w, h) in rects])

pick = nms(rects, probs=None, overlapThresh=0.65)

# draw the final bounding boxes

for (xA, yA, xB, yB) in pick:

cv2.rectangle(image, (xA, yA), (xB, yB), (0, 255, 0), 2)

# show some information on the number of bounding boxes

filename = imagePath[imagePath.rfind("/") + 1:]

print("[INFO] {}: {} original boxes, {} after suppression".format(

filename, len(rects), len(pick)))

# show the output images

cv2.imshow("Before NMS", orig)

cv2.imshow("After NMS", image)

cv2.waitKey(0)

Traverse the images in the -- images directory.

Then, adjust the image to a maximum width of 400 pixels. There are two reasons to try to reduce the image size:

- Reducing the image size can ensure that there are fewer sliding windows in the image pyramid to be evaluated (i.e. extracting the HOG feature from the linear SVM and then passing it to the linear SVM), so as to reduce the detection time (and improve the overall detection throughput).

- Adjusting our image size also improves the overall accuracy of our pedestrian detection (i.e. less false positives).

The pedestrian in the image is detected by calling the detectMultiScale method of the hog descriptor. The detectMultiScale method constructs an image pyramid with a scale of 1.05, and the sliding window steps are (4,4) pixels in the x and y directions respectively.

The size of the sliding window is fixed at 64 x 128 pixels, as suggested in the groundbreaking Dalal and triggers paper directional gradient histogram for human detection. The detectMultiScale function returns the 2-tuple of rects, or the bounding box (x, y) coordinates and weights of each person in the image. SVM returns the confidence value for each detection.

A larger scale size will evaluate fewer layers in the image pyramid, which can make the algorithm run faster. However, Too big (that is, the number of layers in the image pyramid is small) will make pedestrians undetectable. Similarly, a too small scale will significantly increase the number of image pyramid layers to be evaluated. This will not only cause computational waste, but also significantly increase the number of false positives detected by the pedestrian detector. That is, when performing pedestrian detection, the proportion is one of the most important parameters to be adjusted One. I will review each parameter more thoroughly in future blog posts to detect multi-scale.

Get the initial bounding boxes and draw them on the image.

However, for some images, you will notice that each person detects multiple overlapping bounding boxes.

In this case, we have two options. We can detect whether one bounding box is completely contained in another. Alternatively, we can apply non maximum suppression and suppress bounding boxes that overlap important thresholds.

After applying non maximum suppression, the final bounding box is obtained, and then the image is output.

Operation results:

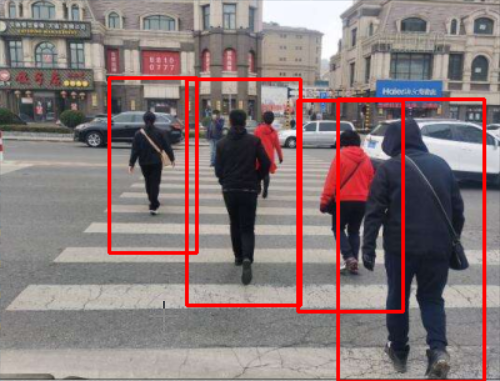

Before nms:

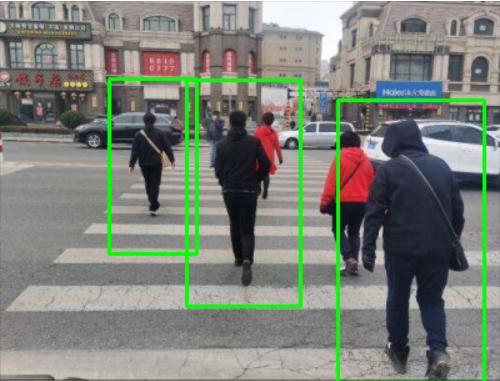

After nms:

Conclusion:

Compared with the current deep learning methods, the accuracy of machine learning is much lower.